I built a model that classifies images into 10 classes (butterfly, dog, spider, horse, sheep, cow, cat, squirrel, elephant, chicken), using the libraries that PyTorch provides.

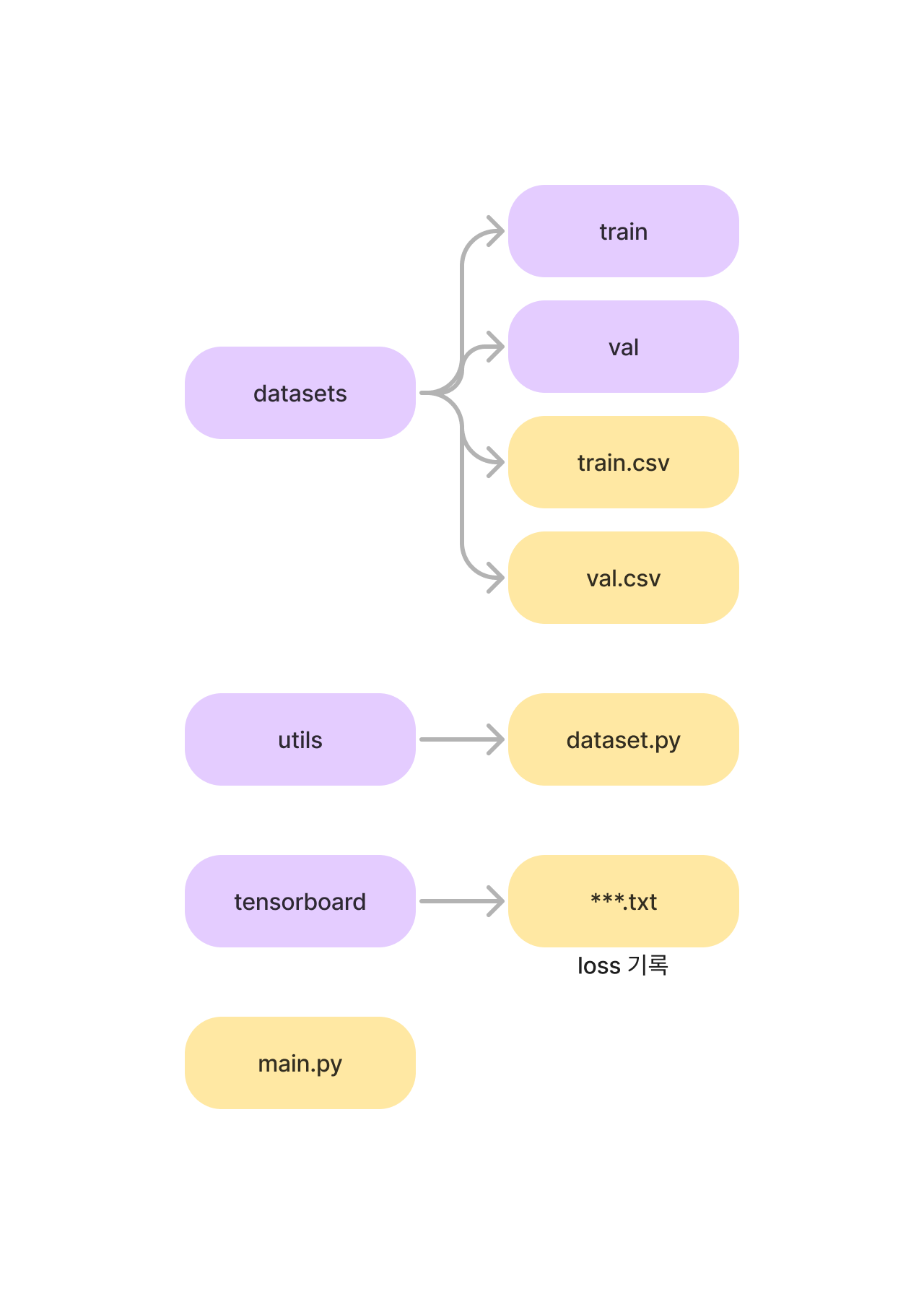

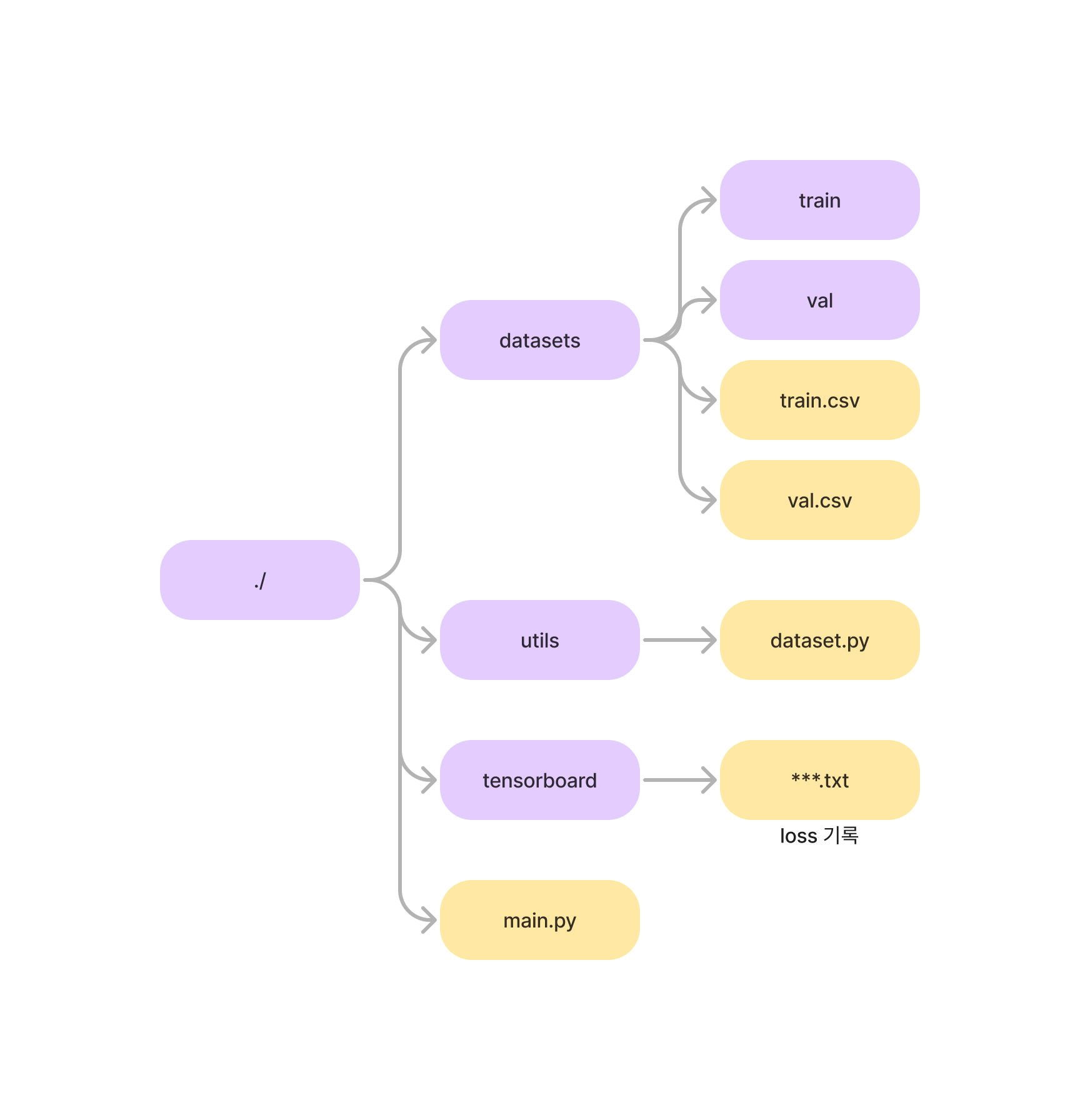

1. Directory Structure

2. dataset.py

(1) Structure

- Constructor

- len method

- getitem method
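All three pieces can be seen in a toy class that does not need PyTorch at all. This is a minimal sketch: `ToyDataset`, its in-memory file names, and the `str.upper` stand-in for a transform are hypothetical. The point is that `len(ds)` calls `__len__` and `ds[i]` calls `__getitem__`, the same pair of methods a map-style `torch.utils.data.Dataset` is expected to implement.

```python
class ToyDataset:
    """Hypothetical in-memory dataset illustrating the __init__/__len__/__getitem__ protocol."""

    def __init__(self, paths, labels, transform=None):
        # Constructor: store the samples, their labels, and an optional transform
        assert len(paths) == len(labels)
        self.paths = paths
        self.labels = labels
        self.transform = transform

    def __len__(self):
        # Called by len(dataset)
        return len(self.paths)

    def __getitem__(self, idx):
        # Called by dataset[idx]; returns one (input, label) pair
        sample = self.paths[idx]
        if self.transform:
            sample = self.transform(sample)
        return sample, self.labels[idx]


ds = ToyDataset(["a.jpg", "b.jpg", "c.jpg"], ["cat", "dog", "cat"], transform=str.upper)
print(len(ds))  # 3
print(ds[1])    # ('B.JPG', 'dog')
```

Because `__getitem__` raises `IndexError` past the last index, plain Python can even loop over it with `for sample, label in ds`.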

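(2) Why It's Needed

- Load the data from a local path, a URL, etc.

- Store the list of data paths and the list of labels (ground truth)

- Store the length of the data

- Process the data (transform): augmentation, conversion to tensors

Writing all of this directly in main.py hurts the code's readability and modularity, so we build a custom dataset class that lets us "cook" the data as easily as a meal kit!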
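The constructor in the implementation that follows assumes each annotation CSV has the image file name in its first column and the label in its second. A sketch of that parsing step on an in-memory CSV; the column names `filename` and `label` and the file names are hypothetical, only the positional `iloc[:, 0]` / `iloc[:, 1]` access mirrors the post.

```python
import io
import os

import pandas as pd

# Hypothetical annotation file: column 0 = image file name, column 1 = label
csv_text = """filename,label
img_001.jpg,dog
img_002.jpg,cat
img_003.jpg,dog
"""
annotations = pd.read_csv(io.StringIO(csv_text))

dataset_path = os.path.join("./datasets", "train_images")
image_names = annotations.iloc[:, 0].tolist()  # first column, selected by position
image_paths = [os.path.join(dataset_path, name) for name in image_names]
labels = annotations.iloc[:, 1].tolist()       # second column, selected by position

# sorted() gives a stable class order; list(set(...)) may order strings
# differently from one Python process to the next (string hashing is randomized)
class_names = sorted(set(labels))

print(labels)       # ['dog', 'cat', 'dog']
print(class_names)  # ['cat', 'dog']
```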

(3) Implementation

1. Import Dependencies

```python
import os  # build file paths
import pandas as pd  # read the annotation CSV (image names, labels)
from PIL import Image  # open image files
from torch.utils.data import Dataset  # base class that CustomDataset inherits
```

2. Class CustomDataset

```python
class CustomDataset(Dataset):  # inherit from Dataset
    # 1. Constructor
    def __init__(self, root='./datasets', transform=None, mode='train'):
        # Initialize member variables
        self.root = root
        self.transform = transform
        self.mode = mode

        if self.mode == 'train':
            self.dataset_path = os.path.join(self.root, 'train_images')
            self.annotations_file = pd.read_csv(os.path.join(self.root, 'train.csv'))
        elif self.mode == 'val':
            self.dataset_path = os.path.join(self.root, 'val_images')
            self.annotations_file = pd.read_csv(os.path.join(self.root, 'val.csv'))
        else:
            raise NotImplementedError(f"Mode {self.mode} is not implemented yet...")

        # Image file names are in the first column
        image_names = self.annotations_file.iloc[:, 0].tolist()

        # Build the full path of each image
        self.image_paths = [os.path.join(self.dataset_path, name) for name in image_names]

        # Corresponding labels are in the second column
        self.labels = self.annotations_file.iloc[:, 1].tolist()

        # Class names, sorted so the order is the same on every run
        self.class_names = sorted(set(self.labels))

    # 2. len
    def __len__(self):
        return len(self.image_paths)

    # 3. getitem
    def __getitem__(self, idx):
        # convert('RGB') makes grayscale/RGBA files 3-channel like the rest
        image = Image.open(self.image_paths[idx]).convert('RGB')
        label = self.labels[idx]

        # Apply the transform (e.g., resize, convert to tensor)
        if self.transform:
            image = self.transform(image)

        return image, label


if __name__ == '__main__':
    # Quick check when running dataset.py directly (not run when imported from main.py)
    dataset = CustomDataset()
    print(len(dataset))
```

3. main.py

(0) Import Dependencies

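- torch, plus torch submodules imported directly to shorten namespaces

- CustomDataset

- argparse (parses the arguments the user passes on the command line)

- tqdm (displays a progress bar)

- numpy (computes the RGB mean and standard deviation) and SummaryWriter (logs the loss to TensorBoard)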
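argparse is imported, but the hyperparameters in the code are hard-coded. A sketch of exposing them on the command line; the flag names `--root`, `--epochs`, `--batch_size`, and `--lr` are hypothetical, and the defaults simply mirror the values used in this post (10 epochs, batch size 32, learning rate 0.0001).

```python
import argparse


def build_parser():
    # Hypothetical flags; defaults match the values hard-coded in main.py
    parser = argparse.ArgumentParser(description="Train VGG16 on the 10-class animal dataset")
    parser.add_argument("--root", type=str, default="./datasets", help="dataset root directory")
    parser.add_argument("--epochs", type=int, default=10, help="number of training epochs")
    parser.add_argument("--batch_size", type=int, default=32, help="mini-batch size")
    parser.add_argument("--lr", type=float, default=1e-4, help="Adam learning rate")
    return parser


# Passing a list simulates `python main.py --epochs 20`
args = build_parser().parse_args(["--epochs", "20"])
print(args.epochs, args.batch_size, args.lr)  # 20 32 0.0001
```

In the real script, `parse_args()` would be called with no argument so it reads `sys.argv`, and `args.epochs`, `args.batch_size`, and `args.lr` would replace the literals in the training loop, the DataLoaders, and the optimizer.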

```python
import torch
from torch.utils.data import DataLoader
import torchvision
from torchvision import transforms
from torchvision.models import vgg16
from torch.utils.tensorboard import SummaryWriter
from utils.dataset import CustomDataset
import argparse
from tqdm import tqdm
import numpy as np
```

(1) Transform

- Configure the processing applied to input images: augmentation, tensor conversion, etc.

- Basic transform (resize and convert to tensor only)

```python
# Define Transforms
transform = transforms.Compose([
    transforms.Resize([224, 224]),
    transforms.ToTensor(),
])
```

- Create the Dataset objects

```python
# Define Dataset (with the basic transform)
train_dataset = CustomDataset(root='./datasets', transform=transform, mode='train')
val_dataset = CustomDataset(root='./datasets', transform=transform, mode='val')
print(f"Length of train, validation dataset: {len(train_dataset)}, {len(val_dataset)}")
```

- Compute the mean and standard deviation of each RGB channel

```python
# Get Mean, Standard Deviation of RGB Channel
def get_mean_std(dataset):
    # Each image is a (C, H, W) tensor; reduce over H and W to get one value per channel
    mean_RGB, std_RGB = [], []
    for image, _ in dataset:
        arr = image.numpy()
        mean_RGB.append(np.mean(arr, axis=(1, 2)))
        std_RGB.append(np.std(arr, axis=(1, 2)))

    # Average over all images. The std is the mean of per-image stds,
    # which approximates (but is not identical to) the std over all pixels.
    mean = np.mean(mean_RGB, axis=0)
    std = np.mean(std_RGB, axis=0)
    return mean.tolist(), std.tolist()


train_mean_rgb, train_std_rgb = get_mean_std(train_dataset)
val_mean_rgb, val_std_rgb = get_mean_std(val_dataset)
```

- Data augmentation with transform

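```python
# Data Augmentation (training only)
transform_train = transforms.Compose([
    transforms.Resize([256, 256]),
    transforms.RandomCrop([224, 224]),
    transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.2),
    transforms.RandomHorizontalFlip(p=0.5),  # p=1 would flip every image, which is not random
    transforms.RandomVerticalFlip(p=0.5),
    transforms.ToTensor(),
    transforms.Normalize(train_mean_rgb, train_std_rgb),
])

# Validation: deterministic preprocessing, normalized with the training statistics
# so both splits go through the same normalization
transform_val = transforms.Compose([
    transforms.Resize([256, 256]),
    transforms.CenterCrop([224, 224]),
    transforms.ToTensor(),  # Normalize expects a tensor, so ToTensor must come first
    transforms.Normalize(train_mean_rgb, train_std_rgb),
])
```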
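What `ToTensor` and `Normalize` do to the pixels can be reproduced in NumPy. A sketch based on the documented behavior of both transforms: `ToTensor` turns an H×W×C `uint8` image in [0, 255] into a C×H×W float array in [0, 1], and `Normalize` computes `(x - mean) / std` per channel. The random 4×4 image is synthetic; `axis=(1, 2)` is the same reduction `get_mean_std` uses.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic 4x4 RGB image in PIL/NumPy layout: (H, W, C), uint8 in [0, 255]
img_hwc = rng.integers(0, 256, size=(4, 4, 3), dtype=np.uint8)

# ToTensor equivalent: HWC -> CHW and scale to [0, 1]
x = img_hwc.transpose(2, 0, 1).astype(np.float32) / 255.0

# Per-channel statistics, reduced over H and W as in get_mean_std
mean = x.mean(axis=(1, 2))
std = x.std(axis=(1, 2))

# Normalize equivalent: broadcast the (C,) statistics over (C, H, W)
x_norm = (x - mean[:, None, None]) / std[:, None, None]

print(x.shape)                   # (3, 4, 4)
print(x_norm.mean(axis=(1, 2)))  # each channel's mean is now approximately 0
print(x_norm.std(axis=(1, 2)))   # each channel's std is now approximately 1
```

Here the statistics come from the image itself, so the result is exactly zero-mean and unit-std per channel; in the pipeline above they come from the whole training set, so each individual image is only approximately standardized.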

(2) Define Dataset

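Using the CustomDataset class defined in the dataset.py module, we define the actual train and validation datasets, this time with the augmentation transforms.

In other words, to feed the model, we load the dataset and turn it into input (image) and output (label) pairs.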

```python
train_dataset = CustomDataset(root='./datasets', transform=transform_train, mode='train')
val_dataset = CustomDataset(root='./datasets', transform=transform_val, mode='val')
```

(3) DataLoader

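We use the DataLoader class from torch.utils.data to load the train and validation datasets in batches. Its parameters include whether to shuffle (shuffle = True reshuffles the training data every epoch; validation order does not matter, so it uses shuffle = False) and how many subprocesses to use for loading (num_workers = 0 loads data in the main process).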
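Conceptually, with the settings used here, iterating a DataLoader orders the dataset indices (shuffled when shuffle = True), slices them into chunks of batch_size, and fetches each sample with `dataset[i]`. The function below is a simplified pure-Python sketch of that behavior, not DataLoader's actual implementation (the real class also collates samples into batched tensors and can use worker processes); `toy_batches` is a hypothetical name.

```python
import random


def toy_batches(dataset, batch_size, shuffle, seed=None):
    """Hypothetical, simplified stand-in for iterating a DataLoader (no collation, no workers)."""
    indices = list(range(len(dataset)))
    if shuffle:
        # seed=None draws a fresh order on every call, i.e. every epoch
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        # The last batch may be smaller, like DataLoader's default drop_last=False
        yield [dataset[i] for i in indices[start:start + batch_size]]


data = list(range(70))  # any object with __len__ and __getitem__ works
batches = list(toy_batches(data, batch_size=32, shuffle=False))
print([len(b) for b in batches])  # [32, 32, 6]
```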

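```python
train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True, num_workers=0)
val_dataloader = DataLoader(val_dataset, batch_size=32, shuffle=False, num_workers=0)
```

To train the model, the data has to be in tensor form. The images are already tensors thanks to ToTensor, but the labels are still strings, and CrossEntropyLoss expects integer class indices, so we first map each label name to an integer index.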
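Instead of writing the dictionary by hand, a mapping can be derived from the label list. A minimal sketch with made-up labels; in practice it would be built from the full training label list (for example `train_dataset.labels`), never from a single batch, which may not contain every class. Note that sorting assigns different indices than the hand-written dictionary that follows, so whichever mapping is chosen must be used for both training and validation.

```python
labels = ["dog", "cat", "spider", "dog", "cat"]  # made-up string labels

# Deterministic mapping: sort the unique class names, then number them
class_names = sorted(set(labels))
derived_index_for_class = {name: i for i, name in enumerate(class_names)}

label_indices = [derived_index_for_class[label] for label in labels]
print(derived_index_for_class)  # {'cat': 0, 'dog': 1, 'spider': 2}
print(label_indices)            # [1, 0, 2, 1, 0]
```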

```python
# Index to change labels
index_for_class = {'butterfly': 0, 'dog': 1, 'spider': 2, 'horse': 3, 'sheep': 4,
                   'cow': 5, 'cat': 6, 'squirrel': 7, 'elephant': 8, 'chicken': 9}
```

(4) Device

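PyTorch provides the torch.device class for specifying where tensors and models are placed. CUDA is NVIDIA's software environment for running computations on NVIDIA GPUs; using it lets us take full advantage of the GPU's parallel processing power and greatly shorten the training time of a deep learning network. We use the GPU if CUDA is available and fall back to the CPU otherwise.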

```python
# Device: GPU if CUDA is available, otherwise CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(f"Using {device} for training")
```

(5) Model

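Using torchvision's model package, we create a VGG16 instance with ImageNet-pretrained weights, replace its last classifier layer so it outputs our 10 classes, and move the model to the device.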

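```python
# Model: VGG16 with ImageNet-pretrained weights
# (torchvision >= 0.13 uses `weights=`; older versions used `pretrained=True`)
model = vgg16(weights=torchvision.models.VGG16_Weights.DEFAULT)

# Replace the final layer (4096 -> 1000 ImageNet classes) with 4096 -> 10 classes
model.classifier[6] = torch.nn.Linear(in_features=4096, out_features=10)
model.to(device)
```

(6) Loss, Optimizer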

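- criterion: When training a neural network, we need a measure of how bad(?) the current model is. This measure is the loss, and for classification problems cross-entropy is the usual choice.

- optimizer: There are various gradient-descent-based methods for updating the model's parameters (SGD, Adam, etc.), each computing its update here from a mini-batch rather than the whole dataset. We use Adam with a learning rate of 0.0001.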
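For one sample with logits z and true class y, cross-entropy is -log(softmax(z)[y]), and `torch.nn.CrossEntropyLoss` averages it over the batch by default. It takes raw logits, which is why the model's last layer has no softmax. A NumPy sketch of that formula with made-up logits:

```python
import numpy as np


def cross_entropy(logits, targets):
    """Mean cross-entropy over a batch; logits: (N, C), targets: (N,) integer class indices."""
    # Subtract the row max before exponentiating for numerical stability
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class for each sample
    return -log_probs[np.arange(len(targets)), targets].mean()


logits = np.array([[2.0, 0.5, -1.0],   # confident and correct (true class 0)
                   [0.1, 0.2, 3.0]])   # confident but wrong (true class 1)
targets = np.array([0, 1])
print(cross_entropy(logits, targets))
```

The confident-but-wrong second sample contributes far more loss than the first, which is exactly the "how bad is the model" signal the optimizer tries to reduce.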

```python
# Loss, Optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.0001)
```

(7) Train Loop

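- Epoch: One epoch is one full pass over the entire dataset, so N epochs means the model has been trained on the whole dataset N times. The for loop trains the model for 10 epochs.

- Batch: Within each epoch, training proceeds batch by batch, with the batch size of 32 set in the DataLoader. We send the images and labels to the device and feed the images to the model to get predictions. The criterion that measures the loss was set to cross-entropy above. Backpropagation computes the gradient of the loss with respect to each parameter, and the optimizer then updates the parameters in the direction that decreases the loss. Finally, the stored gradients are reset so the next batch computes fresh gradients (PyTorch accumulates gradients across backward calls by default).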
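The same epoch/batch structure can be seen in a framework-free toy: softmax regression on synthetic data, trained with mini-batch gradient descent. This is only a sketch; the data, sizes, and learning rate are made up, the gradient is derived by hand instead of by `loss.backward()`, and the update is plain SGD rather than Adam. It also shows the arithmetic of batches per epoch: with N samples and batch size B there are ceil(N / B) updates per epoch.

```python
import math

import numpy as np

rng = np.random.default_rng(0)
N, D, C, B = 200, 5, 3, 32          # samples, features, classes, batch size (made up)
true_W = rng.normal(size=(D, C))
X = rng.normal(size=(N, D))
y = (X @ true_W).argmax(axis=1)     # labels from a hidden linear rule

W = np.zeros((D, C))                # parameters to learn
lr = 0.5
steps_per_epoch = math.ceil(N / B)  # 200 / 32 -> 7 batches, the last one has 8 samples


def loss_and_grad(W, xb, yb):
    logits = xb @ W
    logits -= logits.max(axis=1, keepdims=True)
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    loss = -np.log(probs[np.arange(len(yb)), yb]).mean()
    # Gradient of mean cross-entropy w.r.t. W: X^T (probs - onehot) / batch_size
    probs[np.arange(len(yb)), yb] -= 1.0
    grad = xb.T @ probs / len(yb)
    return loss, grad


for ep in range(10):                      # epoch: one full pass over the data
    order = rng.permutation(N)            # like shuffle=True
    for start in range(0, N, B):          # batch: one parameter update per mini-batch
        idx = order[start:start + B]
        loss, grad = loss_and_grad(W, X[idx], y[idx])  # forward pass + hand-written "backward"
        W -= lr * grad                    # optimizer step (plain SGD)
        # grad is recomputed from scratch every batch; in PyTorch, .grad accumulates
        # across backward() calls, which is why optimizer.zero_grad() is needed

accuracy = ((X @ W).argmax(axis=1) == y).mean()
print(f"updates per epoch: {steps_per_epoch}, train accuracy: {accuracy:.3f}")
```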

```python
# Train Loop
writer = SummaryWriter()  # TensorBoard writer (logs under ./runs by default)

for ep in range(10):
    model.train()  # Set model in training mode

    for batch, (images, labels) in enumerate(tqdm(train_dataloader)):
        # Convert string labels to integer indices
        label_to_index = [index_for_class[label] for label in labels]
        labels = torch.tensor(label_to_index).to(device)

        # Send images to device
        images = images.to(device)

        # Compute prediction and loss
        outputs = model(images)
        loss = criterion(outputs, labels)

        # Log per batch; a global step keeps points from overwriting each other
        global_step = ep * len(train_dataloader) + batch
        writer.add_scalar("loss", loss.item(), global_step)

        # Backpropagation
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

        if batch % 10 == 0:
            print(f"Epoch : {ep}, Loss : {loss.item()}")

writer.close()
```

(8) Validation

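We switch the model to evaluation mode with model.eval(), which changes the behavior of layers such as Dropout (VGG16's classifier contains Dropout layers). The torch.no_grad() context manager disables gradient computation during validation; since no backpropagation or parameter update happens here, this saves memory and computation.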
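The accuracy bookkeeping in the validation loop reduces to an argmax over class scores and a count of matches. A NumPy sketch with made-up logits; `outputs.argmax(axis=1)` plays the role of the indices returned by `torch.max(outputs, dim=1)`.

```python
import numpy as np

# Made-up logits for 2 batches (rows = images, columns = 3 classes) and their true labels
batches = [
    (np.array([[2.0, 0.1, -1.0], [0.3, 1.5, 0.2]]), np.array([0, 2])),
    (np.array([[0.0, 0.2, 3.1]]), np.array([2])),
]

total, correct = 0, 0
for outputs, labels in batches:
    total += len(outputs)
    pred = outputs.argmax(axis=1)           # predicted class = index of the largest score
    correct += int((pred == labels).sum())  # like (pred == labels).sum().item()

print(f"Validation Accuracy : {correct / total}")  # 2 of 3 correct
```

Accumulating counts across batches, instead of averaging per-batch accuracies, keeps the result exact even when the last batch is smaller.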

```python
# Validation
model.eval()  # Set model in evaluation mode (e.g., disables Dropout)
total = 0
correct = 0

with torch.no_grad():  # No gradients are computed during validation
    for images, labels in val_dataloader:
        total += len(images)

        # Convert string labels to integer indices
        label_to_index = [index_for_class[label] for label in labels]
        labels = torch.tensor(label_to_index).to(device)

        # Send images to device
        images = images.to(device)

        # Forward pass: the predicted class is the index of the largest logit
        outputs = model(images)
        _, pred = torch.max(outputs, dim=1)
        correct += (pred == labels).sum().item()

print(f"After training epoch {ep}, Validation Accuracy : {correct / total}")
```

4. Sources

- YBIGTA DS materials

- PyTorch tutorial