📌 Spark development environment options

- Local Standalone Spark + Spark Shell

- Python IDE – PyCharm, Visual Studio

- Databricks Cloud – use the Community Edition for free

- Other notebooks – Jupyter Notebook, Google Colab, Anaconda, etc.

📌 Local Standalone Spark

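- Use local[n] as the Spark cluster manager.

  - Set master to local[n].

  - master is the setting that selects the cluster manager.

- Mainly used for development or simple tests.

- Everything runs inside a single JVM.

  - One Driver and one Executor run in it.

  - One or more threads run inside the Executor.

- Number of threads created inside the Executor

  - local: only one thread

  - local[n]: n threads

  - local[*]: as many threads as the machine has (logical) CPU cores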
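The thread-count rules above can be mirrored in a small Python sketch. The helper name executor_threads is hypothetical and is not part of Spark. It handles only the three forms listed here. Spark also accepts variants such as local[N,F], which adds a task-retry count, and this sketch deliberately rejects them.

```python
import os
import re
from typing import Optional


def executor_threads(master: str, cpu_count: Optional[int] = None) -> int:
    """Return the number of executor threads a local-mode master URL requests.

    Hypothetical helper: it only understands "local", "local[N]" and "local[*]".
    """
    # Fall back to the machine's logical CPU count, which is what local[*] uses.
    cores = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    if master == "local":
        return 1  # plain "local" means a single thread
    match = re.fullmatch(r"local\[(\*|\d+)\]", master)
    if match is None:
        # Cluster managers such as "yarn", or forms like "local[4,2]", are out of scope here.
        raise ValueError(f"unsupported master URL: {master!r}")
    value = match.group(1)
    if value == "*":
        return cores  # one thread per logical core
    threads = int(value)
    if threads < 1:
        raise ValueError("thread count must be at least 1")
    return threads


for m in ["local", "local[4]", "local[*]"]:
    print(m, "->", executor_threads(m))
```

These are the same strings you pass to --master in spark-submit or to SparkSession.builder.master(...) in PySpark.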

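📌 Using Spark on Google Colab

- Install PySpark + Py4J

!pip install pyspark==3.3.1 py4j==0.10.9.5

- Spark runs in local mode on the Google Colab virtual server.

- Good enough for development, but it cannot process large data, because everything runs on a single Colab VM.

- The Spark Web UI is not accessible by default.

  - It can be forced open by tunneling it through ngrok.

- Py4J

  - Lets Python use Java objects that live inside the JVM.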
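After the !pip install above has run, you can start a local-mode session from a Colab cell. This is a minimal sketch that assumes PySpark 3.3.1 is installed as shown. The function names local_master and start_local_spark are hypothetical helpers. SparkSession.builder, .master(), .appName() and .getOrCreate() are the regular PySpark API. Calls such as count() on the resulting session are forwarded to the JVM through Py4J.

```python
from typing import Optional


def local_master(threads: Optional[int] = None) -> str:
    """Hypothetical helper: build a local-mode master URL (local[*] uses every core)."""
    if threads is None:
        return "local[*]"
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return f"local[{threads}]"


def start_local_spark(app_name: str = "colab-dev", threads: Optional[int] = None):
    """Hypothetical helper: start (or reuse) a local-mode SparkSession.

    The driver and executor share the single JVM that PySpark launches on the Colab VM.
    """
    # Imported inside the function so local_master() works even without PySpark.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .master(local_master(threads))
        .appName(app_name)
        .getOrCreate()
    )
```

In a notebook cell, run spark = start_local_spark(threads=2). After that, spark.range(100).count() returns 100, and spark.stop() shuts the session down. If a session is already running, getOrCreate() returns it instead of starting a second one, and its master setting stays unchanged.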

📌 Using Spark on Linux

- Install JDK 8/11

sudo apt install openjdk-8-jdk

sudo apt install openjdk-11-jdk

- Switch between JDK 8 and 11

sudo update-alternatives --config java

- Environment variables (startup script): add these lines to ~/.bashrc

nano ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

export PATH=$JAVA_HOME/bin:$PATH

Using two Java versions

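Once this was set up, the environment variables became an obstacle. ~/.bashrc pins JAVA_HOME to JDK 11 and puts its bin directory first on PATH, so switching with update-alternatives alone does not change which java actually runs.

There are several ways to solve this. I'll write two scripts and switch versions by loading one of them with the source command.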

nano ~/use_java11.sh

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

export PATH=$JAVA_HOME/bin:$PATH

echo "Switched to JDK 11"

nano ~/use_java8.sh

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export PATH=$JAVA_HOME/bin:$PATH

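echo "Switched to JDK 8"

Finally, make both scripts executable. Loading them with source only requires read permission, so this step is optional if you never run them directly.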

chmod +x ~/use_java11.sh

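chmod +x ~/use_java8.sh

From now on, switch versions by loading one of the scripts with source. Running it as ./use_java8.sh would not work, because the exports would only affect a child shell that exits immediately.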

source ~/use_java8.sh

source ~/use_java11.sh

Spark Install

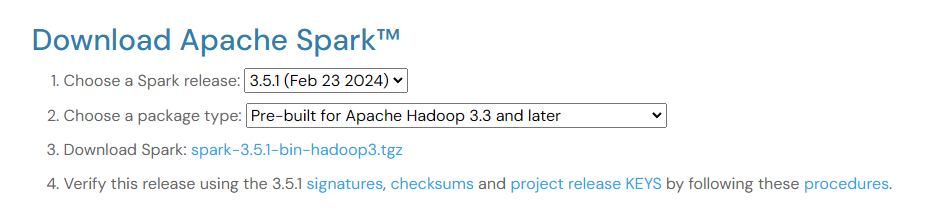

Now let's download Spark.

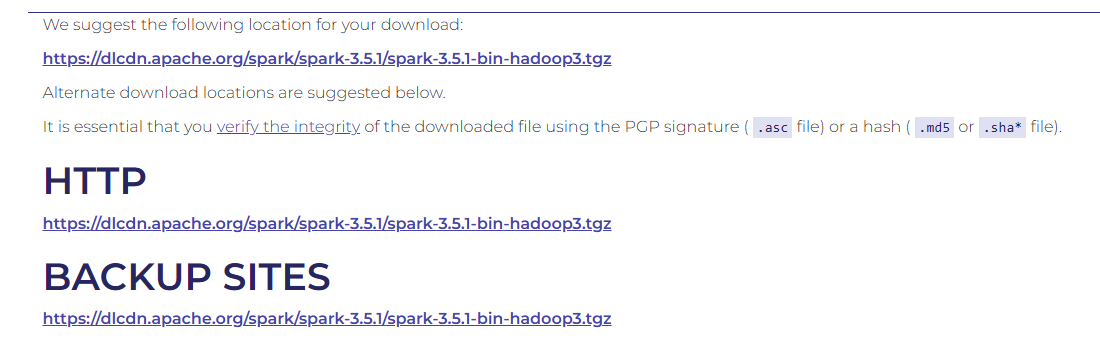

We'll download it from a Linux terminal, so we only need to copy the download link.

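wget https://dlcdn.apache.org/spark/spark-3.5.1/spark-3.5.1-bin-hadoop3.tgz

dlcdn.apache.org only hosts current releases. Once 3.5.1 is superseded, the same file is available under https://archive.apache.org/dist/spark/spark-3.5.1/.

Extract the archive: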

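tar -xvzf spark-3.5.1-bin-hadoop3.tgz

Spark also needs its own environment variables.

Set SPARK_HOME to the current directory plus the extracted Spark folder name (spark-3.5.1-bin-hadoop3). The pwd command prints the current directory.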

nano ~/.bashrc

export SPARK_HOME=/home/urface/spark_course/spark-3.5.1-bin-hadoop3

export PATH=$PATH:$SPARK_HOME/bin

Save the file, then reload it:

source ~/.bashrc

Now let's open the Spark shell.

spark-shell

To exit spark-shell, type:

:q

Py-Spark-Shell

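The spark-shell used above is the Scala shell. This time, let's use Spark from Python. The same download also ships the pyspark shell.

Before that, install Py4J:

pip install py4j

Now pyspark can be started right away:

pyspark

To exit:

exit()

Spark Submit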

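Let's run the example program bundled with Spark that estimates the value of pi. The --master 'local[4]' option runs it in local mode with 4 threads.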

spark-submit --master 'local[4]' ./spark-3.5.1-bin-hadoop3/examples/src/main/python/pi.py
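pi.py estimates π with a Monte Carlo method. It scatters random points over the square [-1, 1] × [-1, 1], counts how many fall inside the unit circle, and multiplies the hit ratio by 4, since the circle covers π/4 of the square. Spark computes the counts per partition and then adds them up with reduce. The sketch below reproduces that idea in plain Python, without Spark, to show what each partition computes. The names count_hits and estimate_pi are hypothetical, and the per-partition seeds are an addition for reproducibility. In the real example, the partition count comes from pi.py's optional first argument (2 by default, as far as I know). local[4] only sets how many threads process those partitions.

```python
import random
from functools import reduce
from operator import add


def count_hits(seed: int, samples: int) -> int:
    """One 'partition': count random points in [-1, 1]^2 that land inside the unit circle."""
    rng = random.Random(seed)  # independent generator per partition (assumption, not in pi.py)
    hits = 0
    for _ in range(samples):
        x = rng.random() * 2 - 1
        y = rng.random() * 2 - 1
        if x * x + y * y <= 1:
            hits += 1
    return hits


def estimate_pi(partitions: int = 2, samples_per_partition: int = 100_000) -> float:
    """Map: count hits per partition. Reduce: add the counts. Then scale the ratio by 4."""
    counts = [count_hits(seed, samples_per_partition) for seed in range(partitions)]
    total_hits = reduce(add, counts)
    return 4.0 * total_hits / (partitions * samples_per_partition)


print(f"Pi is roughly {estimate_pi():f}")
```

Running it prints a line in the same "Pi is roughly ..." format as the Spark example. The exact digits depend on the seeds and the number of samples.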