🔗 Original post

https://jacobgil.github.io/deeplearning/vision-transformer-explainability

1. Background

Several studies published in 2020 began bringing Transformers seriously into computer vision (CV).

The most notable of these are the Vision Transformer (ViT) from < An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale > and the Data-efficient image Transformer (DeiT) from < Training data-efficient image transformers & distillation through attention >.

More work applying Transformers to vision tasks is likely to follow, which raises questions like these:

❓

1. What is going on inside a ViT?

2. How does a ViT work?

3. Can we look inside it and understand each of its components?

All of these questions ask for 'explainability' of deep learning models, which are usually black boxes. 'Explainability' is a broad concept that different people interpret differently, so here we define it as follows:

💡 [ for the developers ]

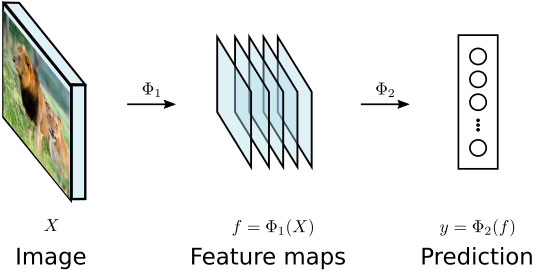

1. What’s going on inside when we run the Transformer on this image? → Activation Visualization

This means being able to look at the intermediate activations. In computer vision these are usually shown as images: each channel's activation can be visualized as a 2D image, which makes it somewhat interpretable.

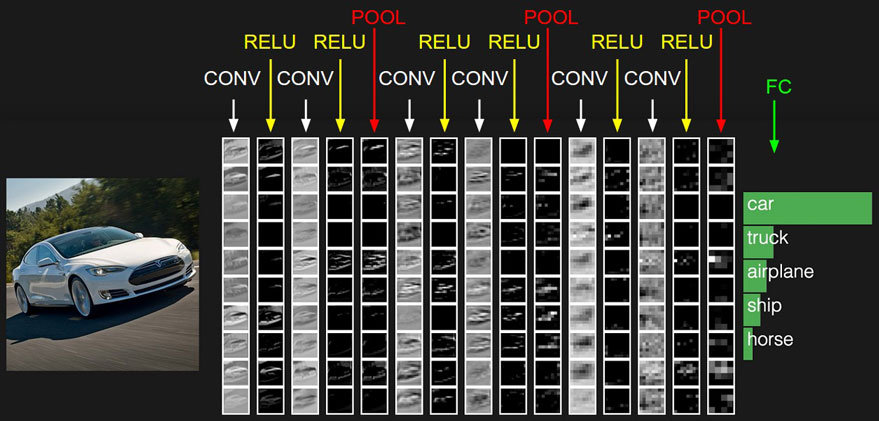

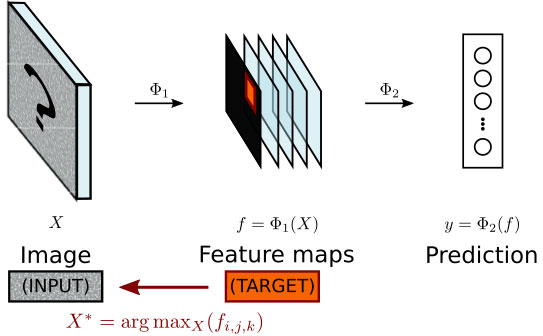

2. What did it learn? → Activation Maximization

This means investigating what kinds of patterns the model has learned. It is usually framed as the question "What input image maximizes the response to this activation?", and can be answered with variants of Activation Maximization.

- Activation Maximization

- Fix one target output of a CNN, then find or generate the input image that activates it the most.

💡 [ for both the developer and the user ]

1. What did it see in this image? → Pixel Attribution

- This answers the question "Which parts of this image influenced the network's prediction?", and is called Pixel Attribution.

ViT needs these kinds of explainability too, so this post walks through implementing them. (The full code is available at https://github.com/jacobgil/vit-explain )

For the basic setup, we use DeiT Tiny, the model recently released by Facebook:

```python
import torch
model = torch.hub.load('facebookresearch/deit:main', 'deit_tiny_patch16_224', pretrained=True)
```

We use 224x224 input images throughout.

- < Background reading on ViT & DeiT >

2. Q, K, V and Attention

A Vision Transformer is made of several encoder blocks. Each block contains:

- Several attention heads: for each patch representation, they fuse in information from the other patches in the image.

- An MLP: transforms each patch representation into a higher-level feature representation.

⇒ Both the attention heads and the MLP have residual connections, and we will see them in action.

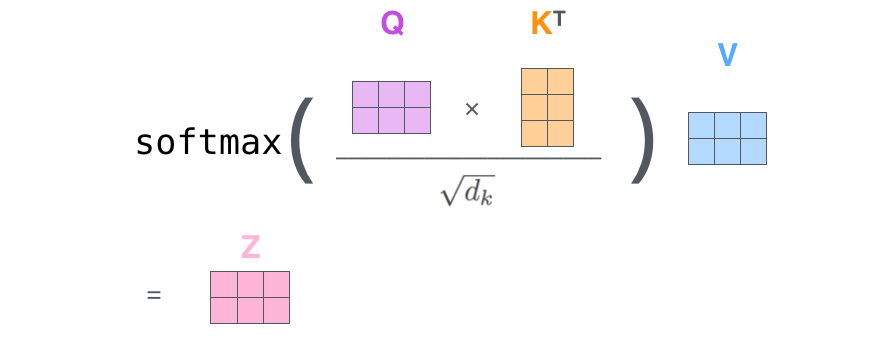

A Vision Transformer takes an image, splits it into tokens (patches), and learns how the patches relate to each other through attention. Inside each attention head, the Query (Q), Key (K) and Value (V) matrices work together to capture these relationships. We use DeiT Tiny as the running example.

Attention heads and the structure of Q, K, V in DeiT Tiny

DeiT Tiny has 3 attention heads in every layer. Inside a layer, the Q, K and V matrices each have shape 3x197x64, which breaks down as follows:

💡

- There are 3 attention heads.

- Each attention head sees 197 tokens: the 196 image patches plus the CLS token.

- Each token has a feature vector of length 64.

So in every attention head, each of the 197 tokens has a 64-dimensional representation, which gives the 3x197x64 shape.
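To see where the 3x197x64 shape comes from, here is a minimal NumPy sketch (NumPy stands in for PyTorch tensors). It assumes DeiT Tiny's embedding size of 192 (3 heads × 64) and a single fused QKV projection, as in common ViT implementations; the random weights are placeholders, not the pretrained model's.

```python
import numpy as np

NUM_TOKENS = 197                  # 196 patch tokens + 1 CLS token
NUM_HEADS = 3                     # DeiT Tiny: 3 heads per layer
HEAD_DIM = 64                     # feature length per head
EMBED_DIM = NUM_HEADS * HEAD_DIM  # 192 (assumed embedding size)

def split_qkv(x, w_qkv):
    """Project tokens with a fused QKV weight and split into per-head Q, K, V.

    x: (NUM_TOKENS, EMBED_DIM) token representations.
    w_qkv: (EMBED_DIM, 3 * EMBED_DIM) placeholder projection weights.
    Returns q, k, v, each of shape (NUM_HEADS, NUM_TOKENS, HEAD_DIM).
    """
    qkv = x @ w_qkv                                        # (197, 576)
    qkv = qkv.reshape(NUM_TOKENS, 3, NUM_HEADS, HEAD_DIM)  # (197, qkv, head, dim)
    qkv = qkv.transpose(1, 2, 0, 3)                        # (qkv, head, 197, 64)
    return qkv[0], qkv[1], qkv[2]

rng = np.random.default_rng(0)
x = rng.standard_normal((NUM_TOKENS, EMBED_DIM))
w_qkv = rng.standard_normal((EMBED_DIM, 3 * EMBED_DIM)) * 0.02
q, k, v = split_qkv(x, w_qkv)
print(q.shape)  # (3, 197, 64)
```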

Token layout: 196 image patches + 1 CLS token

Of the 197 tokens, 196 correspond to the 14x14 = 196 patches of the original image, one token per patch. The first token is the CLS (classification) token. It flows through the Transformer gathering information from the whole image, and at the final layer it is used to make the classification.
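The token arithmetic can be checked with a short sketch. `patch_tokens_to_grid` is a hypothetical helper: it drops the CLS token at index 0 and lays the 196 patch tokens back onto the 14x14 grid, assuming row-major patch order.

```python
import numpy as np

IMAGE_SIZE = 224
PATCH_SIZE = 16
GRID = IMAGE_SIZE // PATCH_SIZE   # 14 patches per side
NUM_PATCHES = GRID * GRID         # 196
NUM_TOKENS = NUM_PATCHES + 1      # 197, including the CLS token

def patch_tokens_to_grid(tokens):
    """tokens: (197, C) -> (14, 14, C), discarding the CLS token at index 0."""
    assert tokens.shape[0] == NUM_TOKENS
    return tokens[1:].reshape(GRID, GRID, -1)

tokens = np.arange(NUM_TOKENS * 2, dtype=float).reshape(NUM_TOKENS, 2)
grid = patch_tokens_to_grid(tokens)
print(GRID, NUM_PATCHES, NUM_TOKENS, grid.shape)  # 14 196 197 (14, 14, 2)
```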

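Next, let's look at what the rows of Q and K mean.

The role of Q and K: how relationships between image locations are found

Each row of the Query (Q) and Key (K) matrices is a 64-dimensional feature vector tied to one patch location. From these, the Transformer computes similarities between patches and decides which patches should pull more information from which other patches.

We can understand the roles of Q, K and V as follows: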

💡

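- Suppose the patch at location $i$ has the query feature vector $q_i$.

- The attention mechanism measures the similarity between $q_i$ and the key vectors $k_j$ of the other patches.

⇒ The more similar the key vector $k_j$ at location $j$ is to the query vector $q_i$, the more information flows from location $j$ to location $i$. This lets the model capture how patches relate to each other and emphasize where attention should go.

As a result, patches with similar Q and K become strongly connected, and the Transformer uses this to learn which locations matter and how patches in the image are related.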
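The Q/K interaction above is scaled dot-product attention. Below is a minimal single-head NumPy sketch, assuming the standard $1/\sqrt{d}$ scaling; `weights[i, j]` is how much token $j$ sends to token $i$, and each row sums to 1 after the softmax. The inputs are random placeholders.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    """Single-head scaled dot-product attention.

    q, k, v: (tokens, d). Returns (output, weights), where
    weights[i, j] is how much token j contributes to token i.
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)        # similarity of every query to every key
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((197, 64)) for _ in range(3))
out, attn = attention(q, k, v)
print(out.shape, attn.shape)  # (197, 64) (197, 197)
```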

image from http://jalammar.github.io/illustrated-transformer

3. Visual Examples of K and Q - different patterns of information flowing

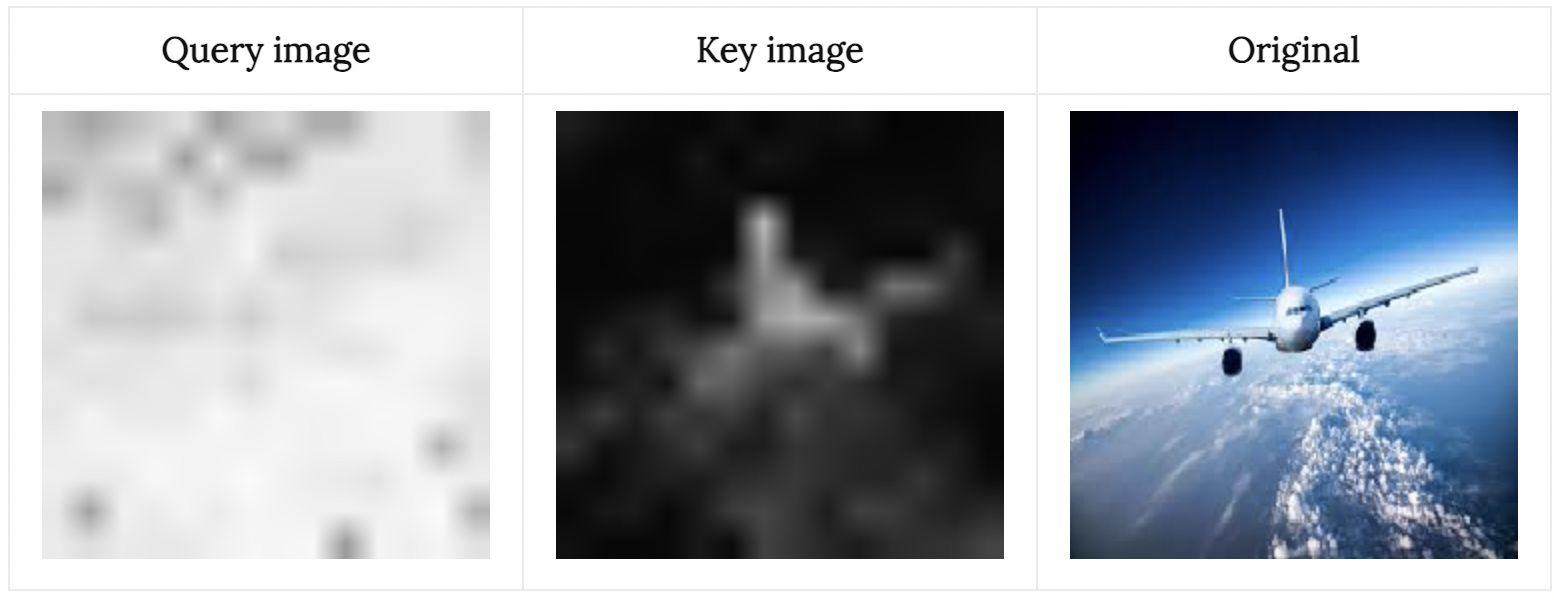

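When we feed an image of a plane into the Vision Transformer, it passes through the Transformer layers, which extract a variety of features. Query (Q) and Key (K) play a central role in this; here we use the plane image to look at how they behave.

In ViT, each row of Q and K is the feature vector at a particular location in the image.

We use the following notation:

- $q_{ic}$: the value of channel $c$ of the query feature vector at location $i$

- $k_{jc}$: the value of channel $c$ of the key feature vector at location $j$

Here $i$ and $j$ are patch locations in the image, and $c$ is a channel.

The key question is how the K vector at each location passes information to the Q vector at another location. This is decided by their dot product, $q_i \cdot k_j = \sum_c q_{ic} k_{jc}$: ViT measures the similarity between Q and K vectors with this dot product, and information flows according to that similarity.

For a single channel $c$, there are two cases.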

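- Q and K have the same sign in that channel

If the query value $q_{ic}$ at location $i$ and the key value $k_{jc}$ at location $j$ are both positive or both negative, their product is positive.

→ This channel then promotes information flow from location $j$ to location $i$.

- Q and K have opposite signs in that channel

If $q_{ic}$ is positive and $k_{jc}$ is negative, or vice versa, their product is negative.

→ This channel then suppresses information flow from location $j$ to location $i$, weakening the connection between the two patches.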
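Because the raw score for the pair $(i, j)$ is $\sum_c q_{ic} k_{jc}$, the contribution of each channel can be inspected directly. A toy sketch with made-up values; `channel_contributions` is a hypothetical helper name.

```python
import numpy as np

def channel_contributions(q, k, i, j):
    """Per-channel terms q[i, c] * k[j, c]; their sum is the raw score q_i . k_j."""
    return q[i] * k[j]

# Toy 2-token, 3-channel example (values are illustrative only).
q = np.array([[1.0, -2.0, 0.5],
              [0.0, 1.0, 1.0]])
k = np.array([[0.0, 0.0, 0.0],
              [2.0, 1.0, -1.0]])

terms = channel_contributions(q, k, i=0, j=1)
print(terms.tolist())  # [2.0, -2.0, -0.5]: channel 0 agrees in sign, channels 1 and 2 do not
print(terms.sum())     # -0.5, the same as q[0] @ k[1]
```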

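To visualize this, we can pass each channel image through a torch.nn.Sigmoid() layer. The sigmoid maps positive values above 0.5 and negative values below 0.5, so bright pixels are positive and dark pixels are negative. This makes it easy to see, for a given channel, where information can flow.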
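A sketch of the sigmoid visualization step, assuming one head's queries or keys are available as a (197, 64) array (random placeholders here; channel 26 is picked only to echo the example below). `channel_map` is a hypothetical helper: it drops the CLS token, takes one channel, reshapes it onto the 14x14 grid and applies a sigmoid, so pixels above 0.5 (bright) came from positive values.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_map(features, channel, grid=14):
    """features: (197, C) queries or keys of one head.

    Returns a (grid, grid) image for one channel: the CLS token is dropped
    and values are squashed by a sigmoid, so 0.5 marks the sign boundary.
    """
    patch_values = features[1:, channel]   # skip the CLS token
    return sigmoid(patch_values.reshape(grid, grid))

rng = np.random.default_rng(0)
keys = rng.standard_normal((197, 64))
img = channel_map(keys, channel=26)
print(img.shape)  # (14, 14)
```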

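Two main patterns

Looking at the Q and K visualizations across channels, two patterns show up:

- One-way flow: information flows in a single direction, from one region to specific locations.

- Two-way flow: different regions exchange information. The main regions of the image are linked, and information flows between them in both directions.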

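Pattern 1 - Information flows in one direction

Layer 8, channel 26, first attention head:

- Key image

- Highlights the plane, meaning those patches carry information about the plane.

- Query image

- Highlights the whole image.

Here most of the query image is positive, while in the key image only the plane is positive, so information flows only from the plane to (almost) every location. Put into words, Q and K are saying:

"We found the plane, and we want every location in the image to know about it."

In this way ViT locates the plane through specific patches and broadcasts that information across the image, so the other patches also become aware of the plane.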

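Pattern 2 - Information flows in two directions

Layer 11, channel 59, first attention head:

- Query image

- Mainly highlights the bottom part of the plane, meaning that region wants to receive information.

- Key image

- Is negative on the top part of the plane. Those locations spread information to other parts of the image.

Here information flows in two directions.

- From the top part of the plane to the rest of the image: the top of the plane is negative in the key image, so its information flows to the locations where the query is negative, which cover most of the image.

"We found the plane. Let's tell the rest of the image about it."

- From the non-plane parts of the image to the bottom of the plane: the surroundings are positive in the key image, so their information flows into the bottom of the plane, where the query is positive.

"Let's tell the plane about what's around it."

Through this two-way flow, ViT learns not only about the top and bottom of the plane but also how the plane relates to its surroundings, capturing how the main object and the background are connected.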

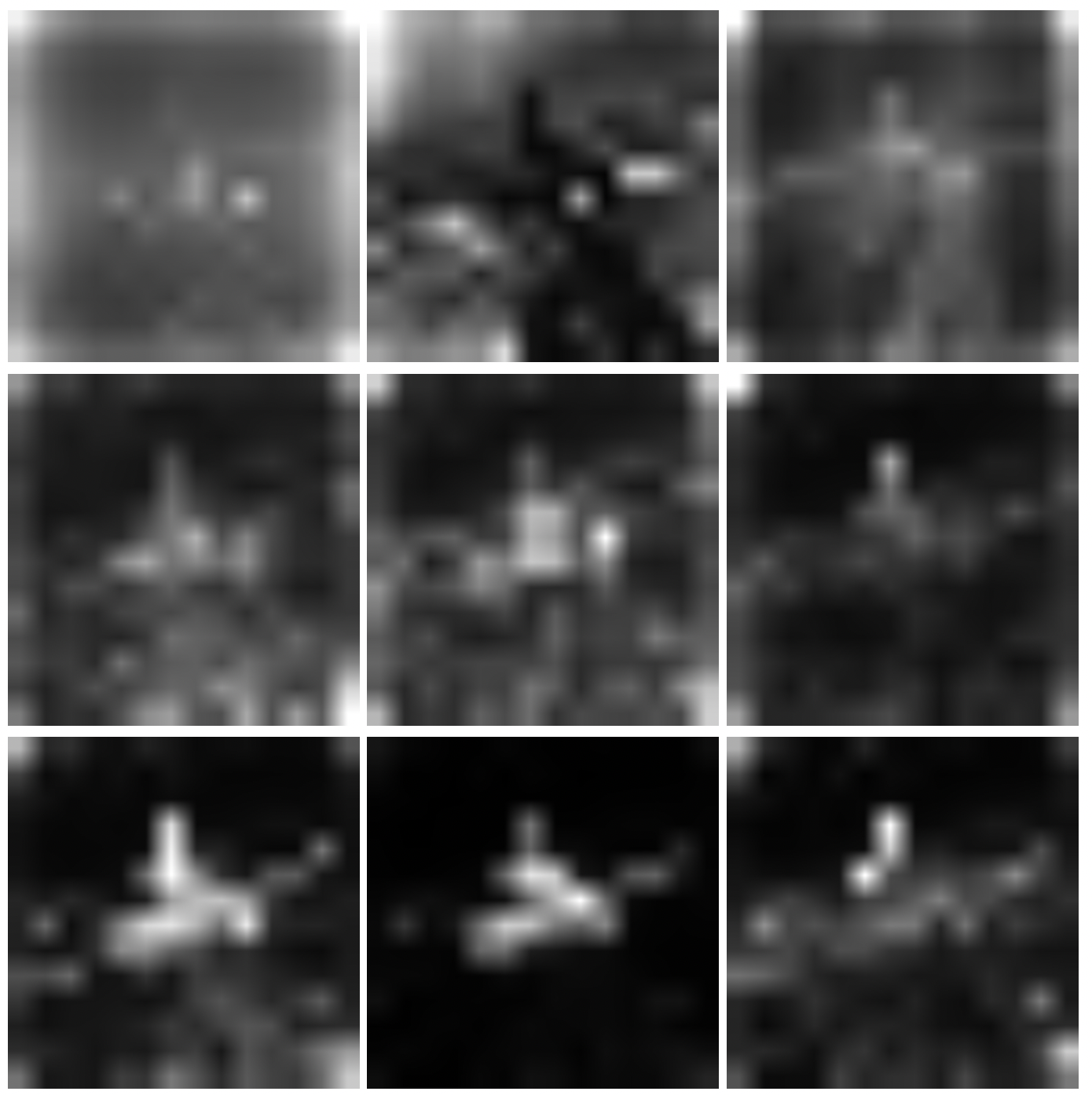

4. How do the Attention Activations look like for the class token throughout the network?

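An important property of ViT is that the class token gathers information from the patches as it passes through the layers. The class token drives the final prediction, so understanding how each patch feeds information into it helps explain how the model works.

There are several attention heads, but for simplicity we look at the class token's attention in the first head. Each attention matrix $A$ (after the softmax) has shape 197x197, and its first row shows how much the class token receives from every location. Dropping the first value (the class token attending to itself), the remaining 196 values can be reshaped into a 14x14 image that shows how information flows into the class token.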
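A sketch of pulling out the class-token row, assuming one layer's post-softmax attention is available as an array of shape (heads, 197, 197); in PyTorch it would typically be captured with a forward hook, which is not shown. `cls_attention_map` is a hypothetical helper, and the attention below is random placeholder data.

```python
import numpy as np

def cls_attention_map(attn, head=0, grid=14):
    """attn: (heads, 197, 197) post-softmax attention of one layer.

    Row 0 belongs to the CLS token; entries 1..196 say how much each image
    patch contributes to it. They are reshaped onto the 14x14 patch grid.
    """
    return attn[head, 0, 1:].reshape(grid, grid)

rng = np.random.default_rng(0)
logits = rng.standard_normal((3, 197, 197))
attn = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
m = cls_attention_map(attn, head=0)
print(m.shape)  # (14, 14)
```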

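How the class token attention activations evolve

The image above shows how the class attention activations change across the layers of the network.

In the early layers the map is blurry over the whole image, but layer by layer the model separates the plane more and more clearly. Around layer 7, the plane stands out clearly from the background.

In later layers, however, parts of the plane repeatedly disappear and then reappear. This is possible because ViT has residual connections.

The role of residual connections

Thanks to residual connections, information lost in one layer can be recovered from earlier layers. For example, if part of the plane disappears from the attention map at one layer, it can reappear at a later layer through the residual path.

Residual connections help ViT keep important patterns stable: as the network gets deeper, important information is carried forward instead of being lost, which likely also helps the final prediction.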

5. Attention Rollout

A way to visualize attention flow through a Transformer

The previous images showed what individual activations look like, but not how attention flows through the whole Transformer from start to end. One technique for quantifying this is Attention Rollout, introduced by Samira Abnar and Willem Zuidema in < Quantifying Attention Flow in Transformers >. It quantifies and visualizes attention flow through a Transformer, and it is also mentioned in the ViT paper, < An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale >.

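How Attention Rollout works

In every Transformer block we get an attention matrix $A_{ij}$ that defines how much attention flows from token $j$ in the previous layer to token $i$ in the next layer. Multiplying the attention matrices of successive layers gives the total attention flow accumulated across all layers, from start to end.

Residual connections and the identity matrix

The Transformer also has residual connections, which carry each layer's input straight through to the next layer. To model them, we add the identity matrix $I$ to each layer's attention matrix, using $A + I$ as the attention matrix that accounts for the residual connection.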

We have multiple attention heads. What do we do about them?

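A Transformer usually has several attention heads, each learning different information. The Attention Rollout paper suggests averaging the heads into a single attention matrix. In some cases, however, it can make sense to take the minimum or the maximum, or to weight the heads differently.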
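A minimal sketch of the fusion options just listed; `fuse_heads` is a hypothetical helper name, and the 2-head, 2-token attention is a toy example.

```python
import numpy as np

def fuse_heads(attn, mode="mean"):
    """Fuse per-head attention of shape (heads, T, T) into one (T, T) matrix."""
    if mode == "mean":
        return attn.mean(axis=0)
    if mode == "max":
        return attn.max(axis=0)
    if mode == "min":
        return attn.min(axis=0)
    raise ValueError(f"unknown fusion mode: {mode}")

attn = np.array([[[0.25, 0.75], [0.5, 0.5]],
                 [[0.75, 0.25], [0.0, 1.0]]])
print(fuse_heads(attn, "mean").tolist())  # [[0.5, 0.5], [0.25, 0.75]]
print(fuse_heads(attn, "max").tolist())   # [[0.75, 0.75], [0.5, 1.0]]
```

After min or max fusion the rows no longer sum to 1, which is one reason to re-normalize the rows during rollout.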

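Computing Attention Rollout

Finally, the Attention Rollout matrix at layer $l$ is computed recursively, starting from $\tilde{A}^{(0)} = I$:

$$\tilde{A}^{(l)} = \left(A^{(l)} + I\right)\tilde{A}^{(l-1)}$$

We also normalize the rows so that the total attention flow stays 1. This lets us visualize how information from each token is passed through the Transformer from start to end, and along which paths.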
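A minimal NumPy sketch of this recursion, assuming the per-layer post-softmax attentions are already collected as arrays of shape (heads, T, T) (random placeholders below). It starts from the identity, adds $I$ for the residual connection, normalizes rows, and multiplies layer by layer. `attention_rollout` is a hypothetical name, and the vit-explain repository's own code may differ in details.

```python
import numpy as np

def attention_rollout(attentions, head_fusion="mean"):
    """Attention Rollout over a list of per-layer attentions.

    attentions: list of arrays, each (heads, T, T), post-softmax.
    Computes rollout_l = rownorm(A_l + I) @ rollout_{l-1}, starting from I,
    where A_l is the head-fused attention of layer l.
    """
    num_tokens = attentions[0].shape[-1]
    eye = np.eye(num_tokens)
    result = eye.copy()
    for attn in attentions:
        if head_fusion == "mean":
            fused = attn.mean(axis=0)
        elif head_fusion == "max":
            fused = attn.max(axis=0)
        elif head_fusion == "min":
            fused = attn.min(axis=0)
        else:
            raise ValueError(f"unknown fusion mode: {head_fusion}")
        a = fused + eye                          # model the residual connection
        a = a / a.sum(axis=-1, keepdims=True)    # keep each row's total flow at 1
        result = a @ result                      # accumulate flow across layers
    return result

rng = np.random.default_rng(0)
layers = []
for _ in range(12):
    logits = rng.standard_normal((3, 197, 197))
    layers.append(np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True))
rollout = attention_rollout(layers, head_fusion="max")
cls_mask = rollout[0, 1:].reshape(14, 14)   # flow from each patch into the CLS token
print(rollout.shape, cls_mask.shape)  # (197, 197) (14, 14)
```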

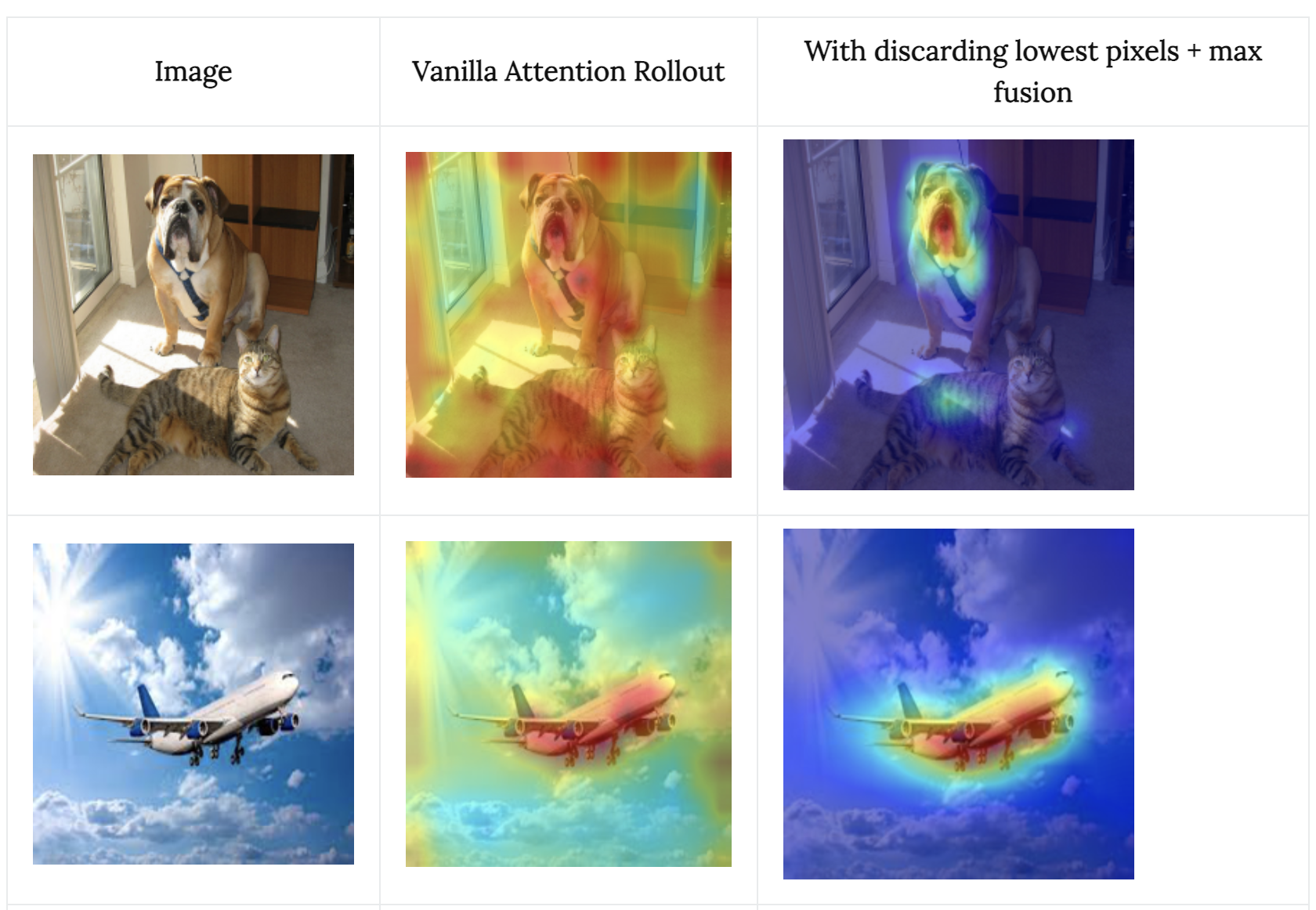

6. Modifications to get Attention Rollout working with Vision Transformers

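When we applied Attention Rollout to the Data-efficient image Transformer (DeiT), the results were not as good as those in the < An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale > paper.

In particular, instead of focusing on the interesting parts of the image, the attention looked noisy everywhere.

We tried various ways to improve this and found two factors that matter.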

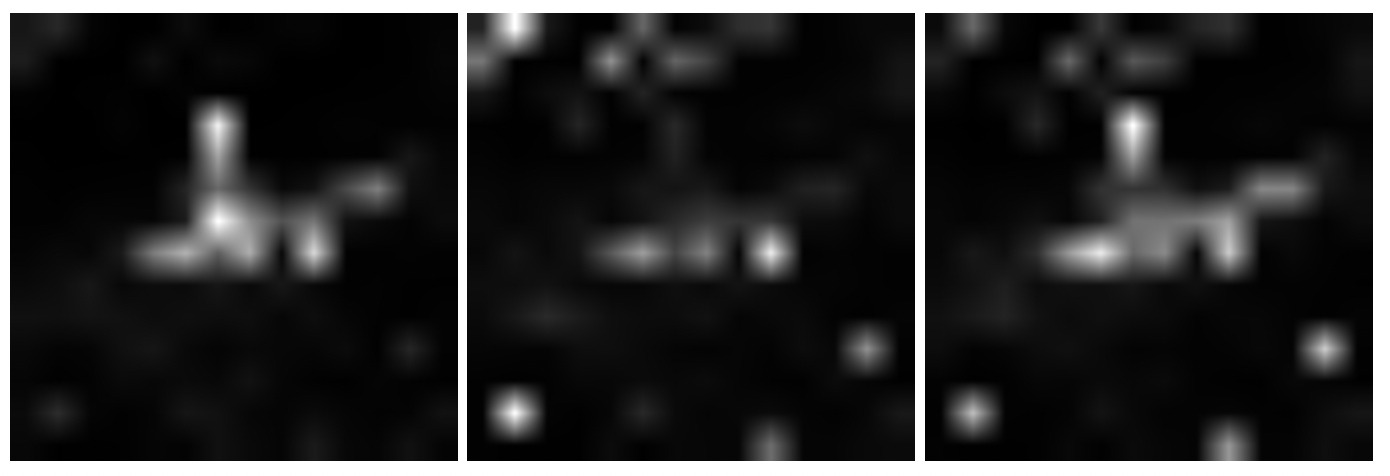

The way we fuse the attention heads matters

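Attention Rollout usually fuses the attention heads by averaging them, so we tried other ways of combining them.

For example, we looked at how the result changes when taking the minimum instead.

- Mean fusion: the heads are averaged. Information from all heads is combined evenly, but noise remains.

- Min fusion: the element-wise minimum over the heads is taken. Only information shared by all heads survives, and the noise is visibly reduced.

Different attention heads attend to different parts of the image; taking the minimum keeps the regions they agree on and reduces noise. That said, the best results came from combining max fusion with discarding low attention values.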

We can focus only on the top attentions, and discard the rest

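Instead of using all attention values, discarding the pixels with the lowest attention had a large effect on the result.

The images below show how the attention map changes as more of the lowest-attention pixels are discarded. The more pixels we discard, the more clearly the main object in the image stands out.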
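A sketch of discarding the lowest attention values in a head-fused (T, T) matrix before it enters the rollout. `discard_lowest` and `discard_ratio` are illustrative names; as an assumption, the sketch never zeroes the flattened entry 0 (the CLS token attending to itself). The vit-explain repository implements this step in its own way, which may differ.

```python
import numpy as np

def discard_lowest(fused, discard_ratio=0.9):
    """Zero out the lowest `discard_ratio` fraction of a (T, T) fused attention.

    Returns a new array; the input is not modified. Flattened index 0
    (CLS -> CLS) is always kept.
    """
    flat = fused.flatten()                    # flatten() returns a copy
    n_discard = int(flat.size * discard_ratio)
    if n_discard > 0:
        lowest = np.argsort(flat)[:n_discard]  # indices of the smallest values
        lowest = lowest[lowest != 0]           # protect the CLS -> CLS entry
        flat[lowest] = 0.0
    return flat.reshape(fused.shape)

print(discard_lowest(np.array([[0.1, 0.4], [0.3, 0.2]]), 0.5).tolist())
# [[0.1, 0.4], [0.3, 0.0]]  (0.1 is among the lowest but sits at index 0)
```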

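Below are the final results with these modifications applied.

- Vanilla Attention Rollout

Noisy, and the important object does not stand out clearly.

- Discarding the lowest pixels + max fusion

With low attention values discarded and the heads fused by their maximum, the main object in the image stands out much more clearly.

Optimizing Attention Rollout thus shows that both how the attention heads are fused and how unimportant pixels are discarded matter a great deal. Together, they give a more accurate visualization of where ViT is paying attention.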

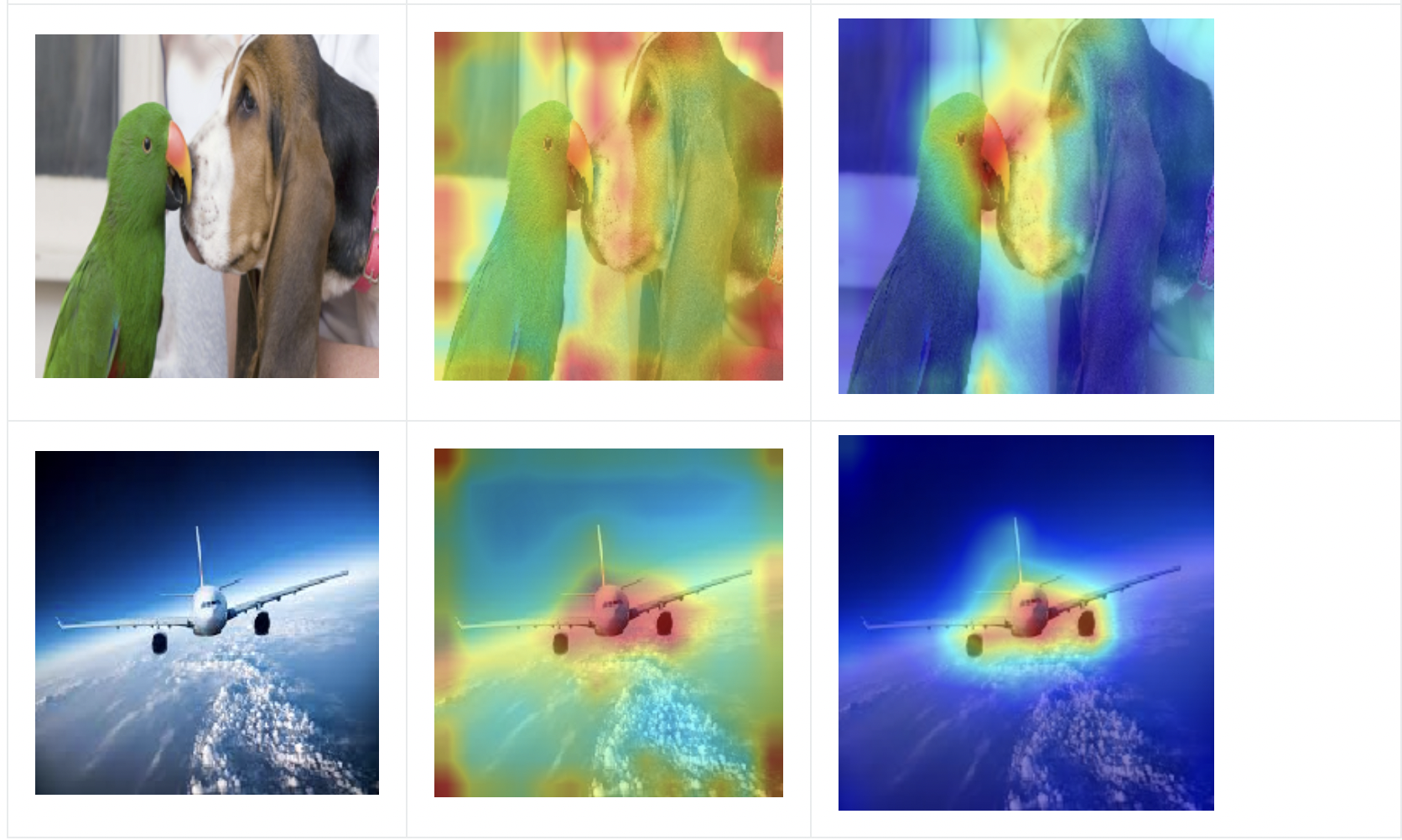

7. Gradient Attention Rollout for Class Specific Explainability

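When we want to understand why the Transformer gives a particular class a high score, we can turn to class-specific explainability. For example, we can ask: "Which parts of the image contribute to a high output score for category 42?"

One way to do this is Gradient Attention Rollout. It still visualizes where each layer's attention heads look, but weights the attention by the gradient of the target class score, so that only attention that contributes to that class is kept. Here it is applied to the attention values after the softmax, but it could also be applied elsewhere.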

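In equation form, for layer $l$ with per-head attention $A^{(l)}_h$ and target class score $y_c$:

$$\bar{A}^{(l)} = \max\!\left(0,\ \operatorname{mean}_h\!\left(\frac{\partial y_c}{\partial A^{(l)}_h} \odot A^{(l)}_h\right)\right)$$

The fused matrices $\bar{A}^{(l)}$ then go through the same rollout recursion, $\tilde{A}^{(l)} = \left(\bar{A}^{(l)} + I\right)\tilde{A}^{(l-1)}$, with row normalization.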
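A NumPy sketch of the gradient-weighted fusion followed by rollout, assuming the per-layer post-softmax attentions and the gradients of the target class score with respect to them (same shapes) have already been captured, for example via hooks in PyTorch (not shown). `grad_rollout` is a hypothetical name, and the inputs below are random placeholders.

```python
import numpy as np

def grad_rollout(attentions, gradients):
    """Class-specific rollout: weight each head's attention by its gradient.

    attentions, gradients: lists of (heads, T, T) arrays, one pair per layer.
    The element-wise product is averaged over heads and negative values are
    clipped to 0, keeping only attention that raises the target class score.
    """
    num_tokens = attentions[0].shape[-1]
    eye = np.eye(num_tokens)
    result = eye.copy()
    for attn, grad in zip(attentions, gradients):
        fused = np.maximum((attn * grad).mean(axis=0), 0.0)
        a = fused + eye                          # residual connection
        a = a / a.sum(axis=-1, keepdims=True)    # row-normalize
        result = a @ result
    return result

rng = np.random.default_rng(0)
attn = rng.random((3, 197, 197))
attn /= attn.sum(axis=-1, keepdims=True)
grad = rng.standard_normal((3, 197, 197))        # placeholder gradients
mask = grad_rollout([attn] * 12, [grad] * 12)[0, 1:].reshape(14, 14)
print(mask.shape)  # (14, 14)
```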

Where does the Transformer see a Dog (category 243) and a Cat (category 282)?

Where does the Transformer see a Musket dog (category 161) and a Parrot (category 87)?

8. What Activation Maximization Tells us

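Activation Maximization looks for the input image that maximally activates a particular part of the network, which gives a clearer picture of which features the model has learned. In other words, it visualizes the input that makes the network respond strongly at a chosen location.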
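To make the idea concrete without a real network, here is a toy sketch: the "activation" is a hypothetical linear unit $a(x) = w \cdot x$, and gradient ascent on the input $x$ with an L2 penalty finds the input that maximizes it. In a real ViT, $x$ would be the 224x224 image and the gradient would come from autograd; the step size and penalty weight are arbitrary choices.

```python
import numpy as np

def activation_maximization(w, steps=500, lr=0.1, l2=0.5):
    """Gradient ascent on x to maximize a(x) = w . x - l2 * ||x||^2.

    The L2 penalty keeps x bounded; the optimum is x* = w / (2 * l2).
    """
    x = np.zeros_like(w)
    for _ in range(steps):
        grad = w - 2 * l2 * x   # gradient of the penalized objective
        x = x + lr * grad       # ascent step
    return x

w = np.array([1.0, -2.0, 0.5])
x_star = activation_maximization(w)
print(np.round(x_star, 3).tolist())  # [1.0, -2.0, 0.5]
```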

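ViT splits the image into 14x14 independent patches. Each patch is 16x16 pixels and is the basic unit the model sees. So when Activation Maximization is applied to ViT, the result is not a continuous image but one made of 14x14 separate patches, as the figure below shows.

The positional embeddings encourage neighboring patches to produce similar outputs: in the image below, adjacent patches have similar features, yet there are subtle discontinuities between them. This shows that although ViT learns relationships between patches through positional embeddings, it still inherits limits from splitting the image into independent patches.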

Future work could explore adding a spatial continuity constraint to reduce these discontinuities between patches and keep the result more naturally connected. This could yield visualizations in which the patches flow smoothly into one another.
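The post does not say which constraint to use. One common choice for such a smoothness prior is a total variation penalty, used here as an assumption: it sums the absolute differences between neighboring pixels, so it is large exactly at sharp jumps such as patch borders. Subtracting it (times a weight) from the activation-maximization objective would discourage those jumps.

```python
import numpy as np

def total_variation(img):
    """Anisotropic total variation: sum of absolute differences between
    vertically and horizontally adjacent pixels. Lower means smoother."""
    dv = np.abs(np.diff(img, axis=0)).sum()
    dh = np.abs(np.diff(img, axis=1)).sum()
    return float(dv + dh)

smooth = np.ones((32, 32))
# A 2x2 checkerboard of 16x16 "patches": sharp jumps only at the patch borders.
blocky = np.kron(np.array([[0.0, 1.0], [1.0, 0.0]]), np.ones((16, 16)))
print(total_variation(smooth), total_variation(blocky))  # 0.0 64.0
```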