Expectation of a R.V.

Def)

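$$E(X)=\begin{cases}\displaystyle\int_{-\infty}^{\infty} x f_X(x)\,dx & \text{: continuous}\\[4pt] \displaystyle\sum_{x} x P_X(x) & \text{: discrete}\end{cases}$$

provided $\int_{-\infty}^{\infty}|x| f_X(x)\,dx<\infty$ (or $\sum_{x}|x| P_X(x)<\infty$)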
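As a small illustration of the discrete branch of the definition, the sketch below computes E(X) for a fair six-sided die. The helper name `expectation` and the die example are assumptions made for illustration only; exact fractions are used so the result is not affected by rounding.

```python
from fractions import Fraction


def expectation(pmf, g=lambda x: x):
    """Return E[g(X)] = sum over x of g(x) * P_X(x) for a finite discrete pmf."""
    return sum(g(x) * p for x, p in pmf.items())


# Hypothetical example: a fair six-sided die, P_X(x) = 1/6 for x = 1..6.
die_pmf = {x: Fraction(1, 6) for x in range(1, 7)}

mean_die = expectation(die_pmf)
print(mean_die)  # 7/2
```

Because the support is finite, the absolute-summability condition holds automatically here.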

Theorem)

If $E(g_1(X))$ and $E(g_2(X))$ exist, then for any constants $k_1$ and $k_2$,

$$E[k_1 g_1(X)+k_2 g_2(X)]=k_1E(g_1(X))+k_2E(g_2(X))$$
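Linearity can be checked numerically on a small discrete distribution. The pmf, the functions `g1` and `g2`, and the constants below are all hypothetical choices for this sketch.

```python
from fractions import Fraction


def expectation(pmf, g):
    """Return E[g(X)] for a finite discrete pmf given as {x: P_X(x)}."""
    return sum(g(x) * p for x, p in pmf.items())


def g1(x):
    return x


def g2(x):
    return x * x


# Hypothetical pmf on {0, 1, 2} and arbitrary constants.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
k1, k2 = 3, 2

# Left side: expectation of the linear combination.
lhs = expectation(pmf, lambda x: k1 * g1(x) + k2 * g2(x))
# Right side: linear combination of the expectations.
rhs = k1 * expectation(pmf, g1) + k2 * expectation(pmf, g2)

print(lhs, rhs)  # 6 6
```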

Some special Expectations

Mean(First Moment)

The mean of X: $\mu=E(X)$

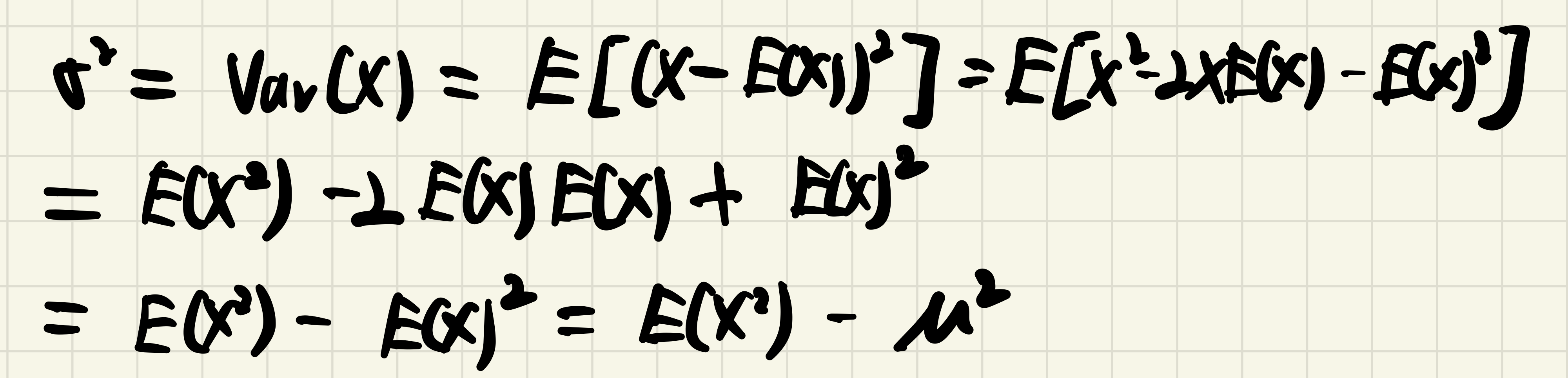

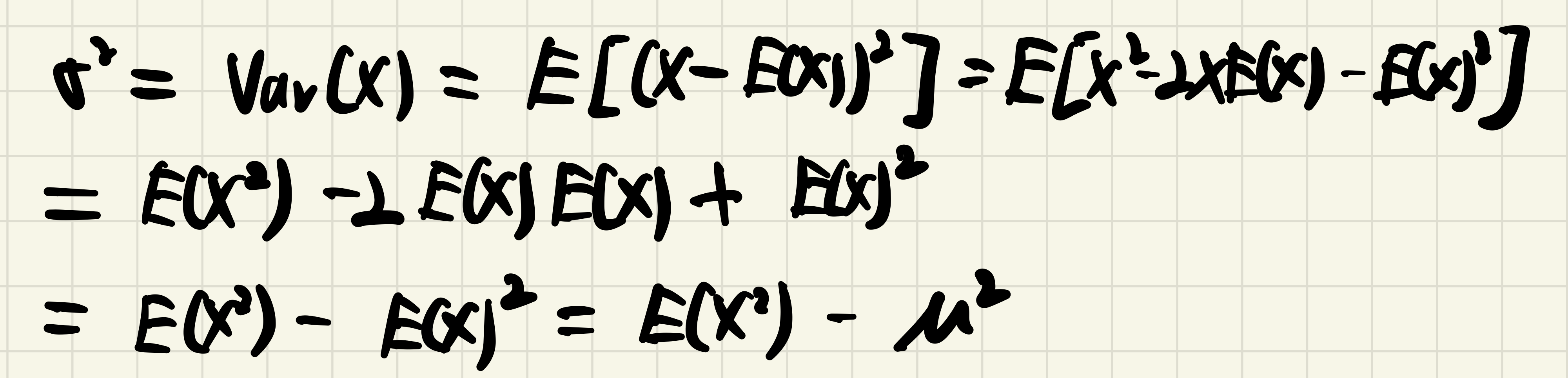

Variance(Second Central Moment)

The variance of X: $\sigma^2=\mathrm{Var}(X)=E[(X-E(X))^2]=E(X^2)-[E(X)]^2$

pf)

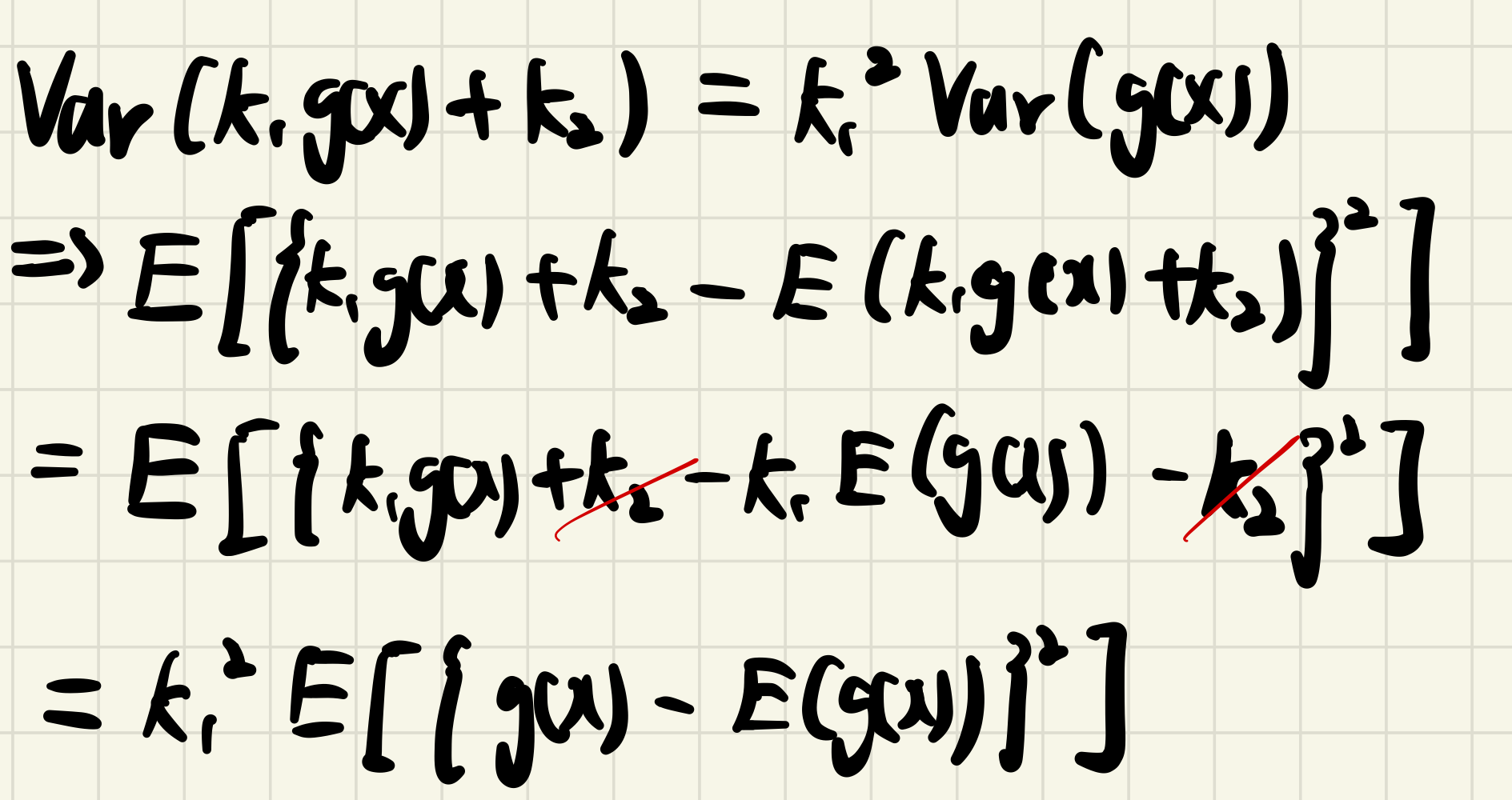
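The two expressions for the variance can be compared directly on a concrete distribution. The sketch below uses a fair die as a hypothetical example; `expectation` is a helper name chosen for illustration.

```python
from fractions import Fraction


def expectation(pmf, g=lambda x: x):
    """Return E[g(X)] for a finite discrete pmf given as {x: P_X(x)}."""
    return sum(g(x) * p for x, p in pmf.items())


# Hypothetical example: a fair six-sided die.
die_pmf = {x: Fraction(1, 6) for x in range(1, 7)}
mu = expectation(die_pmf)

# Definition: E[(X - E(X))^2]
var_definition = expectation(die_pmf, lambda x: (x - mu) ** 2)
# Shortcut: E(X^2) - [E(X)]^2
var_shortcut = expectation(die_pmf, lambda x: x * x) - mu ** 2

print(var_definition, var_shortcut)  # 35/12 35/12
```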

Theorem)

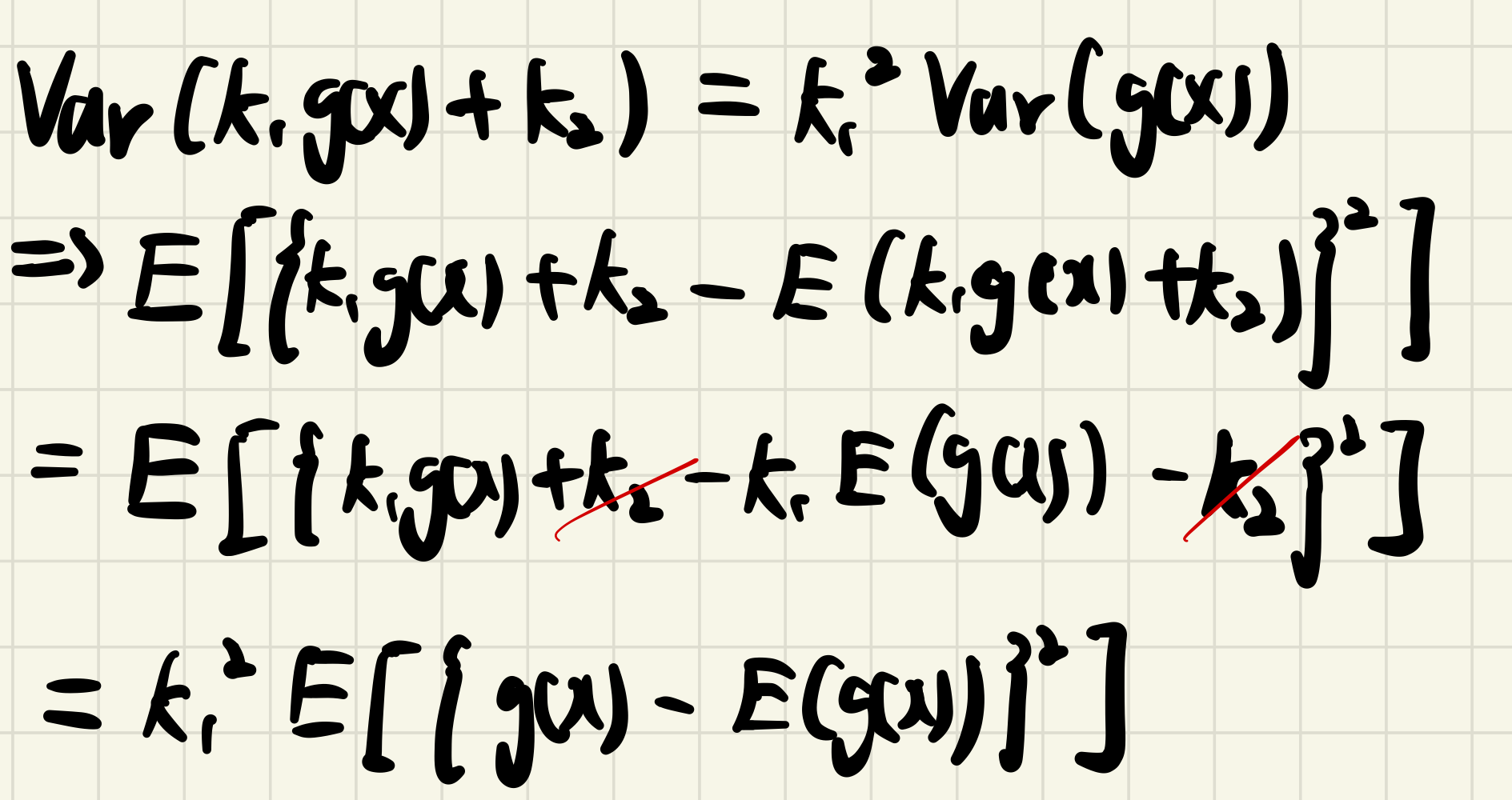

$$\mathrm{Var}(k_1 g(X)+k_2)=k_1^2\,\mathrm{Var}(g(X))$$

pf)
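One standard argument, sketched here from the linearity theorem and the definition of variance. The shorthand $Y=g(X)$ is notation introduced only for this sketch.

```latex
\begin{align*}
E(k_1 Y + k_2) &= k_1 E(Y) + k_2 \\
\mathrm{Var}(k_1 Y + k_2)
  &= E\big[(k_1 Y + k_2 - k_1 E(Y) - k_2)^2\big] \\
  &= E\big[k_1^2 (Y - E(Y))^2\big] \\
  &= k_1^2\, E\big[(Y - E(Y))^2\big] \\
  &= k_1^2\, \mathrm{Var}(Y)
\end{align*}
```

The additive constant $k_2$ cancels in the centering step, which is why it does not appear in the result.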

MGF(Moment Generating Function)

Def)

For a random variable X, if $E(e^{tX})$ exists for $-h<t<h$ (for some $h>0$), then

$$M_X(t)=E(e^{tX})$$

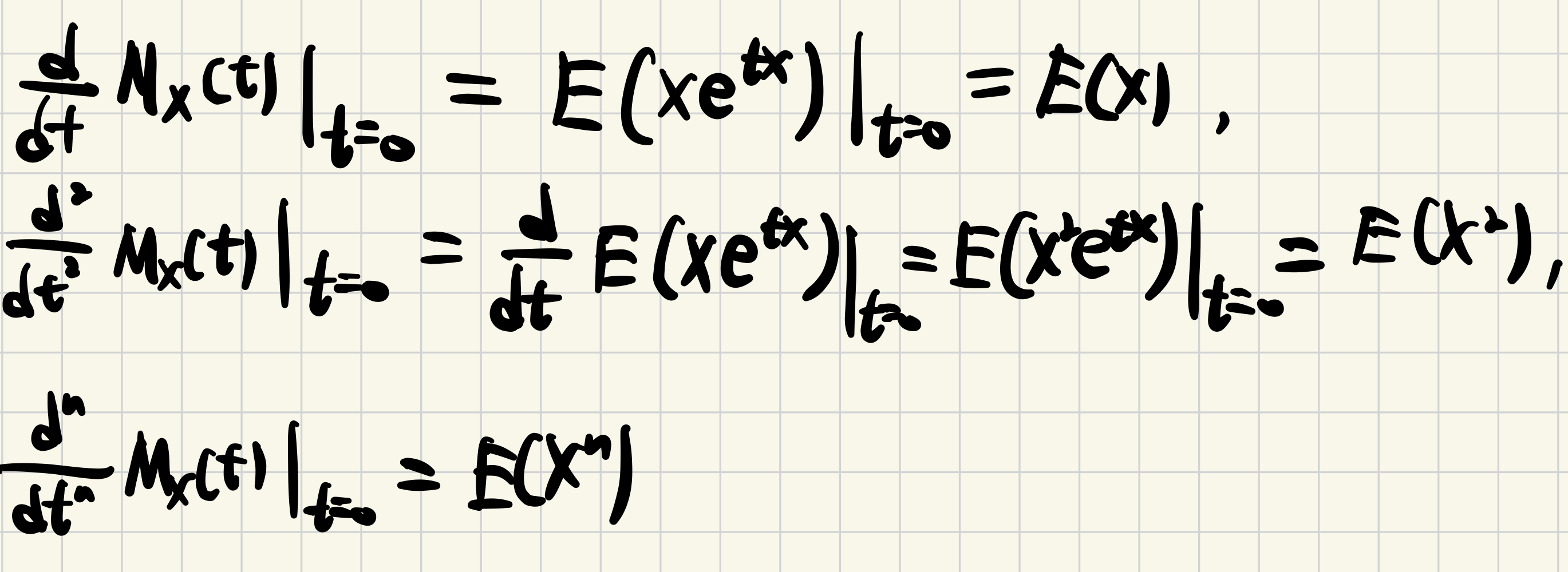

Usage)

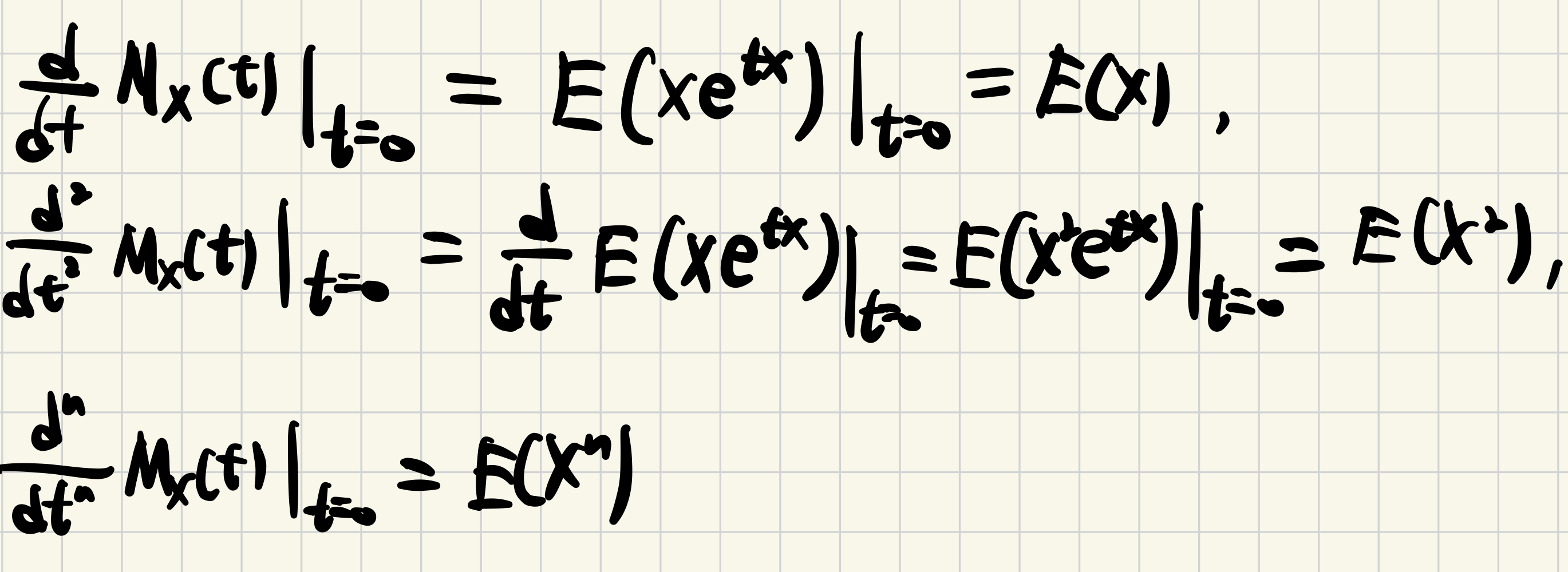

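① Differentiating the mgf n times and evaluating at t=0 generates the n-th moment of X: $M_X^{(n)}(0)=E(X^n)$.

② The mgf of a given distribution is unique: when it exists, it determines the distribution.

=> Used to check whether two random variables have the same distribution.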

✔︎ Note

$E(X^n)$ $(n=1,2,\dots)$: The n-th moment of X

$E((X-\mu)^n)$ $(n=1,2,\dots)$: The n-th central moment of X
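The moment-generating property can be illustrated numerically. The sketch below builds the mgf of a fair die (a hypothetical example) and approximates its first and second derivatives at t=0 with central finite differences. The step size `h` is an arbitrary choice, so the results are approximations rather than exact moments.

```python
import math


def mgf(pmf, t):
    """Return M_X(t) = E(e^{tX}) for a finite discrete pmf given as {x: P_X(x)}."""
    return sum(p * math.exp(t * x) for x, p in pmf.items())


# Hypothetical example: a fair six-sided die.
die_pmf = {x: 1 / 6 for x in range(1, 7)}
h = 1e-3  # finite-difference step (assumed; smaller is not always better)

# Central differences approximating M'(0) and M''(0).
first_moment = (mgf(die_pmf, h) - mgf(die_pmf, -h)) / (2 * h)
second_moment = (mgf(die_pmf, h) - 2 * mgf(die_pmf, 0.0) + mgf(die_pmf, -h)) / h ** 2

print(first_moment)   # close to E(X) = 3.5
print(second_moment)  # close to E(X^2) = 91/6
```

A symbolic algebra package would give the derivatives exactly; finite differences are used here only to keep the sketch dependency-free.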

Multivariate case

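Consider the bivariate case, $X=(X_1,X_2)$.

$$E(g(X_1,X_2))=\begin{cases}\displaystyle\sum_{x_1}\sum_{x_2} g(x_1,x_2)\,P_{X_1,X_2}(x_1,x_2) & \text{: discrete}\\[4pt] \displaystyle\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(x_1,x_2)\,f_{X_1,X_2}(x_1,x_2)\,dx_1\,dx_2 & \text{: continuous}\end{cases}\quad\text{if } E(|g(X_1,X_2)|)<\infty$$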
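For the discrete branch, the double sum is a sum over the support of the joint pmf. The joint pmf below is a made-up example on {0,1}×{0,1}, and `expectation2` is a hypothetical helper name.

```python
from fractions import Fraction


def expectation2(joint_pmf, g):
    """Return E[g(X1, X2)] for a finite joint pmf given as {(x1, x2): probability}."""
    return sum(g(x1, x2) * p for (x1, x2), p in joint_pmf.items())


# Hypothetical joint pmf of (X1, X2); the probabilities sum to 1.
joint_pmf = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

e_product = expectation2(joint_pmf, lambda x1, x2: x1 * x2)
e_sum = expectation2(joint_pmf, lambda x1, x2: x1 + x2)

print(e_product)  # 3/8
print(e_sum)      # 9/8
```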

Theorem)

If $E(g_1(X_1,X_2))$ and $E(g_2(X_1,X_2))$ exist,

$$E[k_1 g_1(X_1,X_2)+k_2 g_2(X_1,X_2)]=k_1E(g_1(X_1,X_2))+k_2E(g_2(X_1,X_2))$$ for any constants $k_1$ and $k_2$

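$$M_X(t)=M_{X_1,\dots,X_n}(t_1,\dots,t_n)=E\left(e^{t^{T}X}\right)=E\left(e^{\sum_{i=1}^{n} t_i X_i}\right)$$

$$E\left(X_1^{m_1}\cdots X_n^{m_n}\right)=\left.\frac{\partial^{\,m_1+\cdots+m_n}}{\partial t_1^{m_1}\cdots\partial t_n^{m_n}}M_X(t)\right|_{t=0}$$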

bivariate case)

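$$M_{X_1,X_2}(t_1,t_2)=E\left(e^{t_1X_1+t_2X_2}\right)$$

$$E\left(X_1^{m_1}X_2^{m_2}\right)=\left.\frac{\partial^{\,m_1+m_2}}{\partial t_1^{m_1}\,\partial t_2^{m_2}}M_{X_1,X_2}(t_1,t_2)\right|_{t_1=t_2=0}$$

$$M_{X_1,X_2}(t_1,0)=E\left(e^{t_1X_1+0\cdot X_2}\right)=E\left(e^{t_1X_1}\right)=M_{X_1}(t_1)$$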
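Both bivariate properties can be checked numerically on a small example. The sketch below assumes a made-up joint pmf on {0,1}×{0,1}: it compares the joint mgf at (t1, 0) with the marginal mgf of X1, then approximates the mixed partial derivative at the origin with a central finite difference to recover E(X1X2). The function names and the step size `h` are illustrative choices.

```python
import math

# Hypothetical joint pmf of (X1, X2); the probabilities sum to 1.
joint_pmf = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.375}


def joint_mgf(t1, t2):
    """Return M_{X1,X2}(t1, t2) = E(exp(t1*X1 + t2*X2))."""
    return sum(p * math.exp(t1 * x1 + t2 * x2) for (x1, x2), p in joint_pmf.items())


# Marginal pmf of X1, obtained by summing the joint pmf over x2.
marginal_x1 = {}
for (x1, _x2), p in joint_pmf.items():
    marginal_x1[x1] = marginal_x1.get(x1, 0.0) + p


def mgf_x1(t1):
    """Return M_{X1}(t1) computed from the marginal pmf of X1."""
    return sum(p * math.exp(t1 * x1) for x1, p in marginal_x1.items())


# Setting t2 = 0 in the joint mgf gives the marginal mgf of X1.
t1 = 0.7
marginal_matches = math.isclose(joint_mgf(t1, 0.0), mgf_x1(t1))

# Central-difference approximation of the mixed partial at (0, 0),
# which should be close to E(X1 * X2) = 0.375 for this pmf.
h = 1e-3
mixed_moment = (
    joint_mgf(h, h) - joint_mgf(h, -h) - joint_mgf(-h, h) + joint_mgf(-h, -h)
) / (4 * h * h)

print(marginal_matches)
print(mixed_moment)
```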