Chapter 7: Training a Panoptic Segmentation Model

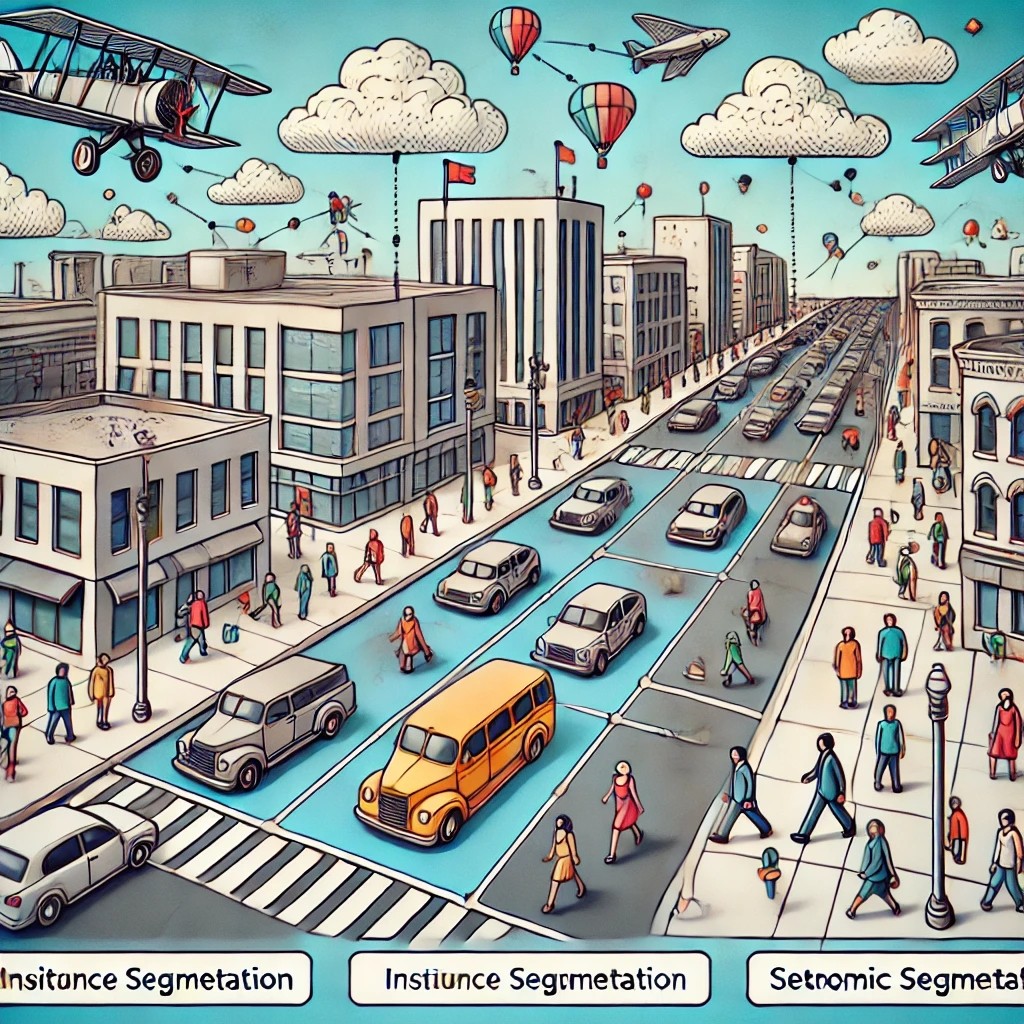

Panoptic segmentation

- Panoptic segmentation = semantic segmentation (assigns a class to every pixel of the whole image) + instance segmentation (separates individual objects, but makes no prediction for the background)

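- Components of panoptic segmentation

  - Things: countable objects in an image that have a well-defined geometric structure, such as people, vehicles, and plants

  - Stuff: regions with no precise geometric structure that are characterized mainly by texture and material (road, sky, other background)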

- Real-world applications

  -> Autonomous driving

  -> Medical imaging

  -> Smart cities

  -> AR/VR

  -> Surveillance and security
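The definition above (semantic + instance, where things are counted and stuff is not) can be made concrete with a small array example. This is a minimal sketch assuming one common encoding in which each pixel stores `class_id * LABEL_DIVISOR + instance_id` and stuff pixels always carry instance 0. `LABEL_DIVISOR`, `merge_to_panoptic`, and `split_panoptic` are illustrative names, not part of a specific library, and COCO's own PNG files use a different, RGB-based encoding.

```python
import numpy as np

# Assumption: fewer than 1000 instances of any single class per image
LABEL_DIVISOR = 1000


def merge_to_panoptic(semantic: np.ndarray, instance: np.ndarray, thing_classes: set) -> np.ndarray:
    """Combine a semantic map (class id per pixel) and an instance map
    (object index per pixel, 0 = no object) into one panoptic map.

    Thing pixels keep their instance index; stuff pixels are forced to
    instance 0 because stuff regions are not counted as separate objects.
    """
    if semantic.shape != instance.shape:
        raise ValueError("semantic and instance maps must have the same shape")
    is_thing = np.isin(semantic, list(thing_classes))
    inst = np.where(is_thing, instance, 0)
    return semantic.astype(np.int64) * LABEL_DIVISOR + inst


def split_panoptic(panoptic: np.ndarray):
    """Recover (class map, instance map) from an encoded panoptic map."""
    return panoptic // LABEL_DIVISOR, panoptic % LABEL_DIVISOR


# Made-up example: class 1 = person (thing), class 2 = sky (stuff)
semantic = np.array([[2, 2], [1, 1]])
instance = np.array([[0, 0], [1, 2]])
print(merge_to_panoptic(semantic, instance, {1}))
```

The two people end up as separate segments (1001 and 1002) while the whole sky region is a single segment (2000), which is exactly the things/stuff distinction.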

Data preparation

- Load the dataset's annotation file

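import json

# Replace 'path/to/' with the location of your COCO annotations
with open('path/to/panoptic_train2017.json', 'r') as t:
    t_data = t.read()
j = json.loads(t_data)

print(j.keys())
print(len(j['images']))
print(j['info'])
print(j['annotations'][0])
print(j['images'][0])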
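Each entry in `j['annotations']` points to a PNG mask (`file_name`) and lists its segments in `segments_info`, each with an `id` and a `category_id`. In the COCO panoptic format a pixel's segment id is stored in its RGB color as `R + 256 * G + 256**2 * B`, with 0 meaning unlabeled. The sketch below decodes that and maps pixels to categories. The helper names are hypothetical, and loading the PNG (for example with Pillow as `np.array(Image.open(path).convert('RGB'))`) is left out so the example runs on a small synthetic array.

```python
import numpy as np


def rgb_to_segment_id(png_rgb: np.ndarray) -> np.ndarray:
    """Decode an (H, W, 3) COCO panoptic PNG into per-pixel segment ids.

    COCO panoptic encodes id = R + 256 * G + 256**2 * B; 0 means void/unlabeled.
    """
    rgb = png_rgb.astype(np.int64)  # avoid uint8 overflow during the arithmetic
    return rgb[..., 0] + 256 * rgb[..., 1] + 256 * 256 * rgb[..., 2]


def category_map(png_rgb: np.ndarray, ann: dict) -> np.ndarray:
    """Map every pixel to the category_id of its segment (0 for void pixels),
    using the segments_info list of one annotation entry."""
    seg_ids = rgb_to_segment_id(png_rgb)
    out = np.zeros_like(seg_ids)
    for seg in ann['segments_info']:
        out[seg_ids == seg['id']] = seg['category_id']
    return out


# Synthetic 1x3 "image": segment 1, segment 256, and one void pixel
png = np.zeros((1, 3, 3), dtype=np.uint8)
png[0, 0] = [1, 0, 0]
png[0, 1] = [0, 1, 0]
ann = {'segments_info': [{'id': 1, 'category_id': 1},
                         {'id': 256, 'category_id': 184}]}  # made-up category ids
print(rgb_to_segment_id(png))
print(category_map(png, ann))
```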

- Process the files by image ID

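# IDs of all images, and IDs of all images that have an annotation
image_id_list = [images_info['id'] for images_info in j['images']]
ann_id_list = [ann_info['image_id'] for ann_info in j['annotations']]
image_id_set = set(image_id_list)
ann_id_set = set(ann_id_list)

# Number of images that have a matching annotation
len(image_id_set.intersection(ann_id_set))

- Split into two sets of 2,000 images each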
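The lecture's split-and-filter code below scans the full `images` and `annotations` lists once for every selected ID. A compact alternative, offered as a sketch and not as the lecture's method, indexes both lists by ID once and shuffles with a seeded `random.Random` so the split is reproducible. `split_ids` and `build_subset` are hypothetical helper names, and the one-annotation-per-image assumption matches the COCO panoptic format.

```python
import random


def split_ids(image_ids, sizes, seed=0):
    """Shuffle a copy of image_ids with a fixed seed and cut it into
    consecutive, non-overlapping chunks of the requested sizes."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    chunks, start = [], 0
    for size in sizes:
        chunks.append(ids[start:start + size])
        start += size
    return chunks


def build_subset(coco, subset_ids):
    """Return a COCO-panoptic-style dict restricted to subset_ids.

    Uses dict lookups (one pass over each list) instead of nested loops.
    Assumes one annotation entry per image, as in COCO panoptic.
    """
    images_by_id = {img['id']: img for img in coco['images']}
    anns_by_id = {ann['image_id']: ann for ann in coco['annotations']}
    # Keep only IDs that have both an image entry and an annotation entry
    keep = [i for i in subset_ids if i in images_by_id and i in anns_by_id]
    return {
        'info': coco['info'],
        'licenses': coco['licenses'],
        'images': [images_by_id[i] for i in keep],
        'annotations': [anns_by_id[i] for i in keep],
        'categories': coco['categories'],
    }
```

With the training JSON loaded as `j`, usage would look like `first_ids, second_ids = split_ids([img['id'] for img in j['images']], [2000, 2000])` followed by `first_json = build_subset(j, first_ids)`, which produces the same kind of dictionary the lecture later writes to `firstset/annotations/`.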

import random
import tqdm

random.shuffle(image_id_list)

first_image_id_list = image_id_list[:2000]
second_image_id_list = image_id_list[2000:4000]

# Collect the image entries of each set
# (each ID matches exactly one image, so stop scanning once it is found)
first_image_list = []
for image_id in tqdm.tqdm(first_image_id_list):
    for image_data in j['images']:
        if image_data['id'] == image_id:
            first_image_list.append(image_data)
            break

second_image_list = []
for image_id in tqdm.tqdm(second_image_id_list):
    for image_data in j['images']:
        if image_data['id'] == image_id:
            second_image_list.append(image_data)
            break

# Collect the annotation entries of each set
# (COCO panoptic stores one annotation entry per image)
first_ann_list = []
for image_id in tqdm.tqdm(first_image_id_list):
    for ann_data in j['annotations']:
        if ann_data['image_id'] == image_id:
            first_ann_list.append(ann_data)
            break

second_ann_list = []
for image_id in tqdm.tqdm(second_image_id_list):
    for ann_data in j['annotations']:
        if ann_data['image_id'] == image_id:
            second_ann_list.append(ann_data)
            break

- Folder structure

import os
import shutil
import json

# Helper that creates a new folder if it does not exist yet
def createDirectory(directory):
    try:
        if not os.path.exists(directory):
            os.makedirs(directory)
    except OSError:
        print("Error: Failed to create the directory.")

data_root = 'e:/data/coco/'
image_path = 'train2017/'
seg_path = 'annotations/panoptic_train2017/'
ann_path = 'annotations/'
copy_path = 'e:/data/coco/firstset/'
ann_file = 'panoptic_train2017.json'

# Create the folder structure of the first set
createDirectory(copy_path)
createDirectory(copy_path + image_path)
createDirectory(copy_path + seg_path)
createDirectory(copy_path + ann_path)

# Annotation file that contains only the first set
json_data = {'info': j['info'],
             'licenses': j['licenses'],
             'images': first_image_list,
             'annotations': first_ann_list,
             'categories': j['categories']}

with open(data_root + 'firstset/' + ann_path + ann_file, 'w') as json_file:
    json.dump(json_data, json_file)

# Copy each image (.jpg) and its panoptic mask (.png) into the first set
for i in tqdm.tqdm(first_ann_list):
    shutil.copyfile(
        data_root + image_path + i['file_name'].replace('png', 'jpg'),
        copy_path + image_path + i['file_name'].replace('png', 'jpg')
    )
    shutil.copyfile(
        data_root + seg_path + i['file_name'],
        copy_path + seg_path + i['file_name']
    )

- Save the second set

import os
import shutil

data_root = 'e:/data/coco/'
image_path = 'train2017/'
seg_path = 'annotations/panoptic_train2017/'
ann_path = 'annotations/'
copy_path = 'e:/data/coco/secondset/'
ann_file = 'panoptic_train2017.json'

# Create the folder structure of the second set
createDirectory(copy_path)
createDirectory(copy_path + image_path)
createDirectory(copy_path + seg_path)
createDirectory(copy_path + ann_path)

# Annotation file that contains only the second set
json_data = {'info': j['info'],
             'licenses': j['licenses'],
             'images': second_image_list,
             'annotations': second_ann_list,
             'categories': j['categories']}

with open(data_root + 'secondset/' + ann_path + ann_file, 'w') as json_file:
    json.dump(json_data, json_file)

# Copy each image (.jpg) and its panoptic mask (.png) into the second set
for i in tqdm.tqdm(second_ann_list):
    shutil.copyfile(
        data_root + image_path + i['file_name'].replace('png', 'jpg'),
        copy_path + image_path + i['file_name'].replace('png', 'jpg')
    )
    shutil.copyfile(
        data_root + seg_path + i['file_name'],
        copy_path + seg_path + i['file_name']
    )

- Save the val sets

with open('e:/data/coco/annotations/panoptic_val2017.json', 'r') as t:
    t_data = t.read()
j = json.loads(t_data)

image_id_list = [images_info['id'] for images_info in j['images']]
ann_id_list = [ann_info['image_id'] for ann_info in j['annotations']]
image_id_set = set(image_id_list)
ann_id_set = set(ann_id_list)

len(image_id_set.intersection(ann_id_set))

import random
import tqdm

# Split val into two sets of 500 images each
random.shuffle(image_id_list)

first_image_id_list = image_id_list[:500]
second_image_id_list = image_id_list[500:1000]

first_image_list = []
for image_id in tqdm.tqdm(first_image_id_list):
    for image_data in j['images']:
        if image_data['id'] == image_id:
            first_image_list.append(image_data)
            break

second_image_list = []
for image_id in tqdm.tqdm(second_image_id_list):
    for image_data in j['images']:
        if image_data['id'] == image_id:
            second_image_list.append(image_data)
            break

first_ann_list = []
for image_id in tqdm.tqdm(first_image_id_list):
    for ann_data in j['annotations']:
        if ann_data['image_id'] == image_id:
            first_ann_list.append(ann_data)
            break

second_ann_list = []
for image_id in tqdm.tqdm(second_image_id_list):
    for ann_data in j['annotations']:
        if ann_data['image_id'] == image_id:
            second_ann_list.append(ann_data)
            break

import os

import shutil
import json

data_root = 'e:/data/coco/'
image_path = 'val2017/'
seg_path = 'annotations/panoptic_val2017/'
ann_path = 'annotations/'
copy_path = 'e:/data/coco/firstset/'
ann_file = 'panoptic_val2017.json'

# First val set goes into the same firstset/ folder as the first train set
createDirectory(copy_path)
createDirectory(copy_path + image_path)
createDirectory(copy_path + seg_path)
createDirectory(copy_path + ann_path)

json_data = {'info': j['info'],
             'licenses': j['licenses'],
             'images': first_image_list,
             'annotations': first_ann_list,
             'categories': j['categories']}

with open(data_root + 'firstset/' + ann_path + ann_file, 'w') as json_file:
    json.dump(json_data, json_file)

for i in tqdm.tqdm(first_ann_list):
    shutil.copyfile(
        data_root + image_path + i['file_name'].replace('png', 'jpg'),
        copy_path + image_path + i['file_name'].replace('png', 'jpg')
    )
    shutil.copyfile(
        data_root + seg_path + i['file_name'],
        copy_path + seg_path + i['file_name']
    )

import os

import shutil

data_root = 'e:/data/coco/'
image_path = 'val2017/'
seg_path = 'annotations/panoptic_val2017/'
ann_path = 'annotations/'
copy_path = 'e:/data/coco/secondset/'
ann_file = 'panoptic_val2017.json'

# Second val set goes into the same secondset/ folder as the second train set
createDirectory(copy_path)
createDirectory(copy_path + image_path)
createDirectory(copy_path + seg_path)
createDirectory(copy_path + ann_path)

json_data = {'info': j['info'],
             'licenses': j['licenses'],
             'images': second_image_list,
             'annotations': second_ann_list,
             'categories': j['categories']}

with open(data_root + 'secondset/' + ann_path + ann_file, 'w') as json_file:
    json.dump(json_data, json_file)

for i in tqdm.tqdm(second_ann_list):
    shutil.copyfile(
        data_root + image_path + i['file_name'].replace('png', 'jpg'),
        copy_path + image_path + i['file_name'].replace('png', 'jpg')
    )
    shutil.copyfile(
        data_root + seg_path + i['file_name'],
        copy_path + seg_path + i['file_name']
    )

Training

- Set up the config file

!mim download mmdet --config panoptic_fpn_r101_fpn_1x_coco --dest ./

from mmengine import Config

cfg = Config.fromfile('./panoptic_fpn_r101_fpn_1x_coco.py')

cfg.data_root = 'e:/data/coco/'

# Point the train/val/test data at the first set
cfg.train_dataloader.dataset.ann_file = 'firstset/annotations/panoptic_train2017.json'
cfg.train_dataloader.dataset.data_root = cfg.data_root
cfg.val_dataloader.dataset.ann_file = 'firstset/annotations/panoptic_val2017.json'
cfg.val_dataloader.dataset.data_root = cfg.data_root
cfg.test_dataloader.dataset = cfg.val_dataloader.dataset

# The panoptic evaluator reads the ground-truth JSON and PNG masks directly
cfg.val_evaluator.ann_file = 'e:/data/coco/firstset/annotations/panoptic_val2017.json'
cfg.val_evaluator.seg_prefix = 'e:/data/coco/firstset/annotations/panoptic_val2017'
cfg.test_evaluator = cfg.val_evaluator

# Scale down the default lr (0.02, tuned for 8 GPUs)
cfg.optim_wrapper.optimizer.lr = 0.02 / 8

# Also log to TensorBoard
cfg.visualizer.vis_backends.append({"type": 'TensorboardVisBackend'})

cfg.train_cfg.max_epochs = 4

with open('./panoptic_firstset_config.py', 'w') as f:
    f.write(cfg.pretty_text)

- Run training

# Checkpoints are written to ./work_dirs/panoptic_firstset_config/ (named after the config file)
!python tools/train.py ./panoptic_firstset_config.py

!python tools/test.py ./panoptic_firstset_config.py ./work_dirs/panoptic_firstset_config/epoch_4.pth
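The config sets `lr = 0.02 / 8`. This matches the linear scaling rule under the assumption that the default schedule was tuned for 8 GPUs with 2 images each (total batch 16) and that this run uses 1 GPU with 2 images (total batch 2): the learning rate is scaled in proportion to the total batch size. `scaled_lr` is a hypothetical helper that just spells out the arithmetic.

```python
def scaled_lr(base_lr: float, base_batch: int, gpus: int, imgs_per_gpu: int) -> float:
    """Linear scaling rule: scale the learning rate by (new total batch / base batch)."""
    total_batch = gpus * imgs_per_gpu
    return base_lr * total_batch / base_batch


# Assumed default: lr 0.02 for 8 GPUs x 2 images = batch 16
print(scaled_lr(0.02, 16, gpus=1, imgs_per_gpu=2))  # same value as 0.02 / 8
print(scaled_lr(0.02, 16, gpus=8, imgs_per_gpu=2))  # unchanged at the base batch size
```

If your GPU count or batch size differs, recompute the value instead of reusing `0.02 / 8`. MMDetection 3.x configs also carry an `auto_scale_lr` setting (toggled by `--auto-scale-lr` in `tools/train.py`) that is meant to do this automatically; check that it exists in your installed version before relying on it.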

- Continue training on the second set

from mmengine import Config

cfg = Config.fromfile('./panoptic_fpn_r101_fpn_1x_coco.py')

- Load the result of the previous training run

cfg.data_root = 'e:/data/coco/'
# Start from the weights of the first-set run (trained with max_epochs = 4)
cfg.load_from = './work_dirs/panoptic_firstset_config/epoch_4.pth'

# Point the train/val/test data at the second set
cfg.train_dataloader.dataset.ann_file = 'secondset/annotations/panoptic_train2017.json'
cfg.train_dataloader.dataset.data_root = cfg.data_root
cfg.val_dataloader.dataset.ann_file = 'secondset/annotations/panoptic_val2017.json'
cfg.val_dataloader.dataset.data_root = cfg.data_root
cfg.test_dataloader.dataset = cfg.val_dataloader.dataset

cfg.val_evaluator.ann_file = 'e:/data/coco/secondset/annotations/panoptic_val2017.json'
cfg.val_evaluator.seg_prefix = 'e:/data/coco/secondset/annotations/panoptic_val2017/'
cfg.test_evaluator = cfg.val_evaluator

cfg.optim_wrapper.optimizer.lr = 0.02 / 8

cfg.visualizer.vis_backends.append({"type": 'TensorboardVisBackend'})

with open('./panoptic_secondset_config.py', 'w') as f:
    f.write(cfg.pretty_text)

# Train with the second-set config (outputs go to ./work_dirs/panoptic_secondset_config/)
!python tools/train.py ./panoptic_secondset_config.py

# max_epochs is not overridden here, so the 1x schedule's default of 12 epochs applies
!python tools/test.py ./panoptic_secondset_config.py ./work_dirs/panoptic_secondset_config/epoch_12.pth
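Both runs hard-code checkpoint names such as `epoch_4.pth` and `epoch_12.pth`, which silently break whenever `max_epochs` changes. A minimal sketch, assuming checkpoints are saved as `epoch_<N>.pth` inside the run's work directory (as in the paths used above), picks the highest-numbered one. `latest_epoch_checkpoint` is a hypothetical helper, not an MMDetection or MMEngine API.

```python
import re
from pathlib import Path
from typing import Optional


def latest_epoch_checkpoint(work_dir: str) -> Optional[str]:
    """Return the path of the epoch_<N>.pth file with the largest N in work_dir,
    or None if there is no such file."""
    best_epoch, best_path = -1, None
    for path in Path(work_dir).glob('epoch_*.pth'):
        match = re.fullmatch(r'epoch_(\d+)\.pth', path.name)
        # Compare epochs numerically: as strings, 'epoch_4' would sort after 'epoch_12'
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best_path = int(match.group(1)), str(path)
    return best_path
```

For example, `cfg.load_from = latest_epoch_checkpoint('./work_dirs/panoptic_firstset_config')` would replace the hard-coded path. Note that `load_from` only initializes the model weights and starts a fresh schedule, which is what fine-tuning on a new subset wants. MMEngine's separate `resume` option would also restore the optimizer state and epoch counter.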

This post was written using excerpts from the lecture materials of Zerobase Data Job School (제로베이스 데이터 취업 스쿨).