Chapter 5 Pose Detection

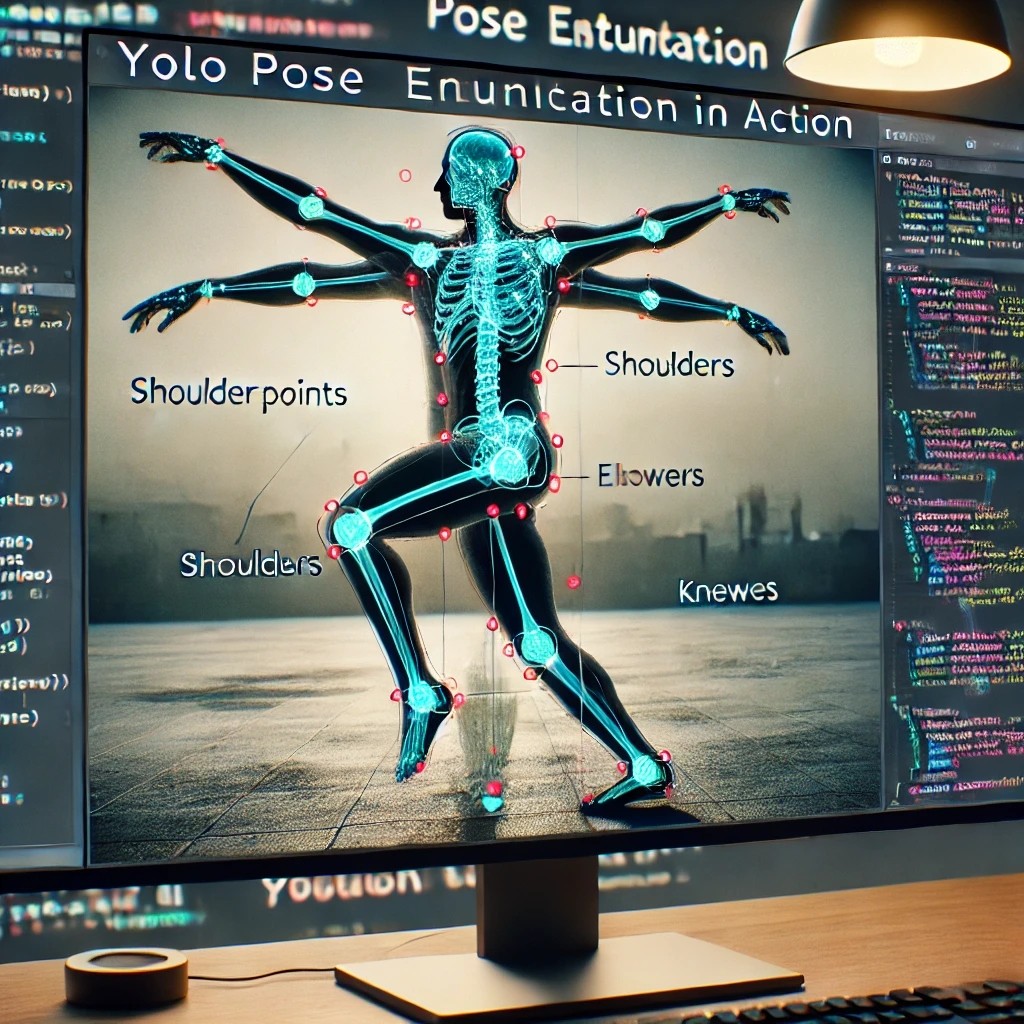

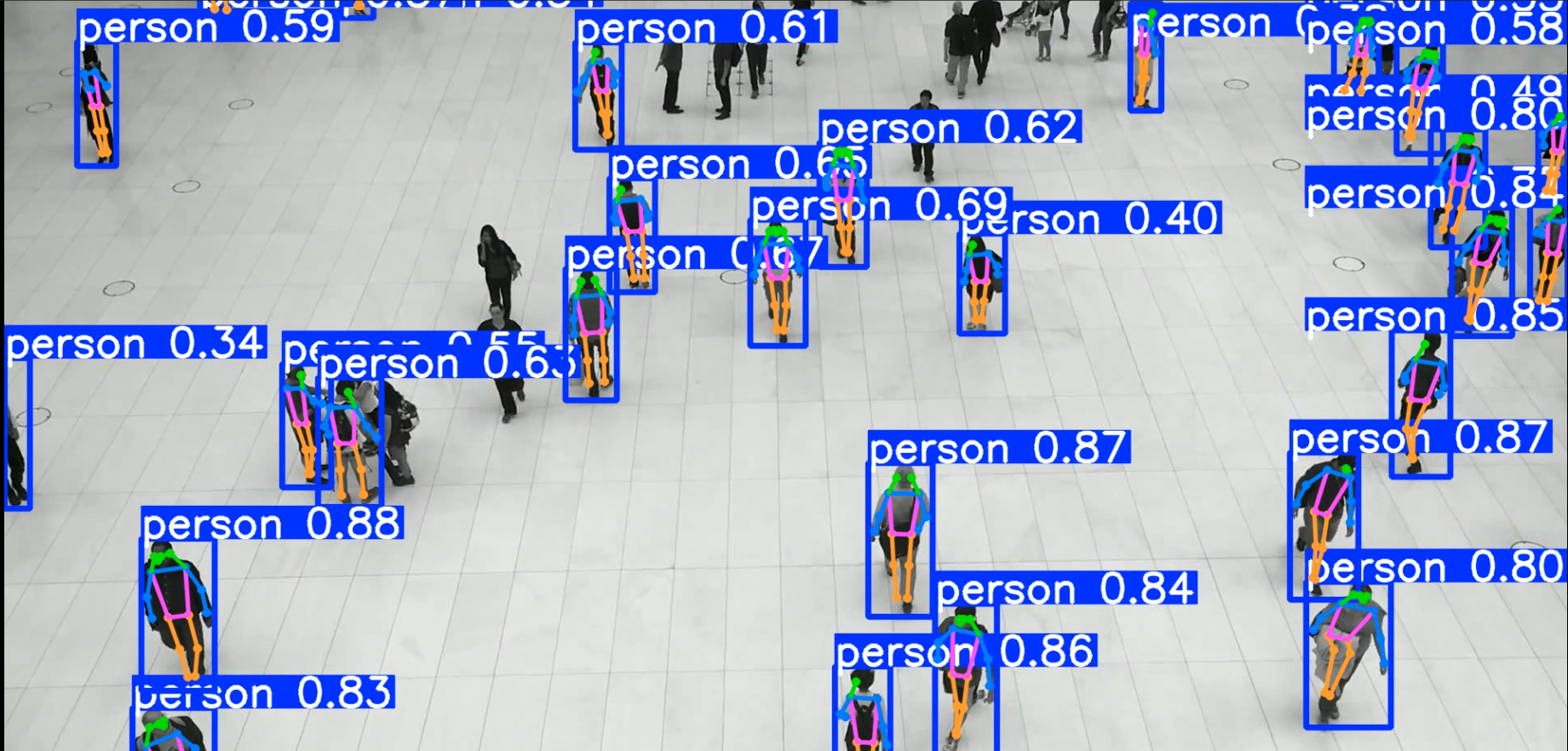

Pose Estimation

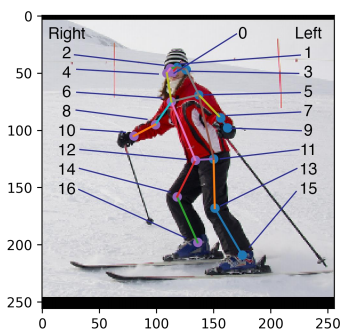

- YOLOv8 uses a total of 17 keypoints to detect body poses.
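As a quick reference for the index pairs used later in this chapter, the sketch below lists the 17 keypoints in COCO order, which is the layout the pretrained `yolov8n-pose.pt` weights follow. The constant `COCO_KEYPOINTS` and the helper `keypoint_index` are names introduced here for illustration only; they are not part of the ultralytics API.

```python
# COCO keypoint order (index -> name) followed by the pretrained YOLOv8 pose models.
# COCO_KEYPOINTS and keypoint_index are illustrative names, not library identifiers.
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def keypoint_index(name):
    """Return the array index of a keypoint name (hypothetical helper)."""
    return COCO_KEYPOINTS.index(name)


print(len(COCO_KEYPOINTS))
print(keypoint_index("left_shoulder"), keypoint_index("right_shoulder"))
```

With this table, a pair such as `(5, 6)` reads as the line from the left shoulder to the right shoulder.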

from ultralytics import YOLO

model = YOLO('yolov8n-pose.pt')

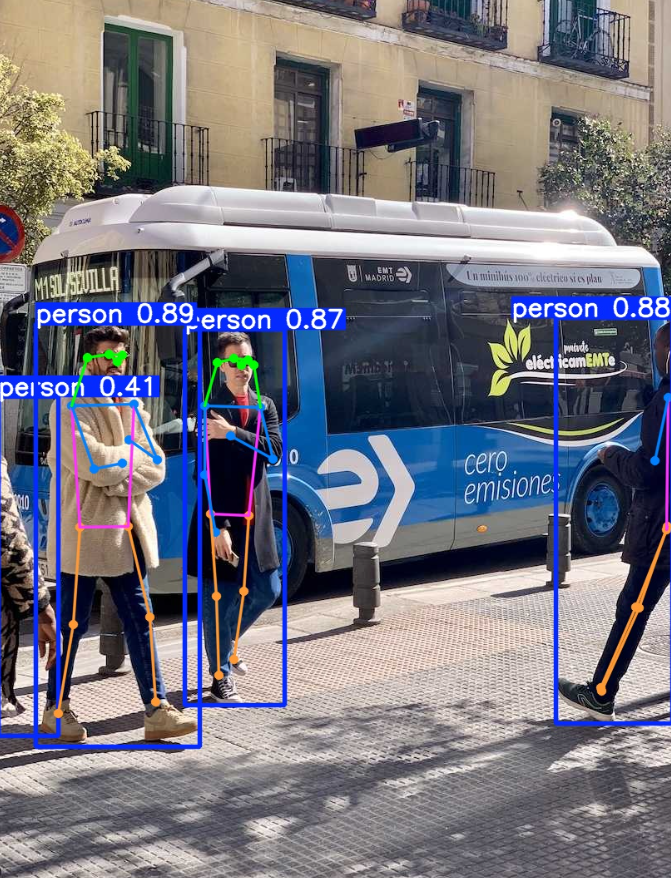

results = model('https://ultralytics.com/images/bus.jpg', save=True)

from ultralytics import YOLO

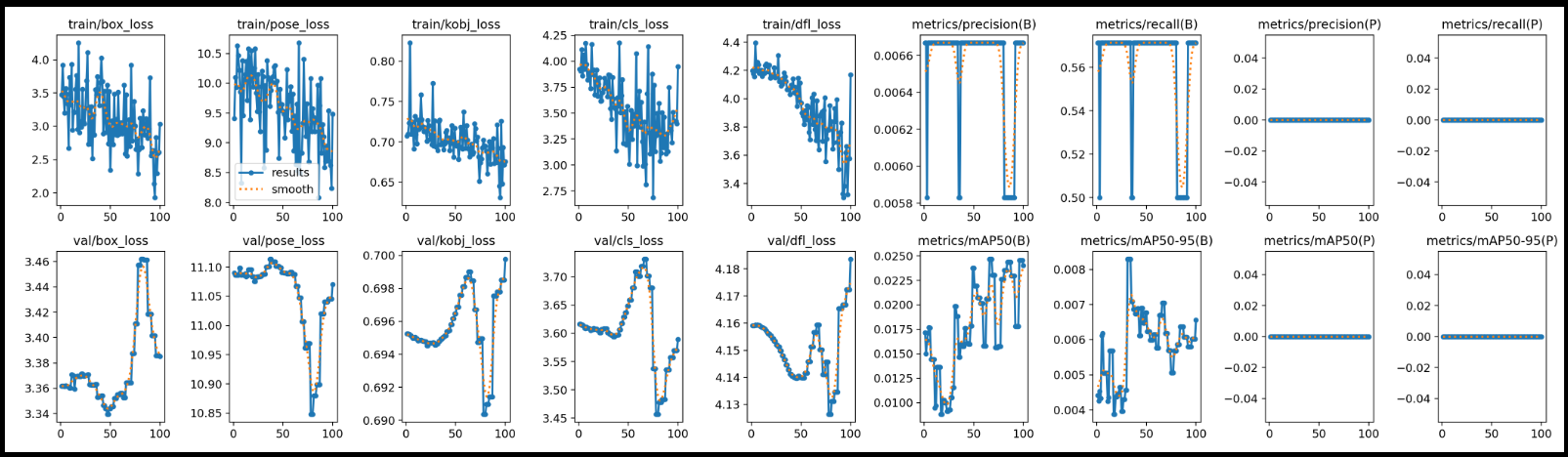

model = YOLO('yolov8n-pose.yaml')

result = model.train(data='coco8-pose.yaml', epochs= 100, imgsz=640, device=0)
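Training on a pose dataset relies on label files that store the keypoints next to each bounding box. The sketch below parses one label line, assuming the YOLO pose layout with three values per keypoint: class, box center x, center y, width, height, then `(x, y, visibility)` for each of the 17 keypoints, all coordinates normalized to the 0-1 range. The function `parse_pose_label` and the sample line are made up for illustration.

```python
def parse_pose_label(line, num_keypoints=17):
    """Parse one line of a YOLO pose label file (hypothetical helper).

    Assumed layout: class cx cy w h, then (x, y, visibility) per keypoint,
    with coordinates normalized to the 0-1 range.
    """
    values = line.split()
    expected = 5 + 3 * num_keypoints
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    class_id = int(values[0])
    box = tuple(float(v) for v in values[1:5])
    flat = values[5:]
    # Group the remaining values into (x, y, visibility) triples
    keypoints = [
        (float(flat[i]), float(flat[i + 1]), int(float(flat[i + 2])))
        for i in range(0, len(flat), 3)
    ]
    return class_id, box, keypoints


# Made-up label line: one person whose keypoints all sit at the image center
sample = "0 0.5 0.5 0.2 0.6 " + " ".join(["0.5 0.5 2"] * 17)
class_id, box, keypoints = parse_pose_label(sample)
print(class_id, box, len(keypoints))
```

A line with 17 keypoints therefore carries 5 + 17 × 3 = 56 values.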

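Run pose estimation on a video file:

    from ultralytics import YOLO

    model = YOLO('yolov8n-pose.pt')

    # Process the video at an input size of 1280 and save the annotated output
    model(source='people_walk.mp4', save=True, imgsz=1280)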

- Preparing the dataset (sample data)

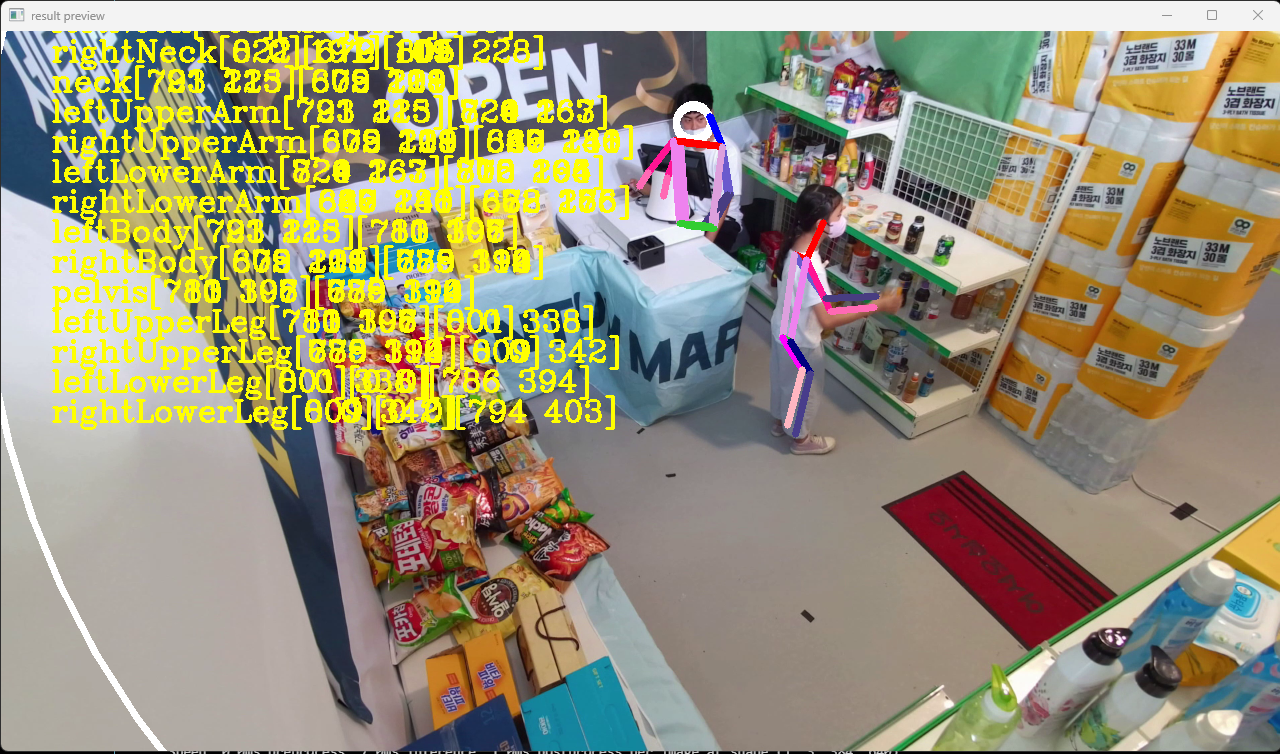

Indoor purchasing-behavior data

    from ultralytics import YOLO
    import cv2
    import math
    import numpy as np

    # Line color (BGR) for each body part
    colorMap = {
        'leftNeck': (255, 0, 0),
        'rightNeck': (0, 0, 255),
        'neck': (0, 0, 255),
        'leftUpperArm': (205, 90, 106),
        'rightUpperArm': (147, 20, 255),
        'leftLowerArm': (129, 61, 72),
        'rightLowerArm': (180, 105, 255),
        'leftBody': (221, 160, 221),
        'rightBody': (238, 130, 238),
        'pelvis': (50, 205, 50),
        'leftUpperLeg': (255, 0, 255),
        'rightUpperLeg': (128, 0, 0),
        'leftLowerLeg': (193, 182, 255),
        'rightLowerLeg': (139, 61, 72),
    }

    # Keypoint index pairs (start, end) that define each body part
    partDict = {
        'leftNeck': (3, 5),
        'rightNeck': (4, 6),
        'neck': (5, 6),
        'leftUpperArm': (5, 7),
        'rightUpperArm': (6, 8),
        'leftLowerArm': (7, 9),
        'rightLowerArm': (8, 10),
        'leftBody': (5, 11),
        'rightBody': (6, 12),
        'pelvis': (11, 12),
        'leftUpperLeg': (11, 13),
        'rightUpperLeg': (12, 14),
        'leftLowerLeg': (13, 15),
        'rightLowerLeg': (14, 16),
    }


    def distance(x1, y1, x2, y2):
        # Euclidean distance between two points, truncated to an integer
        result = math.sqrt(math.pow(x1 - x2, 2) + math.pow(y1 - y2, 2))
        return int(result)


    def drawLine(frame, name, org, dst):
        # Skip the part if either end is (0, 0), i.e. the keypoint was not detected
        if not org.any() or not dst.any():
            return frame
        # Pass the points to OpenCV as tuples of plain Python ints
        frame = cv2.line(frame, tuple(map(int, org)), tuple(map(int, dst)), colorMap[name], 5)
        return frame


    model = YOLO('yolov8n-pose.pt')
    cap = cv2.VideoCapture('sample.mp4')

    while True:
        ret, frame = cap.read()
        if not ret:
            print('Video frame is empty or video processing has been successfully completed')
            break

        frame = cv2.resize(frame, (1280, 720))
        results = model(frame, stream=True)

        for r in results:
            keypoints = r.keypoints
            for ks in keypoints:
                # Move the tensor to the CPU and convert it to an integer NumPy array
                k = np.array(ks.xy[0].cpu(), dtype=int)
                try:
                    # Head: circle centered on keypoint 0, radius = distance from keypoint 0 to keypoint 3
                    cv2.circle(
                        frame,
                        (int(k[0][0]), int(k[0][1])),
                        int(np.linalg.norm(k[0] - k[3])),
                        (255, 255, 255),
                        5
                    )
                    for idx, (name, (org, dst)) in enumerate(partDict.items()):
                        # Print each part's coordinates; (idx + 1) keeps the first row inside the frame
                        frame = cv2.putText(
                            frame,
                            name + str(k[org]) + str(k[dst]),
                            (50, (idx + 1) * 30),
                            cv2.FONT_HERSHEY_COMPLEX,
                            1,
                            (0, 255, 255),
                            2
                        )
                        frame = drawLine(frame, name, k[org], k[dst])
                except Exception as e:
                    print(e)

        cv2.imshow('result preview', frame)
        # Press 'q' to stop the preview
        if cv2.waitKey(1) == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()
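The skeleton logic in the script can be tried without a video or a model. The sketch below works on plain `(x, y)` tuples: it walks a subset of `partDict`, skips any part whose endpoint is `(0, 0)` (the same "not detected" convention `drawLine` relies on), and reports each remaining part's length in pixels. The names `PARTS`, `is_missing`, and `limb_segments`, as well as the sample coordinates, are made up for illustration.

```python
import math

# Subset of the part definitions from partDict: name -> (start index, end index)
PARTS = {
    "neck": (5, 6),
    "leftUpperArm": (5, 7),
    "leftLowerArm": (7, 9),
}


def is_missing(point):
    """Treat (0, 0) as 'not detected', the convention drawLine relies on."""
    return point[0] == 0 and point[1] == 0


def limb_segments(keypoints, parts=PARTS):
    """Return {name: (start, end, length_px)} for parts whose two ends were detected."""
    segments = {}
    for name, (org, dst) in parts.items():
        a, b = keypoints[org], keypoints[dst]
        if is_missing(a) or is_missing(b):
            continue
        length = int(math.hypot(a[0] - b[0], a[1] - b[1]))
        segments[name] = (a, b, length)
    return segments


# Made-up keypoints for one person: 17 (x, y) pairs, most left undetected
person = [(0, 0)] * 17
person[5] = (100, 200)  # left shoulder
person[6] = (160, 200)  # right shoulder
person[7] = (100, 280)  # left elbow; the left wrist (index 9) stays missing

print(limb_segments(person))
```

Here `leftLowerArm` is dropped because the wrist is missing, so only `neck` and `leftUpperArm` would be drawn.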

This post was written from excerpts of the lecture materials of the Zerobase Data Job School (제로베이스 데이터 취업 스쿨).