Chapter 5 (Classification: Wine Data)

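- Wine data: a dataset commonly used as an example for classification problems

- It comes as two separate datasets, one for red wine and one for white wine

- When combining the two, we need a column that records which dataset each row came from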

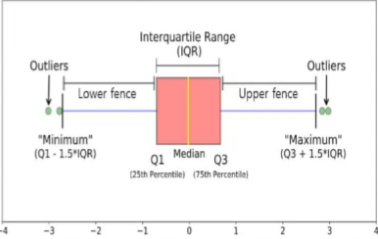

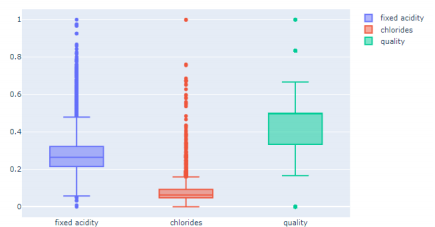

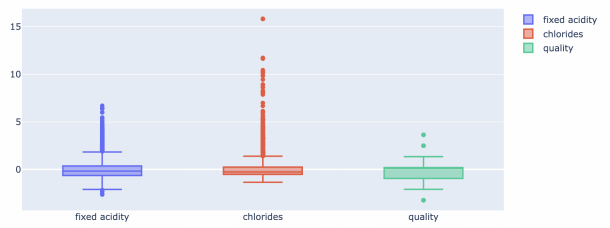

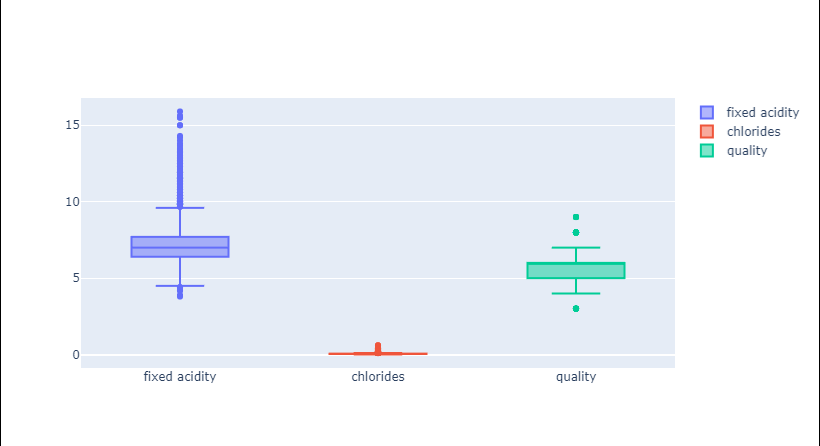

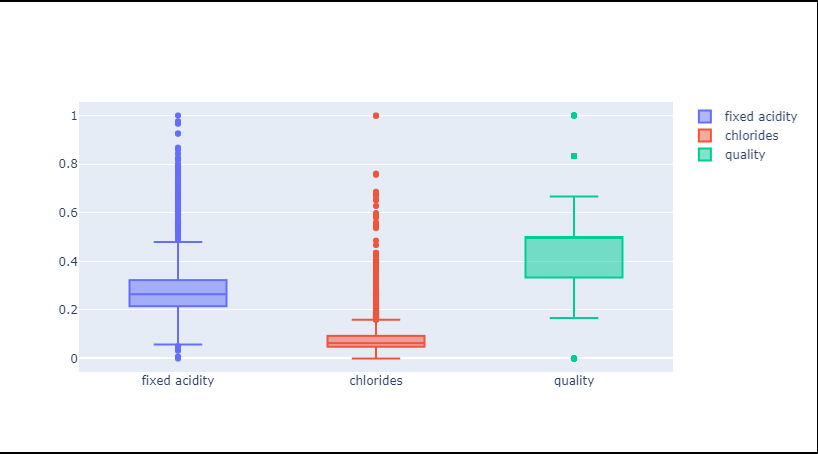

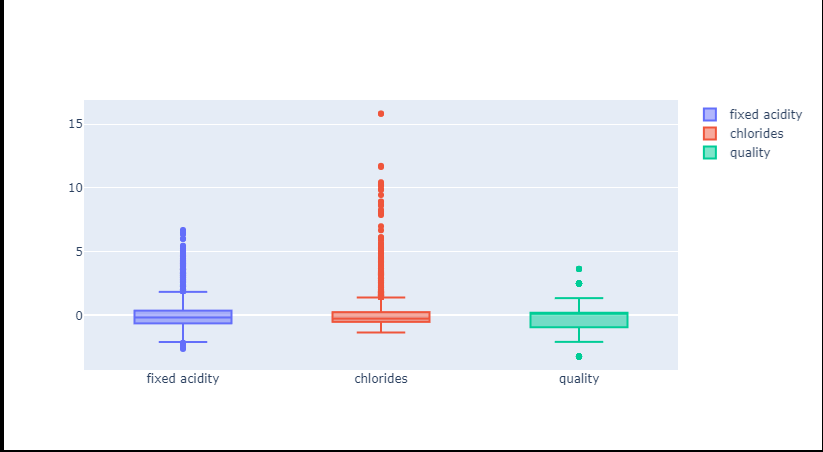

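- When the wine data columns are drawn as boxplots, each column turns out to have a different min/max range and a different mean and variance

- Features on such different scales can get in the way of finding the best model, especially for scale-sensitive models such as distance- or gradient-based ones

- In that case, rescale the features with MinMaxScaler or StandardScaler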

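MinMaxScaler, StandardScaler

- MinMaxScaler rescales each feature into the range 0 to 1

- StandardScaler rescales each feature to a mean of 0 and a standard deviation of 1

- For image data, MinMaxScaler tends to be the better choice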
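Both scalers apply a simple per-column formula: MinMaxScaler computes (x - min) / (max - min), and StandardScaler computes (x - mean) / std, where std is the population standard deviation (ddof=0). A small sketch on a made-up single column (the values are hypothetical, not from the wine data) checks the hand-written formulas against scikit-learn:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical single column with a wide range (scikit-learn expects 2-D input)
x = np.array([[10.0], [50.0], [120.0], [200.0]])

# MinMaxScaler: (x - min) / (max - min) maps the column onto [0, 1]
manual_mms = (x - x.min(axis=0)) / (x.max(axis=0) - x.min(axis=0))
sk_mms = MinMaxScaler().fit_transform(x)

# StandardScaler: (x - mean) / std, with the population std (ddof=0)
manual_ss = (x - x.mean(axis=0)) / x.std(axis=0)
sk_ss = StandardScaler().fit_transform(x)

print(np.allclose(manual_mms, sk_mms))  # True
print(np.allclose(manual_ss, sk_ss))    # True
print(sk_ss.mean(), sk_ss.std())        # approximately 0 and 1
```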

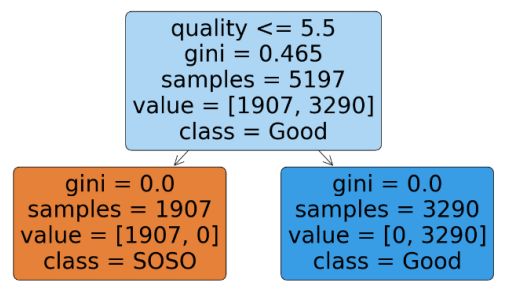

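Classifying wine taste as a binary problem

- Binarize the quality score (grades 3 to 9 in this data) into tasty / not tasty

- If "tasty" is defined as a grade above 5 and 'quality' is left among the features, accuracy comes out at 100%, because the label is computed directly from a feature the model can see

-> If you feed the model information it should not have, the accuracy is useless no matter how high it is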
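This is target leakage: when the label is derived from a feature, a tree only needs one split on that feature to be "perfect". A minimal sketch with synthetic data (the random grades and the noise column are made up for illustration) reproduces the effect:

```python
import numpy as np
import pandas as pd
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
n = 500
# Hypothetical data: integer quality grades 3..9 plus one irrelevant noise column
df = pd.DataFrame({
    "quality": rng.integers(3, 10, size=n),
    "noise": rng.normal(size=n),
})
# Label built from quality the same way as in the notes: tasty if grade > 5
y = (df["quality"] > 5).astype(int)

# Leaky model: the column the label was computed from is among the features
leaky = DecisionTreeClassifier(max_depth=1, random_state=13).fit(df, y)
print(leaky.score(df, y))        # 1.0
print(leaky.tree_.threshold[0])  # 5.5, i.e. the split reproduces "grade > 5"

# Honest model: only the noise column is available
honest = DecisionTreeClassifier(max_depth=1, random_state=13).fit(df[["noise"]], y)
print(honest.score(df[["noise"]], y))
```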

Hands-on practice

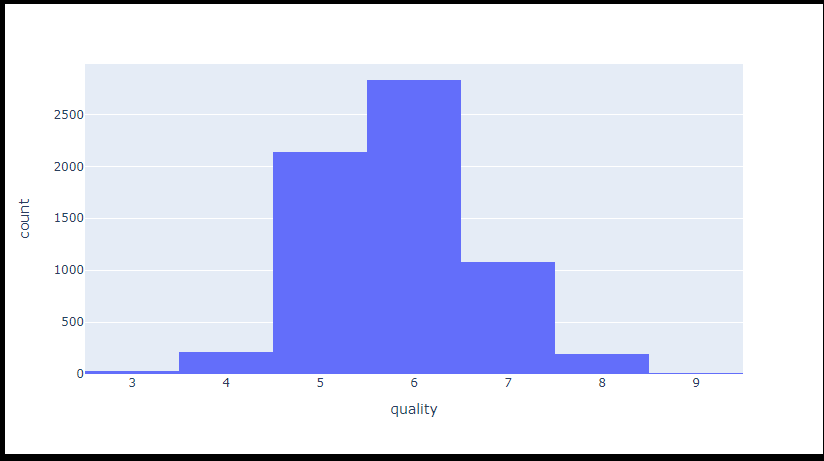

import pandas as pd

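red_url = 'git address'  # placeholder for the Git URL of the red wine CSV (not given in the original)

white_url = 'git address'  # placeholder for the Git URL of the white wine CSV (not given in the original)

red_wine = pd.read_csv(red_url, sep = ';')  # the CSV files are semicolon-separated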

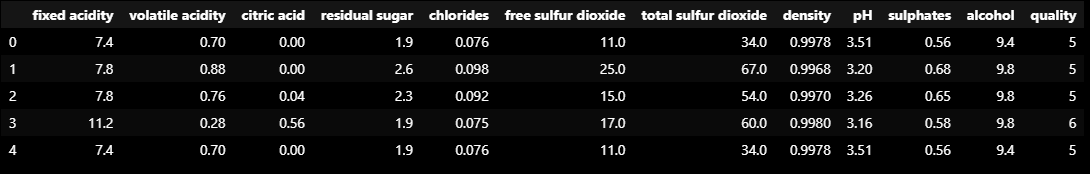

white_wine = pd.read_csv(white_url, sep = ";")

red_wine.head()

white_wine.head()

white_wine.columns

Index(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',

'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',

'pH', 'sulphates', 'alcohol', 'quality'],

dtype='object')

red_wine['color'] = 1  # 1 = red wine

white_wine['color'] = 0  # 0 = white wine

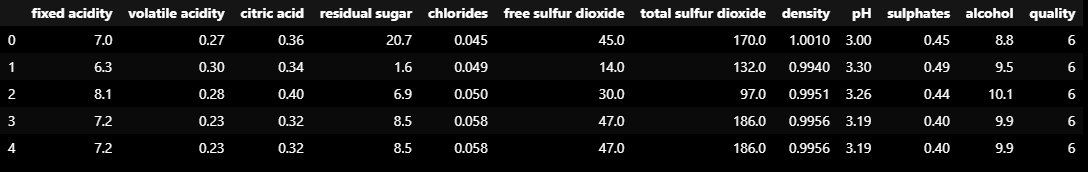

wine = pd.concat([red_wine, white_wine])
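Because pd.concat keeps each frame's original row labels, the combined wine frame ends up with duplicate index values (both source frames start at 0). That is not a problem for the column-wise steps in this chapter, but if label-based row lookups are needed later, ignore_index=True builds a fresh index. A small sketch with hypothetical two-row stand-ins for red_wine and white_wine:

```python
import pandas as pd

# Hypothetical two-row stand-ins for red_wine / white_wine
red = pd.DataFrame({"alcohol": [9.4, 9.8]})
white = pd.DataFrame({"alcohol": [8.8, 10.1]})
red["color"] = 1    # 1 = red
white["color"] = 0  # 0 = white

stacked = pd.concat([red, white])
print(stacked.index.tolist())   # [0, 1, 0, 1]

fresh = pd.concat([red, white], ignore_index=True)
print(fresh.index.tolist())     # [0, 1, 2, 3]
print(fresh["color"].tolist())  # [1, 1, 0, 0]
```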

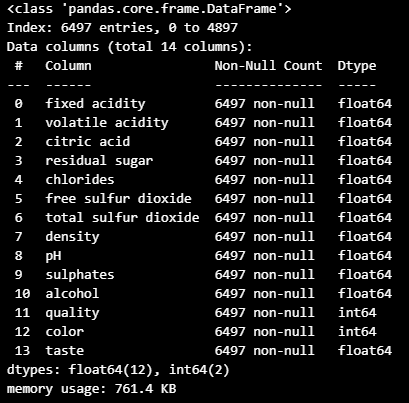

wine.info()

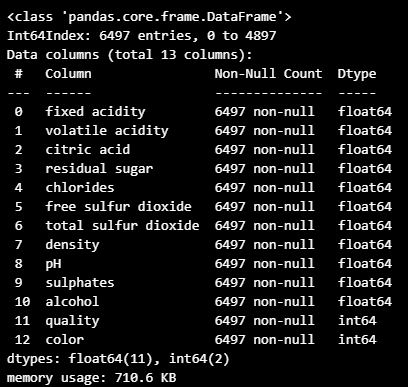

wine['quality'].unique()

array([5, 6, 7, 4, 8, 3, 9])

import plotly.express as px

fig = px.histogram(wine, x = 'quality')

fig.show()

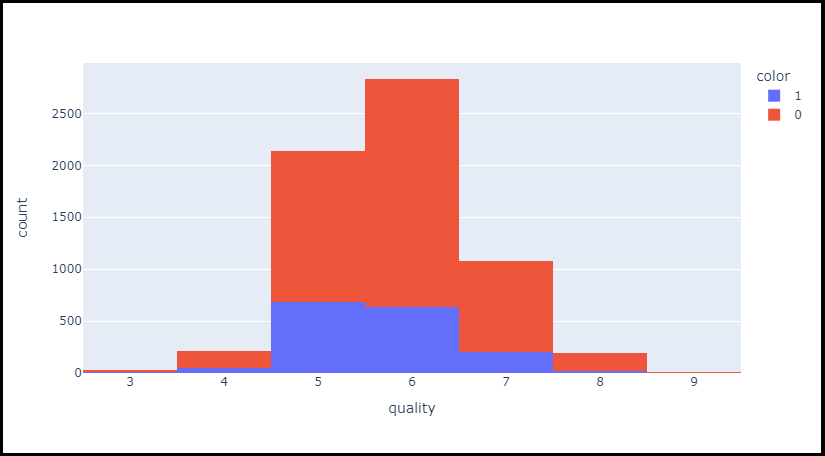

fig = px.histogram(wine, x = 'quality' , color = 'color')

fig.show()

X = wine.drop(['color'], axis = 1)  # features; 'quality' is still included at this stage

y = wine['color']  # target: 1 = red, 0 = white

from sklearn.model_selection import train_test_split

import numpy as np

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 13)

np.unique(y_train, return_counts = True)

(array([0, 1]), array([3913, 1284]))
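The counts show the training split is imbalanced, roughly three white wines for every red one. The notes use a plain random split; if you want train and test to keep exactly the same class ratio, train_test_split also accepts a stratify argument. A sketch on hypothetical labels with a similar 3:1 ratio:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical labels with a 3:1 ratio, similar to white (0) vs red (1)
y = np.array([0] * 750 + [1] * 250)
X = np.arange(len(y)).reshape(-1, 1)  # dummy feature column

# stratify=y keeps the class proportions identical in both splits
_, _, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=13, stratify=y)

# Share of class 1 in each split
print(y_tr.mean(), y_te.mean())  # 0.25 0.25
```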

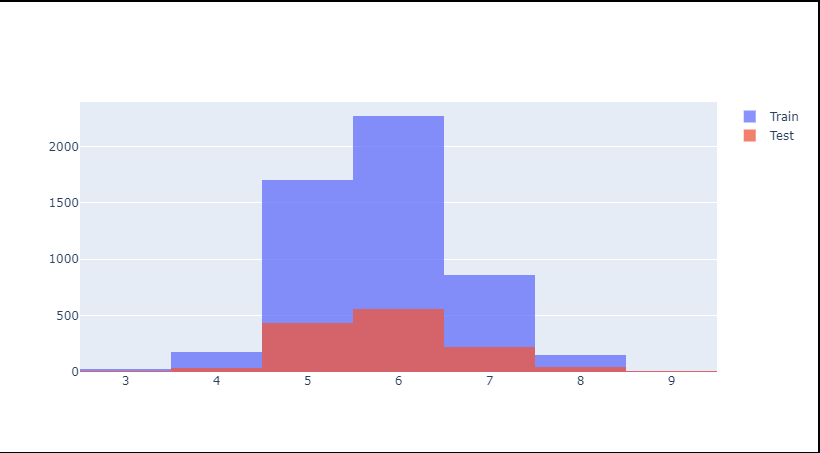

import plotly.graph_objects as go

fig = go.Figure()

fig.add_trace(go.Histogram(x = X_train['quality'] , name = "Train"))

fig.add_trace(go.Histogram(x = X_test['quality'] , name = "Test"))

fig.update_layout(barmode = 'overlay')

fig.update_traces(opacity = 0.75)

fig.show()

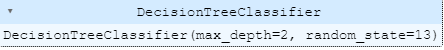

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth = 2 , random_state = 13)

wine_tree.fit(X_train, y_train)

from sklearn.metrics import accuracy_score

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ' , accuracy_score(y_train, y_pred_tr))

print("Test Acc : " , accuracy_score(y_test, y_pred_test))

Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769
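accuracy_score is simply the fraction of predictions that equal the true labels, so train and test accuracy this close together suggest the depth-2 tree is not overfitting. A sketch with made-up label vectors:

```python
import numpy as np
from sklearn.metrics import accuracy_score

# Hypothetical true labels and predictions (6 of 8 match)
y_true = np.array([0, 0, 1, 1, 0, 1, 0, 0])
y_pred = np.array([0, 1, 1, 1, 0, 0, 0, 0])

manual = (y_true == y_pred).mean()  # share of matching positions
print(manual, accuracy_score(y_true, y_pred))  # 0.75 0.75
```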

fig = go.Figure()

fig.add_trace(go.Box(y = X['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y = X['chlorides'], name = 'chlorides'))

fig.add_trace(go.Box(y = X['quality'], name = 'quality'))

fig.show()

from sklearn.preprocessing import MinMaxScaler, StandardScaler

MMS = MinMaxScaler()

SS = StandardScaler()

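SS.fit(X)  # learns each column's mean and std from all of X (rows later used for testing included)

MMS.fit(X)  # learns each column's min and max from all of X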

X_ss = SS.transform(X)

X_mms = MMS.transform(X)

X_ss_pd = pd.DataFrame(X_ss, columns = X.columns)

X_mms_pd = pd.DataFrame(X_mms, columns = X.columns)

fig = go.Figure()

fig.add_trace(go.Box(y = X_mms_pd['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y = X_mms_pd['chlorides'], name = 'chlorides'))

fig.add_trace(go.Box(y = X_mms_pd['quality'], name = 'quality'))

fig.show()

fig = go.Figure()

fig.add_trace(go.Box(y = X_ss_pd['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y = X_ss_pd['chlorides'], name = 'chlorides'))

fig.add_trace(go.Box(y = X_ss_pd['quality'], name = 'quality'))

fig.show()
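Here the scalers are fit on all of X before the train/test split, so the test rows also shape the scaling statistics. A common alternative, not used in the original notes, is to split first and fit the scaler on the training data only; scikit-learn's Pipeline does this for you. A minimal sketch, using make_classification as a hypothetical stand-in for the wine features:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Hypothetical stand-in for the wine features and labels
X, y = make_classification(n_samples=600, n_features=5, random_state=13)

# Split first, so the test rows never influence the scaling statistics
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

# fit() learns the scaler's mean/std from X_train only; score() reuses them on X_test
model = make_pipeline(StandardScaler(), DecisionTreeClassifier(max_depth=2, random_state=13))
model.fit(X_train, y_train)
print("Test Acc :", model.score(X_test, y_test))

scaler = model.named_steps["standardscaler"]
print(np.allclose(scaler.mean_, X_train.mean(axis=0)))  # True
```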

X_train, X_test, y_train, y_test = train_test_split(X_mms_pd, y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth = 2, random_state = 13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ' , accuracy_score(y_train, y_pred_tr))

print("Test Acc : " , accuracy_score(y_test, y_pred_test))

Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769

X_train, X_test, y_train, y_test = train_test_split(X_ss_pd, y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth = 2, random_state = 13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ' , accuracy_score(y_train, y_pred_tr))

print("Test Acc : " , accuracy_score(y_test, y_pred_test))

Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769
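The raw, MinMaxScaler and StandardScaler runs all report exactly the same accuracy. That is expected for a decision tree: each split only compares one feature's values against a threshold, and both scalers are increasing linear transforms of each column, so the ordering of values, and therefore the chosen splits, stay the same. A sketch on synthetic data (a hypothetical stand-in, not the wine data) checks that predictions and feature importances match:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Hypothetical synthetic data standing in for the wine features
X, y = make_classification(n_samples=500, n_features=6, random_state=13)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

def fit_predict(scaler=None):
    """Train a depth-2 tree, optionally on scaled features, and predict the test split."""
    Xtr, Xte = X_train, X_test
    if scaler is not None:
        Xtr = scaler.fit_transform(X_train)  # fit on the training split only
        Xte = scaler.transform(X_test)
    clf = DecisionTreeClassifier(max_depth=2, random_state=13).fit(Xtr, y_train)
    return clf.predict(Xte), clf.feature_importances_

pred_raw, imp_raw = fit_predict()
pred_mms, imp_mms = fit_predict(MinMaxScaler())
pred_ss, imp_ss = fit_predict(StandardScaler())

print(np.array_equal(pred_raw, pred_mms), np.array_equal(pred_raw, pred_ss))
print(np.allclose(imp_raw, imp_mms), np.allclose(imp_raw, imp_ss))
```

Scaling still matters for scale-sensitive models; for trees it mainly helps readability of plots like the boxplots above.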

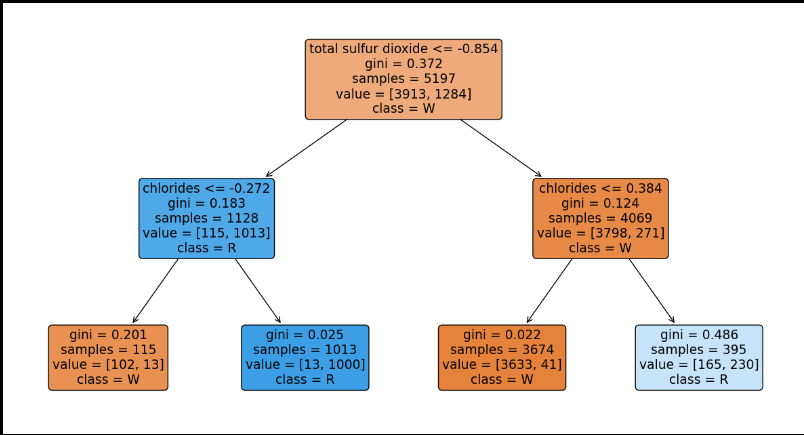

import matplotlib.pyplot as plt

from sklearn import tree

fig = plt.figure(figsize=(15, 8))

_ = tree.plot_tree(wine_tree,

feature_names =list(X_train.columns),

class_names = ["W" , "R"],

rounded = True,

filled=True)

dict(zip(X_train.columns, wine_tree.feature_importances_))

{'fixed acidity': 0.0,

'volatile acidity': 0.0,

'citric acid': 0.0,

'residual sugar': 0.0,

'chlorides': 0.24230360549660776,

'free sulfur dioxide': 0.0,

'total sulfur dioxide': 0.7576963945033922,

'density': 0.0,

'pH': 0.0,

'sulphates': 0.0,

'alcohol': 0.0,

'quality': 0.0}
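feature_importances_ sums to 1, and here only two features carry any weight, so it is easier to read as a sorted pandas Series. With a live model the Series would be pd.Series(wine_tree.feature_importances_, index=X_train.columns); to keep the sketch self-contained, the values below are copied from the output above:

```python
import pandas as pd

# Importances copied from the red/white tree output above
importances = pd.Series({
    'fixed acidity': 0.0, 'volatile acidity': 0.0, 'citric acid': 0.0,
    'residual sugar': 0.0, 'chlorides': 0.24230360549660776,
    'free sulfur dioxide': 0.0, 'total sulfur dioxide': 0.7576963945033922,
    'density': 0.0, 'pH': 0.0, 'sulphates': 0.0, 'alcohol': 0.0, 'quality': 0.0,
})

# Keep only the features the tree actually split on, largest first
used = importances[importances > 0].sort_values(ascending=False)
print(used)
print(round(importances.sum(), 6))  # 1.0
```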

np.unique(wine['quality'])

array([3, 4, 5, 6, 7, 8, 9], dtype=int64)

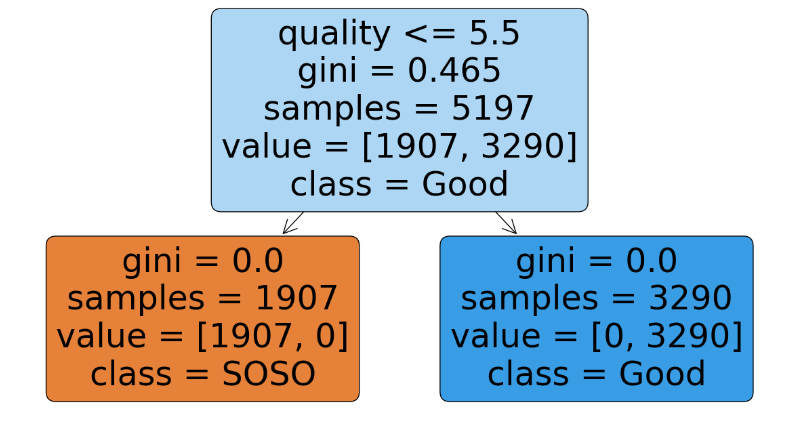

wine['taste'] = [1. if grade > 5 else 0. for grade in wine['quality']]  # 1.0 = tasty (grade > 5), 0.0 = not tasty

wine.info()
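The list comprehension works, but the same binary label can be produced with a vectorized comparison, which is the more idiomatic pandas form. A sketch on hypothetical grades covering the 3 to 9 range seen in the data:

```python
import pandas as pd

# Hypothetical quality grades covering the 3..9 range seen in the data
quality = pd.Series([3, 4, 5, 6, 7, 8, 9])

taste_list = [1. if grade > 5 else 0. for grade in quality]  # approach used in the notes
taste_vec = (quality > 5).astype(float)                      # vectorized equivalent

print(taste_vec.tolist())                # [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
print(taste_vec.tolist() == taste_list)  # True
```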

X = wine.drop(['taste'], axis = 1)  # 'quality' is still among the features here

y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth = 2, random_state = 13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ' , accuracy_score(y_train, y_pred_tr))

print("Test Acc : " , accuracy_score(y_test, y_pred_test))

Train Acc : 1.0

Test Acc : 1.0

fig = plt.figure(figsize=(15, 8))

_ = tree.plot_tree(wine_tree,

feature_names =list(X_train.columns),

class_names = ["SOSO" , "Good"],

rounded = True,

filled=True)

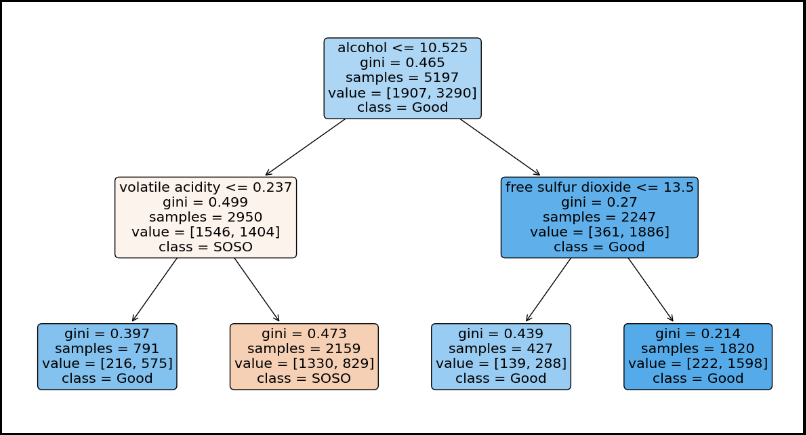

X = wine.drop(['taste', 'quality'], axis = 1)  # drop 'quality' too, since 'taste' is derived from it

y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth = 2, random_state = 13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ' , accuracy_score(y_train, y_pred_tr))

print("Test Acc : " , accuracy_score(y_test, y_pred_test))

Train Acc : 0.7294593034442948

Test Acc : 0.7161538461538461

fig = plt.figure(figsize=(15, 8))

_ = tree.plot_tree(wine_tree,

feature_names =list(X_train.columns),

class_names = ["SOSO" , "Good"],

rounded = True,

filled=True)

dict(zip(X_train.columns, wine_tree.feature_importances_))

{'fixed acidity': 0.0,

'volatile acidity': 0.2714166536849971,

'citric acid': 0.0,

'residual sugar': 0.0,

'chlorides': 0.0,

'free sulfur dioxide': 0.057120460609986594,

'total sulfur dioxide': 0.0,

'density': 0.0,

'pH': 0.0,

'sulphates': 0.0,

'alcohol': 0.6714628857050162,

'color': 0.0}
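Besides plot_tree, scikit-learn's sklearn.tree.export_text prints the same split rules as plain text, which can be easier to read than the figure. A sketch on synthetic data in which a hypothetical 'alcohol' column alone drives the label (the numbers and the 10.8 cutoff are made up, not taken from the wine data):

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

rng = np.random.default_rng(13)
# Hypothetical features: an 'alcohol'-like column and a pure-noise column
alcohol = rng.normal(10.5, 1.2, size=300)
noise = rng.normal(0.0, 1.0, size=300)
X = np.column_stack([alcohol, noise])
# Made-up rule: label 1 when alcohol is above 10.8
y = (alcohol > 10.8).astype(int)

clf = DecisionTreeClassifier(max_depth=2, random_state=13).fit(X, y)
rules = export_text(clf, feature_names=["alcohol", "noise"])
print(rules)  # one "alcohol <= ..." split with a pure leaf on each side
```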

This post was written using excerpts from the lecture materials of Zerobase Data Job School (제로베이스 데이터 취업 스쿨).