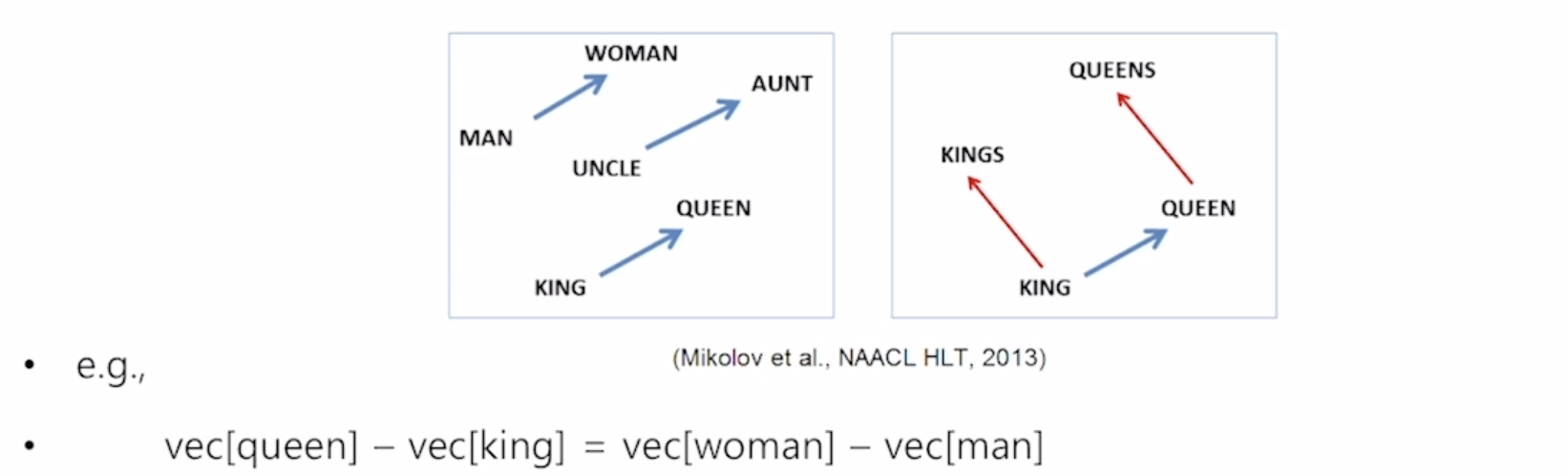

Word2Vec

- An algorithm that learns a vector representation of a word from its context words

- Assumption : words that appear in similar contexts have similar meanings

- Word vectors, and in particular the relative positions of the vector points in space, represent the relationships between words

- The same relationship between two word pairs is represented by the same difference vector
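The "same relationship, same vector" idea can be illustrated with a toy example. The 2-D embeddings below are hand-picked, hypothetical values (real Word2Vec vectors are learned and much higher-dimensional), and `cosine` and `nearest` are helper names introduced only for this sketch.

```python
import numpy as np

# Hypothetical hand-made embeddings: axis 0 ~ "royalty", axis 1 ~ "gender".
emb = {
    "king":  np.array([0.9, 0.8]),
    "queen": np.array([0.9, 0.2]),
    "man":   np.array([0.1, 0.8]),
    "woman": np.array([0.1, 0.2]),
    "apple": np.array([0.5, 0.5]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def nearest(vec, exclude):
    """Word whose embedding is most similar to `vec`, skipping `exclude`."""
    candidates = {w: v for w, v in emb.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine(vec, candidates[w]))

# The male -> female relationship is the same difference vector in both pairs.
offset_royal = emb["queen"] - emb["king"]
offset_common = emb["woman"] - emb["man"]

# Analogy: king - man + woman should land closest to queen.
target = emb["king"] - emb["man"] + emb["woman"]
analogy = nearest(target, exclude={"king", "man", "woman"})
print(offset_royal, offset_common, analogy)
```

With learned embeddings the two offsets are only approximately equal, so the analogy is usually answered by a nearest-neighbour search like the one above.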

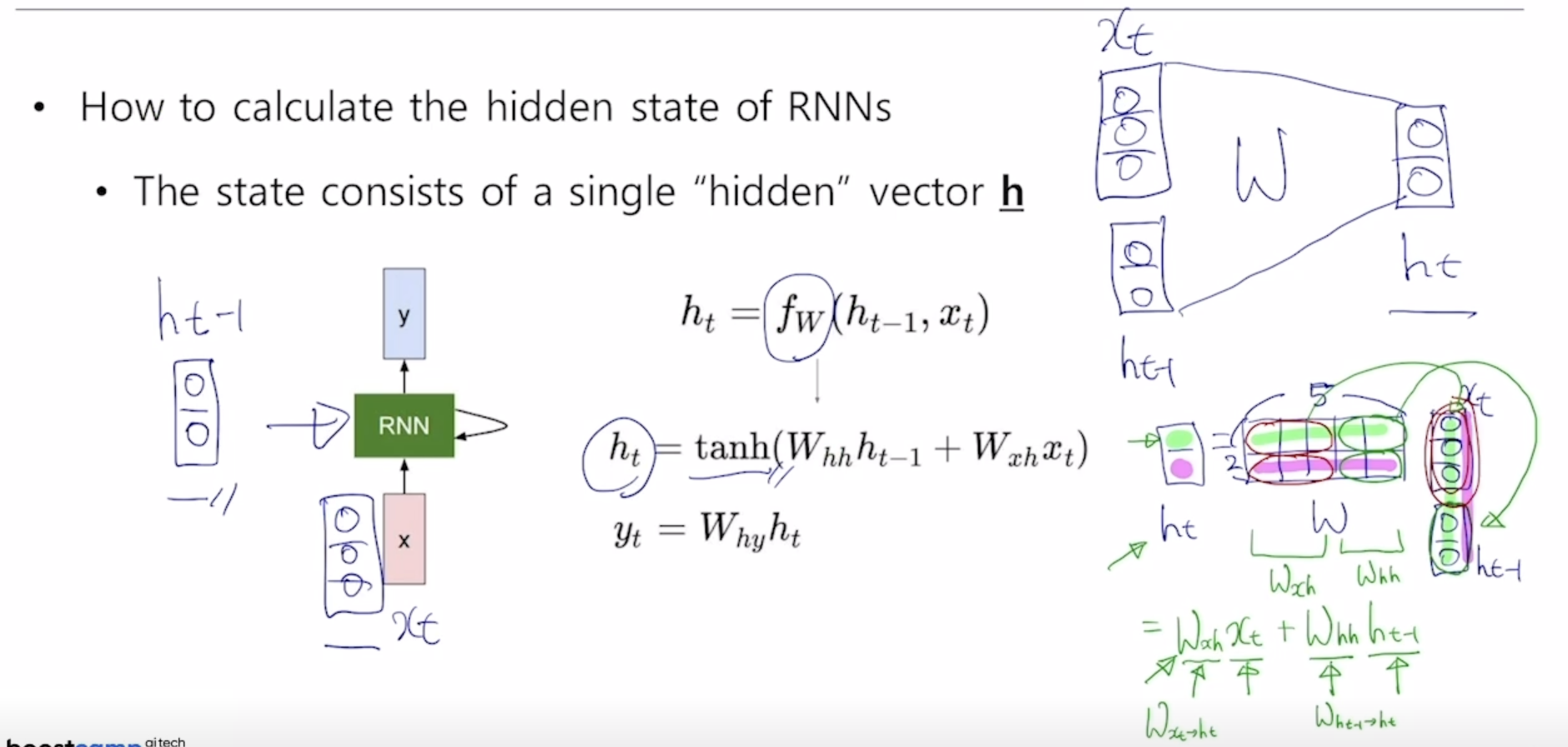

Recurrent Neural Network (RNN)

- How to calculate the hidden state of an RNN: $h_t = f_W(h_{t-1}, x_t)$

- $h_{t-1}$ : old hidden-state vector

- $x_t$ : input vector at some time step

- $h_t$ : new hidden-state vector

- $f_W$ : RNN function with parameters $W$

- $y_t$ : output vector at time step $t$

- The same function and the same set of parameters are used at every time step

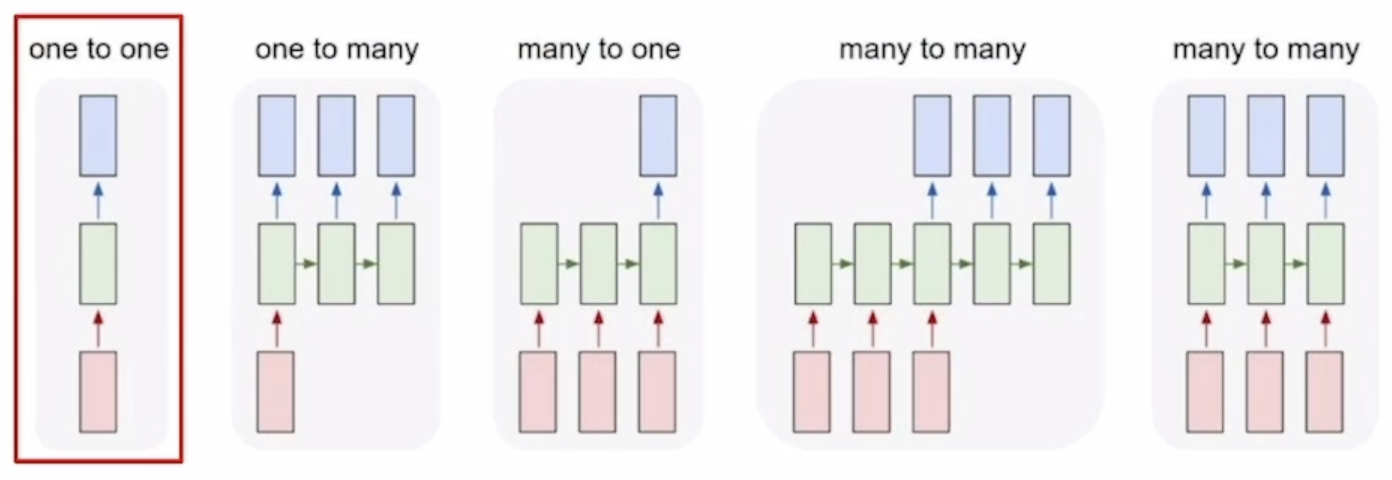
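A minimal NumPy sketch of the recurrence, assuming the common vanilla form $h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t + b_h)$ and $y_t = W_{hy} h_t + b_y$. The notes only say "RNN function with parameters W", so this concrete form, the sizes, and the name `rnn_step` are assumptions made for illustration.

```python
import numpy as np

def rnn_step(h_prev, x_t, params):
    """One vanilla RNN step (assumed form): returns (h_t, y_t)."""
    W_hh, W_xh, W_hy, b_h, b_y = params
    h_t = np.tanh(W_hh @ h_prev + W_xh @ x_t + b_h)  # new hidden state
    y_t = W_hy @ h_t + b_y                           # output at this step
    return h_t, y_t

rng = np.random.default_rng(0)
hidden, inp, out = 4, 3, 2  # hypothetical sizes
params = (
    rng.normal(scale=0.5, size=(hidden, hidden)),  # W_hh
    rng.normal(scale=0.5, size=(hidden, inp)),     # W_xh
    rng.normal(scale=0.5, size=(out, hidden)),     # W_hy
    np.zeros(hidden),                              # b_h
    np.zeros(out),                                 # b_y
)

xs = rng.normal(size=(5, inp))  # a sequence of 5 input vectors
h = np.zeros(hidden)            # initial hidden state
hs, ys = [], []
for x_t in xs:
    # The same function and the same parameters are reused at every step.
    h, y = rnn_step(h, x_t, params)
    hs.append(h)
    ys.append(y)
```

Keeping only `ys[-1]` gives one output for the whole sequence, while keeping every element of `ys` gives one output per time step.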

- Types of RNNs

- one to one : Standard Neural Networks

- one to many : Image Captioning

- many to one : Sentiment Classification

- many to many : Machine Translation, frame-level video classification

- Vanishing / Exploding Gradient Problem in RNN

- Multiplying by the same matrix at each time step during backpropagation causes the gradient to vanish or explode

- The derivative of tanh is a bell-shaped curve that equals 1 at x = 0, so whenever x is nonzero a value smaller than 1 is multiplied in again and again
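Both effects can be checked numerically. The sketch below is a simplified setting, not a full backpropagation through time: it uses a scaled orthogonal matrix as a stand-in for the recurrent weights, so every multiplication changes the gradient norm by exactly `scale`. The function names and sizes are hypothetical.

```python
import numpy as np

def backprop_norms(scale, steps=50, hidden=8, seed=0):
    """Gradient norm after each of `steps` multiplications by the same W^T."""
    rng = np.random.default_rng(seed)
    # Orthogonal Q times `scale`: every singular value of W equals `scale`.
    Q, _ = np.linalg.qr(rng.normal(size=(hidden, hidden)))
    W = scale * Q
    grad = np.ones(hidden) / np.sqrt(hidden)  # unit-norm starting gradient
    norms = []
    for _ in range(steps):
        grad = W.T @ grad  # the same matrix at every time step
        norms.append(float(np.linalg.norm(grad)))
    return norms

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which is 1 at x = 0 and smaller elsewhere."""
    return 1.0 - np.tanh(x) ** 2

vanishing = backprop_norms(scale=0.9)  # norm shrinks like 0.9 ** t
exploding = backprop_norms(scale=1.1)  # norm grows like 1.1 ** t
print(vanishing[-1], exploding[-1], tanh_grad(0.0), tanh_grad(2.0))
```

In a real tanh RNN each step also multiplies by `tanh_grad` of the pre-activation, which is at most 1, so it pushes the product further toward vanishing.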

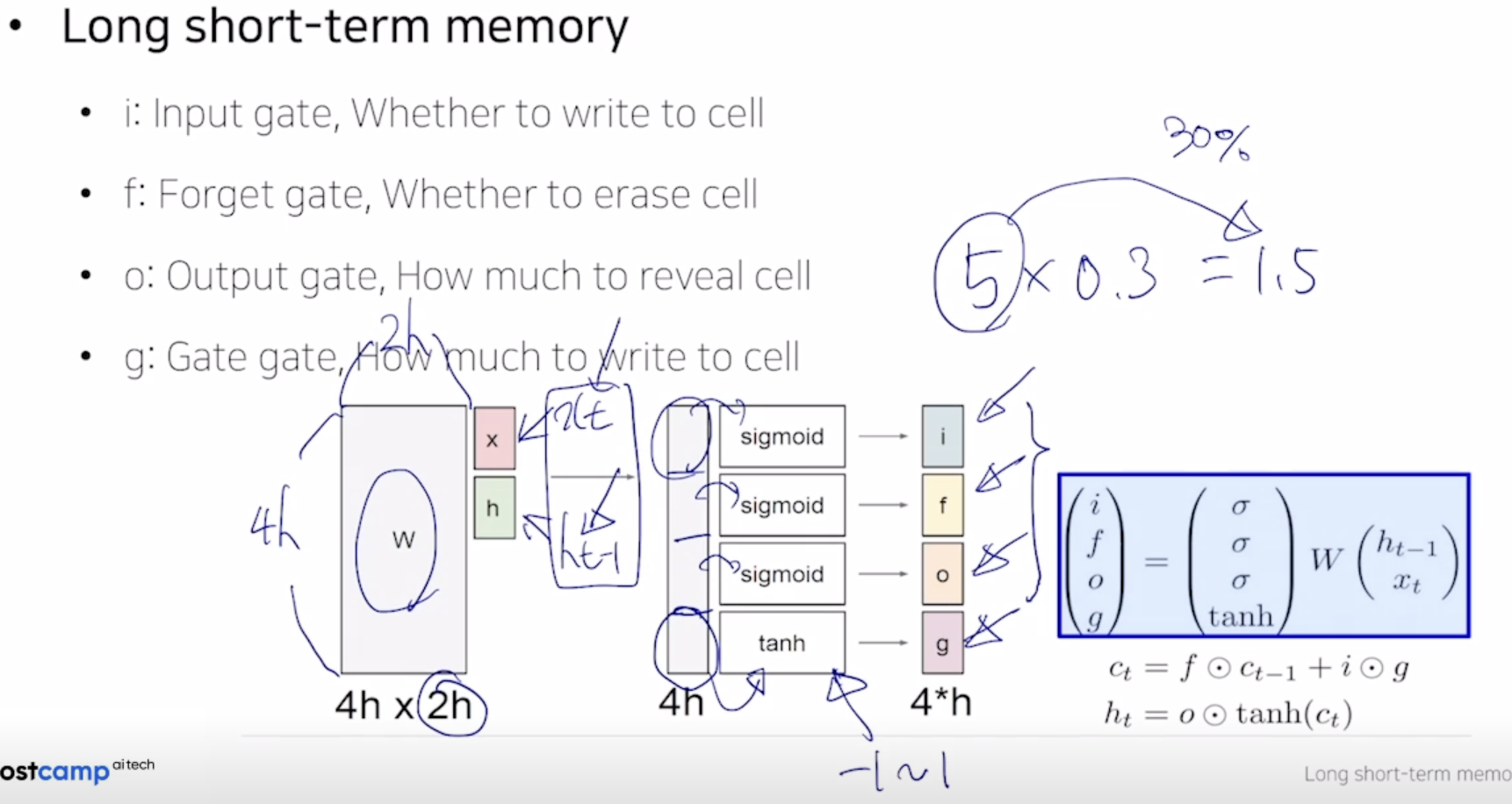

Long Short-Term Memory (LSTM)

- Core idea : pass the cell-state information straight through, without any transformation

- Solves the long-term dependency problem
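A minimal sketch of one LSTM step using the standard gate equations, to show what "straight through" means in practice: the cell state is only scaled element-wise by the forget gate and has a gated candidate added, with no weight-matrix multiplication on it. The packed weight layout and the names `lstm_step` and `sigmoid` are assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(h_prev, c_prev, x_t, W, b):
    """One LSTM step. W: (4*H, H+D), b: (4*H,), gates packed as [i, f, o, g]."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    i = sigmoid(z[0:H])            # input gate
    f = sigmoid(z[H:2 * H])        # forget gate
    o = sigmoid(z[2 * H:3 * H])    # output gate
    g = np.tanh(z[3 * H:4 * H])    # candidate cell values
    c_t = f * c_prev + i * g       # cell state: element-wise operations only
    h_t = o * np.tanh(c_t)         # hidden state exposed to the next layer
    return h_t, c_t

# Hypothetical setting: forget gate fully open, input gate fully closed.
H, D = 3, 2
W = np.zeros((4 * H, H + D))
b = np.zeros(4 * H)
b[0:H] = -20.0       # input gate  ~ 0
b[H:2 * H] = 20.0    # forget gate ~ 1

c = np.array([0.5, -1.0, 2.0])
h = np.zeros(H)
c_start = c.copy()
for _ in range(100):
    h, c = lstm_step(h, c, np.ones(D), W, b)
```

With the forget gate near 1 and the input gate near 0, the cell state after 100 steps is still almost identical to `c_start`, which is why information and gradients can survive over long spans.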

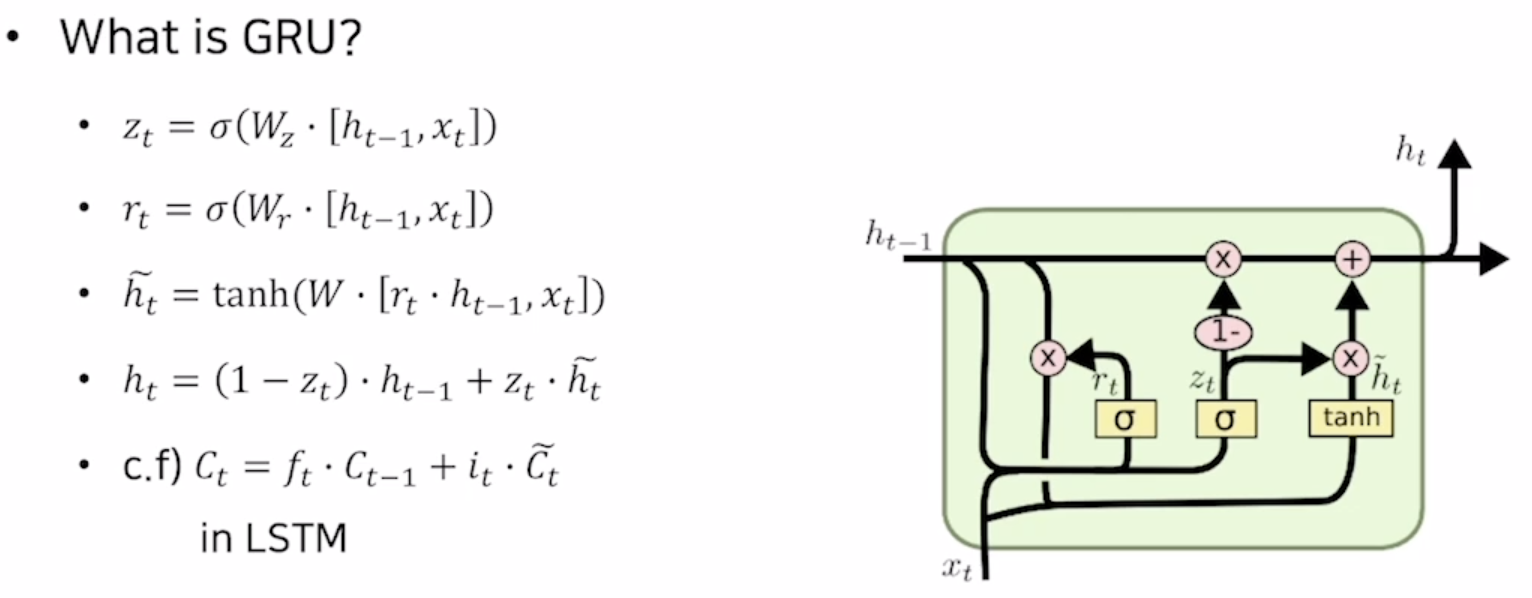

Gated Recurrent Unit (GRU)

Practice

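    from tqdm import tqdm

    # `data` (a list of token-id lists) and `pad_id` are assumed to be defined earlier.
    # padding: extend every sequence to the length of the longest one
    max_len = len(max(data, key=len))
    print(f"Maximum sequence length: {max_len}")

    valid_lens = []  # original (unpadded) length of each sequence
    for i, seq in enumerate(tqdm(data)):
        valid_lens.append(len(seq))
        if len(seq) < max_len:
            data[i] = seq + [pad_id] * (max_len - len(seq))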
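The notes do not show how `valid_lens` is used afterwards. One common use, sketched here as an assumption, is to build a padding mask so that later computations can ignore the padded positions. The toy batch and the helper `build_padding_mask` are hypothetical.

```python
def build_padding_mask(valid_lens, max_len):
    """One row per sequence: True for real tokens, False for padding."""
    return [[pos < n for pos in range(max_len)] for n in valid_lens]

# Hypothetical toy batch, padded the same way as in the padding snippet.
pad_id = 0
data = [[5, 7, 2], [4], [9, 3]]
max_len = len(max(data, key=len))
valid_lens = [len(seq) for seq in data]
padded = [seq + [pad_id] * (max_len - len(seq)) for seq in data]

mask = build_padding_mask(valid_lens, max_len)
for row, m in zip(padded, mask):
    print(row, m)
```

Storing the lengths separately, rather than testing `token == pad_id`, keeps the mask correct even if `pad_id` also occurs as a real token.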

Source : Naver BoostCamp