1-1. 데이터 읽기

# 모듈

import numpy as np

import pandas as pd

import os

import glob

import matplotlib.pyplot as plt

import seaborn as sns

import tensorflow as tf

from tensorflow.keras import Sequential, models

from tensorflow.keras.layers import Flatten, Dense, Conv2D, MaxPool2D

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report, confusion_matrix

from google.colab import drive

drive.mount('/content/drive')

import zipfile

# 파일 압축 해제

contetn_zip = zipfile.ZipFile('/content/drive/MyDrive/Colab Notebooks/data/archive.zip')

contetn_zip.extractall('./data')

# 객체 종료

contetn_zip.close()1-2. 파일 정리 후 데이터 프레임

path = './data/Face Mask Dataset/'

dataset = {'image_path': [], 'mask_status':[], 'where':[]}

for where in os.listdir(path):

for status in os.listdir(path + '/' + where):

for image in glob.glob(path + where + '/' + status + '/' + '*.png'):

dataset['image_path'].append(image)

dataset['mask_status'].append(status)

dataset['where'].append(where)

dataset = pd.DataFrame(dataset)2. 데이터 나누기

train_df = dataset[dataset['where'] == 'Train']

test_df = dataset[dataset['where'] == 'Test']

valid_df = dataset[dataset['where'] == 'Validation']

# 인덱스 정리

train_df = train_df.reset_index().drop('index', axis=1)3. 데이터 전처리

data = []

image_size = 150 # 150 x 150

for i in range(len(train_df)):

# converting the image into grayscale

img_array = cv2.imread(train_df['image_path'][i], cv2.IMREAD_GRAYSCALE)

# resizing the array

new_image_array = cv2.resize(img_array, (image_size, image_size))

# encoding the image with the label

# mask o 1 / mask x 0

if train_df['mask_status'][i] == 'WithMask':

data.append([new_image_array, 1])

else:

data.append([new_image_array, 0])

# 데이터 쏠림 방지 -> 무작위

np.random.shuffle(data)4. X, y 데이터로 저장

X= [] # 이미지

y= [] # 라벨(마스크 여부)

for image in data:

X.append(image[0])

y.append(image[1])

X = np.array(X)

y = np.array(y)

X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=13)5. 모델

- LeNet

from tensorflow.keras import layers, models

model = models.Sequential([

layers.Conv2D(32, kernel_size=(5, 5), strides=(1, 1), padding='same', activation='relu', input_shape=(150, 150, 1)),

layers.MaxPooling2D(pool_size=(2,2), strides=(2,2)),

layers.Conv2D(64, (2,2), padding='same', activation='relu'),

layers.MaxPooling2D(pool_size=(2,2)),

layers.Dropout(0.25),

layers.Flatten(),

layers.Dense(1000, activation='relu'),

layers.Dense(1, activation='sigmoid')

])6. compile & fit

from sklearn import metrics

model.compile(

optimizer='adam', loss=tf.keras.losses.BinaryCrossentropy(), metrics=['accuracy']

)

X_train = X_train.reshape(len(X_train), X_train.shape[1], X_train.shape[2], 1)

X_val = X_val.reshape(len(X_val), X_val.shape[1], X_val.shape[2], 1)

history = model.fit(X_train, y_train, epochs=4, batch_size=32)

model.evaluate(X_val, y_val)

'''

63/63 [==============================] - 1s 9ms/step - loss: 0.0880 - accuracy: 0.9740

[0.08797423541545868, 0.9739999771118164]

'''7. 결과

prediction = (model.predict(X_val) > 0.5).astype('int32')

print(classification_report(y_val, prediction))

print(confusion_matrix(y_val, prediction))

'''

63/63 [==============================] - 0s 6ms/step

precision recall f1-score support

0 0.99 0.96 0.97 998

1 0.96 0.99 0.97 1002

accuracy 0.97 2000

macro avg 0.97 0.97 0.97 2000

weighted avg 0.97 0.97 0.97 2000

[[955 43]

[ 9 993]]

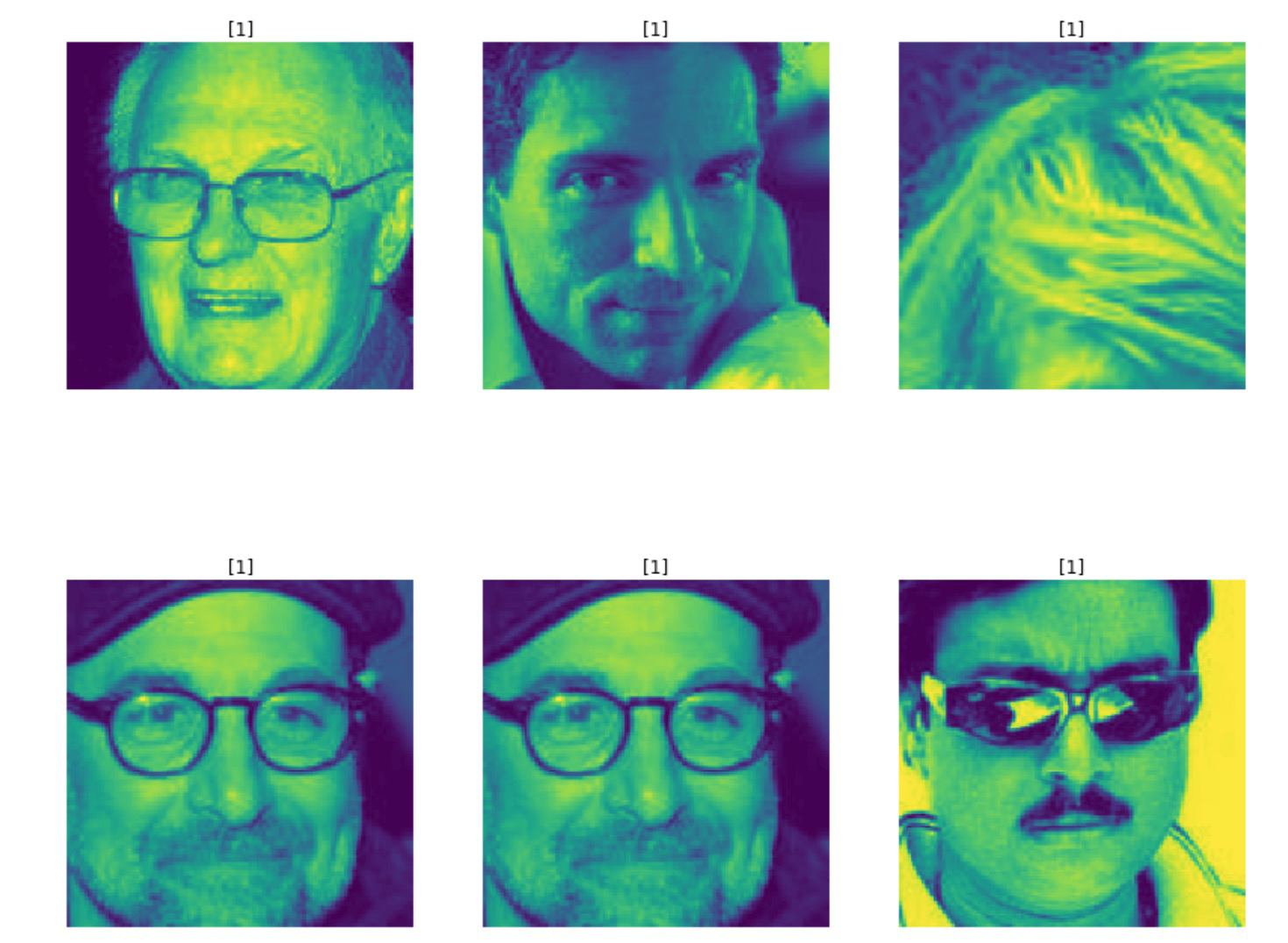

'''8. wrong result

wrong_result = []

for n in range(0, len(y_val)):

if prediction[n] != y_val[n]:

wrong_result.append(n)

import random

samples = random.sample(wrong_result, 6)

# 이미지 출력

plt.figure(figsize=(14, 12))

for idx, n in enumerate(samples):

plt.subplot(3, 2, idx+1)

plt.imshow(X_val[n].reshape(150, 150), interpolation='nearest')

plt.title(prediction[n])

plt.axis('off')

plt.show()

Reference

1) 제로베이스 데이터스쿨 강의자료