[vSLAM with an RGB-D (depth) camera]

😊 RGB-D vSLAM example (official MATLAB example)

Goal: implement 3D SLAM with an RGB-D (depth) camera in MATLAB by following the example.

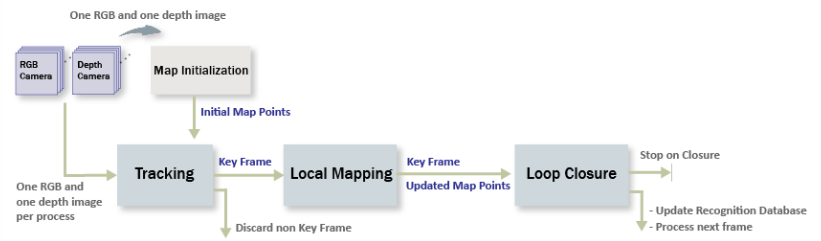

1. Methodology of 3D SLAM with an RGB-D camera

The SLAM pipeline has the same structure as the stereo-camera version.

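1. Map initialization: start building the 3-D space from the RGB image and the depth image.

2. Tracking: for each new frame, estimate the camera pose by matching its feature points against the last key frame (and the local map).

3. Local mapping: every time a new key frame is added, compute new map points and add them to the map.

4. Loop closure: when the camera comes back to a place it has already visited, recognize it as a revisited place and correct the accumulated error (drift).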
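Step 1 is where an RGB-D camera differs most from a monocular one. Every depth pixel can be lifted straight to a 3-D point with the pinhole model, so the map can start without triangulation. Below is a minimal Python sketch of that back-projection. It is an illustration only, not the source of the MATLAB helper `helperReconstructFromRGBD`. It assumes the TUM convention that a raw depth value divided by 5000 (the example's `depthFactor`) gives meters, and it reuses the fr3 intrinsics from the example. A raw value of 0 is treated as "no measurement".

```python
from typing import Optional, Tuple

# fr3 intrinsics, same values as focalLength / principalPoint in the MATLAB code
FX, FY = 535.4, 539.2
CX, CY = 320.1, 247.6
DEPTH_FACTOR = 5000.0  # assumed TUM convention: raw 16-bit depth / 5000 = meters


def back_project(u: float, v: float, raw_depth: int) -> Optional[Tuple[float, float, float]]:
    """Lift pixel (u, v) with a raw depth reading to a 3-D point in the camera frame.

    Returns None when the depth reading is 0 (no measurement at that pixel).
    """
    if raw_depth == 0:
        return None
    z = raw_depth / DEPTH_FACTOR
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return (x, y, z)


# A pixel at the principal point, 1 m away, lies on the optical axis
print(back_project(320.1, 247.6, 5000))  # (0.0, 0.0, 1.0)
```

In this sketch y grows downward, just as image rows do. That matches the `VerticalAxisDir="down"` setting used for the final point cloud plot.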

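⚠️ Sometimes the helper functions were not registered even after opening the example. If that happens, create an empty function file with the helper's name and paste the helper's code into it.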

1. Download the dataset and set up the directories

```matlab
baseDownloadURL = "https://cvg.cit.tum.de/rgbd/dataset/freiburg3/rgbd_dataset_freiburg3_long_office_household.tgz";
dataFolder = fullfile(tempdir, 'tum_rgbd_dataset', filesep);
options = weboptions('Timeout', Inf);
tgzFileName = [dataFolder, 'fr3_office.tgz'];
folderExists = exist(dataFolder, 'dir');

% Create a folder in a temporary directory to save the downloaded file
if ~folderExists
    mkdir(dataFolder);
    disp('Downloading fr3_office.tgz (1.38 GB). This download can take a few minutes.')
    websave(tgzFileName, baseDownloadURL, options);

    % Extract contents of the downloaded file
    disp('Extracting fr3_office.tgz (1.38 GB) ...')
    untar(tgzFileName, dataFolder);
end

imageFolder = [dataFolder, 'rgbd_dataset_freiburg3_long_office_household/'];
imgFolderColor = [imageFolder, 'rgb/'];
imgFolderDepth = [imageFolder, 'depth/'];
imdsColor = imageDatastore(imgFolderColor);
imdsDepth = imageDatastore(imgFolderDepth);
```

2. Synchronize the RGB and depth data of each image, and display a test image

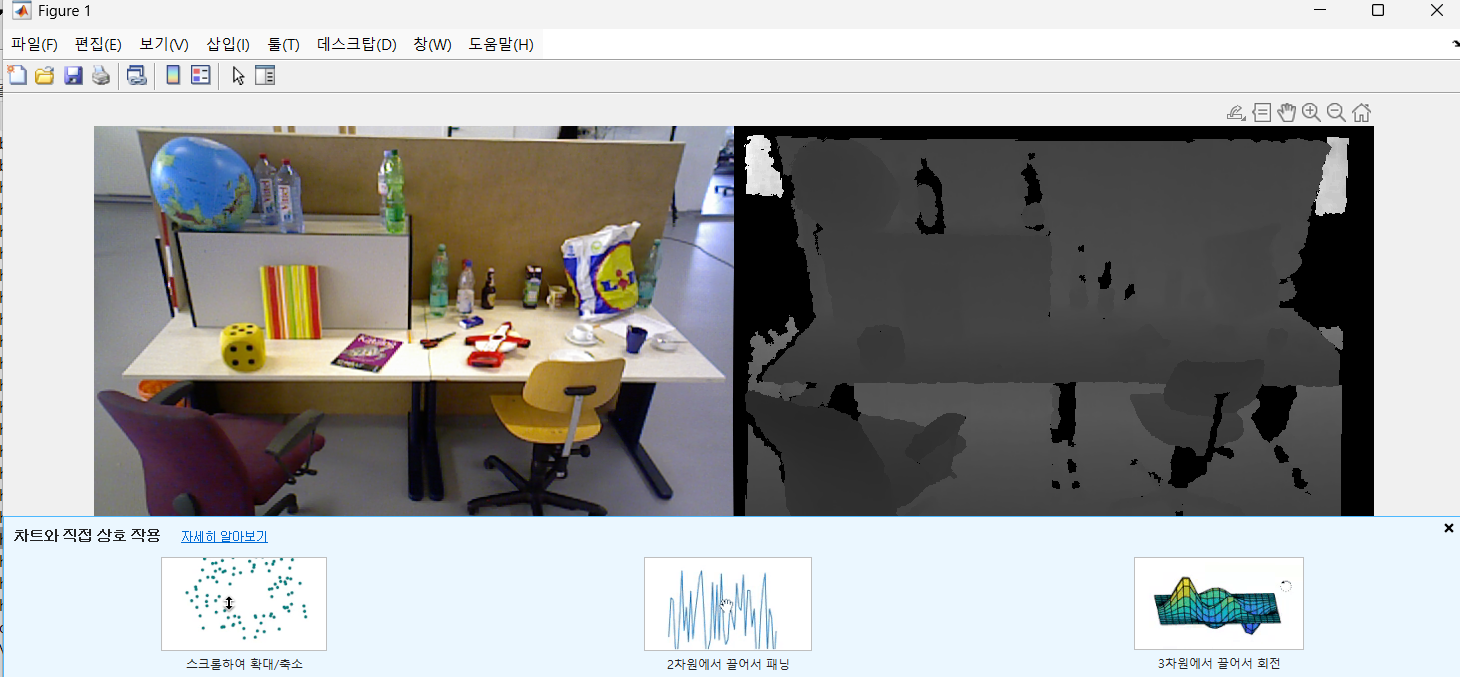

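🟢 The color and depth images are not stored as synchronized pairs. They are saved separately, each with its own timestamps, so each color image has to be paired with a depth image based on those timestamps.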
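The alignment can be pictured as nearest-neighbor matching on timestamps. The sketch below follows the spirit of the TUM benchmark's association tool. It is an assumption about what `helperAlignTimestamp` does, not its source. The 0.02 s tolerance and the greedy one-to-one matching (closest pairs accepted first) are choices made here for illustration.

```python
from typing import List, Tuple


def align_timestamps(t_color: List[float], t_depth: List[float],
                     max_diff: float = 0.02) -> List[Tuple[int, int]]:
    """Pair color and depth frames whose timestamps differ by at most max_diff seconds.

    Returns 0-based (color_index, depth_index) pairs sorted by color index.
    Every frame is used at most once, and the closest candidate pairs win.
    """
    candidates = sorted(
        (abs(tc - td), i, j)
        for i, tc in enumerate(t_color)
        for j, td in enumerate(t_depth)
        if abs(tc - td) <= max_diff
    )
    used_color, used_depth, pairs = set(), set(), []
    for _, i, j in candidates:
        if i not in used_color and j not in used_depth:
            used_color.add(i)
            used_depth.add(j)
            pairs.append((i, j))
    return sorted(pairs)


print(align_timestamps([0.000, 0.033, 0.066, 0.100], [0.005, 0.040, 0.150]))  # [(0, 0), (1, 1)]
```

Comparing every pair is O(N·M), which is fine for a sketch. For long sequences, a sorted sweep over both timestamp lists would be the natural replacement.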

```matlab
% Load time stamp data of color images
timeColor = helperImportTimestampFile([imageFolder, 'rgb.txt']);

% Load time stamp data of depth images
timeDepth = helperImportTimestampFile([imageFolder, 'depth.txt']);

% Align the time stamp
indexPairs = helperAlignTimestamp(timeColor, timeDepth);

% Select the synchronized image data
imdsColor = subset(imdsColor, indexPairs(:, 1));
imdsDepth = subset(imdsDepth, indexPairs(:, 2));

% Inspect the first RGB-D image
currFrameIdx = 1;
currIcolor = readimage(imdsColor, currFrameIdx);
currIdepth = readimage(imdsDepth, currFrameIdx);
imshowpair(currIcolor, currIdepth, "montage");
```

3. Map initialization

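🟢 This step sets up the initial state of the map for SLAM. The first frame's pose becomes the world origin, and the camera intrinsics and feature-extraction parameters (scaleFactor, numLevels, numPoints, etc.) are set here.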
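`scaleFactor` and `numLevels` together define the image pyramid that the ORB detector searches. Level k is the image downscaled by scaleFactor^k, which lets a feature be matched when it is seen from nearer or farther away. The short Python sketch below only computes the per-level sizes for the 640×480 TUM images. It is arithmetic on the parameters, not a call into the MATLAB detector. `numPoints` is a separate cap on how many features are kept per image, and the helper's exact selection strategy is not shown here.

```python
from typing import List, Tuple


def pyramid_sizes(width: int, height: int, scale_factor: float,
                  num_levels: int) -> List[Tuple[int, int]]:
    """(width, height) of each pyramid level; level 0 is the original image."""
    sizes = []
    for level in range(num_levels):
        s = scale_factor ** level
        sizes.append((round(width / s), round(height / s)))
    return sizes


for level, (w, h) in enumerate(pyramid_sizes(640, 480, 1.2, 8)):
    print(f"level {level}: {w} x {h}")
```

With 1.2 and 8 levels, the coarsest level is about 3.6 times smaller than the original (roughly 179×134).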

```matlab
% Set random seed for reproducibility
rng(0);

% Create a cameraIntrinsics object to store the camera intrinsic parameters.
% The intrinsics for the dataset can be found at the following page:
% https://vision.in.tum.de/data/datasets/rgbd-dataset/file_formats
focalLength = [535.4, 539.2];    % in units of pixels
principalPoint = [320.1, 247.6]; % in units of pixels
imageSize = size(currIcolor, [1, 2]); % in pixels [mrows, ncols]
depthFactor = 5e3;               % raw depth value / depthFactor = depth in meters
intrinsics = cameraIntrinsics(focalLength, principalPoint, imageSize);

% Detect and extract ORB features from the color image
scaleFactor = 1.2;
numLevels = 8;
numPoints = 1000;
[currFeatures, currPoints] = helperDetectAndExtractFeatures(currIcolor, scaleFactor, numLevels, numPoints);

% The first frame defines the world coordinate frame
initialPose = rigidtform3d();
[xyzPoints, validIndex] = helperReconstructFromRGBD(currPoints, currIdepth, intrinsics, initialPose, depthFactor);
```

4. Build the loop closure database

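🟢 For SLAM to stay accurate, a place the camera has returned to must not be treated as a new place. Revisited places are recognized with a bag-of-features (bag of visual words) technique. As an analogy, it is like remembering and noting down a place's distinctive landmarks, such as a tree or a restaurant.

This step builds the database that stores this bag-of-features information for each key frame. The visual vocabulary itself was created offline and is loaded from bagOfFeaturesDataSLAM.mat.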
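A toy vocabulary shows the idea behind `bagOfFeatures` and `invertedImageIndex`:

1. Each descriptor is snapped to its nearest "visual word".
2. An image becomes a histogram of words.
3. An inverted index maps each word to the images that contain it, so a query only scores images that share at least one word.

This is a simplified Python sketch with made-up 2-D "descriptors" and a plain cosine score. `ToyInvertedIndex` is a hypothetical name. MATLAB's actual implementation (ORB descriptors, its own weighting and scoring) is not reproduced.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Set, Tuple

Descriptor = Sequence[float]


def nearest_word(desc: Descriptor, vocabulary: List[Descriptor]) -> int:
    """Index of the visual word (vocabulary entry) closest to the descriptor."""
    return min(range(len(vocabulary)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(desc, vocabulary[k])))


class ToyInvertedIndex:
    """Hypothetical toy stand-in for a place recognition database."""

    def __init__(self, vocabulary: List[Descriptor]):
        self.vocabulary = vocabulary
        self.histograms: Dict[int, Counter] = {}               # image id -> word histogram
        self.postings: Dict[int, Set[int]] = defaultdict(set)  # word -> image ids

    def _histogram(self, descriptors: List[Descriptor]) -> Counter:
        return Counter(nearest_word(d, self.vocabulary) for d in descriptors)

    def add_image(self, image_id: int, descriptors: List[Descriptor]) -> None:
        hist = self._histogram(descriptors)
        self.histograms[image_id] = hist
        for word in hist:
            self.postings[word].add(image_id)

    def query(self, descriptors: List[Descriptor]) -> List[Tuple[int, float]]:
        """Score only images sharing at least one word with the query (cosine similarity)."""
        q = self._histogram(descriptors)
        q_norm = math.sqrt(sum(c * c for c in q.values()))
        candidates = set().union(*(self.postings.get(w, set()) for w in q))
        scores = []
        for image_id in candidates:
            h = self.histograms[image_id]
            dot = sum(q[w] * h[w] for w in q)
            h_norm = math.sqrt(sum(c * c for c in h.values()))
            scores.append((image_id, dot / (q_norm * h_norm)))
        return sorted(scores, key=lambda s: s[1], reverse=True)


vocabulary = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
db = ToyInvertedIndex(vocabulary)
db.add_image(1, [(0.5, 0.2), (9.8, 0.1)])    # words 0 and 1
db.add_image(2, [(0.1, 9.9), (0.2, 10.1)])   # word 2 twice
print(db.query([(0.3, 0.1), (10.2, -0.3)]))  # only image 1 shares words, so only it is scored
```

The inverted index is what keeps the lookup cheap: image 2 is never scored because it shares no word with the query.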

```matlab
% Load the bag of features data created offline
bofData = load("bagOfFeaturesDataSLAM.mat");

% Initialize the place recognition database
loopDatabase = invertedImageIndex(bofData.bof, SaveFeatureLocations=false);

% Add features of the first key frame to the database
currKeyFrameId = 1;
addImageFeatures(loopDatabase, currFeatures, currKeyFrameId);
```

5. Set up storage for 3-D map points and key frame RGB images, and test SLAM on the first frame

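🟢 Create the containers that store the 3-D map points (worldpointset) and the key frames (imageviewset).

3-D map points are extracted from each key frame and stored, and the key frame itself is stored as well.

For each 3-D map point, the range of distances (depths) from which it is observed and the direction from which the cameras view it are also stored.

As a test, visualize the 3-D SLAM result for the first key frame.

3-D map point: one of the points that together make up the visible SLAM result (the sparse map).

Key frame: one of a subset of the input RGB images, selected because it adds enough new information (see the key frame conditions in the tracking step). SLAM uses the key frames as its reference images.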
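A `worldpointset` keeps, for every map point, which key frames observe it. After `updateLimitsAndDirection`, it also holds a mean viewing direction and a distance range. The Python sketch below mimics that bookkeeping in simplified form. `MapPoint` and its field names are hypothetical. The exact way MATLAB computes the limits (for example, any adjustment for the scale pyramid) is not reproduced.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def _norm(v: Vec3) -> float:
    return math.sqrt(sum(c * c for c in v))


@dataclass
class MapPoint:
    """Hypothetical simplified counterpart of one entry in a worldpointset."""
    position: Vec3
    observations: Dict[int, int] = field(default_factory=dict)  # key frame id -> feature index
    view_direction: Vec3 = (0.0, 0.0, 0.0)
    min_distance: float = 0.0
    max_distance: float = 0.0

    def update_limits_and_direction(self, camera_centers: Dict[int, Vec3]) -> None:
        """Mean unit vector from the observing cameras to the point, plus min/max camera distance."""
        if not self.observations:
            return
        units, dists = [], []
        for kf_id in self.observations:
            d = tuple(p - c for p, c in zip(self.position, camera_centers[kf_id]))
            n = _norm(d)
            units.append(tuple(c / n for c in d))
            dists.append(n)
        mean = tuple(sum(axis) / len(units) for axis in zip(*units))
        m = _norm(mean)
        self.view_direction = tuple(c / m for c in mean)
        self.min_distance, self.max_distance = min(dists), max(dists)


point = MapPoint(position=(0.0, 0.0, 2.0), observations={1: 17, 2: 42})
centers = {1: (0.0, 0.0, 0.0), 2: (3.0, 0.0, 2.0)}
point.update_limits_and_direction(centers)
print(point.view_direction, point.min_distance, point.max_distance)
```

The stored direction and distance range give a quick way to reject matches later. A map point seen from a very different angle or distance than usual is unlikely to be matched reliably.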

% Create an empty imageviewset object to store key frames

vSetKeyFrames = imageviewset;

% Create an empty worldpointset object to store 3-D map points

mapPointSet = worldpointset;

% Add the first key frame

vSetKeyFrames = addView(vSetKeyFrames, currKeyFrameId, initialPose, Points=currPoints,...

Features=currFeatures.Features);

% Add 3-D map points

[mapPointSet, rgbdMapPointsIdx] = addWorldPoints(mapPointSet, xyzPoints);

% Add observations of the map points

mapPointSet = addCorrespondences(mapPointSet, currKeyFrameId, rgbdMapPointsIdx, validIndex);

% Update view direction and depth

mapPointSet = updateLimitsAndDirection(mapPointSet, rgbdMapPointsIdx, vSetKeyFrames.Views);

% Update representative view

mapPointSet = updateRepresentativeView(mapPointSet, rgbdMapPointsIdx, vSetKeyFrames.Views);

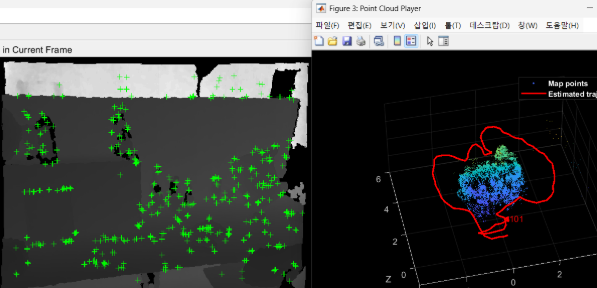

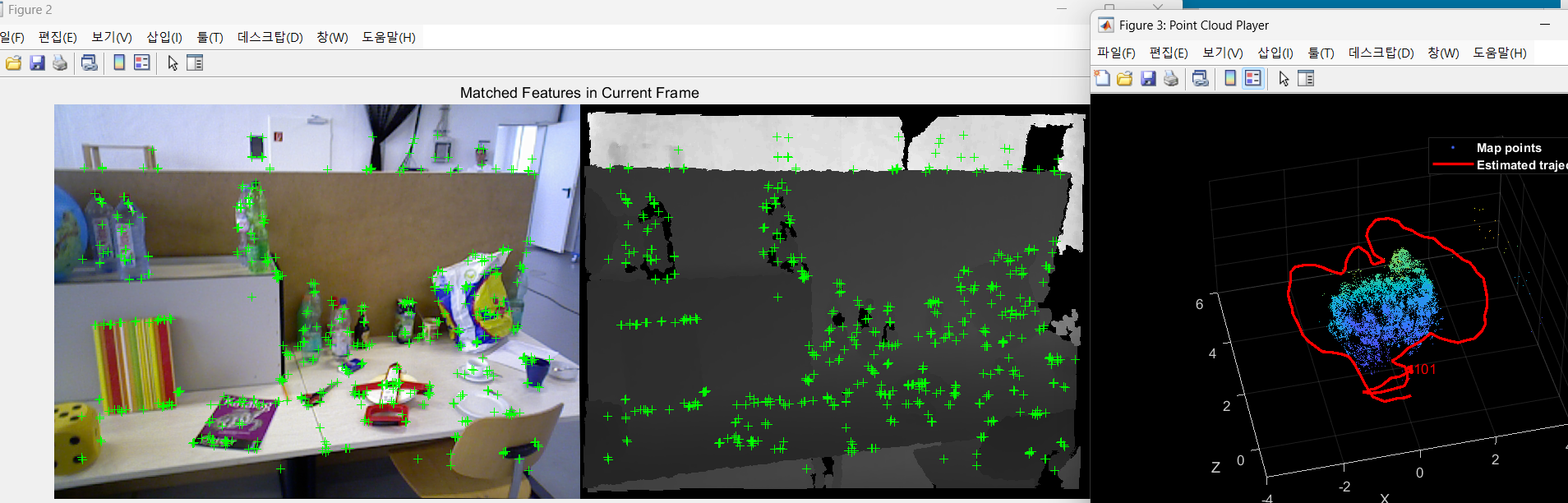

% Visualize matched features in the first key frame

featurePlot = helperVisualizeMatchedFeaturesRGBD(currIcolor, currIdepth, currPoints(validIndex));

% Visualize initial map points and camera trajectory

xLim = [-4 4];

yLim = [-3 1];

zLim = [-1 6];

mapPlot = helperVisualizeMotionAndStructure(vSetKeyFrames, mapPointSet, xLim, yLim, zLim);

% Show legend

showLegend(mapPlot);6.Tracking

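🟢 Each time the camera moves and a new image is captured, its feature points are compared with those of the last key frame to estimate the camera's position and orientation (pose) relative to the map.

The pose is then fine-tuned using additional feature matches found in the local map.

Kanade-Lucas-Tomasi (vision.PointTracker): tracks feature points from the last key frame into the current frame. The resulting 2-D to 3-D matches are what the pose is estimated from.

PnP: estimates the camera's position and orientation (pose) from corresponding (2-D image point, 3-D world point) pairs.

bundleAdjustmentMotion (motion-only bundle adjustment): refines the estimated camera pose by minimizing the reprojection error while keeping the map points fixed.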
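PnP and motion-only bundle adjustment both judge a candidate pose by how well it reprojects the tracked 3-D map points onto their observed 2-D positions. The Python sketch below computes that reprojection error with the pinhole model. It assumes the pose is given as a world-to-camera rotation R and translation t (x_cam = R·x_world + t). That convention is a choice made here: as far as I know, the camera poses stored in this MATLAB example (`rigidtform3d`) are camera-to-world, so they would need to be inverted first (for example with `pose2extr`).

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def project(point_w: Vec3, R: Mat3, t: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a world point, assuming a world-to-camera pose x_cam = R @ x_world + t."""
    xc = [sum(R[r][c] * point_w[c] for c in range(3)) + t[r] for r in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy


def rms_reprojection_error(points_w: List[Vec3], pixels: List[Tuple[float, float]],
                           R: Mat3, t: Vec3, fx: float, fy: float, cx: float, cy: float) -> float:
    """RMS pixel distance between projected map points and their observed 2-D locations."""
    sq = []
    for p, (u, v) in zip(points_w, pixels):
        pu, pv = project(p, R, t, fx, fy, cx, cy)
        sq.append((pu - u) ** 2 + (pv - v) ** 2)
    return math.sqrt(sum(sq) / len(sq))


I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0)]
observed = [(320.0, 240.0), (370.0, 240.0)]
# The correct pose gives zero error; a 1 cm sideways shift moves both projections by about 5 px
print(rms_reprojection_error(points, observed, I3, (0.0, 0.0, 0.0), 500, 500, 320, 240))
print(rms_reprojection_error(points, observed, I3, (0.01, 0.0, 0.0), 500, 500, 320, 240))
```

PnP searches for the pose that makes this error small (typically inside RANSAC to reject bad matches). Motion-only bundle adjustment then minimizes it further over the pose alone, with the map points held fixed.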

% ViewId of the last key frame

lastKeyFrameId = currKeyFrameId;

% Index of the last key frame in the input image sequence

lastKeyFrameIdx = currFrameIdx;

% Indices of all the key frames in the input image sequence

addedFramesIdx = lastKeyFrameIdx;

currFrameIdx = 2;

isLoopClosed = false;% Main loop

isLastFrameKeyFrame = true;

% Create and initialize the KLT tracker

tracker = vision.PointTracker(MaxBidirectionalError = 5);

initialize(tracker, currPoints.Location(validIndex, :), currIcolor);

while currFrameIdx < numel(imdsColor.Files)

currIcolor = readimage(imdsColor, currFrameIdx);

currIdepth = readimage(imdsDepth, currFrameIdx);

[currFeatures, currPoints] = helperDetectAndExtractFeatures(currIcolor, scaleFactor, numLevels, numPoints);

% Track the last key frame

% trackedMapPointsIdx: Indices of the map points observed in the current left frame

% trackedFeatureIdx: Indices of the corresponding feature points in the current left frame

[currPose, trackedMapPointsIdx, trackedFeatureIdx] = helperTrackLastKeyFrameKLT(tracker, currIcolor, mapPointSet, ...

vSetKeyFrames.Views, currFeatures, currPoints, lastKeyFrameId, intrinsics);

if isempty(currPose) || numel(trackedMapPointsIdx) < 20

currFrameIdx = currFrameIdx + 1;

continue

end

% Track the local map and check if the current frame is a key frame.

% A frame is a key frame if both of the following conditions are satisfied:

%

% 1. At least 20 frames have passed since the last key frame or the

% current frame tracks fewer than 120 map points.

% 2. The map points tracked by the current frame are fewer than 90% of

% points tracked by the reference key frame.

%

% localKeyFrameIds: ViewId of the connected key frames of the current frame

numSkipFrames = 20;

numPointsKeyFrame = 120;

[localKeyFrameIds, currPose, trackedMapPointsIdx, trackedFeatureIdx, isKeyFrame] = ...

helperTrackLocalMap(mapPointSet, vSetKeyFrames, trackedMapPointsIdx, ...

trackedFeatureIdx, currPose, currFeatures, currPoints, intrinsics, scaleFactor, ...

isLastFrameKeyFrame, lastKeyFrameIdx, currFrameIdx, numSkipFrames, numPointsKeyFrame);

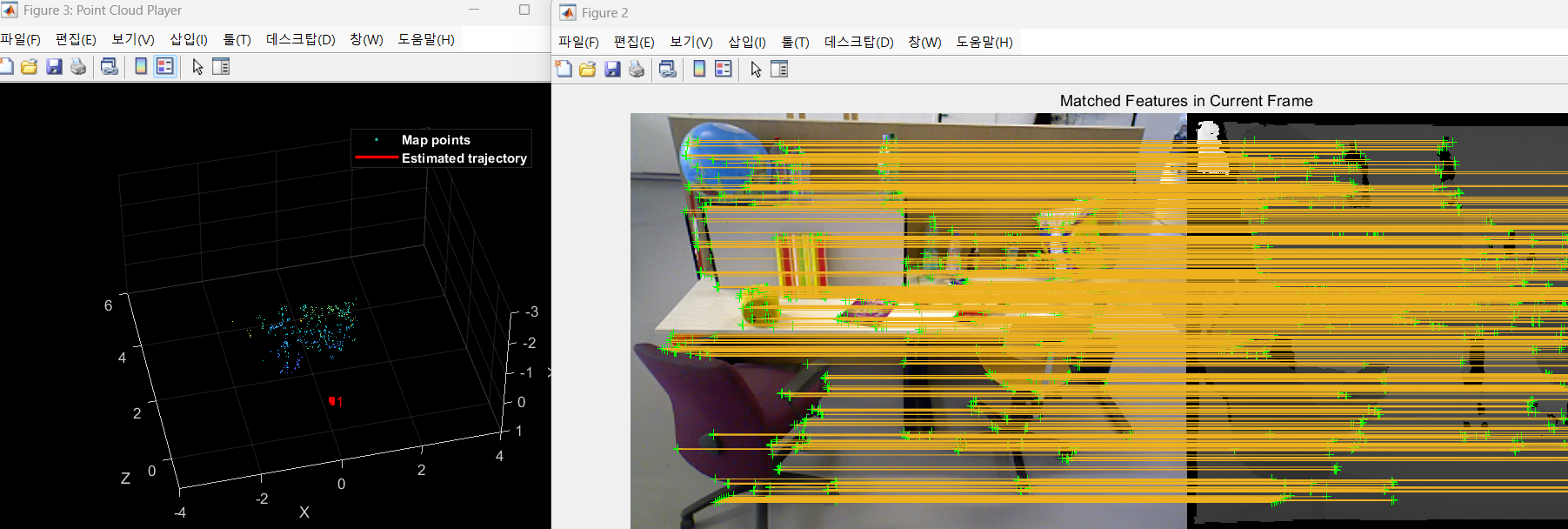

% Visualize matched features

updatePlot(featurePlot, currIcolor, currIdepth, currPoints(trackedFeatureIdx));

if ~isKeyFrame

currFrameIdx = currFrameIdx + 1;

isLastFrameKeyFrame = false;

continue

else

% Match feature points between the stereo images and get the 3-D world positions

[xyzPoints, validIndex] = helperReconstructFromRGBD(currPoints, currIdepth, ...

intrinsics, currPose, depthFactor);

[untrackedFeatureIdx, ia] = setdiff(validIndex, trackedFeatureIdx);

xyzPoints = xyzPoints(ia, :);

isLastFrameKeyFrame = true;

end

% Update current key frame ID

currKeyFrameId = currKeyFrameId + 1;7.Local Mapping

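🟢 This step explains when mapping (creating new map points) is performed. Mapping runs every time a new key frame is added. A new key frame is created when the current frame's feature points differ enough from those of the last key frame, that is, when the key frame conditions checked during tracking are met.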
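The key frame conditions in the tracking code's comments can be written as a small predicate:

- at least `numSkipFrames` = 20 frames since the last key frame, or fewer than `numPointsKeyFrame` = 120 tracked map points;
- and fewer than 90% of the map points tracked by the reference key frame.

This is a Python restatement of those comments, not the source of `helperTrackLocalMap`, which may apply further checks.

```python
def is_key_frame(frames_since_last_kf: int, num_tracked: int, num_tracked_by_ref_kf: int,
                 num_skip_frames: int = 20, num_points_key_frame: int = 120,
                 ratio: float = 0.9) -> bool:
    """True only if both conditions from the example's comments hold."""
    # Condition 1: enough time has passed, or tracking is getting thin
    enough_change = (frames_since_last_kf >= num_skip_frames
                     or num_tracked < num_points_key_frame)
    # Condition 2: the view overlaps noticeably less with the reference key frame
    lost_overlap = num_tracked < ratio * num_tracked_by_ref_kf
    return enough_change and lost_overlap


print(is_key_frame(5, 300, 310))   # False: too soon and still well tracked
print(is_key_frame(25, 300, 400))  # True: 20+ frames passed and 300 < 0.9 * 400
print(is_key_frame(3, 100, 400))   # True: few tracked points and little overlap
```

Requiring both conditions keeps the map from filling up with near-duplicate key frames while the camera is standing still.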

```matlab
    % Add the new key frame
    [mapPointSet, vSetKeyFrames] = helperAddNewKeyFrame(mapPointSet, vSetKeyFrames, ...
        currPose, currFeatures, currPoints, trackedMapPointsIdx, trackedFeatureIdx, localKeyFrameIds);

    % Remove outlier map points that are observed in fewer than 3 key frames
    if currKeyFrameId == 2
        triangulatedMapPointsIdx = [];
    end

    [mapPointSet, trackedMapPointsIdx] = ...
        helperCullRecentMapPointsRGBD(mapPointSet, trackedMapPointsIdx, triangulatedMapPointsIdx, ...
        rgbdMapPointsIdx);

    % Add new map points computed from the depth image
    [mapPointSet, rgbdMapPointsIdx] = addWorldPoints(mapPointSet, xyzPoints);
    mapPointSet = addCorrespondences(mapPointSet, currKeyFrameId, rgbdMapPointsIdx, ...
        untrackedFeatureIdx);

    % Create new map points by triangulation
    minNumMatches = 10;
    minParallax = 1;
    [mapPointSet, vSetKeyFrames, triangulatedMapPointsIdx, rgbdMapPointsIdx] = helperCreateNewMapPointsStereo( ...
        mapPointSet, vSetKeyFrames, currKeyFrameId, intrinsics, scaleFactor, minNumMatches, minParallax, ...
        untrackedFeatureIdx, rgbdMapPointsIdx);

    % Update view direction and depth
    mapPointSet = updateLimitsAndDirection(mapPointSet, [triangulatedMapPointsIdx; rgbdMapPointsIdx], ...
        vSetKeyFrames.Views);

    % Update representative view
    mapPointSet = updateRepresentativeView(mapPointSet, [triangulatedMapPointsIdx; rgbdMapPointsIdx], ...
        vSetKeyFrames.Views);

    % Local bundle adjustment
    [mapPointSet, vSetKeyFrames, triangulatedMapPointsIdx, rgbdMapPointsIdx] = ...
        helperLocalBundleAdjustmentStereo(mapPointSet, vSetKeyFrames, ...
        currKeyFrameId, intrinsics, triangulatedMapPointsIdx, rgbdMapPointsIdx);

    % Visualize 3-D world points and camera trajectory
    updatePlot(mapPlot, vSetKeyFrames, mapPointSet);

    % Set the feature points to be tracked
    [~, index2d] = findWorldPointsInView(mapPointSet, currKeyFrameId);
    setPoints(tracker, currPoints.Location(index2d, :));
```

8. Loop Closure

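🟢 When the camera returns to a place it has already visited, the database is queried so that the place is not treated as new, and the SLAM result is corrected.

A detected loop adds a new connection (loop edge) between the current key frame and the earlier key frame, even though the two were never connected through tracking. This connection is then used in the rest of the SLAM process.

Valid loop candidate: a key frame returned by the database query is accepted only if it is not connected to the last key frame and three of its neighboring key frames are also loop candidates.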
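The candidate check can be sketched as a filter over the covisibility graph (key frame to the set of key frames that share map points with it). As I understand the MathWorks description, a retrieved key frame is kept only if two things hold: it is not connected to the last key frame, and at least three of its own neighbors were also retrieved. The function name and the example graph below are hypothetical. This is not the code of `helperCheckLoopClosureT`.

```python
from typing import Dict, Iterable, List, Set


def valid_loop_candidates(candidates: Iterable[int], last_key_frame: int,
                          covisibility: Dict[int, Set[int]], min_neighbors: int = 3) -> List[int]:
    """Keep candidates not connected to last_key_frame that have at least
    min_neighbors covisible neighbors which are themselves candidates."""
    cand = set(candidates)
    valid = []
    for kf in sorted(cand):
        neighbors = covisibility.get(kf, set())
        if kf == last_key_frame or last_key_frame in neighbors:
            continue  # part of the current neighborhood, not a revisit
        if len(neighbors & cand) >= min_neighbors:
            valid.append(kf)
    return valid


# Key frames 1-5 are from the first visit; 28-30 are the current neighborhood
covis = {1: {2, 3}, 2: {1, 3, 4}, 3: {1, 2, 4, 5}, 4: {2, 3, 5}, 5: {3, 4},
         28: {29, 30}, 29: {28, 30}, 30: {28, 29}}
print(valid_loop_candidates({2, 3, 4, 5, 29}, 30, covis))  # [3, 4]
```

Requiring a whole cluster of neighbors to be retrieved together is what guards against a single look-alike image (e.g. a repeated texture) triggering a false loop.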

% Check loop closure after some key frames have been created

if currKeyFrameId > 20

% Minimum number of feature matches of loop edges

loopEdgeNumMatches = 120;

% Detect possible loop closure key frame candidates

[isDetected, validLoopCandidates] = helperCheckLoopClosureT(vSetKeyFrames, currKeyFrameId, ...

loopDatabase, currIcolor, loopEdgeNumMatches);

if isDetected

% Add loop closure connections

maxDistance = 0.1;

[isLoopClosed, mapPointSet, vSetKeyFrames] = helperAddLoopConnectionsStereo(...

mapPointSet, vSetKeyFrames, validLoopCandidates, currKeyFrameId, ...

currFeatures, currPoints, loopEdgeNumMatches, maxDistance);

end

end

% If no loop closure is detected, add current features into the database

if ~isLoopClosed

addImageFeatures(loopDatabase, currFeatures, currKeyFrameId);

end

% Update IDs and indices

lastKeyFrameId = currKeyFrameId;

lastKeyFrameIdx = currFrameIdx;

addedFramesIdx = [addedFramesIdx; currFrameIdx]; %#ok<AGROW>

currFrameIdx = currFrameIdx + 1;

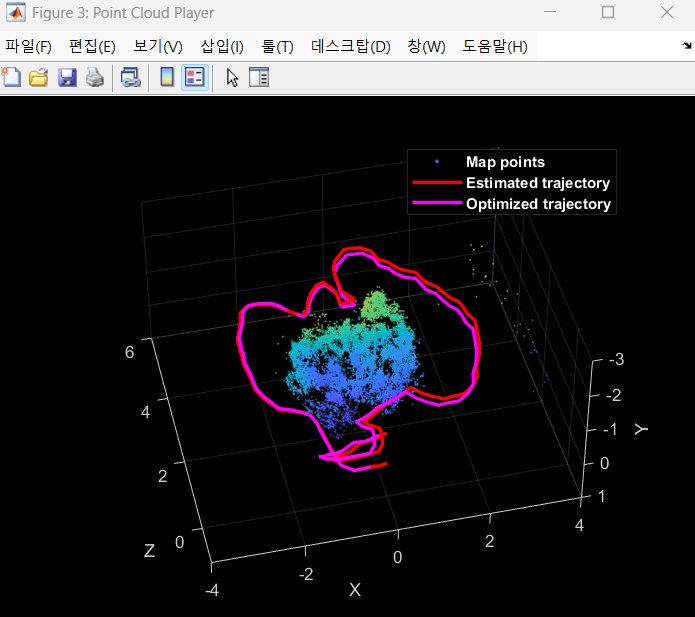

end % End of main loop9.Optimization of Pose Graph

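🟢 Connections between key frames that are supported by fewer matched feature points than minNumMatches are removed, leaving the essential graph. This filters out weak, noisy connections.

The camera poses are then optimized over this essential graph, which fine-tunes the SLAM trajectory and corrects the drift.

Finally, the 3-D map point positions are updated using the optimized poses. This step moves the existing map points; it does not itself remove points (outlier map points are culled earlier, during local mapping).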
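A one-dimensional toy shows both ideas at once:

1. Weak connections below `minNumMatches` are dropped.
2. The remaining relative-pose constraints (odometry plus the loop edge) are solved by least squares, so the accumulated drift is spread over the whole loop.

The edges, match counts, and measurements are made up. Positions are scalars instead of 3-D poses, and the solver is plain normal equations. `optimizePoses` itself works on full 3-D poses with its own solver.

```python
from typing import List, Tuple

# (from node, to node, measured x_to - x_from, number of feature matches)
Edge = Tuple[int, int, float, int]


def essential_edges(edges: List[Edge], min_num_matches: int) -> List[Edge]:
    """Keep only connections supported by at least min_num_matches matches."""
    return [e for e in edges if e[3] >= min_num_matches]


def solve_1d_pose_graph(num_nodes: int, edges: List[Edge]) -> List[float]:
    """Least-squares 1-D positions with node 0 fixed at 0, via normal equations."""
    n = num_nodes - 1  # unknowns are x_1 .. x_{num_nodes-1}
    H = [[0.0] * n for _ in range(n)]
    g = [0.0] * n
    for i, j, z, _ in edges:
        # Residual x_j - x_i - z has Jacobian -1 at node i and +1 at node j
        jac = ((i, -1.0), (j, 1.0))
        for a, sa in jac:
            if a == 0:
                continue  # node 0 is fixed and has no unknown
            g[a - 1] += sa * z
            for b, sb in jac:
                if b != 0:
                    H[a - 1][b - 1] += sa * sb
    # Solve H x = g by Gaussian elimination with partial pivoting
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(H[r][col]))
        H[col], H[piv] = H[piv], H[col]
        g[col], g[piv] = g[piv], g[col]
        for r in range(col + 1, n):
            f = H[r][col] / H[col][col]
            for c in range(col, n):
                H[r][c] -= f * H[col][c]
            g[r] -= f * g[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (g[r] - sum(H[r][c] * x[c] for c in range(r + 1, n))) / H[r][r]
    return [0.0] + x


edges = [
    (0, 1, 1.0, 200), (1, 2, 1.0, 180), (2, 3, 1.0, 150),  # odometry: 3.0 in total
    (0, 3, 2.7, 120),  # loop closure: node 3 is really 2.7 from node 0
    (1, 3, 5.0, 10),   # weak, inconsistent connection
]
print(solve_1d_pose_graph(4, essential_edges(edges, 50)))  # approximately [0.0, 0.925, 1.85, 2.775]
```

The 0.3 disagreement between odometry (3.0) and the loop edge (2.7) ends up shared equally by the four edges (0.075 each) instead of piling up at the last key frame. If filtering ever disconnected the graph, H would become singular and the division would fail. A real system therefore has to keep the graph connected.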

```matlab
% Optimize the poses
minNumMatches = 50;
vSetKeyFramesOptim = optimizePoses(vSetKeyFrames, minNumMatches, Tolerance=1e-16);

% Update map points after optimizing the poses
mapPointSet = helperUpdateGlobalMap(mapPointSet, vSetKeyFrames, vSetKeyFramesOptim);

updatePlot(mapPlot, vSetKeyFrames, mapPointSet);

% Plot the optimized camera trajectory
optimizedPoses = poses(vSetKeyFramesOptim);
plotOptimizedTrajectory(mapPlot, optimizedPoses)

% Update legend
showLegend(mapPlot);
```

10. SLAM result

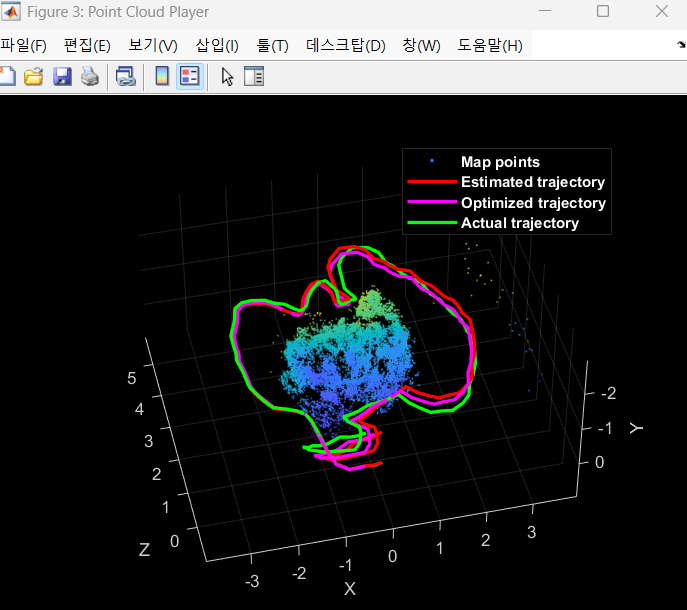

11. Compare the SLAM result with the ground truth

🟢 Evaluate the algorithm's accuracy by comparing, for each key frame, the ground-truth camera position and orientation with the position and orientation estimated by SLAM.
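A common single-number summary of this comparison is the root-mean-square of the position errors between matched estimated and ground-truth poses. The sketch assumes two things: the trajectories are already expressed in the same coordinate frame, and they are matched one-to-one (in the example, `indexPairs(addedFramesIdx, 1)` selects the ground-truth entries for the key frames). A full evaluation would usually align the trajectories first; that step is omitted here.

```python
import math
from typing import List, Sequence


def trajectory_rmse(estimated: List[Sequence[float]], ground_truth: List[Sequence[float]]) -> float:
    """RMSE of per-frame translation errors, in the unit of the inputs (e.g. meters)."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need two non-empty trajectories of equal length")
    sq = [sum((a - b) ** 2 for a, b in zip(e, g)) for e, g in zip(estimated, ground_truth)]
    return math.sqrt(sum(sq) / len(sq))


est = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.1, 0.0)]
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(trajectory_rmse(est, gt))  # a single 10 cm error over 3 frames
```

RMSE weights large errors heavily, so a single badly drifted section shows up clearly in the number even if most of the trajectory is accurate.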

% Load ground truth

gTruthData = load("orbslamGroundTruth.mat");

gTruth = gTruthData.gTruth;

% Plot the actual camera trajectory

plotActualTrajectory(mapPlot, gTruth(indexPairs(addedFramesIdx, 1)), optimizedPoses);

% Show legend

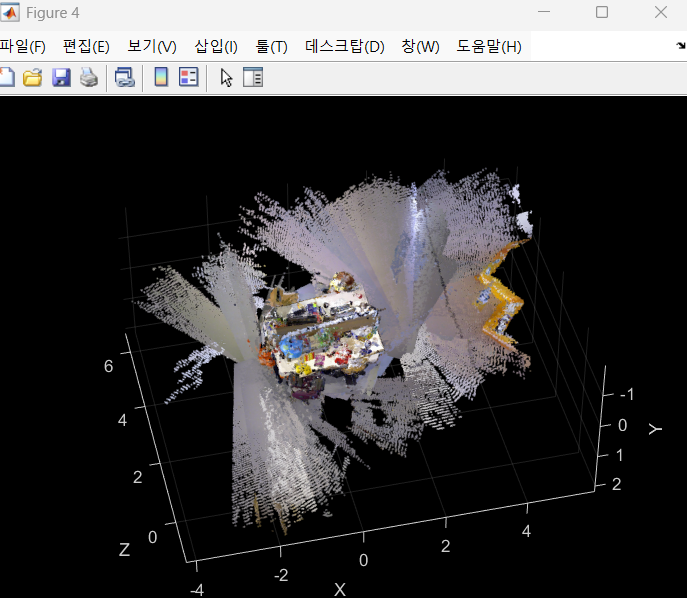

showLegend(mapPlot);12. Dense Reconstruction

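🟢 Dense reconstruction builds a dense 3-D model from the captured images. The SLAM map above consists only of sparse feature points. This step reconstructs the 3-D structure of the environment in much finer detail, including the shape of surfaces, turning the SLAM output into a form we can actually look at. In the code below, every second pixel of each key frame's depth image is back-projected with the optimized key frame pose, and the resulting colored point clouds are merged.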
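The dense step does three things:

1. It samples every second pixel while skipping a 40-pixel border (`offset = 40`, `meshgrid(offset:2:...)`).
2. It back-projects each sample.
3. It moves each point into the world frame with the optimized key frame pose, before all clouds are concatenated.

The Python sketch below reproduces the sampling grid, using MATLAB's end-inclusive ranges, and the transform p_w = R·p_c + t. Treating the optimized pose as a camera-to-world rotation R and translation t is an assumption made here for illustration.

```python
from typing import List, Sequence, Tuple


def sample_grid(width: int, height: int, offset: int = 40, step: int = 2) -> List[Tuple[int, int]]:
    """(u, v) pixels of MATLAB meshgrid(offset:step:width-offset, offset:step:height-offset).

    The ordering differs from MATLAB's column-major X(:), which does not matter for a point cloud.
    """
    us = range(offset, width - offset + 1, step)
    vs = range(offset, height - offset + 1, step)
    return [(u, v) for v in vs for u in us]


def camera_to_world(p_c: Sequence[float], R: Sequence[Sequence[float]],
                    t: Sequence[float]) -> Tuple[float, float, float]:
    """p_w = R @ p_c + t for an assumed camera-to-world pose (R, t)."""
    return tuple(sum(R[r][c] * p_c[c] for c in range(3)) + t[r] for r in range(3))


grid = sample_grid(640, 480)
print(len(grid), grid[0], grid[-1])  # 56481 (40, 40) (600, 440)

# Camera rotated 90 degrees about Y and placed at x = 1: a point 2 m ahead lands at x = 3
R_y90 = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [-1.0, 0.0, 0.0]]
print(camera_to_world((0.0, 0.0, 2.0), R_y90, (1.0, 0.0, 0.0)))  # (3.0, 0.0, 0.0)
```

For a 640×480 image this gives 281 × 201 = 56,481 samples per key frame before invalid (zero-depth) pixels are dropped. That is why sampling every second pixel rather than every pixel keeps the merged cloud manageable.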

```matlab
% Create an array of pointCloud objects to store the world points constructed
% from the key frames
ptClouds = repmat(pointCloud(zeros(1, 3)), numel(addedFramesIdx), 1);

% Ignore image points at the boundary
offset = 40;
[X, Y] = meshgrid(offset:2:imageSize(2)-offset, offset:2:imageSize(1)-offset);

for i = 1:numel(addedFramesIdx)
    Icolor = readimage(imdsColor, addedFramesIdx(i));
    Idepth = readimage(imdsDepth, addedFramesIdx(i));

    [xyzPoints, validIndex] = helperReconstructFromRGBD([X(:), Y(:)], ...
        Idepth, intrinsics, optimizedPoses.AbsolutePose(i), depthFactor);

    % Look up the RGB color of every sampled pixel (one row per pixel)
    colors = zeros(numel(X), 3, 'like', Icolor);
    for j = 1:numel(X)
        colors(j, 1:3) = Icolor(Y(j), X(j), :);
    end
    ptClouds(i) = pointCloud(xyzPoints, Color=colors(validIndex, :));
end

% Concatenate the point clouds
pointCloudsAll = pccat(ptClouds);

figure
pcshow(pointCloudsAll, VerticalAxis="y", VerticalAxisDir="down");
xlabel('X')
ylabel('Y')
zlabel('Z')
```

💡 Wrap-up

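I learned how 3D SLAM works with an RGB-D (depth) camera.

While looking into it further, I also found that in the camera motion and pose estimation step (tracking), fusing an IMU, rather than relying only on the motion of feature points, can make the position and orientation estimates much more accurate.

(❗❗ This post does not use an IMU ❗❗)

Next, I want to try SLAM with sensor fusion of a 3D camera and an IMU in MATLAB.

I also realized that by swapping in our own dataset, I could run SLAM on any place I want.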