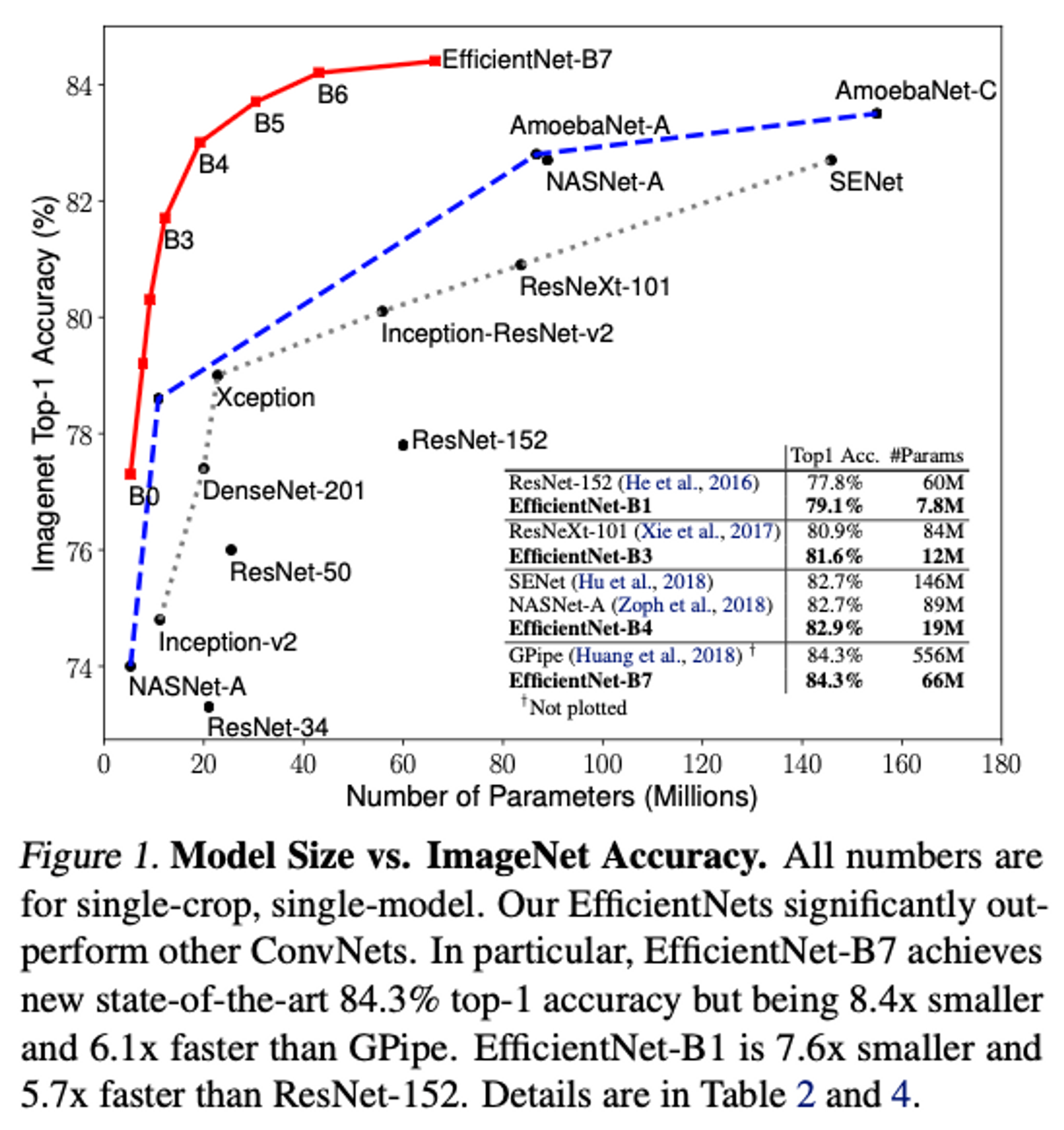

EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks

Abstract

Proposes a method for adjusting a network's scale so that model accuracy and efficiency stay balanced

→ Compound Scaling

Introduction

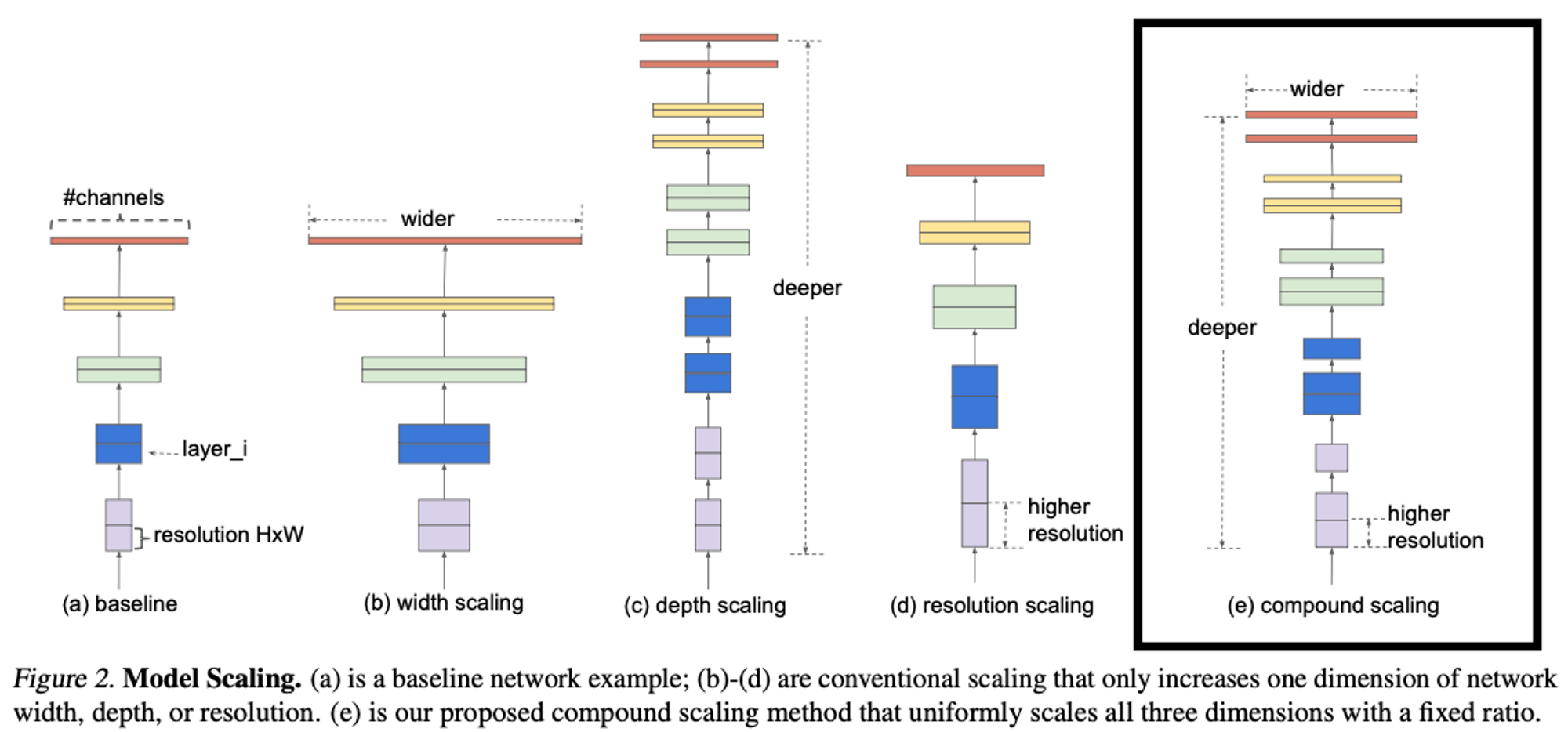

Previously, ConvNets were scaled up along only one of depth, width, or resolution

- Why not scale them jointly: arbitrary scaling requires tedious manual tuning and still often yields sub-optimal accuracy and efficiency

Scale width/depth/resolution by a constant ratio → Compound Scaling Method

- uniformly scales network width, depth and resolution with a set of fixed scaling coefficients

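- If we want to use $2^N$ times more computational resources → scale depth by $\alpha^N$, width by $\beta^N$, and image size by $\gamma^N$

- where $\alpha, \beta, \gamma$ are constant coefficients found by a small grid search on the original small model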

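- Intuitively, a larger input image requires more layers and more channels:

  - more layers, to increase the receptive field

    - the receptive field (the region of the input that a single output pixel "sees") must grow along with the image

  - more channels, to capture fine-grained patterns

    - a larger image contains more small details and fine patterns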

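A minimal sketch of the receptive-field argument, assuming plain stacked convolutions (the helper `receptive_field` is hypothetical, not from the paper). The standard recurrence shows that each stride-1 3x3 conv widens the receptive field by only 2 input pixels, so when the input is upsampled and objects span more pixels, more layers (or more downsampling) are needed to cover them.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one output unit after a stack of conv layers.

    layers: list of (kernel_size, stride) tuples, applied in order.
    Recurrence: rf += (k - 1) * jump; jump *= stride, where `jump` is the
    distance in input pixels between two adjacent units of the current layer.
    """
    rf, jump = 1, 1
    for k, s in layers:
        rf += (k - 1) * jump
        jump *= s
    return rf


# Stride-1 3x3 convs grow the receptive field only linearly: 1 + 2n.
for n in (1, 2, 4, 8):
    print(n, receptive_field([(3, 1)] * n))

# A hypothetical stack that also downsamples grows it faster.
print(receptive_field([(3, 2), (3, 1), (3, 2), (3, 1)]))
```

Doubling the input resolution roughly doubles the pixel extent of each object, so keeping the same relative coverage needs a correspondingly larger receptive field, which is the motivation for scaling depth together with resolution.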

Compound Model Scaling

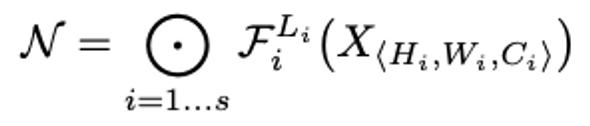

Problem Formulation

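A ConvNet layer $i$ is a function $Y_i = \mathcal{F}_i(X_i)$:

- $Y_i$: output tensor

- $\mathcal{F}_i$: operator

- $X_i$: input tensor with shape $\langle H_i, W_i, C_i \rangle$ (height, width, channel)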

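A ConvNet $\mathcal{N}$ is a composition of $s$ stages:

$$\mathcal{N} = \bigodot_{i=1 \ldots s} \mathcal{F}_i^{L_i}\left(X_{\langle H_i, W_i, C_i \rangle}\right)$$

where $\mathcal{F}_i^{L_i}$ means layer $\mathcal{F}_i$ is repeated $L_i$ times in stage $i$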

Model scaling keeps $\mathcal{F}_i$ fixed and expands the network length $L_i$, width $C_i$, and/or resolution $(H_i, W_i)$
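In the paper's formulation, scaling with depth, width, and resolution coefficients $d, w, r$ turns each stage's $L_i$ into $d \cdot L_i$, $C_i$ into $w \cdot C_i$, and $(H_i, W_i)$ into $(r \cdot H_i, r \cdot W_i)$, while the operator $\mathcal{F}_i$ stays the same. The sketch below encodes that bookkeeping in plain Python. The `Stage` type, `scale_network` helper, the toy baseline, and the rounding rule (ceil for layers, round for channels and resolution) are all assumptions for illustration, not EfficientNet-B0 or the authors' code.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    op: str        # stage operator F_i (never changed by scaling)
    layers: int    # L_i: how many times F_i is repeated
    height: int    # H_i
    width: int     # W_i
    channels: int  # C_i


def scale_network(stages, d, w, r):
    """Return a copy of `stages` with L_i, C_i and (H_i, W_i) expanded by d, w, r."""
    return [
        Stage(
            op=s.op,
            layers=math.ceil(d * s.layers),    # depth: more repeats of the same F_i
            height=round(r * s.height),        # resolution: larger feature maps
            width=round(r * s.width),
            channels=round(w * s.channels),    # width: more channels
        )
        for s in stages
    ]


# Hypothetical toy baseline (NOT EfficientNet-B0).
baseline = [
    Stage("conv3x3", 1, 224, 224, 32),
    Stage("block_a", 2, 112, 112, 24),
    Stage("block_b", 3, 56, 56, 40),
]

for stage in scale_network(baseline, d=2.0, w=1.5, r=1.25):
    print(stage)
```

Keeping `op` untouched is the point of the formulation: the search space shrinks to the three coefficients instead of redesigning every layer.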

Scaling Dimensions

Depth

- Deeper networks capture richer and more complex features and generalize well on new tasks

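- However, deeper networks are harder to train because of the vanishing gradient problem

- Remedies such as skip connections and batch normalization alleviate this, but do not fully solve it: the accuracy gain of very deep networks diminishes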

Width

- Wider networks capture more fine-grained features and are easier to train

- However, extremely wide but shallow networks tend to have difficulties in capturing higher-level features

Resolution

- Higher-resolution input images let the network capture more fine-grained patterns

- accuracy gain diminishes for very high resolutions

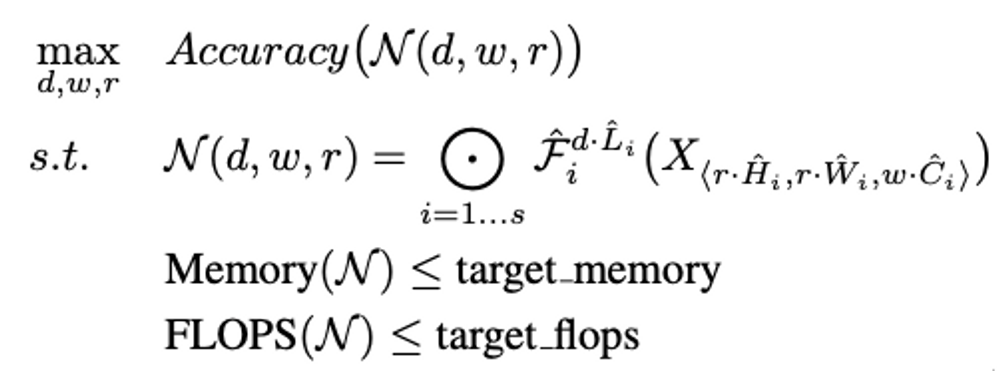

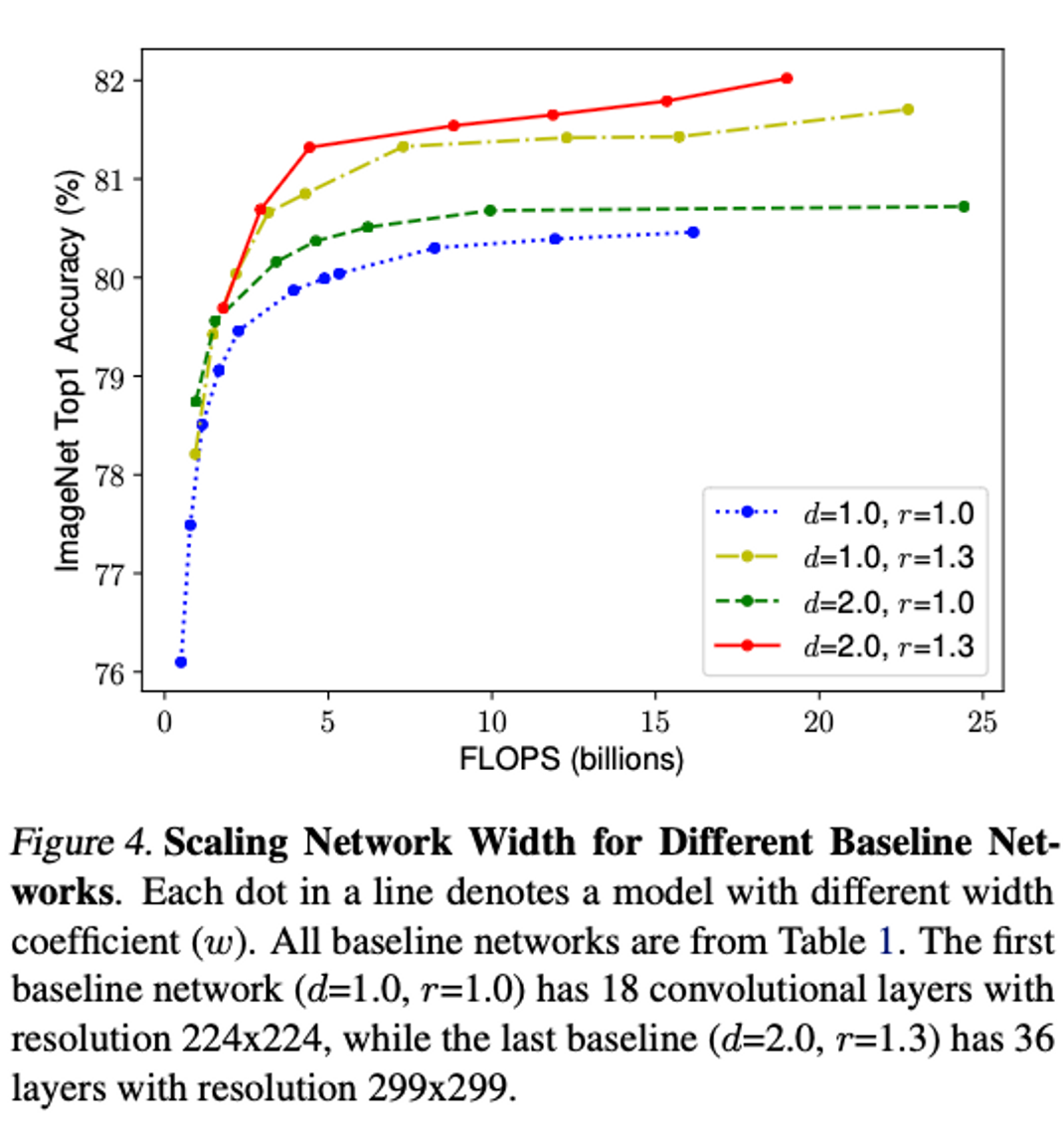

Compound Scaling

Intuitively, for higher-resolution images → increase depth & increase width

⇒ need to coordinate and balance different scaling dimensions rather than conventional single-dimension scaling

Observation 2

- balance all dimensions of network width, depth, and resolution during ConvNet scaling

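- Use a compound coefficient $\phi$ to uniformly scale depth $d = \alpha^\phi$, width $w = \beta^\phi$, and resolution $r = \gamma^\phi$, subject to $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$ and $\alpha \ge 1, \beta \ge 1, \gamma \ge 1$

- Here the constants $\alpha, \beta, \gamma$ are determined by a small grid search, and the user-specified $\phi$ controls how many more resources are available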
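The grid-search step can be sketched as follows: with $\phi$ fixed to 1 (roughly twice the baseline FLOPS), enumerate candidate triples, keep only those with $\alpha \cdot \beta^2 \cdot \gamma^2$ close to 2, and pick the best-scoring one. The candidate grid, the tolerance `tol`, and the `evaluate` callback (which in practice would train and evaluate the scaled baseline) are hypothetical choices, not values from the paper.

```python
import itertools


def grid_search_coefficients(evaluate,
                             candidates=(1.0, 1.05, 1.1, 1.15, 1.2, 1.25, 1.3),
                             target=2.0, tol=0.1):
    """Sketch of the small grid search for (alpha, beta, gamma) with phi = 1.

    Only triples whose FLOPS factor alpha * beta**2 * gamma**2 lies within `tol`
    of `target` are evaluated; `evaluate(alpha, beta, gamma)` is a hypothetical
    callback returning an accuracy-like score (higher is better).
    """
    best, best_score = None, float("-inf")
    for alpha, beta, gamma in itertools.product(candidates, repeat=3):
        if abs(alpha * beta**2 * gamma**2 - target) > tol:
            continue  # violates the ~2x FLOPS budget
        score = evaluate(alpha, beta, gamma)
        if score > best_score:
            best, best_score = (alpha, beta, gamma), score
    return best


# Toy stand-in for "train and measure accuracy": prefer balanced coefficients.
print(grid_search_coefficients(lambda a, b, g: -abs(a - b) - abs(b - g)))
```

Because each candidate requires training a model, the search is only affordable on the small baseline; larger models then reuse the same $\alpha, \beta, \gamma$ with a bigger $\phi$.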

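Doubling depth → doubles FLOPS

Doubling width or resolution → quadruples FLOPS (FLOPS ∝ $d \cdot w^2 \cdot r^2$)

→ In this paper, total FLOPS therefore increase by $(\alpha \cdot \beta^2 \cdot \gamma^2)^\phi \approx 2^\phi$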
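The FLOPS ratios follow from the cost of a convolution: linear in the number of layers, but quadratic in channels (input and output both grow) and in spatial size (height and width both grow). The sketch below checks this on a hypothetical plain ConvNet (`toy_network_flops` and its default sizes are assumptions) and evaluates the ideal factor $d \cdot w^2 \cdot r^2$ for the coefficients the paper reports for EfficientNet-B0 ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$).

```python
def conv_flops(height, width, c_in, c_out, k=3):
    """Multiply-accumulates of one stride-1, 'same'-padded k x k conv on a height x width map."""
    return height * width * k * k * c_in * c_out


def toy_network_flops(d, w, r, base_layers=4, base_channels=32, base_res=56):
    """Hypothetical plain ConvNet: round(d * base_layers) identical conv layers,
    each with round(w * base_channels) channels on a round(r * base_res)^2 map."""
    layers = round(d * base_layers)
    channels = round(w * base_channels)
    res = round(r * base_res)
    return layers * conv_flops(res, res, channels, channels)


def compound_flops_factor(alpha, beta, gamma, phi):
    """Ideal FLOPS multiplier d * w^2 * r^2 with d = alpha^phi, w = beta^phi, r = gamma^phi."""
    d, w, r = alpha**phi, beta**phi, gamma**phi
    return d * w**2 * r**2


base = toy_network_flops(1, 1, 1)
print(toy_network_flops(2, 1, 1) / base)  # depth x2      -> 2.0
print(toy_network_flops(1, 2, 1) / base)  # width x2      -> 4.0
print(toy_network_flops(1, 1, 2) / base)  # resolution x2 -> 4.0

# Ideal growth per phi step stays close to (slightly below) 2**phi.
for phi in range(1, 4):
    print(phi, round(compound_flops_factor(1.2, 1.1, 1.15, phi), 2), 2**phi)
```

Real scaled networks round layer and channel counts to integers, so their measured FLOPS only approximate $2^\phi$.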

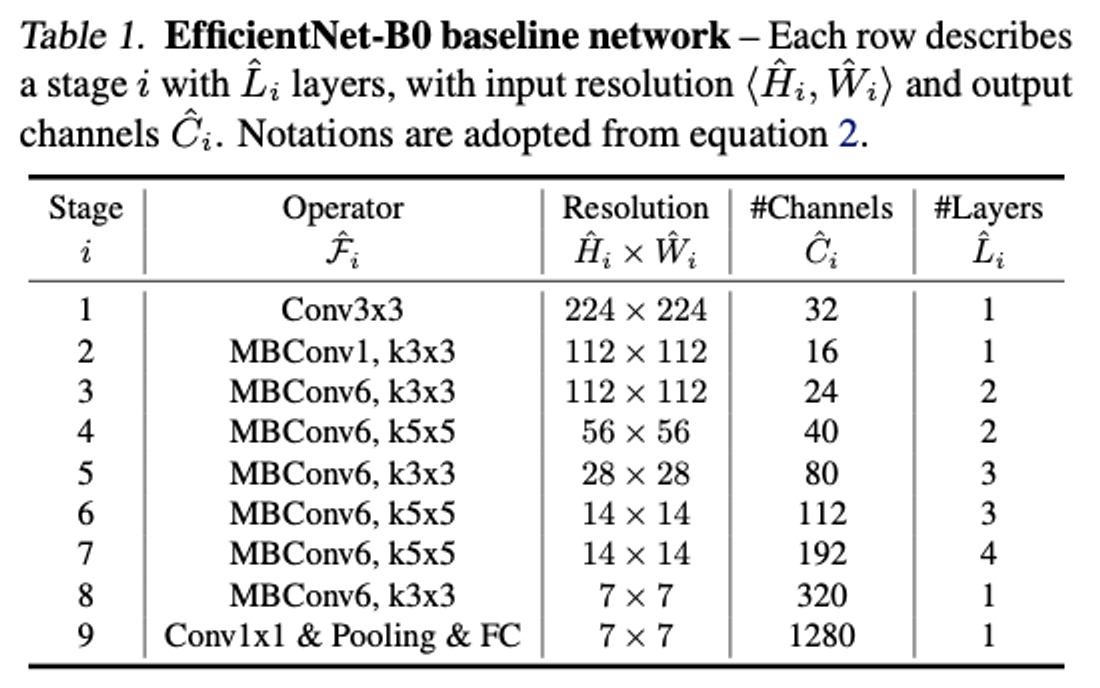

EfficientNet Architecture

- Architecture similar to MnasNet

- Main building block is MBConv (mobile inverted bottleneck)

- Squeeze-and-excitation optimization is added to the blocks
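A minimal NumPy sketch of the squeeze-and-excitation operation on a single feature map: global average pooling "squeezes" each channel to one number, two small fully connected layers produce a per-channel gate in (0, 1), and the input channels are rescaled by those gates. The random weights, the sizes, and the reduction `C_r = C // 4` are assumptions; the ReLU/sigmoid pair follows the original SE design, and EfficientNet implementations may use a different activation (e.g. swish) in the reduce step.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def squeeze_excite(x, w_reduce, b_reduce, w_expand, b_expand):
    """Squeeze-and-excitation on a feature map x of shape (H, W, C).

    squeeze: global average pool over H, W          -> (C,)
    excite : FC C -> C_r + ReLU, FC C_r -> C + sigmoid -> per-channel gates in (0, 1)
    scale  : multiply every channel of x by its gate
    """
    s = x.mean(axis=(0, 1))                       # (C,)
    z = np.maximum(0.0, s @ w_reduce + b_reduce)  # (C_r,)
    gates = sigmoid(z @ w_expand + b_expand)      # (C,)
    return x * gates                              # broadcasts over H and W


rng = np.random.default_rng(0)
H, W, C, C_r = 7, 7, 16, 4  # hypothetical sizes; C_r = C // 4 is an assumed ratio
x = rng.standard_normal((H, W, C))
params = (
    rng.standard_normal((C, C_r)) * 0.1, np.zeros(C_r),
    rng.standard_normal((C_r, C)) * 0.1, np.zeros(C),
)
y = squeeze_excite(x, *params)
print(y.shape)  # (7, 7, 16)
```

The gate is computed from global context but applied per channel, so SE adds very few parameters and FLOPs relative to the convolutions in the block.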

Conclusion