1. Installation and Setup

pip install langchain

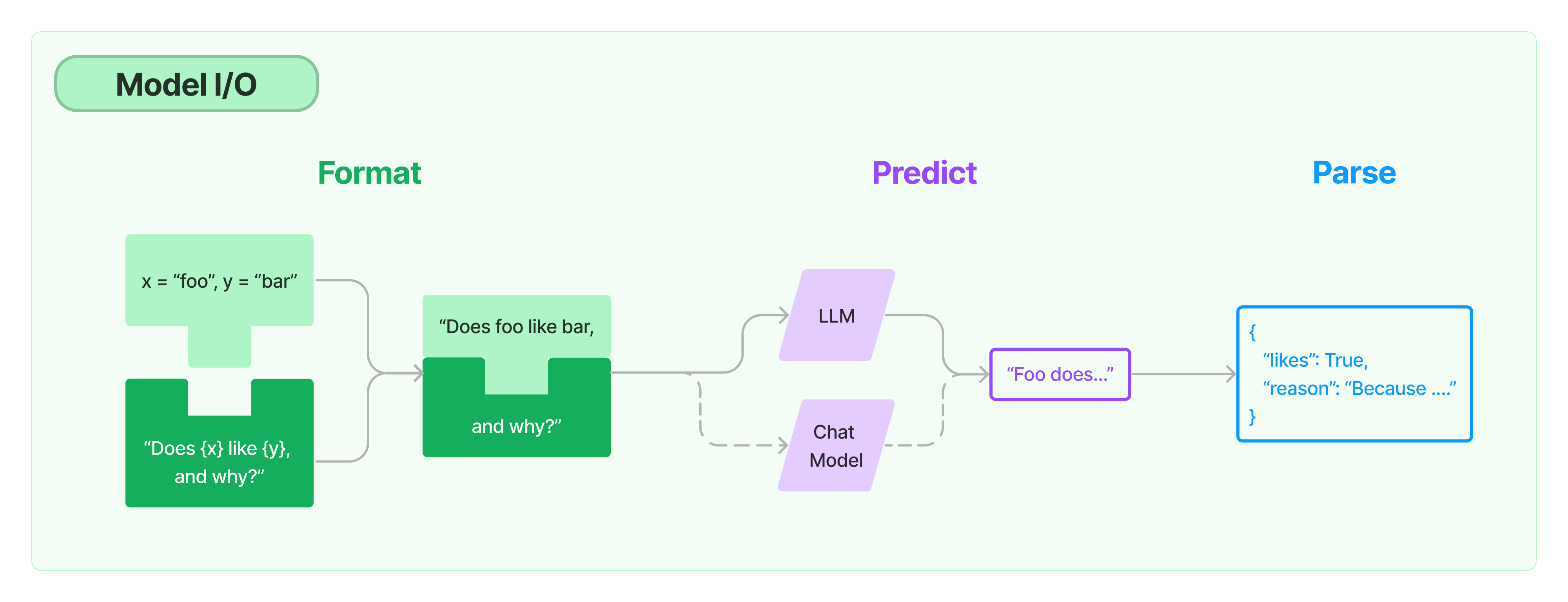

2. Model I/O (Overall Structure)

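- Prompts (green): templatize inputs, select them dynamically, and manage model inputs.
- Language models (purple): call language models through a common interface.
- Output parsers (blue): extract information from model outputs.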

=> This part covers Prompts.
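To make the three stages concrete, here is a plain-Python sketch of the Model I/O flow. It does not use LangChain: `build_prompt`, `fake_model`, and `parse_output` are hypothetical names, and `fake_model` just returns a canned string in place of a real model call.

```python
# Plain-Python sketch of the Model I/O flow: prompt -> model -> output parser.
# All names are hypothetical; no LangChain objects are used.
from typing import List


def build_prompt(product: str) -> str:
    """Prompts: fill a fixed template with the user's input."""
    template = (
        "Suggest three company names for a business that makes {product}, "
        "separated by commas."
    )
    return template.format(product=product)


def fake_model(prompt: str) -> str:
    """Language model: a stub that ignores the prompt and returns a canned reply."""
    return "SockStar, Rainbow Feet, Hue Hosiery"


def parse_output(text: str) -> List[str]:
    """Output parser: turn the raw text into a list of names."""
    return [name.strip() for name in text.split(",") if name.strip()]


names = parse_output(fake_model(build_prompt("colorful socks")))
print(names)  # ['SockStar', 'Rainbow Feet', 'Hue Hosiery']
```

Swapping `fake_model` for a real model call leaves the other two stages untouched, which is the point of separating them.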

3. Prompt Templates - Basics

3-1. What Are Prompt Templates?

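- A prompt template fixes the format in which a prompt's instructions are written. It is part of prompt engineering.
- Once ChatGPT came out, many techniques for using it well were studied.
- Think of it as building a reusable format like the example below.
- Example: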

Act as a JavaScript Console

I want you to act as a javascript console. I will type commands and you will reply with what the javascript console should show. I want you to only reply with the terminal output inside one unique code block, and nothing else. do not write explanations. do not type commands unless I instruct you to do so. when I need to tell you something in english, I will do so by putting text inside curly brackets {like this}. My first command is console.log("Hello World");
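As a rough illustration of turning the example above into a template, this sketch keeps the instructions fixed and makes only the first command a variable (that choice is an assumption). It uses plain `str.format`, not LangChain. The literal `{like this}` in the prompt has to be written as `{{like this}}`, otherwise it is read as a placeholder; as far as I know, the same escaping applies to LangChain's default f-string templates.

```python
# The example prompt, turned into a template with one variable: {command}.
# Literal braces are doubled ({{like this}}) so str.format does not treat
# them as a placeholder (which would raise a KeyError).
JS_CONSOLE_TEMPLATE = (
    "I want you to act as a javascript console. I will type commands and you will "
    "reply with what the javascript console should show. I want you to only reply "
    "with the terminal output inside one unique code block, and nothing else. "
    "do not write explanations. do not type commands unless I instruct you to do so. "
    "when I need to tell you something in english, I will do so by putting text "
    "inside curly brackets {{like this}}. My first command is {command}"
)

prompt = JS_CONSOLE_TEMPLATE.format(command='console.log("Hello World");')
print(prompt)
```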

3-2. Trying Out Prompt Templates in LangChain

- Basic example

PromptTemplate.from_template: sets up the template. Writing {variable} inside the string passed to from_template declares that variable as an input you want to receive.

prompt.format(variable="value to insert"): fills in the template, where variable is the name used in {variable}.

    from langchain.prompts import PromptTemplate

    template = """\
    You are a naming consultant for new companies.
    What is a good name for a company that makes {product}?
    """

    prompt = PromptTemplate.from_template(template)
    prompt.format(product="colorful socks")
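Roughly speaking, `from_template` reads the `{...}` placeholders out of the string and treats them as the input variables. The sketch below reproduces that idea with the standard library's `string.Formatter`; `infer_input_variables` is a hypothetical helper, not LangChain's actual implementation.

```python
from string import Formatter
from typing import List


def infer_input_variables(template: str) -> List[str]:
    """Return the sorted, de-duplicated placeholder names in an f-string-style template."""
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text and "" for a bare "{}".
    names = {field for _, field, _, _ in Formatter().parse(template) if field}
    return sorted(names)


template = """\
You are a naming consultant for new companies.
What is a good name for a company that makes {product}?
"""

print(infer_input_variables(template))  # ['product']
print(template.format(product="colorful socks"))
```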

- Example using input_variables

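- This looks handy for checking that the prompt comes out as intended before putting it into a service.
- With the input variables declared up front, it seems easy to test the template by preparing a list of sample inputs and formatting the prompt with each one.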

    from langchain.prompts import PromptTemplate

    # An example prompt with no input variables
    no_input_prompt = PromptTemplate(input_variables=[], template="Tell me a joke.")
    no_input_prompt.format()
    # -> "Tell me a joke."

    # An example prompt with one input variable
    one_input_prompt = PromptTemplate(input_variables=["adjective"], template="Tell me a {adjective} joke.")
    one_input_prompt.format(adjective="funny")
    # -> "Tell me a funny joke."

    # An example prompt with multiple input variables
    multiple_input_prompt = PromptTemplate(
        input_variables=["adjective", "content"],
        template="Tell me a {adjective} joke about {content}.",
    )
    multiple_input_prompt.format(adjective="funny", content="chickens")
    # -> "Tell me a funny joke about chickens."
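Following the testing idea above, here is a minimal plain-Python sketch: the declared variables are checked against the template's placeholders, then the template is formatted once per prepared sample. `render_all` and the sample inputs are hypothetical, and this is not LangChain's own validation logic.

```python
from string import Formatter

TEMPLATE = "Tell me a {adjective} joke about {content}."
INPUT_VARIABLES = ["adjective", "content"]

# Hypothetical sample inputs prepared before the prompt goes into a service.
SAMPLE_INPUTS = [
    {"adjective": "funny", "content": "chickens"},
    {"adjective": "short", "content": "cats"},
]


def render_all(template, input_variables, samples):
    """Format the template once per sample, failing fast on mismatched variables."""
    placeholders = {field for _, field, _, _ in Formatter().parse(template) if field}
    if placeholders != set(input_variables):
        raise ValueError(f"template uses {sorted(placeholders)}, declared {sorted(input_variables)}")
    rendered = []
    for sample in samples:
        missing = set(input_variables) - set(sample)
        if missing:
            raise ValueError(f"sample {sample} is missing {sorted(missing)}")
        rendered.append(template.format(**sample))
    return rendered


for line in render_all(TEMPLATE, INPUT_VARIABLES, SAMPLE_INPUTS):
    print(line)
# Tell me a funny joke about chickens.
# Tell me a short joke about cats.
```

Adding a new test case is then just one more dictionary in `SAMPLE_INPUTS`.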

3-3. Chat Prompt Templates

- Basic imports

    from langchain.prompts import (
        ChatPromptTemplate,
        PromptTemplate,
        SystemMessagePromptTemplate,
        AIMessagePromptTemplate,
        HumanMessagePromptTemplate,
    )
    from langchain.schema import (
        AIMessage,
        HumanMessage,
        SystemMessage,
    )

- Basic example

- Translation example

    template = "You are a helpful assistant that translates {input_language} to {output_language}."
    system_message_prompt = SystemMessagePromptTemplate.from_template(template)
    human_template = "{text}"
    human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

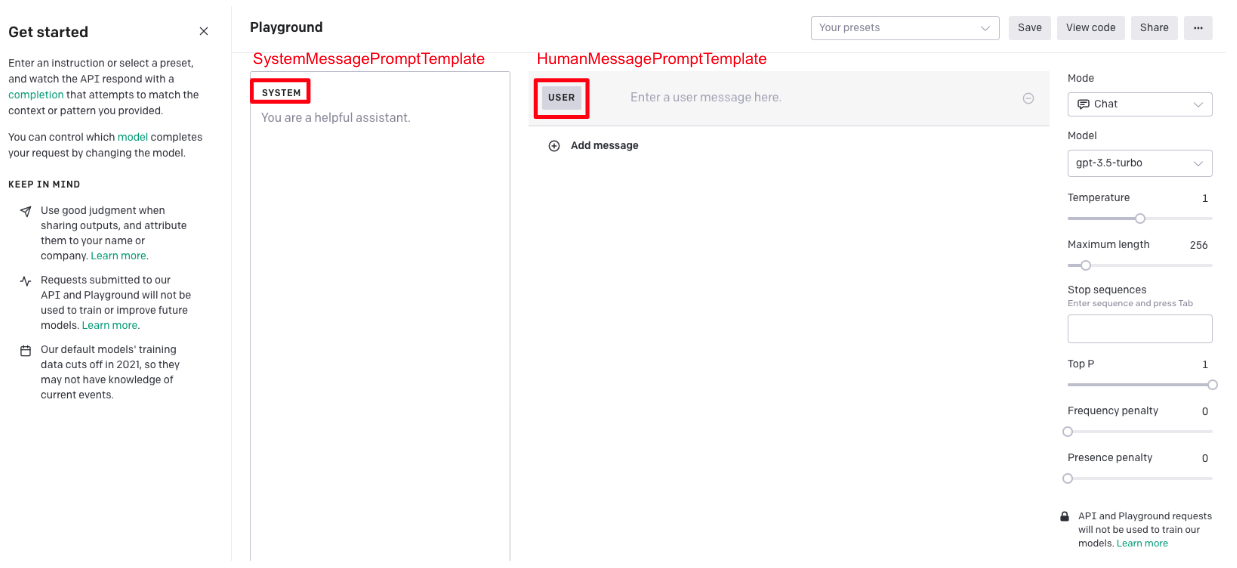

SystemMessagePromptTemplate: corresponds to the System role in the OpenAI Playground.
HumanMessagePromptTemplate: corresponds to the User role in the OpenAI Playground.
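The same roles appear in the OpenAI Chat Completions message format as `system`, `user`, and `assistant`. The sketch below converts already-formatted messages into that shape. The `ROLE_MAP` table and `to_openai_payload` helper are hypothetical, and messages are represented as simple `(class name, content)` pairs instead of real LangChain objects.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from LangChain message class names to OpenAI Chat roles.
ROLE_MAP = {
    "SystemMessage": "system",    # from SystemMessagePromptTemplate ("System" in the Playground)
    "HumanMessage": "user",       # from HumanMessagePromptTemplate ("User" in the Playground)
    "AIMessage": "assistant",     # from AIMessagePromptTemplate ("Assistant" in the Playground)
}


def to_openai_payload(messages: List[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Convert (message class name, content) pairs into OpenAI-style message dicts."""
    return [{"role": ROLE_MAP[kind], "content": content} for kind, content in messages]


payload = to_openai_payload([
    ("SystemMessage", "You are a helpful assistant that translates English to French."),
    ("HumanMessage", "I love programming."),
])
print([m["role"] for m in payload])  # ['system', 'user']
```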


- Example using input_variables

    prompt = PromptTemplate(
        template="You are a helpful assistant that translates {input_language} to {output_language}.",
        input_variables=["input_language", "output_language"],
    )
    system_message_prompt_2 = SystemMessagePromptTemplate(prompt=prompt)

- Combining the templates into a chat prompt

    chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])

    # format the messages; the resulting list can then be passed to a chat model
    chat_prompt.format_prompt(input_language="English", output_language="French", text="I love programming.").to_messages()

    # Output
    # [SystemMessage(content='You are a helpful assistant that translates English to French.', additional_kwargs={}),
    #  HumanMessage(content='I love programming.', additional_kwargs={})]
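Conceptually, `format_prompt` takes all the keyword arguments at once and lets each message template pick out the variables it uses. `MiniChatPrompt` below is a hypothetical, stripped-down stand-in written in plain Python to show that behavior; it is not LangChain's implementation and returns `(role, content)` pairs rather than message objects.

```python
from string import Formatter
from typing import List, Set, Tuple


def fields(template: str) -> Set[str]:
    """Placeholder names used by one template."""
    return {f for _, f, _, _ in Formatter().parse(template) if f}


class MiniChatPrompt:
    """Hypothetical, simplified stand-in for ChatPromptTemplate (not LangChain's code)."""

    def __init__(self, messages: List[Tuple[str, str]]):
        self.messages = messages  # (role, template) pairs, in order

    @property
    def input_variables(self) -> List[str]:
        # Union of the variables of every message template.
        return sorted(set().union(*(fields(t) for _, t in self.messages)))

    def format_messages(self, **kwargs: str) -> List[Tuple[str, str]]:
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing variables: {sorted(missing)}")
        # Each template receives only the variables it actually uses.
        return [
            (role, t.format(**{k: kwargs[k] for k in fields(t)}))
            for role, t in self.messages
        ]


chat_prompt = MiniChatPrompt([
    ("system", "You are a helpful assistant that translates {input_language} to {output_language}."),
    ("human", "{text}"),
])
print(chat_prompt.input_variables)  # ['input_language', 'output_language', 'text']
for role, content in chat_prompt.format_messages(
    input_language="English", output_language="French", text="I love programming."
):
    print(role, "->", content)
# system -> You are a helpful assistant that translates English to French.
# human -> I love programming.
```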

4. Prompt Templates - Other Topics

- Most of the remaining material is examples. If you understand the concepts above, I think it is enough to look those up when you need them.

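- The "Connecting to a Feature Store" section shows how to use a Feast store: specific data is fetched from the store and used in the prompt. Refer to it if you need it.
- Think of it as the approach for when the prompt itself needs data that keeps getting updated.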
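The point of the feature-store approach is that values are looked up when the prompt is formatted, not when the template is written. In this plain-Python sketch a dictionary stands in for the Feast store; the store contents, `fetch_features`, and `format_driver_prompt` are all hypothetical, and a real setup would query Feast inside `fetch_features` instead.

```python
# Hypothetical in-memory stand-in for a feature store such as Feast.
# The entity ID, feature names, and values are made up for illustration.
FEATURE_STORE = {"driver_1001": {"conv_rate": 0.52, "acc_rate": 0.91}}

TEMPLATE = (
    "Here are the driver's latest stats.\n"
    "Conversion rate: {conv_rate}\n"
    "Acceptance rate: {acc_rate}\n"
    "Write a short note relaying these stats to the driver."
)


def fetch_features(driver_id: str) -> dict:
    """Hypothetical lookup; a real setup would query the feature store here."""
    return dict(FEATURE_STORE[driver_id])


def format_driver_prompt(driver_id: str) -> str:
    # Features are fetched every time the prompt is formatted, so the prompt
    # reflects whatever the store holds at that moment.
    return TEMPLATE.format(**fetch_features(driver_id))


print(format_driver_prompt("driver_1001"))
FEATURE_STORE["driver_1001"]["conv_rate"] = 0.6  # simulate an update in the store
print(format_driver_prompt("driver_1001"))
```

The template text never changes; only the looked-up values do, which is what "data that keeps getting updated" means here.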

5. Example Selector

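- Briefly, an example selector is used when the prompt needs examples placed before the question. Cases like the one below need examples, and this seems to be the tool for them.

- For details, the official documentation should be enough when you need it.

- Example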
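A minimal sketch of the idea, assuming a simple length budget as the selection rule: examples are added in order until the prompt would exceed `max_words`. This is similar in spirit to LangChain's `LengthBasedExampleSelector`, but the code, the antonym examples, and the helper names are hypothetical plain Python.

```python
from typing import Dict, List

# Hypothetical few-shot examples (antonyms), made up for illustration.
EXAMPLES = [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
    {"input": "energetic", "output": "lethargic"},
    {"input": "sunny", "output": "gloomy"},
]
EXAMPLE_TEMPLATE = "Input: {input}\nOutput: {output}"


def select_examples(examples: List[Dict[str, str]], question: str, max_words: int = 25) -> List[Dict[str, str]]:
    """Add examples in order while the word count (question included) stays within max_words."""
    budget = max_words - len(question.split())
    selected = []
    for example in examples:
        cost = len(EXAMPLE_TEMPLATE.format(**example).split())
        if cost > budget:
            break
        selected.append(example)
        budget -= cost
    return selected


def build_few_shot_prompt(question: str, max_words: int = 25) -> str:
    """Header, then the selected examples, then the actual question."""
    shots = [EXAMPLE_TEMPLATE.format(**ex) for ex in select_examples(EXAMPLES, question, max_words)]
    parts = ["Give the antonym of every input."] + shots + [f"Input: {question}\nOutput:"]
    return "\n\n".join(parts)


print(build_few_shot_prompt("big", max_words=10))
```

With a longer question, fewer examples fit into the same budget, so the prompt stays within its size limit.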