This post summarizes what I studied and practiced in the 3rd cohort of KANS (Kubernetes Advanced Networking Study). Week 3 covered the Calico CNI. The post covers what I learned about Calico, then builds a vanilla k8s cluster on a MacBook Air M1 with Vagrant and VMware Fusion, installs Calico, and walks through a Network Policy example.

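1. About Calico

What is Calico?

Calico is a networking and security solution that lets Kubernetes workloads and non-Kubernetes/legacy workloads communicate seamlessly and securely.

Components and features

In Kubernetes, the default for network traffic to and from pods is default-allow. Unless you lock down network connectivity with network policy, every pod can communicate freely with every other pod.

Calico consists of networking, which secures network communication, and advanced network policy, which secures cloud-native microservices/applications at scale.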

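| Component | Description | Key features |
|---|---|---|
| Calico CNI for networking | Calico CNI is a control plane that programs several dataplanes. It is an L3/L4 networking solution that secures containers, Kubernetes clusters, virtual machines, and native host-based workloads. | • Built-in data encryption • Advanced IPAM management • Overlay and non-overlay networking options • Choice of dataplane: iptables, eBPF, Windows HNS, or VPP |
| Calico network policy suite for network policy | The Calico network policy suite is the interface to Calico CNI that holds the rules for the dataplane to execute. Calico network policy: • is designed around a zero-trust security model (deny all, allow only where needed) • integrates with the Kubernetes API server, so you can keep using Kubernetes network policy • supports legacy systems (bare metal, non-cluster hosts) with the same network policy model | • Namespaced and global policies to allow/deny traffic within the cluster, between pods and the outside world, and to non-cluster hosts • Network sets (arbitrary sets of IP subnetworks, CIDRs, or domains) to limit the IP ranges of egress and ingress traffic to workloads • Application layer (L7) policies that enforce traffic using attributes such as HTTP methods, paths, and cryptographically secure identities |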

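Calico deployment options

Calico networking and network policy are most powerful when used together, but both are offered separately so that they can be adopted on the widest range of platforms. The following are common Calico deployments.

| Deployment option | Examples |
|---|---|
| Self-managed Kubernetes, on-premises | Kubernetes/kubeadm cluster |
| Managed Kubernetes in public clouds | EKS, GKE, IKS, AKS |
| Self-managed Kubernetes in public clouds | AWS, GCE, Azure, DigitalOcean |
| Self-managed Kubernetes distributions | OpenShift, AKS on Azure Stack, Mirantis (MKE), RKE, VMware |
| Integrations | OpenStack, Flannel |
| Bare metal, non-cluster hosts | |
| Windows Kubernetes clusters | |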

For the list of platforms used by Calico community members, see the Kubernetes versions tested by the community.

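Feature summary

The following table summarizes Calico's main features. To look up a specific feature, see the product comparison.

| Feature | Description |
|---|---|
| Dataplane | eBPF, standard Linux iptables, Windows HNS, VPP |
| Networking | • Scalable pod networking using BGP or overlay networking • Advanced, customizable IP address management |
| Security | • Network policy enforcement for workload and host endpoints • Data-in-transit encryption with WireGuard |
| Monitoring Calico components | Monitor Calico component metrics with Prometheus |
| User interfaces | CLI: kubectl and calicoctl |
| APIs | • Calico API for Calico resources • Installation API for operator installation and configuration |
| Support and maintenance | Community-driven. Calico powers more than 2 million nodes daily across 166 countries. |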

2. Installing Vanilla K8s & the Calico CNI

Installation environment

- Machine: MacBook Air M1 (16GB)

- Deployment: Vagrant with VMware Fusion 13 Pro (free for personal use, account registration required)

- VM OS: Ubuntu 22.04 LTS

Installing Vagrant and VMware Fusion

- Install Vagrant

$ brew install vagrant --cask

- Install VMware Fusion

- Install the Vagrant plugin

$ vagrant plugin install vagrant-vmware-desktop

- Update the Vagrant VMware Desktop plugin

$ vagrant plugin update vagrant-vmware-desktop

Installing 5 Ubuntu VMs & K8s

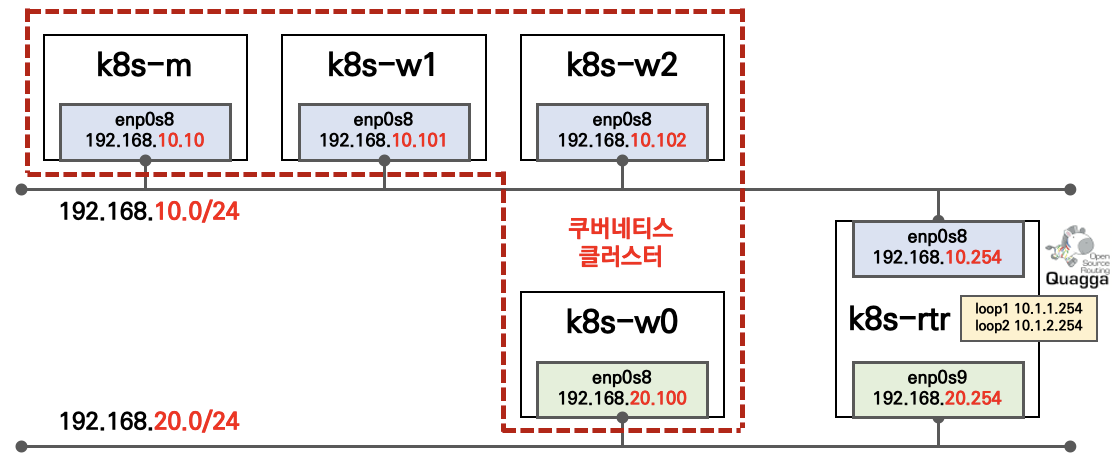

Architecture

- There are two network ranges (192.168.10.0/24 and 192.168.20.0/24), connected through the router VM.

- NIC names: the host-only NIC is eth1 (enp0s8 in the original route scripts) and the NAT NIC is eth0.

- Add the k8s VM IPs and hostnames to /etc/hosts on the Mac

$ sudo -i
# cat <<-EOF >> /etc/hosts
## K8S
192.168.10.10 k8s-m
192.168.10.101 k8s-w1
192.168.10.102 k8s-w2
192.168.20.100 k8s-w0
## End of K8S
EOF
# exit
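The heredoc above appends the block every time it runs, so repeating the step leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent variant, assuming the `## K8S` marker line is used as the "already applied" flag (the function name `add_k8s_hosts` is hypothetical):

```sh
#!/bin/sh
# Sketch: append the K8S host entries only if the "## K8S" marker line is
# not already present, so running the step twice does not duplicate lines.
# The target file defaults to /etc/hosts; pass a scratch file to try it out.

add_k8s_hosts() {
  local hosts_file=${1:-/etc/hosts}
  # The marker line doubles as an "already applied" flag.
  if grep -qx '## K8S' "$hosts_file"; then
    return 0
  fi
  cat <<'EOF' >> "$hosts_file"
## K8S
192.168.10.10 k8s-m
192.168.10.101 k8s-w1
192.168.10.102 k8s-w2
192.168.20.100 k8s-w0
## End of K8S
EOF
}
```

Run it as root (for example inside `sudo -i`); a second call is a no-op.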

Changes made to run on the MacBook M1 (Ubuntu image, swap, NIC)

- Original Vagrantfile: Link

- Check the machine architecture

$ uname -m
arm64
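Since the box must match the CPU architecture, the choice can be driven by `uname -m`. A minimal sketch, assuming only the two box/version pairs that appear in this post's Vagrantfile (the function name `pick_box` is hypothetical; macOS on Apple silicon prints `arm64`, while Linux on ARM usually prints `aarch64`):

```sh
#!/bin/sh
# Sketch: choose the Vagrant box from the architecture string `uname -m` prints.
# The box names/versions are the two pairs that appear in this post's Vagrantfile.

pick_box() {
  local arch=${1:-$(uname -m)}
  case "$arch" in
    arm64|aarch64) echo "bento/ubuntu-22.04-arm64 202401.31.0" ;;
    x86_64|amd64)  echo "ubuntu/jammy64 20240823.0.1" ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
}
```

For example, `set -- $(pick_box)` leaves the box name in `$1` and the version in `$2`.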

- Edit the Vagrantfile

- BOX_IMAGE: ubuntu/jammy64 > bento/ubuntu-22.04-arm64

- BOX_VERSION: 20240823.0.1 > 202401.31.0

$ cat Vagrantfile
# Base Image : https://portal.cloud.hashicorp.com/vagrant/discover?query=ubuntu%2Fjammy64
#BOX_IMAGE = "ubuntu/jammy64"
#BOX_VERSION = "20240823.0.1"

# for MacBook Air - M1 with vmware-fusion
BOX_IMAGE = "bento/ubuntu-22.04-arm64"
BOX_VERSION = "202401.31.0"

# max number of worker nodes : Ex) N = 3
N = 2

# Version : Ex) k8s_V = '1.31'
k8s_V = '1.30'

cni_N = 'Calico'

# Note: the provider blocks below are named "vmare_desktop", not "vmware_desktop",
# so Vagrant most likely skips them when running under VMware Fusion; the
# "modifyvm" customize calls are VirtualBox options in any case.

Vagrant.configure("2") do |config|

#-----Manager Node
  config.vm.define "k8s-m" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    subconfig.vm.provider "vmare_desktop" do |v|
      v.customize ["modifyvm", :id, "--groups", "/#{cni_N}-Lab"]
      v.customize ["modifyvm", :id, "--nicpromisc2", "allow-vms"]
      v.name = "#{cni_N}-k8s-m"
      v.memory = 2048
      v.cpus = 2
      v.linked_clone = true
    end
    subconfig.vm.hostname = "k8s-m"
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.network "private_network", ip: "192.168.10.10"
    subconfig.vm.network "forwarded_port", guest: 22, host: 50010, auto_correct: true, id: "ssh"
    subconfig.vm.provision "shell", path: "scripts/init_cfg.sh", args: [ N, k8s_V ]
    subconfig.vm.provision "shell", path: "scripts/route1.sh"
    subconfig.vm.provision "shell", path: "scripts/control.sh", args: [ cni_N ]
  end

#-----Router Node
  config.vm.define "router" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    subconfig.vm.provider "vmare_desktop" do |v|
      v.customize ["modifyvm", :id, "--groups", "/#{cni_N}-Lab"]
      v.name = "#{cni_N}-router"
      v.memory = 512
      v.cpus = 1
      v.linked_clone = true
    end
    subconfig.vm.hostname = "router"
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.network "private_network", ip: "192.168.10.254"
    subconfig.vm.network "private_network", ip: "192.168.20.254"
    subconfig.vm.network "forwarded_port", guest: 22, host: 50000, auto_correct: true, id: "ssh"
    subconfig.vm.provision "shell", path: "scripts/linux_router.sh", args: [ N ]
  end

#-----Worker Node Subnet2
  config.vm.define "k8s-w0" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    subconfig.vm.provider "vmare_desktop" do |v|
      v.customize ["modifyvm", :id, "--groups", "/#{cni_N}-Lab"]
      v.name = "#{cni_N}-k8s-w0"
      v.memory = 2048
      v.cpus = 2
      v.linked_clone = true
    end
    subconfig.vm.hostname = "k8s-w0"
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.network "private_network", ip: "192.168.20.100"
    subconfig.vm.network "forwarded_port", guest: 22, host: 50020, auto_correct: true, id: "ssh"
    subconfig.vm.provision "shell", path: "scripts/init_cfg.sh", args: [ N, k8s_V ]
    subconfig.vm.provision "shell", path: "scripts/route2.sh"
    subconfig.vm.provision "shell", path: "scripts/worker.sh"
  end

#-----Worker Node Subnet1
  (1..N).each do |i|
    config.vm.define "k8s-w#{i}" do |subconfig|
      subconfig.vm.box = BOX_IMAGE
      subconfig.vm.box_version = BOX_VERSION
      subconfig.vm.provider "vmare_desktop" do |v|
        v.customize ["modifyvm", :id, "--groups", "/#{cni_N}-Lab"]
        v.customize ["modifyvm", :id, "--nicpromisc2", "allow-vms"]
        v.name = "#{cni_N}-k8s-w#{i}"
        v.memory = 2048
        v.cpus = 2
        v.linked_clone = true
      end
      subconfig.vm.hostname = "k8s-w#{i}"
      subconfig.vm.synced_folder "./", "/vagrant", disabled: true
      subconfig.vm.network "private_network", ip: "192.168.10.10#{i}"
      subconfig.vm.network "forwarded_port", guest: 22, host: "5001#{i}", auto_correct: true, id: "ssh"
      subconfig.vm.provision "shell", path: "scripts/init_cfg.sh", args: [ N, k8s_V ]
      subconfig.vm.provision "shell", path: "scripts/route1.sh"
      subconfig.vm.provision "shell", path: "scripts/worker.sh"
    end
  end

end

- Swap must be turned off for kubelet to run properly (by default, kubelet refuses to start while swap is enabled)

- kubelet error messages when swap is on

$ journalctl -xu kubelet -l
Sep 14 16:05:16 k8s-m systemd[1]: kubelet.service: Scheduled restart job, restart counter is at 19.
Sep 14 16:05:16 k8s-m systemd[1]: Stopped kubelet: The Kubernetes Node Agent.
Sep 14 16:05:16 k8s-m systemd[1]: Started kubelet: The Kubernetes Node Agent.
Sep 14 16:05:17 k8s-m kubelet[3621]: Flag --container-runtime-endpoint has been deprecated, This parameter should be set via the config file specified by>
Sep 14 16:05:17 k8s-m kubelet[3621]: Flag --pod-infra-container-image has been deprecated, will be removed in a future release. Image garbage collector w>
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.046122 3621 server.go:205] "--pod-infra-container-image will not be pruned by the image garbage co>
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.049257 3621 server.go:484] "Kubelet version" kubeletVersion="v1.30.5"
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.049278 3621 server.go:486] "Golang settings" GOGC="" GOMAXPROCS="" GOTRACEBACK=""
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.049441 3621 server.go:927] "Client rotation is on, will bootstrap in background"
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.051526 3621 certificate_store.go:130] Loading cert/key pair from "/var/lib/kubelet/pki/kubelet-cli>
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.052879 3621 dynamic_cafile_content.go:157] "Starting controller" name="client-ca-bundle::/etc/kube>
Sep 14 16:05:17 k8s-m kubelet[3621]: I0914 16:05:17.055854 3621 server.go:742] "--cgroups-per-qos enabled, but --cgroup-root was not specified. defau>
Sep 14 16:05:17 k8s-m kubelet[3621]: E0914 16:05:17.055927 3621 run.go:74] "command failed" err="failed to run Kubelet: running with swap on is not su>
Sep 14 16:05:17 k8s-m systemd[1]: kubelet.service: Main process exited, code=exited, status=1/FAILURE

- Add swap-off to init_cfg.sh

$ vim scripts/init_cfg.sh
#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

# --- added part: begin ---
echo "[TASK 0] Swap Disable"
swapoff -a
sed -i '/ swap / s/^/#/' /etc/fstab
# --- added part: end ---

echo "[TASK 1] Setting SSH with Root"
printf "qwe123\nqwe123\n" | passwd >/dev/null 2>&1
sed -i "s/^#PermitRootLogin prohibit-password/PermitRootLogin yes/g" /etc/ssh/sshd_config
# The bento arm64 box has no 60-cloudimg-settings.conf, so this sed prints
# "can't read ... No such file or directory" during provisioning; it does not stop the script.
sed -i "s/^PasswordAuthentication no/PasswordAuthentication yes/g" /etc/ssh/sshd_config.d/60-cloudimg-settings.conf
systemctl restart sshd >/dev/null 2>&1
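`swapoff -a` turns swap off for the running system, and commenting out the fstab entry keeps it off after a reboot. A minimal sketch for checking both conditions, assuming the usual `/proc/swaps` layout (one header line, then one line per active swap area); the function names are hypothetical and the paths are parameters so the checks can be tried on sample files:

```sh
#!/bin/sh
# Sketch: confirm swap is really off before kubelet starts.
# /proc/swaps has one header line followed by one line per active swap area;
# in fstab, field 3 is the filesystem type and lines starting with '#' are comments.

active_swap_count() {
  # Skip the header and count the remaining non-empty lines.
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

fstab_swap_enabled() {
  # Exit 0 if an uncommented fstab entry still has type "swap".
  awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit (found ? 0 : 1) }' "${1:-/etc/fstab}"
}
```

On a node, `[ "$(active_swap_count)" -eq 0 ] && ! fstab_swap_enabled && echo "swap is off"` should print the message once the added part of init_cfg.sh has run.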

- Modify the routing scripts for the different NIC name (enp0s8 -> eth1)

- Error message when the NIC is named eth1 but the script still looks for enp0s8 (the lookup returns an empty address)

==> k8s-m: Running provisioner: shell...
k8s-m: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-11356-wjymwj.sh
k8s-m:
k8s-m: ** (generate:3976): WARNING **: 01:21:28.864: Permissions for /etc/netplan/00-installer-config.yaml are too open. Netplan configuration should NOT be accessible by others.
k8s-m:
k8s-m: ** (generate:3976): WARNING **: 01:21:28.864: Permissions for /etc/netplan/01-netcfg.yaml are too open. Netplan configuration should NOT be accessible by others.
k8s-m: /etc/netplan/50-vagrant.yaml:7:7: Error in network definition: address '' is missing /prefixlength
k8s-m: -
k8s-m: ^
The SSH command responded with a non-zero exit status. Vagrant assumes that this means the command failed. The output for this command should be in the log above. Please read the output to determine what went wrong.

- Modify route1.sh and route2.sh (enp0s8 > eth1)

$ cat scripts/route1.sh
#!/usr/bin/env bash
IP=$(ip -br -4 addr | grep eth1 | awk '{print $3}')
cat <<EOT> /etc/netplan/50-vagrant.yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    eth1:
      addresses:
      - $IP
      routes:
      - to: 192.168.20.0/24
        via: 192.168.10.254
EOT
chmod 600 /etc/netplan/50-vagrant.yaml
netplan apply

$ cat scripts/route2.sh
#!/usr/bin/env bash
IP=$(ip -br -4 addr | grep eth1 | awk '{print $3}')
cat <<EOT> /etc/netplan/50-vagrant.yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    eth1:
      addresses:
      - $IP
      routes:
      - to: 192.168.10.0/24
        via: 192.168.20.254
EOT
chmod 600 /etc/netplan/50-vagrant.yaml
netplan apply
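The netplan error above comes from `$IP` being empty when the grep pattern does not match any interface. A minimal sketch of a stricter lookup, assuming the `ip -br -4 addr` brief format (interface, state, address/prefix); it compares the interface name exactly, since `grep eth1` would also match a hypothetical `eth10`, and fails instead of writing an empty address (the function name `iface_cidr` is hypothetical):

```sh
#!/bin/sh
# Sketch: read `ip -br -4 addr` output on stdin and print the address/prefix
# of one interface, or fail if none is found.

iface_cidr() {
  local cidr
  # Brief format columns: $1 = interface, $2 = state, $3 = first IPv4/prefix.
  cidr=$(awk -v ifc="$1" '$1 == ifc { print $3; exit }')
  case "$cidr" in
    */*) echo "$cidr" ;;
    *) echo "no IPv4 address/prefix found on $1" >&2; return 1 ;;
  esac
}
```

In route1.sh/route2.sh the lookup line could then read `IP=$(ip -br -4 addr | iface_cidr eth1) || exit 1`, so provisioning stops before netplan sees an empty address.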

Download the modified source

- git clone

$ git clone https://github.com/icebreaker70/kans-calico.git

Creating the vanilla k8s nodes and installing the software

- vagrant provision

$ vagrant up --provision
Bringing machine 'k8s-m' up with 'vmware_desktop' provider...
Bringing machine 'router' up with 'vmware_desktop' provider...
Bringing machine 'k8s-w0' up with 'vmware_desktop' provider...
Bringing machine 'k8s-w1' up with 'vmware_desktop' provider...
Bringing machine 'k8s-w2' up with 'vmware_desktop' provider...
==> k8s-m: Cloning VMware VM: 'bento/ubuntu-22.04-arm64'. This can take some time...
==> k8s-m: Checking if box 'bento/ubuntu-22.04-arm64' version '202401.31.0' is up to date...
==> k8s-m: Verifying vmnet devices are healthy...
==> k8s-m: Preparing network adapters...
==> k8s-m: Starting the VMware VM...
==> k8s-m: Waiting for the VM to receive an address...
==> k8s-m: Forwarding ports...
k8s-m: -- 22 => 50010
==> k8s-m: Waiting for machine to boot. This may take a few minutes...
k8s-m: SSH address: 127.0.0.1:50010
k8s-m: SSH username: vagrant
k8s-m: SSH auth method: private key
k8s-m:
k8s-m: Vagrant insecure key detected. Vagrant will automatically replace
k8s-m: this with a newly generated keypair for better security.
k8s-m:
k8s-m: Inserting generated public key within guest...
k8s-m: Removing insecure key from the guest if it's present...
k8s-m: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-m: Machine booted and ready!
==> k8s-m: Setting hostname...
==> k8s-m: Configuring network adapters within the VM...
==> k8s-m: Running provisioner: shell...
k8s-m: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-4yo9oy.sh
k8s-m: >>>> Initial Config Start <<<<
k8s-m: [TASK 0] Swap Disable
k8s-m: [TASK 1] Setting SSH with Root
k8s-m: sed: can't read /etc/ssh/sshd_config.d/60-cloudimg-settings.conf: No such file or directory
k8s-m: [TASK 2] Profile & Bashrc & Change Timezone
k8s-m: [TASK 3] Disable AppArmor
k8s-m: [TASK 4] Setting Local DNS Using Hosts file
k8s-m: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl) - v1.30
k8s-m: deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /
k8s-m: [TASK 6] Install packages
k8s-m: >>>> Initial Config End <<<<
==> k8s-m: Running provisioner: shell...
k8s-m: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-21gflf.sh
k8s-m: WARNING:root:Cannot call Open vSwitch: ovsdb-server.service is not running.
==> k8s-m: Running provisioner: shell...
k8s-m: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-4f614x.sh
k8s-m: >>>> k8s Controlplane config Start <<<<
k8s-m: [TASK 1] Initial Kubernetes - Pod CIDR 172.16.0.0/16 , Service CIDR 10.200.1.0/24 , API Server 192.168.10.10
k8s-m: [TASK 2] Setting kube config file
k8s-m: [TASK 3] kubectl completion on bash-completion dir
k8s-m: [TASK 4] Alias kubectl to k
k8s-m: [TASK 5] Install Kubectx & Kubens
k8s-m: [TASK 6] Install Kubeps & Setting PS1
k8s-m: [TASK 7] Install Helm
k8s-m: >>>> k8s Controlplane Config End <<<<
==> router: Cloning VMware VM: 'bento/ubuntu-22.04-arm64'. This can take some time...
==> router: Checking if box 'bento/ubuntu-22.04-arm64' version '202401.31.0' is up to date...
==> router: Verifying vmnet devices are healthy...
==> router: Preparing network adapters...
==> router: Starting the VMware VM...
==> router: Waiting for the VM to receive an address...
==> router: Forwarding ports...
router: -- 22 => 50000
==> router: Waiting for machine to boot. This may take a few minutes...
router: SSH address: 127.0.0.1:50000
router: SSH username: vagrant
router: SSH auth method: private key
router:
router: Vagrant insecure key detected. Vagrant will automatically replace
router: this with a newly generated keypair for better security.
router:
router: Inserting generated public key within guest...
router: Removing insecure key from the guest if it's present...
router: Key inserted! Disconnecting and reconnecting using new SSH key...
==> router: Machine booted and ready!
==> router: Setting hostname...
==> router: Configuring network adapters within the VM...
==> router: Running provisioner: shell...
router: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-ypg2ra.sh
router: >>>> Initial Config Start <<<<
router: [TASK 1] Setting SSH with Root
router: sed: can't read /etc/ssh/sshd_config.d/60-cloudimg-settings.conf: No such file or directory
router: [TASK 2] Profile & Bashrc & Change Timezone
router: [TASK 3] Disable AppArmor
router: [TASK 4] Setting Local DNS Using Hosts file
router: [TASK 5] Add Kernel setting - IP Forwarding
router: [TASK 6] Setting Dummy Interface
router: [TASK 7] Install Packages
router: [TASK 8] Config FRR Software IP routing suite
router: >>>> Initial Config End <<<<
==> k8s-w0: Cloning VMware VM: 'bento/ubuntu-22.04-arm64'. This can take some time...
==> k8s-w0: Checking if box 'bento/ubuntu-22.04-arm64' version '202401.31.0' is up to date...
==> k8s-w0: Verifying vmnet devices are healthy...
==> k8s-w0: Preparing network adapters...
==> k8s-w0: Starting the VMware VM...
==> k8s-w0: Waiting for the VM to receive an address...
==> k8s-w0: Forwarding ports...
k8s-w0: -- 22 => 50020
==> k8s-w0: Waiting for machine to boot. This may take a few minutes...
k8s-w0: SSH address: 127.0.0.1:50020
k8s-w0: SSH username: vagrant
k8s-w0: SSH auth method: private key
k8s-w0:
k8s-w0: Vagrant insecure key detected. Vagrant will automatically replace
k8s-w0: this with a newly generated keypair for better security.
k8s-w0:
k8s-w0: Inserting generated public key within guest...
k8s-w0: Removing insecure key from the guest if it's present...
k8s-w0: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-w0: Machine booted and ready!
==> k8s-w0: Setting hostname...
==> k8s-w0: Configuring network adapters within the VM...
==> k8s-w0: Running provisioner: shell...
k8s-w0: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-moxc52.sh
k8s-w0: >>>> Initial Config Start <<<<
k8s-w0: [TASK 0] Swap Disable
k8s-w0: [TASK 1] Setting SSH with Root
k8s-w0: sed: can't read /etc/ssh/sshd_config.d/60-cloudimg-settings.conf: No such file or directory
k8s-w0: [TASK 2] Profile & Bashrc & Change Timezone
k8s-w0: [TASK 3] Disable AppArmor
k8s-w0: [TASK 4] Setting Local DNS Using Hosts file
k8s-w0: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl) - v1.30
k8s-w0: deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /
k8s-w0: [TASK 6] Install packages
k8s-w0: >>>> Initial Config End <<<<
==> k8s-w0: Running provisioner: shell...
k8s-w0: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-3t9ksk.sh
k8s-w0: WARNING:root:Cannot call Open vSwitch: ovsdb-server.service is not running.
==> k8s-w0: Running provisioner: shell...
k8s-w0: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-it3dct.sh
k8s-w0: >>>> k8s WorkerNode config Start <<<<
k8s-w0: [TASK 1] K8S Controlplane Join - API Server 192.168.10.10
k8s-w0: >>>> k8s WorkerNode Config End <<<<
==> k8s-w1: Cloning VMware VM: 'bento/ubuntu-22.04-arm64'. This can take some time...
==> k8s-w1: Checking if box 'bento/ubuntu-22.04-arm64' version '202401.31.0' is up to date...
==> k8s-w1: Verifying vmnet devices are healthy...
==> k8s-w1: Preparing network adapters...
==> k8s-w1: Starting the VMware VM...
==> k8s-w1: Waiting for the VM to receive an address...
==> k8s-w1: Forwarding ports...
k8s-w1: -- 22 => 50011
==> k8s-w1: Waiting for machine to boot. This may take a few minutes...
k8s-w1: SSH address: 127.0.0.1:50011
k8s-w1: SSH username: vagrant
k8s-w1: SSH auth method: private key
k8s-w1:
k8s-w1: Vagrant insecure key detected. Vagrant will automatically replace
k8s-w1: this with a newly generated keypair for better security.
k8s-w1:
k8s-w1: Inserting generated public key within guest...
k8s-w1: Removing insecure key from the guest if it's present...
k8s-w1: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-w1: Machine booted and ready!
==> k8s-w1: Setting hostname...
==> k8s-w1: Configuring network adapters within the VM...
==> k8s-w1: Running provisioner: shell...
k8s-w1: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-agzn0v.sh
k8s-w1: >>>> Initial Config Start <<<<
k8s-w1: [TASK 0] Swap Disable
k8s-w1: [TASK 1] Setting SSH with Root
k8s-w1: sed: can't read /etc/ssh/sshd_config.d/60-cloudimg-settings.conf: No such file or directory
k8s-w1: [TASK 2] Profile & Bashrc & Change Timezone
k8s-w1: [TASK 3] Disable AppArmor
k8s-w1: [TASK 4] Setting Local DNS Using Hosts file
k8s-w1: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl) - v1.30
k8s-w1: deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /
k8s-w1: [TASK 6] Install packages
k8s-w1: >>>> Initial Config End <<<<
==> k8s-w1: Running provisioner: shell...
k8s-w1: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-benmzn.sh
k8s-w1: WARNING:root:Cannot call Open vSwitch: ovsdb-server.service is not running.
==> k8s-w1: Running provisioner: shell...
k8s-w1: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-be64op.sh
k8s-w1: >>>> k8s WorkerNode config Start <<<<
k8s-w1: [TASK 1] K8S Controlplane Join - API Server 192.168.10.10
k8s-w1: >>>> k8s WorkerNode Config End <<<<
==> k8s-w2: Cloning VMware VM: 'bento/ubuntu-22.04-arm64'. This can take some time...
==> k8s-w2: Checking if box 'bento/ubuntu-22.04-arm64' version '202401.31.0' is up to date...
==> k8s-w2: Verifying vmnet devices are healthy...
==> k8s-w2: Preparing network adapters...
==> k8s-w2: Starting the VMware VM...
==> k8s-w2: Waiting for the VM to receive an address...
==> k8s-w2: Forwarding ports...
k8s-w2: -- 22 => 50012
==> k8s-w2: Waiting for machine to boot. This may take a few minutes...
k8s-w2: SSH address: 127.0.0.1:50012
k8s-w2: SSH username: vagrant
k8s-w2: SSH auth method: private key
k8s-w2:
k8s-w2: Vagrant insecure key detected. Vagrant will automatically replace
k8s-w2: this with a newly generated keypair for better security.
k8s-w2:
k8s-w2: Inserting generated public key within guest...
k8s-w2: Removing insecure key from the guest if it's present...
k8s-w2: Key inserted! Disconnecting and reconnecting using new SSH key...
==> k8s-w2: Machine booted and ready!
==> k8s-w2: Setting hostname...
==> k8s-w2: Configuring network adapters within the VM...
==> k8s-w2: Running provisioner: shell...
k8s-w2: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-aom3ia.sh
k8s-w2: >>>> Initial Config Start <<<<
k8s-w2: [TASK 0] Swap Disable
k8s-w2: [TASK 1] Setting SSH with Root
k8s-w2: sed: can't read /etc/ssh/sshd_config.d/60-cloudimg-settings.conf: No such file or directory
k8s-w2: [TASK 2] Profile & Bashrc & Change Timezone
k8s-w2: [TASK 3] Disable AppArmor
k8s-w2: [TASK 4] Setting Local DNS Using Hosts file
k8s-w2: [TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl) - v1.30
k8s-w2: deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /
k8s-w2: [TASK 6] Install packages
k8s-w2: >>>> Initial Config End <<<<
==> k8s-w2: Running provisioner: shell...
k8s-w2: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-eafp3w.sh
k8s-w2: WARNING:root:Cannot call Open vSwitch: ovsdb-server.service is not running.
==> k8s-w2: Running provisioner: shell...
k8s-w2: Running: /var/folders/7r/k37w336504d01lg8qbmg2kxw0000gn/T/vagrant-shell20240918-59036-1alf7n.sh
k8s-w2: >>>> k8s WorkerNode config Start <<<<
k8s-w2: [TASK 1] K8S Controlplane Join - API Server 192.168.10.10
k8s-w2: >>>> k8s WorkerNode Config End <<<<

- vagrant status

$ vagrant status
Current machine states:

k8s-m                     running (vmware_desktop)
router                    running (vmware_desktop)
k8s-w0                    running (vmware_desktop)
k8s-w1                    running (vmware_desktop)
k8s-w2                    running (vmware_desktop)

This environment represents multiple VMs. The VMs are all listed above with their current state. For more information about a specific VM, run `vagrant status NAME`.
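The Vagrantfile builds each worker's private IP and forwarded SSH port by string concatenation (`"192.168.10.10#{i}"` and `"5001#{i}"`), which is why k8s-w1/k8s-w2 got SSH ports 50011/50012 in the log above. A minimal sketch that replays the same pattern in shell shows it only holds while `i` is a single digit; the function names are hypothetical:

```sh
#!/bin/sh
# Sketch: replay the Vagrantfile's string-built worker address and SSH port
# and check whether the results are still valid.

worker_ip()   { echo "192.168.10.10$1"; }
worker_port() { echo "5001$1"; }

# Succeeds if the argument is four dot-separated numbers, each 0..255.
valid_ipv4() {
  echo "$1" | awk -F. 'NF == 4 {
      for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i + 0 > 255) exit 1
      ok = 1
    }
    END { exit (ok ? 0 : 1) }'
}

# Succeeds if the argument is a TCP port number (1..65535).
valid_port() {
  case "$1" in ''|*[!0-9]*) return 1 ;; esac
  [ "$1" -ge 1 ] && [ "$1" -le 65535 ]
}
```

With N = 2 everything is valid, but a tenth worker would get `192.168.10.1010` and port `500110`, both of which the checks reject, so N above 9 would need an arithmetic scheme instead.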

Connecting to k8s-m and checking its status

❯ vagrant ssh k8s-m

Welcome to Ubuntu 22.04.3 LTS (GNU/Linux 5.15.0-92-generic aarch64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/pro

System information as of Wed Sep 18 11:40:50 AM UTC 2024

System load: 0.25439453125 Processes: 233

Usage of /: 12.2% of 29.82GB Users logged in: 0

Memory usage: 11% IPv4 address for eth0: 172.16.195.184

Swap usage: 0%

This system is built by the Bento project by Chef Software

More information can be found at https://github.com/chef/bento

Last login: Wed Sep 18 04:20:52 2024 from 172.16.195.1

(Calico-Lab:N/A) root@k8s-m:~#

(Calico-Lab:N/A) root@k8s-m:~# ip -c a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a4:bd:1f brd ff:ff:ff:ff:ff:ff

altname enp2s0

altname ens160

inet 172.16.195.184/24 metric 100 brd 172.16.195.255 scope global dynamic eth0

valid_lft 1502sec preferred_lft 1502sec

inet6 fe80::20c:29ff:fea4:bd1f/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a4:bd:29 brd ff:ff:ff:ff:ff:ff

altname enp18s0

altname ens224

inet 192.168.10.10/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea4:bd29/64 scope link

valid_lft forever preferred_lft forever

(Calico-Lab:N/A) root@k8s-m:~# ip -c route

default via 172.16.195.2 dev eth0 proto dhcp src 172.16.195.184 metric 100

172.16.195.0/24 dev eth0 proto kernel scope link src 172.16.195.184 metric 100

172.16.195.2 dev eth0 proto dhcp scope link src 172.16.195.184 metric 100

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.10

192.168.20.0/24 via 192.168.10.254 dev eth1 proto static

Copying the kubeconfig (credentials) file & setting up the environment

- Create the directory for the kubeconfig file & copy the remote file

$ mkdir -p ~/.kube
$ scp root@k8s-m:/root/.kube/config ~/.kube/config
root@k8s-m's password:
config 100% 5623 8.4MB/s 00:00
$ ls -al ~/.kube/config
-rw------- 1 sjkim staff 5608 Sep 8 00:44 /Users/sjkim/.kube/config

- Set the KUBECONFIG environment variable (every node is NotReady because no CNI is installed yet)

$ export KUBECONFIG=$HOME/.kube/config
$ kubectl get nodes
NAME     STATUS     ROLES           AGE   VERSION
k8s-m    NotReady   control-plane   32m   v1.30.5
k8s-w0   NotReady   <none>          27m   v1.30.5
k8s-w1   NotReady   <none>          26m   v1.30.5
k8s-w2   NotReady   <none>          24m   v1.30.5

Installing the Calico CNI

- Apply the Calico manifest

$ kubectl apply -f addons/calico-kans.yaml
poddisruptionbudget.policy/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
serviceaccount/calico-node created
serviceaccount/calico-cni-plugin created
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created
daemonset.apps/calico-node created
deployment.apps/calico-kube-controllers created
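After the manifest is applied, the nodes flip from NotReady to Ready once calico-node is running on them. A minimal sketch of a readiness check over `kubectl get nodes --no-headers` text, assuming the default column order (NAME, STATUS, ...); only a STATUS of exactly `Ready` counts, and the function name is hypothetical:

```sh
#!/bin/sh
# Sketch: read `kubectl get nodes --no-headers` output on stdin, print how many
# nodes report STATUS exactly "Ready", and succeed only if all of them do.

all_nodes_ready() {
  awk 'NF > 0 { total++; if ($2 == "Ready") ready++ }
       END {
         printf "%d/%d nodes Ready\n", ready, total
         ok = (total > 0 && ready == total)
         exit (ok ? 0 : 1)
       }'
}
```

A polling loop could be `until kubectl get nodes --no-headers | all_nodes_ready; do sleep 5; done`; kubectl's own `kubectl wait --for=condition=Ready node --all --timeout=300s` does the same without text parsing.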

- Check the namespaces, deployments, daemonsets, and pods

$ kubectl get ns
NAME              STATUS   AGE
default           Active   40m
kube-node-lease   Active   40m
kube-public       Active   40m
kube-system       Active   40m

$ kubectl -n kube-system get deployment
NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
calico-kube-controllers   0/1     1            0           51s
coredns                   0/2     2            0           41m

$ kubectl -n kube-system get daemonset
NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
calico-node   4         4         0       4            0           kubernetes.io/os=linux   59s
kube-proxy    4         4         4       4            4           kubernetes.io/os=linux   41m

# node status has changed to Ready
$ kubectl get nodes
NAME     STATUS   ROLES           AGE   VERSION
k8s-m    Ready    control-plane   41m   v1.30.5
k8s-w0   Ready    <none>          37m   v1.30.5
k8s-w1   Ready    <none>          35m   v1.30.5
k8s-w2   Ready    <none>          33m   v1.30.5

# check the calico controller deployment
$ kubectl -n kube-system get deployment
NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
calico-kube-controllers   1/1     1            1           4m23s
coredns                   2/2     2            2           44m

# check the calico-node daemonset
$ kubectl -n kube-system get daemonset
NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
calico-node   4         4         4       4            4           kubernetes.io/os=linux   7m3s
kube-proxy    4         4         4       4            4           kubernetes.io/os=linux   47m

# check the pods
$ kubectl -n kube-system get pods -o wide
NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE     NOMINATED NODE   READINESS GATES
calico-kube-controllers-77d59654f4-p69dm   1/1     Running   0          4m40s   172.16.34.1      k8s-w0   <none>           <none>
calico-node-4m7rl                          1/1     Running   0          4m40s   192.168.10.101   k8s-w1   <none>           <none>
calico-node-5qn27                          1/1     Running   0          4m40s   192.168.10.10    k8s-m    <none>           <none>
calico-node-q4tqb                          1/1     Running   0          4m40s   192.168.10.102   k8s-w2   <none>           <none>
calico-node-q7t25                          1/1     Running   0          4m40s   192.168.20.100   k8s-w0   <none>           <none>
coredns-55cb58b774-5h5lj                   1/1     Running   0          44m     172.16.34.3      k8s-w0   <none>           <none>
coredns-55cb58b774-qszbk                   1/1     Running   0          44m     172.16.34.2      k8s-w0   <none>           <none>
etcd-k8s-m                                 1/1     Running   0          44m     192.168.10.10    k8s-m    <none>           <none>
kube-apiserver-k8s-m                       1/1     Running   0          44m     192.168.10.10    k8s-m    <none>           <none>
kube-controller-manager-k8s-m              1/1     Running   0          44m     192.168.10.10    k8s-m    <none>           <none>
kube-proxy-5566f                           1/1     Running   0          44m     192.168.10.10    k8s-m    <none>           <none>
kube-proxy-8lsd4                           1/1     Running   0          37m     192.168.10.102   k8s-w2   <none>           <none>
kube-proxy-tml4g                           1/1     Running   0          40m     192.168.20.100   k8s-w0   <none>           <none>
kube-proxy-w74md                           1/1     Running   0          38m     192.168.10.101   k8s-w1   <none>           <none>
kube-scheduler-k8s-m                       1/1     Running   0          44m     192.168.10.10    k8s-m    <none>           <none>
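One detail visible in the outputs above: control.sh set the Pod CIDR to 172.16.0.0/16, while eth0 received 172.16.195.184/24 from VMware's NAT network (default route 172.16.195.2). That NAT subnet falls inside the pod CIDR. Whether it causes trouble in this lab was not tested here, but a pod handed an address in 172.16.195.0/24 could collide with the NAT network. A minimal sketch for checking two IPv4 CIDRs for overlap with shell arithmetic (function names are hypothetical):

```sh
#!/bin/sh
# Sketch: test whether two IPv4 CIDR ranges overlap using shell arithmetic.
# Two ranges overlap exactly when their addresses agree on the shorter prefix.

ip_to_int() {
  # Split a.b.c.d on dots into this function's positional parameters.
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

cidrs_overlap() {
  local a=${1%/*} alen=${1#*/} b=${2%/*} blen=${2#*/} len mask
  len=$(( alen < blen ? alen : blen ))
  # Netmask of the shorter prefix, kept within 32 bits (a /0 mask is 0).
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$a") & mask )) -eq $(( $(ip_to_int "$b") & mask )) ]
}
```

For this cluster, `cidrs_overlap 172.16.0.0/16 172.16.195.0/24` succeeds, while the pod CIDR against 192.168.10.0/24 or the Service CIDR 10.200.1.0/24 against 192.168.20.0/24 does not. Choosing a pod CIDR outside the VMware NAT range would avoid the question entirely.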

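3. Applying Network Policy with Calico

NetworkPolicy

- Kubernetes makes it easy to run many apps in a single cluster. By default, however, Kubernetes provides no network isolation, so every app can communicate freely with every other app. NetworkPolicy is the Kubernetes object that addresses this.

- As the name suggests, a NetworkPolicy is an object that allows or denies traffic entering a Pod (Ingress) or leaving it (Egress).

- A NetworkPolicy works as an allowlist. Pods that no policy selects still accept all traffic, but once a policy selects a Pod, only the Pods or hosts listed in its rules (a Pod on another node, or a source with an allowed IP) can send traffic to that Pod in the direction the policy covers; everything else is dropped.

- Because it controls traffic, NetworkPolicy assumes a CNI plugin that enforces it (it is hard to imagine a platform running without a CNI anyway).

- Network policies are enforced by the network solution, and not every network solution supports them.

- In a cluster whose network solution does not support network policy, you can still create policies, but they are not enforced.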
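The allowlist behavior described above can be summarized as a small decision rule for a single destination Pod. A toy sketch, not how Calico evaluates policy internally; the function name and labels such as `role=frontend` are made up for illustration:

```sh
#!/bin/sh
# Toy model of NetworkPolicy ingress semantics for ONE destination pod:
#   - if no policy selects the pod, all ingress is allowed;
#   - once any policy selects it, ingress is allowed only if the source
#     matches at least one rule of the selecting policies (rules are additive).
# Usage: ingress_decision <selecting_policy_count> <source_label> [allowed_label ...]

ingress_decision() {
  local selecting=$1 src=$2 allowed
  shift 2
  if [ "$selecting" -eq 0 ]; then
    echo allow
    return 0
  fi
  for allowed in "$@"; do
    if [ "$allowed" = "$src" ]; then
      echo allow
      return 0
    fi
  done
  echo deny
}
```

`ingress_decision 1 role=client` prints `deny`: a policy that selects the Pod but lists no rules (like the default-deny policy in the demo) blocks everything, and adding policies can only widen what is allowed.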

Solutions that support NetworkPolicy

| Supported | Not supported |
|---|---|
| Calico, Weave Net, Amazon VPC CNI (v1.14 or later), Kube-router, Romana | Flannel |

Stars network policy demo: Link

1. Deploy the Frontend, Backend, Client, and Management-UI

- Create the namespaces and deploy the Pods

$ kubectl create -f https://docs.tigera.io/files/00-namespace.yaml
namespace/stars created
$ kubectl create -f https://docs.tigera.io/files/01-management-ui.yaml
namespace/management-ui created
service/management-ui created
deployment.apps/management-ui created
$ kubectl create -f https://docs.tigera.io/files/02-backend.yaml
service/backend created
deployment.apps/backend created
$ kubectl create -f https://docs.tigera.io/files/03-frontend.yaml
service/frontend created
deployment.apps/frontend created
$ kubectl create -f https://docs.tigera.io/files/04-client.yaml
namespace/client created
deployment.apps/client created
service/client created

Wait until all pods are in the Running state.

$ kubectl get pod -A
NAMESPACE       NAME                                       READY   STATUS    RESTARTS   AGE
client          client-55f998b84b-wh9h9                    1/1     Running   0          3m35s
kube-system     calico-kube-controllers-77d59654f4-p69dm   1/1     Running   0          3h34m
kube-system     calico-node-4m7rl                          1/1     Running   0          3h34m
kube-system     calico-node-5qn27                          1/1     Running   0          3h34m
kube-system     calico-node-q4tqb                          1/1     Running   0          3h34m
kube-system     calico-node-q7t25                          1/1     Running   0          3h34m
kube-system     coredns-55cb58b774-5h5lj                   1/1     Running   0          4h14m
kube-system     coredns-55cb58b774-qszbk                   1/1     Running   0          4h14m
kube-system     etcd-k8s-m                                 1/1     Running   0          4h14m
kube-system     kube-apiserver-k8s-m                       1/1     Running   0          4h14m
kube-system     kube-controller-manager-k8s-m              1/1     Running   0          4h14m
kube-system     kube-proxy-5566f                           1/1     Running   0          4h14m
kube-system     kube-proxy-8lsd4                           1/1     Running   0          4h6m
kube-system     kube-proxy-tml4g                           1/1     Running   0          4h10m
kube-system     kube-proxy-w74md                           1/1     Running   0          4h8m
kube-system     kube-scheduler-k8s-m                       1/1     Running   0          4h14m
management-ui   management-ui-7758d6b44b-jf9lh             1/1     Running   0          4m1s
stars           backend-77d55c85b8-kwlvc                   1/1     Running   0          3m50s
stars           frontend-6846695988-xxd4g                  1/1     Running   0          3m43s
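Instead of scanning the list by eye, the "wait until everything is Running" check can be scripted. A minimal sketch, assuming the default column layout of `kubectl get pod -A` (NAMESPACE, NAME, READY, STATUS, ...); `not_ready_pods` is a hypothetical helper, and the sample reuses rows from the output above plus one made-up pending pod for contrast.

```python
def not_ready_pods(get_pods_output: str) -> list[str]:
    """Return 'namespace/name' for every pod that is not Running with all containers ready.

    Assumes the default `kubectl get pod -A` columns:
    NAMESPACE  NAME  READY  STATUS  RESTARTS  AGE
    """
    not_ready = []
    for line in get_pods_output.strip().splitlines()[1:]:  # skip the header row
        namespace, name, ready, status = line.split()[:4]
        ready_count, total = ready.split("/")  # e.g. "1/1"
        if status != "Running" or ready_count != total:
            not_ready.append(f"{namespace}/{name}")
    return not_ready


sample = """\
NAMESPACE       NAME                             READY   STATUS              RESTARTS   AGE
client          client-55f998b84b-wh9h9          1/1     Running             0          3m35s
management-ui   management-ui-7758d6b44b-jf9lh   1/1     Running             0          4m1s
stars           backend-77d55c85b8-kwlvc         1/1     Running             0          3m50s
stars           frontend-6846695988-xxd4g        1/1     Running             0          3m43s
stars           hypothetical-pending-pod         0/1     ContainerCreating   0          5s
"""

print(not_ready_pods(sample))  # ['stars/hypothetical-pending-pod']
```

In practice you would feed it the real command output (for example via `subprocess.run`), or skip parsing altogether with `kubectl wait --for=condition=Ready pod --all -n stars`.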

The Management UI is exposed through a NodePort service (node port 30002).

$ kubectl get svc -A
NAMESPACE       NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
client          client          ClusterIP   10.200.1.124   <none>        9000/TCP                 6m41s
default         kubernetes      ClusterIP   10.200.1.1     <none>        443/TCP                  4h17m
kube-system     kube-dns        ClusterIP   10.200.1.10    <none>        53/UDP,53/TCP,9153/TCP   4h17m
management-ui   management-ui   NodePort    10.200.1.212   <none>        9001:30002/TCP           7m7s
stars           backend         ClusterIP   10.200.1.89    <none>        6379/TCP                 6m57s
stars           frontend        ClusterIP   10.200.1.210   <none>        80/TCP                   6m49s

Open the Management UI (http://k8s-w0:30002)

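Once all pods are up, there should be full connectivity: every service can reach every other service. You can confirm this by visiting the UI.

Each service is represented as a single node in the graph:

- backend -> node "B"
- frontend -> node "F"
- client -> node "C"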

2. Block traffic between pods

Apply the following network policy in both the stars and client namespaces to isolate the services from each other.

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: default-deny
spec:
  podSelector:
    matchLabels: {}

Running the following commands blocks all access to the Frontend, Backend, and Client services.

$ kubectl create -n stars -f https://docs.tigera.io/files/default-deny.yaml
networkpolicy.networking.k8s.io/default-deny created
$ kubectl create -n client -f https://docs.tigera.io/files/default-deny.yaml
networkpolicy.networking.k8s.io/default-deny created
$ kubectl get netpol -A
NAMESPACE   NAME           POD-SELECTOR   AGE
stars       default-deny   <none>         62s
client      default-deny   <none>         48s

Refresh the Management UI (it can take up to 10 seconds for changes to show up in the UI).

Because isolation is now enabled, the UI can no longer reach the pods, so they no longer appear in the UI.
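Why does `default-deny` block incoming traffic only? Its manifest sets no `policyTypes`, and Kubernetes then defaults that field: every policy affects Ingress, and only a policy with egress rules also affects Egress. The sketch below mirrors that documented defaulting in plain Python (`effective_policy_types` is a hypothetical helper, not part of any client library) and applies it to the demo's `default-deny` manifest written as a dict.

```python
import json


def effective_policy_types(spec: dict) -> list:
    """Mirror the documented defaulting of NetworkPolicy spec.policyTypes.

    An explicit, non-empty policyTypes is used as-is. Otherwise every policy
    affects Ingress, and a policy with egress rules also affects Egress.
    """
    if spec.get("policyTypes"):
        return list(spec["policyTypes"])
    types = ["Ingress"]
    if spec.get("egress"):
        types.append("Egress")
    return types


# The demo's default-deny manifest, written as a Python dict.
default_deny = {
    "kind": "NetworkPolicy",
    "apiVersion": "networking.k8s.io/v1",
    "metadata": {"name": "default-deny"},
    "spec": {"podSelector": {"matchLabels": {}}},  # empty selector: every pod in the namespace
}

spec = default_deny["spec"]
print(effective_policy_types(spec))  # ['Ingress']
print(spec.get("ingress", []))       # []: no allow rules, so all ingress is denied
print(json.dumps(default_deny, indent=2))  # kubectl -f accepts JSON as well as YAML
```

So in this demo the pods are isolated for ingress only; their outbound connections are not restricted by `default-deny`.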

3. Allow the Management UI to access the services with a Network Policy

Allow the management UI to access the services in the stars namespace:

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: stars
  name: allow-ui
spec:
  podSelector:
    matchLabels: {}
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              role: management-ui

Apply this policy to allow the management UI to access the client namespace as well:

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: client
  name: allow-ui
spec:
  podSelector:
    matchLabels: {}
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              role: management-ui

Create the policies from the following YAML files to allow access from the management UI:

$ kubectl create -f https://docs.tigera.io/files/allow-ui.yaml
networkpolicy.networking.k8s.io/allow-ui created
$ kubectl create -f https://docs.tigera.io/files/allow-ui-client.yaml
networkpolicy.networking.k8s.io/allow-ui created
$ kubectl get netpol -A
NAMESPACE   NAME           POD-SELECTOR   AGE
client      allow-ui       <none>         116s
stars       allow-ui       <none>         2m4s
client      default-deny   <none>         19m
stars       default-deny   <none>         19m

After a few seconds, refresh the UI. The services now appear, but they still cannot reach one another.
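Two details explain this result: policies are additive (traffic is allowed if any rule of any policy selecting the destination matches, so `allow-ui` simply adds to `default-deny`), and a `namespaceSelector` matches on namespace labels, not names. A minimal sketch of that logic for the `stars` namespace, assuming the `management-ui` and `client` namespaces carry `role: management-ui` and `role: client` labels as the demo's selectors expect; `kubernetes.io/metadata.name` is the label Kubernetes adds to every namespace automatically. `allowed_into_stars` is a hypothetical helper, and ports are ignored.

```python
def matches(selector: dict, labels: dict) -> bool:
    # matchLabels semantics: every listed key/value pair must be present; {} matches all
    return all(labels.get(k) == v for k, v in selector.items())


# Ingress rules of the policies that now select every pod in 'stars' (ports omitted).
STARS_POLICIES = {
    "default-deny": [],  # no rules: on its own, denies all ingress
    "allow-ui": [{"namespaceSelector": {"role": "management-ui"}}],
}


def allowed_into_stars(src_ns_labels: dict) -> bool:
    # Policies are additive: traffic is allowed if ANY rule of ANY selecting
    # policy matches. default-deny contributes no rules, so it never cancels allow-ui.
    return any(
        matches(rule["namespaceSelector"], src_ns_labels)
        for rules in STARS_POLICIES.values()
        for rule in rules
    )


ui_ns = {"kubernetes.io/metadata.name": "management-ui", "role": "management-ui"}
client_ns = {"kubernetes.io/metadata.name": "client", "role": "client"}
stars_ns = {"kubernetes.io/metadata.name": "stars"}

print(allowed_into_stars(ui_ns))      # True
print(allowed_into_stars(client_ns))  # False
print(allowed_into_stars(stars_ns))   # False: pods in stars still cannot reach each other
# Matching is on labels, not the name: without the role label the UI would be denied too.
print(allowed_into_stars({"kubernetes.io/metadata.name": "management-ui"}))  # False
```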

4. Apply a policy that lets Frontend communicate with Backend

Apply the following network policy to allow traffic from the frontend service to the backend service.

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: stars
  name: backend-policy
spec:
  podSelector:
    matchLabels:
      role: backend
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 6379

Deploy the policy:

$ kubectl create -f https://docs.tigera.io/files/backend-policy.yaml
networkpolicy.networking.k8s.io/backend-policy created
$ kubectl get netpol -A
NAMESPACE   NAME             POD-SELECTOR   AGE
stars       backend-policy   role=backend   70s
client      allow-ui         <none>         10m
stars       allow-ui         <none>         10m
client      default-deny     <none>         27m
stars       default-deny     <none>         27m

Refresh the UI. You should now see the following:

- The frontend can now reach the backend (on TCP port 6379 only).

- The backend cannot reach the frontend at all.

- The client can reach neither the frontend nor the backend.
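In `backend-policy` the peer and the port must both match, and a `podSelector` peer without a `namespaceSelector` only matches pods in the policy's own namespace (`stars`). A minimal sketch of just this one policy, assuming the demo pods carry `role: frontend` and `role: client` labels; `backend_policy_allows` is a hypothetical helper, and the `allow-ui` rule (which still admits the management UI) is left out.

```python
def matches(selector: dict, labels: dict) -> bool:
    # matchLabels semantics: every listed key/value pair must be present
    return all(labels.get(k) == v for k, v in selector.items())


# backend-policy from the demo, reduced to the parts that matter here.
BACKEND_POLICY = {
    "namespace": "stars",
    "from_pod_selectors": [{"role": "frontend"}],  # podSelector peers, no namespaceSelector
    "ports": [("TCP", 6379)],
}


def backend_policy_allows(src_ns: str, src_labels: dict, protocol: str, port: int) -> bool:
    # A podSelector peer without a namespaceSelector only matches pods in the
    # policy's own namespace; the peer AND the port must both match.
    peer_ok = src_ns == BACKEND_POLICY["namespace"] and any(
        matches(sel, src_labels) for sel in BACKEND_POLICY["from_pod_selectors"]
    )
    port_ok = (protocol, port) in BACKEND_POLICY["ports"]
    return peer_ok and port_ok


print(backend_policy_allows("stars", {"role": "frontend"}, "TCP", 6379))   # True
print(backend_policy_allows("stars", {"role": "frontend"}, "TCP", 80))     # False: port not listed
print(backend_policy_allows("client", {"role": "client"}, "TCP", 6379))    # False: client pod
print(backend_policy_allows("client", {"role": "frontend"}, "TCP", 6379))  # False: wrong namespace
```

The last call is the subtle one: even a pod labelled `role: frontend` would be rejected if it lived in another namespace, because the rule has no `namespaceSelector`.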

5. Expose the Frontend service to the Client namespace

Apply the following network policy to allow traffic from the client to the frontend service.

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  namespace: stars
  name: frontend-policy
spec:
  podSelector:
    matchLabels:
      role: frontend
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              role: client
      ports:
        - protocol: TCP
          port: 80

Deploy the policy:

$ kubectl create -f https://docs.tigera.io/files/frontend-policy.yaml
networkpolicy.networking.k8s.io/frontend-policy created
$ kubectl get netpol -A
NAMESPACE   NAME              POD-SELECTOR    AGE
stars       frontend-policy   role=frontend   118s
stars       backend-policy    role=backend    6m41s
client      allow-ui          <none>          15m
stars       allow-ui          <none>          15m
client      default-deny      <none>          33m
stars       default-deny      <none>          33m

The client can now reach the frontend, but not the backend. Neither the frontend nor the backend can initiate connections to the client. The frontend can still reach the backend.
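Putting all six policies together, the final connectivity between F, B and C can be reproduced with a small evaluator. This is a simplified, ingress-only model, not Calico's implementation: the ports come from the service listing earlier (80, 6379, 9000), the `role` labels on pods and namespaces are assumed from the selectors used in the demo, the management UI edges are omitted, and `peer_matches`/`allowed` are hypothetical helpers.

```python
def matches(selector: dict, labels: dict) -> bool:
    # matchLabels semantics: all key/value pairs must be present; {} matches everything
    return all(labels.get(k) == v for k, v in selector.items())


# Namespace labels ('role' assumed from the demo's selectors; metadata.name is automatic).
NAMESPACES = {
    "stars": {"kubernetes.io/metadata.name": "stars"},
    "client": {"kubernetes.io/metadata.name": "client", "role": "client"},
}

# Workload -> (namespace, pod labels, port it listens on).
PODS = {
    "F": ("stars", {"role": "frontend"}, 80),
    "B": ("stars", {"role": "backend"}, 6379),
    "C": ("client", {"role": "client"}, 9000),
}

# Ingress policies after step 5: (namespace, podSelector, rules).
# Each rule is (peer, ports); peer is (namespaceSelector, podSelector) with None = absent;
# ports None = all ports.
UI = ({"role": "management-ui"}, None)
POLICIES = [
    ("stars", {}, []),                                                         # default-deny
    ("client", {}, []),                                                        # default-deny
    ("stars", {}, [(UI, None)]),                                               # allow-ui
    ("client", {}, [(UI, None)]),                                              # allow-ui (client)
    ("stars", {"role": "backend"}, [((None, {"role": "frontend"}), [6379])]),  # backend-policy
    ("stars", {"role": "frontend"}, [(({"role": "client"}, None), [80])]),     # frontend-policy
]


def peer_matches(peer, policy_ns, src_ns, src_labels) -> bool:
    ns_sel, pod_sel = peer
    if ns_sel is None:
        ns_ok = src_ns == policy_ns  # podSelector alone: policy's own namespace only
    else:
        ns_ok = matches(ns_sel, NAMESPACES[src_ns])
    pod_ok = pod_sel is None or matches(pod_sel, src_labels)
    return ns_ok and pod_ok


def allowed(src: str, dst: str) -> bool:
    src_ns, src_labels, _ = PODS[src]
    dst_ns, dst_labels, dst_port = PODS[dst]
    selecting = [p for p in POLICIES if p[0] == dst_ns and matches(p[1], dst_labels)]
    if not selecting:
        return True  # not selected by any policy: default allow
    return any(
        peer_matches(peer, ns, src_ns, src_labels) and (ports is None or dst_port in ports)
        for ns, _, rules in selecting
        for peer, ports in rules
    )


for src in PODS:
    for dst in PODS:
        if src != dst:
            print(f"{src} -> {dst}: {'allow' if allowed(src, dst) else 'deny'}")
# F -> B: allow
# F -> C: deny
# B -> F: deny
# B -> C: deny
# C -> F: allow
# C -> B: deny
```

The printed matrix matches the state described above: only F -> B and C -> F are allowed.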

6. Delete the Stars network policy demo

- You can clean up the demo by deleting its namespaces.

$ kubectl delete ns client stars management-ui
