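This post summarizes what I studied and practiced in the 3rd cohort of KANS (Kubernetes Advanced Networking Study). Week 6 covered Ingress & Gateway API + CoreDNS. I have also written up how to build a k3s lab environment on VMware Fusion using Vagrant.

1. Setting up a k3s lab environment on a Mac

Installation environment

- MacBook Air (M1, 16GB) + Vagrant + VMware Fusion 13 Pro + Ubuntu 22.04 (arm64)

Problems encountered during the initial setup, and how they were fixed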

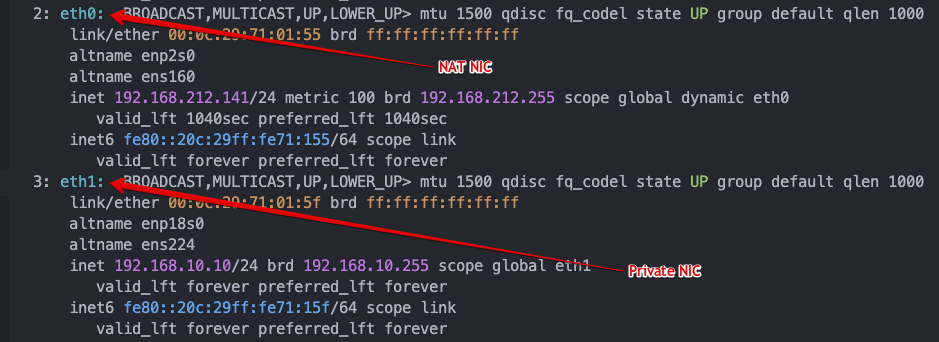

- Vagrantfile에서 각 VM에 대해 private ip로 192.168.10.x로 지정하였으나

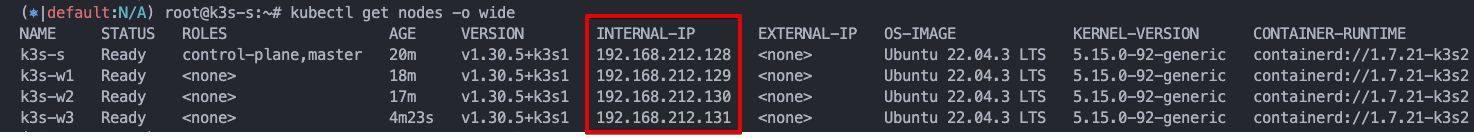

K3S Node Join된 Worker Node의 IP는 eth0 NAT IP임

-

k3s 클러스터를 설치할 때, 노드의 INTERNAL-IP를 기본적으로 eth0 인터페이스에 할당된 IP로 설정하는 경우가 많습니다. 하지만, eth1을 INTERNAL-IP로 설정하려면 --node-ip 옵션을 사용해 원하는 인터페이스의 IP를 명시해줄 수 있습니다.

-

다음은 k3s 설치 시 eth1의 IP를 INTERNAL-IP로 설정하는 방법입니다:

-

1) Check the eth1 IP

First, check the IP address assigned to the eth1 interface. SSH into the node and run:

❯ ip addr show eth1
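If you only want the bare address (for example to feed it into --node-ip from a script), the ip output can be trimmed down. This is a minimal sketch, assuming the iproute2 one-line format produced by ip -4 -o addr show eth1; extract_ipv4 is a hypothetical helper name, not part of k3s or iproute2.

```shell
#!/usr/bin/env bash
# extract_ipv4 (hypothetical helper): read `ip -4 -o addr show <iface>` output on stdin
# and print the first IPv4 address without its /prefix length.
extract_ipv4() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i == "inet") {          # the field after "inet" is ADDRESS/PREFIX
        split($(i + 1), parts, "/")
        print parts[1]
        exit
      }
    }
  }'
}

# Usage on a node (not executed here):
#   ip -4 -o addr show eth1 | extract_ipv4     # e.g. 192.168.10.10 on k3s-s
```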

2) Add the --node-ip option to the k3s install command

When installing k3s, add the --node-ip option so that the eth1 address becomes the node's internal IP. For example:

# Control Plane
❯ curl -sfL https://get.k3s.io | sh -s - server --bind-address 192.168.10.10 --node-ip 192.168.10.10

# Worker Node
❯ curl -sfL https://get.k3s.io | K3S_URL=https://192.168.10.10:6443 K3S_TOKEN=<NODE_TOKEN> sh -s - --node-ip 192.168.10.101

Here 192.168.10.10 is the server (master) node's IP, and <NODE_TOKEN> is the join token, which can be read on the server node from /var/lib/rancher/k3s/server/node-token.

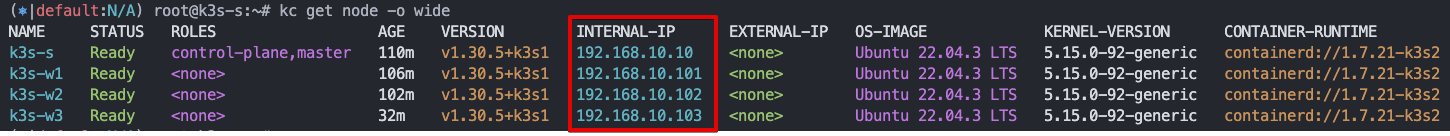

3) Verify the k3s node settings

After installation, run kubectl get nodes -o wide to confirm that each node's INTERNAL-IP is now its eth1 IP:

❯ kubectl get nodes -o wide
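With several nodes it can help to let a script flag any node whose INTERNAL-IP is still the NAT address. A minimal sketch: check_internal_ips is a hypothetical helper, the 192.168.10. prefix comes from this lab's Vagrantfile, and the node list is produced with a standard kubectl JSONPath query.

```shell
#!/usr/bin/env bash
# check_internal_ips (hypothetical helper): read "<node-name> <internal-ip>" lines on stdin,
# print OK/FAIL per node, and return non-zero if any IP does not start with the prefix.
check_internal_ips() {
  local prefix="${1:-192.168.10.}" bad=0 name ip
  while read -r name ip; do
    [ -z "$name" ] && continue                 # skip blank lines
    case "$ip" in
      "$prefix"*) echo "OK   $name $ip" ;;
      *)          echo "FAIL $name $ip"; bad=1 ;;
    esac
  done
  return "$bad"
}

# Usage on the server node (not executed here):
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.addresses[?(@.type=="InternalIP")].address}{"\n"}{end}' \
#     | check_internal_ips 192.168.10.
```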


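- When the nodes are built on VMware Fusion (Apple Silicon), the pods must also use arm64 images.

- k8s.gcr.io/echoserver:1.5 has no arm build, so it ends up in CrashLoopBackOff.

- Switch to jmalloc/echo-server:latest, which supports arm.

- The ingress-nginx plugin for kubectl krew is not available for arm64, so it could not be installed (sadly).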
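Before deploying an image on these arm64 nodes, you can check whether its manifest advertises an arm64 build. This is a rough sketch, assuming the docker CLI's docker manifest inspect is available on your workstation; has_arm64 is a hypothetical helper that only greps the JSON. Only multi-platform manifest lists carry per-platform "architecture" fields, so a single-platform image is reported as having no arm64 entry even if it happens to be an arm64 build.

```shell
#!/usr/bin/env bash
# has_arm64 (hypothetical helper): read a manifest JSON document on stdin (for example
# the output of `docker manifest inspect <image>`) and succeed if it lists an arm64 entry.
has_arm64() {
  grep -Eq '"architecture"[[:space:]]*:[[:space:]]*"arm64"'
}

# Usage (not executed here; needs network access and the docker CLI):
#   for img in k8s.gcr.io/echoserver:1.5 jmalloc/echo-server:latest; do
#     if docker manifest inspect "$img" | has_arm64; then
#       echo "$img: arm64 entry found"
#     else
#       echo "$img: no arm64 entry"
#     fi
#   done
```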

Source code

Vagrantfile

❯ cat <<'EOF' > Vagrantfile
# for MacBook Air - M1 with vmware-fusion
BOX_IMAGE = "bento/ubuntu-22.04-arm64"
BOX_VERSION = "202401.31.0"
# max number of worker nodes : Ex) N = 3
N = 3
# Version : Ex) k8s_V = '1.31'
k8s_V = '1.30'
proj_N = 'kans'

Vagrant.configure("2") do |config|
  #-----Manager Node
  config.vm.define "k3s-s" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    # The provider name must be "vmware_desktop" (it was misspelled "vmare_desktop",
    # so Vagrant would not apply this block to the VMware VMs).
    # customize/modifyvm are VirtualBox-only, so name, memory and vCPUs are set via VMX keys;
    # the VirtualBox --groups/--nicpromisc2 settings have no direct equivalent and are dropped.
    subconfig.vm.provider "vmware_desktop" do |v|
      v.vmx["displayName"] = "#{proj_N}-k3s-s"
      v.vmx["memsize"] = "2048"
      v.vmx["numvcpus"] = "2"
      v.linked_clone = true
    end
    subconfig.vm.hostname = "k3s-s"
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.network "private_network", ip: "192.168.10.10"
    subconfig.vm.network "forwarded_port", guest: 22, host: 50010, auto_correct: true, id: "ssh"
    subconfig.vm.provision "shell", path: "scripts/k3s-s.sh", args: [ k8s_V ]
  end

  #-----Worker Node Subnet1
  (1..N).each do |i|
    config.vm.define "k3s-w#{i}" do |subconfig|
      subconfig.vm.box = BOX_IMAGE
      subconfig.vm.box_version = BOX_VERSION
      subconfig.vm.provider "vmware_desktop" do |v|
        v.vmx["displayName"] = "#{proj_N}-k3s-w#{i}"
        v.vmx["memsize"] = "2048"
        v.vmx["numvcpus"] = "1"
        v.linked_clone = true
      end
      subconfig.vm.hostname = "k3s-w#{i}"
      subconfig.vm.synced_folder "./", "/vagrant", disabled: true
      subconfig.vm.network "private_network", ip: "192.168.10.10#{i}"
      subconfig.vm.network "forwarded_port", guest: 22, host: 50010 + i, auto_correct: true, id: "ssh"
      subconfig.vm.provision "shell", path: "scripts/k3s-w.sh", args: [ i, k8s_V ]
    end
  end
end
EOF

scripts/k3s-s.sh

#!/bin/bash
hostnamectl --static set-hostname k3s-s

# swap disable
echo "Swap Disable"
swapoff -a
sed -i '/ swap / s/^/#/' /etc/fstab

# Config convenience
echo 'alias vi=vim' >> /etc/profile
echo "sudo su -" >> /home/vagrant/.bashrc
ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw & apparmor
systemctl stop ufw && systemctl disable ufw
systemctl stop apparmor && systemctl disable apparmor

# Install packages
apt update && apt-get install bridge-utils net-tools conntrack ngrep jq tree unzip kubecolor kubetail -y

# local dns - hosts file
echo "192.168.10.10 k3s-s" >> /etc/hosts
echo "192.168.10.101 k3s-w1" >> /etc/hosts
echo "192.168.10.102 k3s-w2" >> /etc/hosts
echo "192.168.10.103 k3s-w3" >> /etc/hosts

# Install k3s-server
curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC=" --disable=traefik" sh -s - server --token kanstoken --bind-address 192.168.10.10 --node-ip 192.168.10.10 --cluster-cidr "172.16.0.0/16" --service-cidr "10.10.200.0/24" --write-kubeconfig-mode 644

# Change kubeconfig
echo 'export KUBECONFIG=/etc/rancher/k3s/k3s.yaml' >> /etc/profile

# Install Helm
curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

# Alias kubectl to k (and kubecolor to kc)
echo 'alias kc=kubecolor' >> /etc/profile
echo 'alias k=kubectl' >> /etc/profile
echo 'complete -o default -F __start_kubectl k' >> /etc/profile

# kubectl Source the completion
source <(kubectl completion bash)
echo 'source <(kubectl completion bash)' >> /etc/profile

# Install Kubectx & Kubens
git clone https://github.com/ahmetb/kubectx /opt/kubectx
ln -s /opt/kubectx/kubens /usr/local/bin/kubens
ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

# Install Kubeps & Setting PS1
git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1
cat <<"EOT" >> ~/.bash_profile
source /root/kube-ps1/kube-ps1.sh
KUBE_PS1_SYMBOL_ENABLE=true
function get_cluster_short() {
  echo "$1" | cut -d . -f1
}
KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short
KUBE_PS1_SUFFIX=') '
PS1='$(kube_ps1)'$PS1
EOT

scripts/k3s-w.sh

#!/bin/bash
hostnamectl --static set-hostname k3s-w$1

# swap disable
echo "Swap Disable"
swapoff -a
sed -i '/ swap / s/^/#/' /etc/fstab

# Config convenience
echo 'alias vi=vim' >> /etc/profile
echo "sudo su -" >> /home/vagrant/.bashrc
ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw & apparmor
systemctl stop ufw && systemctl disable ufw
systemctl stop apparmor && systemctl disable apparmor

# Install packages
apt update && apt-get install bridge-utils net-tools conntrack ngrep jq tree unzip kubecolor kubetail -y

# local dns - hosts file
echo "192.168.10.10 k3s-s" >> /etc/hosts
echo "192.168.10.101 k3s-w1" >> /etc/hosts
echo "192.168.10.102 k3s-w2" >> /etc/hosts
echo "192.168.10.103 k3s-w3" >> /etc/hosts

# Install k3s-agent
curl -sfL https://get.k3s.io | K3S_URL=https://192.168.10.10:6443 K3S_TOKEN=kanstoken sh -s - --node-ip 192.168.10.10$1
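Both the Vagrantfile ("192.168.10.10#{i}") and k3s-w.sh (192.168.10.10$1) build the worker IP by string concatenation. That yields .101 to .103 for three workers, but a tenth worker would get the invalid address 192.168.10.1010. A sketch of an arithmetic alternative, assuming workers stay in 192.168.10.0/24 starting at .101; worker_ip is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# worker_ip (hypothetical helper): map worker index i to 192.168.10.(100 + i).
# Matches "192.168.10.10$1" for i = 1..9 and stays valid up to .254 (i = 154).
worker_ip() {
  local i="$1"
  if ! [[ "$i" =~ ^[1-9][0-9]*$ ]] || (( i > 154 )); then
    echo "invalid worker index: $i" >&2
    return 1
  fi
  echo "192.168.10.$((100 + i))"
}

# Possible use if the function were pasted into scripts/k3s-w.sh (not executed here):
#   curl -sfL https://get.k3s.io | K3S_URL=https://192.168.10.10:6443 K3S_TOKEN=kanstoken \
#     sh -s - --node-ip "$(worker_ip "$1")"
```

The same arithmetic would apply on the Ruby side (for example 100 + i in the ip: string), so both files keep producing the same addresses.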

Deploying and verifying the code

- For installing Vagrant and VMware Fusion, see my week 3 notes.

# Download the source

❯ git clone https://github.com/icebreaker70/kans-k3s-ingress

❯ cd kans-k3s-ingress

# vm provision

❯ vagrant up --provision

# Check the VM status

❯ vagrant status

Current machine states:

k3s-s running (vmware_desktop)

k3s-w1 running (vmware_desktop)

k3s-w2 running (vmware_desktop)

k3s-w3 running (vmware_desktop)

# Connect to the nodes

❯ vagrant ssh k3s-s

❯ vagrant ssh k3s-w1

❯ vagrant ssh k3s-w2

❯ vagrant ssh k3s-w3
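To run the same check on every VM without opening four shells, the machine names can be generated from the worker count N in the Vagrantfile and passed to vagrant ssh -c. A minimal sketch; node_names is a hypothetical helper that assumes the k3s-s / k3s-wN naming used above.

```shell
#!/usr/bin/env bash
# node_names (hypothetical helper): print the Vagrant machine names for one server
# and N workers, following the k3s-s / k3s-w<i> naming in the Vagrantfile.
node_names() {
  local n="${1:-3}" i
  local names="k3s-s"
  for ((i = 1; i <= n; i++)); do
    names+=" k3s-w$i"
  done
  echo "$names"
}

# Usage from the host, in the Vagrantfile directory (not executed here):
#   for node in $(node_names 3); do
#     echo "== $node"; vagrant ssh "$node" -c 'ip -4 -o addr show eth1'
#   done
```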

# Check the k3s cluster

❯ vagrant ssh k3s-s

❯ kc cluster-info

❯ kc get nodes -o wide

❯ kc get pods -A

# Suspend the VMs

❯ vagrant suspend

# Resume the VMs

❯ vagrant resume

# Delete the VMs

❯ vagrant destroy -f

2. K3s

What is K3s?

- Lightweight Kubernetes. Easy to install, half the memory, all in a binary of less than 100 MB

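- K3s is a fully compliant Kubernetes distribution with the following enhancements:

- Packaged as a single binary.

- A lightweight storage backend based on sqlite3 as the default storage mechanism; etcd3, MySQL, and Postgres are also available.

- Wrapped in a simple launcher that handles much of the complexity of TLS and options.

- Secure by default, with reasonable defaults for lightweight environments.

- Simple but powerful "batteries-included" features have been added, such as: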

- local storage provider

- service load balancer

- Helm controller

- Traefik ingress controller

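- Operation of all Kubernetes control-plane components is encapsulated in a single binary and process, which lets K3s automate and manage complex cluster operations such as distributing certificates.

- External dependencies are minimized (only a modern kernel and cgroup mounts are required).

- K3s packages the following required dependencies: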

- Containerd

- Flannel (CNI)

- CoreDNS

- Traefik (ingress)

- Klipper-lb (service load balancer)

- Embedded network policy controller

- Embedded local-path provisioner

- Host utilities (iptables, socat, etc.)

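What's with the name?

We wanted an installation of Kubernetes that was half the size in terms of memory footprint. Kubernetes is a 10-letter word stylized as K8s, so something half as big as Kubernetes would be a 5-letter word stylized as K3s. There is no long form of K3s and no official pronunciation.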

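Architecture

- A server node is defined as a host running the k3s server command, with control-plane and datastore components managed by K3s.

- An agent node is defined as a host running the k3s agent command, without any datastore or control-plane components.

- Both servers and agents run the kubelet, container runtime, and CNI. See the Advanced Options documentation for more information on running agentless servers.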

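Single-server setup with an embedded DB

- The following diagram shows an example of a cluster that has a single-node K3s server with an embedded SQLite database.

- In this configuration, each agent node is registered to the same server node. A K3s user can manipulate Kubernetes resources by calling the K3s API on the server node.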

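High-availability K3s server with an external DB

- Single-server clusters can meet a variety of use cases, but for environments where uptime of the Kubernetes control plane is critical, you can run K3s in an HA configuration. An HA K3s cluster comprises:

- Two or more server nodes that serve the Kubernetes API and run other control-plane services

- An external datastore (as opposed to the embedded SQLite datastore used in single-server setups)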

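Fixed registration address for agent nodes

- In the high-availability server configuration, each node must register with the Kubernetes API using a fixed registration address, as shown in the diagram below.

- After registration, the agent nodes establish a connection directly to one of the server nodes.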

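How agent node registration works

- Agent nodes are registered with a websocket connection initiated by the k3s agent process, and the connection is maintained by a client-side load balancer running as part of the agent process. This load balancer maintains stable connections to all servers in the cluster, providing a connection to the API server that tolerates outages of individual servers.

- Agents register with the server using the node cluster secret along with a randomly generated password for the node, stored at /etc/rancher/node/password. The server stores the passwords for individual nodes as Kubernetes secrets, and any subsequent attempts must use the same password. Node password secrets are stored in the kube-system namespace with names using the template <host>.node-password.k3s. This is done to protect the integrity of node IDs.

- If the /etc/rancher/node directory of an agent is removed, or you wish to rejoin a node using an existing name, the node should be deleted from the cluster. This cleans up both the old node entry and the node password secret, and allows the node to (re)join the cluster.

- If you frequently reuse hostnames but are unable to remove the node password secrets, a unique node ID can be automatically appended to the hostname by launching K3s servers or agents with the --with-node-id flag. When enabled, the node ID is also stored in /etc/rancher/node/.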
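The rejoin procedure described above can be written out for a given node. This is only a sketch: rejoin_steps is a hypothetical helper that prints the commands instead of running them, and restarting the k3s-agent service afterwards is an assumption based on the systemd unit name the default k3s install script creates for agents.

```shell
#!/usr/bin/env bash
# rejoin_steps (hypothetical helper): print the commands for re-registering an agent whose
# hostname already exists in the cluster. It only echoes them; nothing is executed.
rejoin_steps() {
  local host="$1"
  echo "kubectl delete node ${host}"
  echo "kubectl -n kube-system get secret ${host}.node-password.k3s   # expect NotFound afterwards"
  echo "vagrant ssh ${host} -c 'sudo systemctl restart k3s-agent'"
}

# Usage on the host (prints the plan for k3s-w1):
#   rejoin_steps k3s-w1
```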

Checking basic k3s information

# Check the nodes

(⎈|default:N/A) root@k3s-s:~# kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k3s-s Ready control-plane,master 2d8h v1.30.5+k3s1 192.168.10.10 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.21-k3s2

k3s-w1 NotReady <none> 2d8h v1.30.5+k3s1 192.168.10.101 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.21-k3s2

k3s-w2 Ready <none> 2d8h v1.30.5+k3s1 192.168.10.102 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.21-k3s2

k3s-w3 Ready <none> 2d8h v1.30.5+k3s1 192.168.10.103 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.21-k3s2

# kubecolor is aliased as kc; flannel runs in vxlan mode

(⎈|default:N/A) root@k3s-s:~# kc describe node k3s-s # no taints

Name: k3s-s

Roles: control-plane,master

Labels: beta.kubernetes.io/arch=arm64

beta.kubernetes.io/instance-type=k3s

beta.kubernetes.io/os=linux

kubernetes.io/arch=arm64

kubernetes.io/hostname=k3s-s

kubernetes.io/os=linux

node-role.kubernetes.io/control-plane=true

node-role.kubernetes.io/master=true

node.kubernetes.io/instance-type=k3s

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.10.10

flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"1e:4b:42:d5:5a:a3"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.212.145

k3s.io/hostname: k3s-s

k3s.io/internal-ip: 192.168.10.10

k3s.io/node-args:

["server","--disable","traefik","server","--token","********","--bind-address","192.168.10.10","--node-ip","192.168.10.10","--cluster-cidr...

k3s.io/node-config-hash: P37I5O7VPI4UXTJC2C5AOM4M7E4RWDQMVQGJVMMZQM5KKWKTAGNA====

k3s.io/node-env: {}

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Wed, 09 Oct 2024 23:30:33 +0900

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: k3s-s

AcquireTime: <unset>

RenewTime: Sat, 12 Oct 2024 08:21:55 +0900

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Sat, 12 Oct 2024 08:20:19 +0900 Wed, 09 Oct 2024 23:30:33 +0900 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Sat, 12 Oct 2024 08:20:19 +0900 Wed, 09 Oct 2024 23:30:33 +0900 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Sat, 12 Oct 2024 08:20:19 +0900 Wed, 09 Oct 2024 23:30:33 +0900 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Sat, 12 Oct 2024 08:20:19 +0900 Wed, 09 Oct 2024 23:30:33 +0900 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.10.10

Hostname: k3s-s

Capacity:

cpu: 2

ephemeral-storage: 31270768Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

hugepages-32Mi: 0

hugepages-64Ki: 0

memory: 2013976Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 30420203087

hugepages-1Gi: 0

hugepages-2Mi: 0

hugepages-32Mi: 0

hugepages-64Ki: 0

memory: 2013976Ki

pods: 110

System Info:

Machine ID: 2ef8795fded746a4a182ce106618d77d

System UUID: f81e4d56-9d12-7eee-a4aa-084df7a46a1d

Boot ID: bf0eb348-7cfb-4c50-b332-c263034c75ad

Kernel Version: 5.15.0-92-generic

OS Image: Ubuntu 22.04.3 LTS

Operating System: linux

Architecture: arm64

Container Runtime Version: containerd://1.7.21-k3s2

Kubelet Version: v1.30.5+k3s1

Kube-Proxy Version: v1.30.5+k3s1

PodCIDR: 172.16.0.0/24

PodCIDRs: 172.16.0.0/24

ProviderID: k3s://k3s-s

Non-terminated Pods: (3 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system coredns-7b98449c4-ldgbx 100m (5%) 0 (0%) 70Mi (3%) 170Mi (8%) 2d8h

kube-system local-path-provisioner-6795b5f9d8-fw8qb 0 (0%) 0 (0%) 0 (0%) 0 (0%) 2d8h

kube-system metrics-server-cdcc87586-x8q7j 100m (5%) 0 (0%) 70Mi (3%) 0 (0%) 2d8h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 200m (10%) 0 (0%)

memory 140Mi (7%) 170Mi (8%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

hugepages-32Mi 0 (0%) 0 (0%)

hugepages-64Ki 0 (0%) 0 (0%)

Events: <none>

(⎈|default:N/A) root@k3s-s:~# kc describe node k3s-w1

Name: k3s-w1

Roles: <none>

Labels: beta.kubernetes.io/arch=arm64

beta.kubernetes.io/instance-type=k3s

beta.kubernetes.io/os=linux

kubernetes.io/arch=arm64

kubernetes.io/hostname=k3s-w1

kubernetes.io/os=linux

node.kubernetes.io/instance-type=k3s

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.10.101

flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"16:7a:20:77:c6:8b"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.212.150

k3s.io/hostname: k3s-w1

k3s.io/internal-ip: 192.168.10.101

k3s.io/node-args: ["agent","--node-ip","192.168.10.101"]

k3s.io/node-config-hash: GHPQS7L4HRJUDCFTWT33ZGJDFV6K75N6Z5YC6LKDAZIBQ7Y74W5Q====

k3s.io/node-env: {"K3S_TOKEN":"********","K3S_URL":"https://192.168.10.10:6443"}

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sat, 12 Oct 2024 08:30:41 +0900

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: k3s-w1

AcquireTime: <unset>

RenewTime: Sat, 12 Oct 2024 08:38:31 +0900

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Sat, 12 Oct 2024 08:35:47 +0900 Sat, 12 Oct 2024 08:30:41 +0900 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Sat, 12 Oct 2024 08:35:47 +0900 Sat, 12 Oct 2024 08:30:41 +0900 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Sat, 12 Oct 2024 08:35:47 +0900 Sat, 12 Oct 2024 08:30:41 +0900 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Sat, 12 Oct 2024 08:35:47 +0900 Sat, 12 Oct 2024 08:30:42 +0900 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.10.101

Hostname: k3s-w1

Capacity:

cpu: 2

ephemeral-storage: 31270768Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

hugepages-32Mi: 0

hugepages-64Ki: 0

memory: 2013976Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 30420203087

hugepages-1Gi: 0

hugepages-2Mi: 0

hugepages-32Mi: 0

hugepages-64Ki: 0

memory: 2013976Ki

pods: 110

System Info:

Machine ID: cee5bc0741844f26b2cbf47111190780

System UUID: 1ec34d56-cab1-e145-0cf3-229f3b78bdd4

Boot ID: 8c8f623c-3eda-405e-b174-16706cc256d9

Kernel Version: 5.15.0-92-generic

OS Image: Ubuntu 22.04.3 LTS

Operating System: linux

Architecture: arm64

Container Runtime Version: containerd://1.7.21-k3s2

Kubelet Version: v1.30.5+k3s1

Kube-Proxy Version: v1.30.5+k3s1

PodCIDR: 172.16.1.0/24

PodCIDRs: 172.16.1.0/24

ProviderID: k3s://k3s-w1

Non-terminated Pods: (0 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 0 (0%) 0 (0%)

memory 0 (0%) 0 (0%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

hugepages-32Mi 0 (0%) 0 (0%)

hugepages-64Ki 0 (0%) 0 (0%)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Starting 7m56s kube-proxy

Normal Starting 7m57s kubelet Starting kubelet.

Warning InvalidDiskCapacity 7m57s kubelet invalid capacity 0 on image filesystem

Normal NodeHasSufficientMemory 7m57s (x2 over 7m57s) kubelet Node k3s-w1 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 7m57s (x2 over 7m57s) kubelet Node k3s-w1 status is now: NodeHasNoDiskPressure

Normal NodeHasSufficientPID 7m57s (x2 over 7m57s) kubelet Node k3s-w1 status is now: NodeHasSufficientPID

Normal NodeAllocatableEnforced 7m57s kubelet Updated Node Allocatable limit across pods

Normal Synced 7m56s (x2 over 7m57s) cloud-node-controller Node synced successfully

Normal NodeReady 7m56s kubelet Node k3s-w1 status is now: NodeReady

Normal RegisteredNode 7m53s node-controller Node k3s-w1 event: Registered Node k3s-w1 in Controller

# Check the pods

(⎈|default:N/A) root@k3s-s:~# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7b98449c4-jmhgk 1/1 Running 0 21m

local-path-provisioner-6795b5f9d8-w6h8s 1/1 Running 0 21m

metrics-server-cdcc87586-m4ndt 1/1 Running 0 21m
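To see at a glance which bundled add-ons have pods (Traefik should be missing here because the server was installed with --disable=traefik), the pod listing can be scanned by name prefix. A sketch: addon_presence is a hypothetical helper, and the pod-name prefixes, including svclb- for the Klipper service load balancer, are assumptions about k3s's default naming.

```shell
#!/usr/bin/env bash
# addon_presence (hypothetical helper): read `kubectl get pods -n kube-system` output on
# stdin and report, for each add-on name prefix, whether at least one pod is listed.
# It does not look at the STATUS column.
addon_presence() {
  local listing addon
  listing="$(cat)"
  for addon in coredns local-path-provisioner metrics-server traefik svclb; do
    if grep -q "^${addon}-" <<<"$listing"; then
      echo "${addon}: present"
    else
      echo "${addon}: absent"
    fi
  done
}

# Usage on k3s-s (not executed here):
#   kubectl get pod -n kube-system | addon_presence
```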

(⎈|default:N/A) root@k3s-s:~# kc top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k3s-s 112m 5% 1080Mi 54%

k3s-w1 17m 0% 461Mi 23%

k3s-w2 25m 1% 440Mi 22%

k3s-w3 35m 1% 410Mi 20%

(⎈|default:N/A) root@k3s-s:~#

(⎈|default:N/A) root@k3s-s:~# kubectl top pod -A --sort-by='cpu'

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system metrics-server-cdcc87586-pmsxc 6m 18Mi

kube-system coredns-7b98449c4-b49gg 2m 13Mi

kube-system local-path-provisioner-6795b5f9d8-vmfj2 1m 6Mi

(⎈|default:N/A) root@k3s-s:~#

(⎈|default:N/A) root@k3s-s:~# kubectl top pod -A --sort-by='memory'

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system metrics-server-cdcc87586-pmsxc 7m 18Mi

kube-system coredns-7b98449c4-b49gg 3m 12Mi

kube-system local-path-provisioner-6795b5f9d8-vmfj2 1m 6Mi

(⎈|default:N/A) root@k3s-s:~# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 6m55s

# Check the kubeconfig location (/etc/rancher/k3s/k3s.yaml)

(⎈|default:N/A) root@k3s-s:~# kubectl get pod -v=6

I1012 08:40:53.183279 5319 loader.go:395] Config loaded from file: /etc/rancher/k3s/k3s.yaml

I1012 08:40:53.189356 5319 round_trippers.go:553] GET https://192.168.10.10:6443/api/v1/namespaces/default/pods?limit=500 200 OK in 2 milliseconds

No resources found in default namespace.

(⎈|default:N/A) root@k3s-s:~# cat /etc/rancher/k3s/k3s.yaml

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJkekNDQ...

server: https://192.168.10.10:6443

name: default

contexts:

- context:

cluster: default

user: default

name: default

current-context: default

kind: Config

preferences: {}

users:

- name: default

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJrVENDQVRl...

client-key-data: LS0tLS1CRUdJTiBFQyBQUklWQVRFIEtFWS0tLS0tCk1IY0NBUUVF...

(⎈|default:N/A) root@k3s-s:~# export | grep KUBECONFIG

declare -x KUBECONFIG="/etc/rancher/k3s/k3s.yaml"
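Note that the kubeconfig above points at https://192.168.10.10:6443, the --bind-address IP on eth1. A small sketch for pulling that value out of a kubeconfig file; kubeconfig_server is a hypothetical helper that does plain-text parsing and assumes the single-cluster layout shown above. For anything more complex, kubectl config view -o jsonpath='{.clusters[0].cluster.server}' is the sturdier option.

```shell
#!/usr/bin/env bash
# kubeconfig_server (hypothetical helper): print the first "server:" URL in a kubeconfig file.
# Plain-text parsing, good enough for the single-cluster file k3s writes.
kubeconfig_server() {
  awk '$1 == "server:" { print $2; exit }' "${1:-/etc/rancher/k3s/k3s.yaml}"
}

# Usage on k3s-s (not executed here):
#   kubeconfig_server "$KUBECONFIG"
```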

# Check network info: flannel CNI (vxlan mode), podCIDR

(⎈|default:N/A) root@k3s-s:~# ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:45:6e:4c brd ff:ff:ff:ff:ff:ff

altname enp2s0

altname ens160

inet 192.168.212.149/24 metric 100 brd 192.168.212.255 scope global dynamic eth0

valid_lft 1772sec preferred_lft 1772sec

inet6 fe80::20c:29ff:fe45:6e4c/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:45:6e:56 brd ff:ff:ff:ff:ff:ff

altname enp18s0

altname ens224

inet 192.168.10.10/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe45:6e56/64 scope link

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 56:31:fe:6a:d3:59 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::5431:feff:fe6a:d359/64 scope link

valid_lft forever preferred_lft forever

5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether e6:dd:41:9d:d1:68 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.1/24 brd 172.16.0.255 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::e4dd:41ff:fe9d:d168/64 scope link

valid_lft forever preferred_lft forever

6: vethdf6d46dd@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 3a:cd:bd:66:ef:17 brd ff:ff:ff:ff:ff:ff link-netns cni-33bd5679-f46c-0cde-a176-9dcf46d86f2d

inet6 fe80::38cd:bdff:fe66:ef17/64 scope link

valid_lft forever preferred_lft forever

7: veth04956c4a@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether fa:3d:3e:c5:39:bf brd ff:ff:ff:ff:ff:ff link-netns cni-239f5b99-3ae4-2678-156d-d5413405ec0e

inet6 fe80::f83d:3eff:fec5:39bf/64 scope link

valid_lft forever preferred_lft forever

8: vethc9a483fa@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default

link/ether 76:c0:20:68:d3:76 brd ff:ff:ff:ff:ff:ff link-netns cni-a022bd16-360a-6584-e9cb-30c94ba089ac

inet6 fe80::74c0:20ff:fe68:d376/64 scope link

valid_lft forever preferred_lft forever

(⎈|default:N/A) root@k3s-s:~# ip -c route

default via 192.168.212.2 dev eth0 proto dhcp src 192.168.212.149 metric 100

172.16.0.0/24 dev cni0 proto kernel scope link src 172.16.0.1

172.16.1.0/24 via 172.16.1.0 dev flannel.1 onlink

172.16.2.0/24 via 172.16.2.0 dev flannel.1 onlink

172.16.3.0/24 via 172.16.3.0 dev flannel.1 onlink

192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.10

192.168.212.0/24 dev eth0 proto kernel scope link src 192.168.212.149 metric 100

192.168.212.2 dev eth0 proto dhcp scope link src 192.168.212.149 metric 100

(⎈|default:N/A) root@k3s-s:~# cat /run/flannel/subnet.env

FLANNEL_NETWORK=172.16.0.0/16

FLANNEL_SUBNET=172.16.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true
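The 1450-byte MTU on flannel.1 and cni0, and in FLANNEL_MTU, matches the 1500-byte MTU of the physical interfaces (eth0 and eth1 are both 1500 here) minus the VXLAN encapsulation overhead. A worked sketch, assuming an IPv4 underlay with no VLAN tag or IP options; vxlan_mtu and flannel_env are hypothetical helpers.

```shell
#!/usr/bin/env bash
# vxlan_mtu (hypothetical helper): inner MTU left for pod traffic when packets are
# VXLAN-encapsulated over IPv4. Overhead = outer IPv4 (20) + outer UDP (8)
# + VXLAN header (8) + encapsulated inner Ethernet header (14) = 50 bytes.
vxlan_mtu() {
  local underlay_mtu="$1"
  echo $(( underlay_mtu - 20 - 8 - 8 - 14 ))
}

# flannel_env (hypothetical helper): print the value of KEY from a flannel subnet.env file.
flannel_env() {
  local key="$1" file="${2:-/run/flannel/subnet.env}"
  sed -n "s/^${key}=//p" "$file"
}

# Usage on a node (not executed here):
#   [ "$(flannel_env FLANNEL_MTU)" = "$(vxlan_mtu 1500)" ] && echo "FLANNEL_MTU matches VXLAN over a 1500-byte link"
```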

(⎈|default:N/A) root@k3s-s:~# kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}' ;echo

172.16.0.0/24 172.16.1.0/24 172.16.2.0/24 172.16.3.0/24

(⎈|default:N/A) root@k3s-s:~# kubectl describe node | grep -A3 Annotations

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.10.10

flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"56:31:fe:6a:d3:59"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

--

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.10.101

flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"16:7a:20:77:c6:8b"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

--

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.10.102

flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"86:97:b5:40:89:6c"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

--

Annotations: alpha.kubernetes.io/provided-node-ip: 192.168.10.103

flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"ee:41:03:3c:d8:73"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

(⎈|default:N/A) root@k3s-s:~# brctl show

bridge name bridge id STP enabled interfaces

cni0 8000.e6dd419dd168 no veth04956c4a

vethc9a483fa

vethdf6d46dd

# Check services and endpoints

(⎈|default:N/A) root@k3s-s:~# kubectl get svc,ep -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 17m

kube-system service/kube-dns ClusterIP 10.10.200.10 <none> 53/UDP,53/TCP,9153/TCP 17m

kube-system service/metrics-server ClusterIP 10.10.200.246 <none> 443/TCP 17m

NAMESPACE NAME ENDPOINTS AGE

default endpoints/kubernetes 192.168.10.10:6443 17m

kube-system endpoints/kube-dns 172.16.0.4:53,172.16.0.4:53,172.16.0.4:9153 17m

kube-system endpoints/metrics-server 172.16.0.2:10250 17m
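Both ClusterIPs above are fixed offsets into --service-cidr 10.10.200.0/24: the kubernetes Service has the first address (.1) and kube-dns the tenth (.10). The per-node podCIDRs are likewise consecutive /24 blocks of --cluster-cidr 172.16.0.0/16. The offset arithmetic, as a sketch; nth_ip is a hypothetical helper that treats the address as a 32-bit integer so carries spill into the next octet.

```shell
#!/usr/bin/env bash
# nth_ip (hypothetical helper): print the IPv4 address OFFSET positions after BASE.
nth_ip() {
  local base="$1" offset="$2" a b c d n
  IFS=. read -r a b c d <<<"$base"
  n=$(( (a << 24) + (b << 16) + (c << 8) + d + offset ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Examples matching the output above:
#   nth_ip 10.10.200.0 1     -> 10.10.200.1  (service/kubernetes)
#   nth_ip 10.10.200.0 10    -> 10.10.200.10 (service/kube-dns)
#   nth_ip 172.16.0.0 256    -> 172.16.1.0   (start of k3s-w1's podCIDR 172.16.1.0/24)
```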

# Check iptables rules

(⎈|default:N/A) root@k3s-s:~# iptables -t filter -S

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-N FLANNEL-FWD

-N KUBE-EXTERNAL-SERVICES

-N KUBE-FIREWALL

-N KUBE-FORWARD

-N KUBE-KUBELET-CANARY

-N KUBE-NODEPORTS

-N KUBE-NWPLCY-DEFAULT

-N KUBE-POD-FW-NIH7HIVVCXZNAHVI

-N KUBE-POD-FW-NOVWXJHIOSRJ56UA

-N KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-N KUBE-PROXY-CANARY

-N KUBE-PROXY-FIREWALL

-N KUBE-ROUTER-FORWARD

-N KUBE-ROUTER-INPUT

-N KUBE-ROUTER-OUTPUT

-N KUBE-SERVICES

-A INPUT -m comment --comment "kube-router netpol - 4IA2OSFRMVNDXBVV" -j KUBE-ROUTER-INPUT

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A INPUT -m comment --comment "KUBE-ROUTER rule to explicitly ACCEPT traffic that comply to network policies" -m mark --mark 0x20000/0x20000 -j ACCEPT

-A FORWARD -m comment --comment "kube-router netpol - TEMCG2JMHZYE7H7T" -j KUBE-ROUTER-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A FORWARD -m comment --comment "KUBE-ROUTER rule to explicitly ACCEPT traffic that comply to network policies" -m mark --mark 0x20000/0x20000 -j ACCEPT

-A FORWARD -m comment --comment "flanneld forward" -j FLANNEL-FWD

-A OUTPUT -m comment --comment "kube-router netpol - VEAAIY32XVBHCSCY" -j KUBE-ROUTER-OUTPUT

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A OUTPUT -m comment --comment "KUBE-ROUTER rule to explicitly ACCEPT traffic that comply to network policies" -m mark --mark 0x20000/0x20000 -j ACCEPT

-A FLANNEL-FWD -s 172.16.0.0/16 -m comment --comment "flanneld forward" -j ACCEPT

-A FLANNEL-FWD -d 172.16.0.0/16 -m comment --comment "flanneld forward" -j ACCEPT

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-NWPLCY-DEFAULT -p icmp -m comment --comment "allow icmp echo requests" -m icmp --icmp-type 8 -j ACCEPT

-A KUBE-NWPLCY-DEFAULT -p icmp -m comment --comment "allow icmp destination unreachable messages" -m icmp --icmp-type 3 -j ACCEPT

-A KUBE-NWPLCY-DEFAULT -p icmp -m comment --comment "allow icmp time exceeded messages" -m icmp --icmp-type 11 -j ACCEPT

-A KUBE-NWPLCY-DEFAULT -m comment --comment "rule to mark traffic matching a network policy" -j MARK --set-xmark 0x10000/0x10000

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -m comment --comment "rule for stateful firewall for pod" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -m comment --comment "rule to drop invalid state for pod" -m conntrack --ctstate INVALID -j DROP

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -d 172.16.0.2/32 -m comment --comment "rule to permit the traffic traffic to pods when source is the pod\'s local node" -m addrtype --src-type LOCAL -j ACCEPT

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -s 172.16.0.2/32 -m comment --comment "run through default egress network policy chain" -j KUBE-NWPLCY-DEFAULT

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -d 172.16.0.2/32 -m comment --comment "run through default ingress network policy chain" -j KUBE-NWPLCY-DEFAULT

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -m comment --comment "rule to log dropped traffic POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system" -m mark ! --mark 0x10000/0x10000 -m limit --limit 10/min --limit-burst 10 -j NFLOG --nflog-group 100

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -m comment --comment "rule to REJECT traffic destined for POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system" -m mark ! --mark 0x10000/0x10000 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -j MARK --set-xmark 0x0/0x10000

-A KUBE-POD-FW-NIH7HIVVCXZNAHVI -m comment --comment "set mark to ACCEPT traffic that comply to network policies" -j MARK --set-xmark 0x20000/0x20000

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -m comment --comment "rule for stateful firewall for pod" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -m comment --comment "rule to drop invalid state for pod" -m conntrack --ctstate INVALID -j DROP

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -d 172.16.0.4/32 -m comment --comment "rule to permit the traffic traffic to pods when source is the pod\'s local node" -m addrtype --src-type LOCAL -j ACCEPT

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -s 172.16.0.4/32 -m comment --comment "run through default egress network policy chain" -j KUBE-NWPLCY-DEFAULT

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -d 172.16.0.4/32 -m comment --comment "run through default ingress network policy chain" -j KUBE-NWPLCY-DEFAULT

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -m comment --comment "rule to log dropped traffic POD name:coredns-7b98449c4-b49gg namespace: kube-system" -m mark ! --mark 0x10000/0x10000 -m limit --limit 10/min --limit-burst 10 -j NFLOG --nflog-group 100

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -m comment --comment "rule to REJECT traffic destined for POD name:coredns-7b98449c4-b49gg namespace: kube-system" -m mark ! --mark 0x10000/0x10000 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -j MARK --set-xmark 0x0/0x10000

-A KUBE-POD-FW-NOVWXJHIOSRJ56UA -m comment --comment "set mark to ACCEPT traffic that comply to network policies" -j MARK --set-xmark 0x20000/0x20000

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -m comment --comment "rule for stateful firewall for pod" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -m comment --comment "rule to drop invalid state for pod" -m conntrack --ctstate INVALID -j DROP

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -d 172.16.0.3/32 -m comment --comment "rule to permit the traffic traffic to pods when source is the pod\'s local node" -m addrtype --src-type LOCAL -j ACCEPT

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -s 172.16.0.3/32 -m comment --comment "run through default egress network policy chain" -j KUBE-NWPLCY-DEFAULT

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -d 172.16.0.3/32 -m comment --comment "run through default ingress network policy chain" -j KUBE-NWPLCY-DEFAULT

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -m comment --comment "rule to log dropped traffic POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system" -m mark ! --mark 0x10000/0x10000 -m limit --limit 10/min --limit-burst 10 -j NFLOG --nflog-group 100

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -m comment --comment "rule to REJECT traffic destined for POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system" -m mark ! --mark 0x10000/0x10000 -j REJECT --reject-with icmp-port-unreachable

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -j MARK --set-xmark 0x0/0x10000

-A KUBE-POD-FW-RAB3WYUKRP4RKZ3S -m comment --comment "set mark to ACCEPT traffic that comply to network policies" -j MARK --set-xmark 0x20000/0x20000

-A KUBE-ROUTER-FORWARD -d 172.16.0.4/32 -m comment --comment "rule to jump traffic destined to POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-FORWARD -d 172.16.0.4/32 -m physdev --physdev-is-bridged -m comment --comment "rule to jump traffic destined to POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-FORWARD -s 172.16.0.4/32 -m comment --comment "rule to jump traffic from POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-FORWARD -s 172.16.0.4/32 -m physdev --physdev-is-bridged -m comment --comment "rule to jump traffic from POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-FORWARD -d 172.16.0.2/32 -m comment --comment "rule to jump traffic destined to POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-FORWARD -d 172.16.0.2/32 -m physdev --physdev-is-bridged -m comment --comment "rule to jump traffic destined to POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-FORWARD -s 172.16.0.2/32 -m comment --comment "rule to jump traffic from POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-FORWARD -s 172.16.0.2/32 -m physdev --physdev-is-bridged -m comment --comment "rule to jump traffic from POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-FORWARD -d 172.16.0.3/32 -m comment --comment "rule to jump traffic destined to POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-A KUBE-ROUTER-FORWARD -d 172.16.0.3/32 -m physdev --physdev-is-bridged -m comment --comment "rule to jump traffic destined to POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-A KUBE-ROUTER-FORWARD -s 172.16.0.3/32 -m comment --comment "rule to jump traffic from POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-A KUBE-ROUTER-FORWARD -s 172.16.0.3/32 -m physdev --physdev-is-bridged -m comment --comment "rule to jump traffic from POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-A KUBE-ROUTER-INPUT -d 10.10.200.0/24 -m comment --comment "allow traffic to primary/secondary cluster IP range - VBVALVEBPE4N7FZN" -j RETURN

-A KUBE-ROUTER-INPUT -p tcp -m comment --comment "allow LOCAL TCP traffic to node ports - LR7XO7NXDBGQJD2M" -m addrtype --dst-type LOCAL -m multiport --dports 30000:32767 -j RETURN

-A KUBE-ROUTER-INPUT -p udp -m comment --comment "allow LOCAL UDP traffic to node ports - 76UCBPIZNGJNWNUZ" -m addrtype --dst-type LOCAL -m multiport --dports 30000:32767 -j RETURN

-A KUBE-ROUTER-INPUT -s 172.16.0.4/32 -m comment --comment "rule to jump traffic from POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-INPUT -s 172.16.0.2/32 -m comment --comment "rule to jump traffic from POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-INPUT -s 172.16.0.3/32 -m comment --comment "rule to jump traffic from POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-A KUBE-ROUTER-OUTPUT -d 172.16.0.4/32 -m comment --comment "rule to jump traffic destined to POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-OUTPUT -s 172.16.0.4/32 -m comment --comment "rule to jump traffic from POD name:coredns-7b98449c4-b49gg namespace: kube-system to chain KUBE-POD-FW-NOVWXJHIOSRJ56UA" -j KUBE-POD-FW-NOVWXJHIOSRJ56UA

-A KUBE-ROUTER-OUTPUT -d 172.16.0.2/32 -m comment --comment "rule to jump traffic destined to POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-OUTPUT -s 172.16.0.2/32 -m comment --comment "rule to jump traffic from POD name:metrics-server-cdcc87586-pmsxc namespace: kube-system to chain KUBE-POD-FW-NIH7HIVVCXZNAHVI" -j KUBE-POD-FW-NIH7HIVVCXZNAHVI

-A KUBE-ROUTER-OUTPUT -d 172.16.0.3/32 -m comment --comment "rule to jump traffic destined to POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

-A KUBE-ROUTER-OUTPUT -s 172.16.0.3/32 -m comment --comment "rule to jump traffic from POD name:local-path-provisioner-6795b5f9d8-vmfj2 namespace: kube-system to chain KUBE-POD-FW-RAB3WYUKRP4RKZ3S" -j KUBE-POD-FW-RAB3WYUKRP4RKZ3S

(⎈|default:N/A) root@k3s-s:~# iptables -t nat -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N FLANNEL-POSTRTG

-N KUBE-KUBELET-CANARY

-N KUBE-MARK-MASQ

-N KUBE-NODEPORTS

-N KUBE-POSTROUTING

-N KUBE-PROXY-CANARY

-N KUBE-SEP-2MUYUGIAGQGQPV7U

-N KUBE-SEP-ANIYGV7PXZAM24GG

-N KUBE-SEP-FKILGDVKESVSEHEB

-N KUBE-SEP-JKZRJH4QOPCEEICC

-N KUBE-SEP-JMGC46K7TWUGKBRL

-N KUBE-SERVICES

-N KUBE-SVC-ERIFXISQEP7F7OF4

-N KUBE-SVC-JD5MR3NA4I4DYORP

-N KUBE-SVC-NPX46M4PTMTKRN6Y

-N KUBE-SVC-TCOU7JCQXEZGVUNU

-N KUBE-SVC-Z4ANX4WAEWEBLCTM

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -m comment --comment "flanneld masq" -j FLANNEL-POSTRTG

-A FLANNEL-POSTRTG -m mark --mark 0x4000/0x4000 -m comment --comment "flanneld masq" -j RETURN

-A FLANNEL-POSTRTG -s 172.16.0.0/24 -d 172.16.0.0/16 -m comment --comment "flanneld masq" -j RETURN

-A FLANNEL-POSTRTG -s 172.16.0.0/16 -d 172.16.0.0/24 -m comment --comment "flanneld masq" -j RETURN

-A FLANNEL-POSTRTG ! -s 172.16.0.0/16 -d 172.16.0.0/24 -m comment --comment "flanneld masq" -j RETURN

-A FLANNEL-POSTRTG -s 172.16.0.0/16 ! -d 224.0.0.0/4 -m comment --comment "flanneld masq" -j MASQUERADE --random-fully

-A FLANNEL-POSTRTG ! -s 172.16.0.0/16 -d 172.16.0.0/16 -m comment --comment "flanneld masq" -j MASQUERADE --random-fully

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-2MUYUGIAGQGQPV7U -s 172.16.0.2/32 -m comment --comment "kube-system/metrics-server:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-2MUYUGIAGQGQPV7U -p tcp -m comment --comment "kube-system/metrics-server:https" -m tcp -j DNAT --to-destination 172.16.0.2:10250

-A KUBE-SEP-ANIYGV7PXZAM24GG -s 172.16.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-ANIYGV7PXZAM24GG -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 172.16.0.4:53

-A KUBE-SEP-FKILGDVKESVSEHEB -s 192.168.10.10/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-FKILGDVKESVSEHEB -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.10.10:6443

-A KUBE-SEP-JKZRJH4QOPCEEICC -s 172.16.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-JKZRJH4QOPCEEICC -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 172.16.0.4:9153

-A KUBE-SEP-JMGC46K7TWUGKBRL -s 172.16.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-JMGC46K7TWUGKBRL -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 172.16.0.4:53

-A KUBE-SERVICES -d 10.10.200.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.10.200.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.10.200.246/32 -p tcp -m comment --comment "kube-system/metrics-server:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-Z4ANX4WAEWEBLCTM

-A KUBE-SERVICES -d 10.10.200.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -d 10.10.200.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 172.16.0.0/16 -d 10.10.200.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 172.16.0.4:53" -j KUBE-SEP-JMGC46K7TWUGKBRL

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 172.16.0.0/16 -d 10.10.200.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 172.16.0.4:9153" -j KUBE-SEP-JKZRJH4QOPCEEICC

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 172.16.0.0/16 -d 10.10.200.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.10.10:6443" -j KUBE-SEP-FKILGDVKESVSEHEB

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 172.16.0.0/16 -d 10.10.200.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 172.16.0.4:53" -j KUBE-SEP-ANIYGV7PXZAM24GG

-A KUBE-SVC-Z4ANX4WAEWEBLCTM ! -s 172.16.0.0/16 -d 10.10.200.246/32 -p tcp -m comment --comment "kube-system/metrics-server:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-Z4ANX4WAEWEBLCTM -m comment --comment "kube-system/metrics-server:https -> 172.16.0.2:10250" -j KUBE-SEP-2MUYUGIAGQGQPV7U

(⎈|default:N/A) root@k3s-s:~# iptables -t mangle -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N KUBE-IPTABLES-HINT

-N KUBE-KUBELET-CANARY

-N KUBE-PROXY-CANARY

# Check the TCP listening ports

(⎈|default:N/A) root@k3s-s:~# ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=834,fd=14))

LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=1029,fd=3))

LISTEN 0 4096 127.0.0.1:10010 0.0.0.0:* users:(("containerd",pid=3282,fd=12))

LISTEN 0 4096 127.0.0.1:10248 0.0.0.0:* users:(("k3s-server",pid=3266,fd=167))

LISTEN 0 4096 127.0.0.1:10249 0.0.0.0:* users:(("k3s-server",pid=3266,fd=165))

LISTEN 0 4096 192.168.10.10:10250 0.0.0.0:* users:(("k3s-server",pid=3266,fd=163))

LISTEN 0 4096 127.0.0.1:6443 0.0.0.0:* users:(("k3s-server",pid=3266,fd=14))

LISTEN 0 4096 192.168.10.10:6443 0.0.0.0:* users:(("k3s-server",pid=3266,fd=13))

LISTEN 0 4096 127.0.0.1:6444 0.0.0.0:* users:(("k3s-server",pid=3266,fd=16))

LISTEN 0 4096 127.0.0.1:10256 0.0.0.0:* users:(("k3s-server",pid=3266,fd=155))

LISTEN 0 4096 127.0.0.1:10257 0.0.0.0:* users:(("k3s-server",pid=3266,fd=179))

LISTEN 0 4096 127.0.0.1:10258 0.0.0.0:* users:(("k3s-server",pid=3266,fd=189))

LISTEN 0 4096 127.0.0.1:10259 0.0.0.0:* users:(("k3s-server",pid=3266,fd=194))

LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=1029,fd=4))

LISTEN 0 4096 [::1]:6443 [::]:* users:(("k3s-server",pid=3266,fd=15))

Service/LoadBalancer - using the K3s software LB (ServiceLB)

- echo-pod.yaml: creates the Deployment.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-echo
spec:
  replicas: 3
  selector:
    matchLabels:
      app: deploy-websrv
  template:
    metadata:
      labels:
        app: deploy-websrv
    spec:
      containers:
      - name: echo-server
        image: jmalloc/echo-server  # k8s.gcr.io/echoserver:1.5 does not support arm
        ports:
        - containerPort: 8080

Verification

# (Optional) Terminal 1
watch -d 'kubectl get pods,svc,ep -o wide'

# Create the pods
curl -s -O https://raw.githubusercontent.com/gasida/DKOS/main/5/echo-pod.yaml
kubectl apply -f echo-pod.yaml

# curl each Pod IP and check the output
(⎈|default:N/A) root@k3s-s:~# for pod in $(kubectl get pod -o wide -l app=deploy-websrv |awk 'NR>1 {print $6}'); do curl -s $pod:8080 | egrep '(Host|served)' ; done
Request served by deploy-echo-58d6fb68db-9jn4l
Host: 172.16.3.13:8080
Request served by deploy-echo-58d6fb68db-g9gb2
Host: 172.16.1.15:8080
Request served by deploy-echo-58d6fb68db-jnqz5
Host: 172.16.2.14:8080

# Follow the Pod logs in real time and check the output
(⎈|default:N/A) root@k3s-s:~# kubectl logs -l app=deploy-websrv -f
Echo server listening on port 8080.
172.16.0.0:51698 | GET /
172.16.0.0:51710 | GET /
172.16.0.0:51722 | GET /
172.16.0.0:44636 | GET /
172.16.0.0:52342 | GET /
Echo server listening on port 8080.
172.16.0.0:54306 | GET /
172.16.0.0:54308 | GET /
172.16.0.0:54318 | GET /
172.16.0.0:49704 | GET /
172.16.0.0:46840 | GET /
Echo server listening on port 8080.
172.16.0.0:38742 | GET /
172.16.0.0:38754 | GET /
172.16.0.0:38762 | GET /
172.16.0.0:42538 | GET /
172.16.0.0:40906 | GET /

When a LoadBalancer Service is created, K3s creates a separate DaemonSet (pods) that implements the load-balancer behavior.

apiVersion: v1
kind: Service
metadata:
  name: k3s-svc-lb
spec:
  ports:
  - name: k3slb-webport
    port: 8000
    targetPort: 8080
    nodePort: 31000
  selector:
    app: deploy-websrv
  type: LoadBalancer

# Create
(⎈|default:N/A) root@k3s-s:~# curl -s -O https://raw.githubusercontent.com/gasida/DKOS/main/5/svc-lb.yaml
(⎈|default:N/A) root@k3s-s:~# kubectl apply -f svc-lb.yaml
service/k3s-svc-lb created

# Check the Service
(⎈|default:N/A) root@k3s-s:~# kubectl get svc
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP                                                   PORT(S)          AGE
k3s-svc-lb   LoadBalancer   10.10.200.145   192.168.10.10,192.168.10.101,192.168.10.102,192.168.10.103   8000:31000/TCP   8s
kubernetes   ClusterIP      10.10.200.1     <none>                                                        443/TCP          104m

# Creating the LB Service also creates the pods that handle it (namespace kube-system)
(⎈|default:N/A) root@k3s-s:~# kubectl get pod -o wide -l app=svclb-k3s-svc-lb-e27edb9d -n kube-system
NAME                              READY   STATUS    RESTARTS   AGE     IP            NODE     NOMINATED NODE   READINESS GATES
svclb-k3s-svc-lb-e27edb9d-5ltrw   1/1     Running   0          4m32s   172.16.1.16   k3s-w1   <none>           <none>
svclb-k3s-svc-lb-e27edb9d-cg4gt   1/1     Running   0          4m32s   172.16.0.5    k3s-s    <none>           <none>
svclb-k3s-svc-lb-e27edb9d-ckj5s   1/1     Running   0          4m32s   172.16.2.15   k3s-w2   <none>           <none>
svclb-k3s-svc-lb-e27edb9d-fz6ll   1/1     Running   0          4m32s   172.16.3.14   k3s-w3   <none>           <none>

# Access test via the NodePort (31000) on every node
(⎈|default:N/A) root@k3s-s:~# for node in $(kubectl get node -o wide |awk 'NR>1 {print $6}'); do curl -s $node:31000 | grep Host ; done
Host: 192.168.10.10:31000
Host: 192.168.10.101:31000
Host: 192.168.10.102:31000
Host: 192.168.10.103:31000

# The k3s LB port (8000) also works on every node, just like a NodePort > same result (each node forwards to its own pod or to a pod on another node, with SNAT)
(⎈|default:N/A) root@k3s-s:~# for node in $(kubectl get node -o wide |awk 'NR>1 {print $6}'); do curl -s $node:8000 | grep Host ; done
Host: 192.168.10.10:8000
Host: 192.168.10.101:8000
Host: 192.168.10.102:8000
Host: 192.168.10.103:8000

# Next, try accessing k3s NodeIP:port from an external client

3. Ingress

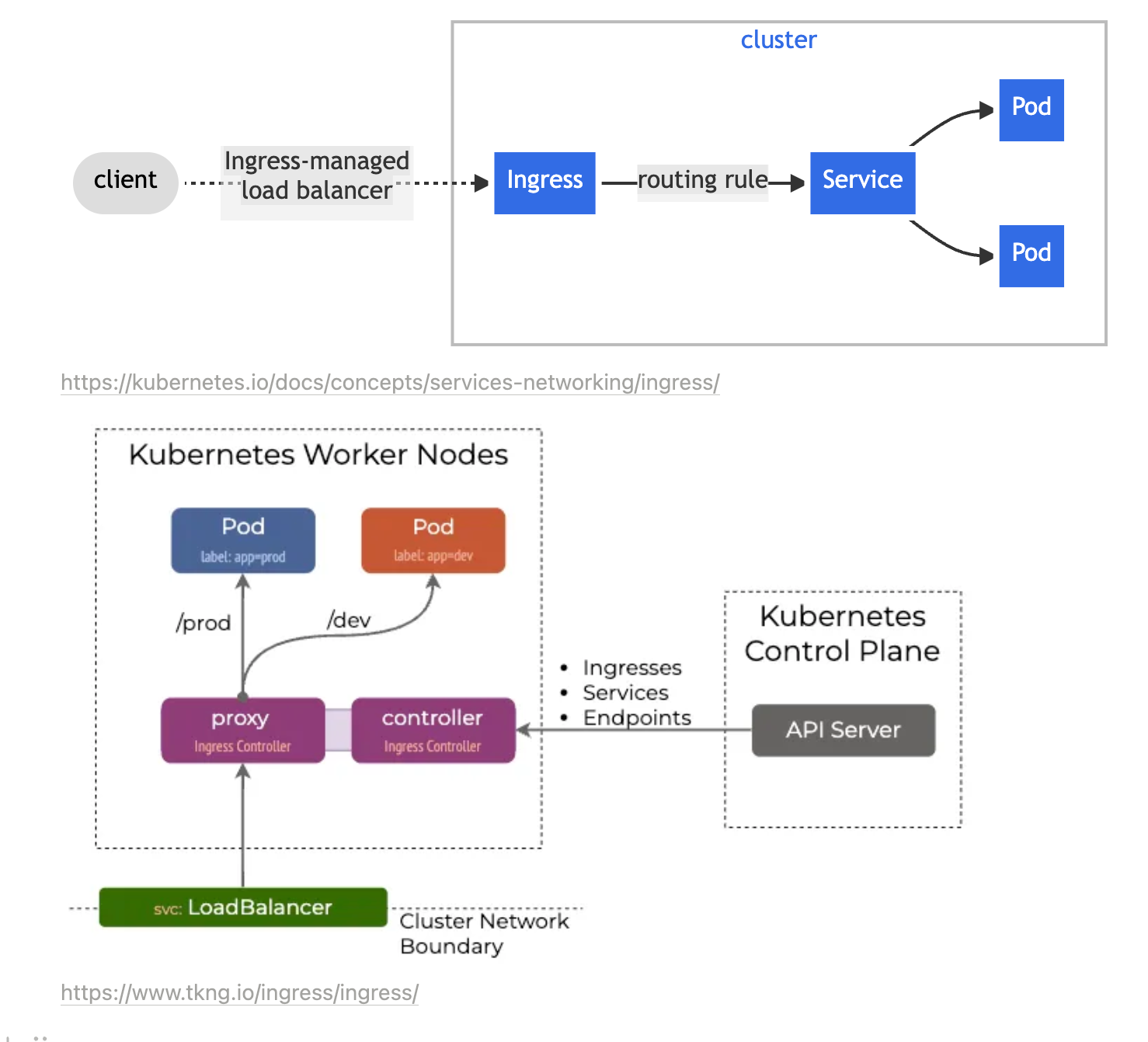

What is Ingress?

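Ingress is a Kubernetes networking component that routes external traffic (HTTP and HTTPS requests) to Services in the cluster, making services that run inside the cluster reachable from outside. Because Ingress supports a variety of routing rules, a single IP can carry HTTP/HTTPS traffic for multiple Services.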

Key concepts of Ingress

- Ingress resource:

An Ingress is a Kubernetes API object that defines how incoming external requests are routed to specific Services. It can express a variety of network rules, such as host-based and path-based routing and TLS settings.

- Ingress controller:

An Ingress resource does nothing on its own; it needs a controller that implements it. The Ingress Controller applies the rules defined in Ingress resources and actually manages the traffic, acting as the proxy inside the cluster that receives and routes requests. Well-known Ingress Controllers include the NGINX Ingress Controller, Traefik, HAProxy, and the AWS ALB Ingress Controller.

- Service vs. Ingress:

A Service handles communication between Pods inside the cluster or, with the NodePort and LoadBalancer types, connects external traffic to a Service. Ingress enables more sophisticated external traffic management. For example, with an Ingress a single external IP can route traffic to multiple Services, which makes per-domain routing, path-based routing, and TLS certificate termination possible.

Key features of Ingress

- Host-based routing:

Traffic is routed to specific Services based on the requested domain. For example, traffic for example.com goes to Service A, while traffic for api.example.com goes to Service B.

Example:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
  - host: example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: service-a
            port:
              number: 80
  - host: api.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: service-b
            port:
              number: 80

- Path-based routing:

Traffic is routed to different Services based on the URL path. For example, traffic starting with /api/ goes to Service A, while traffic starting with /blog/ goes to Service B.

Example:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
  - host: example.com
    http:
      paths:
      - path: /api
        pathType: Prefix
        backend:
          service:
            name: service-a
            port:
              number: 80
      - path: /blog
        pathType: Prefix
        backend:
          service:
            name: service-b
            port:
              number: 80

- TLS/SSL:

Ingress can terminate HTTPS connections using TLS. The certificate and key are stored in a Kubernetes Secret, which the Ingress then references.

Example:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-example-ingress
spec:
  tls:
  - hosts:
    - example.com
    secretName: example-tls-secret
  rules:
  - host: example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: service-a
            port:
              number: 80

- Default backend:

You can also define a default backend Service that handles requests matching none of the defined routing rules.
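The default backend is set with `spec.defaultBackend`. The sketch below assumes a hypothetical Service named `default-svc` on port 80. Requests that match no rule are sent to it, for example example.com/blog or any other host. `default-backend-example` is also a hypothetical name.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: default-backend-example   # hypothetical name
spec:
  # Requests that match none of the rules below fall through to this Service
  defaultBackend:
    service:
      name: default-svc           # hypothetical Service
      port:
        number: 80
  rules:
  - host: example.com
    http:
      paths:
      - path: /api
        pathType: Prefix
        backend:
          service:
            name: service-a
            port:
              number: 80
```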

Installing an Ingress controller

Ingress resources only take effect when an Ingress Controller is running in the cluster. For example, the NGINX Ingress Controller can be installed with the following command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml

Other controllers (e.g., Traefik, AWS ALB) can be used as well; choose the one that fits your cluster's environment and requirements.

Why use Ingress

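- Flexible traffic management: traffic can easily be handled by host and by path.

- TLS support: HTTPS is easy to set up.

- Multiple Services behind a single IP: requests for many Services are handled through one IP, which uses network resources efficiently.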

In short, Ingress is a powerful tool for managing external traffic in Kubernetes.

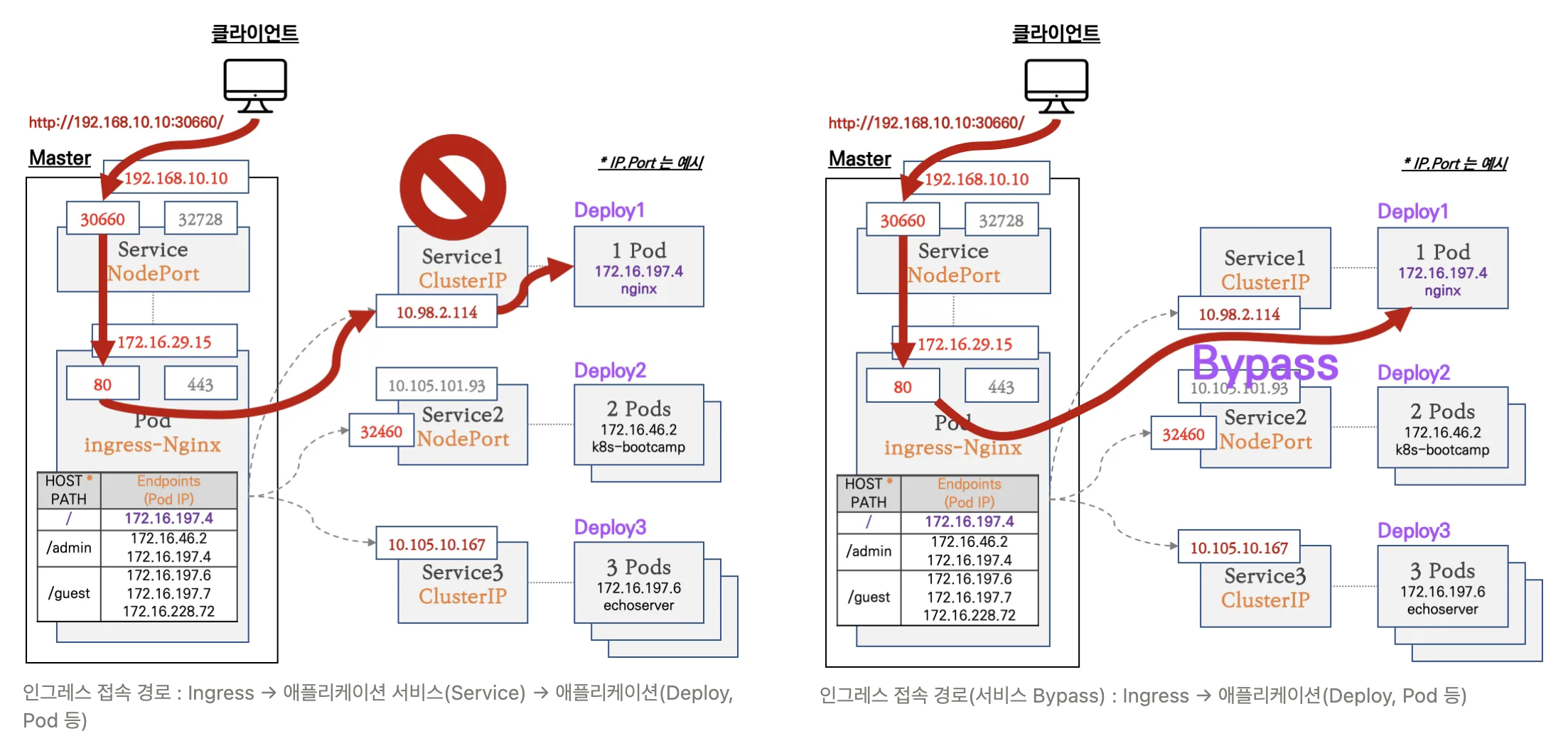

Traffic flow through Ingress

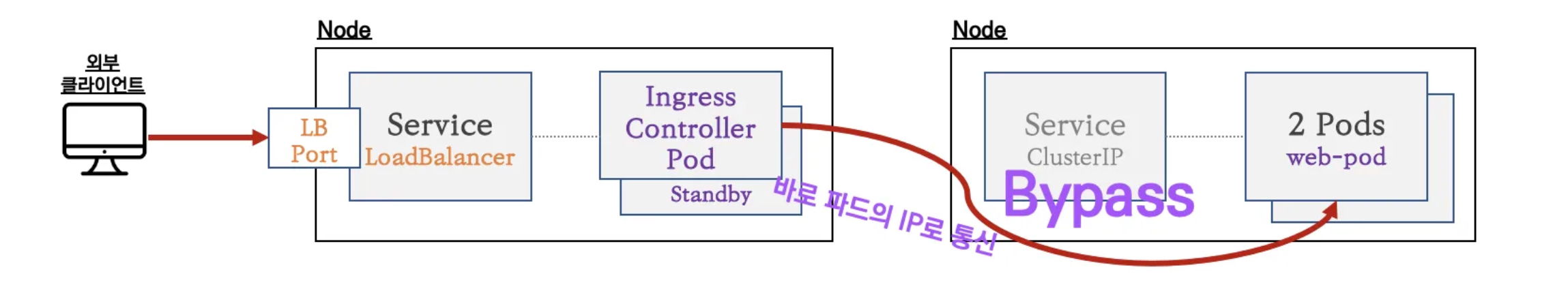

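- With the NGINX Ingress Controller: a request from outside to the Ingress first arrives at the NGINX Ingress Controller pod, which then communicates directly with the application pods' IPs.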
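Sending traffic directly to Pod IPs is the ingress-nginx default: the controller builds its NGINX upstreams from the Service's endpoints, not from its ClusterIP. For comparison, the `nginx.ingress.kubernetes.io/service-upstream` annotation makes NGINX use a single upstream, the Service ClusterIP and port, so kube-proxy does the load balancing instead. This is a sketch only; `service-upstream-example` and `service-a` are hypothetical names.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: service-upstream-example      # hypothetical name
  annotations:
    # Annotation absent (default): NGINX proxies straight to the Pod IPs
    # "true": NGINX proxies to the Service ClusterIP:port instead
    nginx.ingress.kubernetes.io/service-upstream: "true"
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: service-a           # hypothetical Service
            port:
              number: 80
```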

Installing the NGINX Ingress Controller

- Ingress: exposes HTTP/HTTPS Services inside the cluster to the outside

- Create the Ingress-Nginx controller (ArtifactHub release)

# Create the Ingress-Nginx controller
cat <<EOT> ingress-nginx-values.yaml
controller:
  service:
    type: NodePort
    nodePorts:
      http: 30080
      https: 30443
  nodeSelector:
    kubernetes.io/hostname: "k3s-s"
  metrics:
    enabled: true
  serviceMonitor:
    enabled: true
EOT

(⎈|default:N/A) root@k3s-s:~# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /etc/rancher/k3s/k3s.yaml
"ingress-nginx" has been added to your repositories
(⎈|default:N/A) root@k3s-s:~# helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "ingress-nginx" chart repository
Update Complete. ⎈Happy Helming!⎈
(⎈|default:N/A) root@k3s-s:~# kubectl create ns ingress
namespace/ingress created
(⎈|default:N/A) root@k3s-s:~# helm install ingress-nginx ingress-nginx/ingress-nginx -f ingress-nginx-values.yaml --namespace ingress --version 4.11.2
NAME: ingress-nginx
LAST DEPLOYED: Sat Oct 12 11:18:59 2024
NAMESPACE: ingress
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
The ingress-nginx controller has been installed.
Get the application URL by running these commands:
  export HTTP_NODE_PORT=30080
  export HTTPS_NODE_PORT=30443
  export NODE_IP="$(kubectl get nodes --output jsonpath="{.items[0].status.addresses[1].address}")"

  echo "Visit http://${NODE_IP}:${HTTP_NODE_PORT} to access your application via HTTP."
  echo "Visit https://${NODE_IP}:${HTTPS_NODE_PORT} to access your application via HTTPS."

An example Ingress that makes use of the controller:
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: example
    namespace: foo
  spec:
    ingressClassName: nginx
    rules:
      - host: www.example.com
        http:
          paths:
            - pathType: Prefix
              backend:
                service:
                  name: exampleService
                  port:
                    number: 80
              path: /
    # This section is only required if TLS is to be enabled for the Ingress
    tls:
      - hosts:
        - www.example.com
        secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

  apiVersion: v1
  kind: Secret
  metadata:
    name: example-tls
    namespace: foo
  data:
    tls.crt: <base64 encoded cert>
    tls.key: <base64 encoded key>
  type: kubernetes.io/tls

# Verify
(⎈|default:N/A) root@k3s-s:~# kubectl get all -n ingress
NAME                                           READY   STATUS    RESTARTS   AGE
pod/ingress-nginx-controller-979fc89cf-lhzhn   1/1     Running   0          6m57s

NAME                                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             NodePort    10.10.200.236   <none>        80:30080/TCP,443:30443/TCP   6m57s
service/ingress-nginx-controller-admission   ClusterIP   10.10.200.201   <none>        443/TCP                      6m57s
service/ingress-nginx-controller-metrics     ClusterIP   10.10.200.41    <none>        10254/TCP                    6m57s

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ingress-nginx-controller   1/1     1            1           6m57s

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/ingress-nginx-controller-979fc89cf   1         1         1       6m57s

(⎈|default:N/A) root@k3s-s:~# kc describe svc -n ingress ingress-nginx-controller
Name:                     ingress-nginx-controller
Namespace:                ingress
Labels:                   app.kubernetes.io/component=controller
                          app.kubernetes.io/instance=ingress-nginx
                          app.kubernetes.io/managed-by=Helm
                          app.kubernetes.io/name=ingress-nginx
                          app.kubernetes.io/part-of=ingress-nginx
                          app.kubernetes.io/version=1.11.2
                          helm.sh/chart=ingress-nginx-4.11.2
Annotations:              meta.helm.sh/release-name: ingress-nginx
                          meta.helm.sh/release-namespace: ingress
Selector:                 app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
Type:                     NodePort
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.10.200.236
IPs:                      10.10.200.236
Port:                     http  80/TCP
TargetPort:               http/TCP
NodePort:                 http  30080/TCP
Endpoints:                172.16.0.6:80
Port:                     https  443/TCP
TargetPort:               https/TCP
NodePort:                 https  30443/TCP
Endpoints:                172.16.0.6:443
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>

# Set externalTrafficPolicy
(⎈|default:N/A) root@k3s-s:~# kubectl patch svc -n ingress ingress-nginx-controller -p '{"spec":{"externalTrafficPolicy": "Local"}}'
service/ingress-nginx-controller patched

# Check the default nginx conf file
(⎈|default:N/A) root@k3s-s:~# kc describe cm -n ingress ingress-nginx-controller
Name:         ingress-nginx-controller
Namespace:    ingress
Labels:       app.kubernetes.io/component=controller
              app.kubernetes.io/instance=ingress-nginx
              app.kubernetes.io/managed-by=Helm
              app.kubernetes.io/name=ingress-nginx
              app.kubernetes.io/part-of=ingress-nginx
              app.kubernetes.io/version=1.11.2
              helm.sh/chart=ingress-nginx-4.11.2
Annotations:  meta.helm.sh/release-name: ingress-nginx
              meta.helm.sh/release-namespace: ingress

Data
====
allow-snippet-annotations:
----
false

BinaryData
====

Events:
  Type    Reason  Age    From                      Message
  ----    ------  ----   ----                      -------
  Normal  CREATE  9m47s  nginx-ingress-controller  ConfigMap ingress/ingress-nginx-controller

kubectl exec deploy/ingress-nginx-controller -n ingress -it -- cat /etc/nginx/nginx.conf
# Configuration checksum: 14558602868788752933
# setup custom paths that do not require root access
pid /tmp/nginx/nginx.pid;
daemon off;
worker_processes 2;
worker_rlimit_nofile 1047552;
worker_shutdown_timeout 240s ;
events {
    multi_accept        on;
    worker_connections  16384;
    use                 epoll;
}
http {
    lua_package_path "/etc/nginx/lua/?.lua;;";
    lua_shared_dict balancer_ewma 10M;
    lua_shared_dict balancer_ewma_last_touched_at 10M;
    lua_shared_dict balancer_ewma_locks 1M;
    lua_shared_dict certificate_data 20M;
    lua_shared_dict certificate_servers 5M;
    lua_shared_dict configuration_data 20M;
    lua_shared_dict global_throttle_cache 10M;
    lua_shared_dict ocsp_response_cache 5M;
    ...

# Related resources: pod (Nginx server), services, deployment, replicaset, configmap, role, clusterrole, service account, etc.
(⎈|default:N/A) root@k3s-s:~# kubectl get all,sa,cm,secret,roles -n ingress
NAME                                           READY   STATUS    RESTARTS   AGE
pod/ingress-nginx-controller-979fc89cf-lhzhn   1/1     Running   0          15m

NAME                                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             NodePort    10.10.200.236   <none>        80:30080/TCP,443:30443/TCP   15m
service/ingress-nginx-controller-admission   ClusterIP   10.10.200.201   <none>        443/TCP                      15m
service/ingress-nginx-controller-metrics     ClusterIP   10.10.200.41    <none>        10254/TCP                    15m

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ingress-nginx-controller   1/1     1            1           15m

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/ingress-nginx-controller-979fc89cf   1         1         1       15m

NAME                           SECRETS   AGE
serviceaccount/default         0         16m
serviceaccount/ingress-nginx   0         15m

NAME                                 DATA   AGE
configmap/ingress-nginx-controller   1      15m
configmap/kube-root-ca.crt           1      16m

NAME                                         TYPE                 DATA   AGE
secret/ingress-nginx-admission               Opaque               3      16m
secret/sh.helm.release.v1.ingress-nginx.v1   helm.sh/release.v1   1      16m

NAME                                           CREATED AT
role.rbac.authorization.k8s.io/ingress-nginx   2024-10-12T02:19:08Z

(⎈|default:N/A) root@k3s-s:~# kc describe clusterroles ingress-nginx
Name:         ingress-nginx
Labels:       app.kubernetes.io/instance=ingress-nginx
              app.kubernetes.io/managed-by=Helm
              app.kubernetes.io/name=ingress-nginx
              app.kubernetes.io/part-of=ingress-nginx
              app.kubernetes.io/version=1.11.2
              helm.sh/chart=ingress-nginx-4.11.2
Annotations:  meta.helm.sh/release-name: ingress-nginx
              meta.helm.sh/release-namespace: ingress
PolicyRule:
  Resources                           Non-Resource URLs  Resource Names  Verbs
  ---------                           -----------------  --------------  -----
  events                              []                 []              [create patch]
  services                            []                 []              [get list watch]
  ingressclasses.networking.k8s.io    []                 []              [get list watch]
  ingresses.networking.k8s.io         []                 []              [get list watch]
  nodes                               []                 []              [list watch get]
  endpointslices.discovery.k8s.io     []                 []              [list watch get]
  configmaps                          []                 []              [list watch]
  endpoints                           []                 []              [list watch]
  namespaces                          []                 []              [list watch]
  pods                                []                 []              [list watch]
  secrets                             []                 []              [list watch]
  leases.coordination.k8s.io          []                 []              [list watch]
  ingresses.networking.k8s.io/status  []                 []              [update]

(⎈|default:N/A) root@k3s-s:~# kubectl get pod,svc,ep -n ingress -o wide -l app.kubernetes.io/component=controller
NAME                                           READY   STATUS    RESTARTS   AGE   IP           NODE    NOMINATED NODE   READINESS GATES
pod/ingress-nginx-controller-979fc89cf-lhzhn   1/1     Running   0          21m   172.16.0.6   k3s-s   <none>           <none>

NAME                                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE   SELECTOR
service/ingress-nginx-controller             NodePort    10.10.200.236   <none>        80:30080/TCP,443:30443/TCP   21m   app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
service/ingress-nginx-controller-admission   ClusterIP   10.10.200.201   <none>        443/TCP                      21m   app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
service/ingress-nginx-controller-metrics     ClusterIP   10.10.200.41    <none>        10254/TCP                    21m   app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

NAME                                           ENDPOINTS                      AGE
endpoints/ingress-nginx-controller             172.16.0.6:443,172.16.0.6:80   21m
endpoints/ingress-nginx-controller-admission   172.16.0.6:8443                21m
endpoints/ingress-nginx-controller-metrics     172.16.0.6:10254               21m

# Check the version
(⎈|default:N/A) root@k3s-s:~# POD_NAMESPACE=ingress
(⎈|default:N/A) root@k3s-s:~# POD_NAME=$(kubectl get pods -n $POD_NAMESPACE -l app.kubernetes.io/name=ingress-nginx --field-selector=status.phase=Running -o name)
(⎈|default:N/A) root@k3s-s:~# kubectl exec $POD_NAME -n $POD_NAMESPACE -- /nginx-ingress-controller --version
-------------------------------------------------------------------------------
NGINX Ingress controller
  Release:       v1.11.2
  Build:         46e76e5916813cfca2a9b0bfdc34b69a0000f6b9
  Repository:    https://github.com/kubernetes/ingress-nginx
  nginx version: nginx/1.25.5

-------------------------------------------------------------------------------
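Instead of patching the Service after installation, the `externalTrafficPolicy: Local` setting can likely go straight into the Helm values. The sketch below is the same values file with one added line. It assumes chart 4.11.2 exposes `controller.service.externalTrafficPolicy`; confirm with `helm show values ingress-nginx/ingress-nginx --version 4.11.2`. With `Local`, only nodes that run a controller pod answer on the NodePorts, which here means only k3s-s because of the nodeSelector. The client source IP is also preserved.

```yaml
controller:
  service:
    type: NodePort
    externalTrafficPolicy: Local   # replaces the post-install kubectl patch
    nodePorts:
      http: 30080
      https: 30443
  nodeSelector:
    kubernetes.io/hostname: "k3s-s"
  metrics:
    enabled: true
  serviceMonitor:
    enabled: true
```

For an existing release, the changed values would be applied with `helm upgrade ingress-nginx ingress-nginx/ingress-nginx -f ingress-nginx-values.yaml --namespace ingress --version 4.11.2`.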

Installing kubectl krew & the ingress-nginx plugin

# (Note) Detect the OS: linux
(⎈|default:N/A) root@k3s-s:~# OS="$(uname | tr '[:upper:]' '[:lower:]')"
# (Note) Detect the CPU architecture: arm64 on this lab's M1 VMs (amd64 on x86_64)
(⎈|default:N/A) root@k3s-s:~# ARCH="$(uname -m | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/')"
# (Note) Compose the krew binary name: krew-linux_arm64 here
(⎈|default:N/A) root@k3s-s:~# KREW="krew-${OS}_${ARCH}"
(⎈|default:N/A) root@k3s-s:~# echo $KREW
krew-linux_arm64

# Install kubectl krew
(⎈|default:N/A) root@k3s-s:~# curl -fsSLO "https://github.com/kubernetes-sigs/krew/releases/latest/download/${KREW}.tar.gz"
(⎈|default:N/A) root@k3s-s:~# tar zxvf krew-linux_arm64.tar.gz
./LICENSE
./krew-linux_arm64
(⎈|default:N/A) root@k3s-s:~# ./krew-linux_arm64 install krew
Adding "default" plugin index from https://github.com/kubernetes-sigs/krew-index.git.
Updated the local copy of plugin index.
Installing plugin: krew
Installed plugin: krew
\
 | Use this plugin:
 |   kubectl krew
 | Documentation:
 |   https://krew.sigs.k8s.io/
 | Caveats:
 | \
 |  | krew is now installed! To start using kubectl plugins, you need to add
 |  | krew's installation directory to your PATH:
 |  |
 |  |   * macOS/Linux:
 |  |     - Add the following to your ~/.bashrc or ~/.zshrc:
 |  |         export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"
 |  |     - Restart your shell.
 |  |
 |  |   * Windows: Add %USERPROFILE%\.krew\bin to your PATH environment variable
 |  |
 |  | To list krew commands and to get help, run:
 |  |   $ kubectl krew
 |  | For a full list of available plugins, run:
 |  |   $ kubectl krew search
 |  |
 |  | You can find documentation at
 |  |   https://krew.sigs.k8s.io/docs/user-guide/quickstart/.
 | /
/
(⎈|default:N/A) root@k3s-s:~# export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

# Update the plugin index, then list the available plugins (link)
kubectl krew update
kubectl krew search

# Install the ingress-nginx plugin: fails on arm64, since the plugin offers no build for this platform
(⎈|default:N/A) root@k3s-s:~# kubectl krew install ingress-nginx
Updated the local copy of plugin index.
Installing plugin: ingress-nginx
W1012 11:57:25.529347   86243 install.go:164] failed to install plugin "ingress-nginx": plugin "ingress-nginx" does not offer installation for this platform
failed to install some plugins: [ingress-nginx]: plugin "ingress-nginx" does not offer installation for this platform

# The commands below need the plugin (i.e. an amd64 machine); in this lab the controller runs in the "ingress" namespace
# Run the ingress-nginx plugin (prints help)
kubectl ingress-nginx
# Print the backends configuration of the nginx controller
kubectl ingress-nginx backends -n ingress --list
kubectl ingress-nginx backends -n ingress
# Print the nginx conf
kubectl ingress-nginx conf -n ingress
## Check the configuration for a specific host (domain)
kubectl ingress-nginx conf -n ingress --host gasida.cndk.link
kubectl ingress-nginx conf -n ingress --host nasida.cndk.link
# Handy for viewing ingress information!
kubectl ingress-nginx ingresses
kubectl ingress-nginx ingresses --all-namespaces
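The sed expressions that compute ARCH above are compact but hard to read. The sketch below spells out the same mapping as a case statement (krew_arch is a hypothetical helper name, not part of krew) and shows why an Ubuntu arm64 VM on an M1 Mac, where uname -m reports aarch64, ends up downloading krew-linux_arm64.

```shell
#!/bin/sh
# Hypothetical helper: map `uname -m` output to the architecture suffix used in
# krew release file names, mirroring the sed expressions used above.
krew_arch() {
  case "$1" in
    x86_64)        echo amd64 ;;   # Intel/AMD 64-bit
    aarch64|arm64) echo arm64 ;;   # e.g. an Ubuntu arm64 VM on an M1 Mac
    arm*)          echo arm ;;     # 32-bit ARM such as armv7l
    *)             echo "$1" ;;    # anything else passes through unchanged, like sed
  esac
}

OS="$(uname | tr '[:upper:]' '[:lower:]')"
echo "krew-${OS}_$(krew_arch "$(uname -m)")"
```

On the lab VMs this should print krew-linux_arm64; the same arm64 value is what the ingress-nginx plugin manifest lacks, hence the install failure.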

Ingress lab and checking the traffic flow

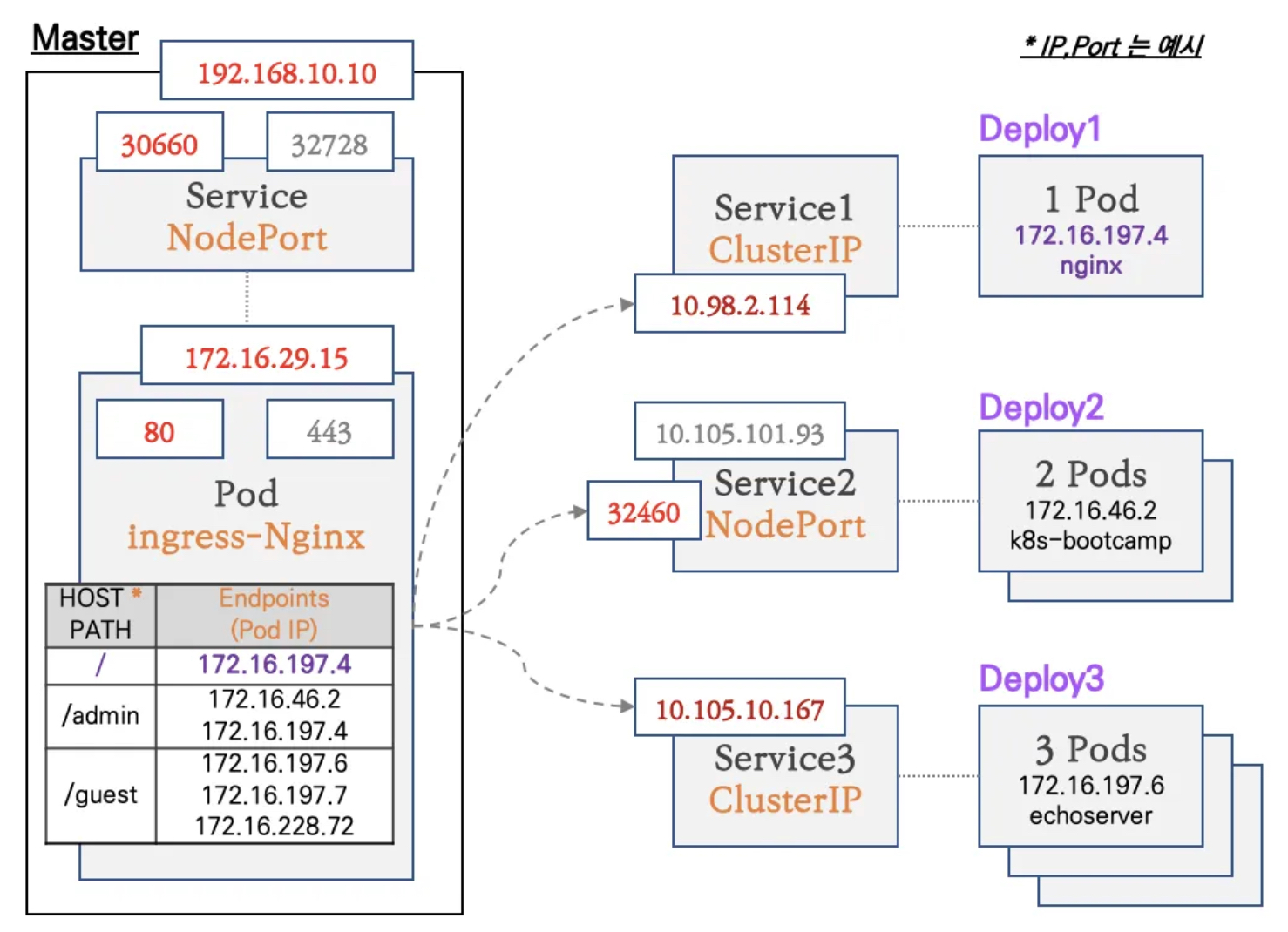

Lab topology

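- Create the ingress controller (Nginx) pod on the control-plane node and expose it externally via NodePort

- Ingress policy: Host/Path routing; for convenience in this lab, access can be configured by IP without a domain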

Create the Deployments and Services

svc1-pod.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy1-websrv
spec:
  replicas: 1
  selector:
    matchLabels:
      app: websrv
  template:
    metadata:
      labels:
        app: websrv
    spec:
      containers:
      - name: pod-web
        image: nginx
---
apiVersion: v1
kind: Service
metadata:
  name: svc1-web
spec:
  ports:
    - name: web-port
      port: 9001
      targetPort: 80
  selector:
    app: websrv
  type: ClusterIP

svc2-pod.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy2-guestsrv
spec:
  replicas: 2
  selector:
    matchLabels:
      app: guestsrv
  template:
    metadata:
      labels:
        app: guestsrv
    spec:
      containers:
      - name: pod-guest
        image: jmalloc/echo-server:latest
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: svc2-guest
spec:
  ports:
    - name: guest-port
      port: 9002
      targetPort: 8080
  selector:
    app: guestsrv
  type: NodePort

svc3-pod.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy3-adminsrv
spec:
  replicas: 3
  selector:
    matchLabels:
      app: adminsrv
  template:
    metadata:
      labels:
        app: adminsrv
    spec:
      containers:
      - name: pod-admin
        image: jmalloc/echo-server:latest
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: svc3-admin
spec:
  ports:
    - name: admin-port
      port: 9003
      targetPort: 8080
  selector:
    app: adminsrv

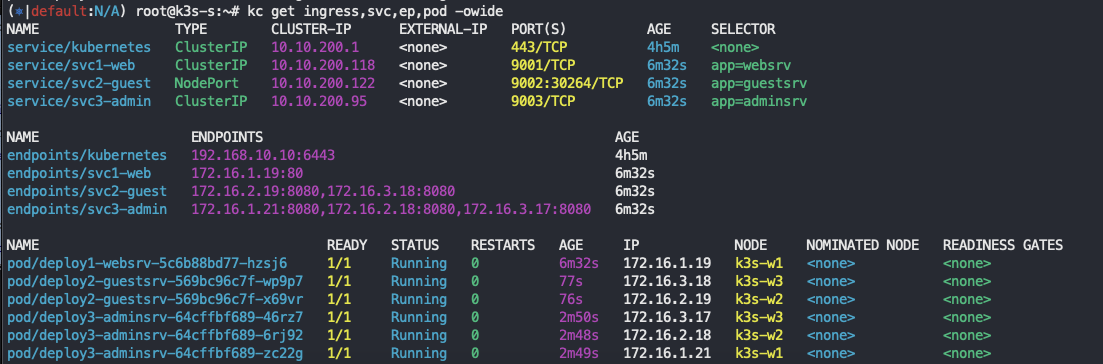

Create and verify

# Monitoring
watch -d 'kubectl get ingress,svc,ep,pod -owide'

# Create
# Taint the control-plane node k3s-s with NoSchedule so the app pods are scheduled on the worker nodes only
(⎈|default:N/A) root@k3s-s:~# kc describe node k3s-s | grep -i taint
Taints:             <none>
(⎈|default:N/A) root@k3s-s:~# kubectl taint nodes k3s-s role=controlplane:NoSchedule
node/k3s-s tainted
(⎈|default:N/A) root@k3s-s:~# kc describe node k3s-s | grep -i taint
Taints:             role=controlplane:NoSchedule
kubectl apply -f svc1-pod.yaml,svc2-pod.yaml,svc3-pod.yaml

# Verify: svc1 and svc3 are ClusterIP, so they cannot be reached from outside the cluster >> but they are reachable through the Ingress!
kubectl get pod,svc,ep

Create the Ingress (policy)

- ingress1.yaml

cat <<EOT> ingress1.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-1
  annotations:
    #nginx.ingress.kubernetes.io/upstream-hash-by: "true"
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      # The Services listen on 9001/9002/9003; 80/8080 below are their targetPorts.
      # The Ingress API defines port.number as the Service port, but ingress-nginx also matches targetPort.
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc1-web
            port:
              number: 80
      - path: /guest
        pathType: Prefix
        backend:
          service:
            name: svc2-guest
            port:
              number: 8080
      - path: /admin
        pathType: Prefix
        backend:
          service:
            name: svc3-admin
            port:
              number: 8080
EOT

- Create and verify the Ingress

# Monitoring
watch -d 'kubectl get ingress,svc,ep,pod -owide'

# Create
(⎈|default:N/A) root@k3s-s:~# kubectl apply -f ingress1.yaml
ingress.networking.k8s.io/ingress-1 created

# Verify
(⎈|default:N/A) root@k3s-s:~# kubectl get ingress
NAME        CLASS   HOSTS   ADDRESS         PORTS   AGE
ingress-1   nginx   *       10.10.200.236   80      27s
(⎈|default:N/A) root@k3s-s:~# kc describe ingress ingress-1
Name:             ingress-1
Labels:           <none>
Namespace:        default
Address:          10.10.200.236
Ingress Class:    nginx
Default backend:  <default>
Rules:
  Host        Path  Backends
  ----        ----  --------
  *
              /         svc1-web:80 ()
              /guest    svc2-guest:8080 ()
              /admin    svc3-admin:8080 ()
...

# Check the nginx conf file with the Ingress settings applied
(⎈|default:N/A) root@k3s-s:~# kubectl exec deploy/ingress-nginx-controller -n ingress -it -- cat /etc/nginx/nginx.conf
(⎈|default:N/A) root@k3s-s:~# kubectl exec deploy/ingress-nginx-controller -n ingress -it -- cat /etc/nginx/nginx.conf | grep 'location /' -A5
    location /guest/ {
        set $namespace      "default";
        set $ingress_name   "ingress-1";
        set $service_name   "svc2-guest";
        set $service_port   "8080";
--
    location /admin/ {
        set $namespace      "default";
        set $ingress_name   "ingress-1";
        set $service_name   "svc3-admin";
        set $service_port   "8080";
--
    location / {
        set $namespace      "default";
        set $ingress_name   "ingress-1";
        set $service_name   "svc1-web";
        set $service_port   "80";
    }
...

Internal access through the Ingress

- Access path (HTTP inbound) through the Nginx ingress controller: the ingress controller pod connects directly to the IPs of the service's pods (right-hand figure below)

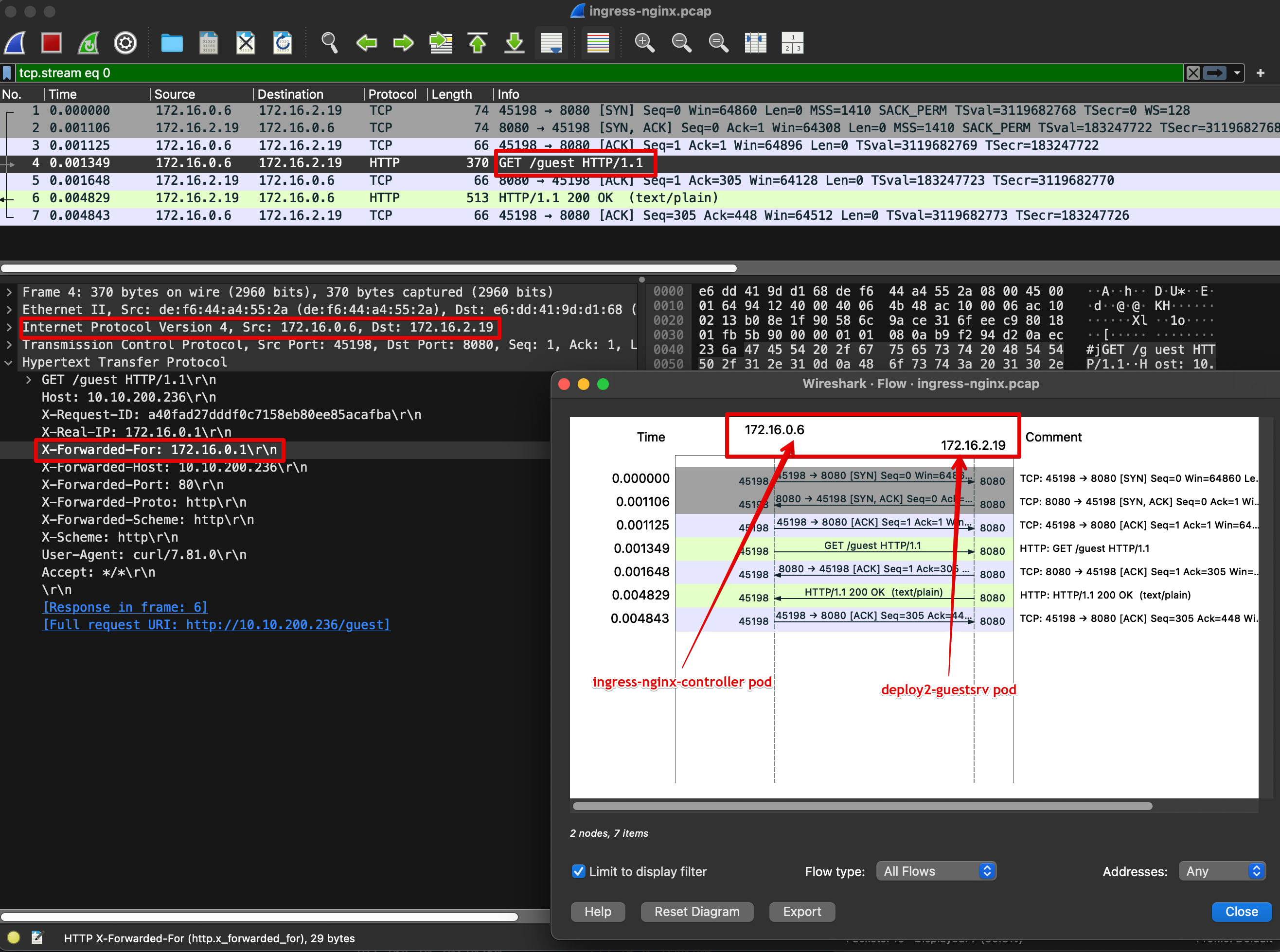

X-Forwarded-For header, X-Forwarded-Proto header

- The X-Forwarded-For header stores the original source (client) IP address in environments (appliances, servers, solutions, etc.) where the source IP address is translated along the way.

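- The X-Forwarded-Proto header stores the original protocol before translation (e.g. in an SSL offload environment, it lets the server see which protocol the client originally used for the request).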

How to obtain the client IP: to extract the client IP, a web application looks through the HTTP request headers in the following order

- Proxy-Client-IP : used by certain web applications (e.g. WebLogic Connector - mod_wl)

- WL-Proxy-Client-IP : used by certain web applications (e.g. WebLogic Connector - mod_wl)

- X-Forwarded-For : not part of any HTTP RFC, but the de facto standard!!! (RFC 7239 standardizes the equivalent Forwarded header)

- request.getRemoteAddr()

- CLIENT_IP

- Format of item 3: X-Forwarded-For: <client>, <proxy1>, <proxy2>

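When a request passes through multiple proxies, the clientIPAddress in the X-Forwarded-For request header is followed by the IP address of each successive proxy the request passed through before reaching the load balancer.

Therefore, the rightmost IP address is that of the most recent proxy, and the leftmost IP address is that of the original client.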
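The left-to-right ordering described above can be illustrated with two small shell helpers. This is a minimal sketch: xff_client and xff_last_proxy are hypothetical names, and the sample addresses are documentation-range values, not taken from the lab. Keep in mind that the leftmost entry is only what the client claims; it can be spoofed unless a trusted proxy in front overwrites the header.

```shell
#!/bin/sh
# Hypothetical helpers for an X-Forwarded-For value such as "client, proxy1, proxy2".

# Leftmost entry: the original client (as claimed by the header).
xff_client() {
  printf '%s\n' "${1%%,*}" | tr -d ' \t'      # drop everything from the first comma on
}

# Rightmost entry: the most recent proxy.
xff_last_proxy() {
  printf '%s\n' "${1##*,}" | tr -d ' \t'      # drop everything up to the last comma
}

xff_client     "203.0.113.7, 198.51.100.2, 172.16.0.1"   # prints 203.0.113.7
xff_last_proxy "203.0.113.7, 198.51.100.2, 172.16.0.1"   # prints 172.16.0.1
```

With a single entry, as in the X-Forwarded-For: 172.16.0.1 seen later in this lab, both helpers return that same address.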


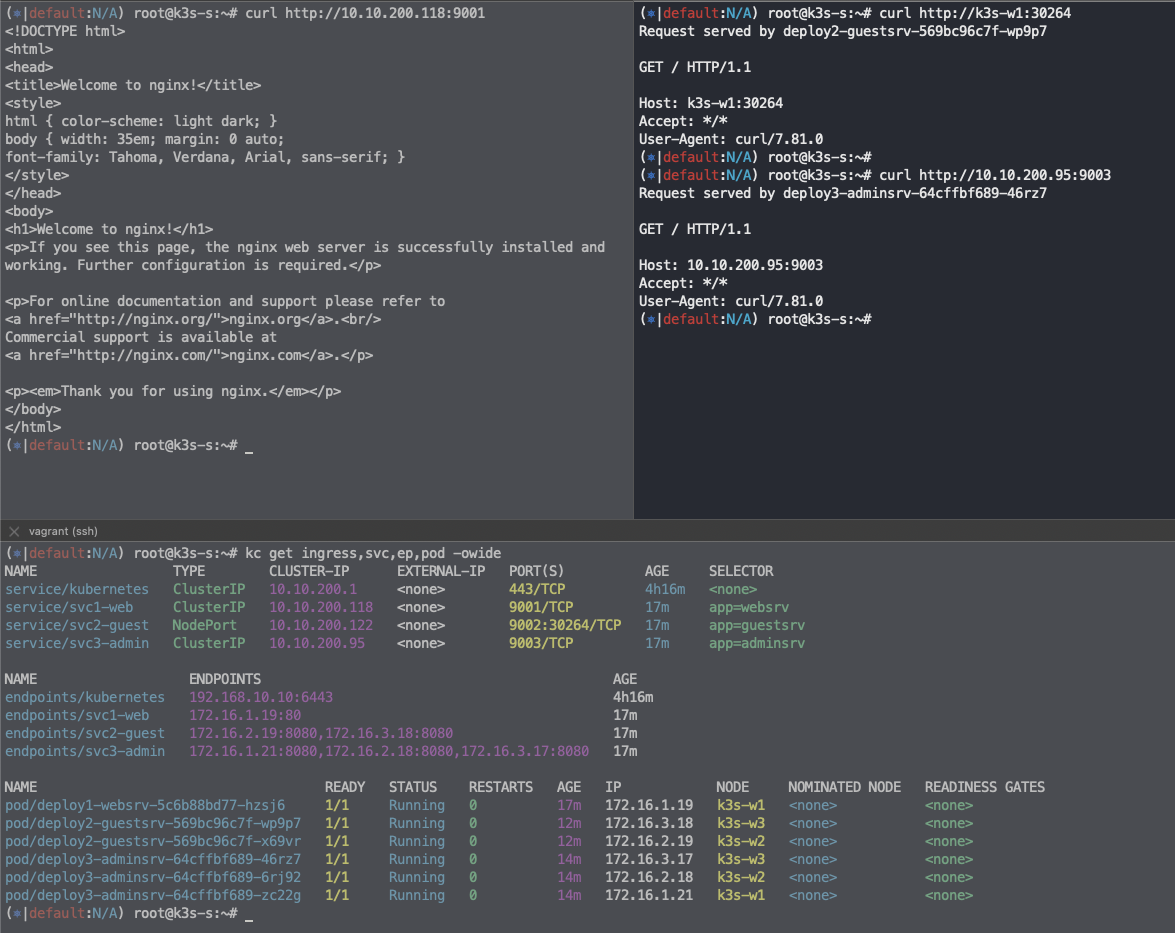

Checking access (HTTP inbound) through the Ingress (Nginx ingress controller): HTTP load balancing & PATH-based routing; the Services attached to the application pods are bypassed

# (With the krew plugin installed, which is not possible on arm64 here) Check the ingress policy
kubectl ingress-nginx ingresses
INGRESS NAME   HOST+PATH   ADDRESSES       TLS   SERVICE      SERVICE PORT   ENDPOINTS
ingress-1      /           192.168.10.10   NO    svc1-web     80             1
ingress-1      /guest      192.168.10.10   NO    svc2-guest   8080           2
ingress-1      /admin      192.168.10.10   NO    svc3-admin   8080           3

(⎈|default:N/A) root@k3s-s:~# kubectl get ingress
NAME        CLASS   HOSTS   ADDRESS         PORTS   AGE
ingress-1   nginx   *       10.10.200.236   80      18m
(⎈|default:N/A) root@k3s-s:~# kubectl describe ingress ingress-1 | sed -n "5, \$p"
Ingress Class:    nginx
Default backend:  <default>
Rules:
  Host        Path  Backends
  ----        ----  --------
  *
              /         svc1-web:80 ()
              /guest    svc2-guest:8080 ()
              /admin    svc3-admin:8080 ()

# Check the access log: kubetail is installed; check the IPs in the nginx log output
(⎈|default:N/A) root@k3s-s:~# kubetail -n ingress -l app.kubernetes.io/component=controller
Will tail 1 logs...
ingress-nginx-controller-979fc89cf-lhzhn
-------------------------------

# Access through the Ingress from your own PC, one URL per path
# (ipinfo.io returns a public IP, which fits the original EC2 lab; in this Vagrant lab use 192.168.10.10)
echo -e "Ingress1 svc1-web URL = http://$(curl -s ipinfo.io/ip):30080"
echo -e "Ingress1 svc2-guest URL = http://$(curl -s ipinfo.io/ip):30080/guest"
echo -e "Ingress1 svc3-admin URL = http://$(curl -s ipinfo.io/ip):30080/admin"

# Access svc1-web: MYIP=<EC2 public IP> in the original AWS lab; here, the k3s-s private IP
MYIP=192.168.10.10
(⎈|default:N/A) root@k3s-s:~# curl -s $MYIP:30080

# Access svc2-guest
(⎈|default:N/A) root@k3s-s:~# curl -s $MYIP:30080/guest
# Count which pod served each of 100 requests (echo-server prints "Request served by <pod name>")
(⎈|default:N/A) root@k3s-s:~# for i in {1..100}; do curl -s $MYIP:30080/guest | grep 'Request served by'; done | sort | uniq -c | sort -nr

# Access svc3-admin > by default, Nginx uses the round-robin load-balancing algorithm >> which addresses are Client_address and XFF?
(⎈|default:N/A) root@k3s-s:~# curl -s $MYIP:30080/admin
(⎈|default:N/A) root@k3s-s:~# curl -s $MYIP:30080/admin | egrep -i '(Host|x-forwarded-for)'
Host: 192.168.10.10:30080
X-Forwarded-For: 172.16.0.1
X-Forwarded-Host: 192.168.10.10:30080
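To reason about which Service a given request path reaches, the three Prefix rules of ingress-1 can be mimicked with a shell case statement. This is only an illustration that follows the Ingress specification's element-wise Prefix matching (so /guest matches /guest and /guest/..., but not /guestbook); ingress1_backend is a hypothetical helper, not something ingress-nginx provides.

```shell
#!/bin/sh
# Hypothetical helper: print the backend ingress-1 would pick for a request path.
# Prefix matching is per path element, and the longest matching prefix wins.
ingress1_backend() {
  case "$1" in
    /guest|/guest/*) echo "svc2-guest:8080" ;;
    /admin|/admin/*) echo "svc3-admin:8080" ;;
    *)               echo "svc1-web:80" ;;     # the "/" Prefix rule catches everything else
  esac
}

for p in / /guest /guest/a /guestbook /admin/; do
  printf '%-10s -> %s\n' "$p" "$(ingress1_backend "$p")"
done
```

This matches the locations in the generated nginx.conf shown earlier: /guestbook falls through to location / and therefore to svc1-web.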

Check the packet captures below on the node: flannel VXLAN, and the IP information in pod-to-pod traffic

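# Watch VXLAN (UDP 8472) and HTTP (TCP 80) traffic on eth1 with ngrep
ngrep -tW byline -d eth1 '' udp port 8472 or tcp port 80

# Capture only the flannel VXLAN traffic (UDP 8472) on eth1
tcpdump -i eth1 udp port 8472 -nn

# For vethY, specify the last (most recent) veth on your own k3s-s
tcpdump -i veth5e1e960b tcp port 8080 -nn
tcpdump -i veth5e1e960b tcp port 8080 -w /tmp/ingress-nginx.pcap

# From your own PC, download the pcap from the k3s-s IP
scp vagrant@192.168.10.10:/tmp/ingress-nginx.pcap ~/Downloads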
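Finding "the last veth" by eye in ip link output is error-prone. A minimal sketch, assuming that the veth with the highest interface index is the most recently created one (usually, but not always, the pod you just started); last_veth is a hypothetical helper that reads ip -o link show type veth output on stdin.

```shell
#!/bin/sh
# Hypothetical helper: print the veth with the highest interface index from
# `ip -o link show type veth` output, e.g. "9: veth5e1e960b@if2: <BROADCAST,...> ...".
last_veth() {
  awk -F': ' '
    {
      split($2, name, "@")          # "veth5e1e960b@if2" -> "veth5e1e960b"
      idx = $1 + 0                  # leading interface index
      if (idx > max) { max = idx; last = name[1] }
    }
    END { if (last != "") print last }'
}

# Usage on k3s-s (requires iproute2):
#   VETH="$(ip -o link show type veth | last_veth)"
#   tcpdump -i "$VETH" tcp port 8080 -nn
```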

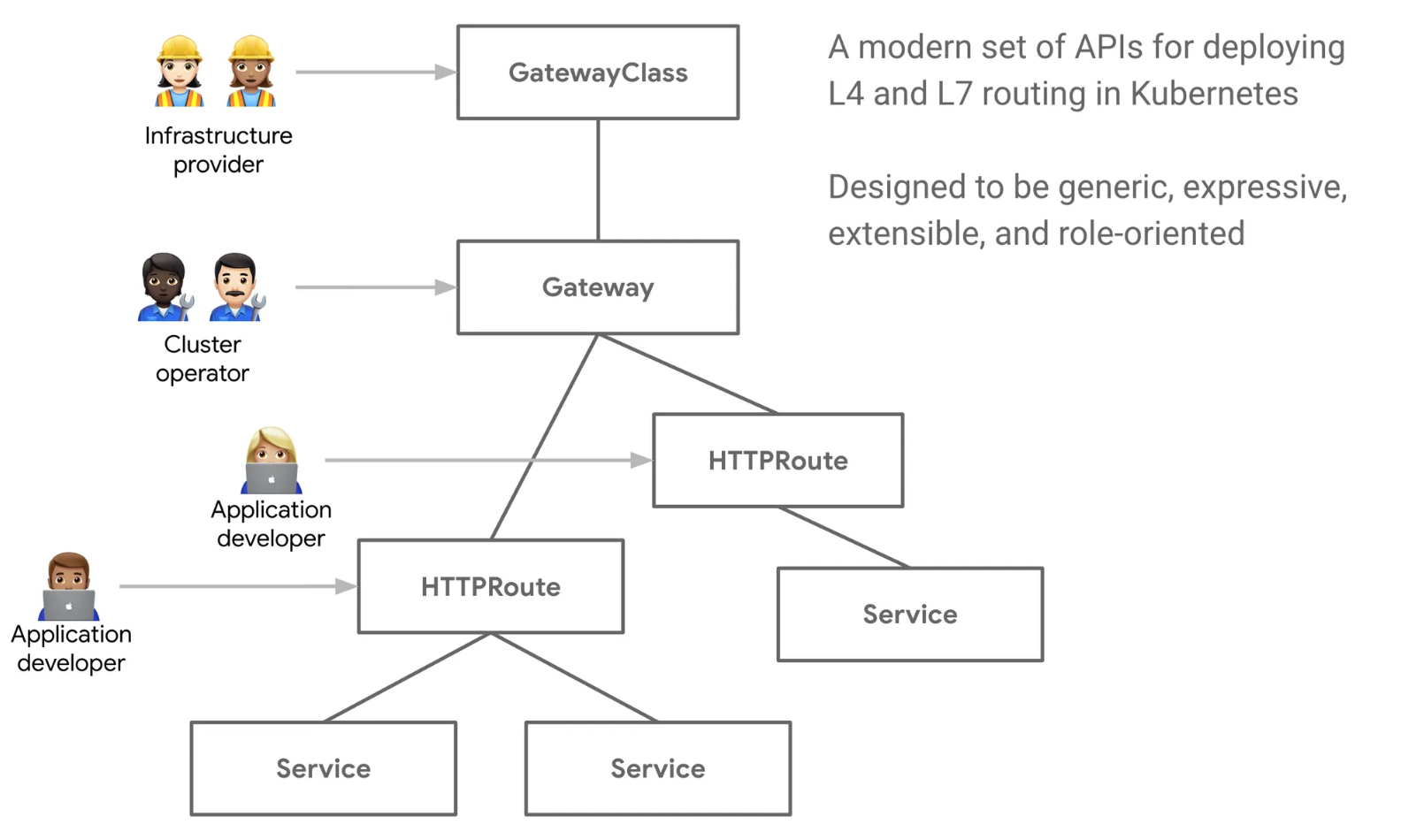

4. Gateway API

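Gateway API is a new standard for managing and controlling external and internal traffic in Kubernetes network infrastructure. As the successor to the Ingress API, it extends and improves on it to support more flexible and varied networking scenarios. Whereas the Ingress API focuses on simple HTTP traffic routing, Gateway API supports multiple protocols such as HTTP, TCP, and UDP (TCPRoute and UDPRoute are still in the experimental channel) and offers better extensibility and more flexible traffic management.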

Key concepts of Gateway API

Gateway API manages network traffic by clearly defining network boundaries and separating roles. The key concepts are:

- Gateway:

  - A network resource that accepts traffic. It provides an abstraction over a physical or logical network device. A single Gateway can define multiple listening ports and forward traffic to various services.

- GatewayClass:

  - A class that defines how Gateways behave; each GatewayClass names the controller that implements it (spec.controllerName). Infrastructure providers or cluster administrators can define several GatewayClasses to set up different kinds of traffic handling, and many implementations are supported, such as NGINX, Istio, and AWS Load Balancer.

- HTTPRoute, TCPRoute, UDPRoute:

  - Define the rules that route traffic to specific services. Each Route is configured for its protocol (HTTP, TCP, or UDP) and sends the traffic arriving at a Gateway to the desired services.

- Listener:

  - The part of a Gateway (spec.listeners) that defines on which port and protocol it accepts traffic. A Listener can be configured to receive protocols such as HTTP, HTTPS, TLS, TCP, and UDP.
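To make the relationship between these concepts concrete, the sketch below writes one GatewayClass, one Gateway with a single HTTP Listener, and one HTTPRoute into a file, using the same cat heredoc style as the Ingress lab. The resource names and the controllerName are hypothetical placeholders (a real GatewayClass must use the controllerName documented by its implementation), and the svc1-web backend on Service port 9001 is borrowed from the Ingress lab.

```shell
#!/bin/sh
# Write a minimal GatewayClass -> Gateway (Listener) -> HTTPRoute chain to a file.
# All names and the controllerName are hypothetical placeholders.
cat <<'EOF' > gateway-api-example.yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: example-class
spec:
  controllerName: example.com/gateway-controller   # set by the implementation you install
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class    # which GatewayClass implements this Gateway
  listeners:                         # Listener: the port/protocol this Gateway accepts
  - name: http
    protocol: HTTP
    port: 80
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: example-route
spec:
  parentRefs:                        # attach this route to the Gateway above
  - name: example-gateway
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: svc1-web
      port: 9001
EOF
```

Applying the file with kubectl apply -f gateway-api-example.yaml only succeeds once the Gateway API CRDs are installed, and traffic only flows if a controller actually implements the named GatewayClass.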

Key features of Gateway API

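- Multi-protocol support:

  While Ingress mainly handles HTTP/HTTPS traffic, Gateway API supports not only HTTP but also other protocols such as TCP and UDP, so more kinds of traffic can be managed.

- Role-based network management:

  Gateway API separates roles in network management so that each team manages only the part assigned to it. For example, network administrators configure the Gateway, while application developers define the routing rules.

- Improved extensibility:

  Gateway was designed with extensibility and flexibility in mind. It supports multiple ports through multiple Listeners, and GatewayClass lets you manage different network infrastructures.

- Fine-grained routing control:

  - Traffic can be routed based on request metadata such as the path, HTTP headers, query parameters, and method (there is no dedicated cookie match; cookies can only be matched through the Cookie header). This enables much finer-grained traffic control.

- Multi-service routing:

  - A single Gateway can define multiple routes and serve many services from one endpoint, which uses network resources efficiently.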
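As an illustration of fine-grained and multi-service routing, the sketch below expresses the path rules of the earlier ingress-1 as one HTTPRoute and adds a method and header condition to /admin. The route name, the parent Gateway example-gateway, and the x-role header are hypothetical; the Services and their ports come from the lab manifests.

```shell
#!/bin/sh
# Write an HTTPRoute roughly equivalent to ingress-1, plus method/header matching.
# route-1, example-gateway and the x-role header are hypothetical.
cat <<'EOF' > httproute-ingress1.yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: route-1
spec:
  parentRefs:
  - name: example-gateway
  rules:
  - matches:                   # /guest -> svc2-guest
    - path:
        type: PathPrefix
        value: /guest
    backendRefs:
    - name: svc2-guest
      port: 9002               # Service port, not the container port
  - matches:                   # /admin, only GET requests carrying "x-role: admin"
    - path:
        type: PathPrefix
        value: /admin
      method: GET
      headers:
      - name: x-role
        value: admin           # match type defaults to Exact
    backendRefs:
    - name: svc3-admin
      port: 9003
  - matches:                   # everything else -> svc1-web
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: svc1-web
      port: 9001
EOF
```

Conditions inside one match entry are ANDed, so an /admin request without the header would fall through to the / rule and reach svc1-web. Unlike ingress-1, backendRefs.port here refers to the Service port (9001/9002/9003).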

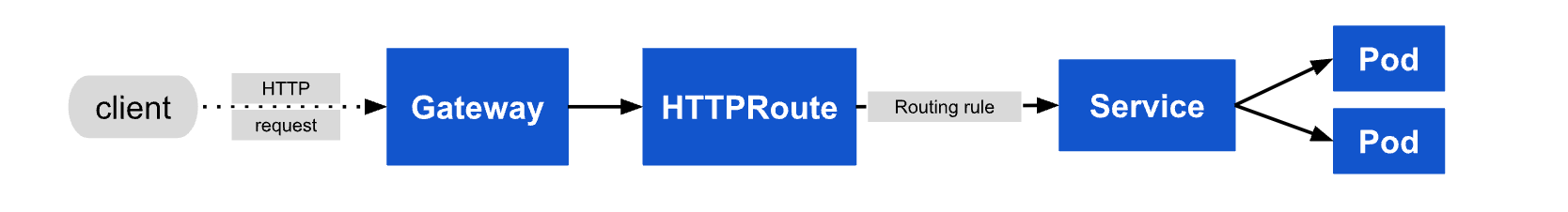

Request flow

Advantages of Gateway API

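- Extensibility: by defining multiple listeners and routes, a single Gateway can easily manage complex traffic flows.

- Flexibility: protocols and rules can be configured to fit a wide range of network scenarios.

- Multi-protocol support: TCP and UDP are supported as well as HTTP, allowing broader traffic control.

- Role-based management: separating the responsibilities of network and application teams makes management more efficient.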

Gateway API provides more powerful network traffic management than the existing Ingress and is especially useful for complex applications that handle multiple protocols in large clusters.

Lab: Applying Gateway API

This lab installs Gateway API and an NGINX Gateway Controller on k3s with Helm. Helm is a package manager for Kubernetes that makes it easy to deploy and manage resources, including many resources at once. In this lab, we use Helm charts to install and configure Gateway API and the NGINX Ingress Controller.

Contents

- Prerequisites

- Install Helm

- Add and update the Helm repositories

- Install the NGINX Ingress Controller

- Create a GatewayClass

- Create a Gateway

- Create an HTTPRoute

- Deploy a sample application

- Check the results

1. Prerequisites

- Operating system: Linux or macOS

- k3s installation privileges: administrator privileges to install the cluster

- Helm installation: Helm must be installed to use Helm charts.

# macOS
brew install helm
# Ubuntu
sudo snap install helm --classic

2. Install Helm

If Helm is not installed yet, install it with the commands above. After installation, verify that Helm was installed correctly.

helm version

3. Add and update the Helm repositories

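To install the NGINX Ingress Controller with Helm, first add the required repository.

- Add the NGINX Ingress Controller Helm repository:

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
helm repo update

- Adding a Gateway API Helm chart repository: there is no official Helm chart for Gateway API itself, and installing the Ingress Controller does not deploy the Gateway API resources either. ingress-nginx processes only Ingress resources and does not implement Gateway API (NGINX's Gateway API implementation is the separate NGINX Gateway Fabric project), so the Gateway API CRDs have to be installed separately from the kubernetes-sigs/gateway-api release manifests.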
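The Gateway API CRDs are published as release manifests of the kubernetes-sigs/gateway-api project rather than as a Helm chart. A minimal sketch for picking the right manifest, assuming release v1.1.0 (change GATEWAY_API_VERSION to the release you need); gateway_api_crd_url is a hypothetical helper name.

```shell
#!/bin/sh
# Assumption: Gateway API release v1.1.0; override GATEWAY_API_VERSION as needed.
GATEWAY_API_VERSION="${GATEWAY_API_VERSION:-v1.1.0}"

# Hypothetical helper: print the URL of a Gateway API CRD bundle.
#   standard     -> standard-channel resources (GatewayClass, Gateway, HTTPRoute, ...)
#   experimental -> additionally experimental resources such as TCPRoute and UDPRoute
gateway_api_crd_url() {
  channel="${1:-standard}"
  printf 'https://github.com/kubernetes-sigs/gateway-api/releases/download/%s/%s-install.yaml\n' \
    "$GATEWAY_API_VERSION" "$channel"
}

gateway_api_crd_url standard

# Usage against the k3s cluster:
#   kubectl apply -f "$(gateway_api_crd_url standard)"
#   kubectl get crd | grep gateway.networking.k8s.io
```

The experimental bundle is only needed if you want to try TCPRoute or UDPRoute; the HTTP routing in this lab needs just the standard channel.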

4. Install the NGINX Ingress Controller

Install the NGINX Ingress Controller with Helm. This deploys an Ingress Controller to the Kubernetes cluster, to be used alongside the Gateway API resources.

- NGINX Ingress Controller install command: