MoA

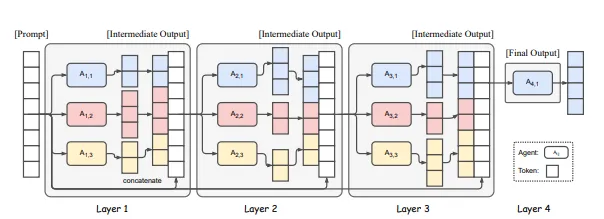

1.Mixture of Agents Enhances Large Language Model Capabilities

This study proposes and evaluates the Mixture-of-Agents (MoA) approach, which improves performance by having multiple large language models (LLMs) work together.

2.MoA is All You Need: Building LLM Research Team using Mixture of Agents

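"MoA is All You Need: Building LLM Research" proposes, among other things, a practical multi-agent RAG (retrieval-augmented generation) framework for large language model (LLM) research in the finance domain. Summary and translation.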

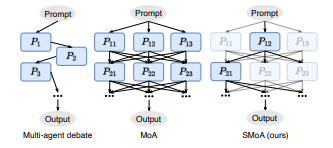

3.SMoA: Improving Multi-agent Large Language Models with Sparse Mixture-of-Agents

SMoA (Sparse Mixture-of-Agents) is a new framework, inspired by the Sparse Mixture-of-Experts (SMoE) concept, proposed to improve the performance of multi-agent large language models (LLMs).

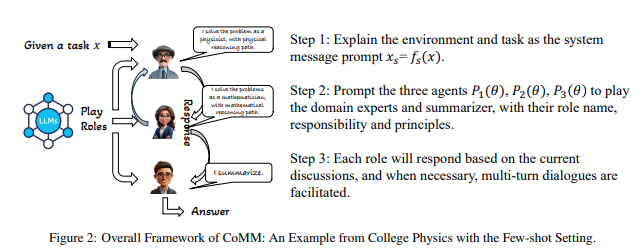

4.CoMM: Collaborative Multi-Agent, Multi-Reasoning-Path Prompting for Complex Problem Solving

This paper proposes a framework for solving complex problems with large language models (LLMs). It describes how multiple agents, each playing a different role, collaborate to solve them.

5.Rethinking Mixture-of-Agents: Is Mixing Different Large Language Models Beneficial?

This paper revisits the Mixture-of-Agents (MoA) technique and asks whether mixing several different large language models (LLMs) is actually beneficial.

6.Distributed Mixture-of-Agents for Edge Inference with Large Language Models

This paper studies how to run large language model (LLM) inference in a distributed manner on edge devices using a Distributed Mixture-of-Agents (MoA) framework.

7.Multi-LLM Collaborative Search for Complex Problem Solving

This paper, *"Multi-LLM Collaborative Search for Complex Problem Solving"*, proposes the Mixture-of-Search-Agents (MOSA) paradigm, which uses multiple large language models (LLMs) to solve complex problems.