Basics

Setting

Assumption

- N CPUs, P process/threads

Questions needed to be addressed

- In what order should the process be run?

- On what CPU should each process run?

Focus on scheduling in case of 1 CPU

Factors Influencing Scheduling

Characteristics of the process

- I/O bound or CPU bound

- CPU bound means the process is CPU intensive tasks

- Any metadata about the process

- If we have deadlines, we can take into account when scheduling

- Predictable?

- If the running time is predictable, we can take into account

Characteristics of the machine (Hardware + OS)

- #CPUs

- Can we preempt process?

Characteristics of the user

- Interactive? (desktop apps (mouse, keyboard))

Basic Scheduler Architecture

Scheduler selects from the ready processes

Scheduling decisions are made when a process

- No preemption

- Switches from running to waiting

- Terminates

- Preemption

- Switches from running to ready

- e.g. yield

- Switches from waiting to ready

- After I/O finish

Dispatch Latency

Dispatcher gives control of the CPU to the process selected by the scheduler

- Switches context

- Switching to/from kernel mode/user mode

- Saving the old EIP, loading the new EIP

Dispatching incurs a cost

- Context switching and mode switch are expensive

- Memory accessing is high-cost

Minimizing process switching is advantegeous

Note on Process & Thread

Process and thread are equivalent for scheduling purposes

SCS: System-contention scope

- Kernel supports threads = kernel threads

PCS: Process contention scope

- Kernel does not support threads = user threads

- Each process handles it's own thread scheduling

Basic Process Behavior

Process alternate between working and waiting

- Work -> CPU burst

- Processes vary I/O bound or CPU bound

so that make scheduler designing difficult

Scheduling Optimization Criteria

Max CPU utilization

- Keep the CPU as busy as possible

Max throughput

- # of processes that finish over time

- Min turnaround time: amount of time to finish a process (Arrival ~ Completion)

- Min waiting time: amount of time a ready process has been waiting (Waiting time in ready queue)

- Turnaround time - Waiting time = Execution time

Maximizing CPU utilization and maximizing throughput are independent

- If a process with a very long CPU usage time occupies,

CPU utilization goes up, but throughput goes down

Min response time

- amount of time between submitting a request and receiving a response (Arrival ~ Execute)

- Cliking a button and Seeing a response

Fairness

- All processes receive min/max fair CPU resources

- If one thread occupy CPU less than the other,

Process completion time will increase

Classic Schedulers

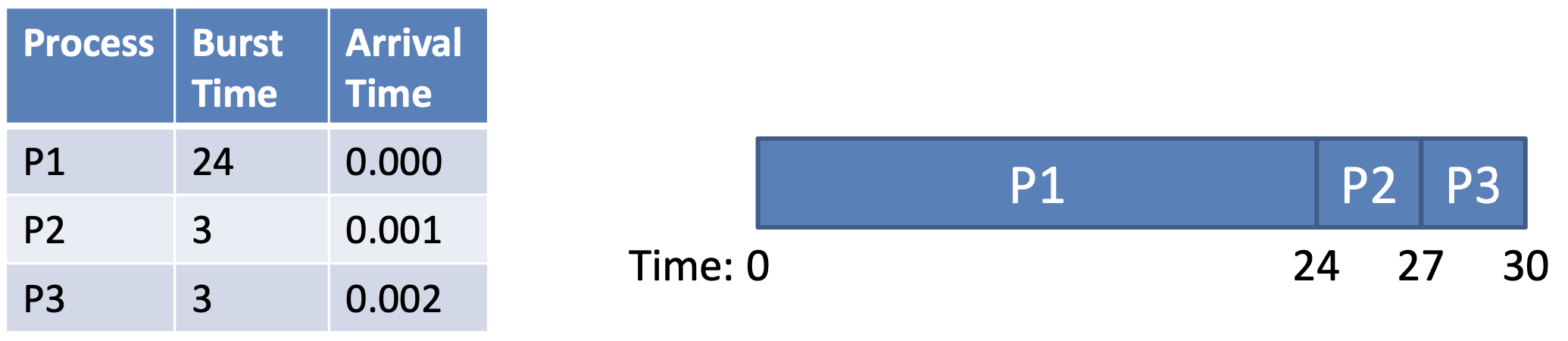

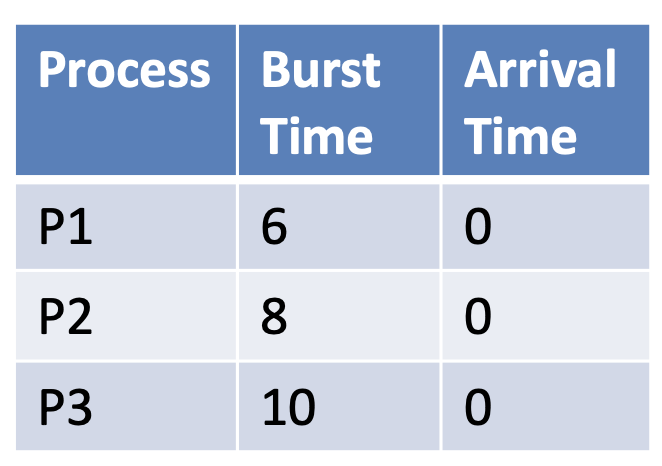

FCFS: First Come, First Serve

Processes stored in a FIFO queue

- Served in order of arrival

Turnaround time = completion time - arrival time

- P1 = 24, P2= 27, P3=30

- Average turnaround time:

Convoy Effect (= Head-of-line Blocking)

- Long process can impede short processes

- Serving a process that has long burst time leads to long turnaround time

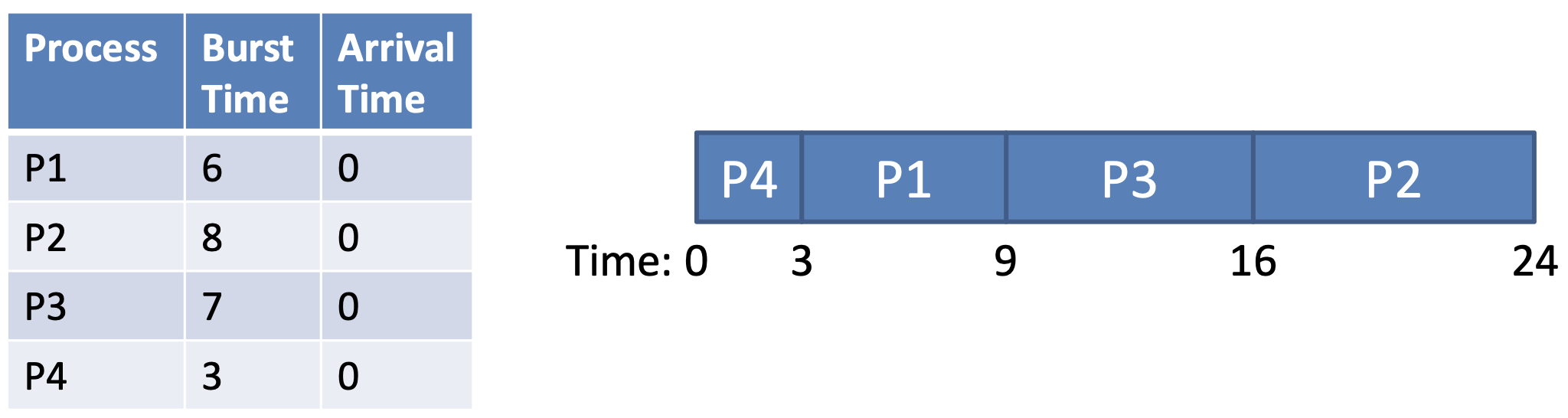

SJF: Shortest Job First

Schedule process based on the length of next CPU burst time

- Shortest processes go first

Turnaround time = completion time - arrival time

- Average turnaround time:

SJF is optimal: guarantees minimum wait time

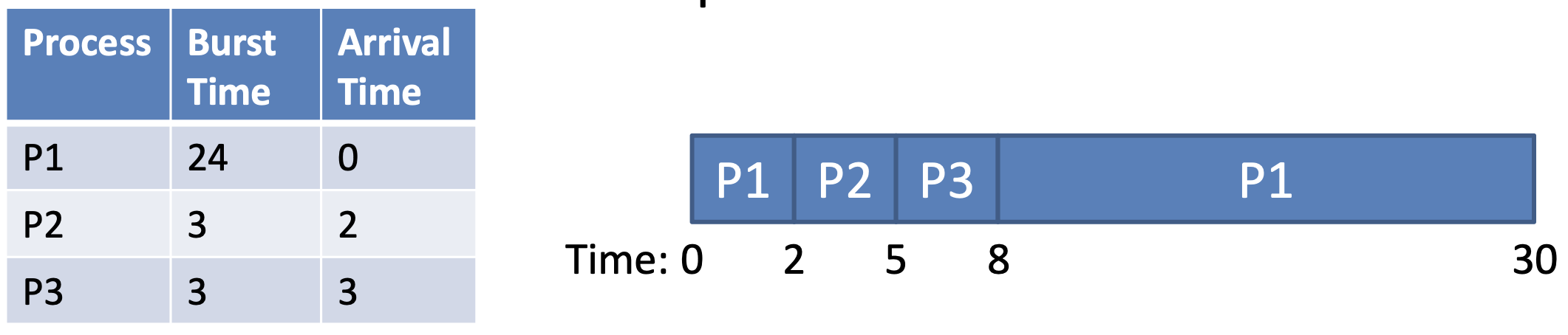

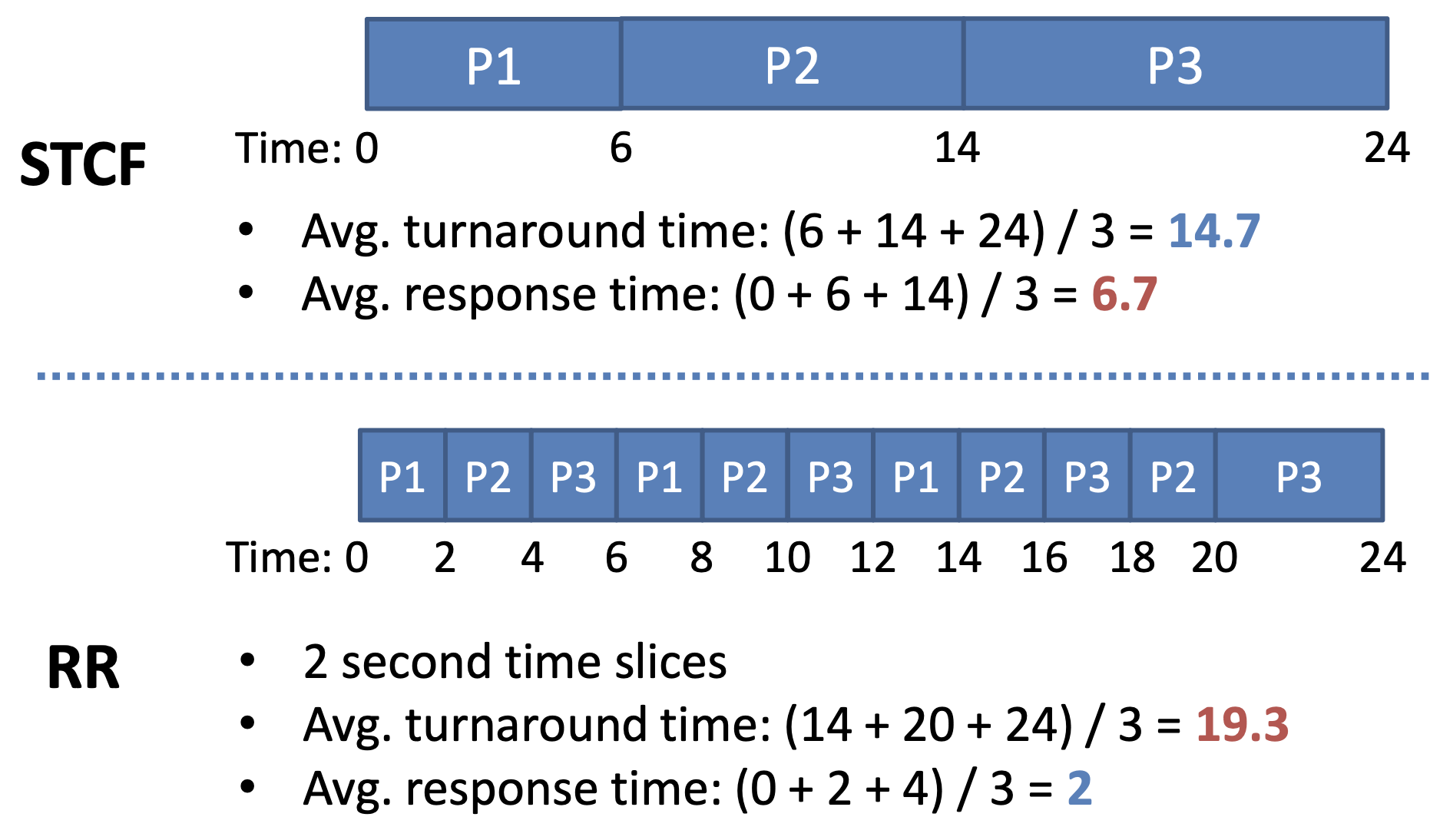

STCF: Shortest Time-To-Completion First

= PSJF: Preemptive SJF or SRTF: Shortest Remainig-Time First

Process with long bursts can be context switched out short processes

- At time 2, Compare 22(P1) with 3(P2)

- Average turnaround time:

STCF is also optimal (among Preemptive scheduling)

- Assuming we know future CPU burst times

Interactive Systems

Typing / Clicking in a desktop app

- User doesn't care about turnaround time

- User Does care about responsiveness

Response time = First run time - arrival time

Average turnaround time:

Average response time:

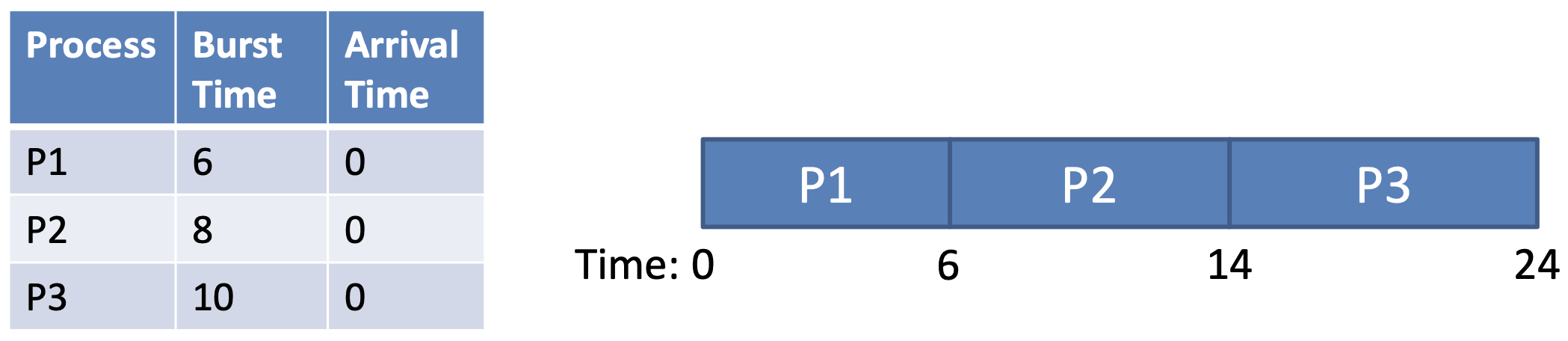

RR: Round Robin (=Time slicing)

RR is designed to reduce response times

- RR runs jobs for a time slice (= scheduling quantum)

RR vs STCF

- No process waits more than time slices

- Case) # processes N=3, time slice Q=2

The last process P3 waits for (3-1)2 = 4

- Case) # processes N=3, time slice Q=2

Tradeoffs

| RR | STCF | |

|---|---|---|

| Response time | Good response time | Bad response time |

| Turnaround time | Worst possible (Q is large FIFO behavior) | Achieves optimal, low turnaround times |

| Fairness | Achieves fairness | Unfair |

| # Context Switch | Many times | A few times |

Selecting the Time Slice

- Smaller time slices = faster response times

- Advance on hardware spawns a tiny time slice

- Context switching overhead

- Context switch wastes CPU time

- If time slice is too short, context switch overhead will dominate

- This results in another trade off