1. Lecture content

[DL Basic] What is convolution?

- CNN(Convolutional Neural Networks)

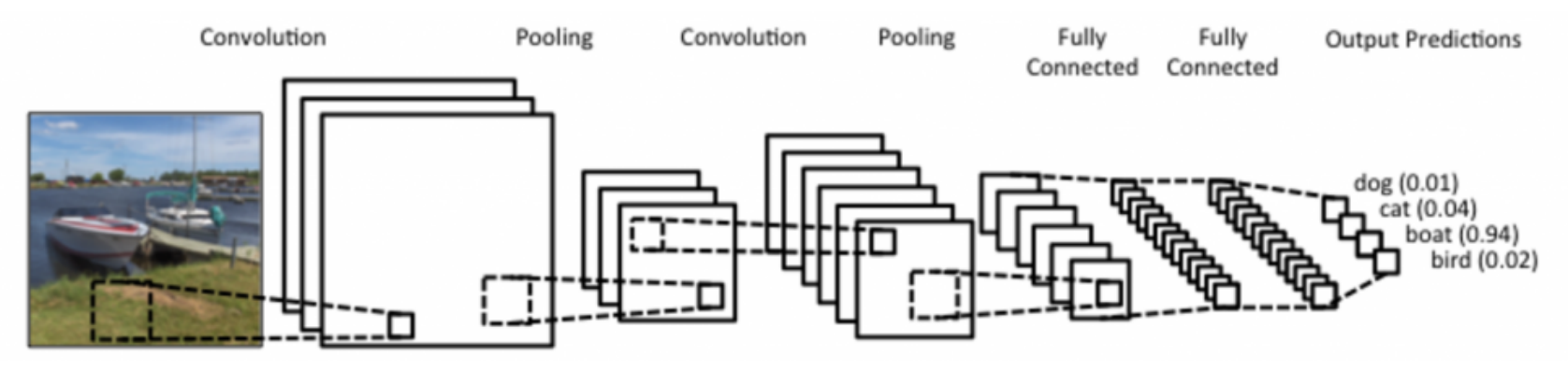

A CNN consists of convolution layers, pooling layers, and fully connected layers.

1) Convolution and pooling layers: feature extraction

2) Fully connected layers: decision making (e.g., classification)
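The split between feature extraction and decision making can be sketched in plain Python. This is only a toy illustration: the image, the kernel, and the dense weights are made-up values, and `conv2d_valid` and `max_pool2x2` are hypothetical helper names. As in most deep learning frameworks, the "convolution" here is computed without flipping the kernel.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2x2(fmap):
    """Keep the largest value in each non-overlapping 2x2 window."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# Toy 5x5 image with a vertical edge between columns 1 and 2 (made-up values).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 2x2 kernel that responds to a left-to-right intensity increase.
kernel = [[-1, 1],
          [-1, 1]]

# Feature extraction: convolution followed by pooling.
features = conv2d_valid(image, kernel)   # 4x4 feature map
pooled = max_pool2x2(features)           # 2x2 map after pooling

# Decision making: flatten and take one weighted sum (weights are made up).
flat = [v for row in pooled for v in row]
weights = [1.0, 0.0, 1.0, 0.0]
score = sum(w * v for w, v in zip(weights, flat))
```

The feature map is nonzero only where the kernel straddles the edge, pooling keeps that response while halving the resolution, and the final weighted sum plays the role of a single fully connected output unit.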

[DL Basic] Modern CNN

1) VGG: repeated 3x3 blocks

2) GoogLeNet: 1x1 convolution

3) ResNet: skip-connection

4) DenseNet: concatenation
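A rough way to see why these four ideas matter is to count weights and look at how features are combined. The sketch below is an illustration under assumptions: the channel counts (128 and 32) and the tiny feature vectors are chosen for the example, and `conv_params` is a hypothetical helper that ignores biases.

```python
def conv_params(k, c_in, c_out):
    """Number of weights in a k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


C = 128  # assumed channel count

# VGG: two stacked 3x3 convolutions cover a 5x5 receptive field
# with fewer weights than a single 5x5 convolution.
two_3x3 = 2 * conv_params(3, C, C)
one_5x5 = conv_params(5, C, C)

# GoogLeNet: a 1x1 convolution reduces channels before the 3x3 convolution.
direct = conv_params(3, 128, 128)
bottleneck = conv_params(1, 128, 32) + conv_params(3, 32, 128)

# ResNet vs. DenseNet on a toy feature vector x and a block output f(x).
x = [1, 2]
fx = [10, 20]
skip_connection = [a + b for a, b in zip(x, fx)]  # element-wise add, same width
concatenation = x + fx                            # channels are stacked, width grows

print(two_3x3, one_5x5)          # 294912 409600
print(direct, bottleneck)        # 147456 40960
print(skip_connection)           # [11, 22]
print(concatenation)             # [1, 2, 10, 20]
```

The addition in a skip-connection keeps the channel count fixed, while concatenation grows it with every block, which is why DenseNet-style networks need periodic channel reduction.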

[DL Basic] Computer Vision

- Fully Convolutional Network

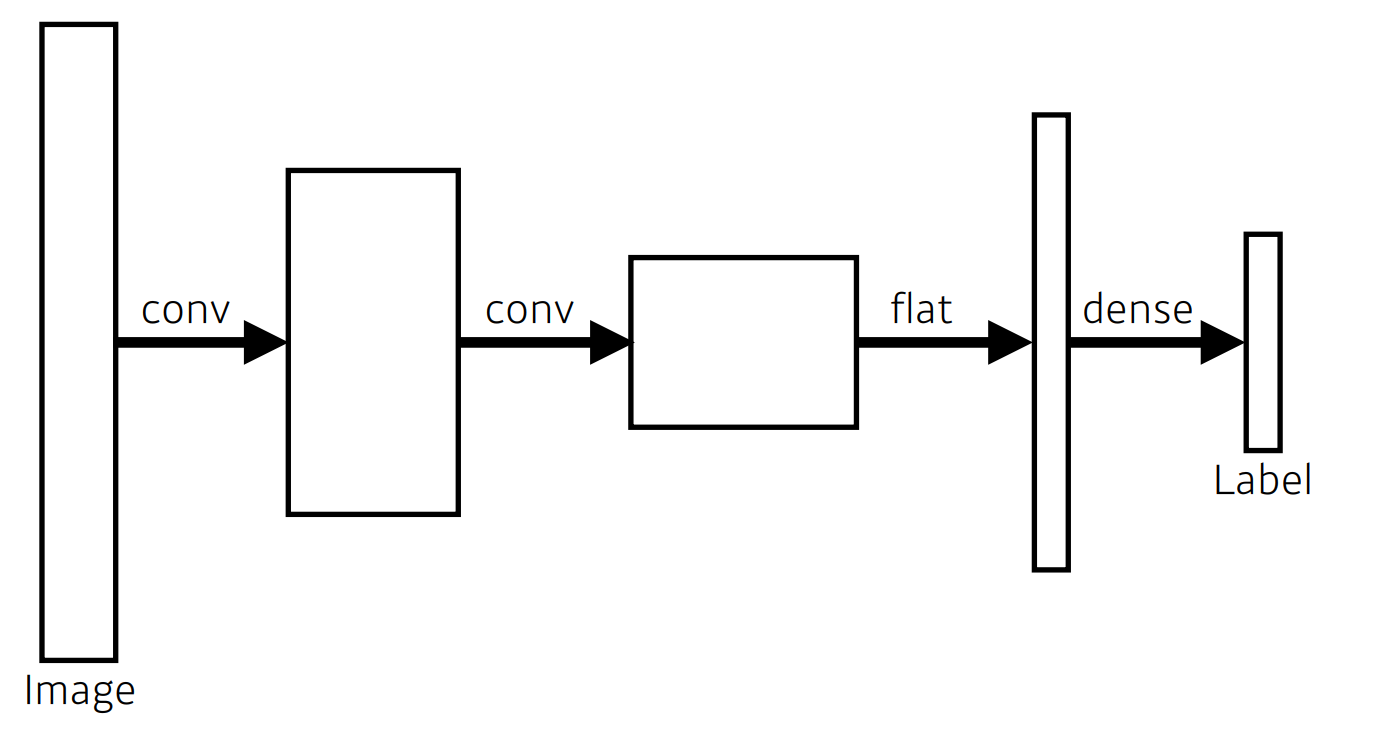

A typical CNN

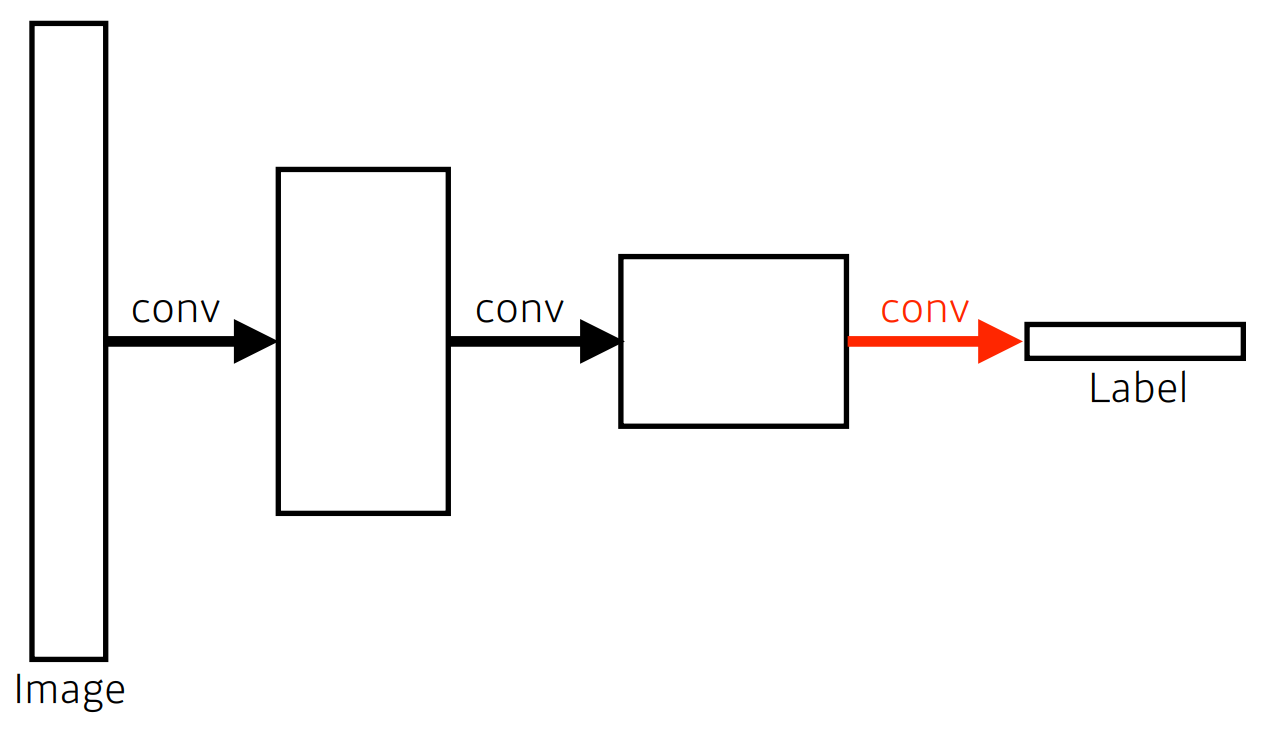

A fully convolutional network
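The difference between the two figures comes down to replacing the flatten-plus-dense head with a convolution whose kernel covers the whole feature map. A minimal sketch of the bookkeeping, assuming a hypothetical 4x4x16 feature map and 10 classes (all helper names are illustrative):

```python
def dense_weights(h, w, c, n_out):
    """Weights of a dense layer applied to a flattened h x w x c feature map."""
    return h * w * c * n_out


def conv_weights(kh, kw, c_in, c_out):
    """Weights of a kh x kw convolution (bias ignored)."""
    return kh * kw * c_in * c_out


def conv_output_size(size, kernel, stride=1):
    """Spatial output size of a convolution without padding."""
    return (size - kernel) // stride + 1


H, W, C, N_CLASSES = 4, 4, 16, 10  # assumed sizes for illustration

# Same number of parameters: the dense layer is just reshaped into a kernel.
n_dense = dense_weights(H, W, C, N_CLASSES)
n_conv = conv_weights(H, W, C, N_CLASSES)

# On the original feature-map size the convolutional head gives a 1x1 output...
same_input = (conv_output_size(H, H), conv_output_size(W, W))
# ...but on a larger feature map it slides and yields a spatial map of scores.
larger_input = (conv_output_size(10, H), conv_output_size(12, W))

print(n_dense, n_conv)   # 2560 2560
print(same_input)        # (1, 1)
print(larger_input)      # (7, 9)
```

Because the convolutional head no longer depends on a fixed flattened length, the same weights can be applied to inputs of any spatial size, which is what makes dense prediction tasks such as segmentation possible.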

- Detection

1) R-CNN

1. Takes an input image,

2. extracts around 2,000 region proposals (using selective search),

3. computes features for each proposal (using AlexNet), and then

4. classifies with linear SVMs.

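2) SPPNet

1. In R-CNN, there are usually over 2,000 cropped/warped regions per image, so the CNN must run more than 2,000 times (59s/image on CPU).

2. In SPPNet, however, the CNN runs only once per image, and the features for each region are pooled from the shared feature map.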

3) Fast R-CNN

1. Takes an input image and a set of bounding boxes.

2. Generates a convolutional feature map.

3. For each region, gets a fixed-length feature from ROI pooling.

4. Produces two outputs: class and bounding-box regressor.
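The ROI pooling step is what turns regions of different sizes into features of the same length. The sketch below is a simplified single-channel version in plain Python, not the reference implementation: the 6x6 feature map, the two regions, and the 2x2 output size are made up, and `roi_max_pool` is a hypothetical helper.

```python
import math


def roi_max_pool(fmap, roi, out_h=2, out_w=2):
    """Max-pool the region `roi` of `fmap` into a fixed out_h x out_w grid.

    `roi` is (row_start, col_start, row_end, col_end) with exclusive ends.
    """
    r0, c0, r1, c1 = roi
    h, w = r1 - r0, c1 - c0
    pooled = []
    for i in range(out_h):
        # Bin boundaries: floor for the start, ceil for the end, so no bin is empty.
        rs = r0 + (i * h) // out_h
        re = r0 + math.ceil((i + 1) * h / out_h)
        row = []
        for j in range(out_w):
            cs = c0 + (j * w) // out_w
            ce = c0 + math.ceil((j + 1) * w / out_w)
            row.append(max(fmap[r][c]
                           for r in range(rs, re)
                           for c in range(cs, ce)))
        pooled.append(row)
    return pooled


# Toy 6x6 feature map whose value at (r, c) is r * 6 + c.
fmap = [[r * 6 + c for c in range(6)] for r in range(6)]

roi_a = (0, 0, 4, 4)  # a 4x4 region
roi_b = (1, 2, 6, 5)  # a 5x3 region

pooled_a = roi_max_pool(fmap, roi_a)
pooled_b = roi_max_pool(fmap, roi_b)

print(pooled_a)  # [[7, 9], [19, 21]]
print(pooled_b)  # [[21, 22], [33, 34]]
```

Both regions end up as 2x2 grids, so after flattening each yields a length-4 vector that can feed the shared classification and bounding-box heads.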

4) YOLO

1. YOLO (v1) is an extremely fast object detection algorithm.

2. It simultaneously predicts multiple bounding boxes and class probabilities.

3. Given an image, YOLO divides it into an SxS grid.

4. Each cell predicts B bounding boxes (B=5 in the lecture example; the YOLO v1 paper uses B=2).

5. Each cell predicts C class probabilities.
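Putting the grid, the boxes, and the class probabilities together gives the size of the network output. The sketch below assumes the encoding from the YOLO v1 paper, where each box carries five numbers (x, y, w, h, confidence), and uses the paper's PASCAL VOC settings S=7, B=2, C=20; `yolo_v1_output_shape` is a hypothetical helper name.

```python
def yolo_v1_output_shape(S, B, C):
    """Shape of the prediction tensor: one vector of B*5 + C values per grid cell."""
    values_per_box = 5  # x, y, w, h, confidence (assumed YOLO v1 encoding)
    return (S, S, B * values_per_box + C)


shape = yolo_v1_output_shape(S=7, B=2, C=20)
total_values = shape[0] * shape[1] * shape[2]

print(shape)         # (7, 7, 30)
print(total_values)  # 1470
```

All of these values come out of a single forward pass, which is the reason no separate region proposal step is needed.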

- Practice

Content of required assignment 3

2. Assignment process and results

- Define CNN model
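The first dense layer of `ConvolutionalNeuralNetworkClass` needs to know how many values come out of `nn.Flatten()`. A minimal sketch of that arithmetic, assuming an odd `ksize` (so that `padding = ksize//2` with stride 1 preserves height and width) and the 2x2 max-pooling used in the class; `flattened_dim` is a hypothetical helper, not part of the assignment code:

```python
def flattened_dim(xdim, cdims, pool=2):
    """Number of features after the conv stack, given input [C, H, W]."""
    channels, height, width = xdim
    for cdim in cdims:
        channels = cdim    # the convolution changes only the channel count
        height //= pool    # each 2x2 max-pooling halves the height...
        width //= pool     # ...and the width (floor division)
    return channels * height * width


# Default setting in the notes: 28x28 grayscale input, two conv blocks.
mnist_dim = flattened_dim([1, 28, 28], [32, 64])
print(mnist_dim)  # 3136 = 64 * 7 * 7
```

This matches the expression `prev_cdim*(xdim[1]//(2**len(cdims)))*(xdim[2]//(2**len(cdims)))` used in the class, since halving with floor division once per block gives the same result as dividing once by `2**len(cdims)`.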

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Assumption: `device` was defined earlier in the assignment notebook;
# a common choice is shown here.
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

class ConvolutionalNeuralNetworkClass(nn.Module):
    """
    Convolutional Neural Network (CNN) Class
    """
    def __init__(self,name='cnn',xdim=[1,28,28],
                 ksize=3,cdims=[32,64],hdims=[1024,128],ydim=10,
                 USE_BATCHNORM=False):
        super(ConvolutionalNeuralNetworkClass,self).__init__()
        self.name = name
        self.xdim = xdim
        self.ksize = ksize
        self.cdims = cdims
        self.hdims = hdims
        self.ydim = ydim
        self.USE_BATCHNORM = USE_BATCHNORM

        # Convolutional layers
        self.layers = []
        prev_cdim = self.xdim[0]
        for cdim in self.cdims: # for each convolutional block
            self.layers.append(
                nn.Conv2d(
                    in_channels = prev_cdim,
                    out_channels = cdim,
                    kernel_size = self.ksize,
                    stride = (1, 1),
                    padding = self.ksize//2
                )) # convolution
            if self.USE_BATCHNORM:
                self.layers.append(nn.BatchNorm2d(cdim)) # batch-norm
            self.layers.append(nn.ReLU(True)) # activation
            self.layers.append(nn.MaxPool2d(kernel_size=(2,2), stride=(2,2))) # max-pooling
            self.layers.append(nn.Dropout2d(p=0.5)) # dropout
            prev_cdim = cdim

        # Dense layers
        self.layers.append(nn.Flatten())
        # Each max-pooling halves height and width, so divide by 2**(number of conv blocks)
        prev_hdim = prev_cdim*(self.xdim[1]//(2**len(self.cdims)))*(self.xdim[2]//(2**len(self.cdims)))
        for hdim in self.hdims:
            self.layers.append(nn.Linear(
                prev_hdim, hdim, bias = True
            ))
            self.layers.append(nn.ReLU(True)) # activation
            prev_hdim = hdim
        # Final layer (without activation)
        self.layers.append(nn.Linear(prev_hdim,self.ydim,bias=True))

        # Concatenate all layers
        self.net = nn.Sequential()
        for l_idx,layer in enumerate(self.layers):
            layer_name = "%s_%02d"%(type(layer).__name__.lower(),l_idx)
            self.net.add_module(layer_name,layer)
        self.init_param() # initialize parameters

    def init_param(self):
        for m in self.modules():
            if isinstance(m,nn.Conv2d): # init conv
                nn.init.kaiming_normal_(m.weight)
                nn.init.zeros_(m.bias)
            elif isinstance(m,nn.BatchNorm2d): # init BN
                nn.init.constant_(m.weight,1)
                nn.init.constant_(m.bias,0)
            elif isinstance(m,nn.Linear): # init dense
                nn.init.kaiming_normal_(m.weight)
                nn.init.zeros_(m.bias)

    def forward(self,x):
        return self.net(x)

C = ConvolutionalNeuralNetworkClass(
    name='cnn',xdim=[1,28,28],ksize=3,cdims=[32,64],
    hdims=[32],ydim=10).to(device)
loss = nn.CrossEntropyLoss()
optm = optim.Adam(C.parameters(),lr=1e-3)
```

3. Peer session

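Sharing what we learned

1. Assignment code review

- Skipped because of the nature of required assignment 3

2. Discussion of lecture content and advanced topics

[DL Basic] What is convolution?

[DL Basic] Modern CNN

[DL Basic] Computer Vision

3. Paper review

4. Learning retrospective

I attended the second Git special lecture by Egoing (이고잉).

Since I have never used Git before, I plan to get comfortable with it before the P-stage begins by keeping the implementations from paper reviews and other assignments organized in Git.

Because I spend most of my time sitting at the computer, I think I need to set aside time to look after my physical fitness.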