Wine

- Not as famous as iris, the go-to dataset for classification problems, but still widely used!

- Said to be the first alcoholic beverage in human history

- Plato absolutely loved wine

| fixed acidity | total sulfur dioxide |
|---|---|
| volatile acidity | density |
| citric acid | pH |
| residual sugar | sulphates |
| chlorides | alcohol |
| free sulfur dioxide | quality: 0-10 (higher means better quality) |

```python
import pandas as pd

red_wine = pd.read_csv('./data/winequality-red.csv', sep=';')
white_wine = pd.read_csv('./data/winequality-white.csv', sep=';')

# Combine red and white wine into one DataFrame (color: 1 = red, 0 = white)
red_wine['color'] = 1
white_wine['color'] = 0

wine = pd.concat([red_wine, white_wine])

wine['quality'].unique()

# Output:
# array([5, 6, 7, 4, 8, 3, 9], dtype=int64)
```
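The two CSVs have the same columns, so `pd.concat` just stacks them. Below is a minimal sketch that uses two tiny made-up frames (the values are invented, not taken from the real files). It shows what the `color` flag does. It also shows that the plain `pd.concat` call keeps each file's own row labels, and that `ignore_index=True` renumbers the rows instead.

```python
import pandas as pd

# Tiny stand-ins for the red/white files (values are made up for illustration)
red = pd.DataFrame({"alcohol": [9.4, 9.8], "quality": [5, 5]})
white = pd.DataFrame({"alcohol": [8.8, 9.5, 10.1], "quality": [6, 6, 6]})

# Tag each source before stacking (1 = red, 0 = white)
red["color"] = 1
white["color"] = 0

# Without ignore_index, both frames keep their own 0..n-1 labels
stacked = pd.concat([red, white])
print(stacked.index.tolist())  # [0, 1, 0, 1, 2]

# ignore_index=True renumbers the rows 0..n-1
wine = pd.concat([red, white], ignore_index=True)
print(wine.index.tolist())     # [0, 1, 2, 3, 4]
print(wine["color"].value_counts())
```

Duplicate labels are harmless for the steps in this post. They do matter if you later select rows with `.loc` by label.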

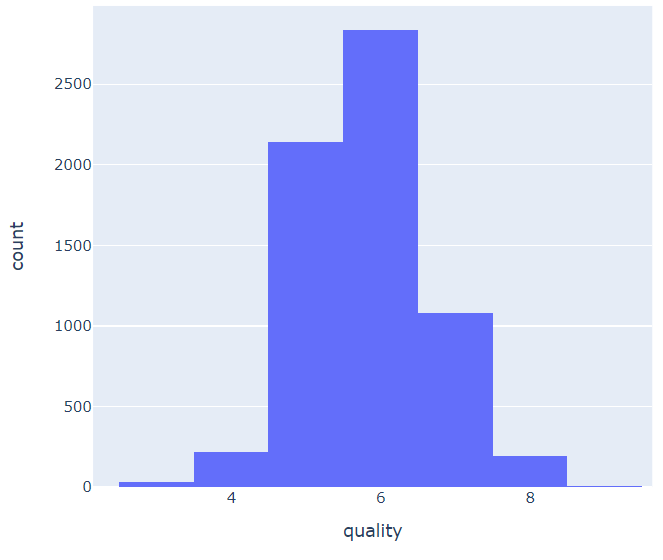

```python
# Count how many wines there are at each quality score
import plotly.express as px

fig = px.histogram(wine, x='quality')
fig.show()
```

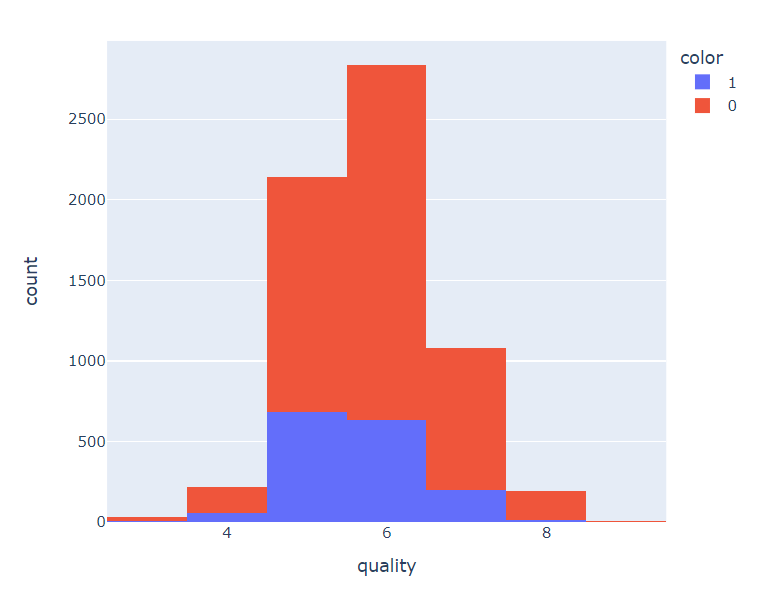

```python
# Look at red and white separately
fig = px.histogram(wine, x='quality', color='color')
fig.show()
```
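The colored histogram can also be read as a table. Here is a minimal sketch that uses `pd.crosstab` to count each quality score per color. The small frame is made up. On the real data you would pass `wine['quality']` and `wine['color']` instead.

```python
import pandas as pd

# Made-up sample: quality scores with a color flag (1 = red, 0 = white)
wine = pd.DataFrame({
    "quality": [5, 5, 6, 6, 6, 7, 5, 6],
    "color":   [1, 1, 1, 0, 0, 0, 0, 1],
})

# Rows: quality score, columns: color, cells: number of wines
counts = pd.crosstab(wine["quality"], wine["color"])
print(counts)

# Share of each color within every quality score (each row sums to 1)
shares = pd.crosstab(wine["quality"], wine["color"], normalize="index")
print(shares.round(2))
```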

```python
# Separate the features from the label
X = wine.drop(['color'], axis=1)
y = wine['color']

# Split into train and test sets
from sklearn.model_selection import train_test_split
import numpy as np

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

np.unique(y_train, return_counts=True)

# Output:
# (array([0, 1], dtype=int64), array([3939, 1258], dtype=int64))
```
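The output above shows imbalanced classes: only about 1258 / 5197 ≈ 24% of the training rows are red. `train_test_split` has a `stratify` argument that keeps the class ratio the same in both splits. Below is a minimal sketch on synthetic labels with a similar 3:1 ratio. The data is generated for the example and does not come from the wine files.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic labels: 300 "white" (0) and 100 "red" (1), a 3:1 ratio
y = np.array([0] * 300 + [1] * 100)
X = np.arange(len(y)).reshape(-1, 1)  # dummy single feature

# stratify=y keeps the 25% red share in both train and test
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(np.unique(y_train, return_counts=True))
print(np.unique(y_test, return_counts=True))
print(y_train.mean(), y_test.mean())  # 0.25 0.25
```

On the real data this would be `train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)`.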

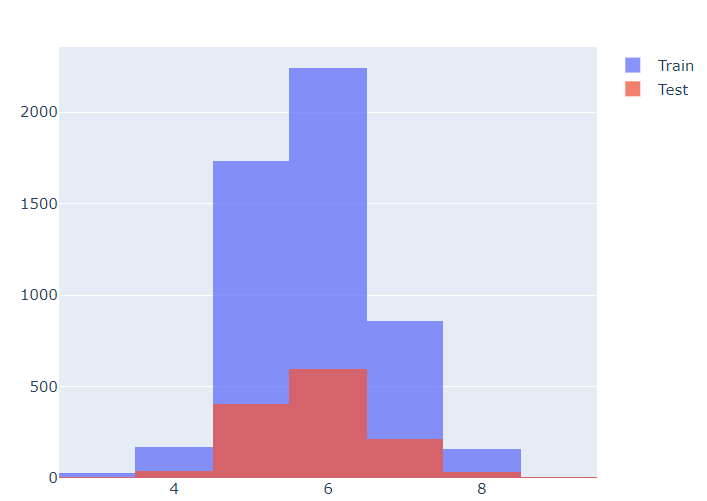

```python
# Check how evenly the data was split by comparing the train and test quality distributions
import plotly.graph_objects as go

fig = go.Figure()
fig.add_trace(go.Histogram(x=X_train['quality'], name='Train'))
fig.add_trace(go.Histogram(x=X_test['quality'], name='Test'))
fig.update_layout(barmode='overlay')  # draw the two histograms on top of each other
fig.update_traces(opacity=0.75)       # make the bars semi-transparent
fig.show()
```
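You can back up the overlaid histogram with numbers by comparing the relative frequency of each quality score in both splits. Below is a minimal sketch with made-up `quality` values standing in for `X_train['quality']` and `X_test['quality']`.

```python
import pandas as pd

# Made-up quality scores standing in for X_train['quality'] / X_test['quality']
train_q = pd.Series([5, 5, 6, 6, 6, 7, 5, 6, 4, 6])
test_q = pd.Series([5, 6, 6, 7, 5])

# Relative frequency of each score in each split (missing scores become 0)
dist = pd.DataFrame({
    "train": train_q.value_counts(normalize=True),
    "test": test_q.value_counts(normalize=True),
}).fillna(0).sort_index()

# Large per-score gaps would suggest the split is uneven for that score
dist["gap"] = (dist["train"] - dist["test"]).abs()
print(dist)
```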

```python
from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=42)
wine_tree.fit(X_train, y_train)

# How did it do?
from sklearn.metrics import accuracy_score

y_pred_tr = wine_tree.predict(X_train)
y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))
print('Test Acc : ', accuracy_score(y_test, y_pred_test))

# Output:
# Train Acc : 0.957475466615355
# Test Acc : 0.9476923076923077
```

Data preprocessing: MinMaxScaler and StandardScaler

- Actually, scaling has no effect on a Decision Tree
- It mainly helps when a model optimizes a cost function
- Which scaler works better is something you only find out by trying
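Since the best scaler is found by trying, one way to try is to wrap each scaler in a `Pipeline` with a scale-sensitive model and compare cross-validated accuracy. Below is a minimal sketch that assumes a synthetic dataset from `make_classification` and a distance-based k-nearest-neighbors classifier. Both are stand-ins, not the wine setup in this post. Because the scaler sits inside the pipeline, it is fit only on each training fold.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Synthetic data; stretch one column so the feature ranges differ a lot
X, y = make_classification(n_samples=300, n_features=5, random_state=0)
X[:, 0] *= 1000

candidates = {
    "none": None,
    "minmax": MinMaxScaler(),
    "standard": StandardScaler(),
}

scores = {}
for name, scaler in candidates.items():
    # KNN relies on distances, so it is sensitive to feature scale
    steps = [scaler] if scaler is not None else []
    model = make_pipeline(*steps, KNeighborsClassifier())
    scores[name] = cross_val_score(model, X, y, cv=5).mean()

for name, score in scores.items():
    print(f"{name:>8}: {score:.3f}")
```

The scores depend on the data. The point is the loop, not which scaler wins here.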

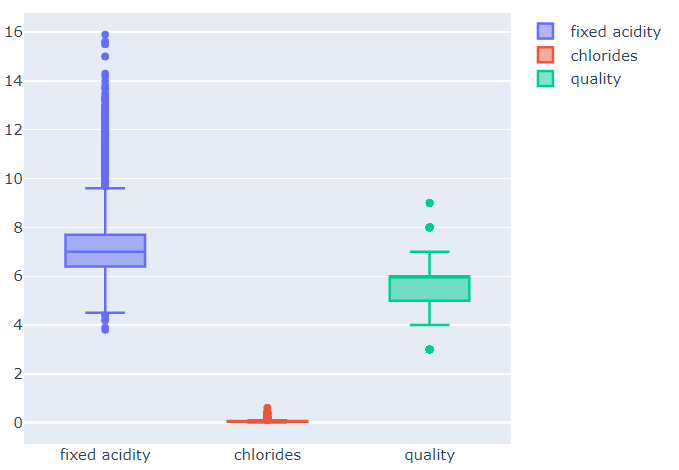

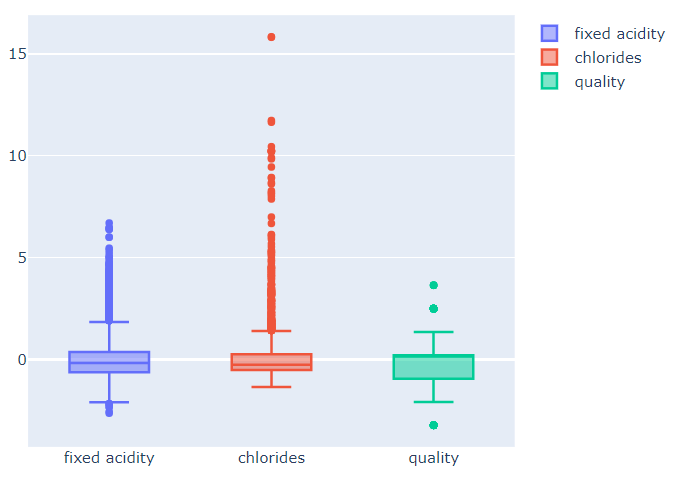

```python
fig = go.Figure()
fig.add_trace(go.Box(y=X['fixed acidity'], name='fixed acidity'))
fig.add_trace(go.Box(y=X['chlorides'], name='chlorides'))
fig.add_trace(go.Box(y=X['quality'], name='quality'))
fig.show()
```

- When feature ranges differ this much, some ML models may struggle to learn. That doesn't mean they always will.

```python
from sklearn.preprocessing import MinMaxScaler, StandardScaler

MMS = MinMaxScaler()
SS = StandardScaler()

# Learn each column's statistics (min/max, mean/std), then apply the scaling
SS.fit(X)
MMS.fit(X)

X_ss = SS.transform(X)
X_mms = MMS.transform(X)

# transform() returns NumPy arrays, so wrap them back into DataFrames
X_ss_pd = pd.DataFrame(X_ss, columns=X.columns)
X_mms_pd = pd.DataFrame(X_mms, columns=X.columns)

fig = go.Figure()
fig.add_trace(go.Box(y=X_ss_pd['fixed acidity'], name='fixed acidity'))
fig.add_trace(go.Box(y=X_ss_pd['chlorides'], name='chlorides'))
fig.add_trace(go.Box(y=X_ss_pd['quality'], name='quality'))
fig.show()
```
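For reference, both scalers apply a simple per-column formula. MinMaxScaler maps x to (x - min) / (max - min). StandardScaler maps x to (x - mean) / std, where std is the population standard deviation (ddof=0). Below is a minimal NumPy sketch of both on a made-up two-column array, showing roughly what `MMS.transform` and `SS.transform` compute. The sklearn versions also handle edge cases such as constant columns.

```python
import numpy as np

# Made-up data: column 0 has a wide range, column 1 is tiny (like chlorides)
X = np.array([
    [7.4, 0.076],
    [7.8, 0.098],
    [11.2, 0.075],
    [6.7, 0.045],
])

# Min-max scaling: each column is squeezed into [0, 1]
col_min = X.min(axis=0)
col_max = X.max(axis=0)
X_mms = (X - col_min) / (col_max - col_min)

# Standardization: each column gets mean 0 and (population) std 1
X_ss = (X - X.mean(axis=0)) / X.std(axis=0)  # np.std defaults to ddof=0

print(X_mms.min(axis=0), X_mms.max(axis=0))  # [0. 0.] [1. 1.]
print(X_ss.mean(axis=0), X_ss.std(axis=0))
```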

- But preprocessing like this has almost no effect on a decision tree anyway. I just tried it out!
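Why does scaling barely matter for a decision tree? Each split is a threshold on a single feature, and both scalers are increasing per-column transforms. So the same rows land on each side of the rescaled threshold. Below is a minimal sketch on synthetic data from `make_classification`. It fits the same tree (same `max_depth=2`, `random_state=42` as above) on raw and scaled features and compares the predictions. `tree_predictions` is just a helper name for this sketch.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.preprocessing import MinMaxScaler, StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=6, random_state=42)

def tree_predictions(features):
    # Hypothetical helper: fit a depth-2 tree and predict on the same rows
    model = DecisionTreeClassifier(max_depth=2, random_state=42)
    model.fit(features, y)
    return model.predict(features)

pred_raw = tree_predictions(X)
pred_mms = tree_predictions(MinMaxScaler().fit_transform(X))
pred_ss = tree_predictions(StandardScaler().fit_transform(X))

# Fraction of rows where the scaled-data tree agrees with the raw-data tree
print((pred_raw == pred_mms).mean(), (pred_raw == pred_ss).mean())
```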

What features matter most for telling red wine from white wine?

```python
dict(zip(X_train.columns, wine_tree.feature_importances_))

# Output:
# {'fixed acidity': 0.0,
#  'volatile acidity': 0.0,
#  'citric acid': 0.0,
#  'residual sugar': 0.0,
#  'chlorides': 0.23383167646371428,
#  'free sulfur dioxide': 0.0,
#  'total sulfur dioxide': 0.7661683235362857,
#  'density': 0.0,
#  'pH': 0.0,
#  'sulphates': 0.0,
#  'alcohol': 0.0,
#  'quality': 0.0}
```

Let's binarize the quality column and do a binary classification of taste!

```python
# taste = 1 if quality is above 5, else 0
wine['taste'] = [1. if grade > 5 else 0 for grade in wine['quality']]

X = wine.drop(['taste'], axis=1)
y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=42)
wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)
y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))
print('Test Acc : ', accuracy_score(y_test, y_pred_test))

# Output:
# Train Acc : 1.0
# Test Acc : 1.0
```

If you get 100%, be suspicious. Why did this happen?

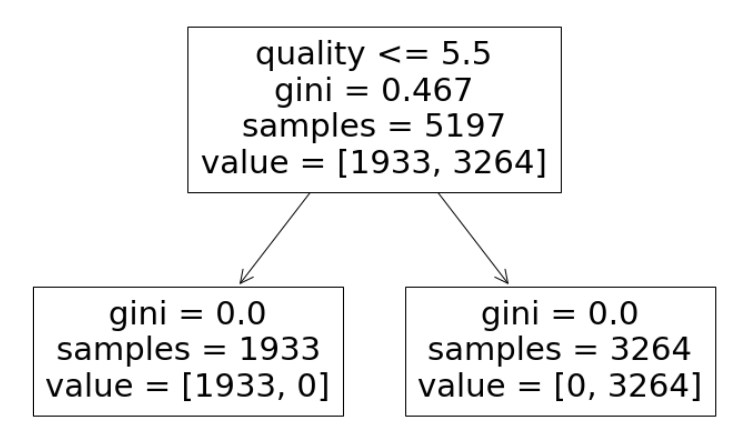

```python
import matplotlib.pyplot as plt
import sklearn.tree as tree

plt.figure(figsize=(12, 8))
tree.plot_tree(wine_tree, feature_names=X.columns);
```

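- The binary label was built from quality, but the quality column itself was still in the feature DataFrame. The tree simply learned from it, which gave 100% (target leakage).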
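A quick way to catch this kind of leakage is to take each feature on its own and ask how well a single threshold can reproduce the label. If one feature alone gives (near) 100% accuracy, look at it closely. Below is a minimal NumPy/pandas sketch on a made-up frame where `taste` is derived from `quality` exactly as above. The helper name `best_single_threshold_acc` is hypothetical.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 200

# Made-up frame: taste is built from quality, just like wine['taste'] above
df = pd.DataFrame({
    "alcohol": rng.normal(10.5, 1.2, n),
    "pH": rng.normal(3.2, 0.15, n),
    "quality": rng.integers(3, 10, n),
})
df["taste"] = (df["quality"] > 5).astype(int)

def best_single_threshold_acc(x, y):
    """Best accuracy of the rule 'predict 1 if x > t' (or its flip) over all t."""
    best = 0.0
    for t in np.unique(x):
        pred = (x > t).astype(int)
        acc = (pred == y).mean()
        best = max(best, acc, 1 - acc)  # 1 - acc covers the flipped rule
    return best

y = df["taste"].to_numpy()
for col in ["alcohol", "pH", "quality"]:
    acc = best_single_threshold_acc(df[col].to_numpy(), y)
    print(f"{col:>8}: {acc:.3f}")  # quality reaches 1.000, a red flag
```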

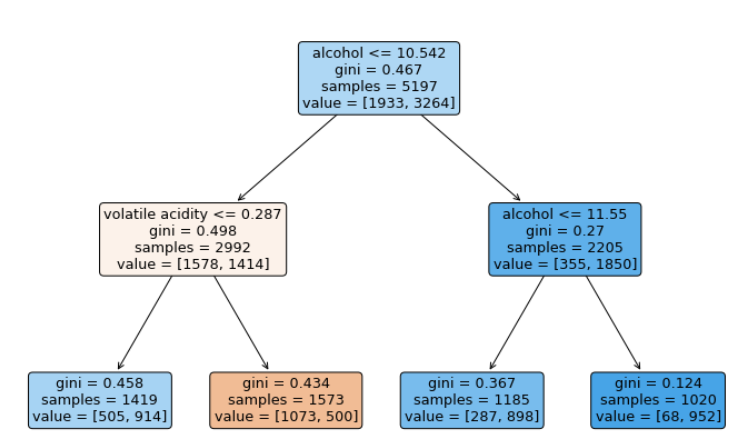

```python
# Drop quality as well, since taste was derived from it
X = wine.drop(['taste', 'quality'], axis=1)
y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=42)
wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)
y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))
print('Test Acc : ', accuracy_score(y_test, y_pred_test))

# Output:
# Train Acc : 0.7383105637868
# Test Acc : 0.7084615384615385
```

So what kind of wine did the model call tasty?

```python
plt.figure(figsize=(12, 8))
tree.plot_tree(wine_tree, feature_names=X.columns,
               rounded=True,
               filled=True,
               );
```

- It's flexing on alcohol content!
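To check which feature the tree splits on without reading the plot, `sklearn.tree.export_text` prints the same tree as text. The fitted `tree_` object also exposes the root split directly. Below is a minimal sketch on made-up data in which taste depends mostly on alcohol. The data and the rule that generates it are assumptions for illustration, not the real wine results. On the real model you would pass `wine_tree` and `list(X.columns)`.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

rng = np.random.default_rng(42)
n = 500

# Made-up features; the label is driven mainly by alcohol (an assumption)
alcohol = rng.normal(10.5, 1.2, n)
ph = rng.normal(3.2, 0.15, n)
noise = rng.random(n) < 0.1  # flip about 10% of the labels
taste = ((alcohol > 10.5) ^ noise).astype(int)

X = np.column_stack([alcohol, ph])
feature_names = ["alcohol", "pH"]

model = DecisionTreeClassifier(max_depth=2, random_state=42).fit(X, taste)

# Text version of plot_tree
print(export_text(model, feature_names=feature_names))

# Feature and threshold used at the root node (node 0)
root = model.tree_.feature[0]
print(feature_names[root], round(model.tree_.threshold[0], 3))
```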