Statistical vs Practical Significance

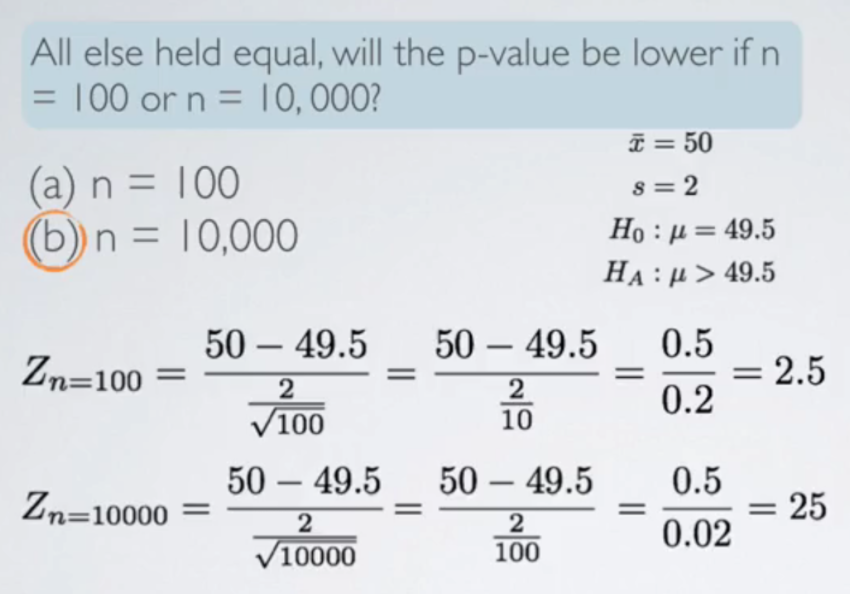

Increase in sample size → decrease in standard error → increase in the magnitude of the (z) test statistic → smaller p-value

A z-score of 25 corresponds to a p-value that is essentially zero; in other words, a highly statistically significant finding. However, is it practically significant? When we're thinking about practical significance, we focus on the effect size. Remember, we define the effect size as the difference between the point estimate and the null value, which is the numerator in the calculation of the test statistic. In both instances we have exactly the same effect size, and, to be fair, it's a small one. Even though a small effect size may not be practically significant, we are able to obtain a statistically significant result simply by inflating the sample size.

Remember that the sample size is something the researcher has control over; after all, you get to decide how many observations to sample. Sure, there's going to be a bound based on how many resources you have, but at the end of the day that's the human-controlled part of a study. So when you see highly statistically significant results, keep a critical eye and ask whether the effect size is reported and what the sample size is. And if you are the one reporting highly statistically significant results, it's always a good idea to let your readers know your effect size and your sample size, so that it is clear whether a statistically significant finding is also practically significant.
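To make the chain from sample size to p-value concrete, here is a minimal sketch of a one-sample z-test run twice with the same effect size but different sample sizes. The numbers (a sample mean of 50.5, a null value of 50, a standard deviation of 2, and sample sizes of 100 and 10,000) are hypothetical, chosen only so that the larger sample yields a z-score of about 25; the helper name `z_test` is also just an illustration.

```python
from math import sqrt
from statistics import NormalDist


def z_test(sample_mean, null_value, sd, n):
    """Return (z, two-sided p-value) for a one-sample z-test."""
    standard_error = sd / sqrt(n)
    # The numerator is the effect size: point estimate minus null value.
    z = (sample_mean - null_value) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical values, not taken from the lecture slide.
sample_mean, null_value, sd = 50.5, 50.0, 2.0

z_small, p_small = z_test(sample_mean, null_value, sd, n=100)
z_large, p_large = z_test(sample_mean, null_value, sd, n=10_000)

print(f"effect size = {sample_mean - null_value}")
print(f"n = 100:    z = {z_small:.2f}, p = {p_small:.4g}")
print(f"n = 10000:  z = {z_large:.2f}, p = {p_large:.4g}")
```

The effect size is 0.5 in both runs; only the standard error changes, and with it the test statistic and the p-value.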

So, to summarize: real differences between the point estimate and the null value are easier to detect with large samples. However, very large samples will result in statistical significance even for tiny differences between the sample mean and the null value (that is, tiny effect sizes), even when the difference is not practically significant.

To make sure that your findings don't suffer from this problem of being statistically significant but not practically significant, we often do some a priori analysis before the data collection to figure out, based on characteristics of the variable being studied, how many observations to collect. It is highly recommended that researchers either do this themselves or consult with statisticians to figure out how many observations to sample before they actually go and collect them. The last thing you want is to find out, after you have already put in the resources to collect the data, that you either don't have enough observations or have too many.

This brings to mind a quote from a famous statistician, R.A. Fisher: "To call in the statistician after the experiment is done may be no more than asking him to perform a post-mortem examination. He may be able to say what the experiment died of."
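As a rough illustration of the a priori analysis mentioned above, one common textbook approach for a two-sided one-sample z-test picks the smallest effect size you would consider practically meaningful and solves for n. The lecture does not specify a method, so the formula, the significance level of 0.05, the power of 0.80, and the function name `required_sample_size` are all assumptions made for this sketch.

```python
from math import ceil
from statistics import NormalDist


def required_sample_size(effect_size, sd, alpha=0.05, power=0.80):
    """Approximate n for a two-sided one-sample z-test.

    Uses n = ((z_{1 - alpha/2} + z_{power}) * sd / effect_size) ** 2,
    rounded up. This is an assumed textbook approximation, not a
    method taken from the lecture.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return ceil(((z_alpha + z_power) * sd / effect_size) ** 2)


# Hypothetical inputs: smallest meaningful difference of 0.5, sd of 2.
n_needed = required_sample_size(effect_size=0.5, sd=2.0)
print(f"observations needed: {n_needed}")

# A larger meaningful difference needs fewer observations.
n_for_larger_effect = required_sample_size(effect_size=1.0, sd=2.0)
print(f"observations needed for effect size 1.0: {n_for_larger_effect}")
```

Fixing the meaningful effect size first, and deriving the sample size from it, is what keeps a study from collecting so many observations that trivially small differences become statistically significant.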