Papers - LLM

1.Recurrent neural network based language model - 2010

2010 - Mikolov T, Karafiat M, Burget L, Cernocky JH, Khudanpur S. “Recurrent neural network based language model” INTERSPEECH. https://www.research

2.Sequence to Sequence Learning with Neural Networks - 2014

2014 - I. Sutskever, O. Vinyals, and Q. V. Le. “Sequence to Sequence Learning with Neural Networks” in NIPS. https://arxiv.org/abs/1409.3215 - paper link

3.NMT by Jointly Learning to Align and Translate - 2014

2014 - Bahdanau et al. “NMT by Jointly Learning to Align and Translate” https://arxiv.org/abs/1409.0473 - paper link. The paper "Neural Machine Translation by J

4.Neural Machine Translation of Rare Words with Subword Units - 2015

2015 - Sennrich R, Haddow B, Birch A. “Neural Machine Translation of Rare Words with Subword Units” https://arxiv.org/abs/1508.07909 - paper link. The paper "Ne

5.Attention is all you need - 2017

2017 - “Attention is all you need” (Transformer) https://arxiv.org/abs/1706.03762 - paper link. In the paper "Attention Is All You Need", the existing complex recurrent neural networks (RNN) or

6.BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding - 2018

2018 - "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" https://arxiv.org/abs/1810.04805 - paper link. "BERT: Pre-train

7.Improving Language Understanding by Generative Pre-Training - 2018

2018 - "Improving Language Understanding by Generative Pre-Training" (GPT) https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/langua

8.Papers - LLM explanation summary (2010 ~ 2015)

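An RNN-based language model (RNN LM) can reduce perplexity by about 50% on speech recognition tasks compared with a conventional n-gram backoff language model (lower perplexity means more accurate prediction). The reason is that the RNN LM handles long-context information better. Multiple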

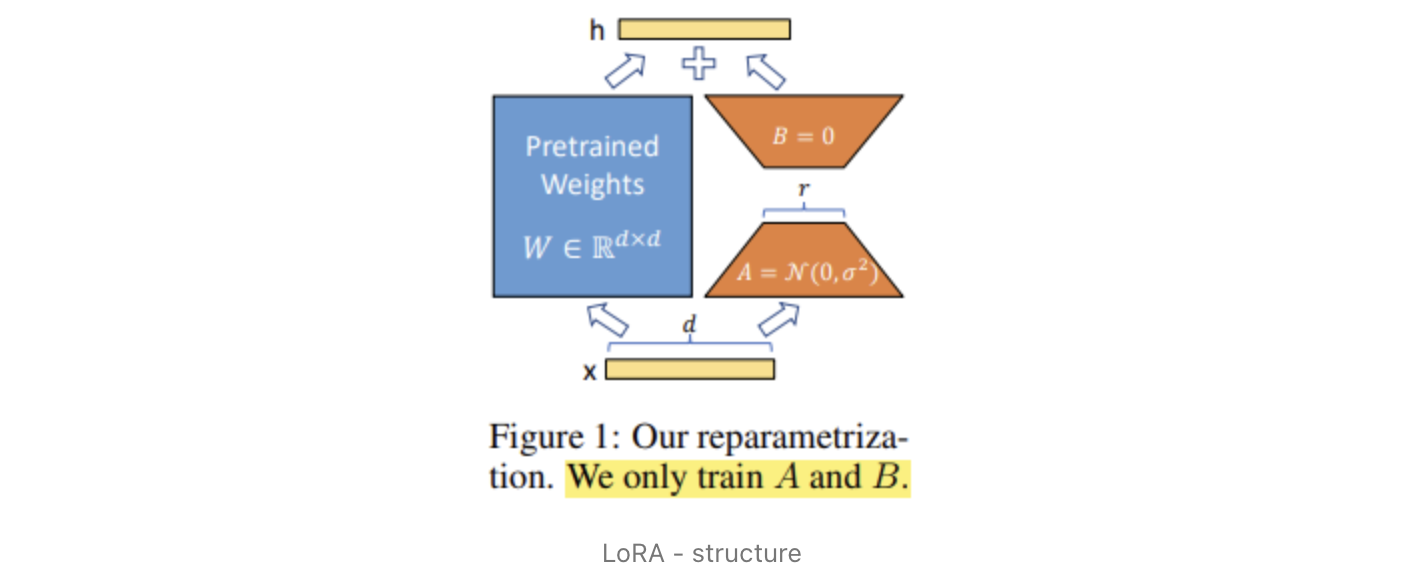
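To make the perplexity figure above concrete, here is a minimal sketch of how perplexity is computed from the probabilities a model assigns to each token. The function name `perplexity` and the probability lists are hypothetical values chosen for illustration; they are not taken from the paper.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# Hypothetical per-token probabilities (illustrative only, not from the paper).
baseline_probs = [0.1, 0.1, 0.1, 0.1]  # stands in for an n-gram backoff model
better_probs = [0.2, 0.2, 0.2, 0.2]    # stands in for an RNN LM

print(perplexity(baseline_probs))
print(perplexity(better_probs))
```

With these made-up numbers, doubling the probability assigned to every token halves the perplexity, which is one way to read a reduction of about 50%.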

9.LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS

https://arxiv.org/pdf/2106.09685 - paper link. Paper review. Abstract: In recent natural language processing, a pre-trained language model is taken and applied to downstream applications. Adapted to suit various applications