FinGPT 논문을 읽던 중, 매일매일 쏟아져나오는 금융 데이터를 가지고 Fine-tuning을 하는데 있어서 LoRA를 사용했다는 것을 보고 LoRA를 간단하게만 내가 알고 있다는 생각을 했다. 그래서 이렇게 LoRA 논문을 찾아보게되었다. 참고로 Microsoft에서 나온 논문인데 읽고 나니 이 논문이 꽤 대단한 내용이라는 점을 다시 한 번 깨달았다.

0. Abstract

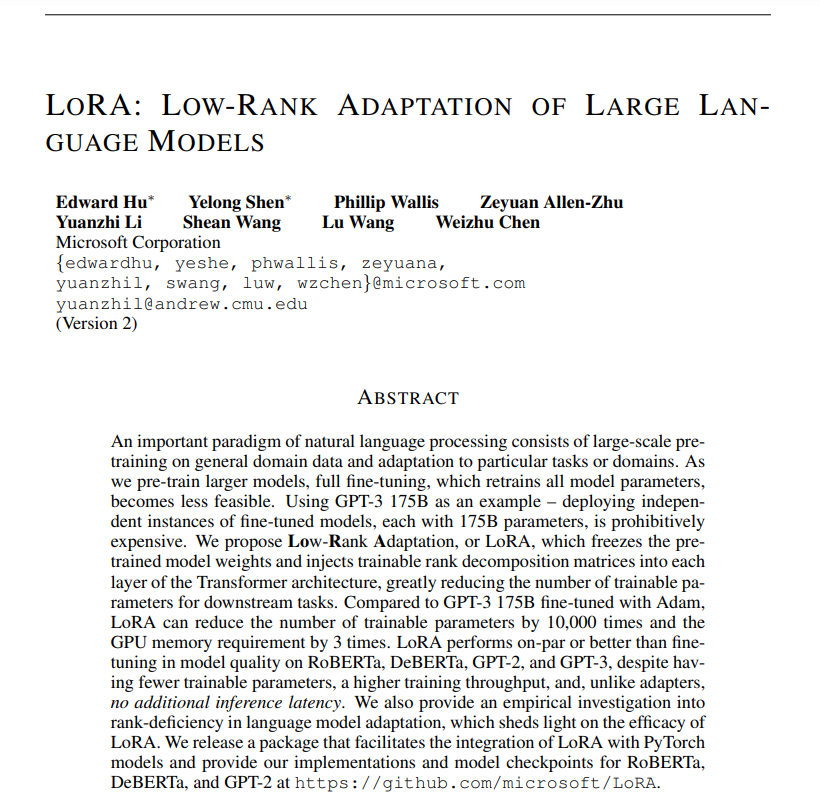

- LLM모델은 일반 도메인 데이터에 대한 대규모 pre-training과 특정 작업 또는 도메인에 대한 fine-tuning으로 이뤄짐

- ex) GPT-3 175B를 사용하면 각각 175B 매개변수가 있는 미세 조정 모델의 독립적인 인스턴스를 배포하는 데 비용이 엄청나게 많이 듭니다.

- 사전 학습 된 모델 가중치 를 동결하고 학습 가능한 순위 분해 행렬을 Transformer 아키텍처의 각 계층에 주입하여 다운스트림 작업에 대해 학습 가능한 매개변수 수를 크게 줄이는 LoRA(Low-Rank A 적응)를 제안

- LoRA는 훈련 가능한 매개변수 수를 10,000배, GPU 메모리 요구 사항을 3배 줄일 수 있었다. 또한 성능 면에서도 RoBERTa, DeBERTa, GPT-2 및 GPT-3의 모델 품질에서 미세 조정보다 동등하거나 더 나은 성능을 발휘함.

1. Introduction

🤔 LLM은 기본적으로 pre-trained model로부터 특정 task(e.g. summarization, question and answering, ...)에 adaptation하기 위해 fine-tuning을 해야 합니다. Fine-tuning을 하면서 LLM모델의 weight parameters를 모두 다시 학습하게 되는데 이때 엄청난 비용이 발생한다.

(예를 들어 GPT-2(or 3), RoBERTa large모델의 경우 fine-tuning만 몇 달이 걸린다.)

❗ 이를 해결하기 위해 해당 논문에서는 Low-Rank Adaptation(LoRA)를 제안

LoRA의 overview 이미지이다.

이 이미지에서 볼 수 있는 것처럼, 기존의 weights 대신 새로운 파라미터를 이용해서 동일한 성능과 더 적은 파라미터로 튜닝할 수 있는 방법론을 제시하고 있다.

LoRA는 Low-Rank 방법을 이용하여 time, resource cost를 줄이게 된다.

(🔻간단한 설명)

위 Figure 1과 같이 fine-tuning시에 pre-trained weights W는 frozen해두고 low rank decomposition된 weights A, B만 학습하고 W에 summation하게 된다. Low rank로 decomposition된 weights는 당연하게도 기존 W보다 훨씬 작은 크기의 weight이기 때문에 time, resource cost를 줄일 수 있게 된다.

또한 pre-trained model을 가지고 있는 상태에서 특정 task에 adaptation하기 위해서 A와 B만 storage에 저장하면 되고 다른 task에 adaptation하기 위해 또 다른 A', B'만 갈아 끼우면 되기 때문에 storage, task switching면에서 매우 효율적이다. 추가적으로 inference시에도 fine-tuned model의 latency성능이 낮아지지도 않는다.

💡Low-Rank 방법을 사용하는 이유?

📑"Measuring the Intrinsic Dimension of Objective Landscapes"논문, 📑"Intrinsic Dimensionality Explains the Effectiveness of Language Model Fine-Tuning"논문에서

❗ over-parameterized model은 low intrinsic dimension으로 존재하고 있다❗

-> model adaptation동안의 weight change에도 low intrinsic rank를 가질 거라고 가정하게 되고 Low-Rank 방법을 사용

LoRA는 기존 pre-trained weights는 frozen해두고 몇 개의 dense(fc) layers만 학습하는 것인데 이때 학습방법이 dense layer의 weight을 low rank로 decomposition한 matrices만을 optimization하는 것!

✅ Terminologies and Conventions

2. Problem Statement

✅ LoRA는 training objective와 상관없이 모두 사용 가능(Agnostic)하지만, LLM에서의 예시로 LoRA를 설명한다.

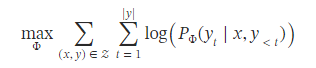

-

기존의 LLM모델(GPT)의 확률함수를 로 정의한다. (y와 x는 context-target pair쌍이라고 생각하면 편함. 는 GPT같은 multi-task learner 모델의 확률함수이다.)

-

그리고 fine-tuning과정에서 LLM이 튜닝되는 Φ가 최적화 되는 식은 아래 식처럼 표현 될 수 있다.

- : pre-trained weights

- 기존의 full fine-tuning model은 pre-trained model weights 로 initialized 되고, 위의 Log-likelihood function을 최대하하고자 을 update 한다.

- Log-likelihood function으로 문제를 해결할 때 가장 적합한 파라미터 Φ의 나올 확률을 최대화 하는 것이라고 생각하면 된다.

- 직관적으로 backpropagation할 때의 모델을 나타내면, 이렇게 된다.

🤔 full fine-tuning을 한다면?

: "각" downstream task를 위해 dimension 과 같은 크기의 을 매번 재학습 해야한다.

=> GPT 같은 LLM 모델이라면, 엄청난 양의 비용이 든다!

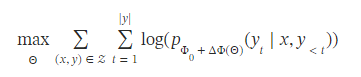

=> 이를 해결하고자 LoRA는 update행 하는 parameter를 로 치환하여 훨씬 작은 size의 parameter 로 대체 학습하는 것이다! (accmulated gradient values )

- 로 치환하여 목적함수가 아래 수식처럼 정의되게 됨.

- 즉 기존의 log-likelihood 문제에서 모델이 backpropagation 과정에서 이용되는 파라미터 연산문제를 더 적은 파라미터 Θ로 치환하여 풀겠다는 의미이다.

- << 이기에, 최적의 를 찾는 task는 를 optimization하는 것으로 대체된다.

3. Aren’t Existing Solutions Good Enough?

우리가 해결하려고 하는 문제는 결코 새로운 것이 아니다.

transformer learning이 시작된 이래로 수십 개의 작업이 model adaptation에서 매개변수 및 계산 효율성을 높이기 위해 노력해왔다.

예를 들어 LM 에서 사용하면 효율적인 adaptation 과 관련하여 두 가지 중요한 전략이 있다.

1) Adapter Layer 추가

2) input layer activation의 일부 형태를 최적화

=> 두 전략 모두 제한 사항이 있으며, 특히 대규모 및 대기 시간에 민감한 프로덕션 시나리오에서는 더욱 그렇다.

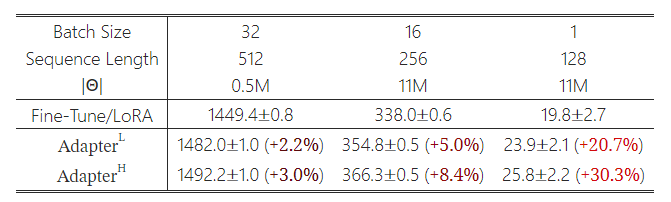

Adapter Layers Introduce Inference Latency

Adapter Layer는 추론 지연 시간을 발생시킨다. 대규모 신경망은 대기 시간을 낮게 유지하기 위해 하드웨어 병렬성에 의존하며 어댑터 계층은 순차적으로 처리되어야 한다.

아래 표에서 단일 GPU를 사용하는 경우 병목 현상 크기가 매우 작은 경우에도 어댑터를 사용할 때 지연 시간이 눈에 띄게 증가하는 것을 확인할 수 있다.

Directly Optimizing the Prompt is Hard

프롬프트를 직접 최적화하는 것은 어렵다.

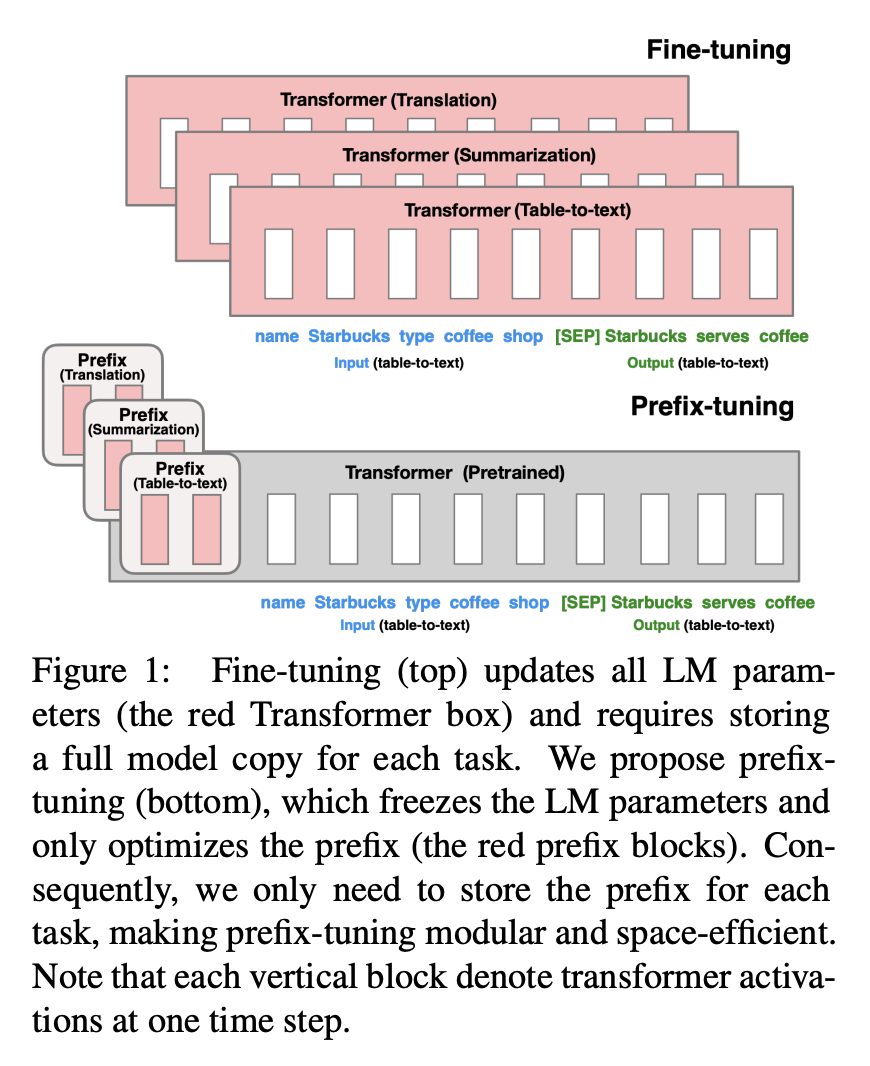

대표적으로 Prefix tuning 방식이 있다.

🤔 Prefix tuning

: 연속적인 태스크 특화 벡터(continuous task-specific vector = prefix)를 활용해 언어모델을 최적화하는 방법

- 언어모델의 파라미터는 고정한 상태(=frozen)

- continuous vector/virtual tokens을 사용한다는 점에서 자연어(discrete tokens)를 사용하는 접근방법과 구분됨

- 하나의 언어모델로 여러 개의 Task를 처리할 수 있음 (prefix를 학습)

- 연속적인 단어 임베딩으로 구성된 설명(instruction)을 최적화함으로써, 모든 Transformer activation과 이후에 등장하는 연속적인 토큰들에 영향을 줌

- 모든 레이어의 prefix를 최적화

우리는 Prefix tuning이 최적화하기 어렵고 훈련 가능한 매개변수에서 성능이 단조롭지 않게 변경된다는 것을 관찰하여 원본 논문에서 유사한 관찰을 확인했다.

❗ Adaptation을 위해 시퀀스 길이의 일부를 예약하면 다운스트림 작업을 처리하는 데 사용할 수 있는 시퀀스 길이가 필연적으로 줄어들고, 이로 인해 다른 방법에 비해 프롬프트 조정 성능이 떨어지는 것으로 의심된다.

4. Our Method

4.1. Low-Rank-Parametrized Update Matrices

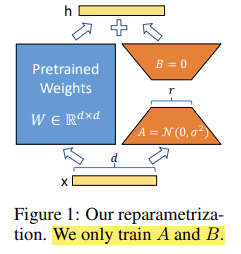

✅ LoRA는 adaptation 동안에 low intrinsic rank를 가진 weight로 update하는 방법!

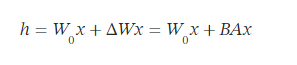

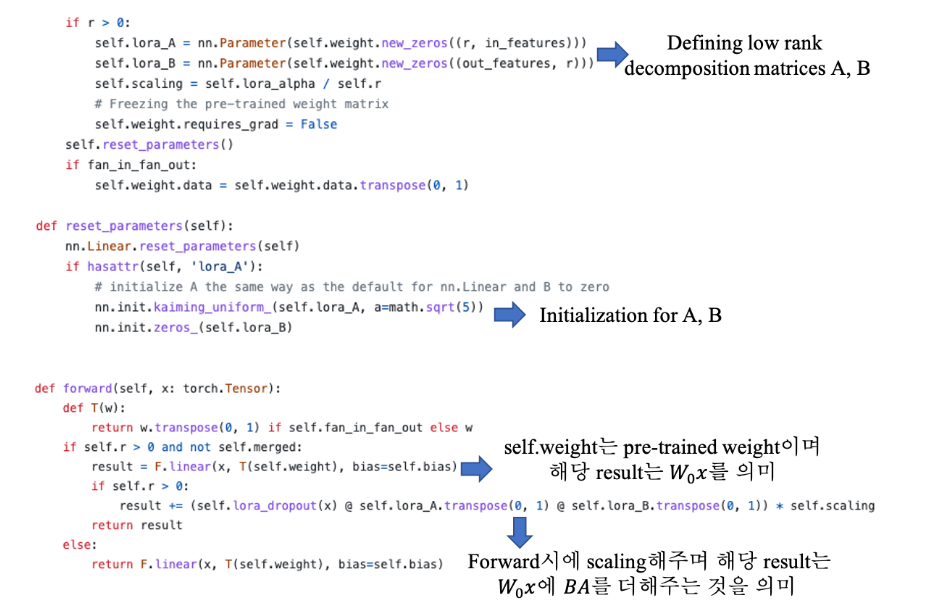

- 수학적으로 pre-trained weight matrix 에 대해 로 update하는 것!

- 즉, 은 frozen되고 low rank로 decomposition된 와 만을 학습하는 것 (을 만족함)

- 와 는 같은 input에 곱해지고 그들의 output vector는 coordinate-wise하게 합(summation)한다. forward pass를 표현하면 다음과 같다.

- A는 random Gaussian initialization되고 B는 0으로 initialization된다. (training 시작 시에 AW = BA또한 0)

- 는 으로 scaling된다.

- Adam으로 optimization 할 때 를 tuning하는 것은 learning rate를 tuning하는 것과 같이 하였다. 그래서 을 처음 값으로 정하였다. Scaling은 r값을 변화시킬때 hyperparameter 를 재조정할 필요를 줄이는 데 도움이 된다.

- 훈련과정에서 W0는 gradient update를 하지 않고, 오히려 BA를 학습하는 과정으로 이루어진다.

아래의 위의 내용을 실제로 구현한 LoRA 공식 github속 구현 코드이다.

A Generalization of Full Fine-tuning.

- LoRA는 보다 일반적인 형태의 fine-tuning을 통해 pre-trained parameters의 하위 집합을 훈련할 수 있다.

- LoRA는 한 단계 더 나아가 adaptation 중에 full-rank를 갖기 위해 가중치 행렬에 대한 accumulated gradient update가 필요하지 않다.

- 즉, 훈련 가능한 매개변수의 수를 늘리면 LoRA 훈련은 대략 원본 모델 훈련으로 수렴하는 반면, adapter 기반 방법은 MLP로 수렴하고 prefix 기반 방법은 긴 입력 시퀀스를 사용할 수 없는 모델로 수렴한다.

No Additional Inference Latency.

- LoRA는 추가 추론 지연 시간이 없다.

- LoRA를 사용하여 inference하려고 할 때는 기존 pre-trained weight 에 학습한 를 더해주고 사용하면 되기 때문에 infernece latency 성능 하락은 전혀 없다.

- 을 기반으로 또 다른 task로 학습한 B'A'가 있을 경우 BA을 빼주고

B'A'을 더해주어 사용하면 되기 때문에 reusability이 좋다.

4.2. Applying LoRA to Transformer

✅ 논문에서는 trainable weight를 최소화하기위해 LoRA를 모든 layer 및 module에 적용하지않았다.

❗ 오직 LoRA를 Transformer의 attention weights인 또는 , 에만 적용하였고 나머지 MLP module에는 적용하지 않았다.

(실제 성능 실험에서는 Wg와 Wu 에만 LoRA를 적용하였다.)

이렇게 셋팅하고 진행함으로써 1,750억개의 parameter를 가진 GPT-3에 대해 fine-tuning시에 원래 VRAM를 1.2TB사용하던 것이 LoRA를 통해 350GB로 줄어들었다.

또한 training speed또한 25%가량 줄었다.

5. Empirical Experiments

5.1. Baselines

- Fine-Tuning (FT) : fine-tuning 중에 모델은 pre-trained weights와 bias로 초기화되고 모든 모델 parameters는 기울기 updater를 거친다

- Bias-only or BitFit : 다른 모든 것을 frozen하면서 bias vector 만 훈련

- Prefix-embedding tuning (PreEmbed) : 입력 토큰 사이에 특수 토큰을 삽입, (특수 토큰에는 훈련 가능한 단어 임베딩이 있으며 일반적으로 모델의 어휘에는 없음)

- Prefix-layer tuning (PreLayer) : prefix-embedding tuning의 연장, 일부 특수 토큰에 대해 임베딩이라는 단어(또는 임베딩 레이어 이후의 활성화)를 학습하는 대신 모든 Transformer 레이어 이후의 활성화를 학습.

- Adapter tuning : self-attention 모듈(및 MLP 모듈)과 후속 잔여 연결 사이에 어댑터 레이어를 삽입

- LoRA : 기존 가중치 행렬과 병렬로 훈련 가능한 순위 분해 행렬 쌍을 추가, , 에만 적용

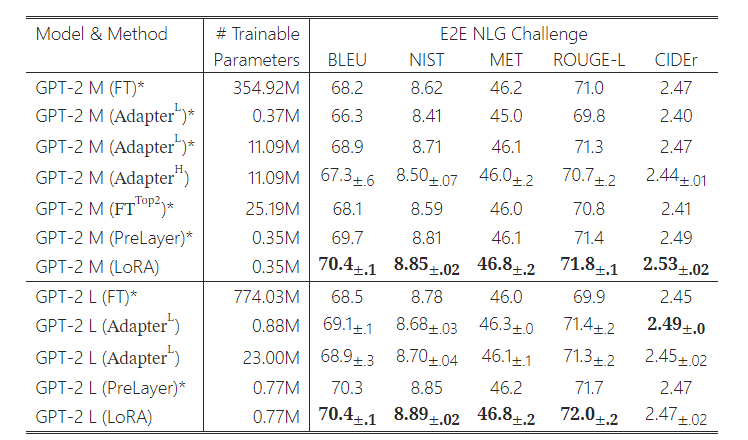

5.4. GPT-2 medium/large

- E2E NLG Challenge에서 앞서 정의한 다양한 adaptation 방법을 사용하는 GPT-2 medium(M) 및 large(L)모델링 결과에 대해서 모든 지표에 대해 높을수록 좋다.

- 위의 표를 보면, LoRA는 훈련 가능한 매개변수가 비슷하거나 더 적은 여러 기준선보다 성능이 뛰어납니다.

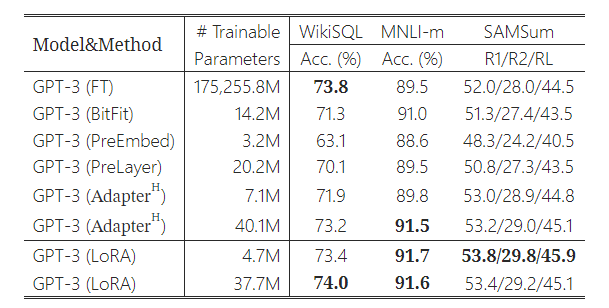

- GPT-3 175B에서 다양한 적응 방법의 성능을 위의 표에서 볼 수 있다.

- WikiSQL의 논리 형식 유효성 검사 정확도, MultiNLI 일치의 유효성 검사 정확도, SAMSum의 Rouge-1/2/L을 확인할 수 있다.

- LoRA는 일반적인 Fine-tuning(FT)를 포함하여 이전 접근 방식보다 더 나은 성능을 발휘했다.

5.5. Scaling up to GPT-3 175B

- LoRA의 최종 스트레스 테스트로 1,750억 개의 매개변수를 갖춘 GPT-3까지 확장하였다.

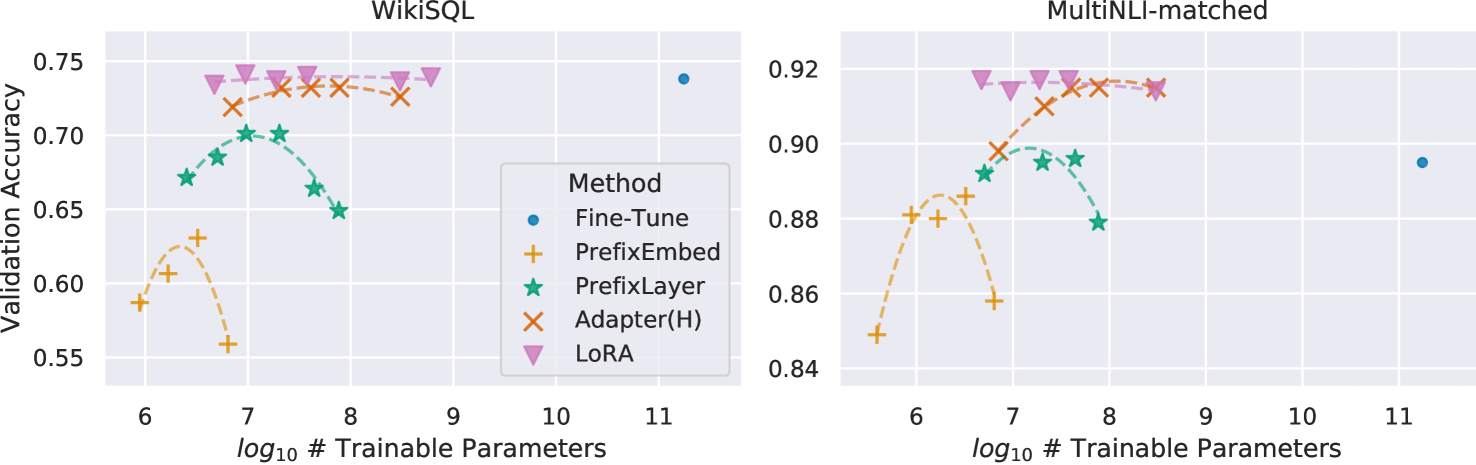

- 아래 그림을 보면, LoRA는 세 가지 데이터 세트 모두에서 fine-tuning 기준선과 일치하거나 초과한다.

- LoRA를 이용하였을 때 해당 fields에서 SOTA를 달성할 뿐만 아니라, GPT-3의 경우 175B의 파라미터 가운데 0.01%의 파라미터 개수만 이용할 정도로 효율성이 좋다.

- 모든 방법이 더 많은 trainable parameters를 갖는다고 해서 이점을 얻는 것은 아니다.

- 접prefix-embedding tuning에 256개 이상의 특수 토큰을 사용하거나 prefix-layer tuning에 32개 이상의 특수 토큰을 사용하면 성능이 크게 저하되는 것을 관찰했다.

6. Related Works

- Transformer Language Models

- Prompt Engineering and Fine-Tuning

- Parameter-Efficient Adaptation

- Low-Rank Structures in Deep Learning

7. Understanding the Low-Rank Updates

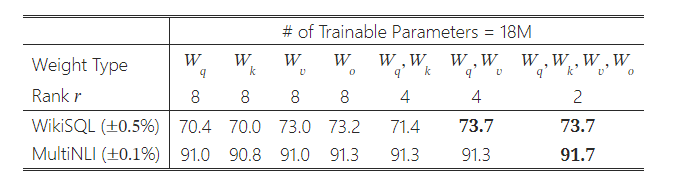

7.1. Which Weight Matrices in Transformer Should We Apply LoRA to?

- , 에 모두 LoRA를 적용하는게 가장 best performance를 낸다.

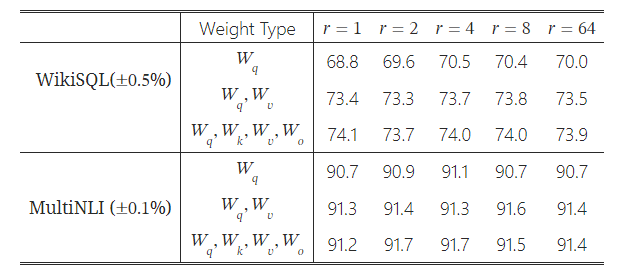

7.2. What is the Optimal Rank for LoRA?

- LoRA를 , 에 모두 적용할 때는 r=1일 때 꽤 좋은 성능이 나온다는 것을 볼 수 있다.

- 에만 LoRA를 적용할 경우 좀 더 큰 r이 낫다는 것을 위의 실험 결과에서 볼 수 있다.

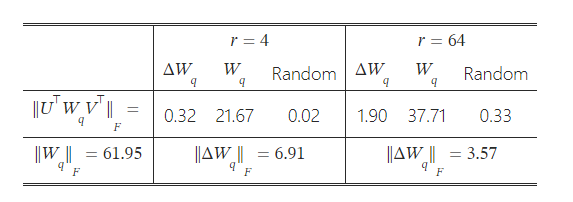

7.3How Does the Adaptation Matrix Compare to ?

- 는 와 높은 상관관계 있다. (compared to a random matrix), 는 에 이미 있는 일부 feature을 증폭시킨 것이다.

- 의 상단 단방향을 반복하는 대신, 는 에서 강조되지 않은 방향만 증폭시킨다.

- 증폭 인자는 다소 크다 (r=4의 경우 21.5 ≒ 6.91/0.32)

8. Conclusion and Future Work

- 거대한 언어 모델을 미세 조정하는 것은 필요한 하드웨어와 다양한 작업을 위한 독립적인 인스턴스를 호스팅하기 위한 저장/전환 비용 측면에서 엄청나게 비싸다.

- 우리는 높은 모델 품질을 유지하면서 추론 대기 시간을 도입하거나 입력 시퀀스 길이를 줄이지 않는 효율적인 적응 전략인 LoRA를 제안한다.

- 💡 중요한 점은 대부분의 모델 매개변수를 공유하여 서비스로 배포할 때 빠른 작업 전환이 가능하다는 것이다.

- Transformer 언어 모델에 중점을 두었지만 제안된 원리는 일반적으로 밀도가 높은 계층이 있는 모든 신경망에 적용 가능하다.

🤔 Future Work

1) LoRA는 다른 효율적인 적응 방법과 결합되어 잠재적으로 직교 개선을 제공할 수 있다.

2) 미세 조정 또는 LoRA의 메커니즘은 명확하지 않다. 사전 훈련 중에 학습된 기능이 다운스트림 작업에서 잘 수행되도록 어떻게 변환되나?

3) LoRA를 적용할 가중치 행렬을 선택하기 위해 주로 휴리스틱에 의존한다. 더 원칙적인 방법이 있나?

🔖 Reference

LoRA 논문

LoRA 논문 설명1

LoRA 논문 설명2

prefix-tuning