Reference

📄 A Simple Introduction to Sequence to Sequence Models

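While studying seq to seq, I could not get a good grasp of the architecture, so I found the post above and am using it as the basis for these study notes.

If you spot any errors, please let me know in the comments.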

Overview

Sequence to sequence models are used in areas such as machine translation, video captioning, image captioning, and question answering.

To follow the concept, you need a solid understanding of neural networks, and it helps in particular to be familiar with RNN (recurrent neural network), LSTM (long short-term memory), and GRU models!

Oddly enough, I had a hard time making progress on the topics that come after RNN, LSTM, and GRU, such as seq to seq, attention, and transformer, but I hope each of you keeps looking for your own breakthrough without giving up.

Use Cases

As mentioned above, sequence to sequence models are behind many of the systems we use every day.

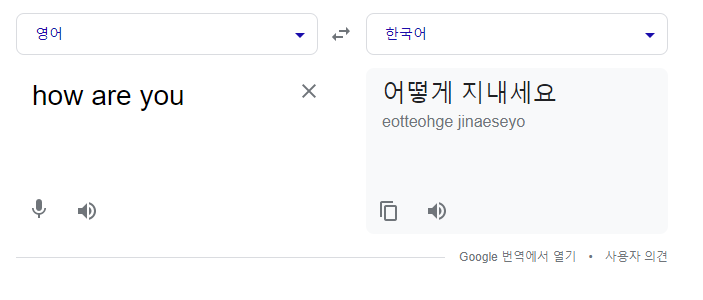

For example, seq2seq models power applications such as Google Translate, voice-enabled devices, and online chatbots.

Applications include the following, among others:

- Machine translation

- Speech recognition

seq2seq (sequence to sequence) models are a solution for sequence-based problems, in particular those where the input and output differ in size or category.

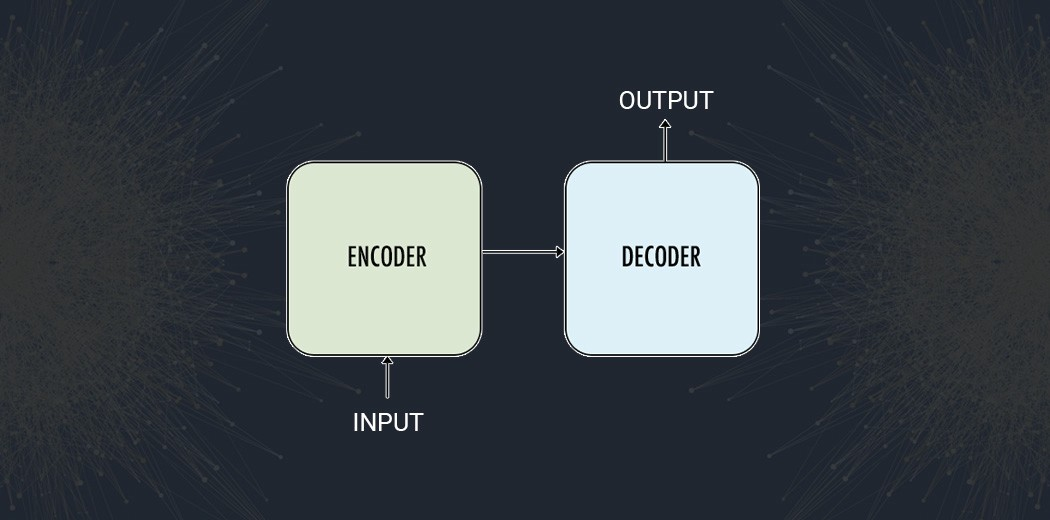

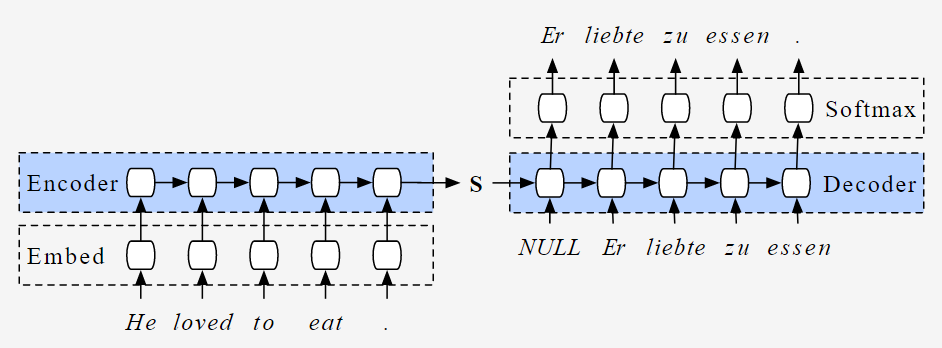

encoder-decoder architecture

seq2seq has two components: an encoder and a decoder.

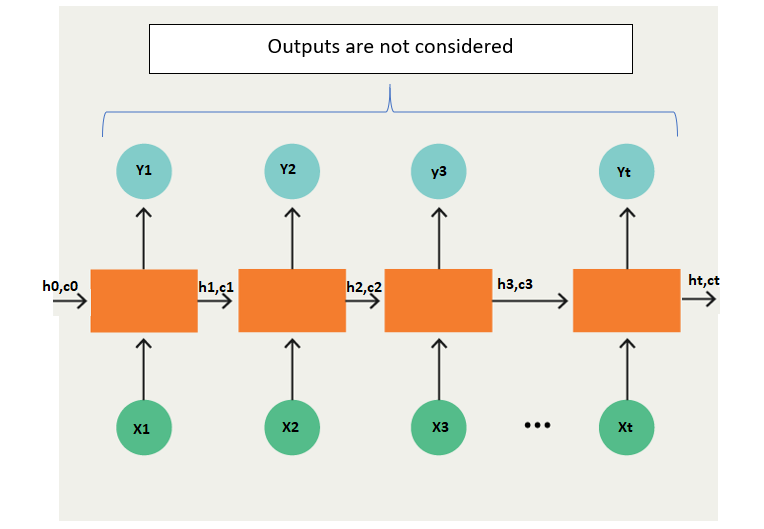

encoder

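- Both the encoder and the decoder are LSTM models.

- The encoder reads the input sequence and summarizes the information into internal state vectors, also called the context vector (for an LSTM, these are the hidden state and cell state vectors).

- The encoder's outputs are discarded; only the internal states are kept.

- The context vector aims to compress information about all input elements so that the decoder can make accurate predictions.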
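The encoder behaviour described in the list above can be sketched in plain Python. This is a minimal sketch, not the referenced post's implementation: the function names (`lstm_step`, `init_params`, `encode`), the random weight initialization, and the use of the standard LSTM gate equations are all assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, v, b):
    # W is a list of rows; returns W @ v + b as a plain list.
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def init_params(input_size, hidden_size, seed=0):
    # Hypothetical helper: small random weights, zero biases, one set per gate.
    rng = random.Random(seed)
    params = {}
    for gate in "ifog":
        params["W" + gate] = [
            [rng.uniform(-0.5, 0.5) for _ in range(input_size + hidden_size)]
            for _ in range(hidden_size)
        ]
        params["b" + gate] = [0.0] * hidden_size
    return params

def lstm_step(x, h, c, params):
    # One LSTM time step using the standard gate equations (assumed here).
    z = x + h  # concatenate the input with the previous hidden state
    i = [sigmoid(a) for a in affine(params["Wi"], z, params["bi"])]    # input gate
    f = [sigmoid(a) for a in affine(params["Wf"], z, params["bf"])]    # forget gate
    o = [sigmoid(a) for a in affine(params["Wo"], z, params["bo"])]    # output gate
    g = [math.tanh(a) for a in affine(params["Wg"], z, params["bg"])]  # candidate
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new

def encode(inputs, params, hidden_size):
    # Read the sequence one time step at a time, starting from zero states.
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in inputs:
        h, c = lstm_step(x, h, c, params)
    # Per-step outputs are not collected; only the final (h, c) pair is returned.
    return h, c
```

Calling `encode` on sequences of different lengths returns state vectors of the same size, which is what lets the decoder start from a fixed-size summary.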

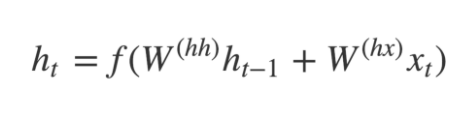

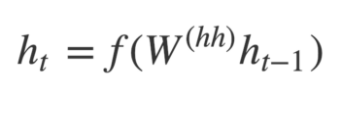

- The hidden state is computed with the following formula.
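A common way to write this recurrence for a plain RNN cell is sketched below. The symbols $W^{(hh)}$, $W^{(hx)}$, and $f$ are assumed notation for the recurrent weights, the input weights, and the activation function; an LSTM replaces this single update with gated updates of both the hidden state and the cell state.

```latex
h_t = f\left(W^{(hh)} h_{t-1} + W^{(hx)} x_t\right)
```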

An LSTM reads the data in order.

For an input sequence of length 't', the LSTM reads it in 't' time steps.

- x_i: the input sequence at time step i

- h_i and c_i: the LSTM maintains two states at each time step ('h' for the hidden state and 'c' for the cell state).

- y_i: the output sequence at time step i. y_i is actually a probability distribution over the entire vocabulary, generated with a softmax activation. Each y_i is therefore a vector of size 'vocab_size' representing a probability distribution.
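To make the last point concrete, here is a small sketch of how softmax turns raw scores into a probability distribution of size 'vocab_size'. The toy vocabulary and the score values are made up for illustration.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability; the result is unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary, so vocab_size is 4 here.
vocab = ["START_", "John", "is", "_END"]
scores = [0.1, 2.0, 0.5, -1.0]  # made-up unnormalized scores for one time step

y_i = softmax(scores)  # one probability per vocabulary entry

# The predicted token is the vocabulary entry with the highest probability.
predicted = vocab[max(range(len(vocab)), key=lambda k: y_i[k])]
```

The entries of `y_i` are positive and sum to 1, and the vector has exactly one entry per vocabulary item.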

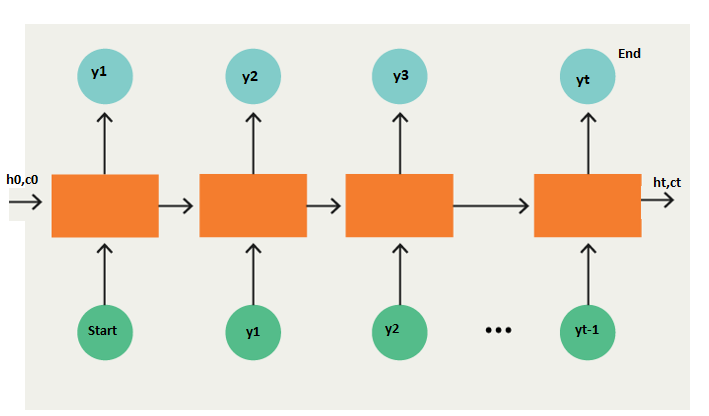

decoder

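- The decoder is an LSTM whose initial states are initialized to the final states of the encoder LSTM.

- In other words, the context vector from the encoder's final cell is fed into the first cell of the decoder network.

- Starting from these initial states, the decoder begins generating the output sequence, and each output is also taken into account for future outputs.

- It is a stack of several LSTM units, each of which predicts an output y_t at time step t.

- Each recurrent unit accepts the hidden state of the previous unit, then produces an output as well as its own hidden state.

Every hidden state h_t is computed with the following formula.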

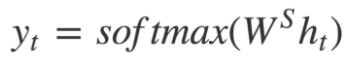

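The output y_t at time step t is computed with the following formula.

The output is computed from the hidden state at the current time step together with its corresponding weight matrix.

Softmax is used to create a probability vector that helps determine the final output (e.g. the word in a question-answering problem).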
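Written out, the output step described above takes roughly the following form. The name $W^{(S)}$ for the output weight matrix is assumed notation, not something stated in the text.

```latex
y_t = \mathrm{softmax}\left(W^{(S)} h_t\right)
```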

As in the image above, two tokens are added to the output sequence: one marking the start and one marking the end.

Example

The post linked in the Reference section gives the following example sentence.

“START_ John is hard working _END”.
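The sketch below shows one way to build this token sequence. The helper name `add_boundary_tokens` is hypothetical, and the input/target split at the end reflects a common training setup (often called teacher forcing) that is assumed here rather than taken from the text.

```python
START, END = "START_", "_END"

def add_boundary_tokens(sentence):
    # Wrap the whitespace-tokenized sentence with the start and end markers.
    return [START] + sentence.split() + [END]

tokens = add_boundary_tokens("John is hard working")

# Assumed training setup: the decoder reads tokens[:-1] as its inputs
# and is trained to predict tokens[1:], i.e. the same sequence shifted by one.
decoder_inputs = tokens[:-1]
decoder_targets = tokens[1:]
```

With this split, the first decoder input is the start marker and the last target is the end marker.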

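The most important point is that the decoder's initial states (h_0, c_0) are set to the encoder's final states.

This means the decoder is trained to start generating the output sequence based on the information encoded by the encoder.

Finally, the loss is computed on the predicted output at each time step, and the errors are backpropagated through time to update the network's parameters.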
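As a rough illustration of the per-time-step loss, the sketch below averages a cross-entropy loss over the time steps. Using cross-entropy is an assumption (the text only says "loss"), the probabilities are made up, and the backpropagation step itself is not shown.

```python
import math

def sequence_cross_entropy(predicted_dists, target_ids):
    # predicted_dists[t] is the probability distribution over the vocabulary at step t;
    # target_ids[t] is the index of the correct token at step t.
    losses = [-math.log(dist[target]) for dist, target in zip(predicted_dists, target_ids)]
    return sum(losses) / len(losses)  # average the per-step losses

# Made-up predictions for a 3-step sequence over a 3-word vocabulary.
predicted = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.2, 0.2, 0.6],
]
targets = [0, 1, 2]

loss = sequence_cross_entropy(predicted, targets)
```

The loss shrinks toward zero as the probability assigned to each correct token approaches 1.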

Training the network for longer on a sufficiently large amount of data leads to better predictions.

Overall Encoder-Decoder Architecture

- During inference, one word is generated at a time.

- The decoder's initial states are set to the encoder's final states.

- The initial input is always the START token.

- At each time step, the decoder's states are kept and used as the initial states for the next time step.

- At each time step, the predicted output is fed as the input to the next time step.

- The loop ends when the decoder predicts the END token.
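The inference steps listed above can be sketched as a simple greedy loop. This is a minimal sketch under stated assumptions: `greedy_decode`, `toy_step`, and `TOY_TABLE` are hypothetical names, the lookup table stands in for a trained decoder, and the `max_len` cap is an added safeguard that the text does not mention.

```python
def greedy_decode(step_fn, initial_state, start_token, end_token, max_len=20):
    # The first input is always the start token; the state comes from the encoder.
    token, state = start_token, initial_state
    output = []
    for _ in range(max_len):  # guard against a decoder that never emits END
        probs, state = step_fn(token, state)  # the state carries over to the next step
        token = max(probs, key=probs.get)     # pick the most likely next token
        if token == end_token:
            break
        output.append(token)                  # this token is also the next input
    return output

# Hypothetical stand-in for a trained decoder: a table of next-token probabilities.
TOY_TABLE = {
    "START_": {"John": 0.9, "is": 0.05, "_END": 0.05},
    "John": {"is": 0.8, "hard": 0.1, "_END": 0.1},
    "is": {"hard": 0.7, "working": 0.2, "_END": 0.1},
    "hard": {"working": 0.9, "_END": 0.1},
    "working": {"_END": 0.95, "John": 0.05},
}

def toy_step(token, state):
    # A real decoder would update its LSTM states here; this toy just counts steps.
    return TOY_TABLE[token], state + 1

result = greedy_decode(toy_step, initial_state=0, start_token="START_", end_token="_END")
```

Picking the single most likely token at each step is the simplest choice; other strategies exist but are outside the scope of these notes.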

Drawbacks of Encoder-Decoder Models

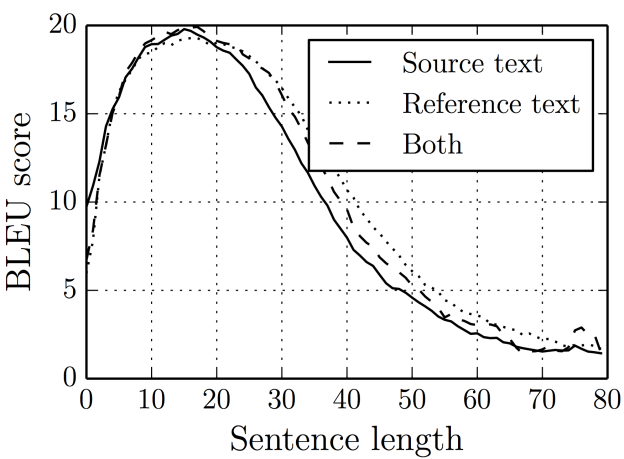

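- Memory is very limited.

The last hidden state of the LSTM, called S or W here, is the part that tries to cram in the entire sentence to be translated.

S or W is typically only a few hundred units long, and the more you try to force into this fixed-dimensional vector, the lossier the neural network is forced to be.

It is said that thinking of neural networks in terms of the lossy compression they have to perform can be quite useful.

- In general, the deeper a neural network is, the harder it is to train. For recurrent neural networks, the longer the sequence, the deeper the network becomes along the time dimension.

This causes the gradient of the objective the RNN learns from to vanish during the backward pass.

Even with RNNs specifically designed to prevent vanishing gradients, such as LSTM, this remains a fundamental problem.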
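The vanishing effect can be seen even in a toy scalar RNN. The sketch below assumes a simplified recurrence h_t = tanh(w * h_{t-1}) with no input term (a plain RNN, not an LSTM) and made-up values for w and h_0; it multiplies the per-step derivatives together, as the chain rule does during the backward pass.

```python
import math

def gradient_through_time(w, h0, steps):
    # Scalar RNN h_t = tanh(w * h_{t-1}); returns d h_steps / d h_0 via the chain rule.
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)  # derivative of tanh(w * h_prev) w.r.t. h_prev
    return grad

grad_short = gradient_through_time(w=0.9, h0=0.5, steps=5)
grad_long = gradient_through_time(w=0.9, h0=0.5, steps=50)
```

Because every factor in the product is below 1 in this setup, `grad_long` is far smaller than `grad_short`, which is the sense in which longer sequences make the network deeper and harder to train.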

In addition, models such as Attention Models and the Transformer exist to handle long sentences more robustly.

That wraps up our look at the encoder and decoder of seq2seq.