Feature Pyramid Network Paper Review - Enhancing Object Detection with Feature Pyramids

Summary

The Feature Pyramid Network (FPN) is designed to enhance multi-scale object detection by leveraging both high-level semantic features and low-level spatial details. Unlike previous approaches that relied on image pyramids, which are computationally expensive, FPN constructs a feature hierarchy within a deep CNN using a top-down pathway with lateral connections. This enables accurate detection across a wide range of object sizes while keeping computational costs low.

Introduction

Recognizing Objects at Different Scales: A Fundamental Challenge

One of the most significant challenges in computer vision is detecting objects at vastly different scales within an image. Objects can appear at various sizes due to their distance from the camera, perspective transformations, and variations in image resolution. Traditional object detection models struggle to accurately recognize both small and large objects using a single feature representation.

The Role of Feature Pyramids in Scale-Invariant Object Detection

To address this challenge, feature pyramids have been widely adopted in computer vision tasks.

Image pyramids are a classical approach: the input image is resized to several scales, and a detector scans every position at every pyramid level. A change in an object's scale is offset by moving to a different pyramid level, so features are extracted in a scale-invariant way and detectors become more robust to size variations.

However, image pyramids have significant computational drawbacks:

- High computational cost: Constructing explicit image pyramids requires processing multiple resized versions of the same image, which increases inference time.

- Train-test inconsistency: Training deep networks end-to-end on image pyramids is usually infeasible because of memory, so pyramids tend to be used only at test time, which creates a mismatch between training and inference.
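To make the cost argument concrete, here is a rough back-of-the-envelope sketch. It assumes CNN compute grows roughly in proportion to the number of input pixels, and the list of scales is hypothetical, chosen only for illustration.

```python
def pyramid_cost_ratio(scales):
    """Total pixels processed over all pyramid levels, relative to scale 1.0.

    Pixel count grows with the square of the resize factor, and CNN compute
    is assumed to be roughly proportional to the number of input pixels.
    """
    return sum(s * s for s in scales)


# Hypothetical test-time scales, chosen only for illustration.
scales = [0.5, 1.0, 1.5, 2.0]
ratio = pyramid_cost_ratio(scales)
print(f"{len(scales)}-level image pyramid costs about {ratio:.2f}x a single pass")
```

Under these assumptions, the two upscaled levels dominate the total, which is why image pyramids are so expensive when small objects require enlarged inputs.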

Challenges with Low-Level Features in Object Detection

High-resolution feature maps typically capture low-level features such as edges and textures.

However, relying solely on low-level features harms a model's representational capacity for recognition: these features preserve spatial detail but lack high-level semantic understanding, which leads to poor classification of what is being detected.

Background

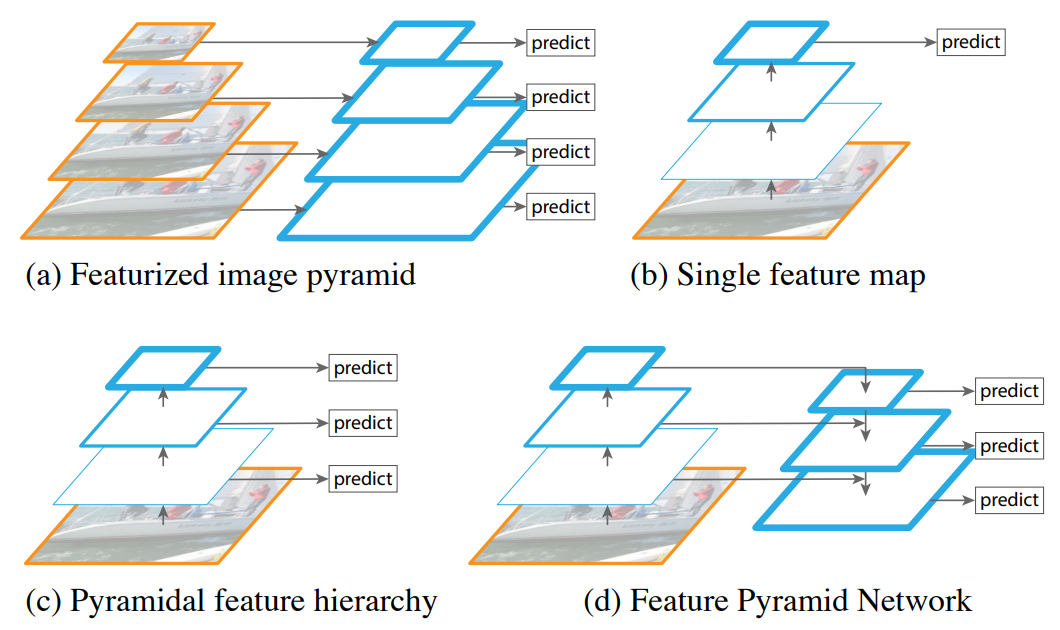

The Evolution of Multi-Scale Object Detection

1. Image Pyramids: The Classical Approach

- Early solutions relied on explicit image pyramids, which resized input images at multiple scales.

- While effective, this method was computationally expensive and impractical for deep learning models.

2. Early CNN-Based Detectors

- Early CNN detectors built on backbones such as AlexNet and VGG predicted from a single feature map.

- Limitation: A single feature scale cannot represent objects of widely varying sizes effectively.

3. The Shift to Deep Learning-Based Multi-Scale Feature Extraction

- Single Shot MultiBox Detector (SSD) predicts from multiple feature maps taken at different depths of the network.

- However, SSD builds its pyramid starting high up in the network (Conv4_3 of VGG) and adds new layers on top, so it never reuses the higher-resolution maps of the earlier layers. The FPN paper shows that these maps are important for detecting small objects.

4. The Emergence of Feature Pyramid Networks (FPN)

- FPN introduced a top-down pathway with lateral connections, addressing the limitations of SSD.

- This allowed higher-resolution feature maps to inherit high-level semantic information while preserving their spatial detail.

- FPN effectively merged the advantages of both image pyramids and deep CNN feature maps.

Feature Pyramid Network

FPN Architecture

FPN enhances multi-scale feature representation by integrating high-level semantic features with low-level spatial details through a feature hierarchy consisting of a top-down pathway and lateral connections.

FPN consists of two key architectural components:

- Bottom-up pathway

- Top-down pathway and lateral connections

1. Bottom-up Pathway

This follows the standard feedforward computation of a deep CNN (e.g., ResNet) to generate multi-resolution feature maps.

- The CNN backbone processes the input image through multiple layers, progressively reducing spatial resolution but increasing feature abstraction.

- Feature maps at different stages capture different levels of information:

- Shallow layers: High-resolution but low-level details (e.g., edges, textures).

- Deep layers: Low-resolution but high-level semantic meaning.

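In FPN, the output of the last residual block of each ResNet stage (C2, C3, C4, C5, with strides of 4, 8, 16, and 32 pixels relative to the input) is used to build the feature pyramid. Conv1 is left out because of its large memory footprint.

Standard object detection models (e.g., Faster R-CNN) typically predict from only C4 or C5, whereas FPN uses all of C2 through C5.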
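As a quick illustration of the bottom-up pathway, the sketch below computes the shape of each ResNet stage output for a given input size. It assumes ResNet-50/101 channel widths and padding that rounds spatial sizes up; `bottom_up_shapes` is a hypothetical helper, not part of any library.

```python
import math

# Strides of the ResNet stage outputs relative to the input image.
STRIDES = {"C2": 4, "C3": 8, "C4": 16, "C5": 32}
# Output channels of each stage in ResNet-50/101 (bottleneck blocks).
CHANNELS = {"C2": 256, "C3": 512, "C4": 1024, "C5": 2048}


def bottom_up_shapes(height, width):
    """Return {level: (channels, H, W)} for an input of the given size."""
    return {
        name: (CHANNELS[name], math.ceil(height / s), math.ceil(width / s))
        for name, s in STRIDES.items()
    }


for name, shape in bottom_up_shapes(224, 224).items():
    print(name, shape)
```

Each stage halves the spatial resolution of the previous one while the channel count doubles, which is the resolution-versus-semantics trade-off that the top-down pathway is designed to resolve.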

2. Top-down Pathway & Lateral Connections

The top-down pathway and lateral connections are what make FPN fundamentally different from conventional CNN-based object detection models.

CNNs naturally produce high-resolution feature maps in early layers and low-resolution, high-semantic feature maps in deeper layers.

(2-1) Top-down Pathway

- Starts from C5 (the coarsest, most semantic feature map) and progressively upsamples it by a factor of 2 using nearest-neighbor upsampling.

- Each upsampled feature map is then merged with the corresponding bottom-up feature map (C4, C3, C2) by element-wise addition.

- This process enhances the semantic richness of lower layers while maintaining their high resolution.

(2-2) Lateral Connections

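- Each bottom-up feature map (C2, C3, C4) is connected laterally to the upsampled feature map of the same spatial size from the top-down pathway.

- A 1×1 convolution is applied to each bottom-up map before merging so that all levels have the same number of channels (256 in the paper). C5 also passes through a 1×1 convolution to start the top-down pathway.

- A 3×3 convolution is applied to each merged map to reduce the aliasing effect of upsampling, producing the final feature maps (P2, P3, P4, P5).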

This hierarchical feature fusion enables small objects to retain high-resolution details while leveraging strong semantic context.
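The upsample-and-add step at the heart of the top-down pathway can be sketched in plain Python. This is a toy illustration, not the paper's implementation: it assumes single-channel maps that have already passed through their 1×1 lateral convolutions, it omits the final 3×3 smoothing convolution, and all function names are hypothetical.

```python
def upsample_nearest_2x(fmap):
    """Nearest-neighbor 2x upsampling of a 2-D map given as a list of rows."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column
        out.append(wide)
        out.append(list(wide))                     # repeat each row
    return out


def merge(top_down, lateral):
    """Element-wise sum of an upsampled coarse map and a lateral map."""
    up = upsample_nearest_2x(top_down)
    return [[u + l for u, l in zip(up_row, lat_row)]
            for up_row, lat_row in zip(up, lateral)]


def build_pyramid(laterals):
    """laterals: maps ordered coarse -> fine (e.g. from C5, C4, C3, C2)."""
    pyramid = [laterals[0]]  # the coarsest level starts the top-down pathway
    for lateral in laterals[1:]:
        pyramid.append(merge(pyramid[-1], lateral))
    return pyramid           # ordered coarse -> fine


# Toy single-channel inputs: 1x1, 2x2 and 4x4 maps.
c5 = [[10]]
c4 = [[1, 2], [3, 4]]
c3 = [[0] * 4 for _ in range(4)]
p5, p4, p3 = build_pyramid([c5, c4, c3])
print(p4)  # [[11, 12], [13, 14]]
```

Note how the value 10 from the coarsest map reaches every position of the finer maps: that is the mechanism by which semantic information propagates down to the high-resolution levels.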

Feature Pyramid Networks for RPN and Fast R-CNN

For a detailed explanation of the original Region Proposal Network (RPN) and Fast R-CNN, refer to my previous Fast R-CNN Paper Review.

This section focuses on how FPN enhances both components.

Applying FPN to RPN and Fast R-CNN

FPN improves both RPN (proposal generation) and Fast R-CNN (detection) by introducing multi-scale feature representations through a feature pyramid.

Instead of relying on a single feature level (e.g., C4 in ResNet), FPN distributes computation across multiple levels, ensuring better detection for small, medium, and large objects.

1. A Shared RPN Head on Every Pyramid Level

- The standard RPN slides a small head (a 3×3 convolution followed by two sibling 1×1 convolutions) over a single feature map.

- With FPN, a head of the same design is attached to each level of the feature pyramid, and its parameters are shared across all levels, ensuring consistency across scales.

- Conv1 is not part of the pyramid because of its large memory footprint, so the finest level the head sees is P2.

2. Anchor Assignment Across Feature Pyramid Levels

Rather than applying multi-scale anchors to a single feature map, FPN assigns fixed-scale anchors to different feature levels, effectively distributing object detection tasks across multiple scales.

| Feature Level | Anchor Area (pixels) |
|---|---|
| P2 | 32² |
| P3 | 64² |
| P4 | 128² |
| P5 | 256² |
| P6 | 512² |

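- Each feature level uses three aspect ratios (1:2, 1:1, 2:1), giving a total of 15 anchors across the pyramid (5 levels × 3 ratios).

- P6 is a stride-2 subsampling of P5, added only to cover the 512² anchor scale in the RPN.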
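The anchor layout above can be enumerated with a short sketch. It assumes the aspect ratio is defined as height divided by width (conventions differ between implementations) and that every anchor keeps the area assigned to its level. The helper name is hypothetical.

```python
import math

# Side length of the square (1:1) anchor on each pyramid level, in pixels.
ANCHOR_SIDES = {"P2": 32, "P3": 64, "P4": 128, "P5": 256, "P6": 512}
ASPECT_RATIOS = (0.5, 1.0, 2.0)  # assumed to mean height / width


def anchors_for_level(side):
    """Return (width, height) pairs with area side**2, one per aspect ratio."""
    return [(side / math.sqrt(r), side * math.sqrt(r)) for r in ASPECT_RATIOS]


anchors = {level: anchors_for_level(s) for level, s in ANCHOR_SIDES.items()}
total = sum(len(a) for a in anchors.values())
print(total)  # 15
for w, h in anchors["P4"]:
    print(f"{w:.1f} x {h:.1f}")
```

Because each level carries a single scale, the per-level head only has to score three anchors per location instead of all fifteen.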

Feature Pyramid-based RPN

The Region Proposal Network (RPN) in Faster R-CNN generates object proposals but originally operates on a single feature level (e.g., C4 in ResNet). This leads to poor recall for small objects.

Standard RPN Workflow

- Extract a feature map from a CNN backbone.

- Apply a 3×3 convolution to extract region-specific features.

- Use two sibling 1×1 convolutions:

- One for classification (object vs. background).

- One for bounding box regression.

- Predict multi-scale anchors on the same feature map.

Limitations of Standard RPN

- Fixed feature scale: The use of a single feature map makes it difficult to detect objects of varying sizes.

- Anchor scale mismatch: Since predefined anchors are applied to a single feature level, small objects may not activate deep feature maps effectively, leading to poor localization.

How FPN Enhances RPN

- Instead of a single feature map, RPN runs on each level of the feature pyramid (P2–P6).

- Objects of varying sizes are detected at their corresponding feature levels, thereby enhancing recall and localization accuracy.

- This improves small-object detection while adding only marginal computational cost.
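To get a feel for what running the RPN on every level means, the sketch below counts how many anchors are scored per level for a given input size. It assumes strides of 4 to 64 for P2 to P6, three anchors per location, and spatial sizes that round up. The function name is hypothetical.

```python
import math

LEVEL_STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32, "P6": 64}
ANCHORS_PER_LOCATION = 3  # one scale x three aspect ratios per level


def rpn_anchor_counts(height, width):
    """Number of anchors the RPN head scores on each pyramid level."""
    counts = {}
    for level, stride in LEVEL_STRIDES.items():
        locations = math.ceil(height / stride) * math.ceil(width / stride)
        counts[level] = locations * ANCHORS_PER_LOCATION
    return counts


counts = rpn_anchor_counts(1024, 1024)
for level, n in counts.items():
    print(level, n)
print("total", sum(counts.values()))
```

Most anchors sit on P2, where the dense grid of small anchors gives small objects many more chances to be matched than a single stride-16 map would.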

Feature Pyramid Networks for Fast R-CNN

The standard Fast R-CNN model in Faster R-CNN extracts RoIs from a single feature map (C4 in ResNet), leading to suboptimal feature extraction for objects of different sizes.

Limitations of Standard Fast R-CNN

- Fixed feature resolution: Using a single feature level reduces performance when detecting objects at varying scales.

- Suboptimal feature extraction: Small RoIs may not capture enough fine-grained details, leading to missed detections.

How FPN Enhances Fast R-CNN

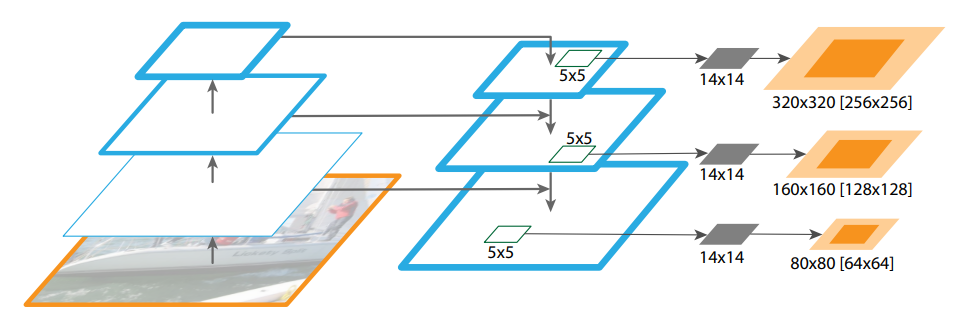

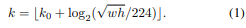

- FPN assigns RoIs to different feature levels based on their sizes, ensuring scale-aware feature extraction.

- Small RoIs are mapped to higher-resolution feature maps (P2, P3), while large RoIs are assigned to lower-resolution feature maps (P4, P5). P6 is used only by the RPN, not by the Fast R-CNN head.

RoI Assignment in FPN-Fast R-CNN

The feature level $P_k$ for each Region of Interest (RoI) is determined using:

$$k = \left\lfloor k_0 + \log_2\left(\frac{\sqrt{wh}}{224}\right) \right\rfloor$$

- $k_0$: the level that an RoI of size 224 × 224 (the canonical ImageNet pre-training size) is mapped to. The paper sets $k_0 = 4$.

- $w$, $h$: width and height of the RoI on the input image.

For example, an RoI with half the canonical side length (112 × 112) gives $k = 3$, so its features are pooled from the finer-resolution level P3. Small RoIs therefore use higher-resolution features and large RoIs use lower-resolution features, improving overall detection performance.
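The level-assignment rule from the FPN paper, k = ⌊k0 + log2(√(wh) / 224)⌋ with k0 = 4, can be sketched in a few lines. Clamping the result to the range P2 to P5 is an assumption added here so that extreme RoI sizes still map to an existing level. The function and parameter names are hypothetical.

```python
import math


def roi_to_level(w, h, k0=4, canonical=224, k_min=2, k_max=5):
    """Pyramid level index k for an RoI of width w and height h (in pixels).

    Implements k = floor(k0 + log2(sqrt(w * h) / canonical)).
    Clamping to [k_min, k_max] is an assumption added here so that very
    small or very large RoIs still land on an existing level (P2..P5).
    """
    k = math.floor(k0 + math.log2(math.sqrt(w * h) / canonical))
    return max(k_min, min(k_max, k))


for size in (32, 112, 224, 448, 900):
    print(f"{size}x{size} RoI -> P{roi_to_level(size, size)}")
```

Each halving of the RoI side length moves the RoI one level finer, mirroring the factor-of-2 resolution step between adjacent pyramid levels.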

Performance

COCO Benchmark Results

| Model | AP | AP@50 | AP@75 | AP (small) | AP (medium) | AP (large) |
|---|---|---|---|---|---|---|
| Faster R-CNN (C4) | 21.9 | 42.7 | 21.2 | 5.8 | 24.1 | 31.4 |
| Faster R-CNN (FPN) | 36.2 | 59.1 | 39.0 | 18.2 | 39.0 | 48.2 |

- Significant improvement for small objects (AP: 18.2 vs. 5.8).

- Overall AP increased by 14.3 points compared to Faster R-CNN without FPN.

Ablation Study: How Important Are FPN Components?

To understand which components of FPN contribute most to performance improvements, we analyze three key factors:

- Effect of FPN in RPN (Feature Pyramid-based RPN)

- Importance of Lateral Connections

- Effect of Feature Pyramid Representation (Multi-Level RoI Assignment)

1. Effect of FPN in RPN (Feature Pyramid-based RPN)

| Method | AR@1k |
|---|---|
| Standard RPN (C4) | 48.3 |
| FPN in RPN | 56.3 (+8.0) |

- Applying FPN to RPN improves region proposal recall (AR@1k) by 8.0 points.

- Since anchors are now assigned across multiple feature levels, recall improves significantly, especially for small objects.

- Conclusion: Multi-scale feature extraction at the proposal stage is essential for improving recall.

2. Importance of Lateral Connections

| Method | AP |
|---|---|
| FPN without lateral connections | 26.5 |
| FPN with lateral connections | 36.2 |

- Removing 1×1 lateral connections causes a 9.7-point drop in AP, showing that these connections are crucial.

- Why? Lateral connections preserve fine-grained spatial details from low-level feature maps, which is essential for small-object detection.

- Conclusion: Lateral connections play a key role in refining high-resolution features for better localization.

3. Effect of Feature Pyramid Representation (Multi-Level RoI Assignment)

| Method | AP |
|---|---|
| RoI assignment to a single level (P2) | 28.0 |
| Multi-Level RoI Assignment (FPN) | 36.2 |

- Assigning all RoIs to P2 alone (highest-resolution feature map) performs worse than multi-level assignment.

- Why? Pooling every RoI from P2 describes large objects with only the finest, most local features and ignores the coarser pyramid levels whose scale and semantic richness suit them better.

- Conclusion: A multi-scale RoI pooling strategy improves object detection at all scales.

Takeaways from the Ablation Study

1. FPN-RPN improves region proposal recall (+8.0 AR@1k), helping detect small objects early.

2. Lateral connections are essential (+9.7 AP), refining fine-grained spatial features.

3. Feature pyramids for RoI assignment significantly improve detection (+8.2 AP).

Conclusion

FPN has revolutionized multi-scale object detection by seamlessly integrating high-level semantic features with low-level spatial details. By introducing a top-down pathway with lateral connections, FPN enables robust detection across a wide range of object sizes while maintaining computational efficiency.

A key advantage of FPN is its ability to enhance feature representation across different scales without incurring excessive computational costs. As a result, FPN has established itself as a fundamental building block for modern object detection frameworks and continues to shape the evolution of deep learning-based detection architectures.

References

Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan, B., & Belongie, S. (2017). Feature pyramid networks for object detection. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, 2117–2125. https://doi.org/10.1109/CVPR.2017.106