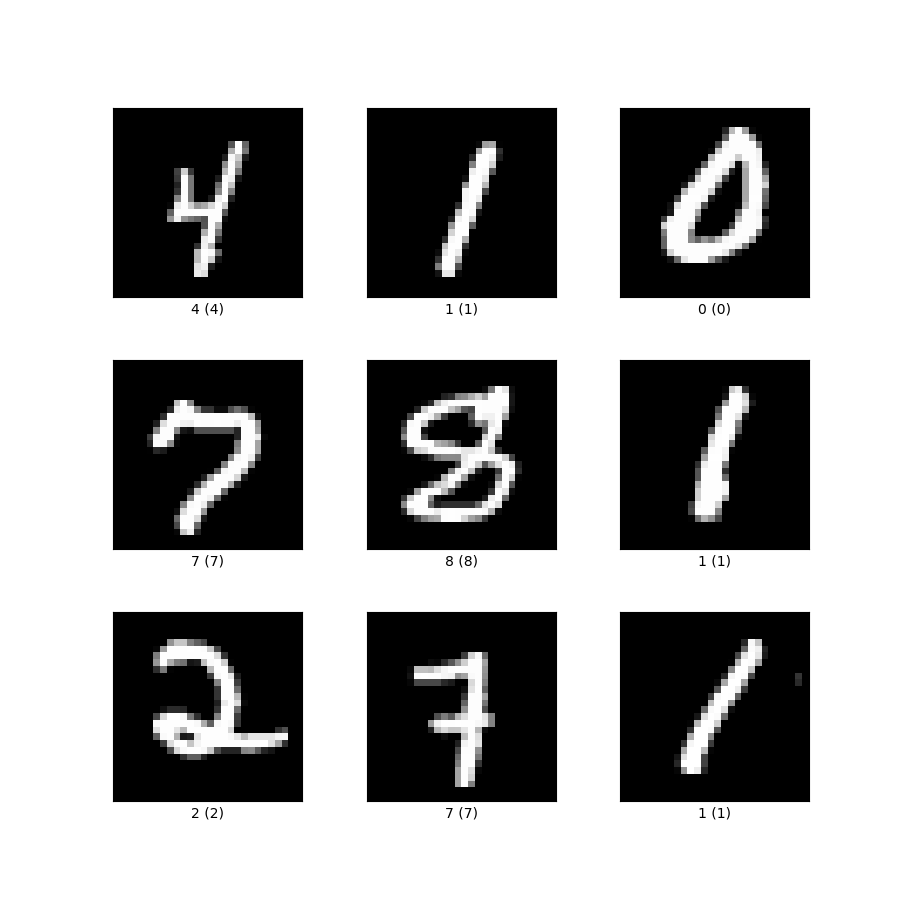

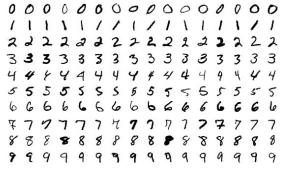

📄 MNIST dataset

- A dataset of handwritten digits from 0 to 9.

- Each image is 28 x 28 pixels and grayscale, with a single channel.

Library Import

```python
# Python standard library
import os
import time
import glob

# Open-source libraries
import tensorflow as tf
from tensorflow.keras import layers
import imageio  # used to turn the generated images into a GIF
import matplotlib.pyplot as plt
import numpy as np
import PIL

# Use IPython's display module to show all the images
from IPython import display
```

os

A module that gives Python access to features provided by the operating system, such as getting paths, creating folders, and listing the files in a folder.

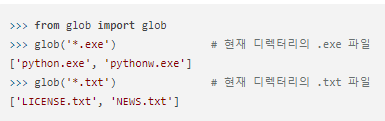

glob

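- Returns a list of all files and directories whose names match the pattern passed as the argument.

- Passing `*` as the pattern returns every (non-hidden) file and directory in the current directory.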
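The sketch below shows `os` and `glob` working together. It creates a throwaway directory containing a few empty files and matches them with a pattern. The file names are made up to resemble the PNGs this post saves later.

```python
import glob
import os
import tempfile

# Build a throwaway directory with a few (empty) files to match against.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["image_at_epoch_0002.png", "image_at_epoch_0001.png", "notes.txt"]:
        open(os.path.join(tmp, name), "w").close()

    # glob returns only names matching the pattern; the order is arbitrary,
    # so sort the result before relying on it.
    png_paths = sorted(glob.glob(os.path.join(tmp, "image*.png")))
    png_names = [os.path.basename(p) for p in png_paths]

    # "*" matches every (non-hidden) entry in the directory.
    n_entries = len(glob.glob(os.path.join(tmp, "*")))

print(png_names)  # ['image_at_epoch_0001.png', 'image_at_epoch_0002.png']
print(n_entries)  # 3
```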

Preparing the Dataset

```python
(train_images, train_labels), (_, _) = tf.keras.datasets.mnist.load_data()
```

`train_images` has shape (60000, 28, 28), i.e. 60,000 images at 28 x 28 resolution.

```python
train_images = train_images.reshape(train_images.shape[0], 28, 28, 1).astype('float32')
train_images = (train_images - 127.5) / 127.5  # Normalize the images to [-1, 1]
```

reshape

Changes the shape (dimensions) of a NumPy array without changing its data.

astype

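Converts the array's elements to another data type (here NumPy's `ndarray.astype`, applied to an array rather than a DataFrame).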

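- Reshape the image data into 28x28 grayscale images with an explicit channel axis, giving shape (60000, 28, 28, 1), and change the data type to float32 (floating point).

- Each pixel currently holds a value from 0 to 255, so (x - 127.5) / 127.5 rescales it to the range -1 to 1: 0 maps to -1, 127.5 to 0, and 255 to 1.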
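The reshape, cast, and rescale steps can be checked on a small stand-in array. The random uint8 data below is an assumption that replaces the real MNIST images.

```python
import numpy as np

# Stand-in for MNIST: three random 28x28 uint8 "images" with pixel values 0..255.
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(3, 28, 28)).astype(np.uint8)
raw[0, 0, 0], raw[0, 0, 1] = 0, 255  # make sure both extremes are present

# Add the channel axis and cast to float32, as in the code above.
x = raw.reshape(raw.shape[0], 28, 28, 1).astype("float32")

# (x - 127.5) / 127.5 maps 0 -> -1.0, 127.5 -> 0.0 and 255 -> 1.0.
x = (x - 127.5) / 127.5

print(x.shape, x.dtype, x.min(), x.max())  # (3, 28, 28, 1) float32 -1.0 1.0

# The inverse, x * 127.5 + 127.5, recovers the original pixel values.
assert np.allclose(x * 127.5 + 127.5, raw[..., np.newaxis])
```

The inverse mapping is the one `generate_and_save_images` applies before plotting.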

```python
BUFFER_SIZE = 60000
BATCH_SIZE = 256

# Shuffle the data and split it into batches
train_dataset = tf.data.Dataset.from_tensor_slices(train_images).shuffle(BUFFER_SIZE).batch(BATCH_SIZE)
```

tf.data.Dataset.from_tensor_slices

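- Creates a `tf.data.Dataset` whose elements are slices of the input tensor along its first dimension.

- For example, given the MNIST training data of shape (60000, 28, 28), it produces 60,000 slices, each a 28×28 image. After the reshape above, each slice here is 28×28×1.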

shuffle

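- Randomly shuffles the dataset.

- It fills a buffer with BUFFER_SIZE elements. It then samples elements from that buffer at random and replaces each sampled element with the next element from the input.

- A perfect shuffle requires a buffer size greater than or equal to the size of the full dataset. Here BUFFER_SIZE = 60000 matches the number of training images.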

batch

Groups consecutive elements of the dataset into batches of `BATCH_SIZE` elements.
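The following pure-Python sketch makes the buffer mechanics concrete: a buffer-based shuffle followed by batching. It mimics the behavior described above but is not TensorFlow's actual implementation. `buffered_shuffle` and `batch` are hypothetical helper names.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Hypothetical sketch of a buffer-based shuffle (not tf.data's code)."""
    rng = random.Random(seed)
    it = iter(items)
    buffer = []
    # Fill the buffer with the first `buffer_size` elements.
    for x in it:
        buffer.append(x)
        if len(buffer) == buffer_size:
            break
    # Emit a random buffered element, then refill its slot from the input.
    for x in it:
        i = rng.randrange(len(buffer))
        yield buffer[i]
        buffer[i] = x
    # Input exhausted: drain whatever is left in random order.
    rng.shuffle(buffer)
    yield from buffer

def batch(items, batch_size):
    """Group consecutive elements into lists of `batch_size` (the last may be smaller)."""
    current = []
    for x in items:
        current.append(x)
        if len(current) == batch_size:
            yield current
            current = []
    if current:
        yield current

data = list(range(10))
batches = list(batch(buffered_shuffle(data, buffer_size=10), batch_size=4))
print([len(b) for b in batches])  # [4, 4, 2]
```

With `buffer_size=1` the output order is unchanged, which is why a small buffer shuffles poorly. Applied to MNIST's 60,000 images with `BATCH_SIZE = 256`, batching yields 235 batches, and the last one holds only 96 images. `tf.data`'s `batch` likewise keeps that final partial batch unless `drop_remainder=True` is passed.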

Building the Models

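- Use the `Sequential` class (`tf.keras.Sequential`, also available as `tf.keras.models.Sequential`) to stack the network's layers in order.

- `Dense` (fully connected) layers are used because an image's content depends on where its pixels are. A fully connected layer connects every unit to every input value, so it can combine information from all pixel positions at once. The TensorFlow DCGAN tutorial uses convolutional layers instead, which exploit local pixel neighborhoods.

- `Flatten` is a layer that flattens each sample's multidimensional input into one dimension, e.g. (28, 28, 1) into (784,).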
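The NumPy sketch below shows what `Flatten` followed by one `Dense` layer computes. The random weights are illustrative only, since Keras initializes and then learns its own. The shapes mirror the discriminator's first layer.

```python
import numpy as np

rng = np.random.default_rng(0)
images = rng.standard_normal((2, 28, 28, 1)).astype("float32")  # two fake images

# Flatten: keep the batch axis, merge the rest -> shape (2, 784).
flat = images.reshape(images.shape[0], -1)

# Dense(512, activation='relu') computes relu(x @ W + b) with W: (784, 512), b: (512,).
# These random weights are purely illustrative.
W = (rng.standard_normal((784, 512)) * 0.01).astype("float32")
b = np.zeros(512, dtype="float32")
hidden = np.maximum(flat @ W + b, 0.0)

print(flat.shape, hidden.shape)  # (2, 784) (2, 512)
print(W.size + b.size)           # 401920 trainable parameters in this one layer
```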

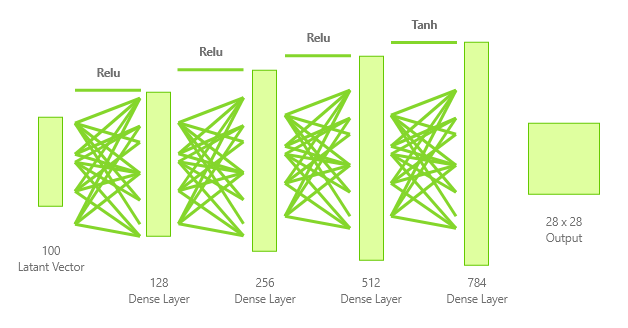

Generator

```python
def make_generator_model():
    model = tf.keras.Sequential()
    model.add(layers.Dense(128, activation='relu', input_shape=(100,)))  # 100-dim noise vector in
    model.add(layers.Dense(256, activation='relu'))
    model.add(layers.Dense(512, activation='relu'))
    model.add(layers.Dense(28*28*1, activation='tanh'))  # one value per pixel, in [-1, 1]
    model.add(layers.Reshape((28, 28, 1)))
    return model
```

- The last layer uses a tanh activation because the training images were scaled to [-1, 1], and tanh's output range (-1, 1) matches it. Generated and real images therefore share the same scale.

The structure of the generator model is shown below.

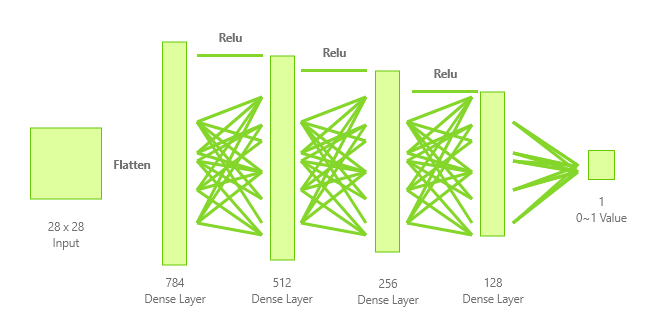
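This NumPy sketch of the generator's forward pass traces the shapes through the layers and counts the trainable parameters. It assumes Keras' default `Dense` initialization (Glorot-uniform weights, zero biases). The weights are untrained, so the output is noise, but the shapes and the tanh range match the model above.

```python
import numpy as np

def init_dense(rng, n_in, n_out):
    # Glorot-uniform weights and zero biases, like Keras' Dense defaults.
    limit = np.sqrt(6.0 / (n_in + n_out))
    return rng.uniform(-limit, limit, size=(n_in, n_out)), np.zeros(n_out)

rng = np.random.default_rng(42)
sizes = [100, 128, 256, 512, 28 * 28 * 1]  # noise -> hidden layers -> pixels
params = [init_dense(rng, a, b) for a, b in zip(sizes[:-1], sizes[1:])]

def generator_forward(z):
    h = z
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)   # Dense + ReLU
    W, b = params[-1]
    out = np.tanh(h @ W + b)             # Dense + tanh: every value lies in [-1, 1]
    return out.reshape(-1, 28, 28, 1)    # Reshape((28, 28, 1))

z = rng.standard_normal((16, 100))       # 16 latent vectors of size 100
fake = generator_forward(z)
n_params = sum(W.size + b.size for W, b in params)
print(fake.shape, n_params)              # (16, 28, 28, 1) 579728
```

Calling `generator.summary()` on the Keras model should report the same 579,728 trainable parameters, since the layer sizes are identical.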

Discriminator

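```python
def make_discriminator_model():
    model = tf.keras.Sequential()
    model.add(layers.Flatten())  # (28, 28, 1) -> (784,)
    model.add(layers.Dense(512, activation='relu'))
    model.add(layers.Dense(256, activation='relu'))
    model.add(layers.Dense(128, activation='relu'))
    model.add(layers.Dense(1))  # no activation: outputs a raw logit
    return model
```

- The last layer has no activation, so it outputs a raw logit (any real number). Training pushes this logit so that, after a sigmoid, it is close to 1 for an image the discriminator judges real and close to 0 for one it judges fake.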

The structure of the discriminator model is shown below.

```python
generator = make_generator_model()
discriminator = make_discriminator_model()
```

Defining the Training Procedure

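```python
# Define the loss function
cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)
```

- BinaryCrossentropy is used because the discriminator solves a binary classification problem: its targets are 1 (real) or 0 (fake).

- `from_logits=True` is required because the discriminator's last layer has no activation and outputs raw logits. With this flag, the loss applies a sigmoid internally (in a numerically stable way) to turn the logits into probabilities in (0, 1) before computing the cross-entropy.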
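The sketch below computes binary cross-entropy two ways in NumPy to show what `from_logits=True` does. The first applies the textbook formula to sigmoid probabilities. The second works directly on logits, using the numerically stable expression documented for `tf.nn.sigmoid_cross_entropy_with_logits`. Both average over the batch, as the Keras loss does by default. The logits are made-up values.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_naive(labels, probs):
    # Textbook binary cross-entropy on probabilities, averaged over the batch.
    return np.mean(-(labels * np.log(probs) + (1 - labels) * np.log(1 - probs)))

def bce_from_logits(labels, logits):
    # Same quantity computed directly from logits in a numerically stable form:
    # max(z, 0) - z * y + log(1 + exp(-|z|))
    z, y = logits, labels
    return np.mean(np.maximum(z, 0) - z * y + np.log1p(np.exp(-np.abs(z))))

logits = np.array([2.0, -1.0, 0.0])   # raw Dense(1) outputs (any real number)
labels = np.array([1.0, 0.0, 1.0])

print(sigmoid(logits))                 # probabilities in (0, 1); sigmoid(0) = 0.5
print(bce_naive(labels, sigmoid(logits)), bce_from_logits(labels, logits))  # equal

# A very confident wrong logit: sigmoid(-800) underflows to 0, so the naive
# version would hit log(0) = -inf, while the logit-based form stays finite.
big = np.array([-800.0])
print(bce_from_logits(np.array([1.0]), big))   # 800.0
```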

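```python
# Generator loss:
# trained so that the discriminator outputs 1 ("real") for fake images
def generator_loss(fake_output):
    return cross_entropy(tf.ones_like(fake_output), fake_output)
```

The generator loss takes `fake_output`, the discriminator's verdict on the fake images the generator produced. It measures how far that verdict is from 1 ("real"), so the more the discriminator is fooled, the lower the loss.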

tf.ones_like

Creates a tensor with the same shape and dtype as the given tensor, with every element set to 1.

```python
# Discriminator loss:
# trained to output 1 for real images and 0 for fake images
def discriminator_loss(real_output, fake_output):
    real_loss = cross_entropy(tf.ones_like(real_output), real_output)
    fake_loss = cross_entropy(tf.zeros_like(fake_output), fake_output)
    total_loss = real_loss + fake_loss
    return total_loss
```

- How far the discriminator's outputs for the generator's fake images are from 0

- How far its outputs for real images are from 1

The function computes these two losses separately and returns their sum as the discriminator loss.
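Written out, the two loss functions in the code correspond to the formulas below. Here $D(\cdot)$ is the discriminator's logit and $\sigma$ is the sigmoid applied by `from_logits=True`. $G$ is the generator, $x_1, \dots, x_m$ are the real images in a batch, and $z_1, \dots, z_n$ are the noise vectors:

$$
\mathcal{L}_D = -\frac{1}{m}\sum_{i=1}^{m}\log\sigma\big(D(x_i)\big) \;-\; \frac{1}{n}\sum_{j=1}^{n}\log\Big(1-\sigma\big(D(G(z_j))\big)\Big)
$$

$$
\mathcal{L}_G = -\frac{1}{n}\sum_{j=1}^{n}\log\sigma\big(D(G(z_j))\big)
$$

This generator loss is the commonly used "non-saturating" variant. Instead of minimizing $\log\big(1-\sigma(D(G(z)))\big)$, the generator minimizes $-\log\sigma\big(D(G(z))\big)$, which gives stronger gradients early in training, when the discriminator easily rejects its fakes.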

tf.zeros_like

Creates a tensor with the same shape and dtype as the given tensor, with every element set to 0.
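Both losses can be mirrored in NumPy for intuition, with `np.ones_like` and `np.zeros_like` playing the role of `tf.ones_like` and `tf.zeros_like` (same shape and dtype as the input). The logits below are hypothetical.

```python
import numpy as np

def bce_from_logits(labels, logits):
    # Stable binary cross-entropy on raw logits, averaged over the batch.
    return np.mean(np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits))))

def generator_loss(fake_output):
    # The generator wants the discriminator to call its fakes real (label 1).
    return bce_from_logits(np.ones_like(fake_output), fake_output)

def discriminator_loss(real_output, fake_output):
    # Real images should score 1, generated images should score 0.
    real_loss = bce_from_logits(np.ones_like(real_output), real_output)
    fake_loss = bce_from_logits(np.zeros_like(fake_output), fake_output)
    return real_loss + fake_loss

# Hypothetical discriminator logits for a batch of 3 real and 3 fake images.
real_output = np.array([[3.0], [2.5], [4.0]])     # confidently "real"
fake_output = np.array([[-3.0], [-2.0], [-4.0]])  # confidently "fake"

print(np.ones_like(fake_output).ravel())             # [1. 1. 1.]
print(discriminator_loss(real_output, fake_output))  # small: the discriminator is winning
print(generator_loss(fake_output))                   # large: the generator is losing
```

When the discriminator is completely undecided (all logits 0), the generator loss is log 2 ≈ 0.693 and the discriminator loss is 2 log 2 ≈ 1.386.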

```python
# Define the optimizers
generator_optimizer = tf.keras.optimizers.Adam(1e-4)
discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)
```

```python
EPOCHS = 300
noise_dim = 100
num_examples_to_generate = 16

# Reuse this seed over time, since it makes it easier
# to visualize progress in the animated GIF.
seed = tf.random.normal([num_examples_to_generate, noise_dim])
```

noise_dim

Sets the size of the latent vector fed to the generator to 100.

num_examples_to_generate

Sets how many generated images to inspect at a time during training (16 here, shown as a 4 x 4 grid).

seed

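- The generator takes random noise as its input and turns it into images. `seed` is one fixed batch of such noise, reused after every epoch. The saved snapshots therefore show how the output for the same inputs evolves during training.

- It holds `num_examples_to_generate` latent vectors, so the generator produces that many images for each snapshot.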
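The difference between the fixed `seed` and the fresh `noise` drawn inside `train_step` can be sketched with NumPy. The sketch uses NumPy's random generator in place of `tf.random.normal`. `batch_size`, `noise_step_1`, and `noise_step_2` are illustrative names.

```python
import numpy as np

noise_dim = 100
num_examples_to_generate = 16
rng = np.random.default_rng(0)

# Drawn once, then fed to the generator after every epoch (the role of `seed`).
seed = rng.standard_normal((num_examples_to_generate, noise_dim))

# Drawn anew on every training step (the role of `noise` in train_step).
batch_size = 256
noise_step_1 = rng.standard_normal((batch_size, noise_dim))
noise_step_2 = rng.standard_normal((batch_size, noise_dim))

print(seed.shape)                                   # (16, 100)
print(np.array_equal(noise_step_1, noise_step_2))   # False: new noise each step
```

Because the snapshots always use the same 16 rows of `seed`, any change between two saved images comes from the generator's weights, not from its input.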

```python
# Note the use of `tf.function`:
# this decorator "compiles" the function into a TensorFlow graph.
@tf.function
def train_step(images):
    noise = tf.random.normal([BATCH_SIZE, noise_dim])

    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        # Feed noise to the generator to produce fake images
        generated_images = generator(noise, training=True)

        # Feed real and fake images to the discriminator and get its logits
        real_output = discriminator(images, training=True)
        fake_output = discriminator(generated_images, training=True)

        # Generator loss: push the discriminator's output on fake images toward 1 ("real")
        gen_loss = generator_loss(fake_output)
        # Discriminator loss: real-image loss + fake-image loss
        disc_loss = discriminator_loss(real_output, fake_output)

    # tape.gradient(target, sources) computes the gradients (derivatives)
    gradients_of_generator = gen_tape.gradient(gen_loss, generator.trainable_variables)
    gradients_of_discriminator = disc_tape.gradient(disc_loss, discriminator.trainable_variables)

    # Update the weights
    generator_optimizer.apply_gradients(zip(gradients_of_generator, generator.trainable_variables))
    discriminator_optimizer.apply_gradients(zip(gradients_of_discriminator, discriminator.trainable_variables))
```

@tf.function decorator

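- Introduced in TensorFlow 2 as a simpler alternative to TensorFlow 1's workflow of explicitly building a graph and running it in a `Session`, which was cumbersome and error-prone.

- It traces the decorated Python function into a TensorFlow graph. The whole training step (forward pass, losses, gradients, and weight updates) then runs as a graph, which is usually faster than executing op by op in eager mode.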

tf.GradientTape()

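Records every operation executed inside its `with` block onto a "tape". It then uses automatic differentiation to compute the gradients of a recorded result with respect to the given variables.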
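The toy scalar tape below, written in plain Python, illustrates the record-then-replay idea behind `tf.GradientTape`. It is a hypothetical sketch of reverse-mode automatic differentiation, not how TensorFlow implements it.

```python
import math

class Var:
    """A scalar value that a Tape can differentiate with respect to."""
    def __init__(self, value):
        self.value = value

class Tape:
    """Toy reverse-mode tape: records each op, then replays it backwards."""

    def __init__(self):
        self.records = []  # (output, [(input, d_output/d_input), ...])

    def mul(self, a, b):
        out = Var(a.value * b.value)
        self.records.append((out, [(a, b.value), (b, a.value)]))
        return out

    def add(self, a, b):
        out = Var(a.value + b.value)
        self.records.append((out, [(a, 1.0), (b, 1.0)]))
        return out

    def tanh(self, a):
        t = math.tanh(a.value)
        out = Var(t)
        self.records.append((out, [(a, 1.0 - t * t)]))
        return out

    def gradient(self, target, sources):
        grads = {id(target): 1.0}
        # Walk the tape backwards, accumulating gradients with the chain rule.
        for out, inputs in reversed(self.records):
            g = grads.get(id(out), 0.0)
            for inp, local_grad in inputs:
                grads[id(inp)] = grads.get(id(inp), 0.0) + g * local_grad
        return [grads.get(id(s), 0.0) for s in sources]

tape = Tape()
w, x, b = Var(2.0), Var(3.0), Var(1.0)
y = tape.tanh(tape.add(tape.mul(w, x), b))  # y = tanh(w * x + b), recorded op by op
dy_dw, dy_db = tape.gradient(y, [w, b])
print(dy_dw, dy_db)  # equal to (1 - tanh(7)^2) * 3 and (1 - tanh(7)^2)
```

In TensorFlow the equivalent is to compute `y` inside `with tf.GradientTape() as tape:` and call `tape.gradient(y, [w, b])`. A non-persistent tape can compute gradients only once, which is why `train_step` opens two tapes, one per loss.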

```python
def train(dataset, epochs):
    for epoch in range(epochs):
        start = time.time()

        for image_batch in dataset:
            train_step(image_batch)

        # Produce images for the GIF as training progresses
        display.clear_output(wait=True)
        generate_and_save_images(generator, epoch + 1, seed)

        print('Time for epoch {} is {} sec'.format(epoch + 1, time.time() - start))

    # Generate images after the final epoch
    display.clear_output(wait=True)
    generate_and_save_images(generator, epochs, seed)
```

```python
def generate_and_save_images(model, epoch, test_input):
    # Note that `training` is set to False, so every layer runs in inference mode
    # (this matters for layers such as batch normalization; this model has none).
    predictions = model(test_input, training=False)

    fig = plt.figure(figsize=(4, 4))

    for i in range(predictions.shape[0]):
        plt.subplot(4, 4, i + 1)
        # Undo the [-1, 1] normalization to get back to pixel values 0..255
        plt.imshow(predictions[i, :, :, 0] * 127.5 + 127.5, cmap='gray')
        plt.axis('off')

    plt.savefig('image_at_epoch_{:04d}.png'.format(epoch))
    plt.show()
```

Training the Model

```python
%%time
train(train_dataset, EPOCHS)
```

After every epoch, the notebook shows the images the generator produced and how long the epoch took.

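```python
# Build the GIF
anim_file = 'gan.gif'

with imageio.get_writer(anim_file, mode='I') as writer:
    filenames = glob.glob('image*.png')
    filenames = sorted(filenames)
    last = -1
    for i, filename in enumerate(filenames):
        # Keep fewer frames as training goes on: a frame is written only when
        # round(2 * sqrt(i)) increases.
        frame = 2 * (i ** 0.5)
        if round(frame) > round(last):
            last = frame
        else:
            continue
        image = imageio.imread(filename)
        writer.append_data(image)
    # Append the last image once more so the final epoch's result is always included
    image = imageio.imread(filename)
    writer.append_data(image)

import IPython
if IPython.version_info > (6, 2, 0, ''):
    # An expression inside an `if` block is not auto-displayed, so call display() explicitly
    display.display(display.Image(filename=anim_file))
```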

The imageio library stitches the images saved during training into a single GIF file.
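The `last`/`frame` logic in the GIF loop keeps progressively fewer frames as training goes on. The helper below (a hypothetical name) replays that selection rule to show which saved images end up in the GIF.

```python
def selected_frames(num_files):
    """Indices of the saved images that the GIF loop above actually writes."""
    kept = []
    last = -1
    for i in range(num_files):
        frame = 2 * (i ** 0.5)
        if round(frame) > round(last):   # keep only when the rounded value advances
            last = frame
            kept.append(i)
    return kept

print(selected_frames(16))  # [0, 1, 2, 4, 6, 8, 11, 15]
kept = selected_frames(300)  # one saved image per epoch with EPOCHS = 300
print(len(kept), kept[-1])   # 35 298
```

With 300 epochs only 35 images pass the filter, and the final one (index 299, epoch 300) is not among them. That is why the code appends the last `filename` once more after the loop.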

References

https://www.tensorflow.org/tutorials/generative/dcgan?hl=ko

https://velog.io/@wo7864/GAN-%EC%BD%94%EB%93%9C%EB%A5%BC-%ED%86%B5%ED%95%9C-%EC%9D%B4%ED%95%B41