Binary Cross-Entropy Loss

Introduction

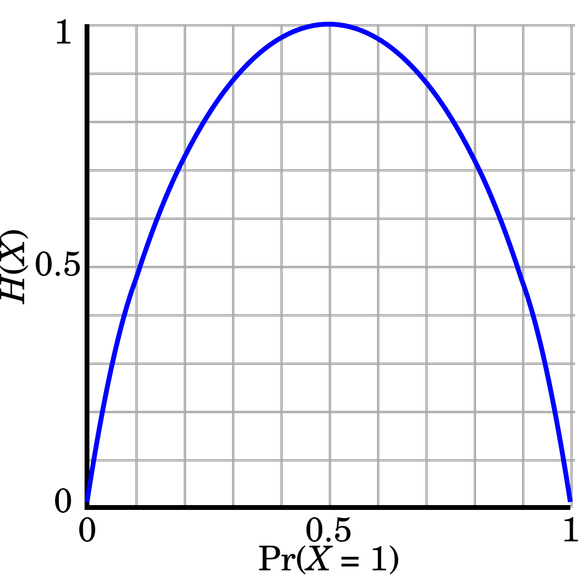

Binary cross-entropy loss is a specific instance of cross-entropy loss used primarily for binary classification tasks. This loss function measures the performance of a model whose output is a probability value between 0 and 1. The goal of binary cross-entropy loss is to quantify the "distance" between the true binary labels and the predicted probabilities, making it a critical tool for training binary classification models such as logistic regression.

Background and Theory

Mathematical Foundations

Binary cross-entropy, also known as logistic loss, arises from the principle of maximum likelihood estimation, where the model aims to maximize the probability of the observed data. Mathematically, it is expressed as follows for a dataset with $N$ instances:

$$
L = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(\hat{y}_i) + (1 - y_i) \log(1 - \hat{y}_i) \right]
$$

Where:

- $y_i$ represents the actual label of the $i$-th instance, which can be either 0 or 1,

- $\hat{y}_i$ is the predicted probability that the $i$-th instance belongs to the positive class (label 1),

- $N$ is the total number of instances.
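The formula translates directly into code. The following is a minimal pure-Python sketch; the function name `binary_cross_entropy` and the sample labels and probabilities are illustrative choices, not part of the original text, and the function assumes every prediction lies strictly between 0 and 1 because log(0) is undefined.

```python
import math

def binary_cross_entropy(y_true, y_pred):
    """Mean binary cross-entropy over N instances.

    y_true: labels, each 0 or 1
    y_pred: predicted probabilities of class 1, each strictly inside (0, 1)
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Only one of the two terms is non-zero, depending on the label
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

labels = [1, 0, 1, 0]
probs = [0.9, 0.2, 0.6, 0.4]
print(round(binary_cross_entropy(labels, probs), 4))  # 0.3375
```

Each term picks out the log-probability the model assigned to the label that actually occurred, so the loss shrinks as those probabilities approach 1.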

Derivation of the Loss Function

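The binary cross-entropy loss can be derived from the likelihood function of a Bernoulli random variable. For a single observation $y \in \{0, 1\}$, where the model predicts $\hat{y} = P(y = 1)$, the likelihood is:

$$
P(y \mid \hat{y}) = \hat{y}^{\,y} (1 - \hat{y})^{1 - y}
$$

Taking the logarithm of the likelihood, we obtain the log-likelihood:

$$
\log P(y \mid \hat{y}) = y \log(\hat{y}) + (1 - y) \log(1 - \hat{y})
$$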

Averaging the negative log-likelihood over all $N$ instances gives the binary cross-entropy loss. Assuming the instances are independent, minimizing this loss with respect to the model parameters is equivalent to maximizing the likelihood of the observed data.
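The equivalence can be checked numerically. This sketch, using hypothetical helper names and made-up labels and probabilities, computes the Bernoulli likelihood of a small dataset as a product, takes its negative average log, and compares the result with the cross-entropy sum.

```python
import math

def bernoulli_likelihood(y, p):
    # P(y | p) = p^y * (1 - p)^(1 - y) for a label y in {0, 1}
    return p ** y * (1 - p) ** (1 - y)

def mean_bce(y_true, y_pred):
    # Binary cross-entropy averaged over N instances
    return -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                for y, p in zip(y_true, y_pred)) / len(y_true)

labels = [1, 0, 1, 1, 0]
probs = [0.8, 0.3, 0.6, 0.95, 0.1]

# Likelihood of the whole dataset, assuming independent instances
likelihood = math.prod(bernoulli_likelihood(y, p) for y, p in zip(labels, probs))

# Negative average log-likelihood should match the binary cross-entropy
nll = -math.log(likelihood) / len(labels)
print(math.isclose(nll, mean_bce(labels, probs)))  # True
```

In practice the sum-of-logs form is preferred, because the raw product of many probabilities underflows to 0 for large $N$.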

Procedural Steps

Implementing binary cross-entropy loss typically involves the following steps:

- Model Output Calculation: Use a sigmoid function to ensure that the output predictions are probabilities ranging between 0 and 1.

- Loss Computation: Calculate the binary cross-entropy loss using the above formula for all training instances.

- Gradient Computation: Compute the derivatives of the loss with respect to each parameter of the model to guide the training process through backpropagation.

- Parameter Update: Use an optimization algorithm, typically stochastic gradient descent or its variants, to update the model parameters in a way that minimizes the loss.
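The four steps above can be sketched for a one-feature logistic regression in plain Python. This is a minimal illustration under stated assumptions: the toy data, learning rate, epoch count, and helper names (`sigmoid`, `bce`, `train_logistic_regression`) are hypothetical, and it uses full-batch gradient descent with a hand-derived gradient rather than stochastic updates or an automatic-differentiation framework.

```python
import math

def sigmoid(z):
    # Logistic function: maps any real z into (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def bce(y_true, y_pred):
    # Mean binary cross-entropy over all instances
    return -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                for y, p in zip(y_true, y_pred)) / len(y_true)

def train_logistic_regression(xs, ys, lr=0.5, epochs=200):
    # One-feature model: z = w * x + b, prediction = sigmoid(z)
    w, b = 0.0, 0.0
    history = []
    n = len(xs)
    for _ in range(epochs):
        # Step 1: model output, squashed into (0, 1) by the sigmoid
        preds = [sigmoid(w * x + b) for x in xs]
        # Step 2: loss over the full training set
        history.append(bce(ys, preds))
        # Step 3: gradients; for sigmoid + BCE, dLoss/dz = prediction - label
        grad_w = sum((p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(p - y for p, y in zip(preds, ys)) / n
        # Step 4: gradient-descent update (full batch, fixed learning rate)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history

# Toy data: not linearly separable, so the optimal weights stay finite
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]
w, b, history = train_logistic_regression(xs, ys)
print(f"initial loss {history[0]:.4f}, final loss {history[-1]:.4f}")
```

With `w` and `b` initialized to 0, every prediction is 0.5, so the first recorded loss is log 2 ≈ 0.6931; later epochs drive it lower as `w` grows positive to match the trend in the data.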

Applications

Binary cross-entropy loss is widely used in:

- Medical diagnosis systems where outcomes are binary (e.g., disease/no disease).

- Binary classification in finance, such as fraud detection, where transactions are classified as fraudulent or not.

Strengths and Limitations

Strengths:

- Directly aligns with the objective of modeling the probability of the positive class, providing a natural probabilistic interpretation of model outputs.

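- Penalizes confident wrong predictions heavily: the loss for an instance grows without bound as the predicted probability of its true class approaches 0, while confident correct predictions incur a loss close to 0.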
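To make the penalty concrete, this snippet evaluates the per-instance loss for a positive example (label 1) across several predicted probabilities. `bce_single` is a hypothetical helper name; the values follow directly from the binary cross-entropy formula.

```python
import math

def bce_single(y, p):
    # Per-instance binary cross-entropy for label y in {0, 1}, probability p in (0, 1)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

# True label is 1; sweep the prediction from confidently right to confidently wrong
for p in (0.99, 0.9, 0.5, 0.1, 0.01):
    print(f"p={p:<5} loss={bce_single(1, p):.4f}")
```

The loss rises from about 0.01 at p = 0.99 to about 4.61 at p = 0.01; because it is logarithmic, each tenfold drop in the probability of the true class adds the same amount (about 2.30) to the loss.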

Limitations:

- Prone to issues with imbalanced data: when one class greatly outnumbers the other, the averaged loss is dominated by the majority class, and the model can learn to underpredict the minority class.

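- The logarithm causes numerical stability issues for predictions close to 0 or 1: a prediction that is exactly 0 or 1 (or rounds to it in floating point) makes log(ŷ) or log(1 − ŷ) undefined. This is often handled by adding a small constant (epsilon) inside the log, or equivalently by clipping predictions to the range [epsilon, 1 − epsilon].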
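The sketch below illustrates the problem and two remedies. `bce_clipped` applies the epsilon clipping described above (the value `eps=1e-7` is an assumed default). `bce_from_logit` is a different technique, not described in the text: it computes the loss directly from the raw logit z using an algebraic rearrangement, so no probability is ever rounded to 0 or 1. Both helper names are hypothetical.

```python
import math

def sigmoid(z):
    # Naive logistic function; overflows for z below about -709
    return 1.0 / (1.0 + math.exp(-z))

def bce_clipped(y, p, eps=1e-7):
    # Clip p into [eps, 1 - eps] so neither log(p) nor log(1 - p) receives 0
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def bce_from_logit(y, z):
    # Algebraically equal to -(y*log(sigmoid(z)) + (1-y)*log(1 - sigmoid(z))),
    # rearranged so exp() only ever receives a non-positive argument
    return max(z, 0.0) - y * z + math.log1p(math.exp(-abs(z)))

z, y = 40.0, 0               # a confidently wrong logit for a negative example
p = sigmoid(z)               # rounds to exactly 1.0 in double precision
print(p == 1.0)              # True: math.log(1 - p) would raise ValueError here
print(bce_clipped(y, p))     # finite, but capped near -log(eps), about 16.12
print(bce_from_logit(y, z))  # 40.0, the mathematically correct loss
```

Clipping keeps the loss finite but silently caps it, so very wrong predictions all receive the same penalty; the logit form keeps the exact value at the cost of requiring access to the pre-sigmoid output.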

Advanced Topics

Connection to Logistic Regression

Binary cross-entropy is the loss function minimized when fitting logistic regression, a fundamental statistical method for binary classification. In this setting the sigmoid activation is the logistic function, $\sigma(z) = 1 / (1 + e^{-z})$, which maps any real-valued number into the (0, 1) interval, so its output can be interpreted as the probability of the positive class.
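The following standard derivation, added here for illustration and assuming a linear logit $z = \mathbf{w}^\top \mathbf{x} + b$ with $\hat{y} = \sigma(z)$, shows why the sigmoid and binary cross-entropy pair well: the gradient of the per-instance loss with respect to the logit reduces to the prediction error.

```latex
\begin{aligned}
\sigma(z) &= \frac{1}{1 + e^{-z}}, \qquad \sigma'(z) = \sigma(z)\bigl(1 - \sigma(z)\bigr) \\
\ell(z) &= -\bigl[\, y \log \sigma(z) + (1 - y) \log\bigl(1 - \sigma(z)\bigr) \bigr] \\
\frac{\partial \ell}{\partial z} &= -\left[ y \, \frac{\sigma'(z)}{\sigma(z)} - (1 - y) \, \frac{\sigma'(z)}{1 - \sigma(z)} \right] \\
&= -\bigl[\, y \bigl(1 - \sigma(z)\bigr) - (1 - y)\, \sigma(z) \bigr] \\
&= \sigma(z) - y \\
\nabla_{\mathbf{w}} \ell &= \bigl(\sigma(z) - y\bigr)\, \mathbf{x}, \qquad \frac{\partial \ell}{\partial b} = \sigma(z) - y
\end{aligned}
```

Because the $\sigma'(z)$ factors cancel, the gradient does not vanish when the sigmoid saturates on a wrong prediction, which is one reason this pairing trains well.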
