AdaMax Optimizer

Introduction

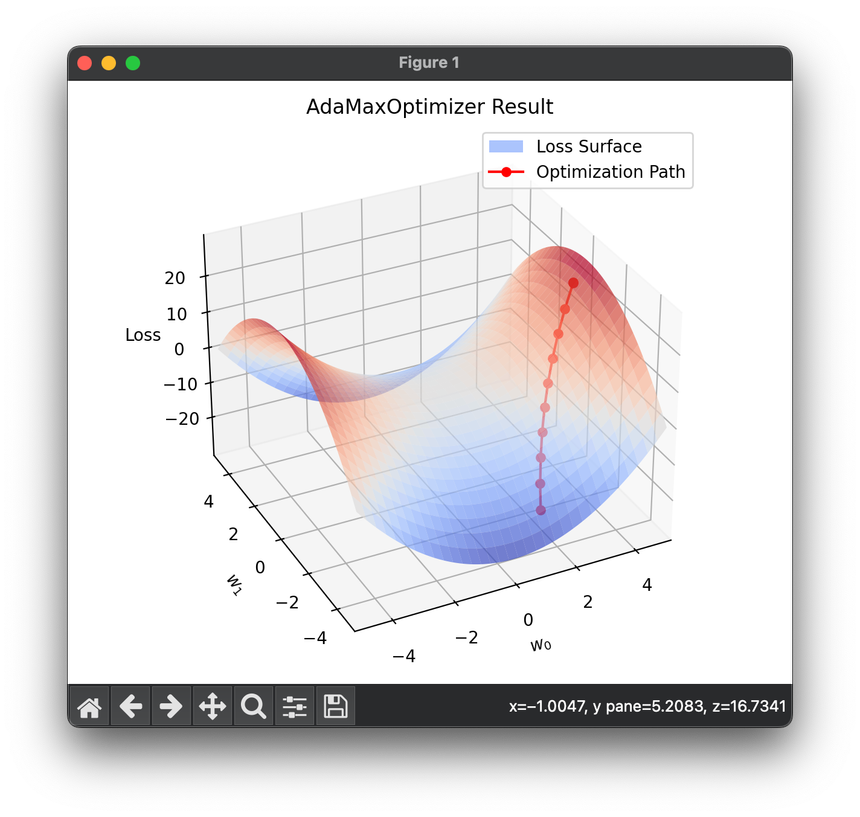

Adamax is a variant of the Adam optimization algorithm, which is itself an extension of stochastic gradient descent that incorporates momentum and adaptive learning rates. Introduced by Kingma and Ba in the same paper as Adam, Adamax generalizes Adam by replacing the $L^2$ norm with the infinity norm ($L^\infty$). It is designed as an alternative to the $L^2$-norm-based update scaling used in Adam, potentially offering more stable and consistent updates under certain conditions.

Background and Theory

While Adam scales the learning rate using the $L^2$ norm of past gradients (an exponential moving average of squared gradients), Adamax uses the $L^\infty$ norm (maximum norm), which may be less sensitive to outliers and large gradients. This can result in more robust and stable optimization in specific scenarios, especially where the gradient distributions do not conform to expected patterns. Adamax is considered to retain some of the theoretical properties of AdaGrad while potentially avoiding the vanishing learning rate problem associated with AdaGrad's ever-growing gradient accumulator.
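The infinity-norm rule can be motivated by a short derivation, sketched here along the lines of the argument in Kingma and Ba. The symbols are assumptions introduced for this sketch: $g_i$ is the gradient at step $i$, $\beta_2 \in [0, 1)$ is a decay factor, $v_t$ is an exponentially weighted $L^p$ accumulator with $v_0 = 0$, and $u_t$ is its limit as $p \to \infty$.

$$
\begin{aligned}
v_t &= \beta_2^p\, v_{t-1} + (1 - \beta_2^p)\,|g_t|^p = (1 - \beta_2^p) \sum_{i=1}^{t} \beta_2^{p(t-i)}\, |g_i|^p, \\
u_t &= \lim_{p \to \infty} (v_t)^{1/p} = \max\left(\beta_2^{t-1}|g_1|,\ \beta_2^{t-2}|g_2|,\ \ldots,\ \beta_2|g_{t-1}|,\ |g_t|\right) = \max(\beta_2\, u_{t-1},\ |g_t|).
\end{aligned}
$$

With $p = 2$ the accumulator has the form of Adam's second-moment estimate (with $\beta_2^2$ in place of $\beta_2$). In the limit, $u_t$ simply tracks the largest decayed gradient magnitude seen so far, and it shrinks by a factor of $\beta_2$ whenever no larger gradient arrives, so it does not grow without bound.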

Mathematical Formulation

The Adamax update rules can be defined through the following steps, which combine momentum with scaling based on the infinity norm:

- Gradient Calculation:

  $$g_t = \nabla_\theta f_t(\theta_{t-1})$$

  where $g_t$ is the gradient of the loss function $f_t$ with respect to the parameters $\theta$ at time step $t$.

- Update Biased First Moment Estimate:

  $$m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t$$

  This is the same as in Adam: $m_t$ is the exponential moving average of the gradients, and $\beta_1$ is the decay factor for the first moment.

- Update Exponentially Weighted Infinity Norm:

  $$u_t = \max(\beta_2 u_{t-1},\ |g_t|)$$

  Here, $u_t$ is updated based on the infinity norm, where $\beta_2$ is the decay factor for the scaled gradient norms, and $|g_t|$ is the element-wise absolute value of the gradients. Unlike Adam's second moment estimate, $u_t$ needs no bias correction.

- Correct Biased First Moment:

  $$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}$$

  This correction is necessary to account for the initialization bias toward zero.

- Parameter Update:

  $$\theta_t = \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{u_t}$$

  Here, $\alpha$ is the step size, and the update is scaled inversely by the maximum norm, represented by $u_t$.
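The update rules above can be sketched as a single NumPy function. This is a minimal illustration rather than a reference implementation: the function name `adamax_step` and its argument names are hypothetical, the default values follow the settings suggested in the Adam paper (step size 0.002, decay factors 0.9 and 0.999), and the small `eps` term in the denominator is an added assumption to guard against division by zero.

```python
import numpy as np

def adamax_step(theta, m, u, grad, t, alpha=0.002, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adamax update (hypothetical helper; t is the 1-based step count)."""
    # Biased first moment: exponential moving average of the gradients.
    m = beta1 * m + (1.0 - beta1) * grad
    # Exponentially weighted infinity norm: element-wise running maximum.
    u = np.maximum(beta2 * u, np.abs(grad))
    # Bias-correct the first moment only; the infinity norm needs no correction.
    m_hat = m / (1.0 - beta1 ** t)
    # Parameter update; eps is an assumption, added to avoid dividing by zero.
    theta = theta - alpha * m_hat / (u + eps)
    return theta, m, u

# Example: a single step from zero-initialized state.
theta = np.array([1.0, -2.0])
m = np.zeros_like(theta)
u = np.zeros_like(theta)
grad = np.array([0.5, -4.0])
theta, m, u = adamax_step(theta, m, u, grad, t=1)
```

On the first step the corrected moment equals the gradient and the infinity norm equals its absolute value, so each parameter moves by approximately the step size in the direction opposite to its gradient, regardless of the gradient's scale.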

Procedural Steps

- Initialization: Initialize the parameters $\theta_0$, and set the first moment vector $m_0$ and the scaled gradient norm $u_0$ to zero.

- Compute Gradient: Calculate the gradient at each time step.

- Update Moment Vectors: Update the first moment vector $m_t$ and the maximum norm $u_t$.

- Bias Correction: Apply bias correction to the first moment.

- Update Parameters: Adjust the parameters based on the corrected moment and the scaled learning rate.

- Iteration: Repeat steps 2-5 for each iteration until convergence or a fixed number of epochs is reached.
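The procedure above can be sketched as a complete loop. The example below is a toy setup under stated assumptions: `minimize_adamax` is a hypothetical helper, the objective is a simple quadratic chosen only because its minimum is known, the loop runs for a fixed number of steps instead of testing convergence, and `eps` is an added safeguard against division by zero.

```python
import numpy as np

def minimize_adamax(grad_fn, theta0, steps=5000, alpha=0.01,
                    beta1=0.9, beta2=0.999, eps=1e-8):
    """Run Adamax for a fixed number of steps (hypothetical helper)."""
    # Step 1: initialize parameters; moment and infinity norm start at zero.
    theta = np.asarray(theta0, dtype=float).copy()
    m = np.zeros_like(theta)
    u = np.zeros_like(theta)
    for t in range(1, steps + 1):
        g = grad_fn(theta)                         # Step 2: compute gradient
        m = beta1 * m + (1.0 - beta1) * g          # Step 3: first moment
        u = np.maximum(beta2 * u, np.abs(g))       # Step 3: infinity norm
        m_hat = m / (1.0 - beta1 ** t)             # Step 4: bias correction
        theta = theta - alpha * m_hat / (u + eps)  # Step 5: parameter update
    return theta

# Toy objective: f(theta) = ||theta - target||^2, with gradient 2 * (theta - target).
target = np.array([3.0, -1.0])
result = minimize_adamax(lambda th: 2.0 * (th - target), theta0=[0.0, 0.0])
print(result)
```

In practice the stopping rule would usually be tied to a validation metric or an epoch budget; the fixed step count here just keeps the sketch short.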

Applications

Adamax can be useful in deep learning applications where the gradients may have large or unpredictable spikes, as its normalization factor based on the $L^\infty$ norm might confer more stability than the $L^2$ norm used in standard Adam.
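To make the effect of a gradient spike concrete, the sketch below feeds the same scalar gradient sequence to both normalizers and records the size of each update relative to the step size. It is an illustration on synthetic data, not a benchmark: the helper `step_ratios`, the sequence of constant gradients with one spike of 100, and the `eps` term are all assumptions made for this example.

```python
import numpy as np

def step_ratios(grads, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return per-step |update| / alpha for Adam and Adamax (hypothetical helper)."""
    m = v = u = 0.0
    adam, adamax = [], []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1.0 - beta1) * g       # shared first moment
        v = beta2 * v + (1.0 - beta2) * g * g   # Adam: average of squared gradients
        u = max(beta2 * u, abs(g))              # Adamax: decayed running maximum
        m_hat = m / (1.0 - beta1 ** t)
        v_hat = v / (1.0 - beta2 ** t)
        adam.append(abs(m_hat) / (np.sqrt(v_hat) + eps))
        adamax.append(abs(m_hat) / (u + eps))
    return np.array(adam), np.array(adamax)

# Synthetic sequence: gradients of 1.0 with a single spike of 100.0.
grads = np.ones(400)
spike = 200
grads[spike] = 100.0
adam, adamax = step_ratios(grads)
print("at spike:  adam", adam[spike], " adamax", adamax[spike])
print("at end:    adam", adam[-1], " adamax", adamax[-1])
```

In this toy sequence both methods take steps of about one step size before the spike. At the spike, Adam's relative step rises above one while Adamax's drops well below it, because the running maximum absorbs the full spike immediately. The flip side is that the maximum decays only by a factor of $\beta_2$ per step, so Adamax's steps remain smaller than Adam's for a long time afterwards. Whether that trade-off helps depends on the problem.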

Strengths and Limitations

Strengths

- Robustness to Large Gradients: Less sensitive to anomalies in gradient values.

- Simplified Hyperparameter Tuning: Similar to Adam, it requires less tuning of the learning rate compared to basic SGD.

Limitations

- Performance Variability: May not consistently outperform Adam, and its benefit can depend heavily on the dataset or problem.

- Complexity: Slightly more complex than standard SGD due to additional moments and normalization calculations.

Advanced Topics

- Comparison with Other Optimizers: Understanding how Adamax differs from Adam, RMSProp, and other adaptive learning rate methods can provide deeper insights into its best use cases.

References

- Kingma, Diederik P., and Jimmy Ba. "Adam: A method for stochastic optimization." arXiv preprint arXiv:1412.6980 (2014).