AdaDelta Optimizer

Introduction

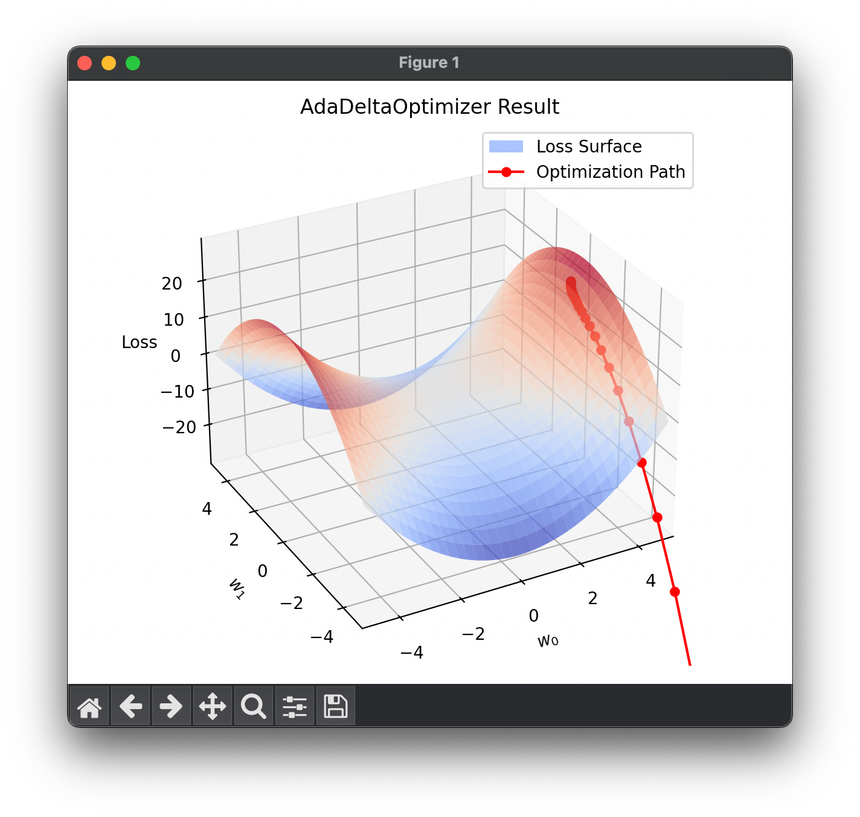

AdaDelta is an optimization algorithm designed to address the rapidly diminishing learning rates encountered in AdaGrad. Introduced by Zeiler in a 2012 paper, AdaDelta replaces AdaGrad's accumulation of all past squared gradients with an accumulation restricted to a window of recent gradients, implemented in practice as an exponentially decaying average. The result is an adaptive, per-parameter step size that avoids AdaGrad's main weakness, an aggressive and monotonically decreasing learning rate, without requiring a manually set global learning rate.

Background and Theory

AdaDelta extends AdaGrad's idea of adapting the learning rate for each parameter, but it tracks a decaying average of past squared gradients instead of their full sum. AdaGrad keeps accumulating squared gradients throughout training, which can drive the effective learning rate toward zero. AdaDelta approximates a sliding window over recent gradients with an exponential moving average, so the accumulated value stays bounded. This makes it robust to a wide range of initial configurations and reduces the need to choose a global learning rate.
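To make the contrast concrete, the following minimal sketch compares the two accumulators on a constant gradient stream. The function names, the decay value `rho=0.95`, and the constant gradient of magnitude 1 are illustrative assumptions, not part of the original description.

```python
import numpy as np

def adagrad_accumulator(grads):
    """Running sum of squared gradients (AdaGrad-style); it never decreases."""
    return np.cumsum(np.square(grads))

def ema_accumulator(grads, rho=0.95):
    """Exponentially decaying average of squared gradients (AdaDelta-style)."""
    avg = 0.0
    history = []
    for g in grads:
        avg = rho * avg + (1.0 - rho) * g * g
        history.append(avg)
    return np.array(history)

# Hypothetical gradient stream: 1000 steps with a constant gradient of 1.
grads = np.ones(1000)
adagrad_sum = adagrad_accumulator(grads)
ema_avg = ema_accumulator(grads)

print(adagrad_sum[-1])  # keeps growing with the number of steps
print(ema_avg[-1])      # levels off near the squared gradient magnitude
```

Because AdaGrad divides by the square root of the running sum, its effective step keeps shrinking as the sum grows, while the decaying average settles at a stable value.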

Mathematical Formulation

AdaDelta is characterized by the following key equations, which describe its approach to updating parameters without the need for an explicit learning rate:

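- Gradient Calculation:

  $$g_t = \nabla_\theta J(\theta_t)$$

  where $g_t$ is the gradient of the loss function $J$ with respect to the parameters $\theta$ at time step $t$.

- Accumulate Exponential Moving Averages of Squared Gradients:

  $$E[g^2]_t = \rho\, E[g^2]_{t-1} + (1 - \rho)\, g_t^2$$

  Here, $E[g^2]_t$ is the decaying average of past squared gradients, and $\rho$ is the decay constant.

- Compute Update Amounts:

  $$\Delta\theta_t = -\frac{\sqrt{E[\Delta\theta^2]_{t-1} + \epsilon}}{\sqrt{E[g^2]_t + \epsilon}}\, g_t$$

  $\Delta\theta_t$ is the amount by which parameters are adjusted, where $E[\Delta\theta^2]_{t-1}$ is the exponentially decaying average of past squared parameter updates and $\epsilon$ is a small constant added for numerical stability.

- Accumulate Exponential Moving Averages of Squared Parameter Updates:

  $$E[\Delta\theta^2]_t = \rho\, E[\Delta\theta^2]_{t-1} + (1 - \rho)\, \Delta\theta_t^2$$

- Parameter Update:

  $$\theta_{t+1} = \theta_t + \Delta\theta_t$$

  This update uses the square roots of the decaying averages to scale the gradient and adjust the parameters.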
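The update rules in this section can be sketched as a single NumPy function. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `adadelta_step`, the argument names, and the default values `rho=0.95` and `eps=1e-6` are all choices made for this example.

```python
import numpy as np

def adadelta_step(theta, grad, avg_sq_grad, avg_sq_update, rho=0.95, eps=1e-6):
    """Apply one AdaDelta update and return the new parameters and state.

    rho and eps are assumed illustrative defaults; tune them for real use.
    """
    # Decaying average of squared gradients.
    avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * grad ** 2
    # Update scaled by the ratio of RMS(previous updates) to RMS(gradients).
    update = -np.sqrt(avg_sq_update + eps) / np.sqrt(avg_sq_grad + eps) * grad
    # Decaying average of squared parameter updates.
    avg_sq_update = rho * avg_sq_update + (1.0 - rho) * update ** 2
    # Apply the update.
    theta = theta + update
    return theta, avg_sq_grad, avg_sq_update

# Usage on a toy loss J(theta) = sum(theta**2), whose gradient is 2 * theta.
theta = np.array([1.0, -2.0])
grad = 2.0 * theta
avg_sq_grad = np.zeros_like(theta)
avg_sq_update = np.zeros_like(theta)
theta, avg_sq_grad, avg_sq_update = adadelta_step(
    theta, grad, avg_sq_grad, avg_sq_update
)
```

Both running averages are passed in and returned explicitly so the state that must persist between steps is visible. A real optimizer class would typically store them per parameter.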

Procedural Steps

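- Initialization: Initialize the parameters $\theta_0$, and set the decayed average of past squared gradients $E[g^2]_0$ and the decayed average of past squared updates $E[\Delta\theta^2]_0$ to zero.

- Gradient Computation: Calculate the gradient $g_t = \nabla_\theta J(\theta_t)$.

- Update Squared Gradient Moving Average: Compute $E[g^2]_t = \rho\, E[g^2]_{t-1} + (1 - \rho)\, g_t^2$.

- Calculate Parameter Update: Compute $\Delta\theta_t = -\frac{\sqrt{E[\Delta\theta^2]_{t-1} + \epsilon}}{\sqrt{E[g^2]_t + \epsilon}}\, g_t$ using the ratio of the square roots of the accumulated averages.

- Update Squared Update Moving Average: Update $E[\Delta\theta^2]_t = \rho\, E[\Delta\theta^2]_{t-1} + (1 - \rho)\, \Delta\theta_t^2$.

- Apply Update: Adjust the parameters, $\theta_{t+1} = \theta_t + \Delta\theta_t$.

- Repeat: Iterate from Gradient Computation through Apply Update until convergence.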
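The procedure above can be sketched as a short loop. The example below is a hedged illustration: `adadelta_minimize`, the toy quadratic loss, the starting point, and the values `rho=0.95` and `eps=1e-6` are assumptions made for this example, and a fixed step count stands in for a real convergence test.

```python
import numpy as np

def adadelta_minimize(grad_fn, theta0, num_steps=500, rho=0.95, eps=1e-6):
    """Run a fixed number of AdaDelta iterations and return the parameters."""
    # Initialization: copy the parameters, zero both running averages.
    theta = np.array(theta0, dtype=float)
    avg_sq_grad = np.zeros_like(theta)
    avg_sq_update = np.zeros_like(theta)
    for _ in range(num_steps):
        # Gradient computation.
        grad = grad_fn(theta)
        # Update the squared-gradient moving average.
        avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * grad ** 2
        # Calculate the parameter update from the ratio of RMS values.
        update = -np.sqrt(avg_sq_update + eps) / np.sqrt(avg_sq_grad + eps) * grad
        # Update the squared-update moving average.
        avg_sq_update = rho * avg_sq_update + (1.0 - rho) * update ** 2
        # Apply the update.
        theta = theta + update
    return theta

# Hypothetical toy problem: minimize J(theta) = sum(theta**2).
def loss(theta):
    return float(np.sum(theta ** 2))

def loss_grad(theta):
    return 2.0 * theta

theta_start = np.array([1.0, -2.0])
theta_end = adadelta_minimize(loss_grad, theta_start)
print(loss(theta_start), loss(theta_end))  # the loss should go down
```

Early steps are small because the squared-update average starts at zero, so the numerator is initially just the square root of `eps`. Progress speeds up as that average builds.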

Applications

AdaDelta is well suited to training deep neural networks, particularly when a suitable learning rate is difficult to choose or when gradients vary significantly in magnitude.

Strengths and Limitations

Strengths

- Elimination of Learning Rate: Does not require the manual tuning of a learning rate, which simplifies configuration and improves robustness.

- Robust to Vanishing Learning Rate: Overcomes the problem of diminishing learning rates encountered in AdaGrad.

Limitations

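- Complexity: Maintains two running averages per parameter (squared gradients and squared updates), so it needs more memory and a slightly more involved implementation than simpler methods like SGD or AdaGrad.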

- Parameter Sensitivity: The performance of AdaDelta can be sensitive to the choice of decay rate and the initialization of parameters.
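One way to see where this sensitivity comes from is to work out the very first step, when both running averages are still zero. The derivation below is a sketch based on the standard AdaDelta update rule, with $\rho$ the decay rate and $\epsilon$ the small stability constant. The numeric values $\rho = 0.95$ and $\epsilon = 10^{-6}$ are assumed for illustration only.

```latex
\begin{align*}
E[g^2]_1 &= (1 - \rho)\, g_1^2
  && \text{since } E[g^2]_0 = 0 \\
\Delta\theta_1 &= -\frac{\sqrt{\epsilon}}{\sqrt{(1 - \rho)\, g_1^2 + \epsilon}}\, g_1
  && \text{since } E[\Delta\theta^2]_0 = 0 \\
|\Delta\theta_1| &\approx \sqrt{\frac{\epsilon}{1 - \rho}}
  && \text{when } (1 - \rho)\, g_1^2 \gg \epsilon \\
|\Delta\theta_1| &\approx \sqrt{\frac{10^{-6}}{0.05}} \approx 4.5 \times 10^{-3}
  && \text{for } \rho = 0.95,\ \epsilon = 10^{-6}
\end{align*}
```

Under these assumptions the size of the first step is set almost entirely by $\epsilon$ and $\rho$ rather than by the gradient, so these two constants influence how quickly training gets going.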

Advanced Topics

- Comparison with RMSProp: Both AdaDelta and RMSProp use a moving average of squared gradients, but AdaDelta further refines the approach by also adapting the update magnitudes directly based on historical update magnitudes.
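The difference can be sketched in a few lines of NumPy. The RMSProp form used here, with a fixed learning rate `lr` and `eps` inside the square root, is one common formulation and is an assumption of this example. Libraries differ on where `eps` is placed. The function names and numeric values are hypothetical.

```python
import numpy as np

def rmsprop_update(grad, avg_sq_grad, lr=0.01, eps=1e-6):
    """RMSProp-style update: a fixed learning rate over RMS of gradients."""
    return -lr / np.sqrt(avg_sq_grad + eps) * grad

def adadelta_update(grad, avg_sq_grad, avg_sq_update, eps=1e-6):
    """AdaDelta-style update: RMS of past updates replaces the learning rate."""
    return -np.sqrt(avg_sq_update + eps) / np.sqrt(avg_sq_grad + eps) * grad

grad = np.array([0.5, -3.0])
avg_sq_grad = np.array([0.25, 9.0])
lr = 0.01
eps = 1e-6

# Pick an update history whose RMS equals lr; the two rules then coincide.
matching_avg_sq_update = np.full_like(grad, lr ** 2 - eps)
rms = rmsprop_update(grad, avg_sq_grad, lr=lr, eps=eps)
ada = adadelta_update(grad, avg_sq_grad, matching_avg_sq_update, eps=eps)
print(rms, ada)
```

In this sketch the two rules give the same step only when the RMS of past updates happens to equal the chosen learning rate. In AdaDelta that quantity changes during training and is tracked separately for each parameter.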

References

- Zeiler, Matthew D. "ADADELTA: an adaptive learning rate method." arXiv preprint arXiv:1212.5701 (2012).