Adam Optimization

Introduction

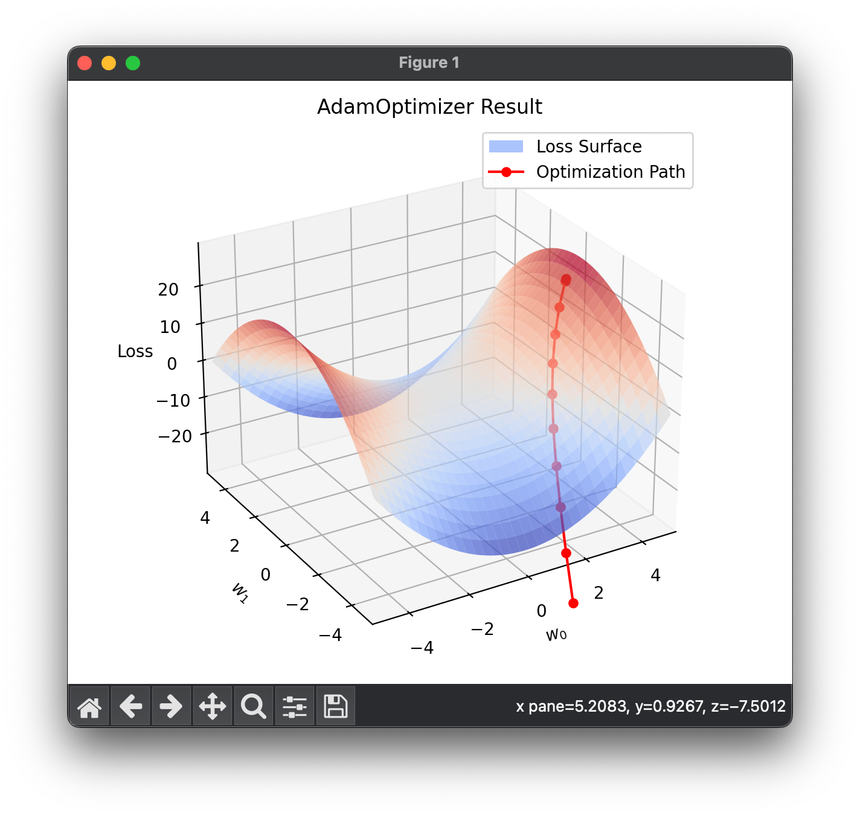

Adam (Adaptive Moment Estimation) is an optimization algorithm widely used for training deep neural networks. Introduced by Kingma and Ba in their 2014 paper, Adam combines the advantages of two other extensions of stochastic gradient descent: the Adaptive Gradient Algorithm (AdaGrad), which works well with sparse gradients, and RMSProp, which works well on noisy, non-stationary problems. In effect, it pairs per-parameter adaptive learning rates with momentum, which smooths the updates by taking past gradients into account.

Background and Theory

Adam is distinguished by its use of squared gradients to scale the learning rate and its incorporation of an exponentially decaying average of past gradients, similar to momentum. It calculates individual adaptive learning rates for different parameters from estimates of first and second moments of the gradients.

The algorithm's update rule is motivated by the desire to perform a more efficient step of gradient descent, adjusting the parameter updates based on a computationally efficient estimate of the first and second moments of the gradients. The inclusion of bias corrections helps Adam to make effective updates even in the initial time steps of the optimization process when the moment estimates may be highly inaccurate due to their initialization at zero.

Mathematical Formulation

Adam's parameters are updated according to the following equations:

- Gradient Calculation:

  $$g_t = \nabla_\theta f_t(\theta_{t-1})$$

  where $g_t$ is the gradient of the loss function $f_t$ with respect to the parameters $\theta$ at step $t$.

- Update Biased First Moment Estimate:

  $$m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t$$

  Here, $m_t$ represents the exponential moving average of the gradients $g_t$, and $\beta_1$ is the decay rate for this moving average.

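- Update Biased Second Raw Moment Estimate:

  $$v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2$$

  where $v_t$ is the exponential moving average of the squared gradients (the square $g_t^2$ is taken elementwise), and $\beta_2$ is the decay rate for the squared gradients.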

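- Compute Bias-corrected Moment Estimates:

  $$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t}$$

  These bias corrections compensate for the bias towards zero that results from initializing the first moment estimate $m_t$ and the second moment estimate $v_t$ at zero.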

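- Update Parameters:

  $$\theta_t = \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}$$

  where $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected first and second moment estimates, $\alpha$ is the step size (learning rate), and $\epsilon$ is a small number added to prevent division by zero.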
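The form of the bias correction can be motivated by unrolling the first moment recursion. The following is a sketch rather than a full proof. It writes $m_t$ for the first moment estimate, $g_i$ for the gradient at step $i$, and $\beta_1$ for the first moment decay rate, and it assumes $m_0 = 0$ and, as a simplification, that the gradients have a roughly constant expectation $\mathbb{E}[g]$:

$$m_t = (1 - \beta_1) \sum_{i=1}^{t} \beta_1^{\,t-i}\, g_i \quad\Longrightarrow\quad \mathbb{E}[m_t] \approx \mathbb{E}[g]\,(1 - \beta_1) \sum_{i=1}^{t} \beta_1^{\,t-i} = \mathbb{E}[g]\,\bigl(1 - \beta_1^{\,t}\bigr)$$

Dividing $m_t$ by $1 - \beta_1^{\,t}$ therefore removes the shrinkage factor under this assumption. The same argument applies to the second moment estimate, with $\beta_2$ in place of $\beta_1$ and squared gradients in place of gradients.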

Procedural Steps

- Initialization: Initialize parameters $\theta_0$, first moment vector $m_0 = 0$, second moment vector $v_0 = 0$, and timestep $t = 0$.

- Compute Gradient: Calculate the gradient of the loss function with respect to the parameters.

- Update Moments: Update the first moment and second moment estimates, including their bias-corrected versions $\hat{m}_t$ and $\hat{v}_t$.

- Parameter Update: Adjust the parameters based on the corrected first and second moment estimates.

- Iteration: Increment the timestep and repeat steps 2-4 until convergence or until a specified number of epochs is completed.
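The steps above can be sketched in a few lines of plain Python. This is a minimal illustration and not a reference implementation: the function name `adam_minimize`, the helper `quadratic_grad`, and the toy objective are hypothetical and chosen only for this example. The default decay rates and epsilon follow the values suggested in the original paper, while the step size of 0.1 is an assumption that suits this small problem.

```python
import math


def adam_minimize(grad_fn, theta0, alpha=0.001, beta1=0.9, beta2=0.999,
                  eps=1e-8, num_steps=1000):
    """Run a fixed number of Adam steps on a list of floats (illustrative sketch)."""
    theta = list(theta0)
    m = [0.0] * len(theta)  # first moment vector, initialized at zero
    v = [0.0] * len(theta)  # second moment vector, initialized at zero
    for t in range(1, num_steps + 1):  # timestep starts at 1 for the bias correction
        g = grad_fn(theta)  # gradient at the current parameters
        for i, g_i in enumerate(g):
            # Biased moment estimates (exponential moving averages).
            m[i] = beta1 * m[i] + (1.0 - beta1) * g_i
            v[i] = beta2 * v[i] + (1.0 - beta2) * g_i * g_i
            # Bias-corrected estimates.
            m_hat = m[i] / (1.0 - beta1 ** t)
            v_hat = v[i] / (1.0 - beta2 ** t)
            # Parameter update.
            theta[i] -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return theta


# Hypothetical toy objective: f(x, y) = (x - 3)^2 + (y + 1)^2, minimized at (3, -1).
def quadratic_grad(theta):
    x, y = theta
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]


result = adam_minimize(quadratic_grad, [0.0, 0.0], alpha=0.1, num_steps=2000)
print(result)  # should be close to the minimizer (3, -1)
```

In practice the inner loop would be vectorized with an array library, and the stopping rule would usually monitor the loss rather than use a fixed step count.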

Applications

Adam's effectiveness and straightforward implementation have made it a popular choice in a wide range of machine learning tasks, including but not limited to training deep convolutional neural networks, recurrent neural networks, and large-scale unsupervised learning models.

Strengths and Limitations

Strengths

- Efficiency: Performs well with large datasets and high-dimensional spaces.

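- Robustness: The default hyperparameters suggested in the original paper (a step size of 0.001 and decay rates of 0.9 and 0.999) often work well with little tuning, and the method is typically less sensitive to the choice of learning rate than plain stochastic gradient descent.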

Limitations

- Memory Usage: Requires extra memory for the first and second moment vectors, each of which is the same size as the parameter vector.

- Bias Towards Initial Steps: Early in training, the raw estimates $m_t$ and $v_t$ are biased towards zero because both are initialized at zero, which is why the update relies on the bias-corrected estimates.
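The size of the initialization bias is easy to see numerically. The sketch below assumes a constant gradient of 1.0, which is purely an illustrative choice, and uses the decay rates 0.9 and 0.999 from the original paper. It tracks the raw and bias-corrected moment estimates over the first three steps.

```python
beta1, beta2 = 0.9, 0.999  # decay rates suggested in the original paper
g = 1.0                    # assumed constant gradient, for illustration only
m, v = 0.0, 0.0            # both moment estimates start at zero
rows = []
for t in range(1, 4):
    # Raw (biased) exponential moving averages.
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    # Bias-corrected versions.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    rows.append((t, m, v, m_hat, v_hat))
    print(f"t={t}  m={m:.4f}  v={v:.6f}  m_hat={m_hat:.4f}  v_hat={v_hat:.4f}")
```

At the first step the raw first moment is about 0.1 and the raw second moment about 0.001, even though the true values are both 1. The corrected estimates recover 1 up to floating-point rounding.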

Advanced Topics

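- AdamW: A variant that decouples weight decay from the gradient-based update by applying the decay directly to the parameters instead of adding an L2 penalty to the gradient, often leading to better training stability.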

- AMSGrad: A modification that uses the running maximum of the second moment estimates, so that the effective per-parameter learning rates never increase, aiming to improve the theoretical convergence properties of Adam.
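The two variants differ from Adam in only a line or two each, as the scalar sketch below suggests. The function names `adamw_step` and `amsgrad_step` are hypothetical. Scaling the weight decay term by the learning rate is one common convention and is assumed here. The AMSGrad step omits bias correction and adds an epsilon term for numerical safety, and both of those choices are also assumptions of this sketch.

```python
import math


def adamw_step(theta, g, m, v, t, alpha=0.001, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW-style step on a scalar parameter (illustrative sketch)."""
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Decoupled weight decay: the decay acts on theta directly and never
    # passes through the moment estimates m and v.
    theta = theta - alpha * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v


def amsgrad_step(theta, g, m, v, v_max, alpha=0.001, beta1=0.9, beta2=0.999,
                 eps=1e-8):
    """One AMSGrad-style step on a scalar parameter (illustrative sketch)."""
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    # Keep the running maximum so the denominator never shrinks.
    v_max = max(v_max, v)
    theta = theta - alpha * m / (math.sqrt(v_max) + eps)
    return theta, m, v, v_max


# Usage: a large gradient followed by a small one leaves v_max unchanged.
theta, m, v, v_max = amsgrad_step(1.0, 10.0, 0.0, 0.0, 0.0)
theta, m, v, v_max = amsgrad_step(theta, 0.1, m, v, v_max)
print(v, v_max)
```

In the usage lines, the second moment estimate `v` decays after the small gradient while `v_max` stays at its earlier, larger value, which is what keeps the effective step size from growing.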

References

- Kingma, Diederik P., and Jimmy Ba. "Adam: A method for stochastic optimization." arXiv preprint arXiv:1412.6980 (2014).