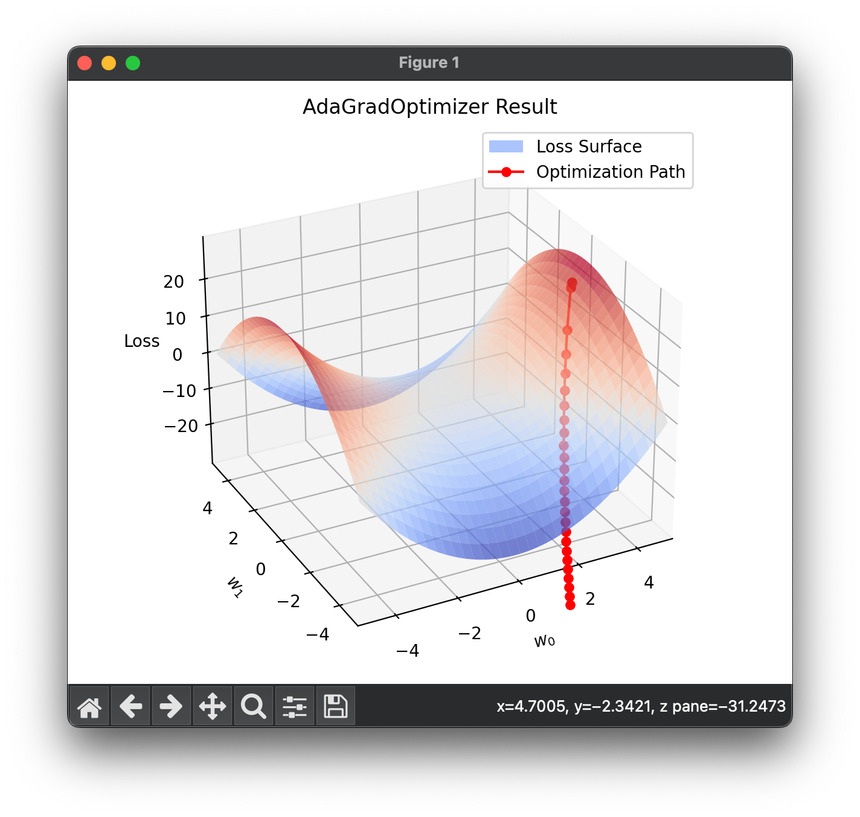

Adaptive Gradient (AdaGrad)

Introduction

AdaGrad (Adaptive Gradient Algorithm) is a gradient-based optimization algorithm that adapts the learning rate to each parameter. It performs smaller updates (i.e., lower learning rates) for parameters associated with frequently occurring features and larger updates (higher learning rates) for parameters associated with infrequent features. This adaptive learning-rate method, introduced by Duchi et al. in 2011, is particularly useful for sparse data, which is common in large-scale machine learning problems such as natural language processing and image recognition.

Background and Theory

AdaGrad's key feature is that it sets the learning rate of each parameter individually, scaling it inversely with the square root of the sum of all past squared gradients for that parameter. Parameters that have received large or frequent gradients therefore take smaller steps, while parameters with small or rare gradients keep larger steps. This can improve convergence over standard SGD, which uses one learning rate for all parameters, particularly on problems with sparse gradients and input features on very different scales.
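To make the inverse-square-root scaling concrete, the sketch below tracks the per-parameter step size $\eta / (\sqrt{G} + \epsilon)$ for a hypothetical gradient stream with two parameters: one tied to a frequent feature that receives a gradient of 1.0 at every step, and one tied to a rare feature that receives a gradient of 1.0 only every tenth step. The helper name `effective_learning_rates`, the values $\eta = 0.1$ and $\epsilon = 10^{-8}$, and the gradient pattern are illustrative assumptions, not part of the original description.

```python
import numpy as np

def effective_learning_rates(grads, eta=0.1, eps=1e-8):
    """Per-parameter step sizes eta / (sqrt(G_t) + eps) after each step t.

    grads: array of shape (T, d), one gradient vector per time step.
    Returns an array of shape (T, d).
    """
    G = np.zeros(grads.shape[1])             # running sum of squared gradients per parameter
    rates = []
    for g in grads:
        G += g * g                           # accumulate squared gradients
        rates.append(eta / (np.sqrt(G) + eps))
    return np.array(rates)

# Hypothetical gradient stream over T = 100 steps:
# column 0 = frequent feature (gradient 1.0 every step),
# column 1 = rare feature (gradient 1.0 every 10th step, else 0).
T = 100
grads = np.zeros((T, 2))
grads[:, 0] = 1.0
grads[::10, 1] = 1.0

rates = effective_learning_rates(grads)
print(rates[-1])  # approx. [0.0100, 0.0316]: the rare feature keeps a larger step size
```

After 100 steps the frequent parameter has accumulated $G = 100$ and the rare one only $G = 10$, so the rare parameter's step size is about $\sqrt{10} \approx 3.2$ times larger.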

Mathematical Formulation

The AdaGrad update accumulates the squared gradients of each parameter and uses this running sum to scale that parameter's learning rate at every iteration. Mathematically:

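- Gradient Calculation:

$$g_t = \nabla_\theta J(\theta_t)$$

Where $g_t$ is the gradient of the loss function $J$ with respect to the parameters $\theta$ at time step $t$.

- Accumulate Squared Gradients:

$$G_t = G_{t-1} + \operatorname{diag}(g_t \odot g_t), \qquad G_0 = 0$$

Here, $G_t$ is a diagonal matrix whose diagonal element $G_{t,ii} = \sum_{\tau=1}^{t} g_{\tau,i}^2$ is the sum of the squares of the gradients w.r.t. parameter $\theta_i$ up to time step $t$, and $\odot$ denotes the element-wise product.

- Parameter Update:

$$\theta_{t+1,i} = \theta_{t,i} - \frac{\eta}{\sqrt{G_{t,ii}} + \epsilon}\, g_{t,i}$$

Where $\eta$ is the global (base) learning rate and $\epsilon$ is a small smoothing term to prevent division by zero.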
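These three equations translate almost line for line into code. The sketch below is a minimal NumPy version built around a hypothetical helper `adagrad_step`; it stores only the diagonal of $G_t$ as a 1-D array, since the off-diagonal entries are always zero, and places $\epsilon$ outside the square root (some formulations place it inside instead).

```python
import numpy as np

def adagrad_step(theta, G, grad, eta=0.01, eps=1e-8):
    """Apply one AdaGrad update (hypothetical helper, not a library API).

    theta: current parameters theta_t
    G:     diagonal of the accumulator G_{t-1}, stored as a 1-D array
    grad:  gradient g_t of the loss at theta_t
    Returns the new parameters theta_{t+1} and the diagonal of G_t.
    """
    G = G + grad * grad                               # G_t = G_{t-1} + diag(g_t * g_t)
    theta = theta - eta / (np.sqrt(G) + eps) * grad   # per-coordinate scaled step
    return theta, G

# Example: first step from G_0 = 0 with eta = 0.1
theta1, G1 = adagrad_step(np.array([1.0, -2.0]), np.zeros(2),
                          np.array([0.5, -4.0]), eta=0.1)
# theta1 is approx. [0.9, -1.9]; G1 is [0.25, 16.0]
```

On the first step each coordinate moves by almost exactly $\eta$ against its gradient, whatever the gradient's magnitude, because $\sqrt{G_{1,ii}} = |g_{1,i}|$.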

Procedural Steps

- Initialization: Start with initial parameters $\theta_0$ and initialize the gradient accumulation matrix $G_0$ to zero.

- Compute Gradient: At each step $t$, compute the gradient $g_t$ of the loss function with respect to $\theta_t$.

- Accumulate Squared Gradients: Update the accumulation matrix $G_t$ by adding the element-wise square of $g_t$ to its diagonal.

- Update Parameters: Update $\theta_t$ using the per-parameter learning rate $\eta / (\sqrt{G_{t,ii}} + \epsilon)$, which depends on the historical squared gradients.

- Repeat: Iterate steps 2-4 until convergence or for a specified number of iterations.
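Putting the steps together, here is a minimal NumPy sketch of the full loop, assuming a fixed step budget instead of a convergence test. The function name `adagrad`, the hyperparameters ($\eta = 0.5$, 100 steps) and the test problem are illustrative choices: a hypothetical quadratic $J(\theta) = \tfrac12(\theta_0^2 + 100\,\theta_1^2)$ whose two coordinates differ in curvature by a factor of 100.

```python
import numpy as np

def adagrad(grad_fn, theta0, eta=0.5, eps=1e-8, num_steps=100):
    """Minimize a function with AdaGrad, given its gradient function grad_fn."""
    theta = np.asarray(theta0, dtype=float).copy()  # step 1: initial parameters theta_0
    G = np.zeros_like(theta)                        # step 1: accumulator G_0 = 0 (diagonal only)
    for _ in range(num_steps):                      # step 5: repeat for a fixed budget
        g = grad_fn(theta)                          # step 2: g_t = grad of J at theta_t
        G += g * g                                  # step 3: add the squared gradient
        theta -= eta / (np.sqrt(G) + eps) * g       # step 4: per-parameter scaled update
    return theta

# Hypothetical ill-conditioned quadratic: gradient of 0.5 * sum(a * theta**2) is a * theta
a = np.array([1.0, 100.0])
theta_final = adagrad(lambda th: a * th, theta0=[1.0, 1.0])
print(theta_final)  # both coordinates end up very close to 0
```

Because each coordinate's gradient is divided by the root of its own accumulated squared gradients, a constant rescaling of that coordinate's gradient cancels out of the step, so both coordinates shrink at essentially the same rate even though one is 100 times steeper. Plain SGD with a single learning rate would need $\eta < 0.02$ to stay stable along $\theta_1$, which makes progress along $\theta_0$ slow.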

Applications

AdaGrad is particularly suited for applications where data is sparse and features have very different frequencies of occurrence, such as:

- Natural language processing (NLP), especially in tasks involving large vocabularies like language modeling and machine translation.

- Recommendation systems, where user-item interactions are typically sparse.
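The fit with sparse data also shows up in implementation: a coordinate whose gradient is zero leaves both its accumulator and its parameter unchanged, so an implementation can touch only the active coordinates. The sketch below assumes gradients arrive as an array of unique `indices` plus matching `values`; the helper `sparse_adagrad_step` and the random test data are hypothetical, and the loop checks the result against an ordinary dense update.

```python
import numpy as np

def sparse_adagrad_step(theta, G, indices, values, eta=0.1, eps=1e-8):
    """In-place AdaGrad update for a gradient that is nonzero only at `indices`.

    Assumes `indices` has no duplicates: with NumPy fancy indexing, a repeated
    index in `G[indices] += ...` would be applied only once.
    """
    G[indices] += values * values                                 # accumulate active coordinates only
    theta[indices] -= eta / (np.sqrt(G[indices]) + eps) * values  # update active coordinates only

# Compare against a dense update on a hypothetical 1000-dimensional model
d = 1000
rng = np.random.default_rng(0)
theta_dense = rng.normal(size=d)
theta_sparse = theta_dense.copy()
G_dense = np.zeros(d)
G_sparse = np.zeros(d)

for _ in range(5):
    idx = rng.choice(d, size=3, replace=False)  # 3 active features per step
    vals = rng.normal(size=3)
    g = np.zeros(d)                             # dense gradient: zero except at idx
    g[idx] = vals
    G_dense += g * g
    theta_dense -= 0.1 / (np.sqrt(G_dense) + 1e-8) * g
    sparse_adagrad_step(theta_sparse, G_sparse, idx, vals)

print(np.allclose(theta_dense, theta_sparse))  # True
```

The sparse version costs time proportional to the number of active features per step rather than to the full parameter count.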

Strengths and Limitations

Strengths

- Robustness in Sparse Settings: AdaGrad performs well on sparse data because parameters that receive gradients only rarely keep comparatively large learning rates.

- Reduces the Need for Manual Learning-Rate Tuning: Because each parameter's step size adapts to its own gradient history, a single global learning rate $\eta$ often works across all parameters, although $\eta$ itself still has to be chosen.

Limitations

- Diminishing Learning Rates: Because squared gradients keep accumulating, $G_{t,ii}$ never decreases, so each parameter's effective learning rate shrinks monotonically. In long training runs it can become so small that training stalls.

- Memory Overhead: AdaGrad stores one accumulated squared-gradient value for every parameter, adding optimizer state as large as the model's parameters (plain SGD needs none), which can be significant for very large models.
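The learning-rate decay behind the first limitation can be worked out in closed form. As an illustrative assumption, suppose parameter $\theta_i$ receives the same nonzero gradient $c$ at every step, and ignore $\epsilon$:

$$G_{t,ii} = \sum_{\tau=1}^{t} c^2 = t\,c^2
\quad\Longrightarrow\quad
|\theta_{t+1,i} - \theta_{t,i}| = \frac{\eta}{\sqrt{t\,c^2}}\,|c| = \frac{\eta}{\sqrt{t}}$$

After $t = 10{,}000$ steps each update therefore moves $\theta_i$ by only $\eta / 100$, independent of $c$. With varying gradients the decay is not exactly $1/\sqrt{t}$, but the accumulator still never decreases.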

Advanced Topics

- Variants and Extensions: RMSProp and AdaDelta address AdaGrad's shrinking learning rates by replacing the ever-growing sum of squared gradients with an exponentially decaying moving average, so the effective learning rates do not saturate toward zero.
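The difference between the two accumulators is easy to see numerically. The sketch below compares AdaGrad's running sum $G_t = G_{t-1} + g_t^2$ with RMSProp's moving average $v_t = \rho\, v_{t-1} + (1 - \rho)\, g_t^2$, assuming a constant gradient of 1.0 for 1,000 steps, a decay rate $\rho = 0.9$ and a learning rate $\eta = 0.01$ (all illustrative values); the helper `accumulators` is hypothetical and covers only the accumulator, not the rest of RMSProp or AdaDelta.

```python
import numpy as np

def accumulators(grads, rho=0.9):
    """Return AdaGrad's running sum and RMSProp's moving average of squared gradients."""
    G_adagrad = 0.0
    v_rmsprop = 0.0
    for g in grads:
        G_adagrad += g * g                                 # AdaGrad: sum grows without bound
        v_rmsprop = rho * v_rmsprop + (1 - rho) * g * g    # RMSProp: exponential moving average
    return G_adagrad, v_rmsprop

G, v = accumulators(np.ones(1000))  # constant gradient of 1.0 for 1000 steps
eta = 0.01
print(eta / np.sqrt(G))  # AdaGrad step size, approx. 0.000316
print(eta / np.sqrt(v))  # RMSProp step size, approx. 0.01
```

AdaGrad's step has shrunk by a factor of about $\sqrt{1000} \approx 32$, while RMSProp's stays near $\eta$ because old squared gradients are gradually forgotten.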

References

- Duchi, John, Elad Hazan, and Yoram Singer. "Adaptive Subgradient Methods for Online Learning and Stochastic Optimization." Journal of Machine Learning Research 12 (Jul 2011): 2121-2159.