Factor Analysis (FA)

Introduction

Factor Analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. It is commonly used for data reduction and to identify underlying relationships among variables. This method assumes that each observed variable can be expressed as a linear combination of underlying factors plus a unique component.

Background and Theory

The foundation of Factor Analysis lies in decomposing the variance of each variable into shared and unique components. The shared components are associated with the factors, which are hypothesized to cause the observed correlations among variables. The unique components capture the variance unique to each variable, including error variance.
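As a sketch of this decomposition, written in notation assumed here (loadings $\lambda_{jm}$ of variable $j$ on factor $m$, unique variance $\psi_j$, and $k$ factors) and under the usual assumption of uncorrelated, unit-variance factors, the variance of the $j$-th variable splits into a shared part (the communality $h_j^2$) and a unique part:

```latex
\operatorname{Var}(x_j)
  = \underbrace{\sum_{m=1}^{k} \lambda_{jm}^{2}}_{\text{communality } h_j^{2}}
  + \underbrace{\psi_j}_{\text{unique variance}}
```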

Mathematical Formulation

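Consider a dataset with $n$ observations and $p$ variables. The goal is to explain the variance-covariance structure of these variables through a smaller number $k$ of unobserved latent factors, where $k < p$. The relationship between observed variables and latent factors can be expressed as:

$$X = \mu + \Lambda F + \epsilon$$

where:

- $X$ is the $p$-dimensional vector of observed variables.

- $\mu$ is the mean vector of the observed variables (zero if the variables have been centered).

- $\Lambda$ is the $p \times k$ matrix of factor loadings, representing the relationship between each observed variable and the latent factors.

- $F$ is the $k$-dimensional vector of latent factors, assumed to have zero mean and identity covariance.

- $\Psi$ is the $p \times p$ diagonal matrix of unique variances, one for each observed variable.

- $\epsilon$ is the vector of error terms associated with each observed variable, assumed to have zero mean and covariance $\Psi$ and to be uncorrelated with $F$.

Under these assumptions, the covariance matrix of $X$, denoted as $\Sigma$, can be modeled as:

$$\Sigma = \Lambda \Lambda^T + \Psi$$

where $\Psi$ is a diagonal matrix with the unique variances (specific variances) of the variables on its diagonal.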
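The covariance structure can be checked numerically. The following is a minimal sketch, assuming zero-mean data, hypothetical sizes, and randomly drawn loadings and unique variances (none of these values come from the text): it simulates observations from the factor model and compares the sample covariance with the loadings matrix times its transpose plus the diagonal matrix of unique variances.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, k = 200_000, 5, 2                        # hypothetical sizes

Lambda = rng.uniform(-1.0, 1.0, size=(p, k))   # factor loadings (p x k)
psi = rng.uniform(0.2, 1.0, size=p)            # unique variances (diagonal of Psi)

F = rng.normal(size=(n, k))                    # factors: zero mean, identity covariance
eps = rng.normal(size=(n, p)) * np.sqrt(psi)   # errors: zero mean, covariance Psi
X = F @ Lambda.T + eps                         # each row is Lambda f_i + eps_i (mean zero)

Sigma_model = Lambda @ Lambda.T + np.diag(psi) # model-implied covariance
S = np.cov(X, rowvar=False)                    # sample covariance
max_abs_diff = float(np.max(np.abs(S - Sigma_model)))
print(f"max |S - Sigma| = {max_abs_diff:.4f}")
```

With this many samples the two matrices should agree up to sampling noise; the gap shrinks as the number of observations grows.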

Estimation of Parameters

The parameters of interest in Factor Analysis, namely the factor loadings ($\Lambda$) and unique variances ($\Psi$), are typically estimated by Maximum Likelihood Estimation (MLE), often computed with the Expectation-Maximization (EM) algorithm, or by Principal Axis Factoring (PAF).

Maximum Likelihood Estimation (MLE)

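MLE seeks the parameter values that maximize the likelihood of observing the data given the model. In the context of Factor Analysis, this involves optimizing:

$$(\hat{\Lambda}, \hat{\Psi}) = \arg\max_{\Lambda, \Psi} L(\Lambda, \Psi)$$

where $L(\Lambda, \Psi)$ is the likelihood function defined by the observed covariance matrix and the model parameters (the loadings $\Lambda$ and unique variances $\Psi$).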

Principal Axis Factoring (PAF)

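PAF starts with an initial estimate of the communalities (commonly the squared multiple correlation of each variable with all the others) and places these on the diagonal of the correlation matrix in place of the ones. It then extracts factors from the eigendecomposition of this reduced matrix, recomputes the communalities from the resulting loadings, and repeats until the communalities stabilize. Because the diagonal holds communalities rather than total variances, the extraction focuses on the shared variance and leaves the unique variances as the remainder.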
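The iteration can be sketched in a few lines of NumPy. This is an illustrative implementation under stated assumptions, not the routine of any particular library: the function name `principal_axis_factoring`, its defaults, and the example loadings `L0` are all hypothetical, and the starting communalities are the squared multiple correlations.

```python
import numpy as np

def principal_axis_factoring(R, k, max_iter=200, tol=1e-6):
    """Iterated principal axis factoring on a correlation matrix R (p x p)."""
    # Initial communalities: squared multiple correlations, 1 - 1 / diag(R^-1)
    h2 = 1.0 - 1.0 / np.diag(np.linalg.inv(R))
    for _ in range(max_iter):
        R_reduced = R.copy()
        np.fill_diagonal(R_reduced, h2)              # communalities replace the ones
        eigvals, eigvecs = np.linalg.eigh(R_reduced)
        top = np.argsort(eigvals)[::-1][:k]          # indices of the k largest eigenvalues
        # Clip guards against slightly negative eigenvalues of the reduced matrix
        loadings = eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))
        h2_new = np.sum(loadings**2, axis=1)         # updated communalities
        converged = np.max(np.abs(h2_new - h2)) < tol
        h2 = h2_new
        if converged:
            break
    return loadings, h2

# Hypothetical two-factor structure used to build an exact correlation matrix
L0 = np.array([[0.80, 0.00], [0.70, 0.00], [0.60, 0.00],
               [0.00, 0.75], [0.00, 0.65], [0.00, 0.55]])
R = L0 @ L0.T
np.fill_diagonal(R, 1.0)

loadings, h2 = principal_axis_factoring(R, k=2)
print(np.round(h2, 3))
```

The loadings are only determined up to sign and rotation, so the communalities and the reproduced off-diagonal correlations are the quantities to compare against the generating structure.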

Steps for MLE

Defining the Likelihood Function

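The starting point for MLE in Factor Analysis is the specification of the likelihood function. Given a dataset with $n$ observations and $p$ variables, the likelihood function for FA models the probability of observing the sample covariance matrix $S$ given the model parameters ($\Lambda$, $\Psi$), where $\Lambda$ is the factor loading matrix, and $\Psi$ is the diagonal matrix of unique variances.

The likelihood function is based on the multivariate normal distribution:

$$L(\Lambda, \Psi) = \prod_{i=1}^{n} \frac{1}{(2\pi)^{p/2} |\Sigma|^{1/2}} \exp\left(-\frac{1}{2}(x_i - \mu)^T \Sigma^{-1} (x_i - \mu)\right)$$

where $x_i$ is the $i$-th observation, $\Sigma = \Lambda \Lambda^T + \Psi$ is the model-implied covariance matrix, and $\mu$ is the mean vector of the variables.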

Log-Likelihood Function

Due to the complexity of the likelihood function, it's common practice to work with the log-likelihood function, which simplifies the mathematics and numerical optimization.

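The log-likelihood of the FA model is given by:

$$\log L(\Lambda, \Psi) = -\frac{n}{2}\left[p \log(2\pi) + \log|\Sigma| + \operatorname{tr}(\Sigma^{-1} S)\right]$$

where $\operatorname{tr}(\cdot)$ denotes the trace of a matrix (the sum of its diagonal elements), $\Sigma = \Lambda \Lambda^T + \Psi$ is the model-implied covariance matrix, and $S$ is the sample covariance matrix (computed with divisor $n$, with the mean vector replaced by the sample mean).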
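To make the trace form concrete, the sketch below evaluates the log-likelihood written as $-\frac{n}{2}[p\log(2\pi) + \log|\Sigma| + \operatorname{tr}(\Sigma^{-1}S)]$ and checks it against a direct sum of per-observation Gaussian log-densities. The function name `fa_log_likelihood`, the parameter values, and the random data are assumptions made for illustration only.

```python
import numpy as np

def fa_log_likelihood(Lambda, psi, S, n):
    """Log-likelihood in trace form; S is the sample covariance with divisor n."""
    p = S.shape[0]
    Sigma = Lambda @ Lambda.T + np.diag(psi)          # model-implied covariance
    _, logdet = np.linalg.slogdet(Sigma)              # stable log-determinant
    trace_term = np.trace(np.linalg.solve(Sigma, S))  # tr(Sigma^-1 S)
    return -0.5 * n * (p * np.log(2.0 * np.pi) + logdet + trace_term)

rng = np.random.default_rng(1)
n, p, k = 500, 4, 2                                   # hypothetical sizes
Lambda = rng.normal(size=(p, k))                      # arbitrary loadings
psi = rng.uniform(0.3, 1.0, size=p)                   # arbitrary unique variances
X = rng.normal(size=(n, p))                           # any data set works for the check

Xc = X - X.mean(axis=0)                               # mean replaced by the sample mean
S = Xc.T @ Xc / n
ll_trace = fa_log_likelihood(Lambda, psi, S, n)

# Direct evaluation: sum of multivariate normal log-densities
Sigma = Lambda @ Lambda.T + np.diag(psi)
_, logdet = np.linalg.slogdet(Sigma)
quad = np.einsum("ij,jk,ik->i", Xc, np.linalg.inv(Sigma), Xc)
ll_direct = float(np.sum(-0.5 * (p * np.log(2.0 * np.pi) + logdet + quad)))

print(np.isclose(ll_trace, ll_direct))
```

The two values agree because the sum of the quadratic forms equals $n \operatorname{tr}(\Sigma^{-1} S)$ once the mean is set to the sample mean.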

Optimization

The goal of MLE is to find the values of $\Lambda$ and $\Psi$ that maximize the log-likelihood function. This is typically achieved through numerical optimization techniques, as analytical solutions are not available for most FA models. Common algorithms include gradient ascent, the expectation-maximization (EM) algorithm, and quasi-Newton methods like the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm.
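As one possible illustration of the EM route, the sketch below applies the standard EM updates for the factor model to a sample covariance matrix. It is a sketch under assumptions, not the implementation documented on this page: the names `fit_fa_em` and `log_likelihood`, the initialization, the stopping rule, and the simulated data are all hypothetical.

```python
import numpy as np

def log_likelihood(Sigma, S, n):
    p = S.shape[0]
    _, logdet = np.linalg.slogdet(Sigma)
    return -0.5 * n * (p * np.log(2.0 * np.pi) + logdet
                       + np.trace(np.linalg.solve(Sigma, S)))

def fit_fa_em(S, k, n, max_iter=1000, tol=1e-5, seed=0):
    """EM for factor analysis on a sample covariance S (divisor n)."""
    p = S.shape[0]
    rng = np.random.default_rng(seed)
    Lambda = rng.normal(scale=0.1, size=(p, k))     # small random start (assumption)
    psi = np.diag(S).copy()                         # start from the total variances
    history = []
    for _ in range(max_iter):
        Sigma = Lambda @ Lambda.T + np.diag(psi)
        history.append(log_likelihood(Sigma, S, n))
        if len(history) > 1 and history[-1] - history[-2] < tol:
            break
        # E-step: beta = Lambda^T Sigma^-1 maps an observation to its expected factors
        beta = np.linalg.solve(Sigma, Lambda).T
        Eff = np.eye(k) - beta @ Lambda + beta @ S @ beta.T   # average E[f f^T | x]
        # M-step: closed-form updates for loadings and unique variances
        Lambda = S @ beta.T @ np.linalg.inv(Eff)
        psi = np.diag(S - Lambda @ beta @ S).copy()
    return Lambda, psi, np.array(history)

# Simulated data from a hypothetical two-factor model
rng = np.random.default_rng(0)
L_true = np.array([[0.9, 0.0], [0.8, 0.0], [0.7, 0.0],
                   [0.0, 0.9], [0.0, 0.8], [0.0, 0.7]])
psi_true = 1.0 - np.sum(L_true**2, axis=1)
n = 5000
X = rng.normal(size=(n, 2)) @ L_true.T + rng.normal(size=(n, 6)) * np.sqrt(psi_true)
Xc = X - X.mean(axis=0)
S = Xc.T @ Xc / n

Lambda_hat, psi_hat, history = fit_fa_em(S, k=2, n=n)
Sigma_hat = Lambda_hat @ Lambda_hat.T + np.diag(psi_hat)
print(len(history), float(np.max(np.abs(Sigma_hat - S))))
```

EM never decreases the log-likelihood, which makes the recorded `history` a convenient convergence diagnostic. Quasi-Newton methods usually need fewer iterations but require gradients of the log-likelihood.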

Factor Rotation

After finding the optimal factor loadings through MLE, it's common to perform a rotation (either orthogonal or oblique) to achieve a more interpretable factor solution. This step does not change the fit of the model to the data but makes the factor structure easier to interpret.
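A minimal sketch of an orthogonal rotation follows, using the widely used SVD-based iteration for varimax. The function names and the example loadings are assumptions made for illustration. The final lines check the claim above: the rotation matrix is orthogonal, so the product of the loading matrix with its transpose (and with it the model fit) is unchanged.

```python
import numpy as np

def varimax(L, max_iter=100, tol=1e-8):
    """Rotate a loading matrix L (p x k) toward simple structure."""
    p, k = L.shape
    R = np.eye(k)                                   # accumulated rotation
    objective = 0.0
    for _ in range(max_iter):
        Lr = L @ R
        # Gradient-like target of the varimax criterion
        target = L.T @ (Lr**3 - Lr * (np.sum(Lr**2, axis=0) / p))
        u, s, vt = np.linalg.svd(target)
        R = u @ vt                                  # closest orthogonal matrix
        new_objective = np.sum(s)
        if objective != 0.0 and new_objective < objective * (1.0 + tol):
            break
        objective = new_objective
    return L @ R, R

def varimax_criterion(L):
    """Sum over factors of the variance of the squared loadings."""
    return float(np.sum(np.var(L**2, axis=0)))

# Hypothetical simple-structure loadings, deliberately mixed by a 30-degree rotation
L_simple = np.array([[0.8, 0.0], [0.7, 0.0], [0.6, 0.0],
                     [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]])
theta = np.deg2rad(30.0)
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
L_mixed = L_simple @ Q

L_rot, R = varimax(L_mixed)
print(np.allclose(R.T @ R, np.eye(2)))                      # rotation is orthogonal
print(np.allclose(L_rot @ L_rot.T, L_mixed @ L_mixed.T))    # fit is unchanged
print(varimax_criterion(L_mixed), varimax_criterion(L_rot))
```

Oblique rotations relax the orthogonality constraint and allow the factors to correlate, which requires a different update than the one sketched here.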

Assessing Fit and Extracting Factors

Finally, assess the fit of the model to the data using various fit indices (e.g., RMSEA, CFI, TLI). If the model fits well, the estimated factor loadings and unique variances can be interpreted. The number of factors is usually determined based on the eigenvalues of the covariance matrix, scree tests, or parallel analysis, before applying MLE.
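The eigenvalue-based criteria for choosing the number of factors can be sketched as follows. The helper names, the 95% quantile, the number of simulations, and the simulated two-factor data are assumptions chosen for illustration; fit indices such as RMSEA are not covered by this sketch.

```python
import numpy as np

def correlation_eigenvalues(X):
    """Eigenvalues of the sample correlation matrix, largest first."""
    R = np.corrcoef(X, rowvar=False)
    return np.sort(np.linalg.eigvalsh(R))[::-1]

def kaiser_criterion(X):
    """Number of correlation-matrix eigenvalues greater than one."""
    return int(np.sum(correlation_eigenvalues(X) > 1.0))

def parallel_analysis(X, n_sims=100, quantile=0.95, seed=0):
    """Keep leading eigenvalues that exceed those of uncorrelated random data."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    observed = correlation_eigenvalues(X)
    simulated = np.array([correlation_eigenvalues(rng.normal(size=(n, p)))
                          for _ in range(n_sims)])
    threshold = np.quantile(simulated, quantile, axis=0)
    exceeds = observed > threshold
    # Count eigenvalues up to the first one that fails the comparison
    return p if exceeds.all() else int(np.argmin(exceeds))

# Simulated data with two underlying factors (hypothetical loadings)
rng = np.random.default_rng(0)
L_true = np.array([[0.9, 0.0], [0.8, 0.0], [0.7, 0.0],
                   [0.0, 0.9], [0.0, 0.8], [0.0, 0.7]])
psi_true = 1.0 - np.sum(L_true**2, axis=1)
X = rng.normal(size=(1000, 2)) @ L_true.T + rng.normal(size=(1000, 6)) * np.sqrt(psi_true)

k_kaiser = kaiser_criterion(X)
k_parallel = parallel_analysis(X)
print(k_kaiser, k_parallel)
```

A scree test uses the same sorted eigenvalues, plotted against their rank, and looks for the point where the curve flattens.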

Implementation

Parameters

- n_components: int. Dimensionality of the low-dimensional (latent) space.

- max_iter: int, default = 1000. Number of iterations.

- tol: float, default = 0.00001. Threshold for convergence.

- noise_variance: Matrixlist, default = None. Initial noise variances for each feature.

Examples

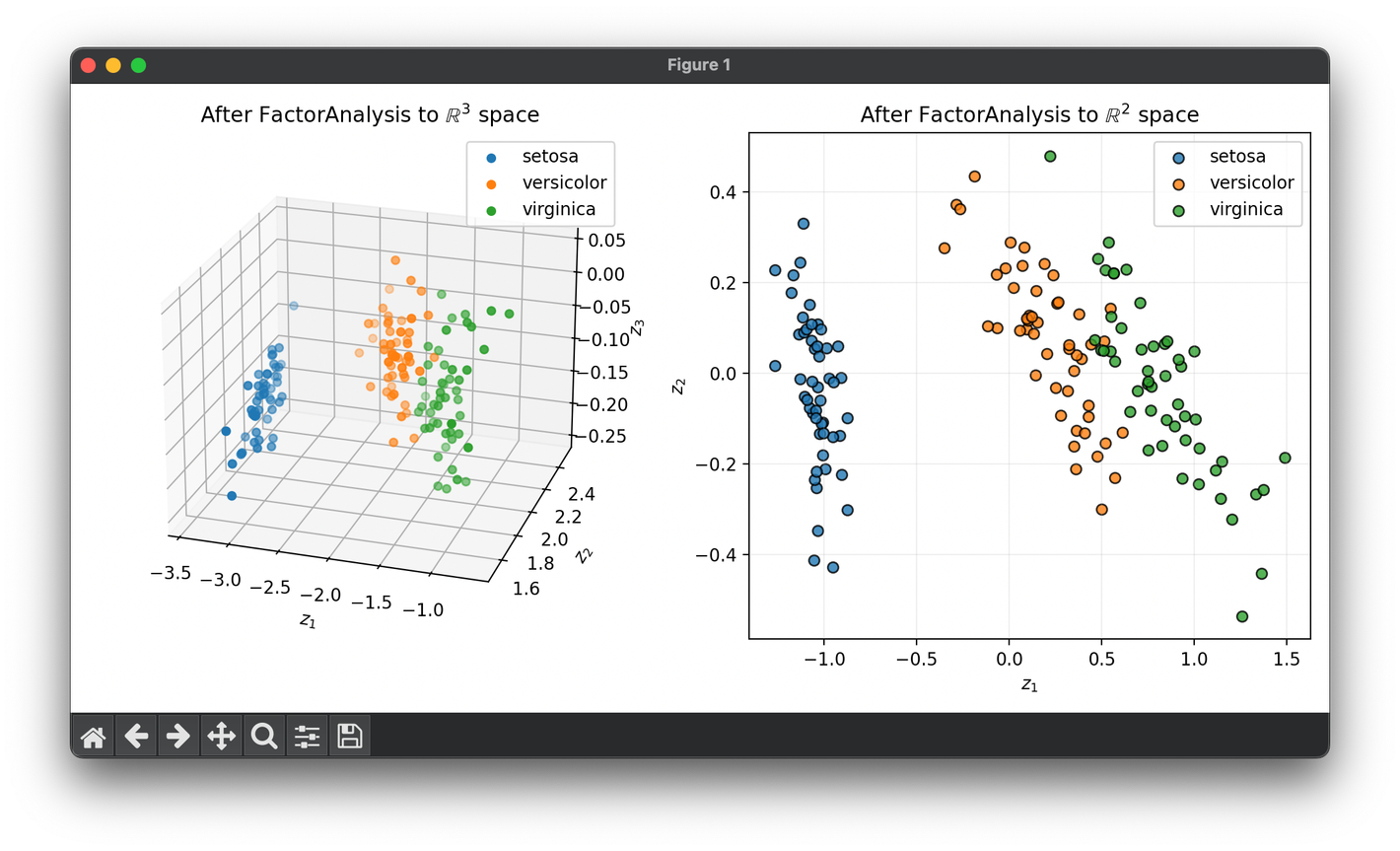

Test on the iris dataset:

    from luma.reduction.linear import FactorAnalysis
    from sklearn.datasets import load_iris
    import matplotlib.pyplot as plt
    import numpy as np

    # Load the iris data: features, class indices, and class names
    data_df = load_iris()
    X = data_df.data
    y = data_df.target
    labels = data_df.target_names

    # Fit one model per target dimensionality
    model_3d = FactorAnalysis(n_components=3, max_iter=1000)
    X_3d = model_3d.fit_transform(X)

    model_2d = FactorAnalysis(n_components=2, max_iter=1000)
    X_2d = model_2d.fit_transform(X)

    fig = plt.figure(figsize=(10, 5))
    ax1 = fig.add_subplot(1, 2, 1, projection="3d")
    ax2 = fig.add_subplot(1, 2, 2)

    # Left panel: three-factor embedding, one scatter per class
    for cl, lb in zip(np.unique(y), labels):
        X_cl = X_3d[y == cl]
        ax1.scatter(X_cl[:, 0], X_cl[:, 1], X_cl[:, 2], label=lb)

    ax1.set_xlabel(r"$z_1$")
    ax1.set_ylabel(r"$z_2$")
    ax1.set_zlabel(r"$z_3$")
    ax1.set_title(
        f"After {type(model_3d).__name__} to " +
        r"$\mathbb{R}^3$ space")
    ax1.legend()

    # Right panel: two-factor embedding, one scatter per class
    for cl, lb in zip(np.unique(y), labels):
        X_cl = X_2d[y == cl]
        ax2.scatter(X_cl[:, 0],
                    X_cl[:, 1],
                    label=lb,
                    edgecolors="black",
                    alpha=0.8)

    ax2.set_xlabel(r"$z_1$")
    ax2.set_ylabel(r"$z_2$")
    ax2.set_title(
        f"After {type(model_2d).__name__} to " +
        r"$\mathbb{R}^2$ space")
    ax2.grid(alpha=0.2)
    ax2.legend()

    plt.tight_layout()
    plt.show()

Applications and Considerations

- Factor Extraction: MLE is used to estimate the factor loadings matrix $\Lambda$ that represents the relationship between observed variables and latent factors.

- Unique Variances: MLE also estimates the unique variances $\Psi$, which are crucial for understanding the amount of variance in each variable not explained by the factors.
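Once loadings are available, the explained and unexplained share of each variable can be read off directly. The sketch below assumes standardized variables (total variance one) and uses a made-up loading matrix, so the numbers are purely illustrative.

```python
import numpy as np

# Hypothetical loadings for four standardized variables on two factors
Lambda = np.array([[0.8, 0.1],
                   [0.7, 0.2],
                   [0.1, 0.9],
                   [0.2, 0.6]])

communalities = np.sum(Lambda**2, axis=1)   # variance explained by the factors
uniquenesses = 1.0 - communalities          # variance left to the unique component

for j, (h2, u) in enumerate(zip(communalities, uniquenesses)):
    print(f"variable {j}: communality={h2:.2f}, uniqueness={u:.2f}")
```

A variable with a high uniqueness is poorly captured by the chosen factors and may warrant an additional factor or removal from the analysis.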

Considerations

- Normality Assumption: MLE in FA assumes multivariate normality of the data, which may not always hold. Violations of this assumption can lead to biased estimates.

Procedural Steps

- Prepare Data: Standardize the variables to have mean zero and unit variance.

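- Estimate Initial Parameters: Compute the sample covariance matrix $S$ (for standardized variables, the correlation matrix) as the initial estimate of the covariance matrix $\Sigma$.

- Determine Number of Factors: Use criteria like Kaiser's criterion, scree test, or parallel analysis to decide the number of factors $k$.

- Factor Extraction: Apply an extraction method (e.g., MLE or PAF) to estimate the loadings $\Lambda$ and the unique variances $\Psi$.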

- Rotate Factors: Optionally, apply a rotation method (e.g., Varimax) to the factor loading matrix to achieve a simpler, more interpretable structure.

- Interpret Factors: Interpret the rotated factors based on the loading of variables.
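The steps above can be sketched end to end with scikit-learn's `FactorAnalysis`, used here as a stand-in and not the class documented on this page. The choice of two factors is a hypothetical value picked for illustration, and the `rotation="varimax"` option assumes scikit-learn 0.24 or newer.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import FactorAnalysis
from sklearn.preprocessing import StandardScaler

data = load_iris()
X_std = StandardScaler().fit_transform(data.data)   # prepare data: zero mean, unit variance

k = 2                                               # number of factors (assumed for illustration)
fa = FactorAnalysis(n_components=k, rotation="varimax", random_state=0)
Z = fa.fit_transform(X_std)                         # extraction, rotation, factor scores

loadings = fa.components_.T                         # shape (n_features, k)
uniquenesses = fa.noise_variance_                   # estimated unique variances

# Interpret factors: inspect which variables load strongly on which factor
for name, row, u in zip(data.feature_names, loadings, uniquenesses):
    print(f"{name:20s} loadings={np.round(row, 2)} uniqueness={u:.2f}")
```

Variables with large absolute loadings on the same factor are candidates for a shared interpretation of that factor.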

Applications

- Psychometrics: Understanding personality traits, intelligence, or other abstract constructs.

- Market Research: Identifying underlying factors that influence consumer behavior.

- Data Reduction: Reducing the dimensionality of datasets while retaining essential information.

Strengths and Limitations

Strengths

- Interpretability: Can provide meaningful insights into the structure of complex datasets.

- Dimensionality Reduction: Reduces the number of variables by identifying underlying factors.

Limitations

- Assumptions: Assumes linear relationships and normally distributed error terms.

- Subjectivity in Interpretation: The naming and interpretation of factors can be subjective and context-dependent.

- Estimation Complexity: The estimation of factor models, especially determining the number of factors, can be complex and computationally intensive.

Advanced Topics

- Confirmatory Factor Analysis (CFA): A hypothesis-testing approach to Factor Analysis that tests whether a specific model fits the observed data.

- Exploratory Factor Analysis (EFA) vs. CFA: EFA is used when the researcher has no a priori hypothesis, whereas CFA is used to test a predefined factor structure.
