Support Vector Machines

- Here we approach the two-class classification problem in a direct way :

We try and find a plane that seperates the classes in feature space

- If we cannot, we get creative in two ways :

- We soften what we mean by

seperates, and - We enrich and enlarge the feature space so that separation is possible

- We soften what we mean by

What is a Hyperplane?

- A

hyperplanein dimensions is a flat affine subspace of dimension - In general the equation for a

hyperplanehas the form - In dimensions a

hyperplaneis a line - If the

hyperplanegoes throught the origin, otherwise not - The vector is called the normal vector - it points in a direction

orthogonalto the surface of ahyperplane

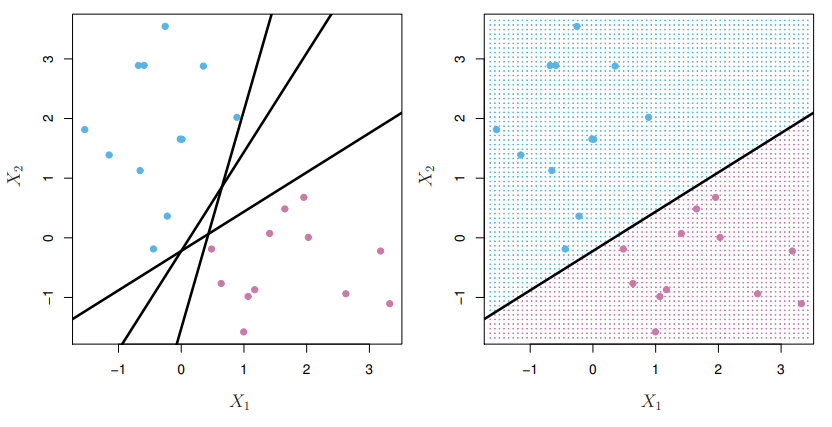

Separating Hyperplanes

- If , then for points on one side of the

hyperplane, and for points on the other - If we code the colored points as for blue, say, and for mauve, then if for all , defines a

separating hyperplane

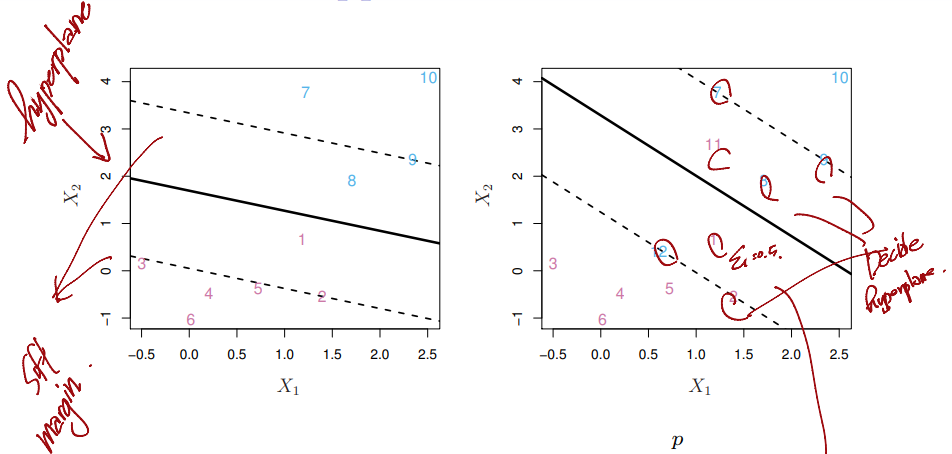

Maximal Margin Classifier

- Among all seperating

hyperplanes, find the one that makes the biggest gap ormarginbetween the two classes

- Constrained optimization problem

Non-separable Data

- The data are not separable by a linear boundary

- This is often the ase, unless

Noisy Data

- Sometimes the data are separable, but noisy

- This can lead to a poor solution for the

maximal-marginclassifier - The

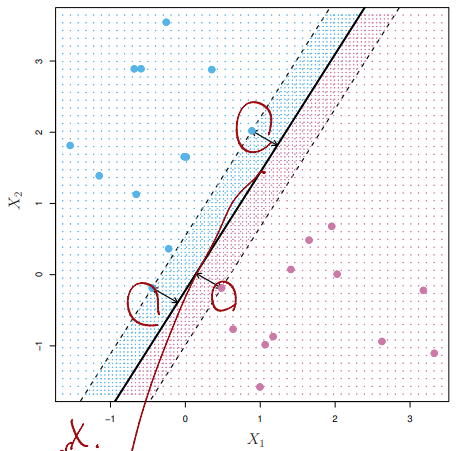

support vector classifiermaximizes asoft margin

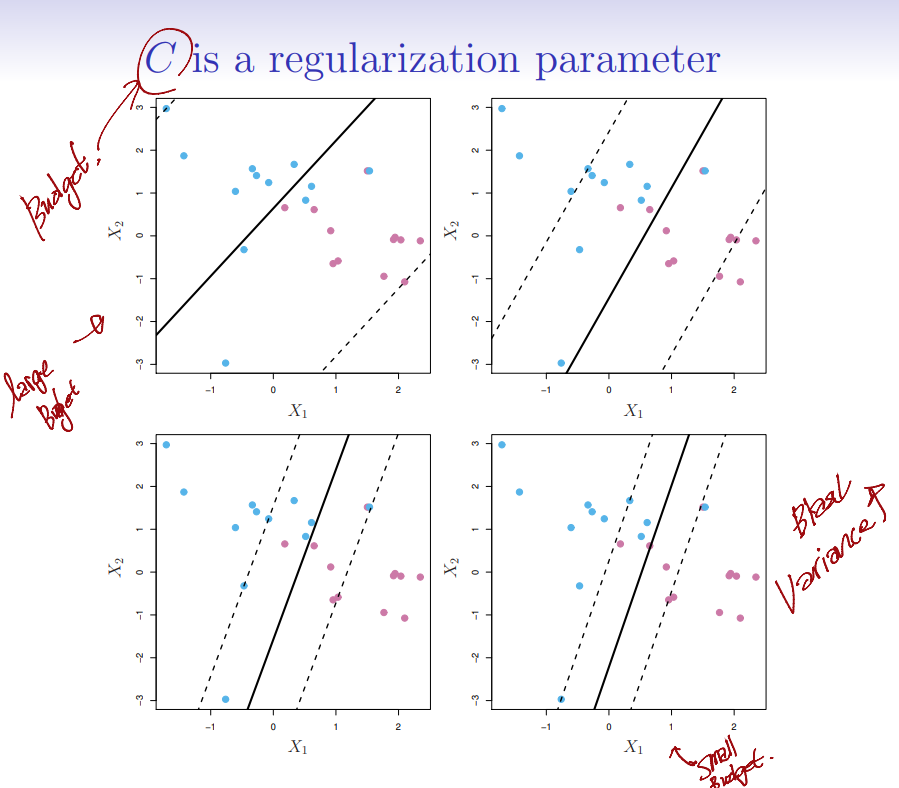

Support Vector Classifier

- : slack variable

- : Budget

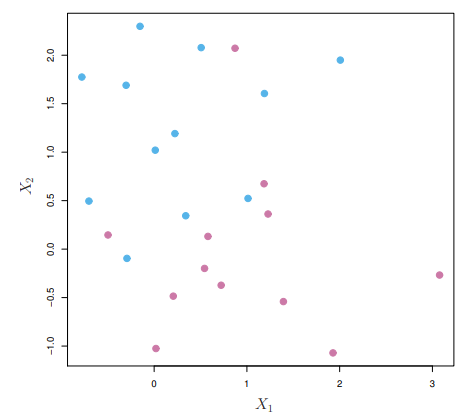

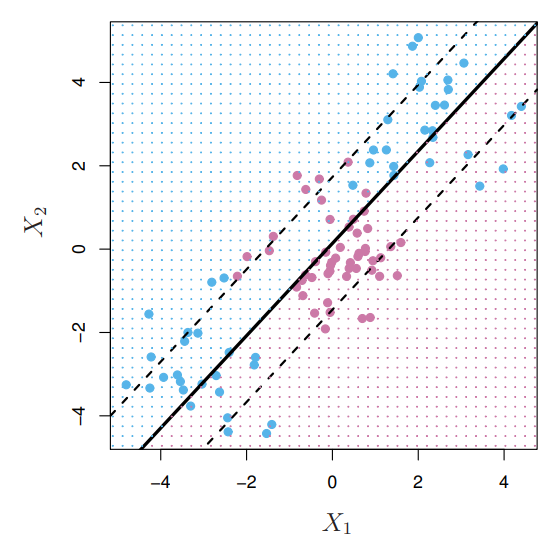

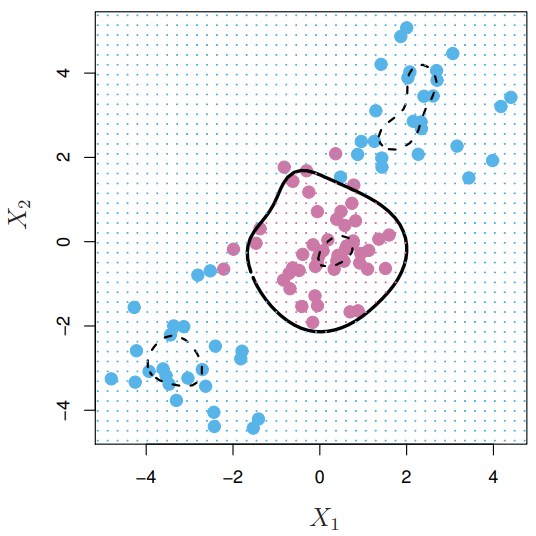

Linear boundary can fail

- Sometimes a linear boundary simply won't work, no matter what value of

- The example on the left is such a case. What to do?

Featue Expansion

- Enlarge the space of features by including transformations;

e.g. Hence go from a -dimensional space to a dimensional space - Fit a

support-vectorclassifier in the enlarged space - This results in

non-lineardecision boundaries in the original space - Example : Suppose we use instead of just . Then the decision boundary would be of the form

- This leads to nonlinear decision boundaries in the original space(quadratic conic sections)

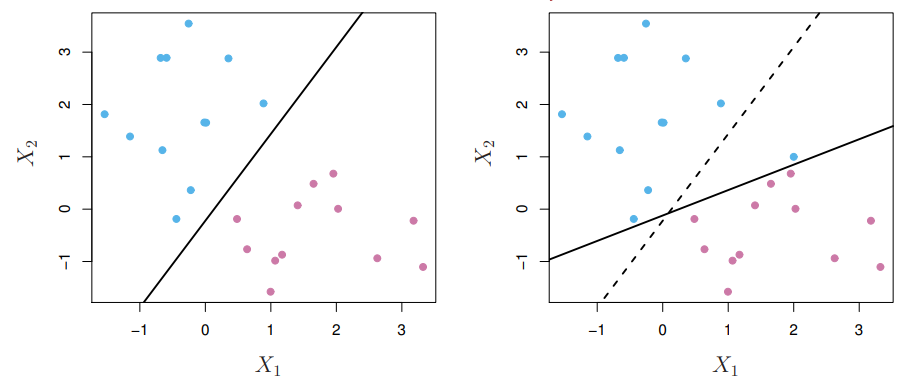

Cubic Polynomials

- Here we use a basis expansion of cubin polynomials

- From variables to

- The

support-vector classifierin the enlarged space solves the problem in the lower-dimensional space

Nonlinearities and Kernels

- Polynomials (especially high-dimensional ones) get wild rather fast

- There is a more elegant and controlled way to introduce nonlinearities in

support-vectorclassifiers - through the use ofkernels - Before we discuss these, we must understand the role of

inner productsinsupport-vectorclassifiers

Inner products and Support Vectors

- The linear

support vectorclassifier can be represented as - To estimate the parameters and , all we need are the inner products between all pairs of training observations

- It turns out that most of the can be zero :

- is the

support setof indies such that

Kernels and Support Vector Machines

- If we can compute inner-products between observations, we can fit a

SVclassifier. Can be quite abstract! - Some special

kernel functionscan do this for uscomputes thisinner-productsneeded for dimensional polynomials - basis functionsTry it for and

- The solution has the form

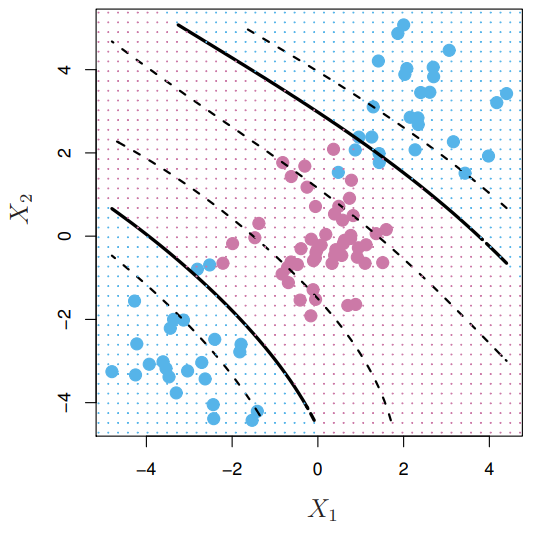

Radial Kernel

- Implict feature space; very high dimensional

- Controls variance by squashing down most dimensions severely

Example : Heart Data

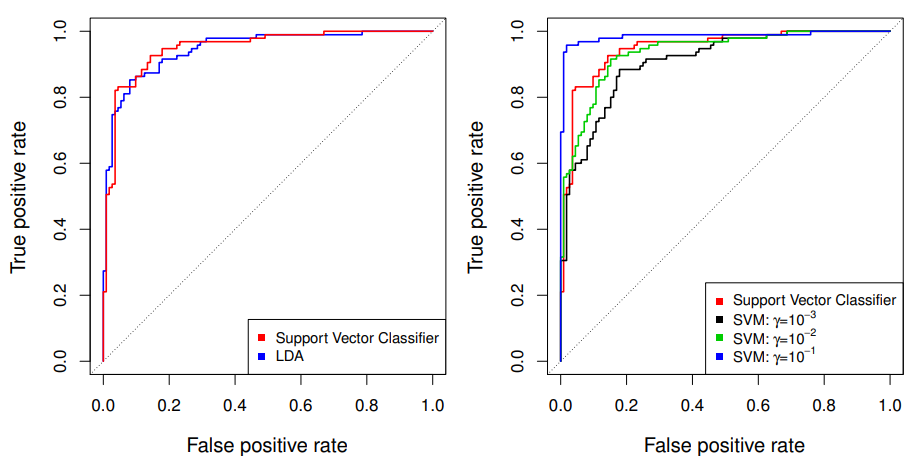

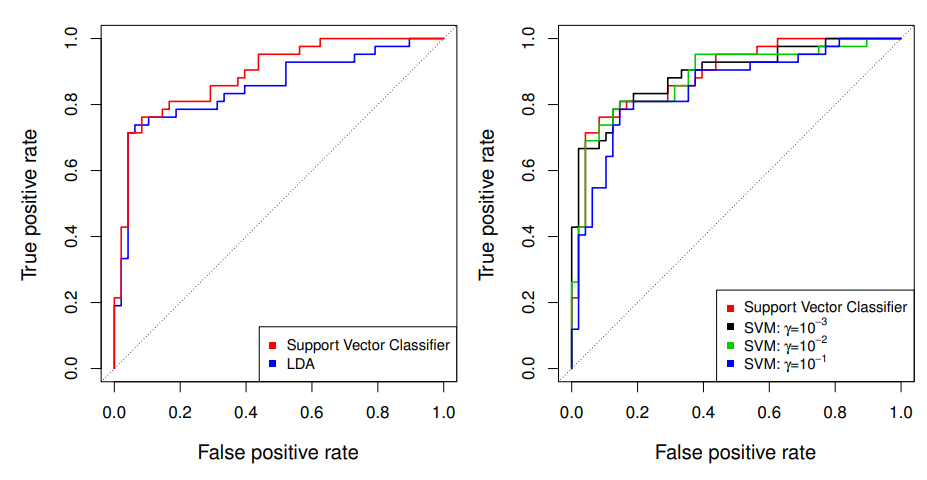

ROC Curveis obtained by changing the threshold to threshold in , and recordingfalse positiveandtrue positiverates as varies- here we see

ROC curveson training data

SVMs : more than 2 classes?

- The

SVMas defined works for classes - What do we do if we have classes?

OVA: One versus All.

Fit different 2-classSVM classifierseach class versus the rest

Classify to the class for whichOVO: One versus One

Fit all pairwise classifiers

Classify to the class that wins the most pairwise competitions

- Which to choose? If is not too large, use

OVO

Support Vector vs Logistic Regression?

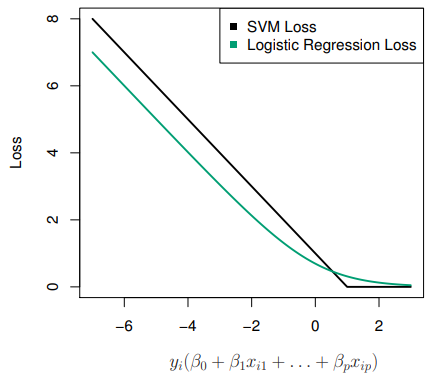

-

With can rephrase

support-vectorclassifier optimization as

-

This has the form

loss plus penalty -

The loss is known as the

hinge lossvery similar tolossin logistic regression (negative log-likelihood)

Which to use : SVM or Logistic Regression

- When classes are (nearly) separable,

SVMdoes better thanLR. So doesLDA - When not,

LR(with ridge penalty) andSVMvery similar - If you wish to estimate probabilities,

LRis the choice - For nonlinear boundaries, kernel

SVMs are popular - Can use kernels with

LRandLDAas well, but computations are more expensive