This post summarizes what I learned and practiced in the third cohort of AEWS (Amazon EKS Workshop Study), run by gasida.

Week 8 covers Jenkins CI/CD and Argo CD.

The lab environment runs on macOS (M1) with kind.

I already wrote a CI/CD series in December 2024, so most of the theory is skipped here:

Week 1 - Jenkins CI/CD + Docker

Week 2 - GitHub Actions CI/CD

Week 3 - Jenkins CI/ArgoCD + K8S(1/2)

Week 3 - Jenkins CI/ArgoCD + K8S(2/2)

This post revisits that material and focuses on the hands-on steps.

1. Lab environment setup

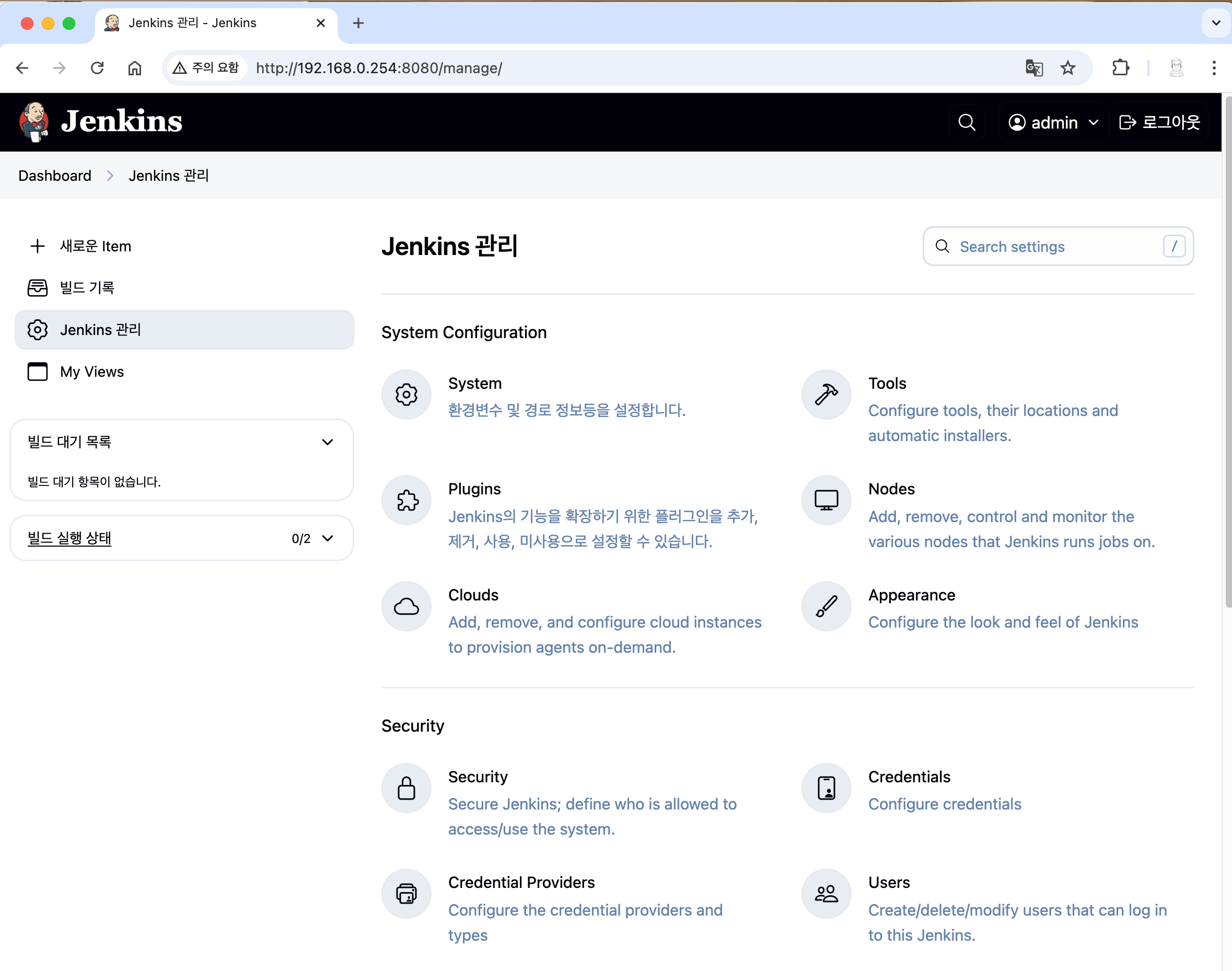

1.1 jenkins

-

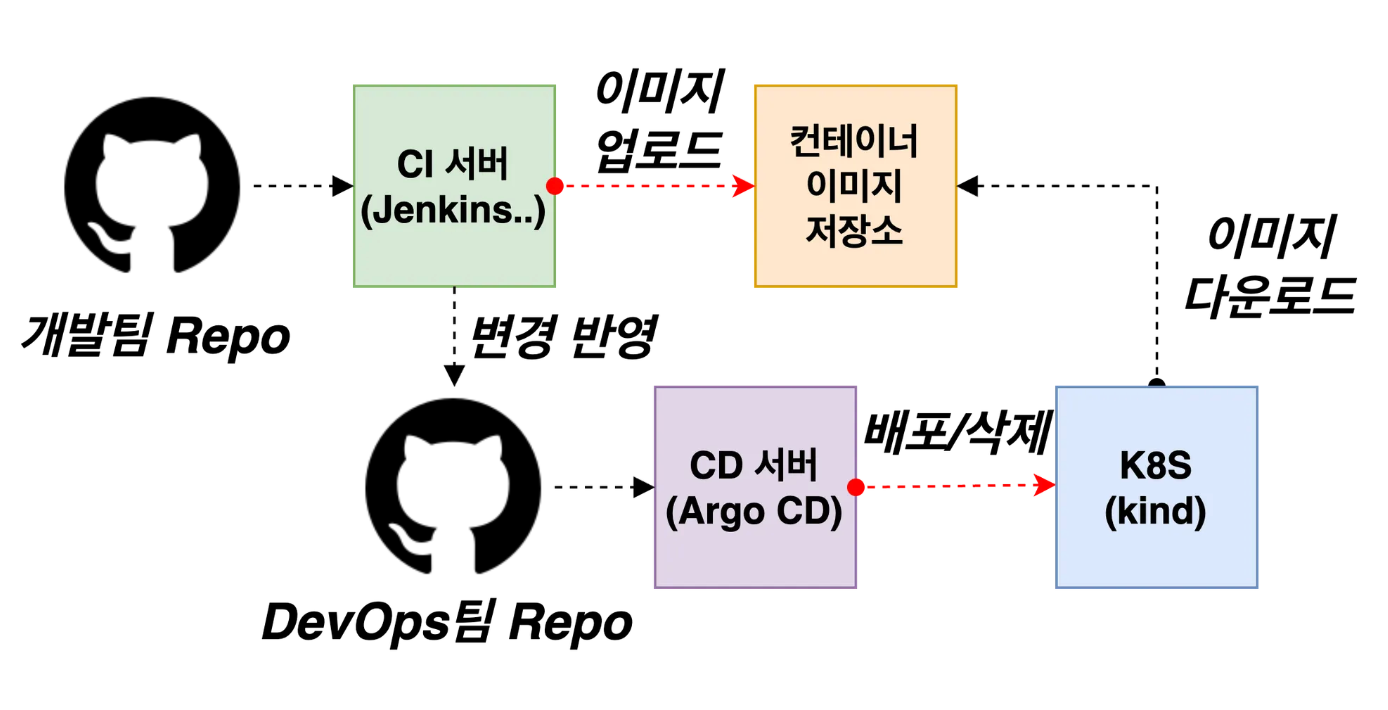

실습환경 아키텍처

-

컨테이터 2대(Jenkins, gogs) : 호스트 OS 포트 노출(expose)로 접속 및 사용

-

Jenkins, gogs 컨테이너 기동

# Create a working directory and move into it
mkdir cicd-labs
cd cicd-labs

# Keep the cicd-labs directory open in an IDE (VS Code, etc.)

# Write the compose file
cat <<EOT > docker-compose.yaml
services:

  jenkins:
    container_name: jenkins
    image: jenkins/jenkins
    restart: unless-stopped
    networks:
      - cicd-network
    ports:
      - "8080:8080"
      - "50000:50000"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - jenkins_home:/var/jenkins_home

  gogs:
    container_name: gogs
    image: gogs/gogs
    restart: unless-stopped
    networks:
      - cicd-network
    ports:
      - "10022:22"
      - "3000:3000"
    volumes:
      - gogs-data:/data

volumes:
  jenkins_home:
  gogs-data:

networks:
  cicd-network:
    driver: bridge
EOT

# Deploy
docker compose up -d
docker compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

gogs gogs/gogs "/app/gogs/docker/st…" gogs About a minute ago Up About a minute (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp

jenkins jenkins/jenkins "/usr/bin/tini -- /u…" jenkins About a minute ago Up About a minute 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp

# Check basic information

for i in gogs jenkins ; do echo ">> container : $i <<"; docker compose exec $i sh -c "whoami && pwd"; echo; done

>> container : gogs <<

root

/app/gogs

>> container : jenkins <<

jenkins

/

# Open a shell in each container with docker
docker compose exec jenkins bash
exit

docker compose exec gogs bash
exit

- Initial Jenkins container setup

# Check the initial Jenkins admin password
docker compose exec jenkins cat /var/jenkins_home/secrets/initialAdminPassword
6dc2c6d1172a41fa9dc3e8086f18530a

# Open the Jenkins web UI and enter the account / password >> admin / qwe123
open "http://127.0.0.1:8080" # macOS
# Windows: open http://127.0.0.1:8080 in a web browser

# (Reference) Follow the logs to watch the plugin installation
docker compose logs jenkins -f

- (Reference) Leaving the containers stopped after the lab, then starting them again after a reboot

# Leave the containers stopped after finishing the lab
docker compose stop
docker compose ps
docker compose ps -a

# Start the containers again after rebooting the Mac
docker compose start
docker compose ps

- (Reference) Removing a single container and starting it again from a clean state

# gogs: remove the container together with its volume
docker compose down gogs -v
docker compose up gogs -d

# jenkins: remove the container together with its volume
docker compose down jenkins -v
docker compose up jenkins -d

- (Reference) Removing everything once all labs are done: docker compose down --volumes --remove-orphans

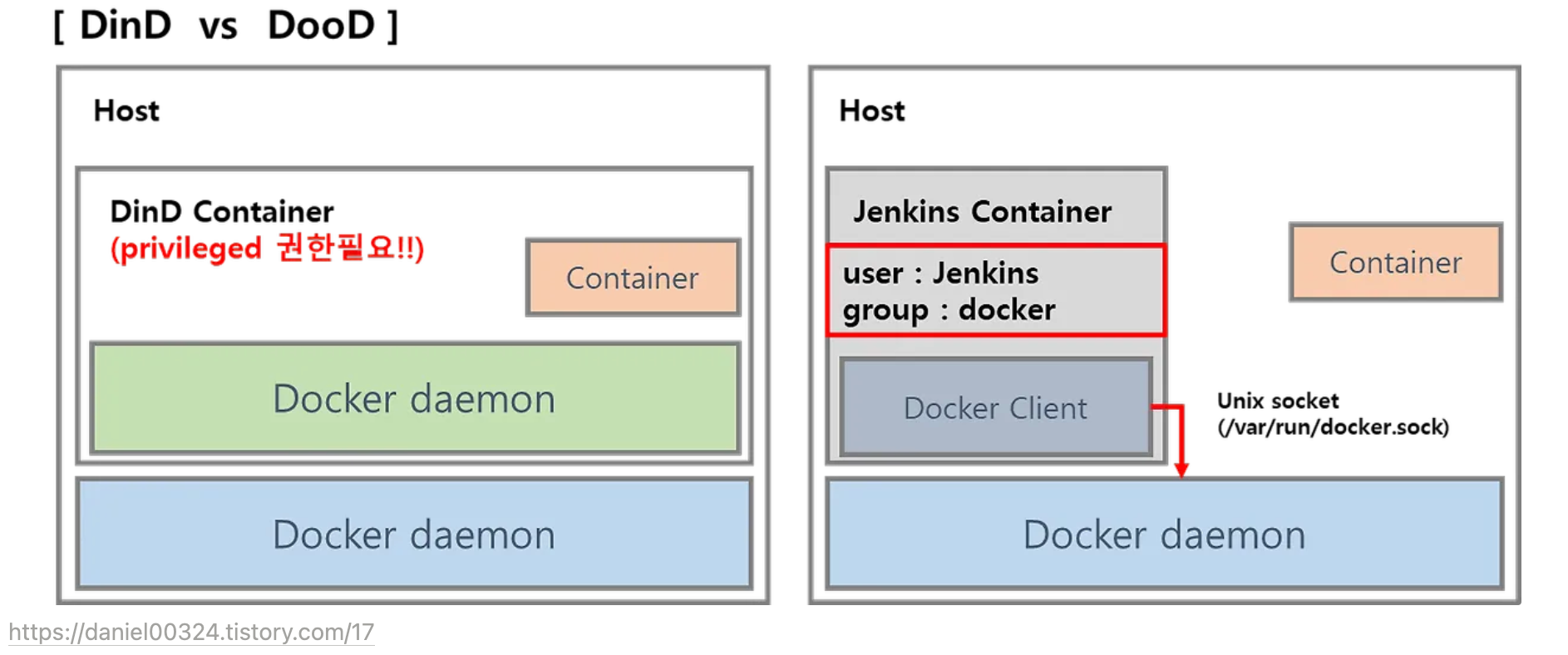

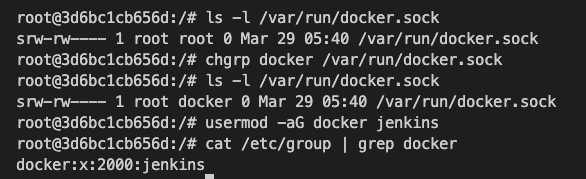

1.2 Setting up Jenkins with Docker-out-of-Docker

- Architecture diagram

- Install the Docker CLI inside the Jenkins container

# Install the Docker CLI inside the Jenkins container
docker compose exec --privileged -u root jenkins bash

-----------------------------------------------------

id

uid=0(root) gid=0(root) groups=0(root)

curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc
chmod a+r /etc/apt/keyrings/docker.asc
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian \
  $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
  tee /etc/apt/sources.list.d/docker.list > /dev/null
apt-get update && apt install docker-ce-cli curl tree jq yq -y

docker info

Client: Docker Engine - Community

Version: 28.0.4

Context: default

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.22.0

Path: /usr/libexec/docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.34.0

Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:

Containers: 2

Running: 2

Paused: 0

Stopped: 0

Images: 2

Server Version: 28.0.1

Storage Driver: overlayfs

driver-type: io.containerd.snapshotter.v1

...

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3d6bc1cb656d jenkins/jenkins "/usr/bin/tini -- /u…" 28 minutes ago Up 28 minutes 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

0537bf5db764 gogs/gogs "/app/gogs/docker/st…" 28 minutes ago Up 28 minutes (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

which docker

/usr/bin/docker

# Allow the non-root jenkins user inside the Jenkins container to run docker as well

groupadd -g 2000 -f docker # macOS(Container)

chgrp docker /var/run/docker.sock

ls -l /var/run/docker.sock

usermod -aG docker jenkins

cat /etc/group | grep docker

exit

--------------------------------------------

# Jenkins items fail with a docker permission error until the container is restarted, so that the Jenkins app picks up the group change above
docker compose restart jenkins

# Verify that the jenkins user can run docker commands

docker compose exec jenkins id

docker compose exec jenkins docker info

docker compose exec jenkins docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3d6bc1cb656d jenkins/jenkins "/usr/bin/tini -- /u…" 32 minutes ago Up 36 seconds 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

0537bf5db764 gogs/gogs "/app/gogs/docker/st…" 32 minutes ago Up 32 minutes (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

mac 재부팅 시에 jenkins 컨테이너에서 docker 실행 실패 시 : 소켓 파일에 docker 그룹을 다시 지정

# 소켓 파일에 그룹이 다시 원상태로 복귀...

docker compose exec --privileged -u root jenkins ls -l /var/run/docker.sock

# 소켓 파일에 docker 그룹을 다시 지정

docker compose exec --privileged -u root jenkins chgrp docker /var/run/docker.sock

# 확인

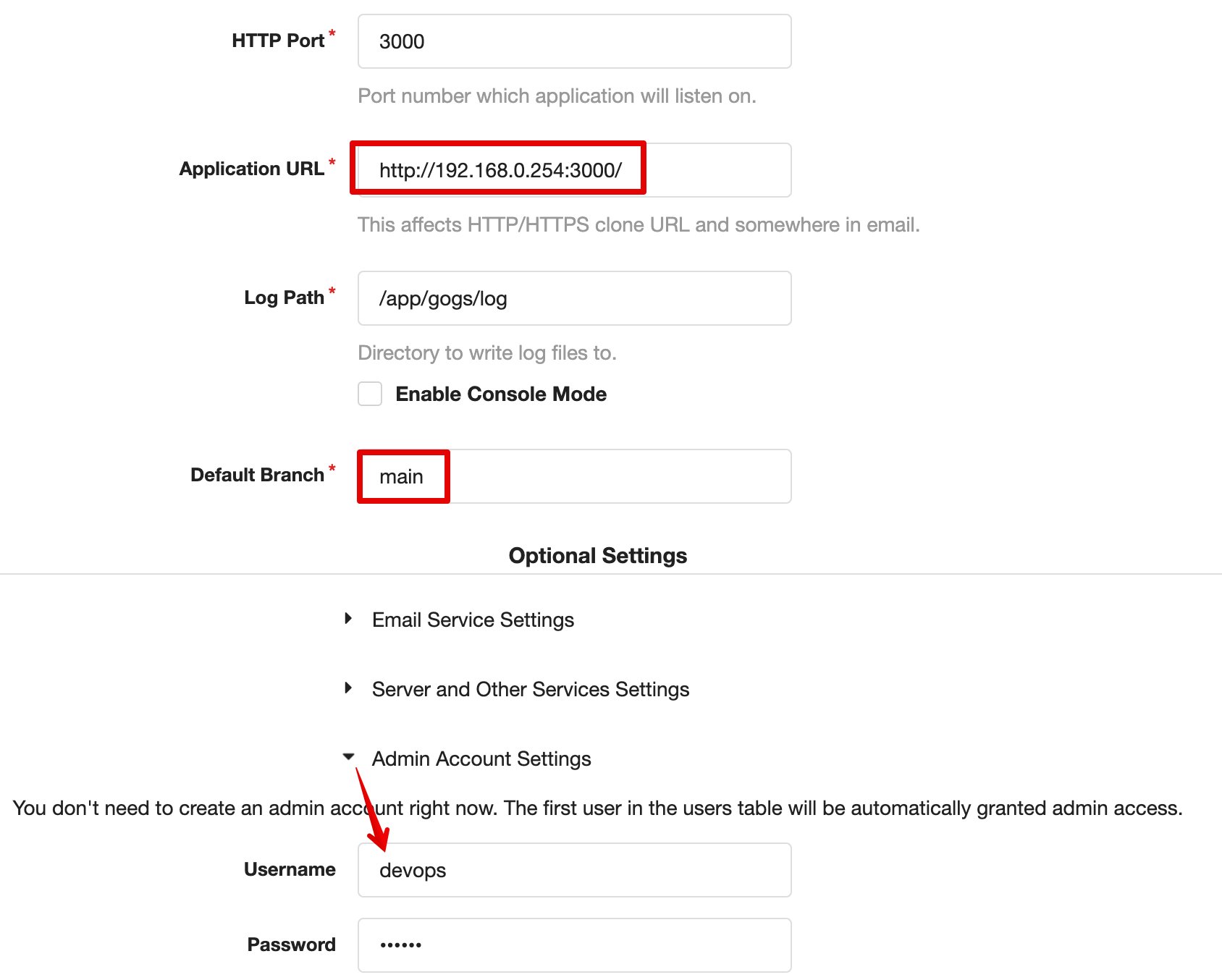

docker compose exec jenkins docker info1.3 gogs

-

Gogs is a painless self-hosted Git service

-

초기 설정위한 웹 접속 : open "http://127.0.0.1:3000/install

-

초기 설정

- 데이터베이스 유형 : SQLite3

- 애플리케이션 URL :

http://<각자 자신의 PC IP>:3000/ - 기본 브랜치 : main

- 관리자 계정 설정 클릭 : 이름(계정명 - 닉네임 사용 devops), 비밀번호(계정암호 qwe123), 이메일 입력

→ Gogs 설치하기 클릭 ⇒ 관리자 계정으로 로그인 후 접속

-

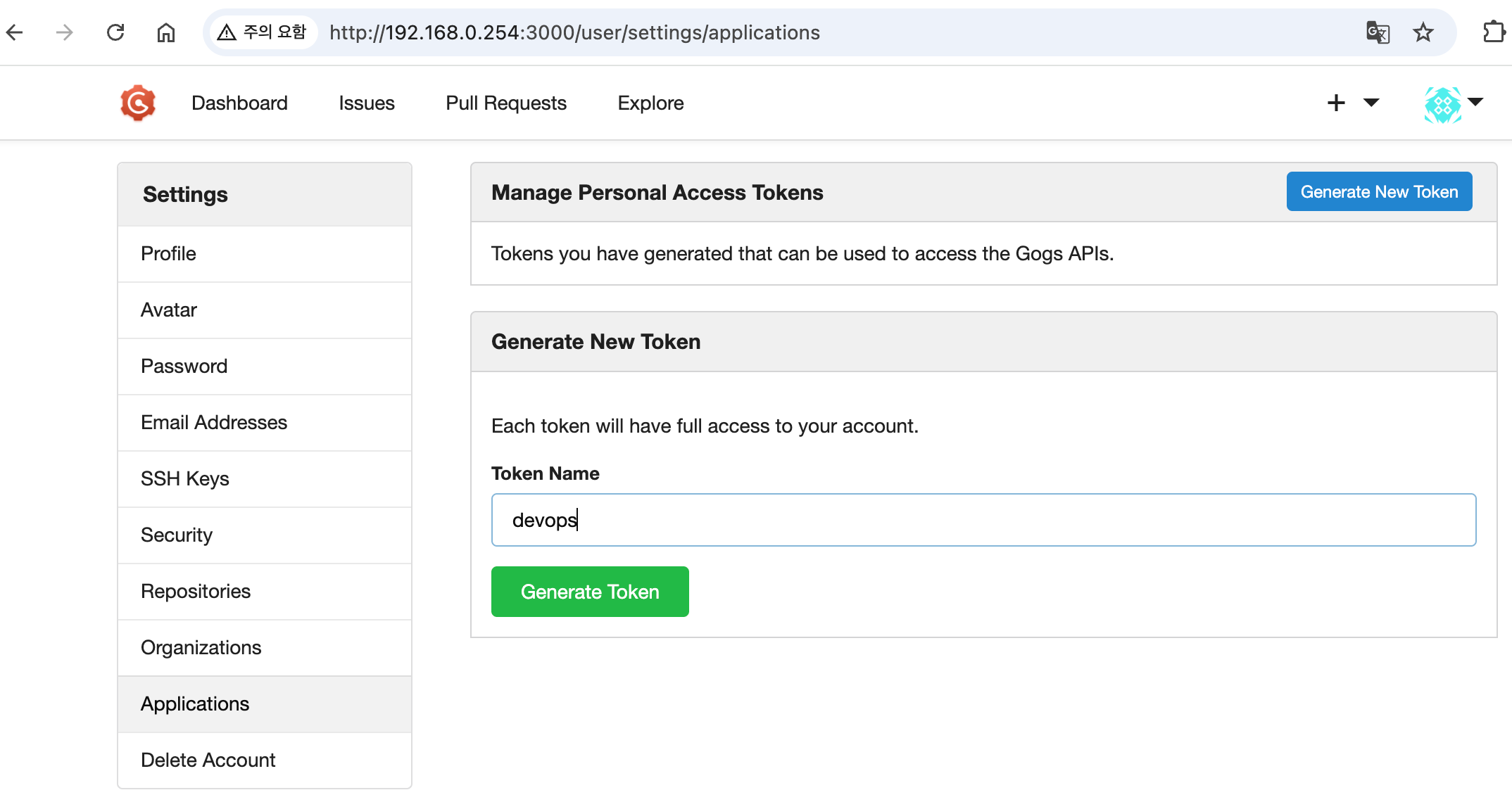

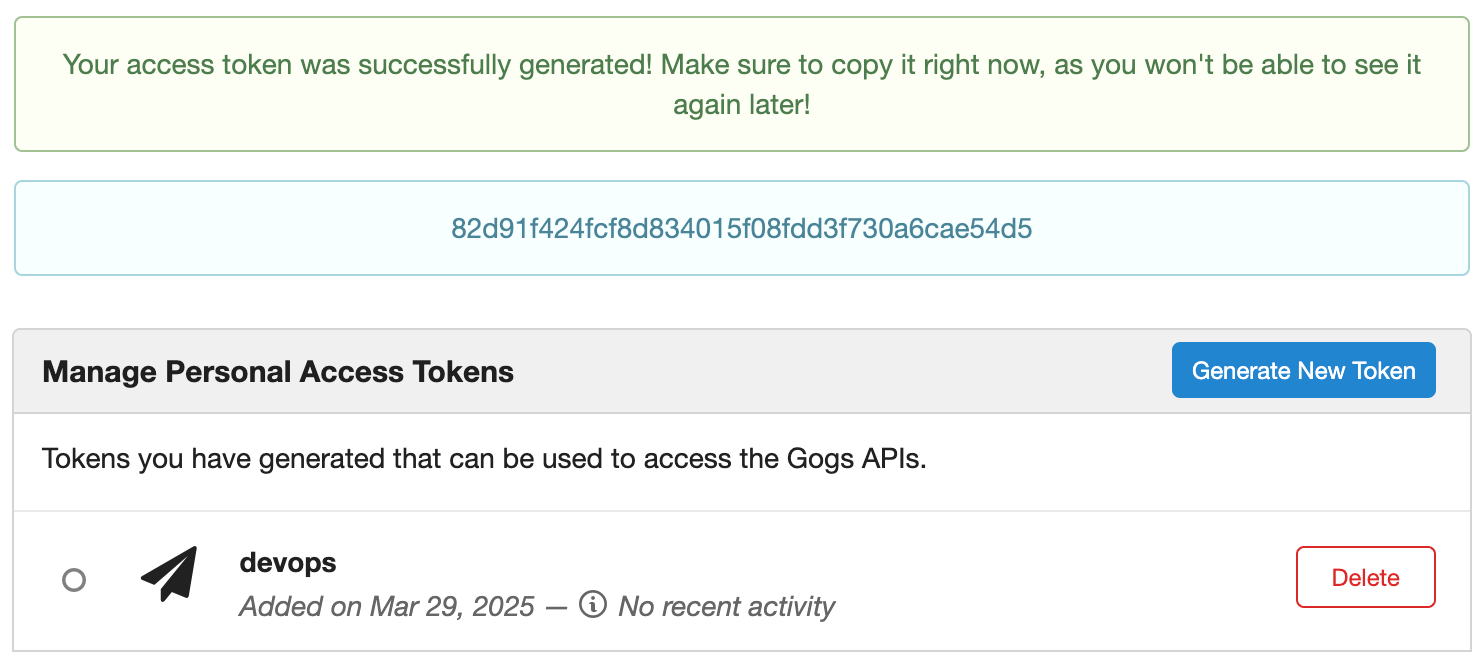

Token 생성로그인 후 → Your Settings → Applications : Generate New Token 클릭 - Token Name(devops) ⇒ Generate Token 클릭 : 메모해두기! 82d91f424fcf8d834015f08fdd3f730a6cae54d5

-

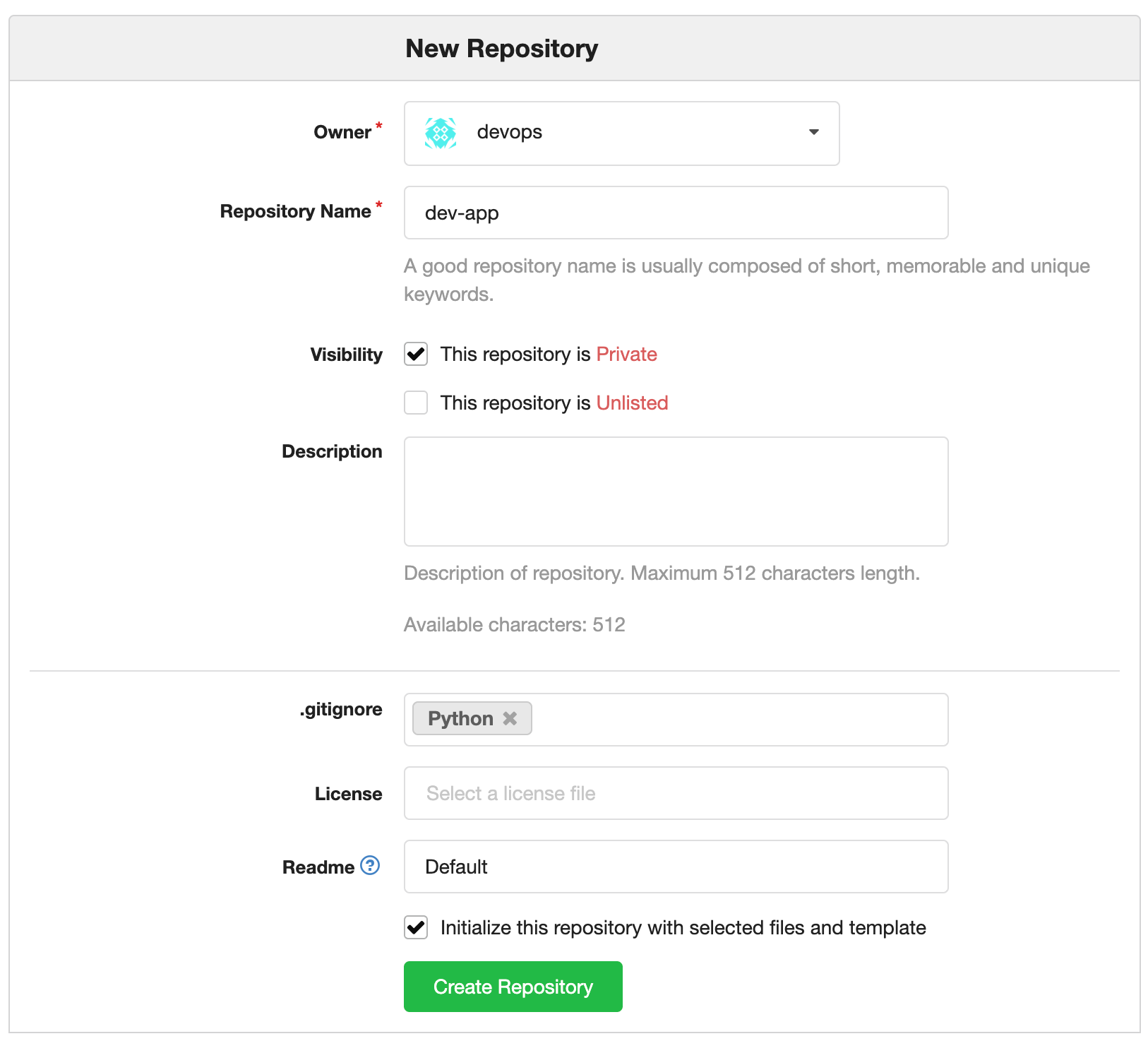

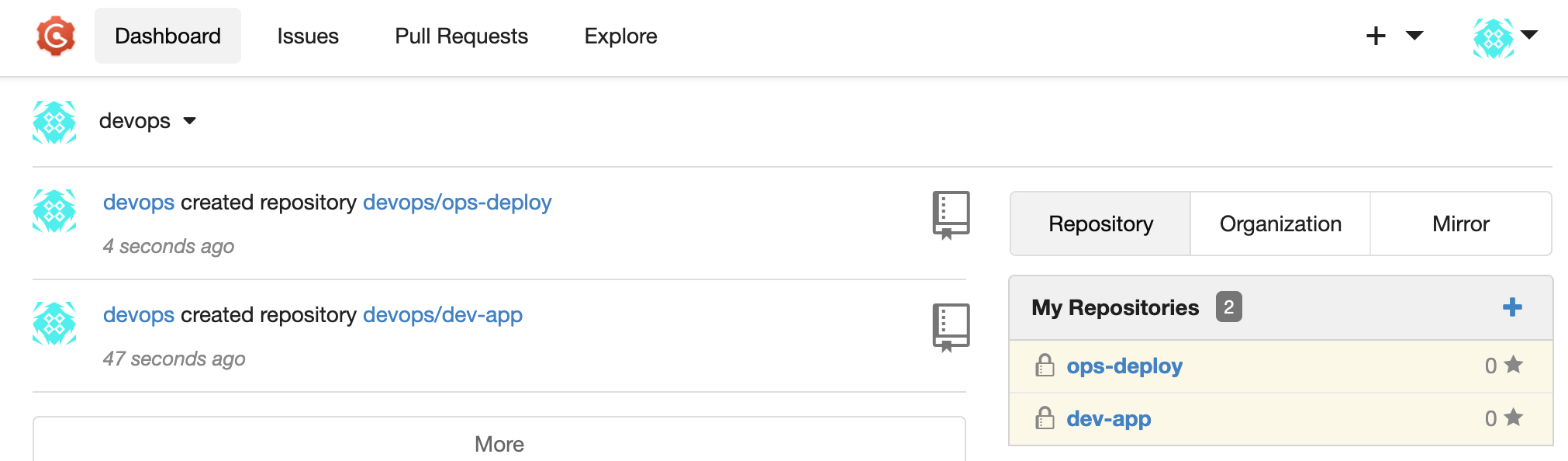

- New Repository 1: for the development team
  - Repository Name: dev-app
  - Visibility: (Check) This repository is Private
  - .gitignore: Python
  - Readme: Default → (Check) initialize this repository with selected files and template
  ⇒ Click Create Repository and check the repo address

- New Repository 2: for the DevOps team
  - Repository Name: ops-deploy
  - Visibility: (Check) This repository is Private
  - .gitignore: Python
  - Readme: Default → (Check) initialize this repository with selected files and template
  ⇒ Click Create Repository and check the repo address

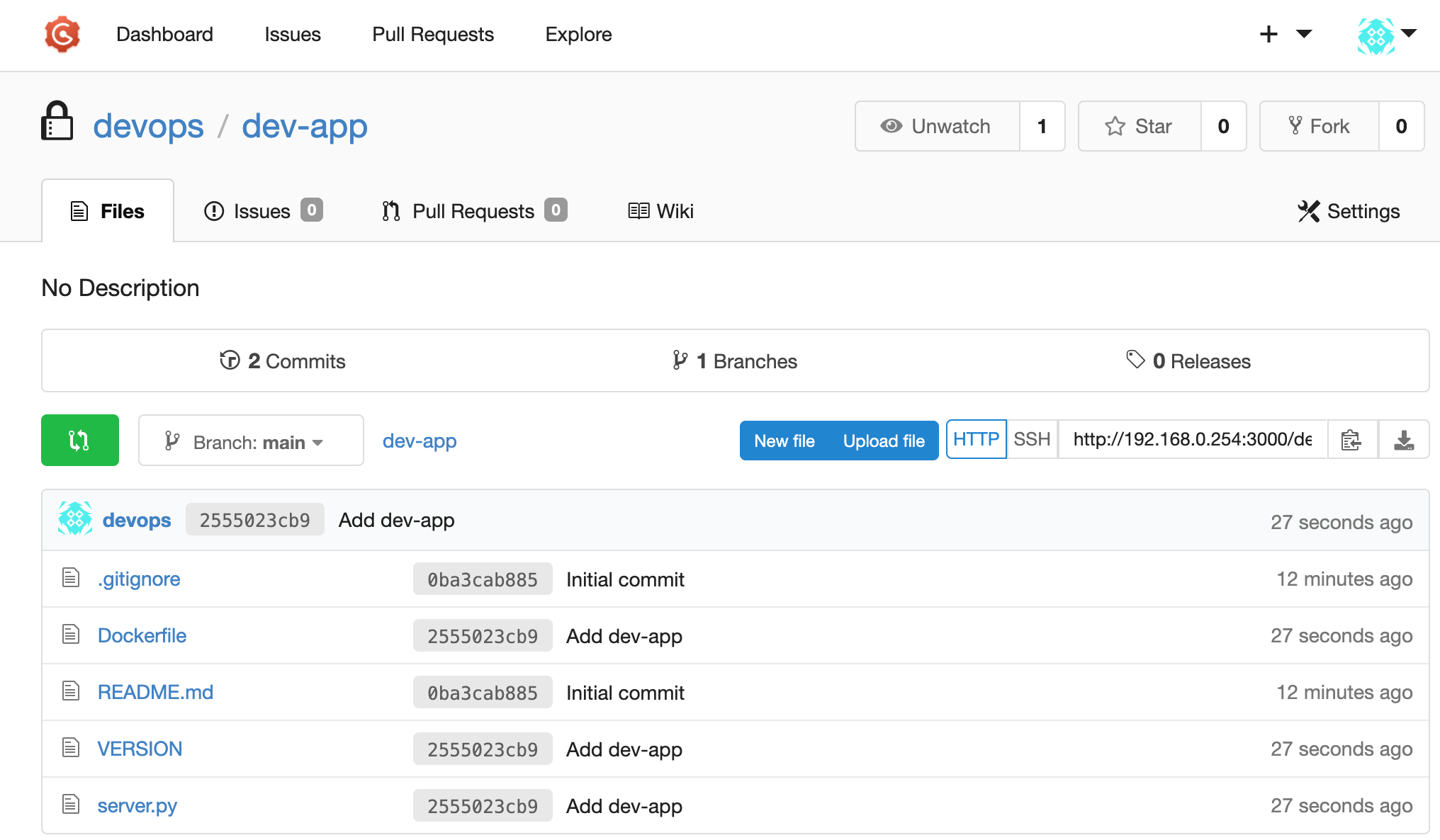

- Repository setup for the Gogs lab: work with git directly on the host

# (Optional) Reset cached git credentials

git credential-cache exit

#

git config --list --show-origin

#

TOKEN=<your Gogs token>
TOKEN=82d91f424fcf8d834015f08fdd3f730a6cae54d5

MyIP=<your own PC IP> # Windows (WSL2) users: enter the eth0 IP of your WSL2 Ubuntu
MyIP=192.168.0.254

git clone <your Gogs dev-app repo address>
git clone http://devops:$TOKEN@$MyIP:3000/devops/dev-app.git

Cloning into 'dev-app'...

...

#

cd dev-app

#

git --no-pager config --local --list

git config --local user.name "devops"

git config --local user.email "a@a.com"

git config --local init.defaultBranch main

git config --local credential.helper store

git --no-pager config --local --list

cat .git/config

#

git --no-pager branch

* main

git remote -v

origin http://devops:82d91f424fcf8d834015f08fdd3f730a6cae54d5@192.168.0.254:3000/devops/dev-app.git (fetch)

origin http://devops:82d91f424fcf8d834015f08fdd3f730a6cae54d5@192.168.0.254:3000/devops/dev-app.git (push)

# Write server.py
cat > server.py <<EOF
from http.server import ThreadingHTTPServer, BaseHTTPRequestHandler
from datetime import datetime
import socket

class RequestHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        match self.path:
            case '/':
                now = datetime.now()
                hostname = socket.gethostname()
                response_string = now.strftime("The time is %-I:%M:%S %p, VERSION 0.0.1\n")
                response_string += f"Server hostname: {hostname}\n"
                self.respond_with(200, response_string)
            case '/healthz':
                self.respond_with(200, "Healthy")
            case _:
                self.respond_with(404, "Not Found")

    def respond_with(self, status_code: int, content: str) -> None:
        self.send_response(status_code)
        self.send_header('Content-type', 'text/plain')
        self.end_headers()
        self.wfile.write(bytes(content, "utf-8"))

def startServer():
    try:
        server = ThreadingHTTPServer(('', 80), RequestHandler)
        print("Listening on " + ":".join(map(str, server.server_address)))
        server.serve_forever()
    except KeyboardInterrupt:
        server.shutdown()

if __name__== "__main__":
    startServer()
EOF

# (Reference) Run the server locally to check it
python3 server.py
curl localhost
curl localhost/healthz
# Press CTRL+C to stop the server

# Create the Dockerfile
cat > Dockerfile <<EOF
FROM python:3.12
ENV PYTHONUNBUFFERED 1
COPY . /app
WORKDIR /app
CMD python3 server.py
EOF

# Create the VERSION file
echo "0.0.1" > VERSION

#

tree

git status

git add .

git commit -m "Add dev-app"

git push -u origin main

...

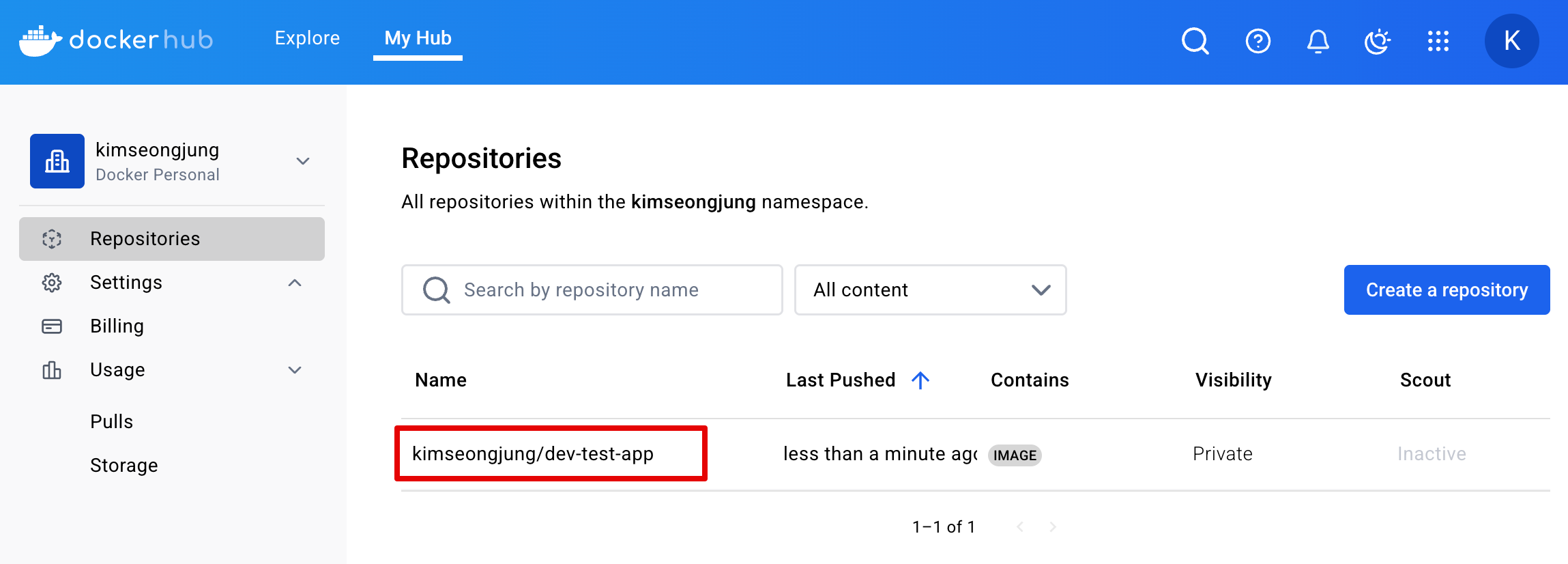

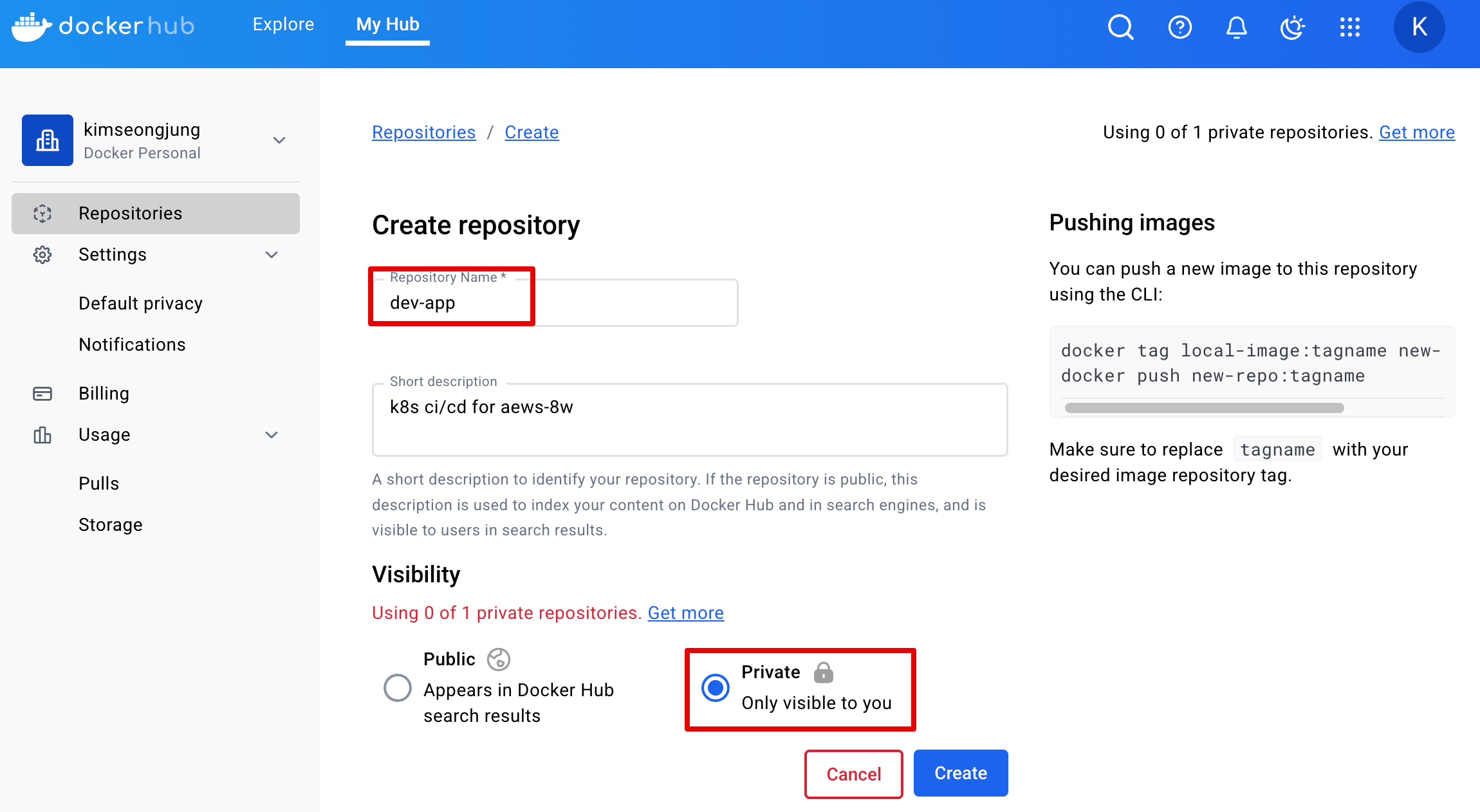

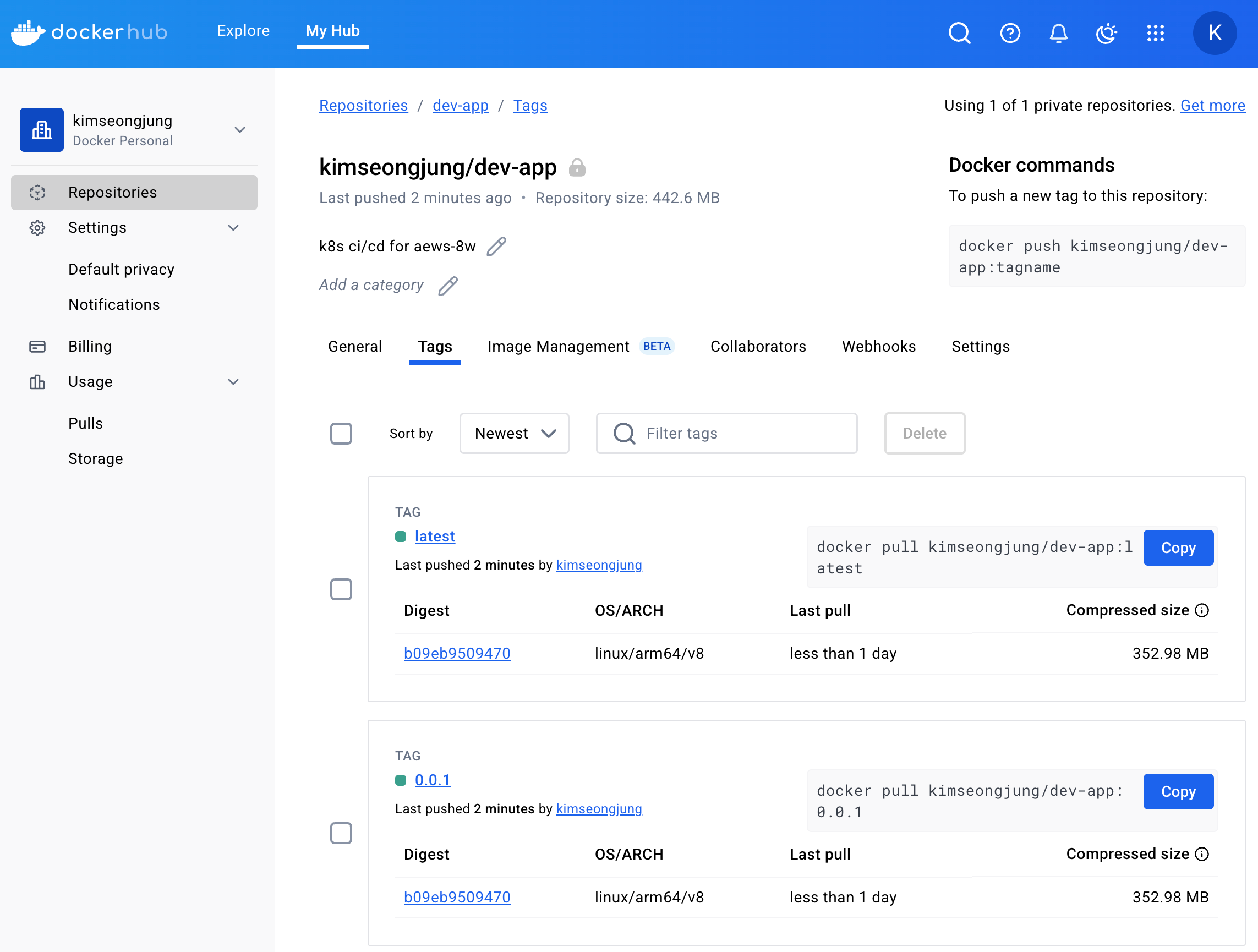

1.4 DockerHub

- Create your own personal account and a dev-test-app repository

- Build and Push a container image to Docker Hub from your computer
  - Start by creating a Dockerfile to specify your application as shown below:

    # syntax=docker/dockerfile:1
    FROM busybox
    CMD echo "Hello world! This is my first Docker image."

  - Run docker build -t <your_username>/my-private-repo . to build your Docker image.
  - Run docker run <your_username>/my-private-repo to test your Docker image locally.
  - Run docker push <your_username>/my-private-repo to push your Docker image to Docker Hub.

- Write the Dockerfile

# Write the Dockerfile
cat > Dockerfile <<EOF
FROM busybox
CMD echo "Hello world! This is my first Docker image."
EOF
cat Dockerfile

# Build
DOCKERID=<your Docker account name>
DOCKERREPO=<your Docker repository>
DOCKERID=kimseongjung
DOCKERREPO=dev-test-app
docker build -t $DOCKERID/$DOCKERREPO .
docker images | grep $DOCKERID/$DOCKERREPO

# Run the image to check it
docker run $DOCKERID/$DOCKERREPO

# Log in
docker login -u $DOCKERID

# Push
docker push $DOCKERID/$DOCKERREPO

-

(참고) Name your local images using one of these methods:

- When you build them, using

docker build -t <hub-user>/<repo-name>[:<tag> - By re-tagging the existing local image with

docker tag <existing-image> <hub-user>/<repo-name>[:<tag>]. - By using

docker commit <existing-container> <hub-user>/<repo-name>[:<tag>]to commit changes.

- When you build them, using

-

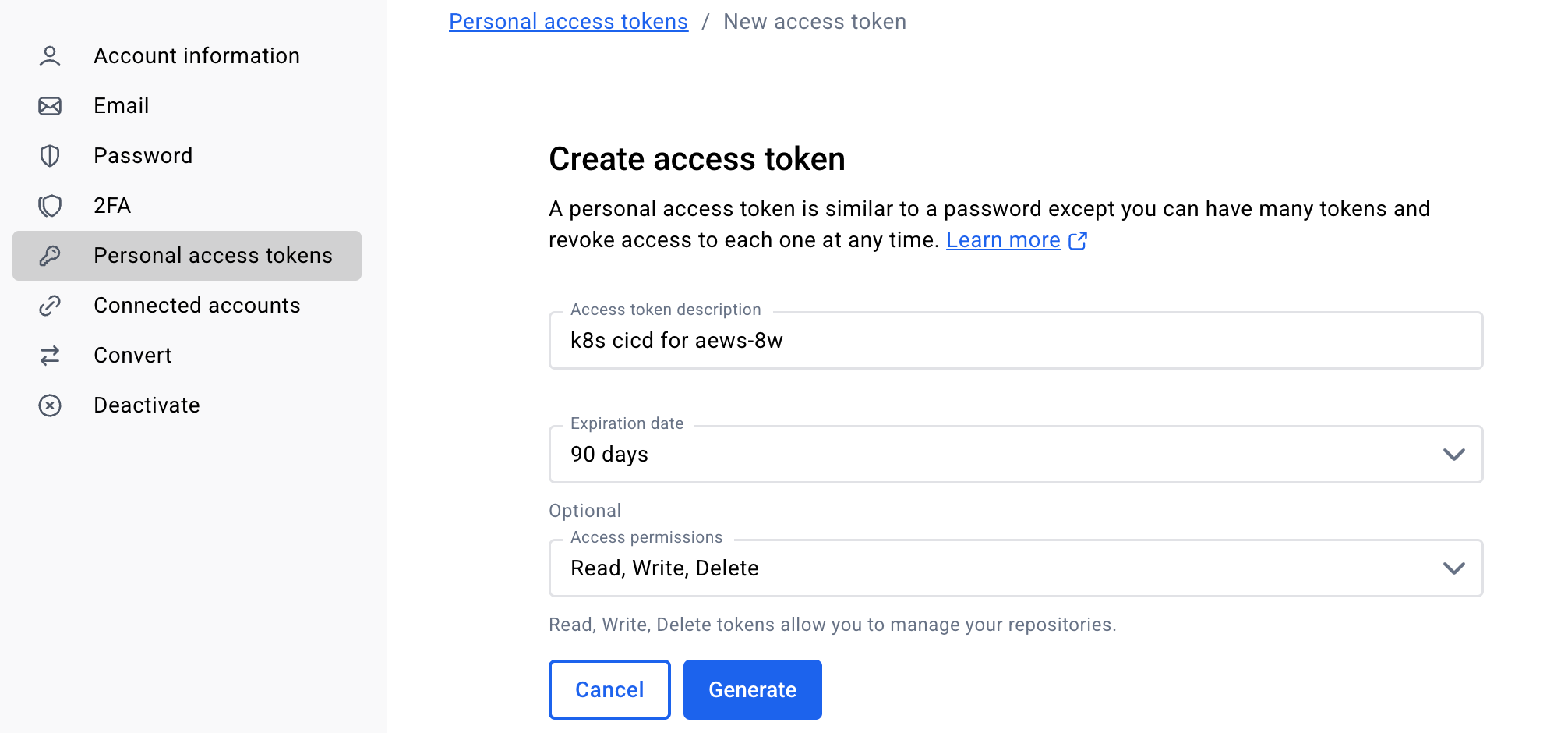

자신의 도커 허브 계정에 Token 발급

-

자신의 도커 허브 계정에 dev-app (Private) repo 생성

-

Token 발급

-

2. Jenkins CI + K8S

2.1 Install k8s with kind

# Check the running containers before creating the cluster

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3d6bc1cb656d jenkins/jenkins "/usr/bin/tini -- /u…" 2 hours ago Up About an hour 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

0537bf5db764 gogs/gogs "/app/gogs/docker/st…" 2 hours ago Up 2 hours (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# Create a cluster with kind

MyIP=<your own PC IP>
MyIP=192.168.0.254

# Write the file below in the cicd-labs directory
cd ..
cat > kind-3node.yaml <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  apiServerAddress: "$MyIP"
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  - containerPort: 30003
    hostPort: 30003
- role: worker
- role: worker
EOF

kind create cluster --config kind-3node.yaml --name myk8s --image kindest/node:v1.32.2

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.32.2) 🖼

✓ Preparing nodes 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

# Verify

kind get nodes --name myk8s

myk8s-worker

myk8s-worker2

myk8s-control-plane

kubens default

# kind creates and uses its own Docker network: 172.18.0.0/16 by default (this environment got 172.19.0.0/16, as the inspect output shows)

docker network ls

NETWORK ID NAME DRIVER SCOPE

71612b3002fa bridge bridge local

375e2ec9dc1c cicd-labs_cicd-network bridge local

88153fefe41f host host local

4a1bac68fb38 kind bridge local

6e2fe0aabee3 none null local

docker inspect kind | jq

[

{

"Name": "kind",

"Id": "4a1bac68fb3814234d8ace297f7410f75b157df2ee87eb6d8b2bd8cbfac7a175",

"Created": "2025-03-29T07:40:25.439081048Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv4": true,

"EnableIPv6": true,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "fc00:f853:ccd:e793::/64"

},

{

"Subnet": "172.19.0.0/16",

"Gateway": "172.19.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"1df3262a4ebd639ad063bf51eaf72411c9ddcdb48031e9c02f13fa2ee2d64ce5": {

"Name": "myk8s-worker",

"EndpointID": "6accfe76742c83f48ae2bd45f97c03571019023c793009bf4c1864585d0aed6d",

"MacAddress": "aa:de:ad:25:3e:b1",

"IPv4Address": "172.19.0.3/16",

"IPv6Address": "fc00:f853:ccd:e793::3/64"

},

"2d200d8a8fe4c8e36b6eec69ddffafaa862c97fdc1bef560bb0ac8ca5e8d69a6": {

"Name": "myk8s-control-plane",

"EndpointID": "8fb7a395cd8dc3925a47c6bd57c08c65ef4054f43088f897e9af771100319e18",

"MacAddress": "ce:c9:24:43:96:84",

"IPv4Address": "172.19.0.4/16",

"IPv6Address": "fc00:f853:ccd:e793::4/64"

},

"669bbd829f58d76e64764602eef038f99719cd8536f9660bef5d6f593b2869d3": {

"Name": "myk8s-worker2",

"EndpointID": "f91b24c110eedf777eeffd8cba32602c5f08e2377584fe315d8936f05e8d6563",

"MacAddress": "a2:26:77:37:55:f8",

"IPv4Address": "172.19.0.2/16",

"IPv6Address": "fc00:f853:ccd:e793::2/64"

}

},

"Options": {

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.driver.mtu": "65535"

},

"Labels": {}

}

]

# Check the k8s API address: how is it reachable from the local machine?
kubectl cluster-info

# Check node information: the CRI is containerd
kubectl get node -o wide

# Check pod information: the CNI is kindnet
kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-668d6bf9bc-hnmbv 1/1 Running 0 2m45s 10.244.0.3 myk8s-control-plane <none> <none>

kube-system coredns-668d6bf9bc-w4xr6 1/1 Running 0 2m45s 10.244.0.4 myk8s-control-plane <none> <none>

kube-system etcd-myk8s-control-plane 1/1 Running 0 2m52s 172.19.0.4 myk8s-control-plane <none> <none>

kube-system kindnet-5cwcv 1/1 Running 0 2m43s 172.19.0.3 myk8s-worker <none> <none>

kube-system kindnet-8tkfc 1/1 Running 0 2m43s 172.19.0.2 myk8s-worker2 <none> <none>

kube-system kindnet-f9bnl 1/1 Running 0 2m46s 172.19.0.4 myk8s-control-plane <none> <none>

kube-system kube-apiserver-myk8s-control-plane 1/1 Running 0 2m52s 172.19.0.4 myk8s-control-plane <none> <none>

kube-system kube-controller-manager-myk8s-control-plane 1/1 Running 0 2m52s 172.19.0.4 myk8s-control-plane <none> <none>

kube-system kube-proxy-mzrk8 1/1 Running 0 2m43s 172.19.0.3 myk8s-worker <none> <none>

kube-system kube-proxy-wfpjp 1/1 Running 0 2m43s 172.19.0.2 myk8s-worker2 <none> <none>

kube-system kube-proxy-z86l5 1/1 Running 0 2m46s 172.19.0.4 myk8s-control-plane <none> <none>

kube-system kube-scheduler-myk8s-control-plane 1/1 Running 0 2m52s 172.19.0.4 myk8s-control-plane <none> <none>

local-path-storage local-path-provisioner-7dc846544d-w65rv 1/1 Running 0 2m45s 10.244.0.2 myk8s-control-plane <none> <none>

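# Check namespaces >> these are not the same thing as the Linux namespaces used by Docker containers!
kubectl get namespaces

# Check the control-plane/worker nodes (containers): the Docker container names are myk8s-control-plane, myk8s-worker, and myk8s-worker2
docker ps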

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1df3262a4ebd kindest/node:v1.32.2 "/usr/local/bin/entr…" 3 minutes ago Up 3 minutes myk8s-worker

669bbd829f58 kindest/node:v1.32.2 "/usr/local/bin/entr…" 3 minutes ago Up 3 minutes myk8s-worker2

2d200d8a8fe4 kindest/node:v1.32.2 "/usr/local/bin/entr…" 3 minutes ago Up 3 minutes 0.0.0.0:30000-30003->30000-30003/tcp, 192.168.0.254:50762->6443/tcp myk8s-control-plane

3d6bc1cb656d jenkins/jenkins "/usr/bin/tini -- /u…" 2 hours ago Up About an hour 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

0537bf5db764 gogs/gogs "/app/gogs/docker/st…" 2 hours ago Up 2 hours (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

docker images

# Verbose output (-v6) shows the credentials being loaded from ~/.kube/config

kubectl get pod -v6

I0329 16:46:17.766010 55029 loader.go:402] Config loaded from file: /Users/sjkim/.kube/config

I0329 16:46:17.767508 55029 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false

I0329 16:46:17.767517 55029 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false

I0329 16:46:17.767520 55029 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false

I0329 16:46:17.767522 55029 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false

I0329 16:46:17.792133 55029 round_trippers.go:560] GET https://192.168.0.254:50762/api/v1/namespaces/default/pods?limit=500 200 OK in 20 milliseconds

No resources found in default namespace.

# kube config 파일 확인

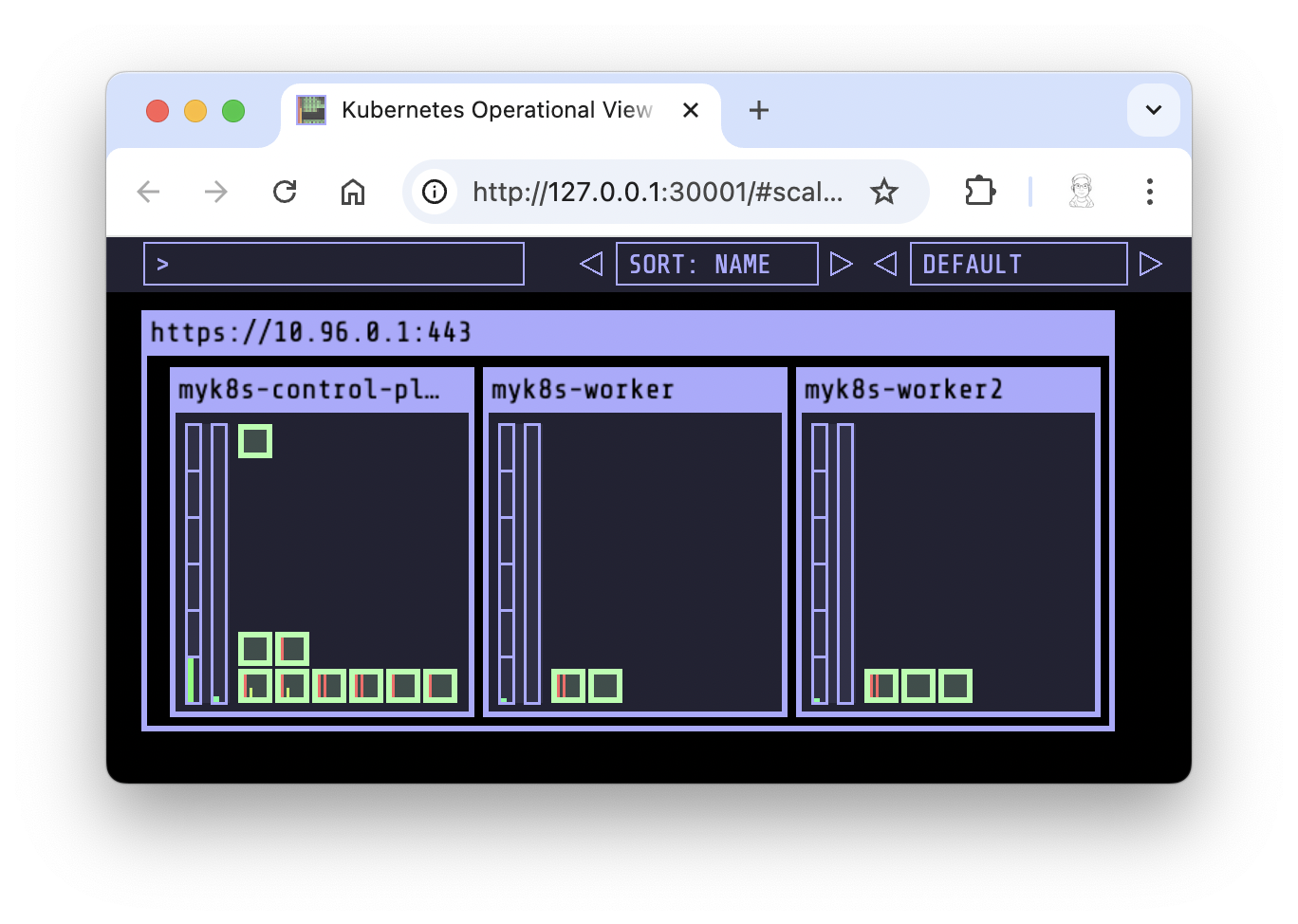

cat ~/.kube/config- kube-ops-view 설치

# kube-ops-view

# helm show values geek-cookbook/kube-ops-view

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=30001 --set env.TZ="Asia/Seoul" --namespace kube-system

# Verify the installation
kubectl get deploy,pod,svc,ep -n kube-system -l app.kubernetes.io/instance=kube-ops-view

# Open kube-ops-view (scale 1.5 or 2)

open "http://127.0.0.1:30001/#scale=1.5"

open "http://127.0.0.1:30001/#scale=2"

- (Reference) Delete the cluster

# Delete the cluster
kind delete cluster --name myk8s
docker ps
cat ~/.kube/config

2.2 Jenkins job overview

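- Job overview (project, job, item): a job contains three kinds of instructions
  - Trigger: when the job runs
    - Specifies when the job's tasks start
  - Build step: the step-by-step tasks that make up the job
    - The tasks needed to reach a specific goal can be organized as steps.
    - Jenkins calls these build steps.
  - Post-build action: commands to run after the tasks complete
    - For example, a follow-up action that notifies users of the result (success or failure), or copying the class files produced by compiling Java code to a specific location
- (Reference) A Jenkins build: one specific run of a Jenkins job
  - A job can be run many times, and each run gets a unique build number.
  - All details tied to a run, such as the artifacts it created and its console log, are stored under that build number.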
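The relationship between a trigger, build steps, post-build actions, and build numbers can be sketched as a toy model. This is only an illustration of the concepts above, not how Jenkins is implemented; the `Job` class and its fields are hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Job:
    """Toy model of a job: build steps plus post-build actions (hypothetical)."""
    name: str
    build_steps: List[Callable[[], None]]
    post_build_actions: List[Callable[[str], None]] = field(default_factory=list)
    next_build_number: int = 1
    history: Dict[int, str] = field(default_factory=dict)

    def trigger(self) -> int:
        """Run the job once and store the result under a new build number."""
        number = self.next_build_number
        self.next_build_number += 1
        result = "SUCCESS"
        try:
            for step in self.build_steps:      # build steps run in order
                step()
        except Exception:
            result = "FAILURE"
        for action in self.post_build_actions:  # always runs, receives the result
            action(result)
        self.history[number] = result
        return number


def failing_step() -> None:
    raise RuntimeError("compile error")


notifications: List[str] = []
job = Job(name="demo", build_steps=[lambda: None],
          post_build_actions=[notifications.append])

first = job.trigger()                 # every step succeeds
job.build_steps.append(failing_step)
second = job.trigger()                # second run hits the failing step
print(first, second, job.history, notifications)
```

Each call to `trigger()` plays the role of one build: it gets its own number, and the post-build action sees the outcome whether the steps succeeded or not.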

2.3 Jenkins configuration

- Install Jenkins plugins

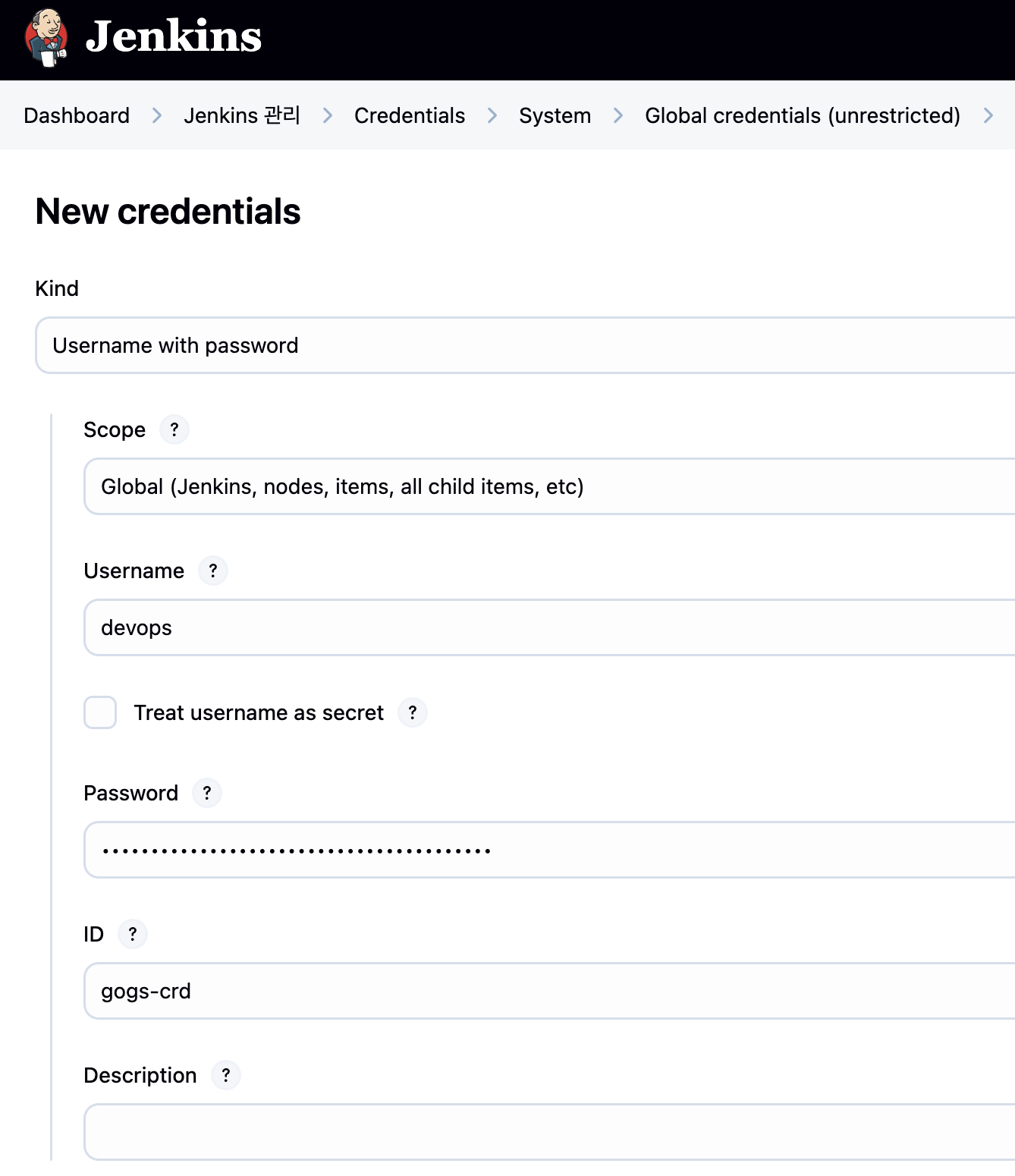

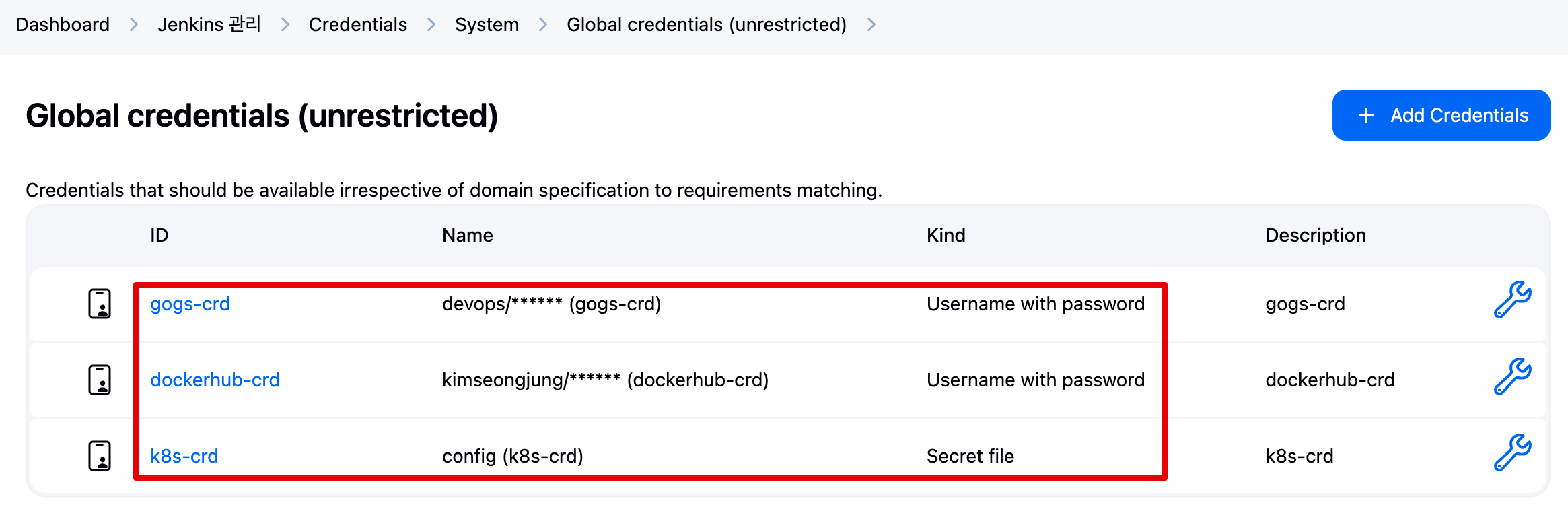

- Configure credentials: Manage Jenkins → Credentials → Globals → Add Credentials
  - Gogs repo credentials: gogs-crd
    - Kind: Username with password
    - Username: devops
    - Password: <Gogs token>
    - ID: gogs-crd
  - Docker Hub credentials: dockerhub-crd
    - Kind: Username with password
    - Username: <Docker account name>
    - Password: <Docker account password or token>
    - ID: dockerhub-crd
  - k8s (kind) credentials: k8s-crd
    - Kind: Secret file
    - File: <upload the kubeconfig file>
    - ID: k8s-crd
    ⇒ On macOS, copy the file with cp ~/.kube/config ./kube-config and upload the copy

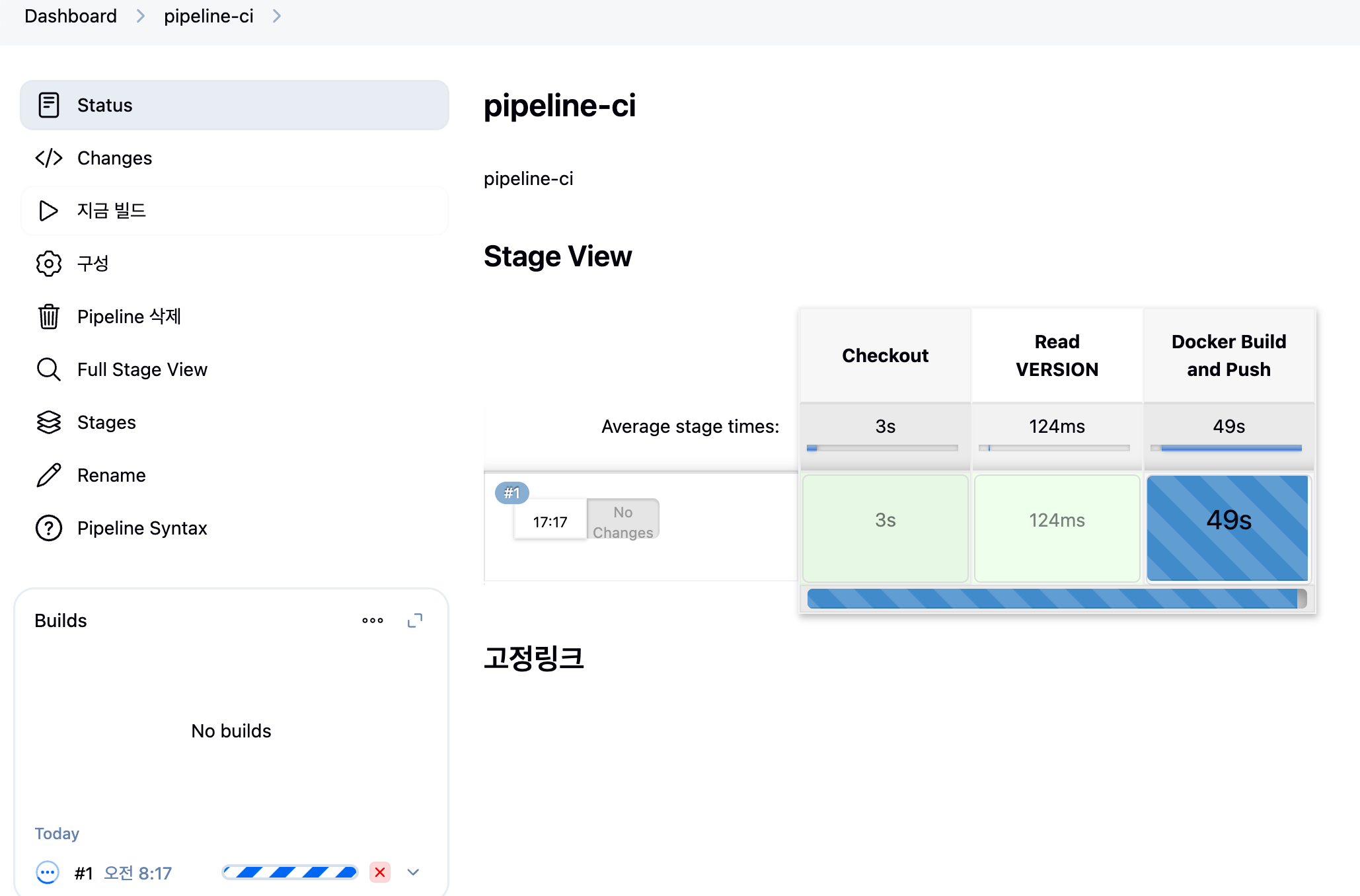

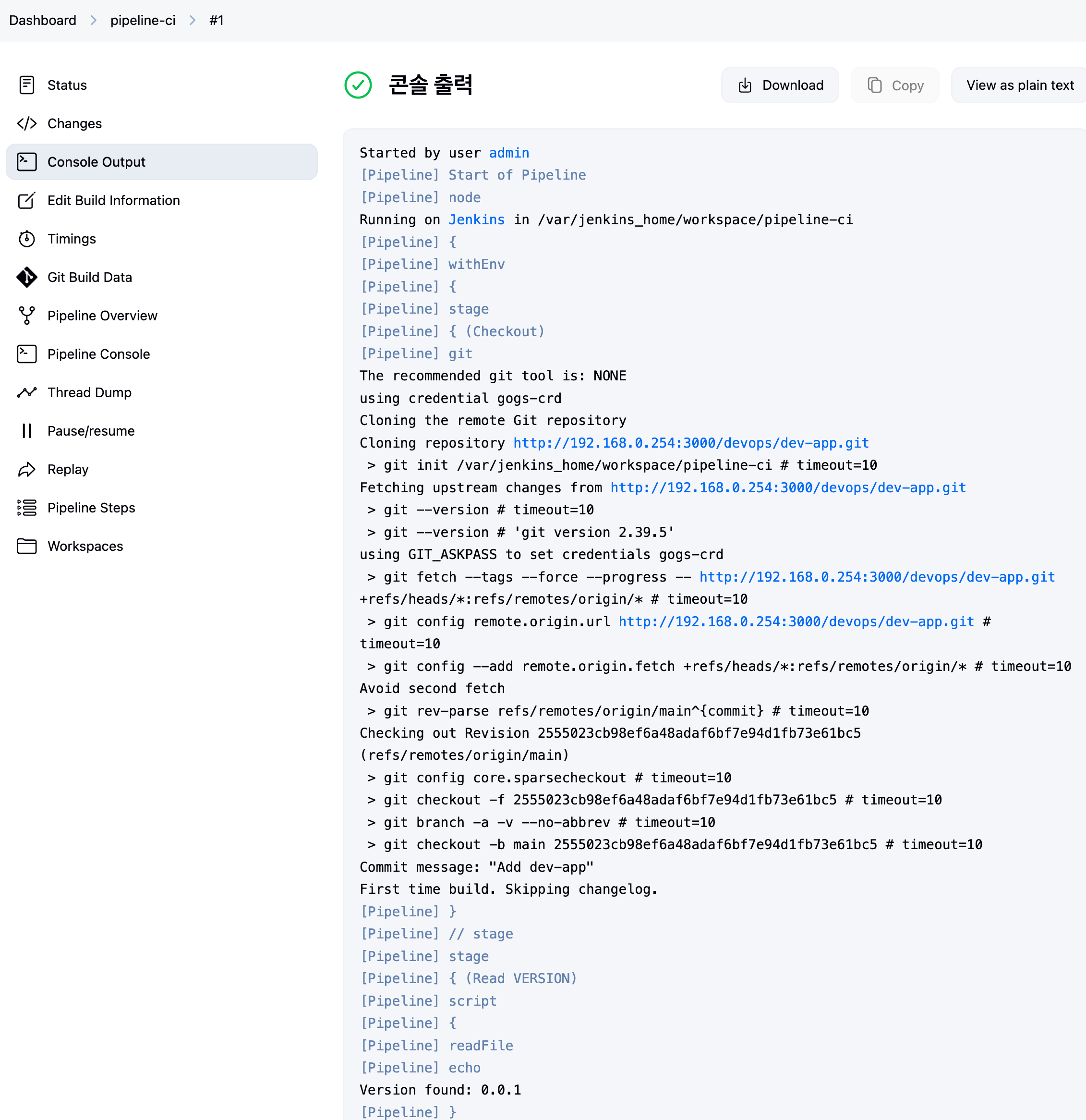

2.4 Create a Jenkins item (Pipeline)

- Pipeline script: adjust the placeholders in angle brackets (shown in red in the original post) to your own environment!

pipeline {
    agent any
    environment {
        DOCKER_IMAGE = '<your Docker Hub account>/dev-app' // Docker image name
    }
    stages {
        stage('Checkout') {
            steps {
                git branch: 'main',
                    url: 'http://<your home IP>:3000/devops/dev-app.git', // check out the code from Git
                    credentialsId: 'gogs-crd' // Credentials ID
            }
        }
        stage('Read VERSION') {
            steps {
                script {
                    // Read the VERSION file
                    def version = readFile('VERSION').trim()
                    echo "Version found: ${version}"
                    // Expose it as an environment variable
                    env.DOCKER_TAG = version
                }
            }
        }
        stage('Docker Build and Push') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-crd') {
                        // Use DOCKER_TAG as the image tag
                        def appImage = docker.build("${DOCKER_IMAGE}:${DOCKER_TAG}")
                        appImage.push()
                        appImage.push("latest")
                    }
                }
            }
        }
    }
    post {
        success {
            echo "Docker image ${DOCKER_IMAGE}:${DOCKER_TAG} has been built and pushed successfully!"
        }
        failure {
            echo "Pipeline failed. Please check the logs."
        }
    }
}

- Build Now → check the Console Output

- Check Docker Hub

2.5 k8s Deploying an application with Jenkins(pipeline-ci)

- Deploying to Kubernetes

- Kubernetes works to reach the state you declare: Kubernetes uses declarative configuration, where you declare the state you want (like “I want 3 copies of my container running in the cluster”) in a configuration file. Then, submit that config to the cluster, and Kubernetes will strive to meet the requirements you specified.
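The idea of "declare a desired state and let the system converge on it" can be illustrated with a toy reconcile function. This is a simplified sketch, not the actual Deployment controller; `reconcile` and the pod naming scheme are hypothetical.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str],
              name: str = "timeserver") -> List[str]:
    """Return the pod list after one pass toward the desired replica count."""
    pods = list(running_pods)
    counter = 0
    # Too few pods: create new ones until the count matches.
    while len(pods) < desired_replicas:
        candidate = f"{name}-{counter}"
        counter += 1
        if candidate not in pods:
            pods.append(candidate)
    # Too many pods: drop the surplus (a no-op when the count already fits).
    del pods[desired_replicas:]
    return pods


state = reconcile(2, [])            # initial rollout with 2 replicas
state_after_crash = reconcile(2, state[:1])   # one pod disappeared
scaled_up = reconcile(4, state)     # desired state changed to 4
scaled_down = reconcile(1, scaled_up)
print(state, state_after_crash, scaled_up, scaled_down)
```

The caller never says "start a pod" or "delete a pod"; it only states the target count, which is the same contract `replicas: 2` expresses in a Deployment manifest.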

# Deploy a Deployment object: 2 replicas (pods) and the container image >> change only the Docker account below before applying
DHUSER=<Docker Hub account name>
DHUSER=kimseongjung

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: timeserver
spec:
  replicas: 2
  selector:
    matchLabels:
      pod: timeserver-pod
  template:
    metadata:
      labels:
        pod: timeserver-pod
    spec:
      containers:
      - name: timeserver-container
        image: docker.io/$DHUSER/dev-app:0.0.1
        livenessProbe:
          initialDelaySeconds: 30
          periodSeconds: 30
          httpGet:
            path: /healthz
            port: 80
            scheme: HTTP
          timeoutSeconds: 5
          failureThreshold: 3
          successThreshold: 1
EOF

watch -d kubectl get deploy,rs,pod -o wide

# Check the rollout status (also visible in the kube-ops-view web UI)
kubectl get events -w --sort-by '.lastTimestamp'
kubectl get deploy,pod -o wide
kubectl describe pod

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------


Normal Scheduled 6m50s default-scheduler Successfully assigned default/timeserver-7cbd75fb4f-xsnxg to myk8s-worker

Normal Pulling 3m52s (x5 over 6m50s) kubelet Pulling image "docker.io/kimseongjung/dev-app:0.0.1"

Warning Failed 3m51s (x5 over 6m48s) kubelet Failed to pull image "docker.io/kimseongjung/dev-app:0.0.1": failed to pull and unpack image "docker.io/kimseongjung/dev-app:0.0.1": failed to resolve reference "docker.io/kimseongjung/dev-app:0.0.1": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed

Warning Failed 3m51s (x5 over 6m48s) kubelet Error: ErrImagePull

Normal BackOff 101s (x21 over 6m48s) kubelet Back-off pulling image "docker.io/kimseongjung/dev-app:0.0.1"

Warning Failed 101s (x21 over 6m48s) kubelet Error: ImagePullBackOff

TROUBLESHOOTING : image pull error (ErrImagePull / ImagePullBackOff)

- Usually caused by a wrong container image reference
  - This error indicates that Kubernetes was unable to download the container image.
- It also occurs when the image is missing from the registry, or when the cluster has no credentials to pull it
  - This typically means that the image name was misspelled in your configuration, the image doesn’t exist in the image repository, or your cluster doesn’t have the required credentials to access the repository.
  - Check the spelling of your image and verify that the image is in your repository.
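For the missing-credentials case, it helps to know what a registry credential looks like once it is stored in the cluster. The sketch below builds the kind of JSON payload that a Secret of type kubernetes.io/dockerconfigjson carries, to the best of my understanding of that format. The account name and token are made-up placeholders, and `docker_config_json` is a hypothetical helper, not a kubectl or Kubernetes API.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str) -> str:
    """Build a .dockerconfigjson-style payload (sketch of the assumed format)."""
    # "auth" is base64("username:password"), the same form Docker uses for basic auth.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {
        "auths": {
            server: {"username": username, "password": password, "auth": auth}
        }
    }
    return json.dumps(config, separators=(",", ":"))


# Placeholder credentials for illustration only.
payload = docker_config_json("https://index.docker.io/v1/", "example-user", "example-token")

# A Secret stores the payload base64-encoded in its data field.
encoded = base64.b64encode(payload.encode()).decode()

# Decoding reverses both layers: Secret data first, then the inner "auth" value.
decoded = json.loads(base64.b64decode(encoded))
entry = decoded["auths"]["https://index.docker.io/v1/"]
inner = base64.b64decode(entry["auth"]).decode()
print(entry["username"], inner)
```

The takeaway is that base64 here is only an encoding, not encryption: anyone who can read the Secret can recover the registry password.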

# k8s secret: configure the Docker registry credentials
kubectl get secret -A # the type is set when a secret is created

NAMESPACE NAME TYPE DATA AGE

kube-system bootstrap-token-abcdef bootstrap.kubernetes.io/token 6 58m

kube-system sh.helm.release.v1.kube-ops-view.v1 helm.sh/release.v1 1 48m

DHUSER=<Docker Hub account>
DHPASS=<Docker Hub password or token>
echo $DHUSER $DHPASS

DHUSER=kimseongjung
DHPASS=dckr_pat_160jvWwmMPK0sVQjApWFHYqtvQ9
echo $DHUSER $DHPASS

kubectl create secret docker-registry dockerhub-secret \
  --docker-server=https://index.docker.io/v1/ \
  --docker-username=$DHUSER \
  --docker-password=$DHPASS

# Verify
kubectl get secret

NAME TYPE DATA AGE

dockerhub-secret kubernetes.io/dockerconfigjson 1 12s

kubectl describe secret

Name: dockerhub-secret

Namespace: default

Labels: <none>

Annotations: <none>

Type: kubernetes.io/dockerconfigjson

Data

====

.dockerconfigjson: 197 bytes

kubectl get secrets -o yaml | kubectl neat # check the base64-encoded value

SECRET=eyJhdXRocyI6eyJodHRwczovL2luZGV4LmRvY2tlci5pby92MS8iOnsidXNlcm5hbWUiOiJraW1zZW9uZ2p1bmciLCJwYXNzd29yZCI6ImRja3JfcGF0XzE2MGp2V3dtTVBLMHNWUWpBcFdGSFlxdHZRNCIsImF1dGgiOiJ*****JWdmJtZHFkVzVuT21SamEzSmZjR0YwWHpFMk1HcDJWM2R0VFZCTE1ITldVV3BCY0ZkR1NGbHhkSFpSTkE9PSJ9fX0=

echo "$SECRET" | base64 -d ; echo

# Update the Deployment object: apply the secret >> change only the Docker account below before applying
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: timeserver
spec:
  replicas: 2
  selector:
    matchLabels:
      pod: timeserver-pod
  template:
    metadata:
      labels:
        pod: timeserver-pod
    spec:
      containers:
      - name: timeserver-container
        image: docker.io/$DHUSER/dev-app:0.0.1
        livenessProbe:
          initialDelaySeconds: 30
          periodSeconds: 30
          httpGet:
            path: /healthz
            port: 80
            scheme: HTTP
          timeoutSeconds: 5
          failureThreshold: 3
          successThreshold: 1
      imagePullSecrets:
      - name: dockerhub-secret
EOF

watch -d kubectl get deploy,rs,pod -o wide

# Verify
kubectl get events -w --sort-by '.lastTimestamp'
kubectl get deploy,pod

# Create a curl pod for connectivity tests
kubectl run curl-pod --image=curlimages/curl:latest --command -- sh -c "while true; do sleep 3600; done"
kubectl get pod -owide

# Pick the IP of one timeserver pod and test the connection
PODIP1=<IP of a timeserver pod>

PODIP1=10.244.1.5

kubectl exec -it curl-pod -- curl $PODIP1

The time is 8:52:33 AM, VERSION 0.0.1

Server hostname: timeserver-768d78ddf9-ktcz5

kubectl exec -it curl-pod -- curl $PODIP1/healthz

Healthy

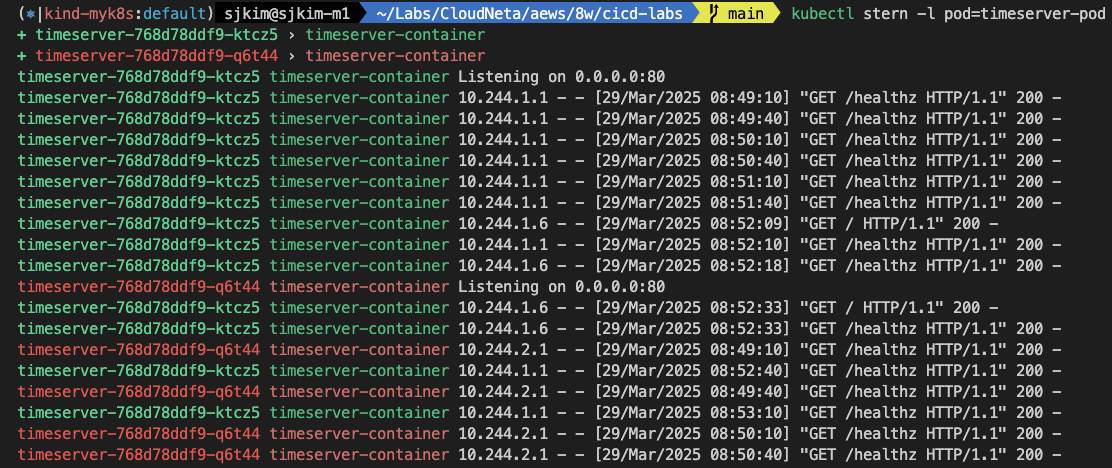

# Check the logs

kubectl logs deploy/timeserver

kubectl logs deploy/timeserver -f

kubectl stern deploy/timeserver

kubectl stern -l pod=timeserver-pod

- Create the Service

# Create the Service
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: timeserver
spec:
  selector:
    pod: timeserver-pod
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    nodePort: 30000
  type: NodePort
EOF

#

kubectl get service,ep timeserver -owide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/timeserver NodePort 10.96.141.9 <none> 80:30000/TCP 10s pod=timeserver-pod

NAME ENDPOINTS AGE

endpoints/timeserver 10.244.1.5:80,10.244.2.6:80 10s

# Access through the Service (ClusterIP): by DNS name and by ClusterIP

kubectl exec -it curl-pod -- curl timeserver

The time is 8:58:09 AM, VERSION 0.0.1

Server hostname: timeserver-768d78ddf9-ktcz5

kubectl exec -it curl-pod -- curl timeserver/healthz

kubectl exec -it curl-pod -- curl $(kubectl get svc timeserver -o jsonpath={.spec.clusterIP})

The time is 8:58:45 AM, VERSION 0.0.1

Server hostname: timeserver-768d78ddf9-ktcz5

# Access through the Service (NodePort): "node IP:NodePort"

curl http://127.0.0.1:30000

The time is 8:59:10 AM, VERSION 0.0.1

Server hostname: timeserver-768d78ddf9-q6t44

curl http://127.0.0.1:30000

The time is 8:59:31 AM, VERSION 0.0.1

Server hostname: timeserver-768d78ddf9-ktcz5

curl http://127.0.0.1:30000/healthz

# Keep sending requests to observe load balancing

while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; sleep 1 ; done

for i in {1..100}; do curl -s http://127.0.0.1:30000 | grep name; done | sort | uniq -c | sort -nr

53 Server hostname: timeserver-768d78ddf9-q6t44

47 Server hostname: timeserver-768d78ddf9-ktcz5

# Scale up the pod replicas: new pods are added to the Service endpoints automatically

kubectl scale deployment timeserver --replicas 4

kubectl get service,ep timeserver -owide

NAME ENDPOINTS AGE

endpoints/timeserver 10.244.1.5:80,10.244.1.7:80,10.244.2.6:80 + 1 more... 4m9s

# Keep sending requests to observe load balancing

while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; sleep 1 ; done

for i in {1..100}; do curl -s http://127.0.0.1:30000 | grep name; done | sort | uniq -c | sort -nr

33 Server hostname: timeserver-768d78ddf9-xgj2c

25 Server hostname: timeserver-768d78ddf9-zrjj7

21 Server hostname: timeserver-768d78ddf9-q6t44

21 Server hostname: timeserver-768d78ddf9-ktcz5

2.6 Updating your application

- Change the sample app code in server.py → run "Build Now" in Jenkins: build the container image with the new 0.0.2 version tag → push it to the container registry ⇒ roll the update out to the k8s Deployment

# Change VERSION to 0.0.2
# Change server.py to 0.0.2
git add . && git commit -m "VERSION $(cat VERSION) Changed" && git push -u origin main

- Use version numbers as tags: You can make this tag anything you like, but it’s a good convention to use version numbers.
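Bumping the version by hand means touching two files (VERSION and the string inside server.py), which is easy to get out of sync. A minimal sketch of automating that step follows, assuming a three-part `major.minor.patch` version and that server.py contains the text `VERSION <old version>`; `bump_patch` and `bump_repo` are hypothetical helpers, and the demo runs against a temporary directory rather than the real repository.

```python
import tempfile
from pathlib import Path


def bump_patch(version: str) -> str:
    """Increase the patch component of a major.minor.patch version string."""
    major, minor, patch = (int(part) for part in version.strip().split("."))
    return f"{major}.{minor}.{patch + 1}"


def bump_repo(repo: Path) -> str:
    """Update VERSION and the matching version string in server.py together."""
    version_file = repo / "VERSION"
    old = version_file.read_text().strip()
    new = bump_patch(old)
    version_file.write_text(new + "\n")
    server = repo / "server.py"
    server.write_text(server.read_text().replace(f"VERSION {old}", f"VERSION {new}"))
    return new


# Demo on throwaway files that mimic the layout of the dev-app repository.
with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp)
    (repo / "VERSION").write_text("0.0.1\n")
    (repo / "server.py").write_text('text = "The time is ..., VERSION 0.0.1"\n')
    new_version = bump_repo(repo)
    updated_server = (repo / "server.py").read_text()
    updated_version_file = (repo / "VERSION").read_text()

print(new_version)
```

Running such a helper before the `git add . && git commit` line would keep the image tag read by the pipeline and the version printed by the server in step.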

# Scale up the pod replicas
kubectl scale deployment timeserver --replicas 4
kubectl get service,ep timeserver -owide

# Keep sending requests to observe load balancing
while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; sleep 1 ; done
for i in {1..100}; do curl -s http://127.0.0.1:30000 | grep name; done | sort | uniq -c | sort -nr

# Update the image (replace 0.0.Y with the new version tag)
kubectl set image deployment timeserver timeserver-container=$DHUSER/dev-app:0.0.Y && watch -d "kubectl get deploy,ep timeserver; echo; kubectl get rs,pod"
kubectl set image deployment timeserver timeserver-container=$DHUSER/dev-app:0.0.2 && watch -d "kubectl get deploy,ep timeserver; echo; kubectl get rs,pod"

# Watch the rolling update
watch -d kubectl get deploy,rs,pod,svc,ep -owide
kubectl get deploy,rs,pod,svc,ep -owide

# kubectl get deploy $DEPLOYMENT_NAME
kubectl get deploy timeserver
kubectl get pods -l pod=timeserver-pod

# Check the response from the new version
curl http://127.0.0.1:30000

2.7 Gogs Webhooks setup: Jenkins Job Trigger

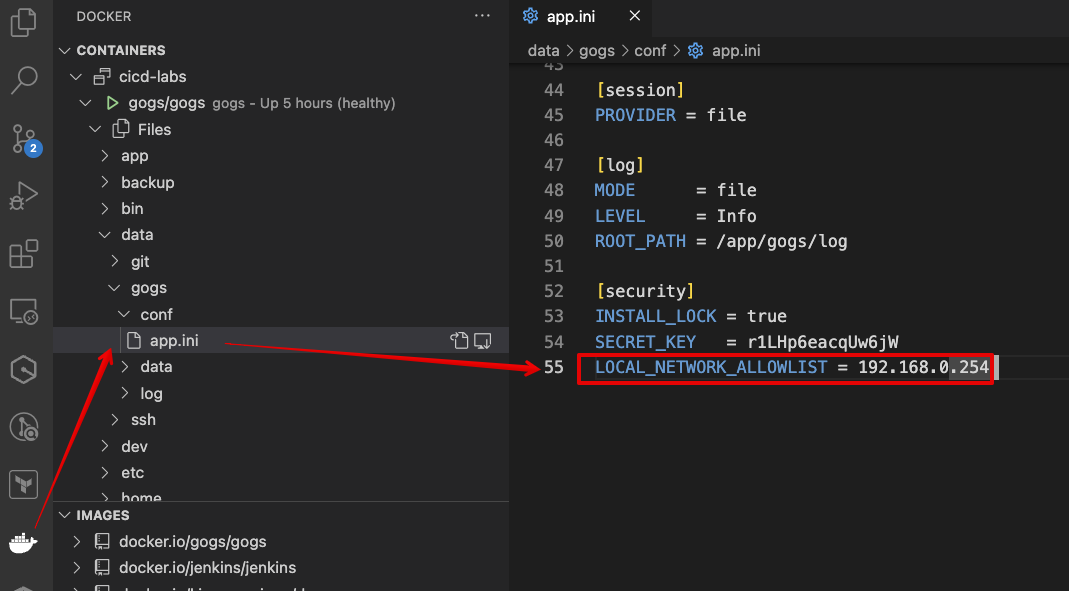

- gogs 에 /data/gogs/conf/app.ini 파일 수정 후 컨테이너 재기동 - issue

[security]

INSTALL_LOCK = true

SECRET_KEY = r1LHp6eacqUw6jW

LOCAL_NETWORK_ALLOWLIST = 192.168.254.127

-

docker compose restart gogs

-

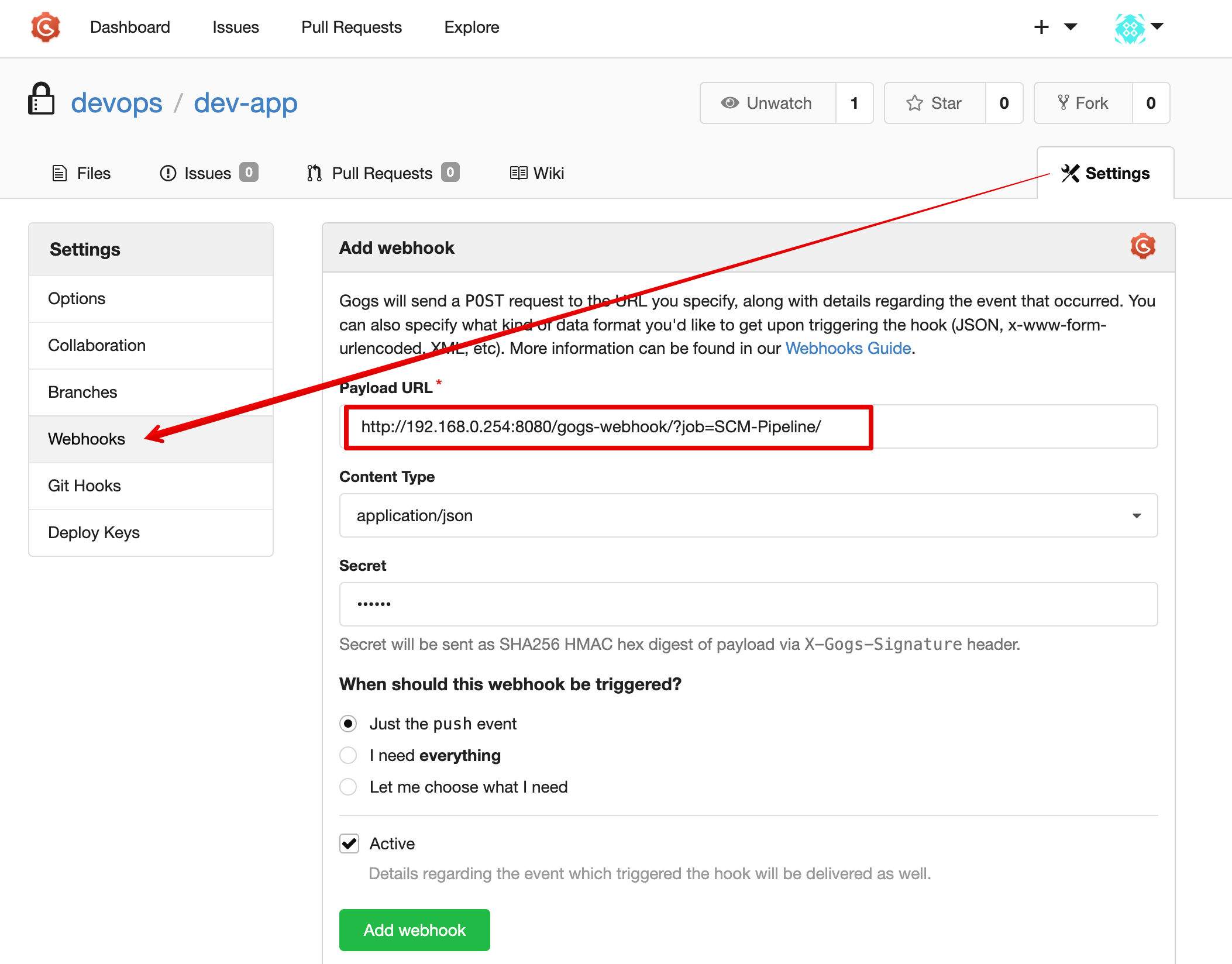

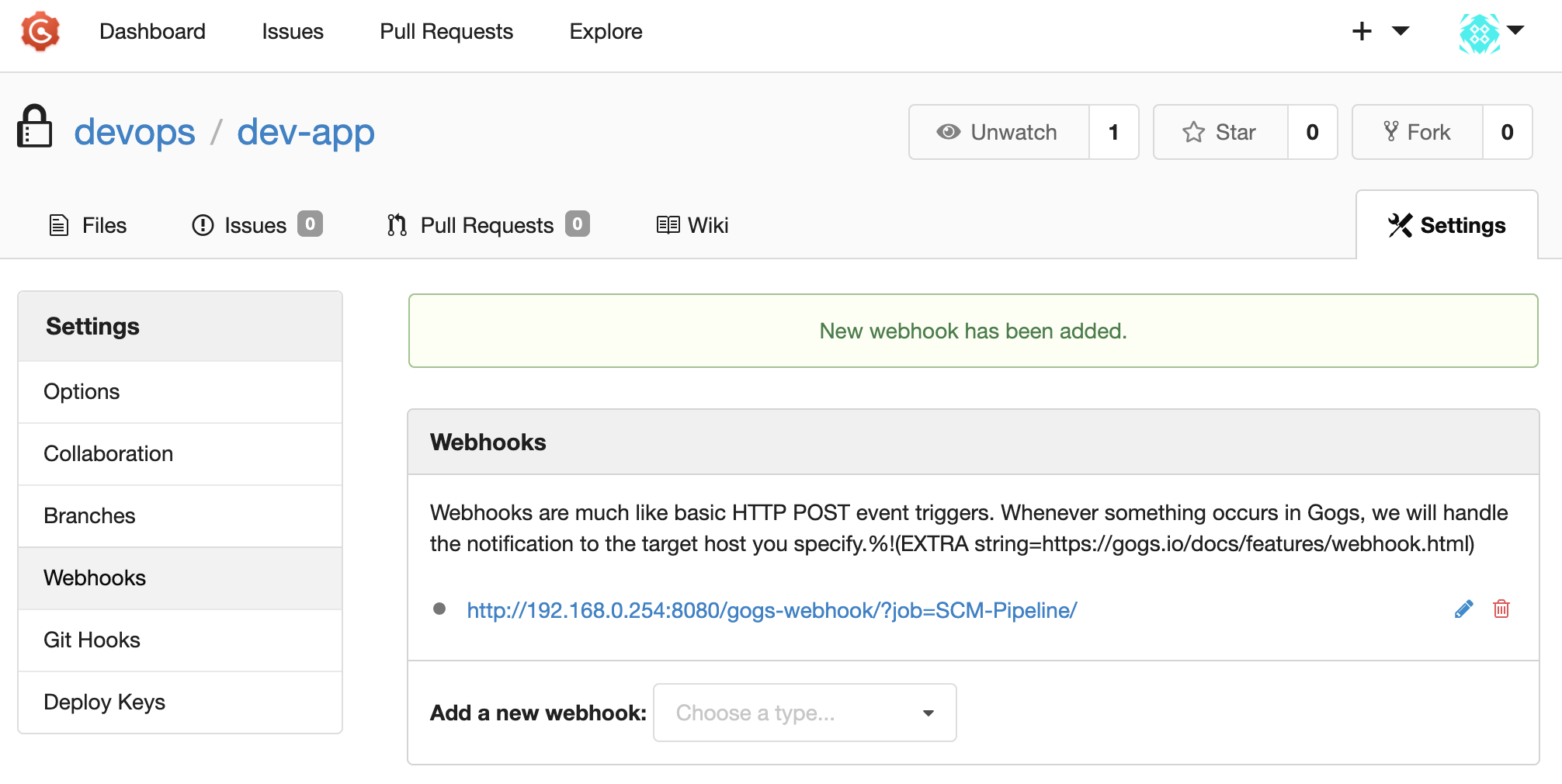

gogs 에 Webhooks 설정 : Jenkins job Trigger - Setting → Webhooks → Gogs 클릭

- Webhooks are much like basic HTTP POST event triggers. Whenever something occurs in Gogs,

- we will handle the notification to the target host you specify.

%!(EXTRA string=https://gogs.io/docs/features/webhook.html) - Payload URL :

http://***<자신의 집 IP>***:8080/gogs-webhook/?job=**SCM-Pipeline**/, http://**192.168.254.127**:8080/gogs-webhook/?job=**SCM-Pipeline**/ - Content Type :

application/json - Secret :

qwe123 - When should this webhook be triggered? : Just the push event

- Active : Check

⇒ Add webhook
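The Secret field exists so that the receiver can tell a genuine Gogs delivery from a forged request. My understanding is that Gogs signs the request body with HMAC-SHA256 and sends the hex digest in an `X-Gogs-Signature` header, and that the Jenkins Gogs plugin performs this check for you; treat both points as assumptions to confirm against the Gogs webhook documentation. The sketch below only shows the general technique, with hypothetical helper names and a made-up payload.

```python
import hashlib
import hmac


def sign(secret: str, body: bytes) -> str:
    """Compute the hex HMAC-SHA256 of the raw request body."""
    return hmac.new(secret.encode(), body, hashlib.sha256).hexdigest()


def is_valid(secret: str, body: bytes, signature: str) -> bool:
    """Compare signatures in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign(secret, body), signature)


secret = "qwe123"                                   # the webhook Secret from the form above
body = b'{"ref": "refs/heads/main"}'                # made-up push payload
header_value = sign(secret, body)                   # what the sender would attach

accepted = is_valid(secret, body, header_value)
rejected_tampered = is_valid(secret, body + b" ", header_value)
rejected_wrong_secret = is_valid("other", body, header_value)
print(accepted, rejected_tampered, rejected_wrong_secret)
```

A changed body or a different secret both produce a different digest, so the receiver can drop the request before it triggers a build.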

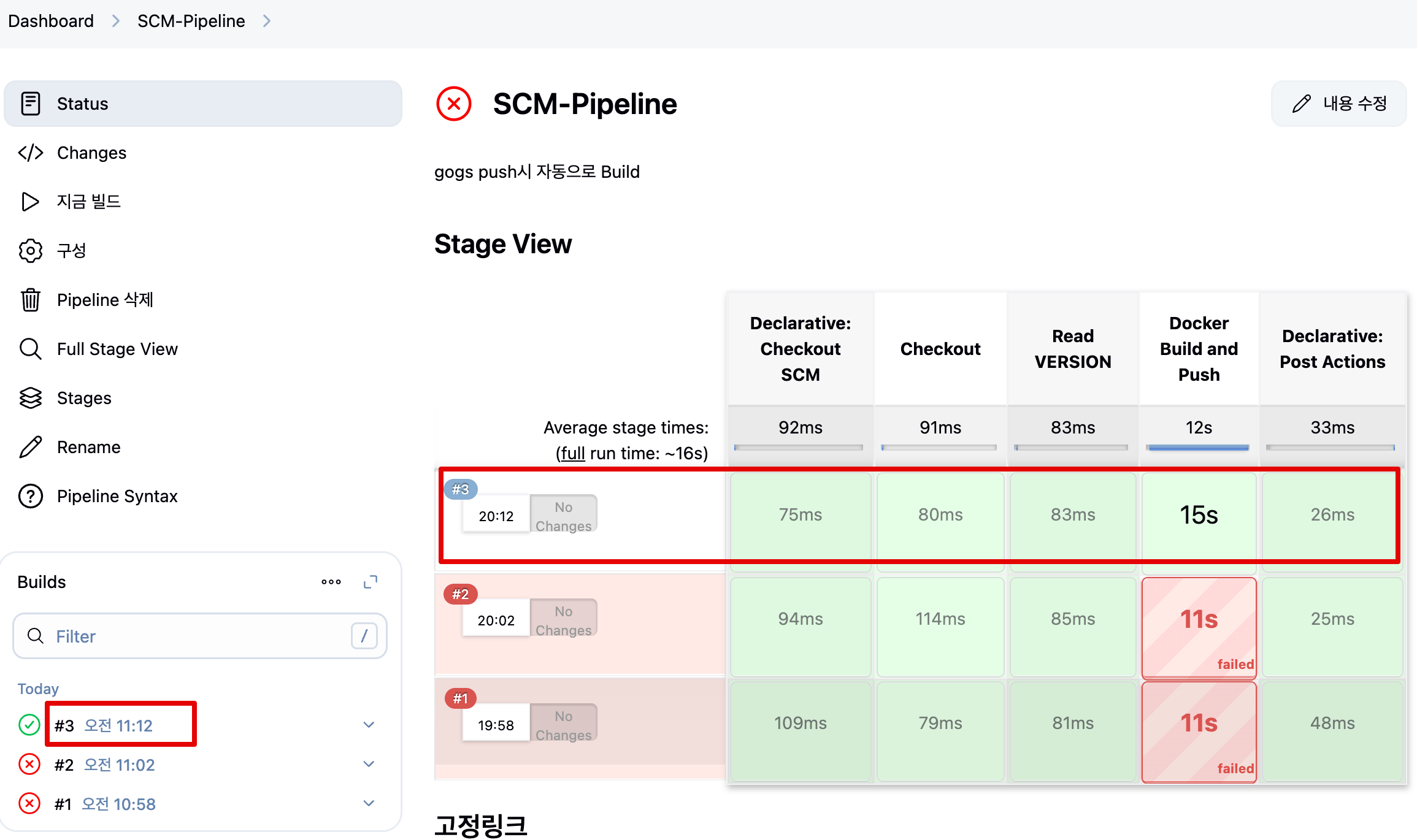

2.8 Create a Jenkins item (Pipeline): item name (SCM-Pipeline)

- GitHub project: http://<mac IP>:3000/<Gogs account name>/dev-app ← drop the .git suffix (for example, http://192.168.254.127:3000/devops/dev-app)
- Use Gogs secret: qwe123
- Build Triggers: check "Build when a change is pushed to Gogs"
- Pipeline script from SCM
  - SCM: Git
    - Repo URL (http://<mac IP>:3000/<Gogs account name>/dev-app)
    - Credentials (devops/***)
    - Branch (*/main)
  - Script Path: Jenkinsfile

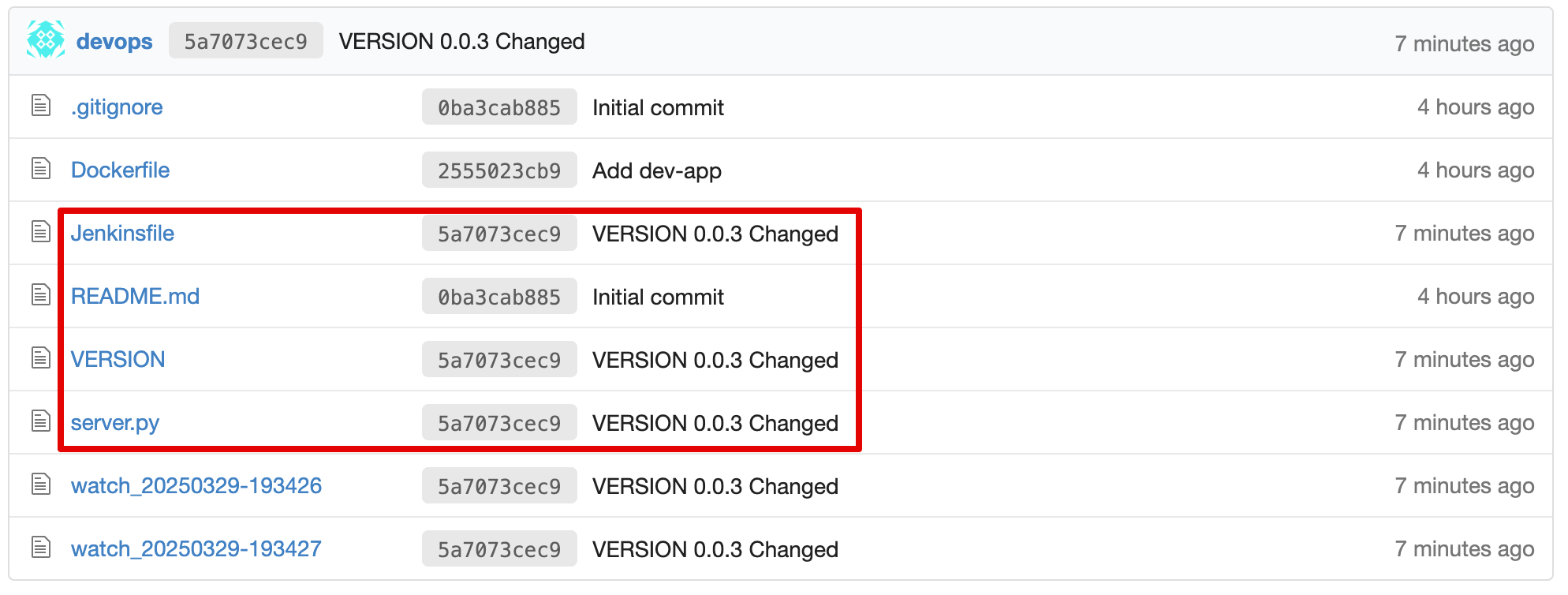

2.9 Write the Jenkinsfile and push it

- git work

# Create an empty Jenkinsfile
touch Jenkinsfile

# Edit the VERSION file: 0.0.3
# Edit server.py: 0.0.3

- Write the Jenkinsfile in an IDE (VS Code, etc.)

pipeline {
    agent any
    environment {
        DOCKER_IMAGE = '<your Docker Hub account>/dev-app' // Docker image name
    }
    stages {
        stage('Checkout') {
            steps {
                git branch: 'main',
                    url: 'http://<your home IP>:3000/devops/dev-app.git', // check out the code from Git
                    credentialsId: 'gogs-crd' // Credentials ID
            }
        }
        stage('Read VERSION') {
            steps {
                script {
                    // Read the VERSION file
                    def version = readFile('VERSION').trim()
                    echo "Version found: ${version}"
                    // Expose it as an environment variable
                    env.DOCKER_TAG = version
                }
            }
        }
        stage('Docker Build and Push') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-crd') {
                        // Use DOCKER_TAG as the image tag
                        def appImage = docker.build("${DOCKER_IMAGE}:${DOCKER_TAG}")
                        appImage.push()
                        appImage.push("latest")
                    }
                }
            }
        }
    }
    post {
        success {
            echo "Docker image ${DOCKER_IMAGE}:${DOCKER_TAG} has been built and pushed successfully!"
        }
        failure {
            echo "Pipeline failed. Please check the logs."
        }
    }
}

- Push the files

git add . && git commit -m "VERSION $(cat VERSION) Changed" && git push -u origin main-

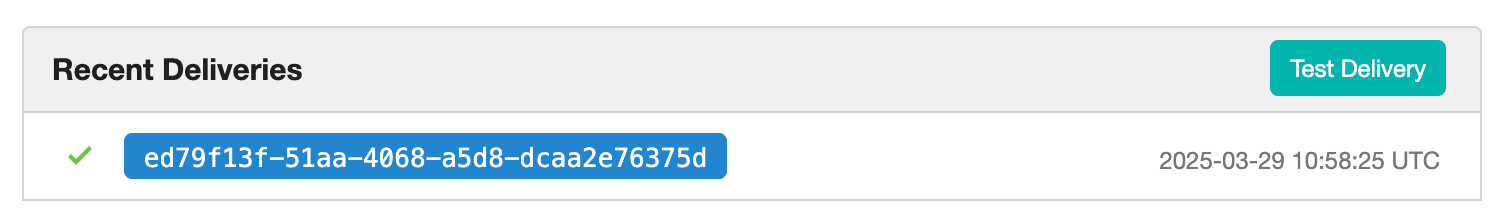

Gogs WebHook 기록 확인

-

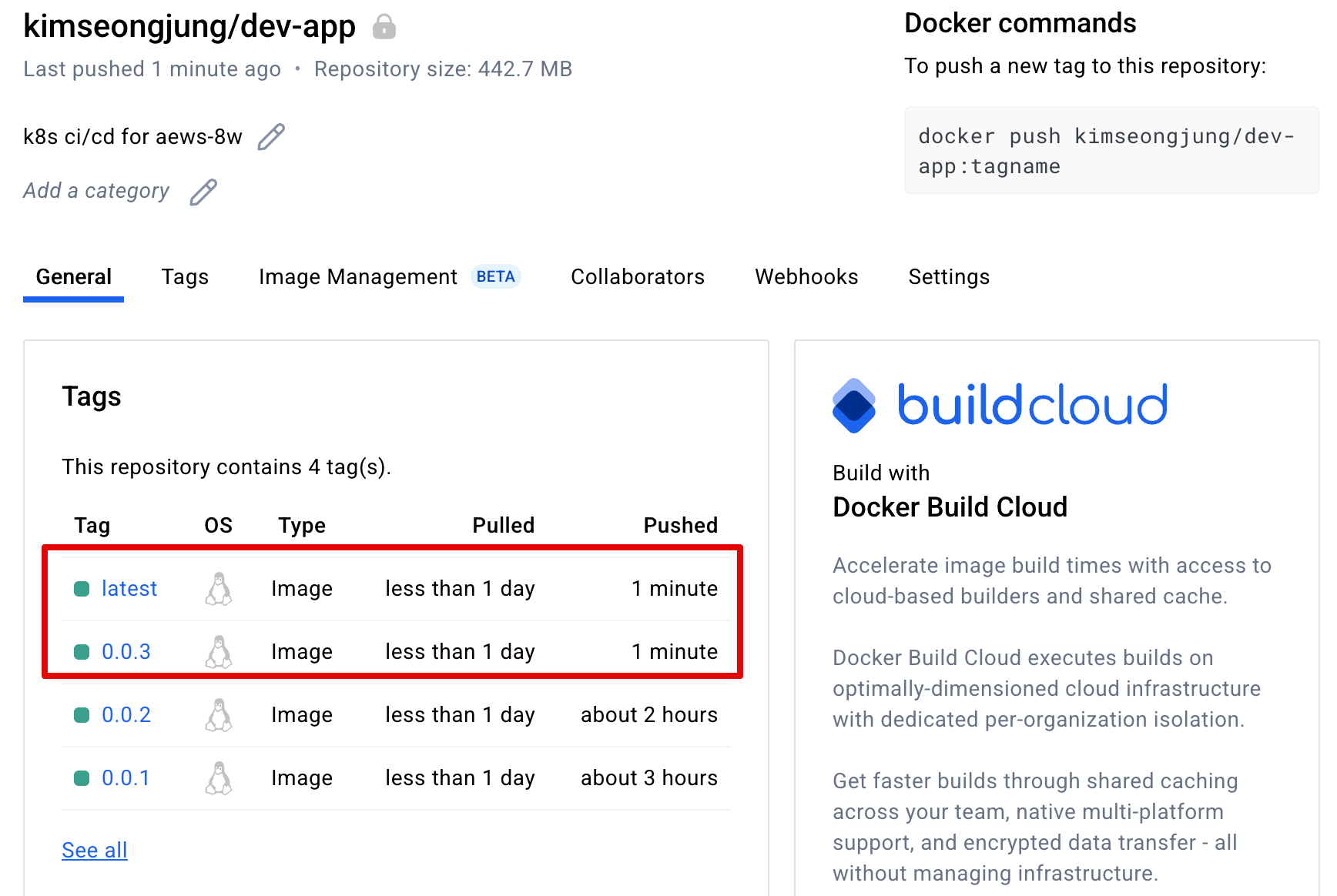

도커 저장소 확인

-

Jenkins 트리거 빌드 확인, dev-app:0.0.3 정상, dev-app:latest 때 timeout 발생, 재시도 후 정상!

-

k8s 에 신규 버전 적용

# 신규 버전 적용

kubectl set image deployment timeserver timeserver-container=$DHUSER/dev-app:**0.0.3** &&* while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; sleep 1 ; done

# 확인

watch -d "kubectl get deploy,ep timeserver; echo; kubectl get rs,pod"3. Jenkins CI/CD + K8S

3.1 Install tools inside the Jenkins container: kubectl (v1.32), helm

# Install kubectl, helm

docker compose exec --privileged -u root jenkins bash

--------------------------------------------

# Run only the download line that matches your host CPU architecture

#curl -LO "https://dl.k8s.io/release/v1.32.2/bin/linux/amd64/kubectl"

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/arm64/kubectl" # macOS (Apple Silicon)

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl" # Windows

install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl version --client=true

#

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

helm version

exit

--------------------------------------------
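The two download commands above differ only in the CPU architecture segment of the URL. A minimal sketch of picking that segment automatically from `uname -m` follows; the helper names `kubectl_arch` and `kubectl_url` are assumptions made for illustration, and only the two architectures used in this lab are handled.

```shell
#!/usr/bin/env bash
# Sketch only: map a machine name (as printed by `uname -m`) to the
# architecture directory used in the dl.k8s.io release URLs.

# kubectl_arch <machine>: print amd64 or arm64, fail on anything else
kubectl_arch() {
  case "$1" in
    x86_64|amd64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# kubectl_url <version> <machine>: build the Linux kubectl download URL
kubectl_url() {
  local arch
  arch="$(kubectl_arch "$2")" || return 1
  echo "https://dl.k8s.io/release/$1/bin/linux/${arch}/kubectl"
}

# Example usage inside the container:
#   curl -LO "$(kubectl_url v1.32.2 "$(uname -m)")"
```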

docker compose exec jenkins kubectl version --client=true

Client Version: v1.32.3

Kustomize Version: v5.5.0

docker compose exec jenkins helm version

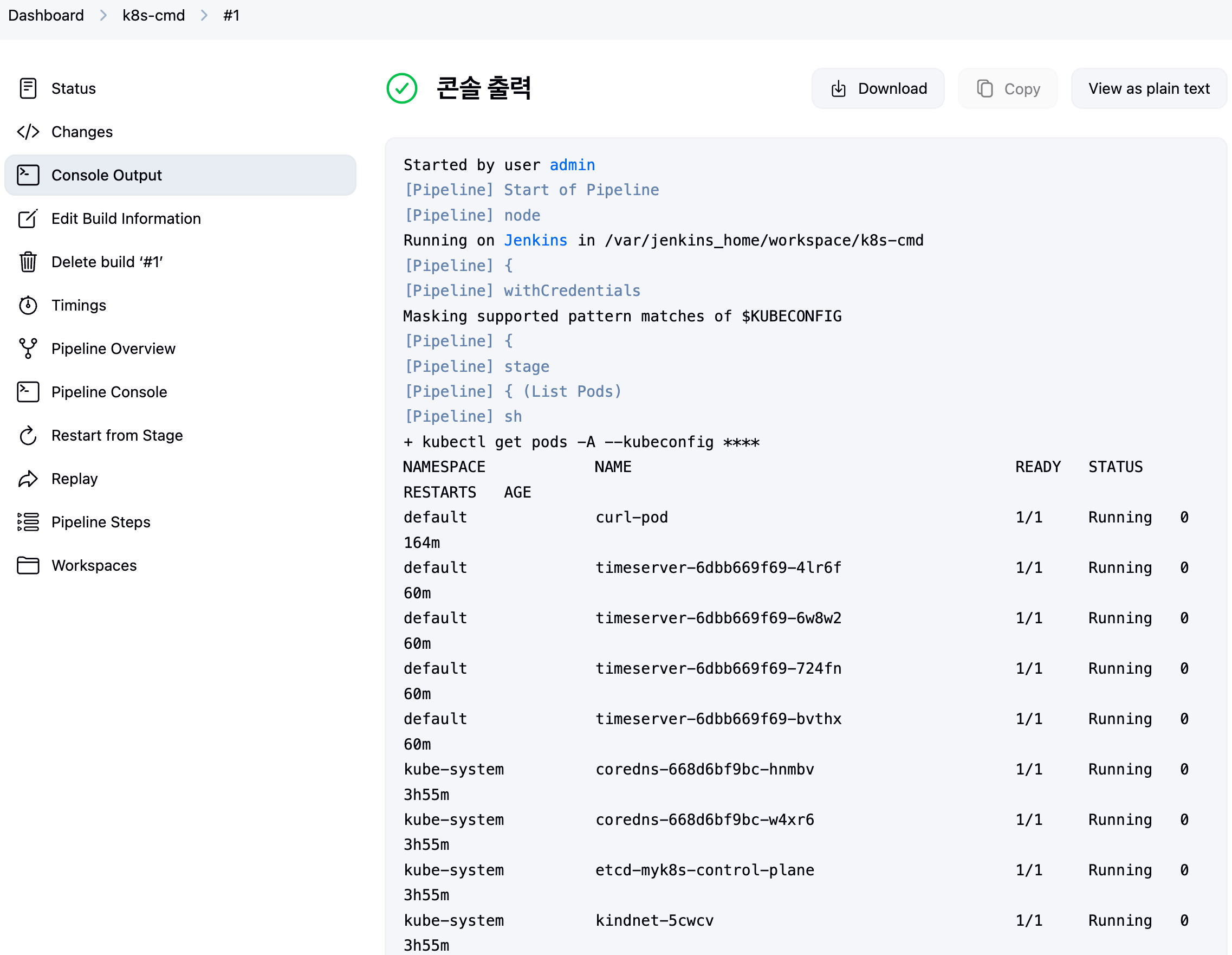

version.BuildInfo{Version:"v3.17.2", GitCommit:"cc0bbbd6d6276b83880042c1ecb34087e84d41eb", GitTreeState:"clean", GoVersion:"go1.23.7"}3.2 Jenkins Item 생성(Pipeline) : item name(k8s-cmd)

pipeline {
    agent any
    environment {
        KUBECONFIG = credentials('k8s-crd')
    }
    stages {
        stage('List Pods') {
            steps {
                sh '''
                # Fetch and display Pods
                kubectl get pods -A --kubeconfig "$KUBECONFIG"
                '''
            }
        }
    }
}

3.3 [K8S CD lab] Preparing a blue-green deployment with Jenkins

- Write the Deployment / Service YAML files (http-echo) and push the code

#

cd dev-app

#

mkdir deploy

#

cat > deploy/echo-server-blue.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-server-blue
spec:
  replicas: 2
  selector:
    matchLabels:
      app: echo-server
      version: blue
  template:
    metadata:
      labels:
        app: echo-server
        version: blue
    spec:
      containers:
      - name: echo-server
        image: hashicorp/http-echo
        args:
        - "-text=Hello from Blue"
        ports:
        - containerPort: 5678
EOF

cat > deploy/echo-server-service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: echo-server-service
spec:
  selector:
    app: echo-server
    version: blue
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5678
    nodePort: 30000
  type: NodePort
EOF

cat > deploy/echo-server-green.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-server-green
spec:
  replicas: 2
  selector:
    matchLabels:
      app: echo-server
      version: green
  template:
    metadata:
      labels:
        app: echo-server
        version: green
    spec:
      containers:
      - name: echo-server
        image: hashicorp/http-echo
        args:
        - "-text=Hello from Green"
        ports:
        - containerPort: 5678

EOF
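The blue and green Deployments above are identical apart from the color label, the resource name, and the response text. As a hedged sketch (the function `render_echo_deployment` is a hypothetical helper, not part of the lab), one template could generate both files:

```shell
#!/usr/bin/env bash
# Sketch only: render the http-echo Deployment for a given color.
# render_echo_deployment is an illustrative helper name.

# render_echo_deployment <color> <text>: print the manifest to stdout
render_echo_deployment() {
  local color="$1" text="$2"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-server-${color}
spec:
  replicas: 2
  selector:
    matchLabels:
      app: echo-server
      version: ${color}
  template:
    metadata:
      labels:
        app: echo-server
        version: ${color}
    spec:
      containers:
      - name: echo-server
        image: hashicorp/http-echo
        args:
        - "-text=${text}"
        ports:
        - containerPort: 5678
EOF
}

# Example usage:
#   render_echo_deployment blue  "Hello from Blue"  > deploy/echo-server-blue.yaml
#   render_echo_deployment green "Hello from Green" > deploy/echo-server-green.yaml
```

Keeping a single template means the two colors cannot drift apart by accident; the lab keeps two explicit files, which is easier to read when following along.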

#

tree

.

├── Dockerfile

├── Jenkinsfile

├── README.md

├── VERSION

├── deploy

│ ├── echo-server-blue.yaml

│ ├── echo-server-green.yaml

│ └── echo-server-service.yaml

└── server.py

git add . && git commit -m "Add echo server yaml" && git push -u origin main

- (Reference) Run the blue-green update manually

#

cd deploy

kubectl delete deploy,svc --all

kubectl apply -f .

#

kubectl get deploy,svc,ep -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/echo-server-blue 2/2 2 2 10s echo-server hashicorp/http-echo app=echo-server,version=blue

deployment.apps/echo-server-green 2/2 2 2 10s echo-server hashicorp/http-echo app=echo-server,version=green

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/echo-server-service NodePort 10.96.82.118 <none> 80:30000/TCP 10s app=echo-server,version=blue

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 60s <none>

NAME ENDPOINTS AGE

endpoints/echo-server-service 10.244.1.14:5678,10.244.2.15:5678 10s

endpoints/kubernetes 172.19.0.4:6443 60s

curl -s http://127.0.0.1:30000

Hello from Blue

#

kubectl patch svc echo-server-service -p '{"spec": {"selector": {"version": "green"}}}'

kubectl get deploy,svc,ep -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/echo-server-blue 2/2 2 2 62s echo-server hashicorp/http-echo app=echo-server,version=blue

deployment.apps/echo-server-green 2/2 2 2 62s echo-server hashicorp/http-echo app=echo-server,version=green

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/echo-server-service NodePort 10.96.82.118 <none> 80:30000/TCP 62s app=echo-server,version=green

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 112s <none>

NAME ENDPOINTS AGE

endpoints/echo-server-service 10.244.1.15:5678,10.244.2.14:5678 62s

endpoints/kubernetes 172.19.0.4:6443 112s

curl -s http://127.0.0.1:30000

Hello from Green

#

kubectl patch svc echo-server-service -p '{"spec": {"selector": {"version": "blue"}}}'

kubectl get deploy,svc,ep -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/echo-server-blue 2/2 2 2 99s echo-server hashicorp/http-echo app=echo-server,version=blue

deployment.apps/echo-server-green 2/2 2 2 99s echo-server hashicorp/http-echo app=echo-server,version=green

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/echo-server-service NodePort 10.96.82.118 <none> 80:30000/TCP 99s app=echo-server,version=blue

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2m29s <none>

NAME ENDPOINTS AGE

endpoints/echo-server-service 10.244.1.14:5678,10.244.2.15:5678 99s

endpoints/kubernetes 172.19.0.4:6443 2m29s

curl -s http://127.0.0.1:30000

Hello from Blue

# Delete

kubectl delete -f .

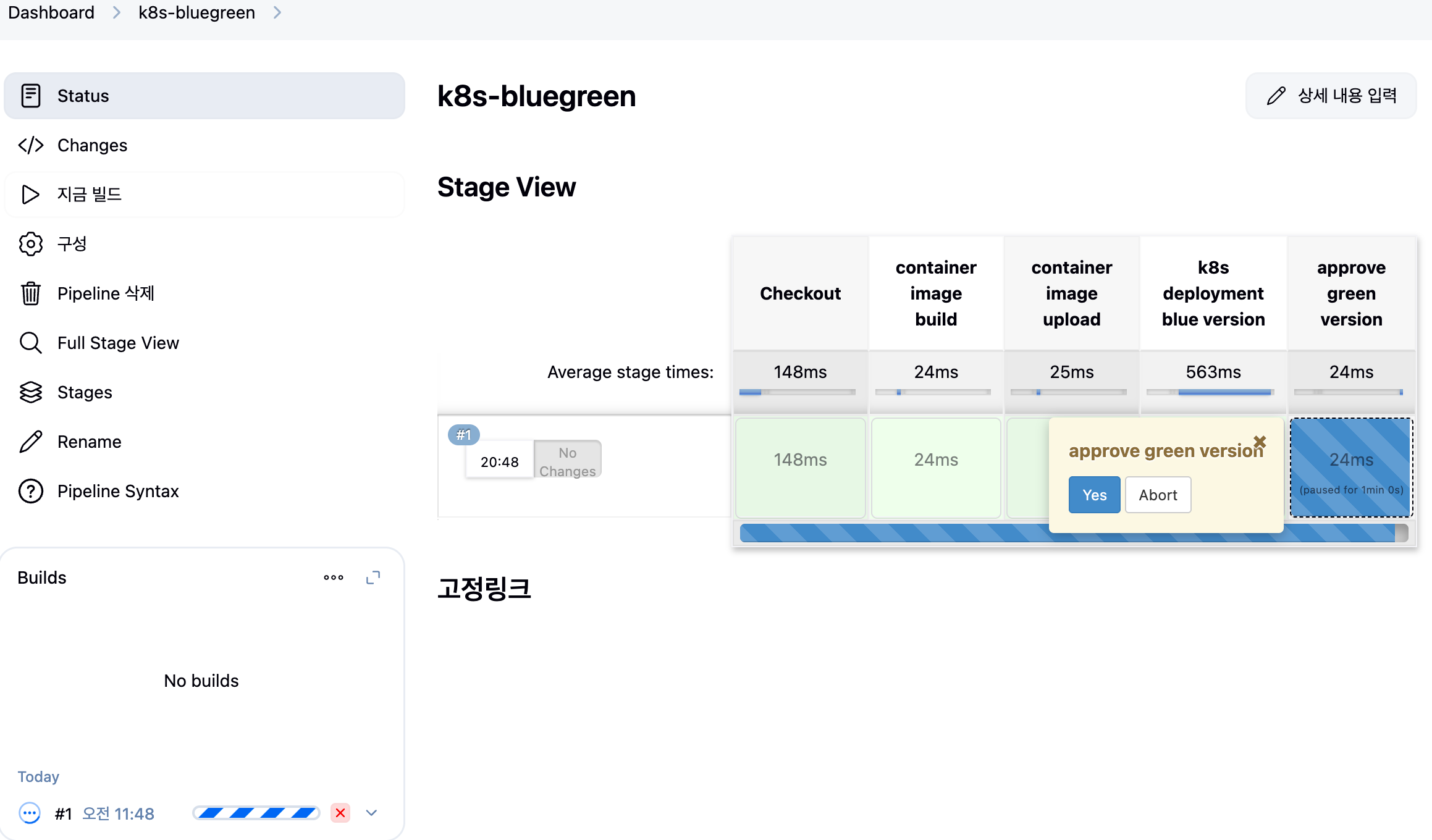

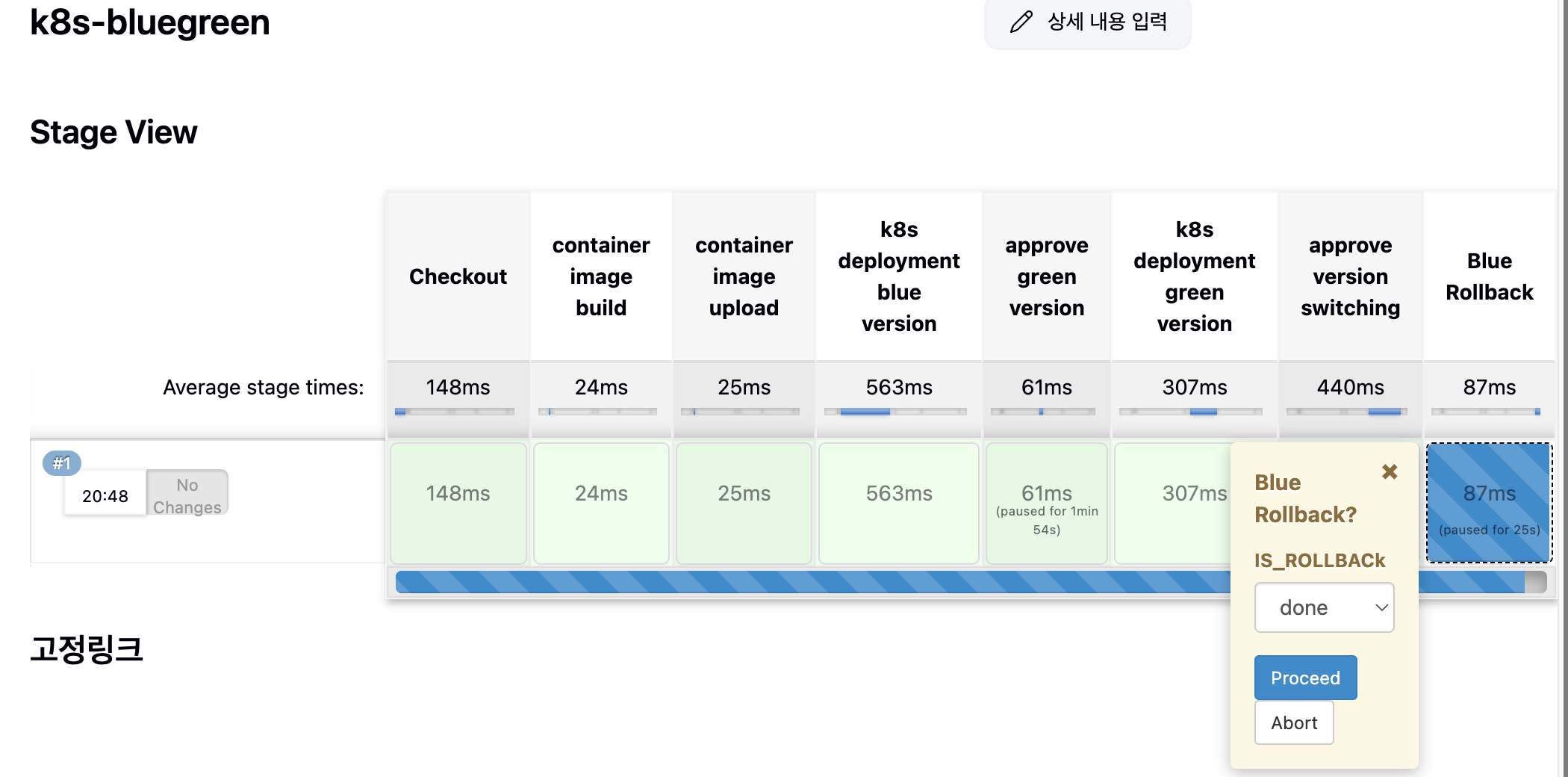
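The manual switch above works by patching the Service selector from one color to the other. A small sketch of that toggle is shown below; `other_color` and `selector_patch` are hypothetical helper names, and the commented usage assumes kubectl and the `echo-server-service` Service from this section.

```shell
#!/usr/bin/env bash
# Sketch only: helpers for flipping the Service selector between colors.

# other_color <color>: print the opposite color (blue <-> green)
other_color() {
  case "$1" in
    blue) echo green ;;
    green) echo blue ;;
    *) echo "unknown color: $1" >&2; return 1 ;;
  esac
}

# selector_patch <color>: print the JSON patch body used with kubectl patch
selector_patch() {
  printf '{"spec": {"selector": {"version": "%s"}}}\n' "$1"
}

# Example usage (needs a cluster, so it is left commented out):
#   current="$(kubectl get svc echo-server-service -o jsonpath='{.spec.selector.version}')"
#   kubectl patch svc echo-server-service -p "$(selector_patch "$(other_color "$current")")"
```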

cd ..

3.4 Create a Jenkins Item (Pipeline) : item name (k8s-bluegreen) - basic k8s deployment through Jenkins

- Delete the Deployment and Service from the previous lab

kubectl delete deploy,svc timeserver

- Start the repeated access loop in advance

while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; echo ; sleep 1 ; kubectl get deploy -owide ; echo ; kubectl get svc,ep echo-server-service -owide ; echo "------------" ; done

or

while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; date ; echo "------------" ; sleep 1 ; done

- Pipeline script : Windows (WSL2) users should use their WSL2 Ubuntu eth0 IP

pipeline {
    agent any
    environment {
        KUBECONFIG = credentials('k8s-crd')
    }
    stages {
        stage('Checkout') {
            steps {
                git branch: 'main',
                    url: 'http://<your home IP>:3000/devops/dev-app.git', // check out the code from Git
                    credentialsId: 'gogs-crd' // Credentials ID
            }
        }
        stage('container image build') {
            steps {
                echo "container image build"
            }
        }
        stage('container image upload') {
            steps {
                echo "container image upload"
            }
        }
        stage('k8s deployment blue version') {
            steps {
                sh "kubectl apply -f ./deploy/echo-server-blue.yaml --kubeconfig $KUBECONFIG"
                sh "kubectl apply -f ./deploy/echo-server-service.yaml --kubeconfig $KUBECONFIG"
            }
        }
        stage('approve green version') {
            steps {
                input message: 'approve green version', ok: "Yes"
            }
        }
        stage('k8s deployment green version') {
            steps {
                sh "kubectl apply -f ./deploy/echo-server-green.yaml --kubeconfig $KUBECONFIG"
            }
        }
        stage('approve version switching') {
            steps {
                script {
                    returnValue = input message: 'Green switching?', ok: "Yes", parameters: [booleanParam(defaultValue: true, name: 'IS_SWITCHED')]
                    if (returnValue) {
                        sh "kubectl patch svc echo-server-service -p '{\"spec\": {\"selector\": {\"version\": \"green\"}}}' --kubeconfig $KUBECONFIG"
                    }
                }
            }
        }
        stage('Blue Rollback') {
            steps {
                script {
                    returnValue = input message: 'Blue Rollback?', parameters: [choice(choices: ['done', 'rollback'], name: 'IS_ROLLBACK')]
                    if (returnValue == "done") {
                        sh "kubectl delete -f ./deploy/echo-server-blue.yaml --kubeconfig $KUBECONFIG"
                    }
                    if (returnValue == "rollback") {
                        sh "kubectl patch svc echo-server-service -p '{\"spec\": {\"selector\": {\"version\": \"blue\"}}}' --kubeconfig $KUBECONFIG"
                    }
                }
            }
        }
    }
}

지금 배포 후 동작 확인

- Hello from Blue

- Hello from Green

- Rollback 후 Hello from Blue 출력 됨

- Hello from Blue

-

실습 완료 후 삭제

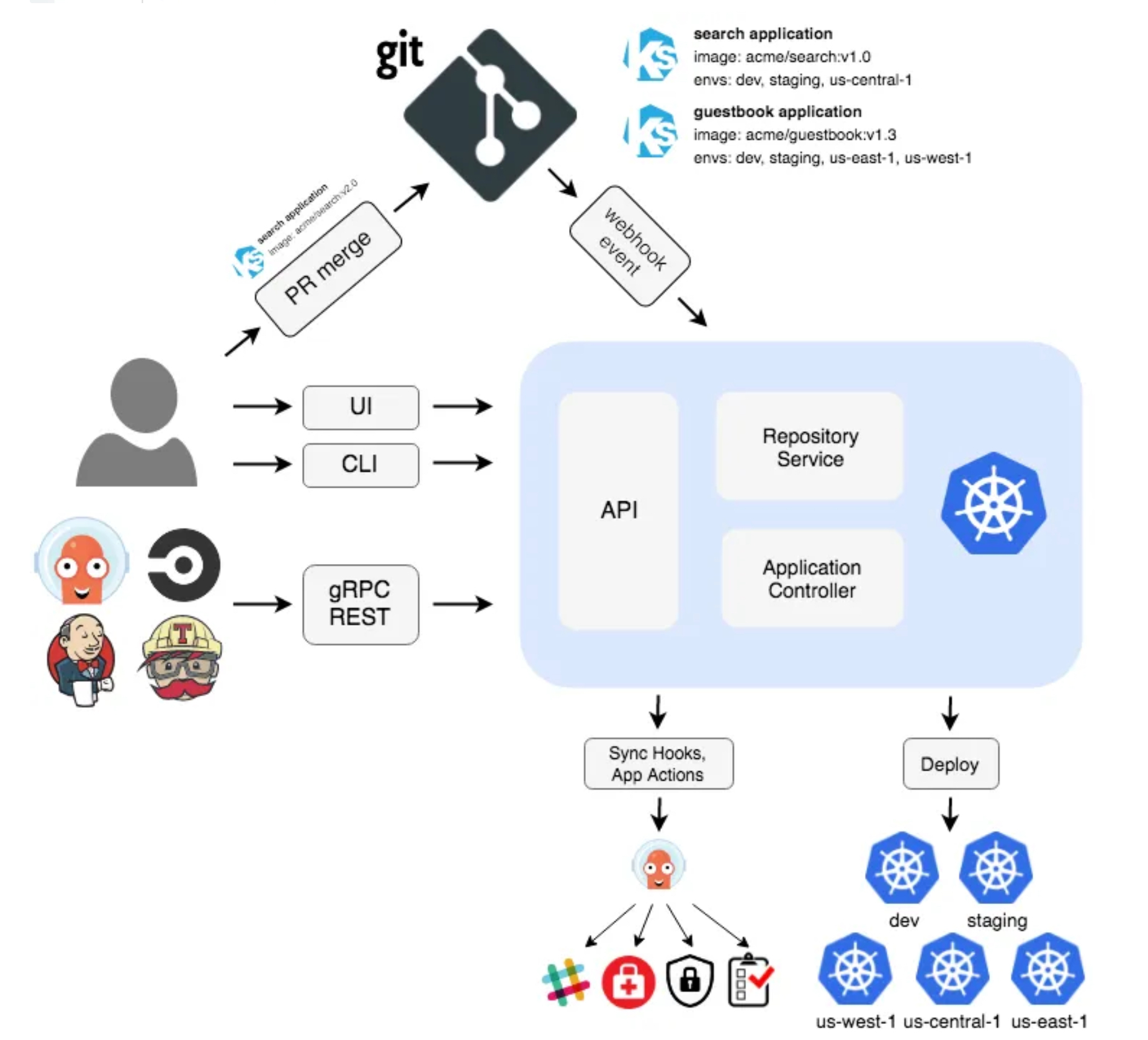

kubectl delete deploy echo-server-blue echo-server-green && kubectl delete svc echo-server-service4. Argo CD + K8S

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes

4.1 Install Argo CD and basic configuration

- Reference : helm_chart

# Create the namespace and write the values file

cd cicd-labs

kubectl create ns argocd

cat <<EOF > argocd-values.yaml
dex:
  enabled: false

server:
  service:
    type: NodePort
    nodePortHttps: 30002
  extraArgs:
    - --insecure # use HTTP instead of HTTPS
EOF

# Install

helm repo add argo https://argoproj.github.io/argo-helm

helm install argocd argo/argo-cd --version 7.8.13 -f argocd-values.yaml --namespace argocd # 7.7.10

NAME: argocd

LAST DEPLOYED: Sat Mar 29 21:08:51 2025

NAMESPACE: argocd

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

In order to access the server UI you have the following options:

1. kubectl port-forward service/argocd-server -n argocd 8080:443

and then open the browser on http://localhost:8080 and accept the certificate

2. enable ingress in the values file `server.ingress.enabled` and either

- Add the annotation for ssl passthrough: https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#option-1-ssl-passthrough

- Set the `configs.params."server.insecure"` in the values file and terminate SSL at your ingress: https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#option-2-multiple-ingress-objects-and-hosts

After reaching the UI the first time you can login with username: admin and the random password generated during the installation. You can find the password by running:

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d

(You should delete the initial secret afterwards as suggested by the Getting Started Guide: https://argo-cd.readthedocs.io/en/stable/getting_started/#4-login-using-the-cli)

# Verify

kubectl get pod,svc,ep,secret,cm -n argocd

kubectl get crd | grep argo

applications.argoproj.io 2025-03-29T12:08:55Z

applicationsets.argoproj.io 2025-03-29T12:08:55Z

appprojects.argoproj.io 2025-03-29T12:08:55Z

kubectl get appproject -n argocd -o yaml

apiVersion: v1

items:

- apiVersion: argoproj.io/v1alpha1

kind: AppProject

metadata:

creationTimestamp: "2025-03-29T12:09:24Z"

generation: 1

name: default

namespace: argocd

resourceVersion: "26026"

uid: 63f73b3a-d119-42bf-b137-1c03cad30730

spec:

clusterResourceWhitelist:

- group: '*'

kind: '*'

destinations:

- namespace: '*'

server: '*'

sourceRepos:

- '*'

status: {}

kind: List

# configmap

kubectl get cm -n argocd argocd-cm -o yaml

kubectl get cm -n argocd argocd-rbac-cm -o yaml

...

data:

policy.csv: ""

policy.default: ""

policy.matchMode: glob

scopes: '[groups]'

# Check the initial login password

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d ;echo

CqGhj77xbweIiCLO

# Argo CD 웹 접속 주소 확인 : 초기 암호 입력 (admin 계정)

open "http://127.0.0.1:30002" # macOS

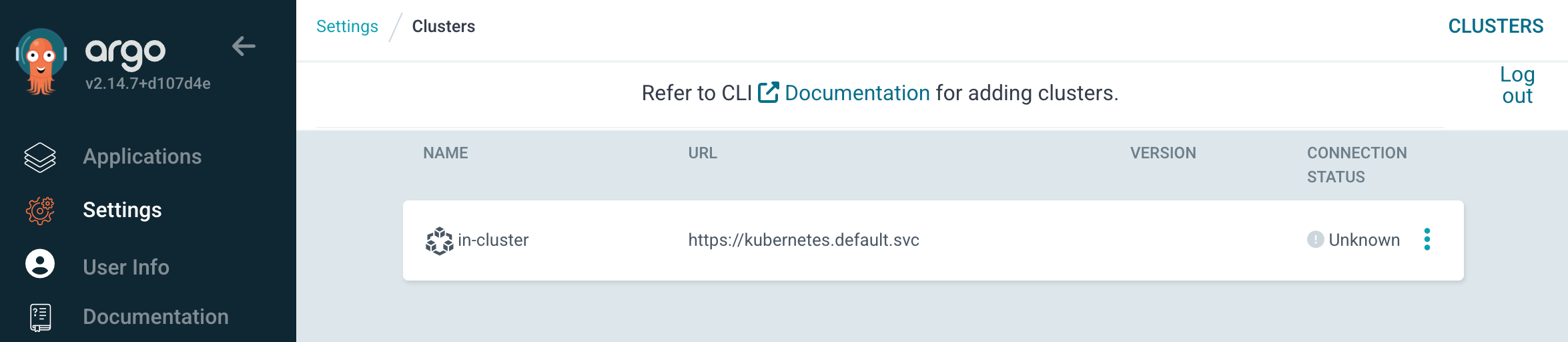

# Windows OS경우 직접 웹 브라우저에서 http://127.0.0.1:30002 접속- Argo CD 웹 접속 확인

- User info → UPDATE PASSWORD 로 admin 계정 암호 변경 (qwe12345)

- 기본 정보 확인 (Settings) : Clusters, Projects, Accounts

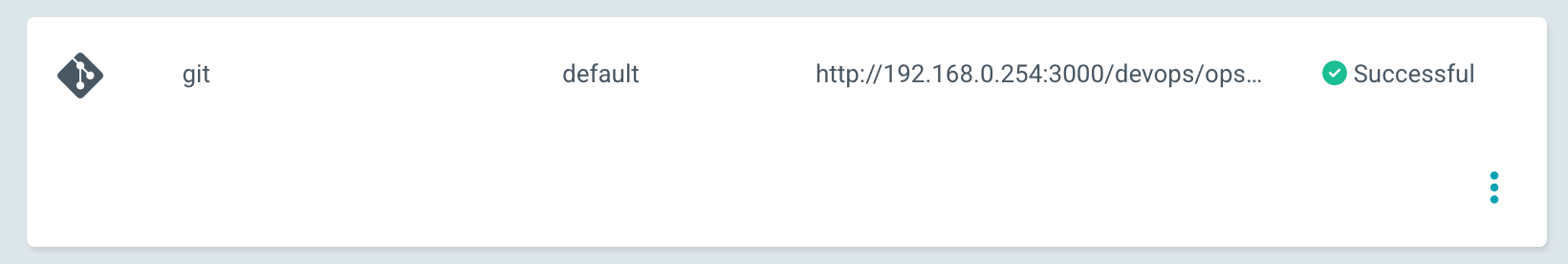

- ops-deploy Repo 등록 : Settings → Repositories → CONNECT REPO 클릭

- connection method : VIA HTTPS

- Type : git

- Project : default

- Repo URL :

http://***<자신의 집 IP>***:3000/devops/ops-deployhttp://192.168.254.127:3000/devops/ops-deploy- Windows (WSL2) 사용자는 자신의 WSL2 Ubuntu eth0 IP

- Username : devops

- Password : <Gogs 토큰>

⇒ 입력 후 CONNECT 클릭

4.2 Deployment lab using a Helm chart

#

cd cicd-labs

mkdir nginx-chart

cd nginx-chart

mkdir templates

cat > templates/configmap.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}
data:
  index.html: |
{{ .Values.indexHtml | indent 4 }}
EOF

cat > templates/deployment.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
      - name: nginx
        image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
        ports:
        - containerPort: 80
        volumeMounts:
        - name: index-html
          mountPath: /usr/share/nginx/html/index.html
          subPath: index.html
      volumes:
      - name: index-html
        configMap:
          name: {{ .Release.Name }}
EOF

cat > templates/service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}
spec:
  selector:
    app: {{ .Release.Name }}
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30000
  type: NodePort
EOF

cat > values.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>Nginx version 1.26.1</p>
  </body>
  </html>

image:
  repository: nginx
  tag: 1.26.1

replicaCount: 1
EOF

cat > Chart.yaml <<EOF
apiVersion: v2
name: nginx-chart
description: A Helm chart for deploying Nginx with custom index.html
type: application
version: 1.0.0
appVersion: "1.26.1"
EOF

# Delete the previous timeserver Deployment and Service (NodePort)

kubectl delete deploy,svc --all

# Try deploying it directly

helm template dev-nginx . -f values.yaml

helm install dev-nginx . -f values.yaml

helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

dev-nginx default 1 2025-03-29 21:28:48.259547 +0900 KST deployed nginx-chart-1.0.0 1.26.1

kubectl get deploy,svc,ep,cm dev-nginx -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/dev-nginx 1/1 1 1 25s nginx nginx:1.26.1 app=dev-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dev-nginx NodePort 10.96.141.182 <none> 80:30000/TCP 25s app=dev-nginx

NAME ENDPOINTS AGE

endpoints/dev-nginx 10.244.2.20:80 25s

NAME DATA AGE

configmap/dev-nginx 1 25s

#

curl http://127.0.0.1:30000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>Nginx version 1.26.1</p>

</body>

</html>

curl -s http://127.0.0.1:30000 | grep version

<p>Nginx version 1.26.1</p>

open http://127.0.0.1:30000

# Change the values and apply them : version/tag, replicaCount

cat > values.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>Nginx version 1.26.2</p>
  </body>
  </html>

image:
  repository: nginx
  tag: 1.26.2

replicaCount: 2
EOF

sed -i '' "s|1.26.1|1.26.2|g" Chart.yaml

# Apply the Helm chart upgrade

helm template dev-nginx . -f values.yaml # check the rendering before applying (renders the chart templates locally and prints the output)

helm upgrade dev-nginx . -f values.yaml

# Verify

helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

dev-nginx default 2 2025-03-29 21:31:52.57648 +0900 KST deployed nginx-chart-1.0.0 1.26.2

kubectl get deploy,svc,ep,cm dev-nginx -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/dev-nginx 2/2 2 2 3m25s nginx nginx:1.26.2 app=dev-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dev-nginx NodePort 10.96.141.182 <none> 80:30000/TCP 3m25s app=dev-nginx

NAME ENDPOINTS AGE

endpoints/dev-nginx 10.244.2.20:80,10.244.2.21:80 3m25s

NAME DATA AGE

configmap/dev-nginx 1 3m25s

curl http://127.0.0.1:30000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>Nginx version 1.26.2</p>

</body>

</html>

curl -s http://127.0.0.1:30000 | grep version

<p>Nginx version 1.26.2</p>

open http://127.0.0.1:30000
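The Chart.yaml edit in this step uses `sed -i ''`, which is the BSD/macOS form of in-place editing; GNU sed on Linux expects `sed -i` without the empty argument. A portable alternative, sketched here under the assumption that writing through a temporary file is acceptable, avoids `-i` altogether (`replace_in_file` is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Sketch only: in-place substitution that behaves the same with BSD and
# GNU sed, because it never relies on the -i flag.

# replace_in_file <file> <old-regex> <new-text>
# Note: <old-regex> is a sed regular expression and must not contain '|'.
replace_in_file() {
  local file="$1" old="$2" new="$3" tmp
  tmp="$(mktemp)" || return 1
  # Write the result to a temp file first, then copy it back so the
  # original file keeps its permissions
  sed "s|${old}|${new}|g" "$file" > "$tmp" && cat "$tmp" > "$file"
  local status=$?
  rm -f "$tmp"
  return "$status"
}

# Example usage:
#   replace_in_file Chart.yaml '1\.26\.1' '1.26.2'
```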

# 확인 후 삭제

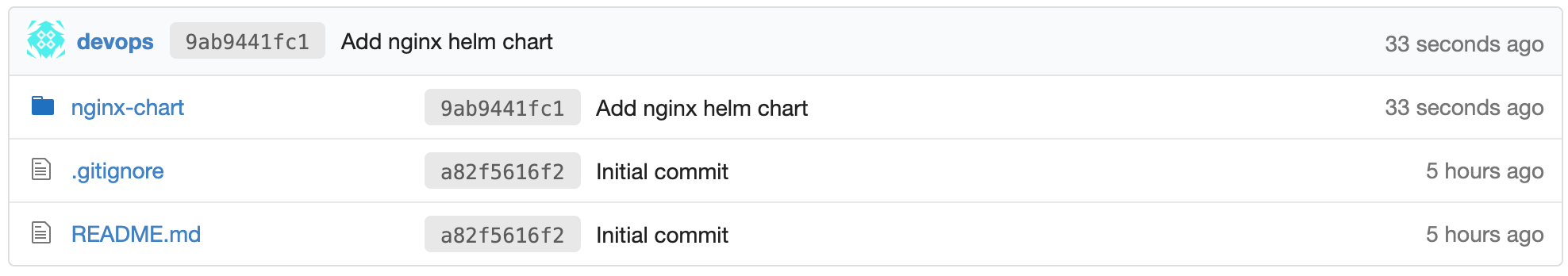

helm uninstall dev-nginx4.3 Repo(ops-deploy) 에 nginx helm chart 를 Argo CD를 통한 배포 1

- Git work

#

cd cicd-labs

TOKEN=<Gogs token>

git clone http://devops:$TOKEN@$MyIP:3000/devops/ops-deploy.git

cd ops-deploy

#

git --no-pager config --local --list

git config --local user.name "devops"

git config --local user.email "a@a.com"

git config --local init.defaultBranch main

git config --local credential.helper store

git --no-pager config --local --list

cat .git/config

#

git --no-pager branch

* main

git remote -v

origin http://devops:82d91f424fcf8d834015f08fdd3f730a6cae54d5@192.168.0.254:3000/devops/ops-deploy.git (fetch)

origin http://devops:82d91f424fcf8d834015f08fdd3f730a6cae54d5@192.168.0.254:3000/devops/ops-deploy.git (push)

#

VERSION=1.26.1

mkdir nginx-chart

mkdir nginx-chart/templates

cat > nginx-chart/VERSION <<EOF

$VERSION

EOF

cat > nginx-chart/templates/configmap.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}
data:
  index.html: |
{{ .Values.indexHtml | indent 4 }}
EOF

cat > nginx-chart/templates/deployment.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
      - name: nginx
        image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
        ports:
        - containerPort: 80
        volumeMounts:
        - name: index-html
          mountPath: /usr/share/nginx/html/index.html
          subPath: index.html
      volumes:
      - name: index-html
        configMap:
          name: {{ .Release.Name }}
EOF

cat > nginx-chart/templates/service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}
spec:
  selector:
    app: {{ .Release.Name }}
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30000
  type: NodePort
EOF

cat > nginx-chart/values-dev.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>DEV : Nginx version $VERSION</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $VERSION

replicaCount: 1
EOF

cat > nginx-chart/values-prd.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>PRD : Nginx version $VERSION</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $VERSION

replicaCount: 2
EOF

cat > nginx-chart/Chart.yaml <<EOF
apiVersion: v2
name: nginx-chart
description: A Helm chart for deploying Nginx with custom index.html
type: application
version: 1.0.0
appVersion: "$VERSION"
EOF

tree
.
├── README.md
└── nginx-chart
    ├── Chart.yaml
    ├── VERSION
    ├── templates
    │   ├── configmap.yaml
    │   ├── deployment.yaml
    │   └── service.yaml
    ├── values-dev.yaml
    └── values-prd.yaml

#

helm template dev-nginx nginx-chart -f nginx-chart/values-dev.yaml

helm template prd-nginx nginx-chart -f nginx-chart/values-prd.yaml

DEVNGINX=$(helm template dev-nginx nginx-chart -f nginx-chart/values-dev.yaml | sed 's/---//g')

PRDNGINX=$(helm template prd-nginx nginx-chart -f nginx-chart/values-prd.yaml | sed 's/---//g')

diff <(echo "$DEVNGINX") <(echo "$PRDNGINX")

#

git add . && git commit -m "Add nginx helm chart" && git push -u origin main

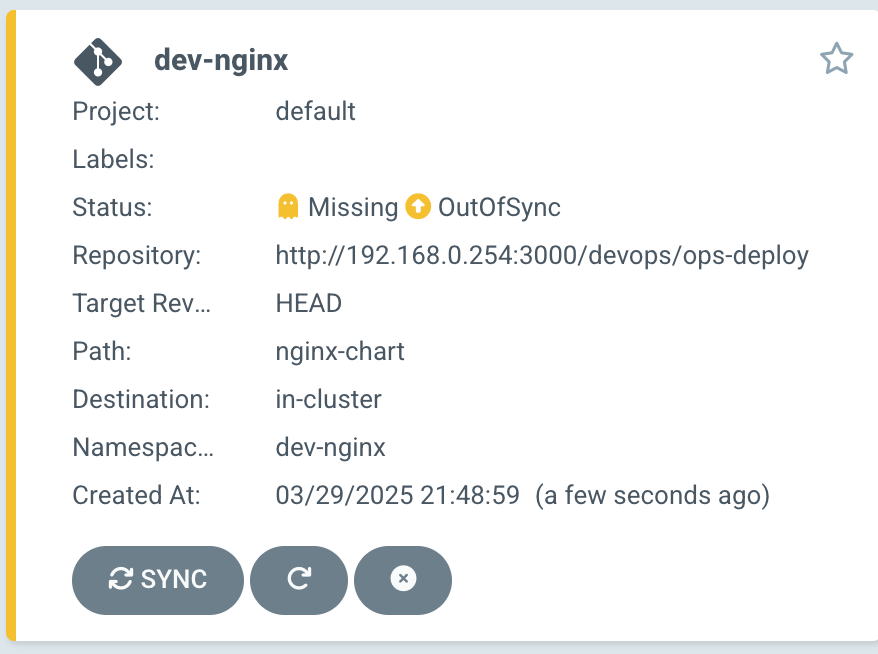

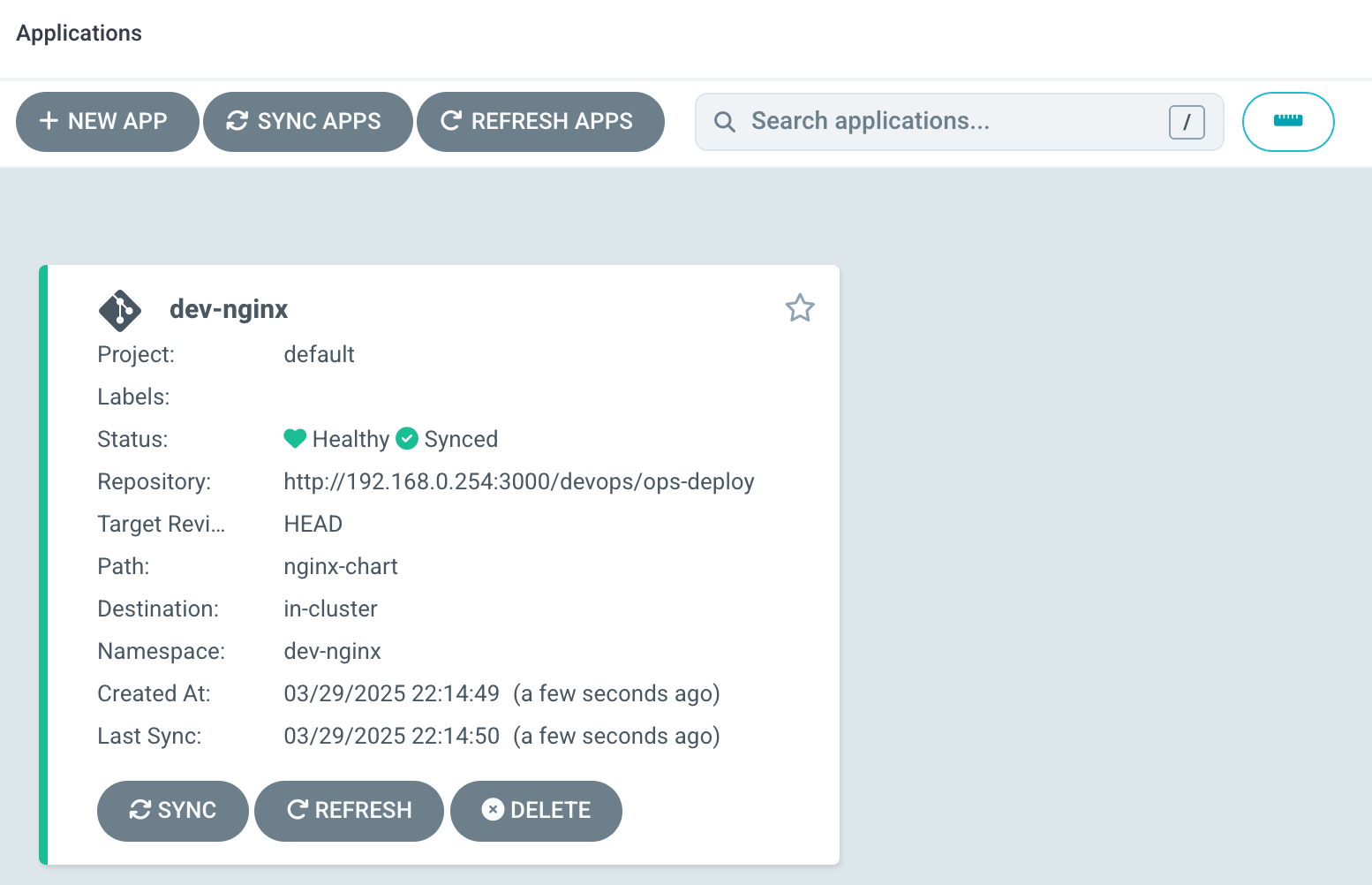

- Argo CD에 App 등록 : Application → NEW APP

- GENERAL

- App Name : dev-nginx

- Project Name : default

- SYNC POLICY : Manual

- AUTO-CREATE NAMESPACE : 클러스터에 네임스페이스가 없을 시 argocd에 입력한 이름으로 자동 생성

- APPLY OUT OF SYNC ONLY : 현재 동기화 상태가 아닌 리소스만 배포

- SYNC OPTIONS : AUTO-CREATE NAMESPACE(Check)

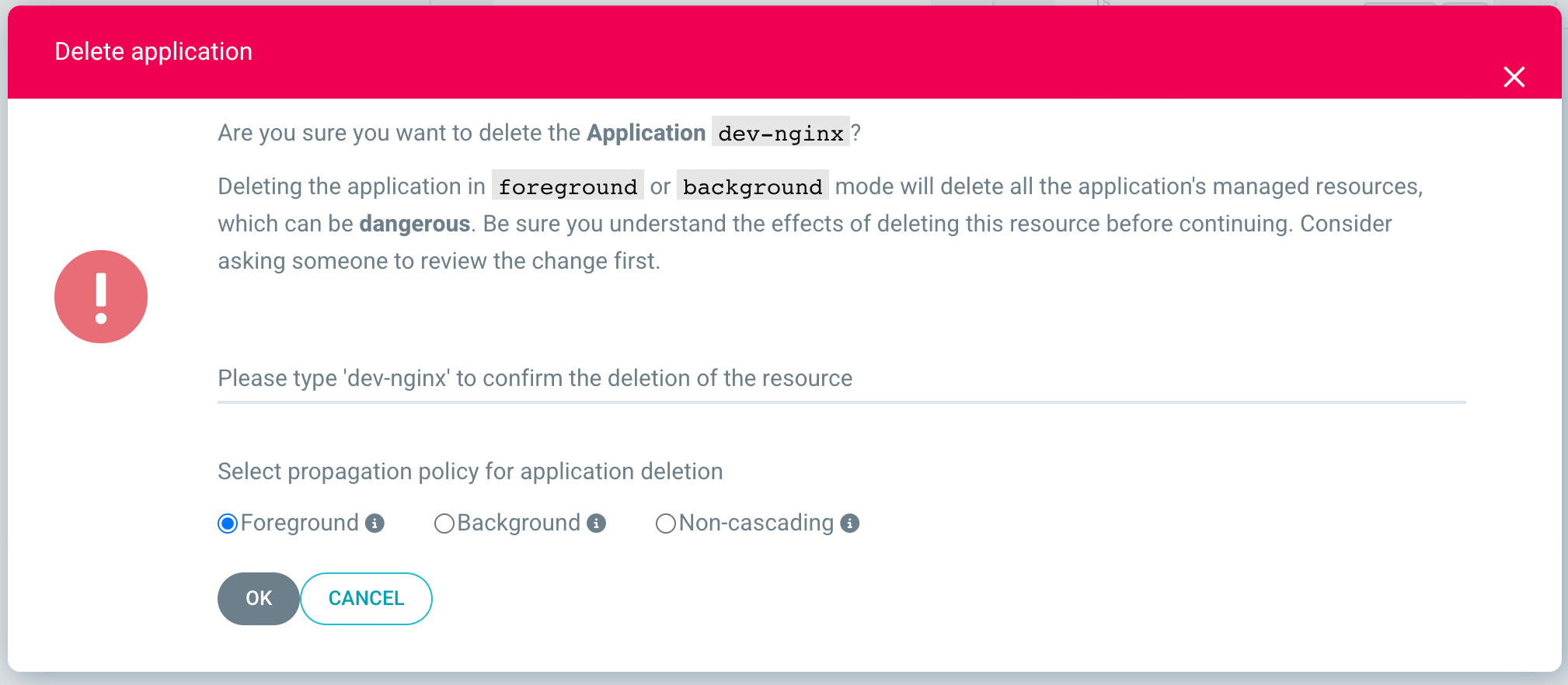

- PRUNE PROPAGATION POLICY

- foreground : 부모(소유자, ex. deployment) 자원을 먼저 삭제함

- background : 자식(종속자, ex. pod) 자원을 먼저 삭제함

- orphan : 고아(소유자는 삭제됐지만, 종속자가 삭제되지 않은 경우) 자원을 삭제함

- Source

- Repo URL : 설정되어 있는 것 선택

- Revision : HEAD

- PATH : nginx-chart

- DESTINATION

- Cluster URL : <기본값>

- NAMESPACE : dev-nginx

- HELM

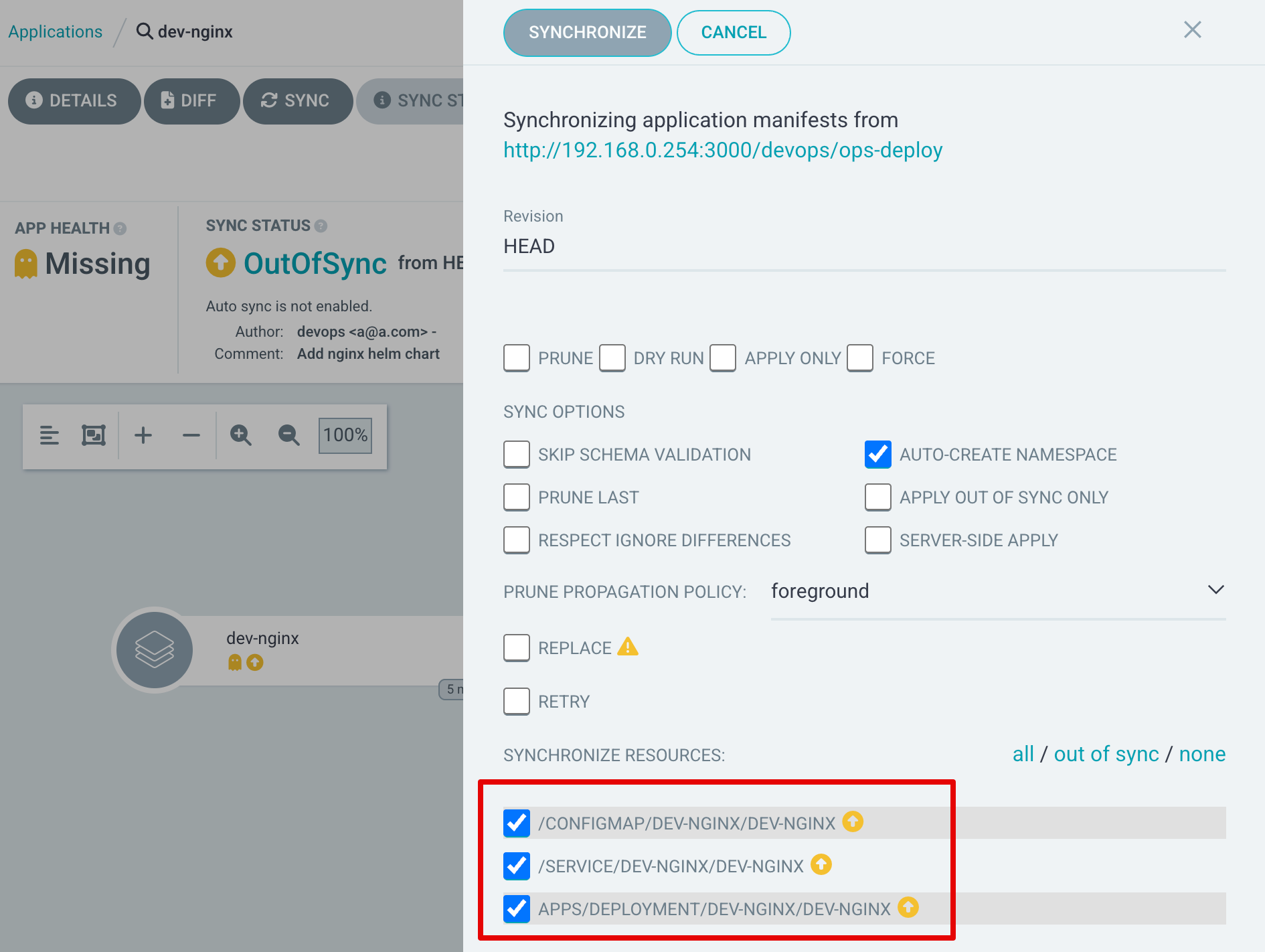

- Values files : values-dev.yaml⇒ 작성 후 상단 CREATE 클릭 - PRUNE : GIt에서 자원 삭제 후 배포시 K8S에서는 삭제되지 않으나, 해당 옵션을 선택하면 삭제시킴 - FORCE : --force 옵션으로 리소스 삭제 - APPLY ONLY : ArgoCD의 Pre/Post Hook은 사용 안함 (리소스만 배포) - DRY RUN : 테스트 배포 (배포에 에러가 있는지 한번 확인해 볼때 사용)

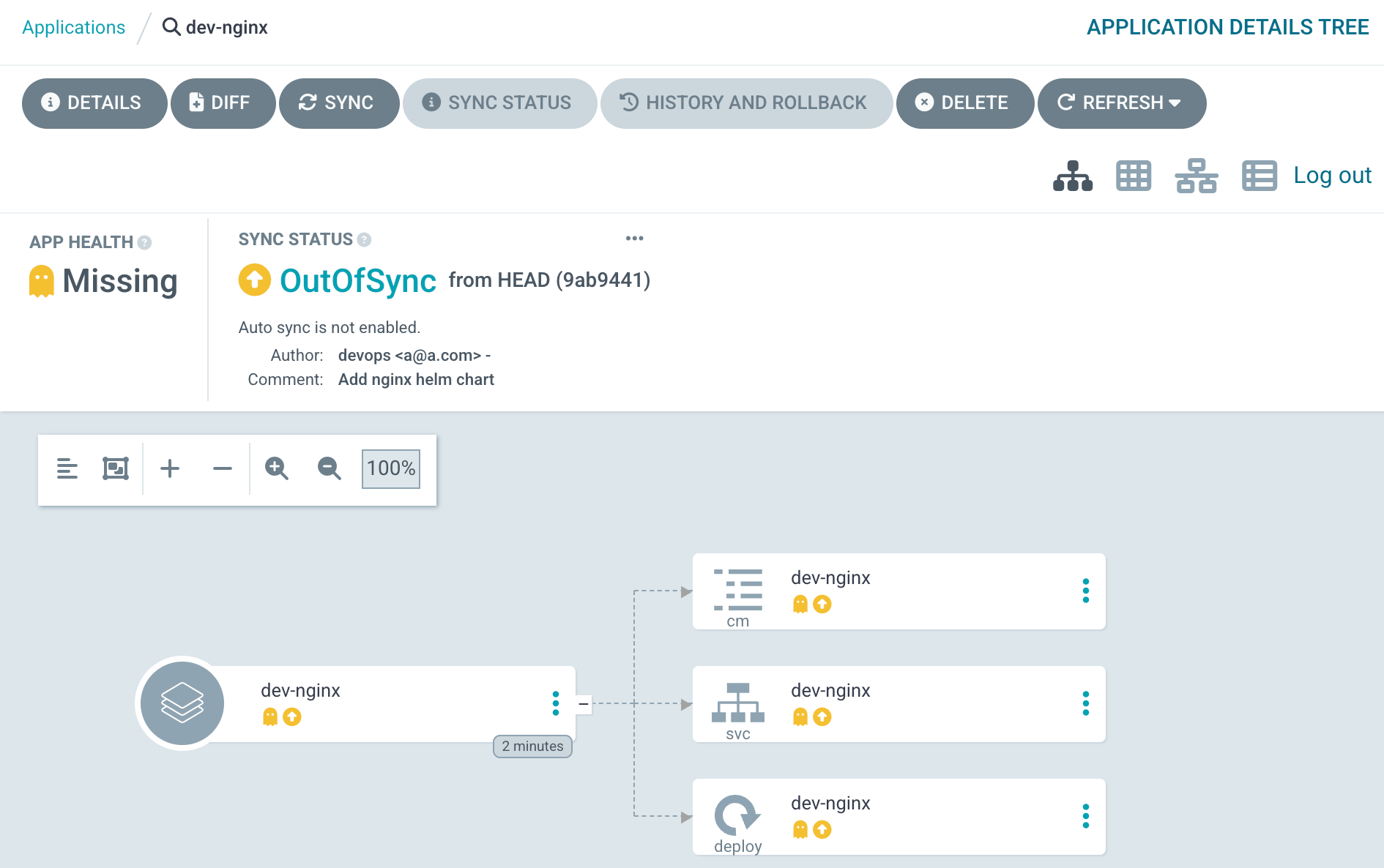

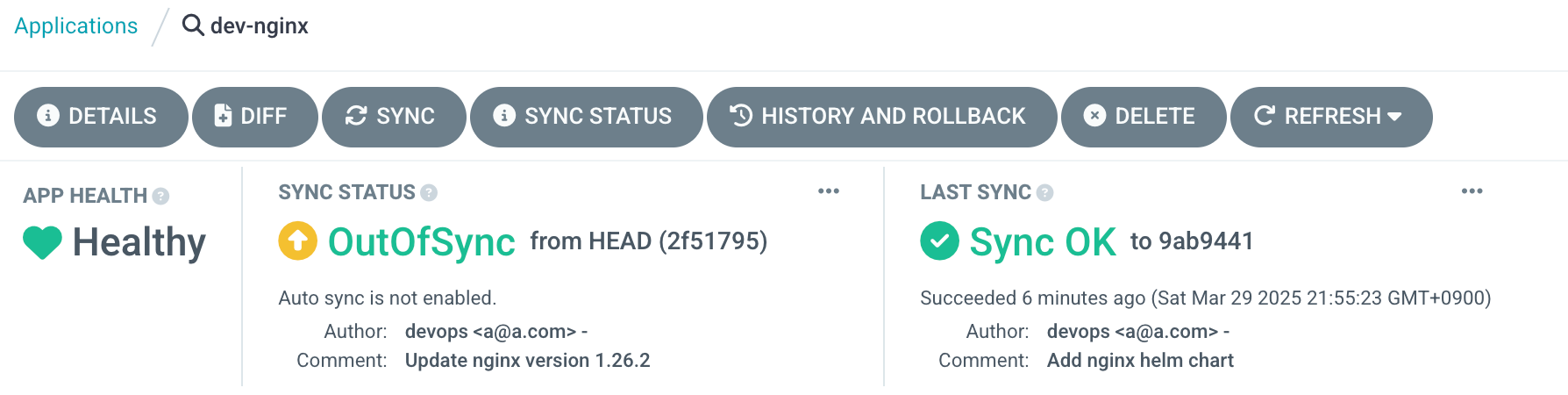

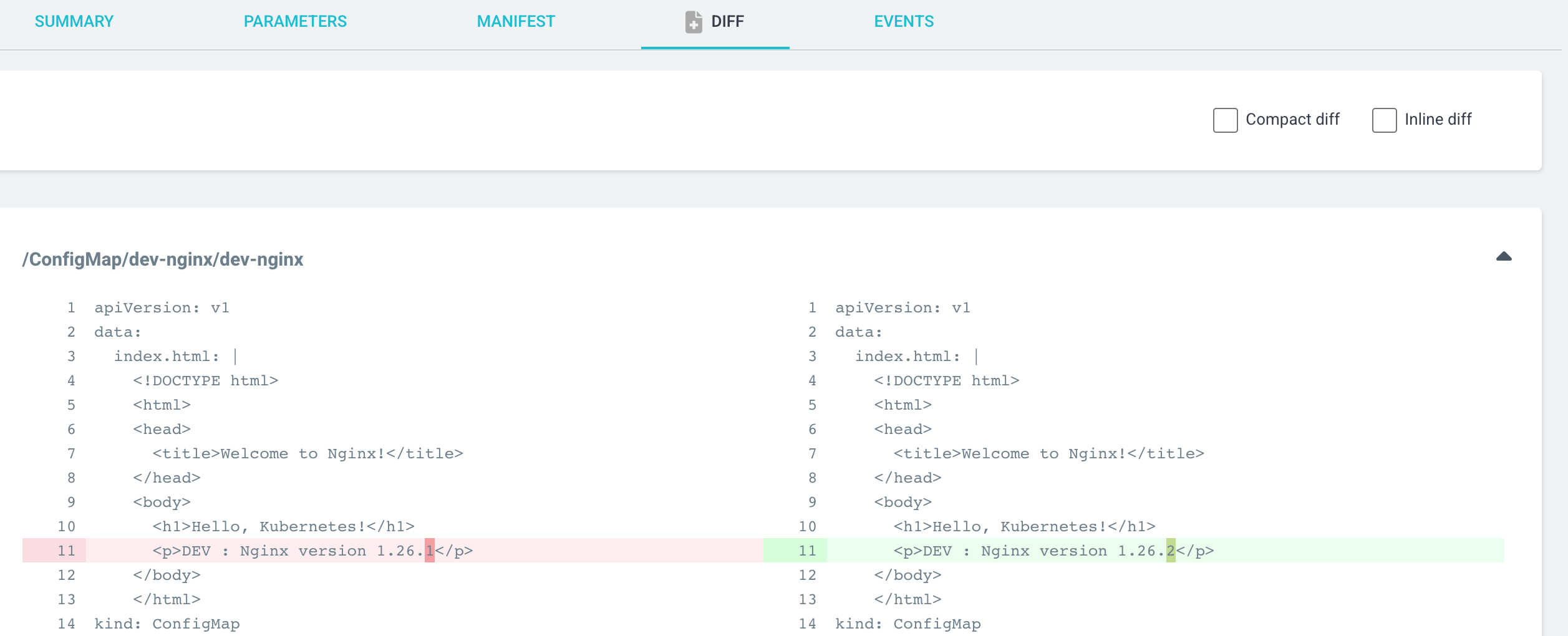
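The form fields above correspond fairly directly to flags of the `argocd app create` CLI command. The following sketch only prints the command rather than running it, since running it would require the argocd CLI and a prior `argocd login`; the helper name `argocd_create_cmd` is hypothetical, and the flag mapping should be treated as an assumption to check against `argocd app create --help` for your CLI version.

```shell
#!/usr/bin/env bash
# Sketch only: print an `argocd app create` command equivalent to the
# values entered in the NEW APP form. Nothing is executed.

# argocd_create_cmd <app-name> <repo-url> <values-file>
argocd_create_cmd() {
  local app="$1" repo="$2" values="$3"
  # The app name is reused as the destination namespace, as in the lab
  echo "argocd app create ${app}" \
    "--project default" \
    "--repo ${repo}" \
    "--revision HEAD" \
    "--path nginx-chart" \
    "--values ${values}" \
    "--dest-server https://kubernetes.default.svc" \
    "--dest-namespace ${app}" \
    "--sync-option CreateNamespace=true"
}

# Example usage (MyIP is the host IP variable used elsewhere in this post):
#   argocd_create_cmd dev-nginx "http://$MyIP:3000/devops/ops-deploy" values-dev.yaml
```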

- Click the dev-nginx App to see its details → click DIFF to check the differences

```bash

#

kubectl get applications -n argocd

NAME SYNC STATUS HEALTH STATUS

dev-nginx OutOfSync Missing

kubectl describe applications -n argocd dev-nginx

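# Monitor the status

kubectl get applications -n argocd -w

# Repeated access attempts

while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; date ; echo "------------" ; sleep 1 ; done

- Click SYNC and confirm the change is applied to K8S (Live) : check the resources that will be created

# The App can also be created from YAML, as shown below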

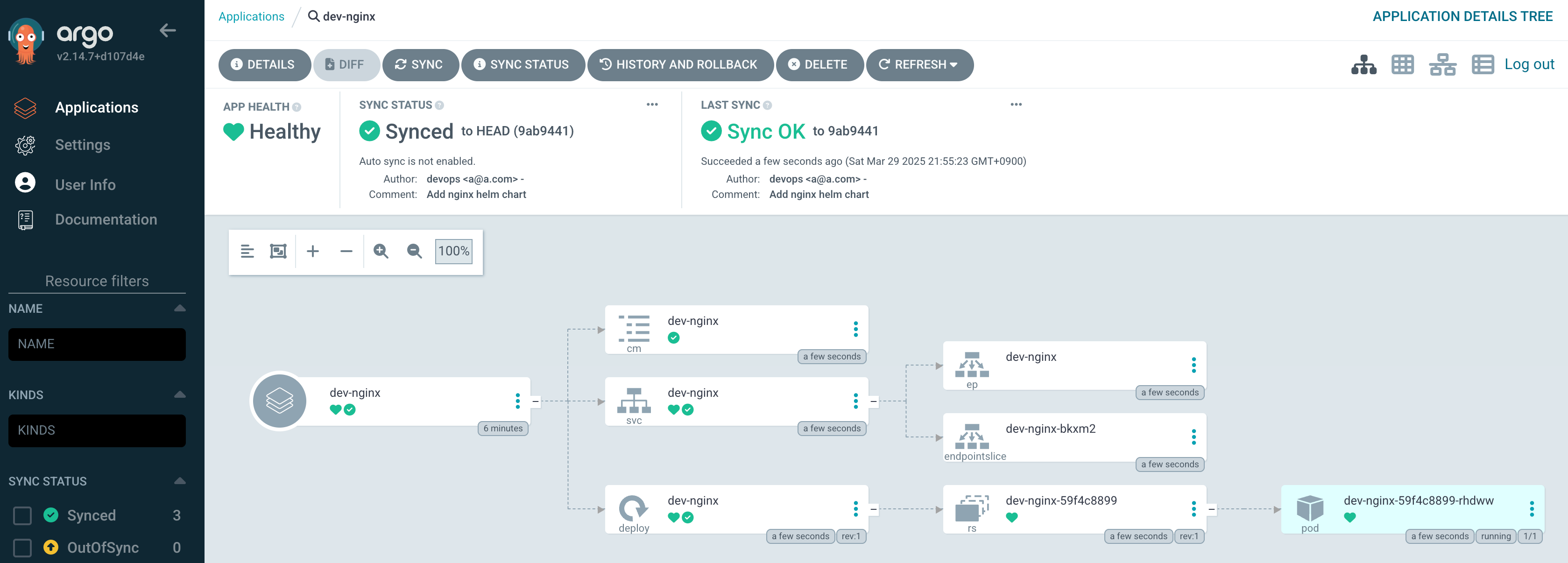

kubectl get applications -n argocd

NAME SYNC STATUS HEALTH STATUS

dev-nginx Synced Healthy

kubectl get applications -n argocd -o yaml | kubectl neat

apiVersion: v1
items:
- apiVersion: argoproj.io/v1alpha1
  kind: Application
  metadata:
    name: dev-nginx
    namespace: argocd
  spec:
    destination:
      namespace: dev-nginx
      server: https://kubernetes.default.svc
    project: default
    source:
      helm:
        valueFiles:
        - values-dev.yaml
      path: nginx-chart
      repoURL: http://192.168.0.254:3000/devops/ops-deploy
      targetRevision: HEAD
    syncPolicy:
      syncOptions:
      - CreateNamespace=true
kind: List

# Check the deployment

kubectl get all -n dev-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dev-nginx-59f4c8899-rhdww 1/1 Running 0 78s 10.244.2.22 myk8s-worker2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dev-nginx NodePort 10.96.80.226 <none> 80:30000/TCP 79s app=dev-nginx

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/dev-nginx 1/1 1 1 78s nginx nginx:1.26.1 app=dev-nginx

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/dev-nginx-59f4c8899 1 1 1 78s nginx nginx:1.26.1 app=dev-nginx,pod-template-hash=59f4c8899

- Update to 1.26.2 (edit the code) and confirm the change is applied

#

VERSION=1.26.2

cat > nginx-chart/VERSION <<EOF

$VERSION

EOF

cat > nginx-chart/values-dev.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>DEV : Nginx version $VERSION</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $VERSION

replicaCount: 2
EOF

cat > nginx-chart/values-prd.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>PRD : Nginx version $VERSION</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $VERSION

replicaCount: 2
EOF

cat > nginx-chart/Chart.yaml <<EOF
apiVersion: v2
name: nginx-chart
description: A Helm chart for deploying Nginx with custom index.html
type: application
version: 1.0.0
appVersion: "$VERSION"
EOF

#

git add . && git commit -m "Update nginx version $(cat nginx-chart/VERSION)" && git push -u origin main

👉 How often does Argo CD check for changes to my Git or Helm repository ?

The default polling interval is 3 minutes (180 seconds). You can change the setting by updating the timeout.reconciliation value in the argocd-cm config map

kubectl get cm -n argocd argocd-cm -o yaml | grep timeout

timeout.hard.reconciliation: 0s

timeout.reconciliation: 180s

- Click SYNC → click SYNCHRONIZE

# Check the deployment

kubectl get all -n dev-nginx -o wide

- Delete the App from the Argo CD web UI

watch -d kubectl get all -n dev-nginx -o wide

4.4 Deploying the nginx Helm chart in the ops-deploy repo through Argo CD (2) : ArgoCD Declarative Setup

- ArgoCD Declarative Setup - Project, applications(ArgoCD App 자체를 yaml로 생성), ArgoCD Settings - Docs

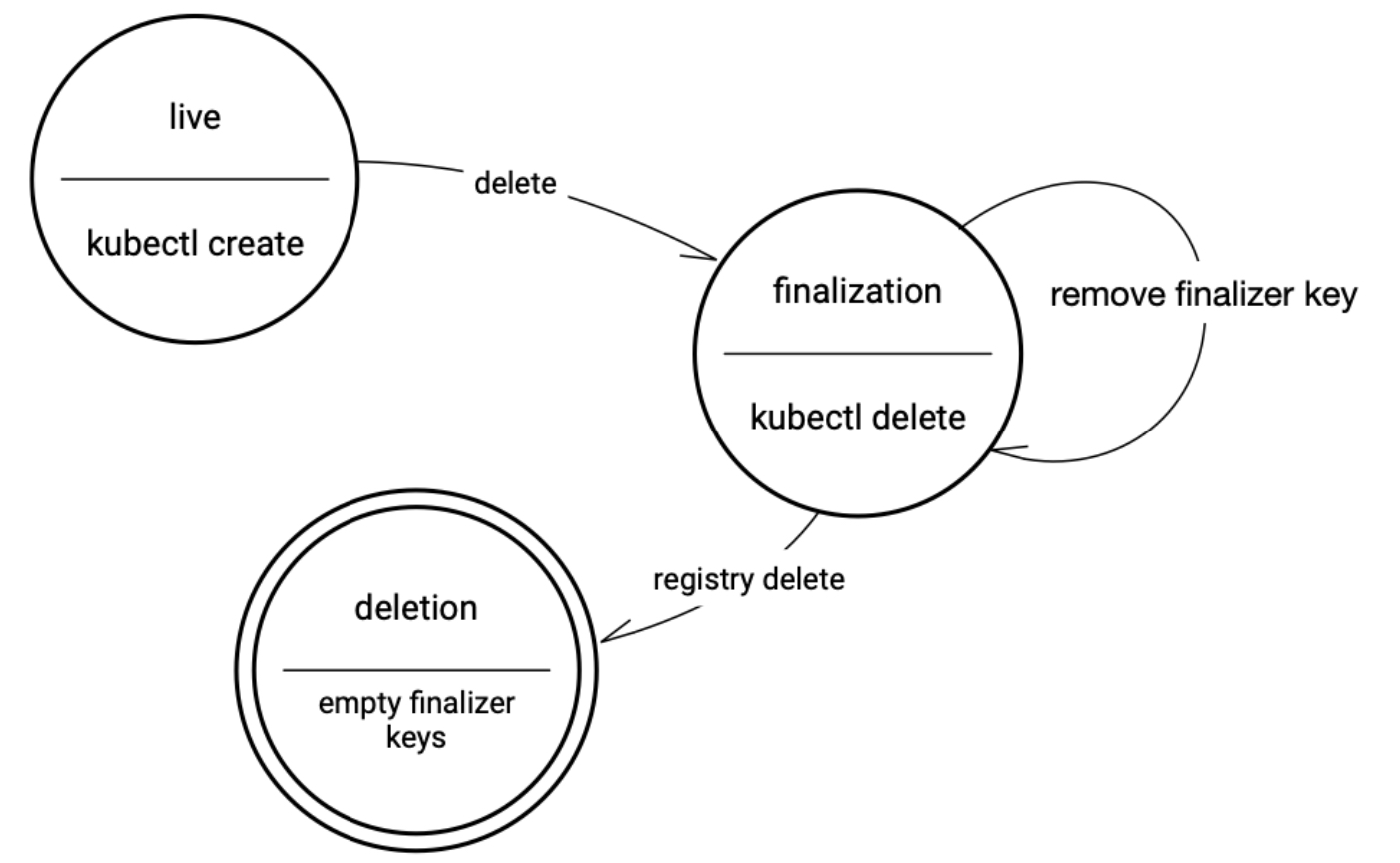

- (참고) K8S Finalizers 와 Argo Finalizers 동작 - Docs , Blog

- Kubernetes에서 finalizers는 리소스의 metadata.finalizers 필드에 정의된 이름 목록으로, 리소스가 삭제 요청을 받았을 때(즉, kubectl delete나 API 호출로 삭제가 시작될 때) 바로 제거되지 않고, 지정된 작업이 완료될 때까지 "종료 중"(Terminating) 상태로 유지되게 합니다.

- ArgoCD는 이 메커니즘을 활용해 애플리케이션 삭제 시 관리 대상 리소스의 정리(cleanup)를 제어합니다.

- ArgoCD에서 가장 흔히 사용되는 finalizer는 `resources-finalizer.argocd.argoproj.io`입니다. 이 finalizer는 애플리케이션이 삭제될 때 해당 애플리케이션이 관리하는 모든 리소스(예: Pod, Service, ConfigMap 등)를 함께 삭제하도록 보장합니다.

- **ArgoCD Finalizers의 목적**

1. **리소스 정리 보장**: 애플리케이션 삭제 시 **관련 리소스가 남지 않도록 보장**합니다. 이는 GitOps 워크플로우에서 **선언적 상태를 유지하는 데 중요**합니다.

2. **의도치 않은 삭제 방지**: finalizer가 없으면 실수로 **Argo App을 삭제**해도 **K8S 리소스가 남아 혼란**이 생길 수 있습니다. finalizer는 이를 **방지**합니다.

3. **App of Apps 패턴 지원**: 여러 애플리케이션을 계층적으로 관리할 때, 상위 애플리케이션 삭제 시 **하위 리소스까지 정리**되도록 합니다.

- dev-nginx App 생성 및 Auto SYNC

#

echo $MyIP

cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: dev-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    helm:
      valueFiles:
      - values-dev.yaml
    path: nginx-chart
    repoURL: http://$MyIP:3000/devops/ops-deploy
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: dev-nginx
    server: https://kubernetes.default.svc
EOF

#

kubectl get applications -n argocd dev-nginx

NAME SYNC STATUS HEALTH STATUS

dev-nginx Synced Healthy

kubectl get applications -n argocd dev-nginx -o yaml | kubectl neat

apiVersion: argoproj.io/v1alpha1

kind: Application

metadata:

name: dev-nginx

namespace: argocd

spec:

destination:

namespace: dev-nginx

server: https://kubernetes.default.svc

project: default

source:

helm:

valueFiles:

- values-dev.yaml

path: nginx-chart

repoURL: http://192.168.0.254:3000/devops/ops-deploy

targetRevision: HEAD

syncPolicy:

automated:

prune: true

syncOptions:

- CreateNamespace=true

kubectl describe applications -n argocd dev-nginx

Name: dev-nginx

Namespace: argocd

Labels: <none>

Annotations: <none>

API Version: argoproj.io/v1alpha1

Kind: Application

Metadata:

Creation Timestamp: 2025-03-29T13:14:49Z

Finalizers:

resources-finalizer.argocd.argoproj.io

Generation: 13

Resource Version: 32380

UID: a32229e8-ef38-443c-a408-eff67d2a1904

Spec:

Destination:

Namespace: dev-nginx

Server: https://kubernetes.default.svc

Project: default

Source:

Helm:

Value Files:

values-dev.yaml

Path: nginx-chart

Repo URL: http://192.168.0.254:3000/devops/ops-deploy

Target Revision: HEAD

Sync Policy:

Automated:

Prune: true

Sync Options:

CreateNamespace=true

Status:

Controller Namespace: argocd

Health:

Last Transition Time: 2025-03-29T13:14:51Z

Status: Healthy

History:

Deploy Started At: 2025-03-29T13:14:49Z

Deployed At: 2025-03-29T13:14:50Z

Id: 0

Initiated By:

Automated: true

Revision: 2f517955de8b39f6de250e8e617ada6db1b1a95a

Source:

Helm:

Value Files:

values-dev.yaml

Path: nginx-chart

Repo URL: http://192.168.0.254:3000/devops/ops-deploy

Target Revision: HEAD

Operation State:

Finished At: 2025-03-29T13:14:50Z

Message: successfully synced (all tasks run)

Operation:

Initiated By:

Automated: true

Retry:

Limit: 5

Sync:

Prune: true

Revision: 2f517955de8b39f6de250e8e617ada6db1b1a95a

Sync Options:

CreateNamespace=true

Phase: Succeeded

Started At: 2025-03-29T13:14:49Z

Sync Result:

Resources:

Group:

Hook Phase: Running

Kind: ConfigMap

Message: configmap/dev-nginx created

Name: dev-nginx

Namespace: dev-nginx

Status: Synced

Sync Phase: Sync

Version: v1

Group:

Hook Phase: Running

Kind: Service

Message: service/dev-nginx created

Name: dev-nginx

Namespace: dev-nginx

Status: Synced

Sync Phase: Sync

Version: v1

Group: apps

Hook Phase: Running

Kind: Deployment

Message: deployment.apps/dev-nginx created

Name: dev-nginx

Namespace: dev-nginx

Status: Synced

Sync Phase: Sync

Version: v1

Revision: 2f517955de8b39f6de250e8e617ada6db1b1a95a

Source:

Helm:

Value Files:

values-dev.yaml

Path: nginx-chart

Repo URL: http://192.168.0.254:3000/devops/ops-deploy

Target Revision: HEAD

Reconciled At: 2025-03-29T13:14:50Z

Resources:

Kind: ConfigMap

Name: dev-nginx

Namespace: dev-nginx

Status: Synced

Version: v1

Health:

Status: Healthy

Kind: Service

Name: dev-nginx

Namespace: dev-nginx

Status: Synced

Version: v1

Group: apps

Health:

Status: Healthy

Kind: Deployment

Name: dev-nginx

Namespace: dev-nginx

Status: Synced

Version: v1

Source Hydrator:

Source Type: Helm

Summary:

Images:

nginx:1.26.2

Sync:

Compared To:

Destination:

Namespace: dev-nginx

Server: https://kubernetes.default.svc

Source:

Helm:

Value Files:

values-dev.yaml

Path: nginx-chart

Repo URL: http://192.168.0.254:3000/devops/ops-deploy

Target Revision: HEAD

Revision: 2f517955de8b39f6de250e8e617ada6db1b1a95a

Status: Synced

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal OperationStarted 89s argocd-application-controller Initiated automated sync to '2f517955de8b39f6de250e8e617ada6db1b1a95a'

Normal ResourceUpdated 89s argocd-application-controller Updated sync status: -> OutOfSync

Normal ResourceUpdated 89s argocd-application-controller Updated health status: -> Missing

Normal ResourceUpdated 88s argocd-application-controller Updated sync status: OutOfSync -> Synced

Normal ResourceUpdated 88s argocd-application-controller Updated health status: Missing -> Progressing

Normal OperationCompleted 88s argocd-application-controller Sync operation to 2f517955de8b39f6de250e8e617ada6db1b1a95a succeeded

Normal ResourceUpdated 87s argocd-application-controller Updated health status: Progressing -> Healthy

kubectl get pod,svc,ep,cm -n dev-nginx

NAME READY STATUS RESTARTS AGE

pod/dev-nginx-744568f6b4-dxskt 1/1 Running 0 2m

pod/dev-nginx-744568f6b4-wd8mz 1/1 Running 0 2m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dev-nginx NodePort 10.96.14.98 <none> 80:30000/TCP 2m

NAME ENDPOINTS AGE

endpoints/dev-nginx 10.244.1.28:80,10.244.2.24:80 2m

NAME DATA AGE

configmap/dev-nginx 1 2m

configmap/kube-root-ca.crt 1 21m

#

curl http://127.0.0.1:30000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>DEV : Nginx version 1.26.2</p>

</body>

</html>

open http://127.0.0.1:30000

# Delete the Argo CD App

kubectl delete applications -n argocd dev-nginx

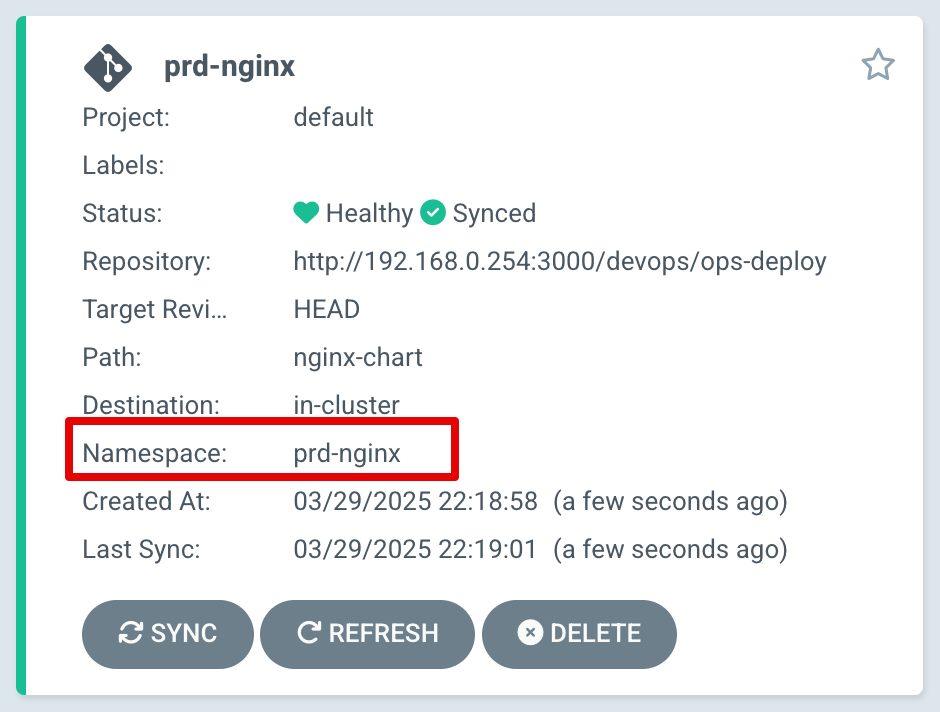

- Create the prd-nginx App with Auto SYNC

#

cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: prd-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  destination:
    namespace: prd-nginx
    server: https://kubernetes.default.svc
  project: default
  source:
    helm:
      valueFiles:
      - values-prd.yaml
    path: nginx-chart
    repoURL: http://$MyIP:3000/devops/ops-deploy
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
EOF

#

kubectl get applications -n argocd prd-nginx

kubectl describe applications -n argocd prd-nginx

kubectl get pod,svc,ep,cm -n prd-nginx

#

curl http://127.0.0.1:30000

open http://127.0.0.1:30000

# Delete the Argo CD App

kubectl delete applications -n argocd prd-nginx

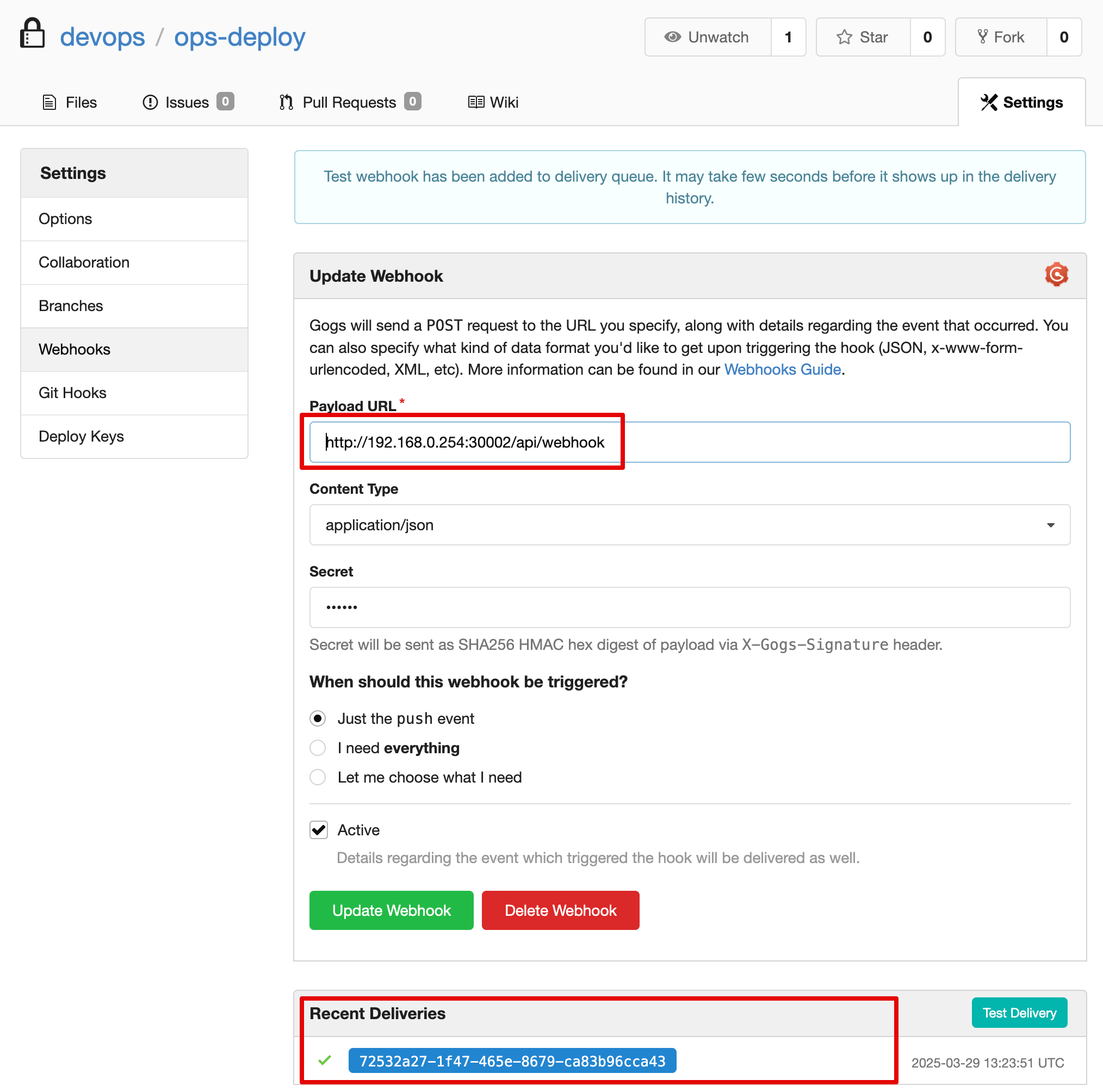

4.5 Configure a webhook on the ops-deploy repo so that a push triggers Argo CD immediately and deploys to k8s - Docs

- Webhook settings on the ops-deploy repo : select Gogs

- Payload URL : http://192.168.254.127:30002/api/webhook

- Windows (WSL2) users should use their WSL2 Ubuntu eth0 IP

- Leave the remaining fields at their defaults ⇒ Add webhook

- Then click the created webhook, click Test Delivery, and confirm a normal response

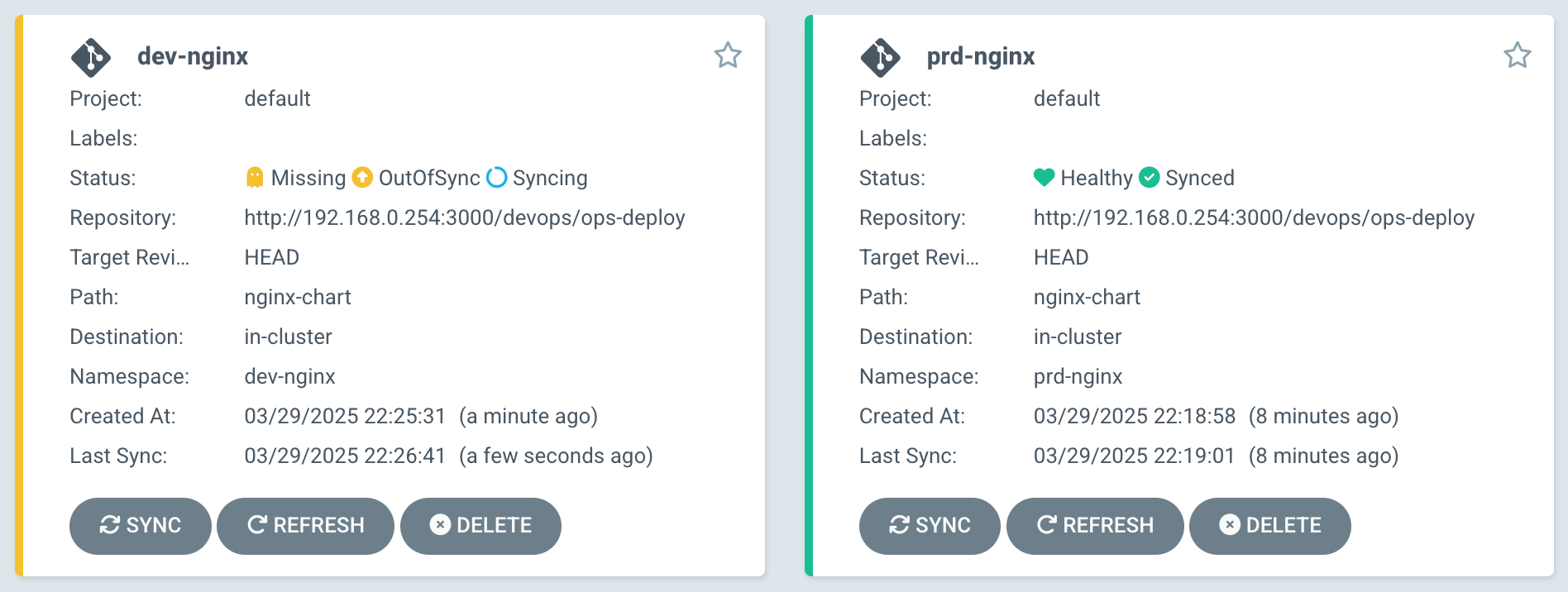

- Create the dev-nginx App with Auto SYNC

#

echo $MyIP

cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: dev-nginx
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    helm:
      valueFiles:
      - values-dev.yaml
    path: nginx-chart
    repoURL: http://$MyIP:3000/devops/ops-deploy
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: dev-nginx
    server: https://kubernetes.default.svc
EOF

#

kubectl get applications -n argocd dev-nginx

dev-nginx OutOfSync Missing

kubectl get applications -n argocd dev-nginx -o yaml | kubectl neat

kubectl describe applications -n argocd dev-nginx

kubectl get pod,svc,ep,cm -n dev-nginx

NAME READY STATUS RESTARTS AGE

pod/dev-nginx-744568f6b4-tc9cg 1/1 Running 0 80s

pod/dev-nginx-744568f6b4-tsk44 1/1 Running 0 80s

NAME DATA AGE

configmap/dev-nginx 1 80s

configmap/kube-root-ca.crt 1 31m

#

curl http://127.0.0.1:30000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>PRD : Nginx version 1.26.2</p>

</body>

</html>

open http://127.0.0.1:30000

- Modify Git (Gogs) and confirm that Argo CD reflects the change immediately

#

cd cicd-labs/ops-deploy/nginx-chart

#

sed -i -e "s|replicaCount: 2|replicaCount: 3|g" values-dev.yaml

git add values-dev.yaml && git commit -m "Modify nginx-chart : values-dev.yaml" && git push -u origin main

watch -d kubectl get all -n dev-nginx -o wide

#

sed -i -e "s|replicaCount: 3|replicaCount: 4|g" values-dev.yaml

git add values-dev.yaml && git commit -m "Modify nginx-chart : values-dev.yaml" && git push -u origin main

watch -d kubectl get all -n dev-nginx -o wide

#

sed -i -e "s|replicaCount: 4|replicaCount: 2|g" values-dev.yaml

git add values-dev.yaml && git commit -m "Modify nginx-chart : values-dev.yaml" && git push -u origin main

watch -d kubectl get all -n dev-nginx -o wide

- Delete the Argo CD App

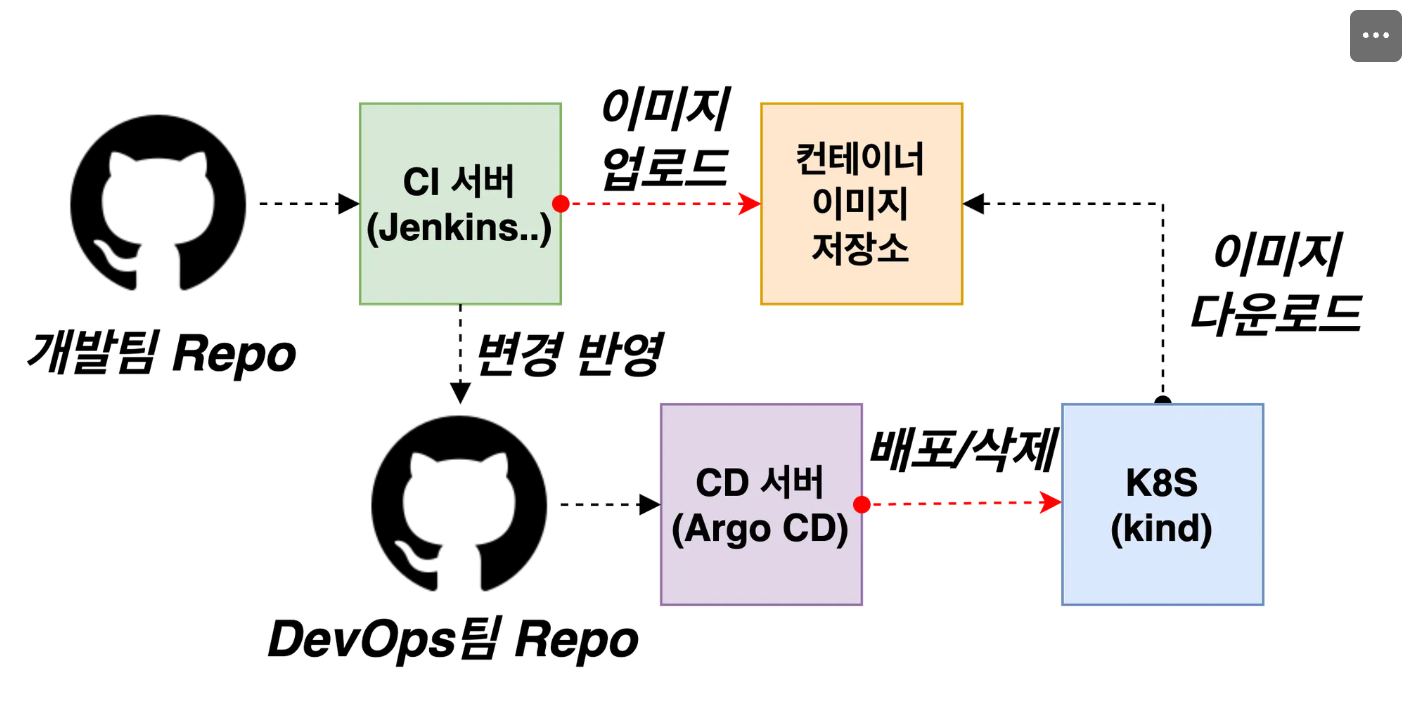

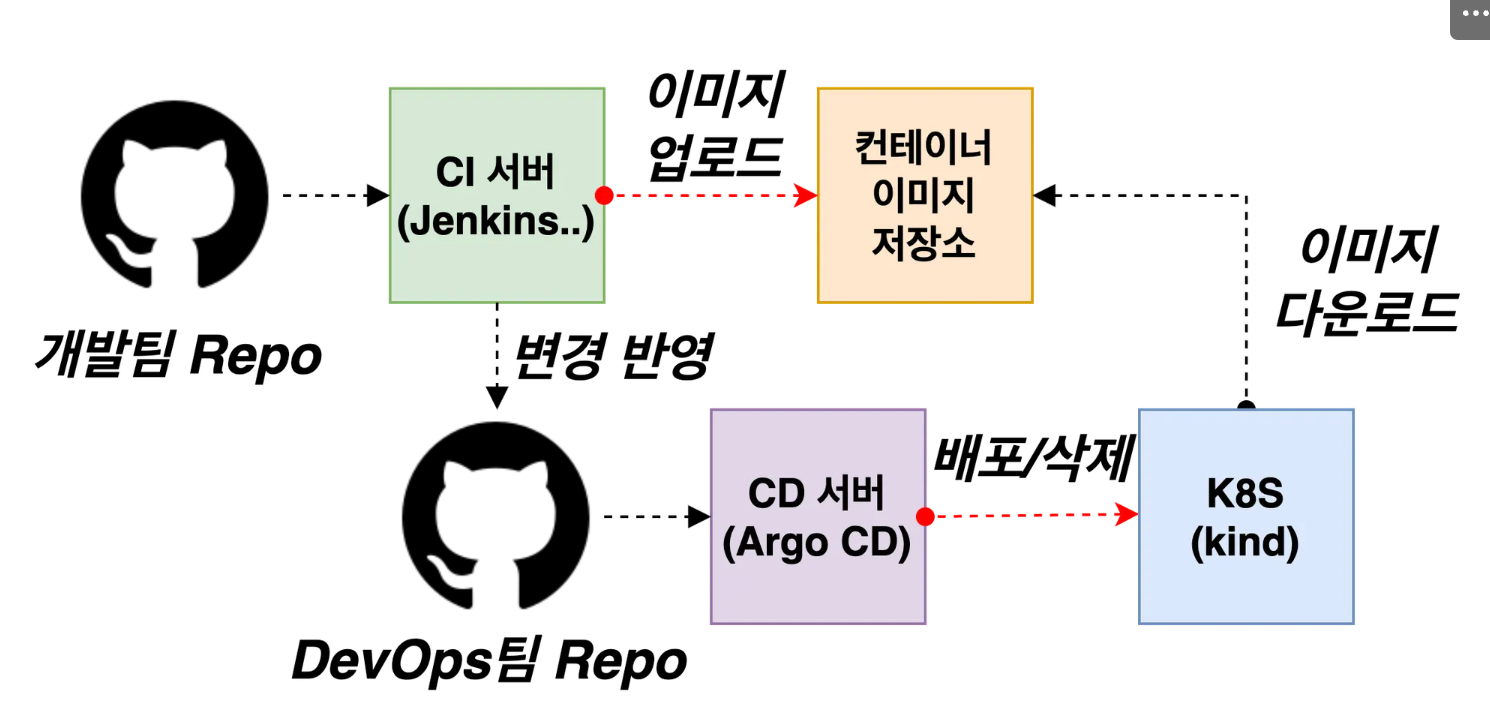

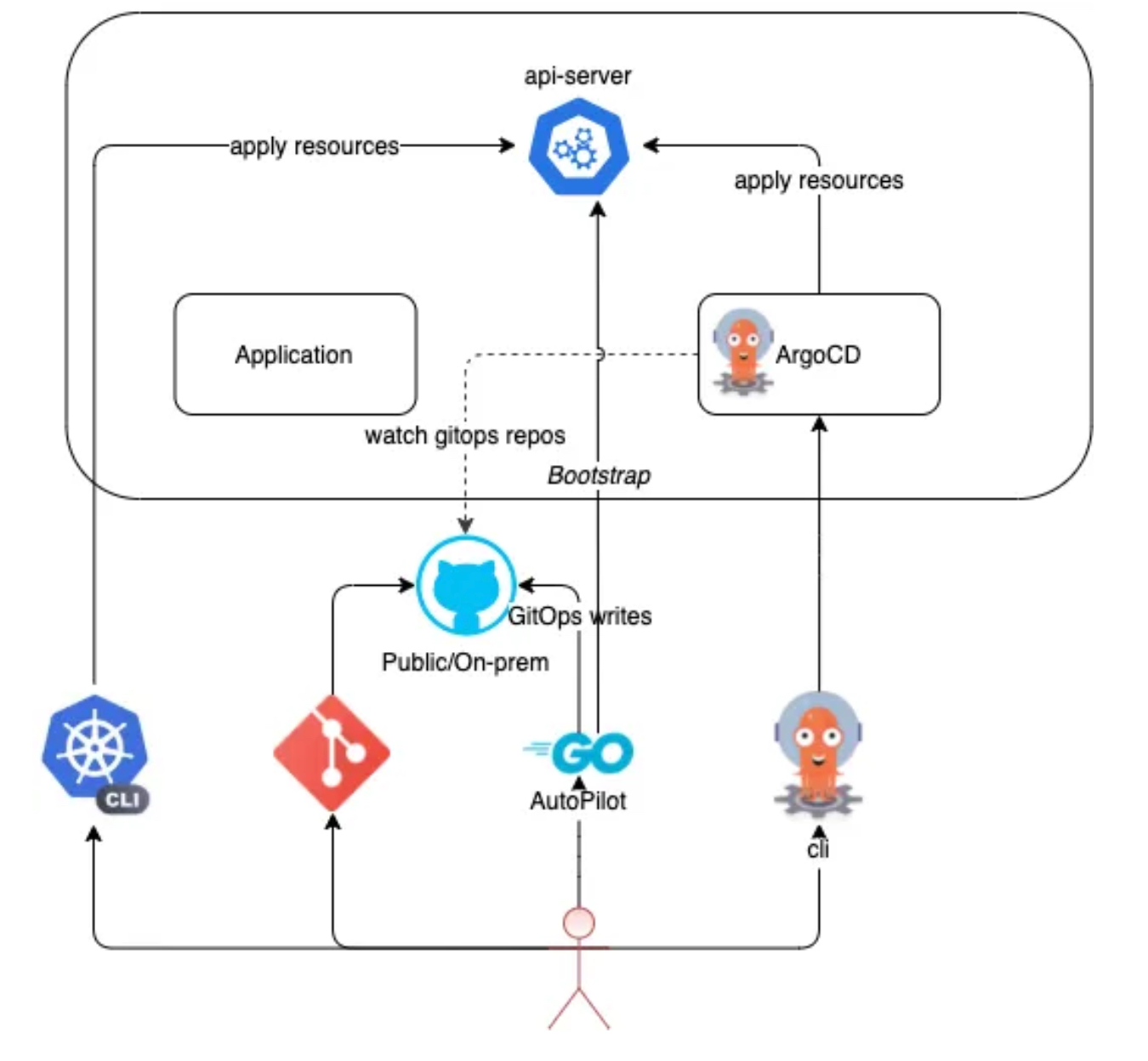

kubectl delete applications -n argocd dev-nginx

5. Jenkins CI + Argo CD + K8S

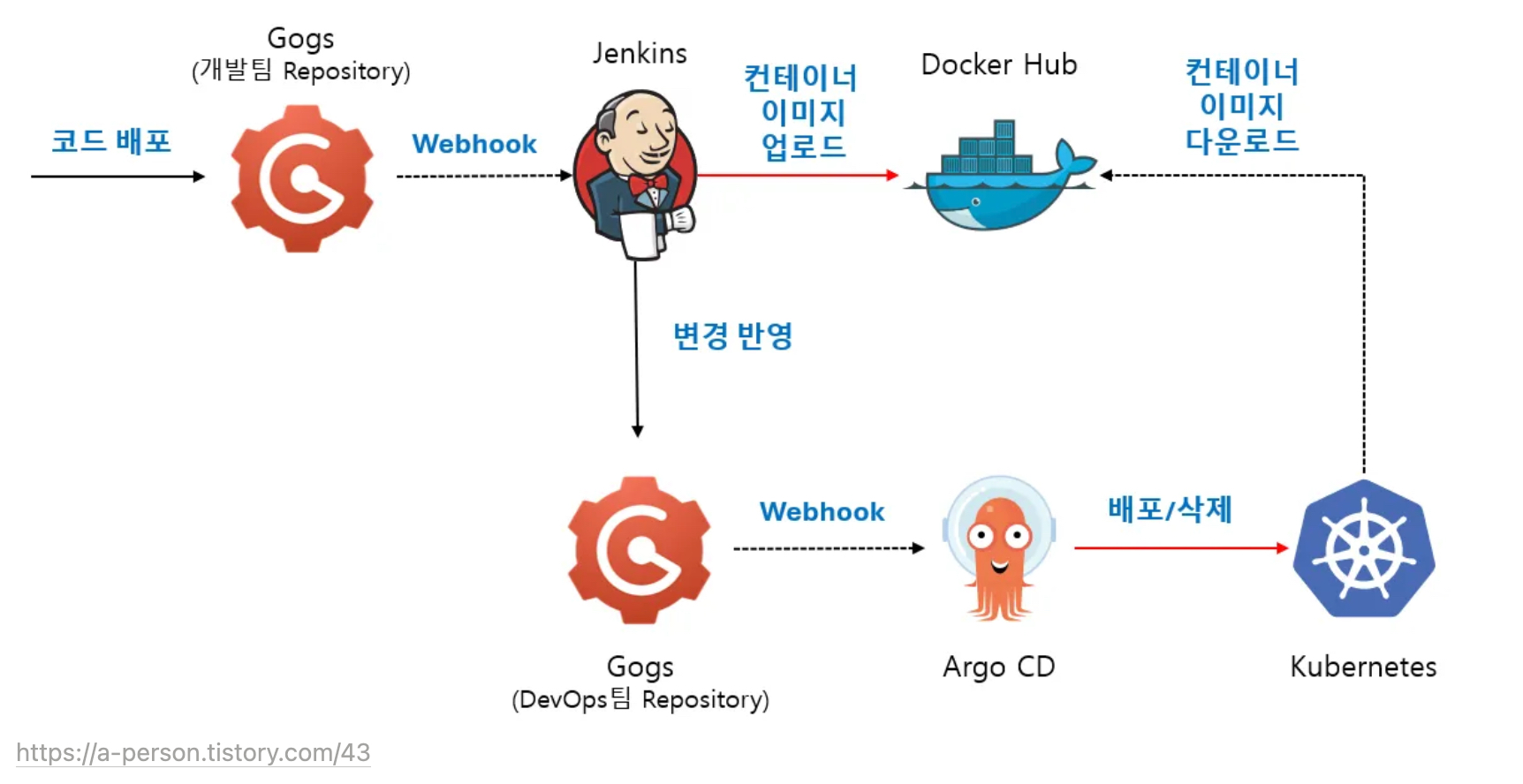

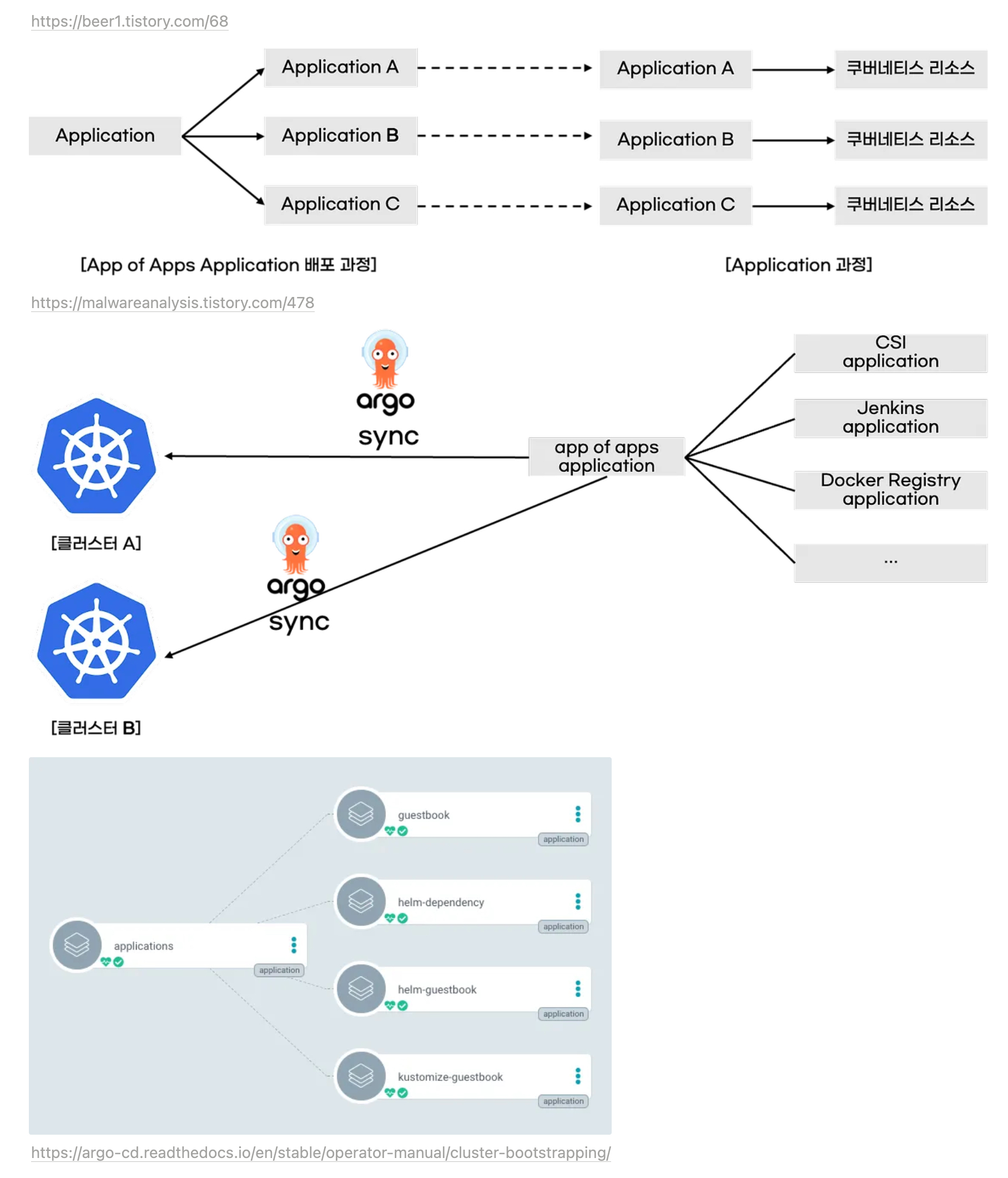

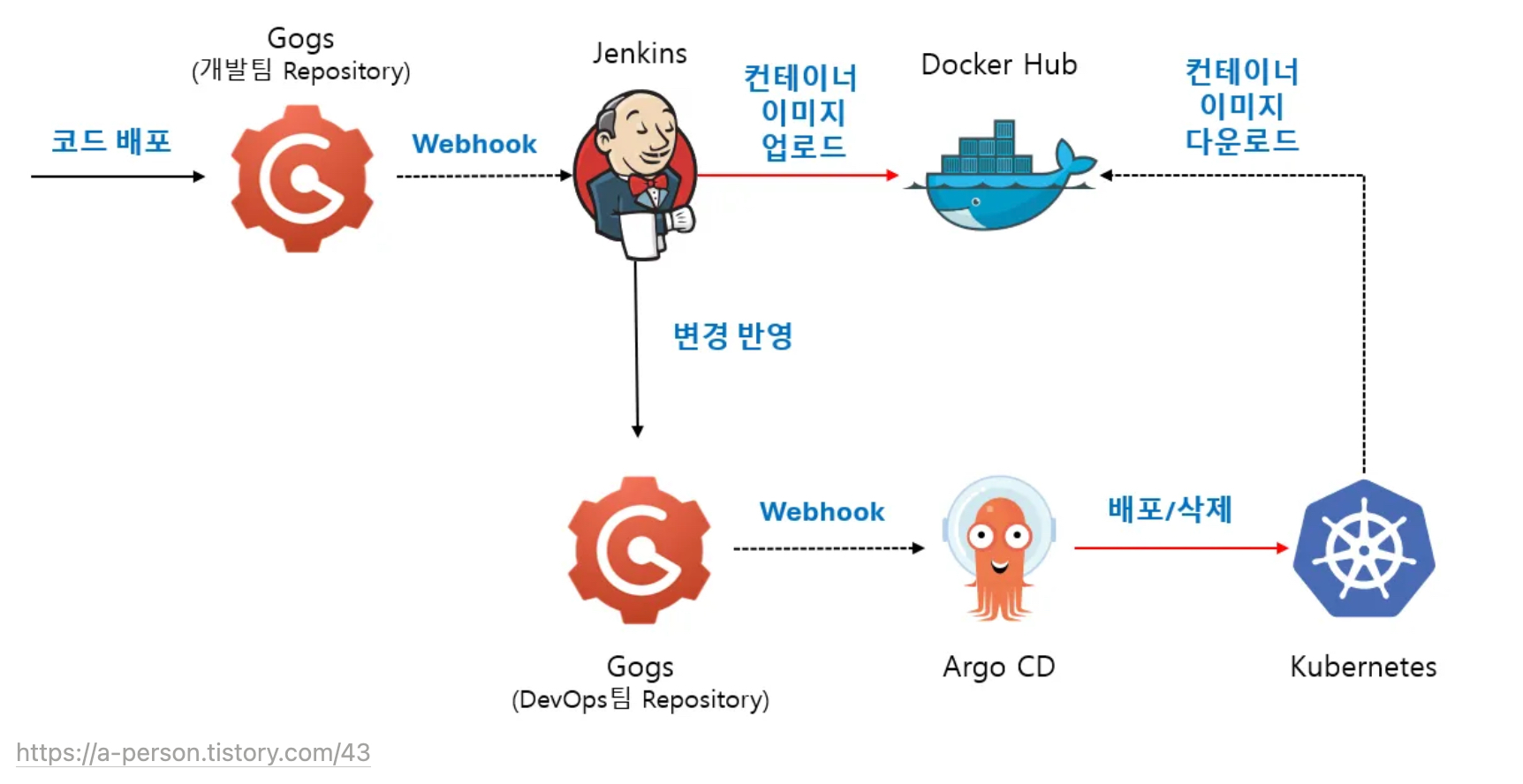

- Full CI/CD architecture diagram

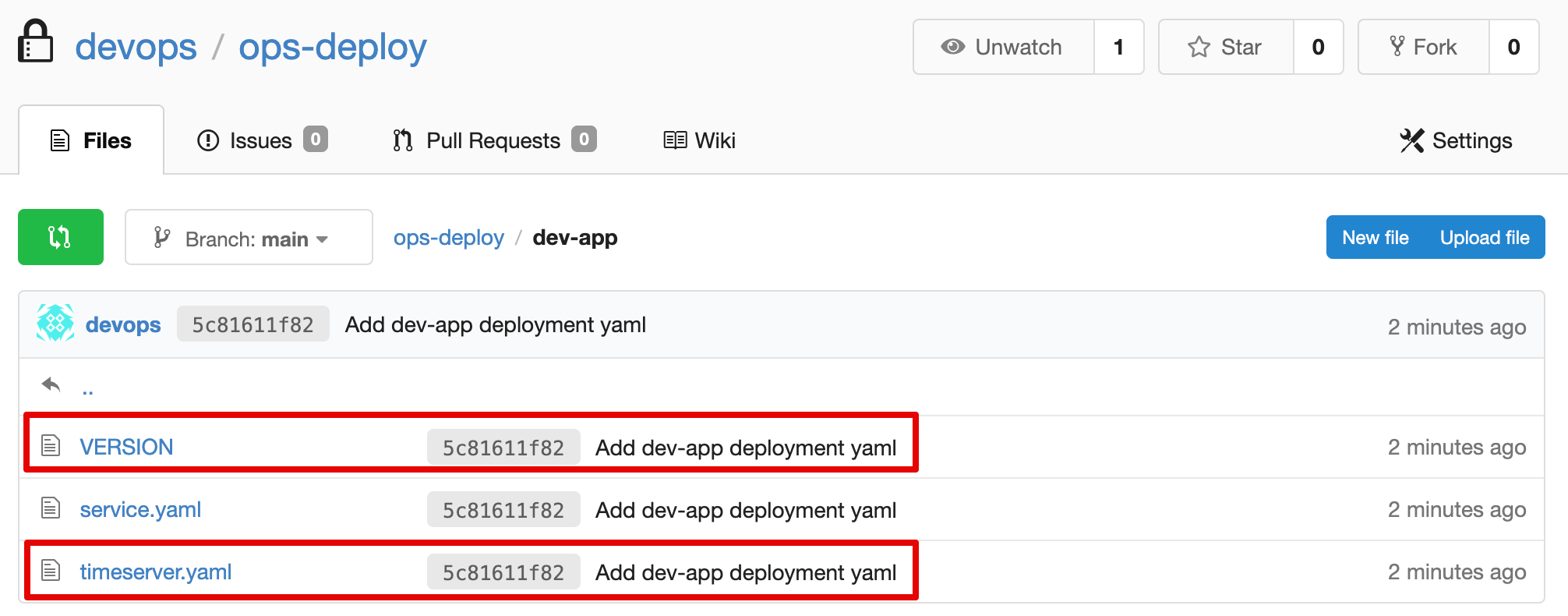

5.1 Base code work in Repo(ops-deploy)

#

cd ops-deploy

#

mkdir dev-app

# Docker Hub account

DHUSER=<Docker Hub account>

DHUSER=kimseongjung

# Version

VERSION=0.0.1

#

cat > dev-app/VERSION <<EOF

$VERSION

EOF

cat > dev-app/timeserver.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: timeserver
spec:
  replicas: 2
  selector:
    matchLabels:
      pod: timeserver-pod
  template:
    metadata:
      labels:
        pod: timeserver-pod
    spec:
      containers:
      - name: timeserver-container
        image: docker.io/$DHUSER/dev-app:$VERSION
        livenessProbe:
          initialDelaySeconds: 30
          periodSeconds: 30
          httpGet:
            path: /healthz
            port: 80
            scheme: HTTP
          timeoutSeconds: 5
          failureThreshold: 3
          successThreshold: 1
      imagePullSecrets:
      - name: dockerhub-secret
EOF

cat > dev-app/service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: timeserver
spec:
  selector:
    pod: timeserver-pod
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    nodePort: 30000
  type: NodePort
EOF

#

git add . && git commit -m "Add dev-app deployment yaml" && git push -u origin main

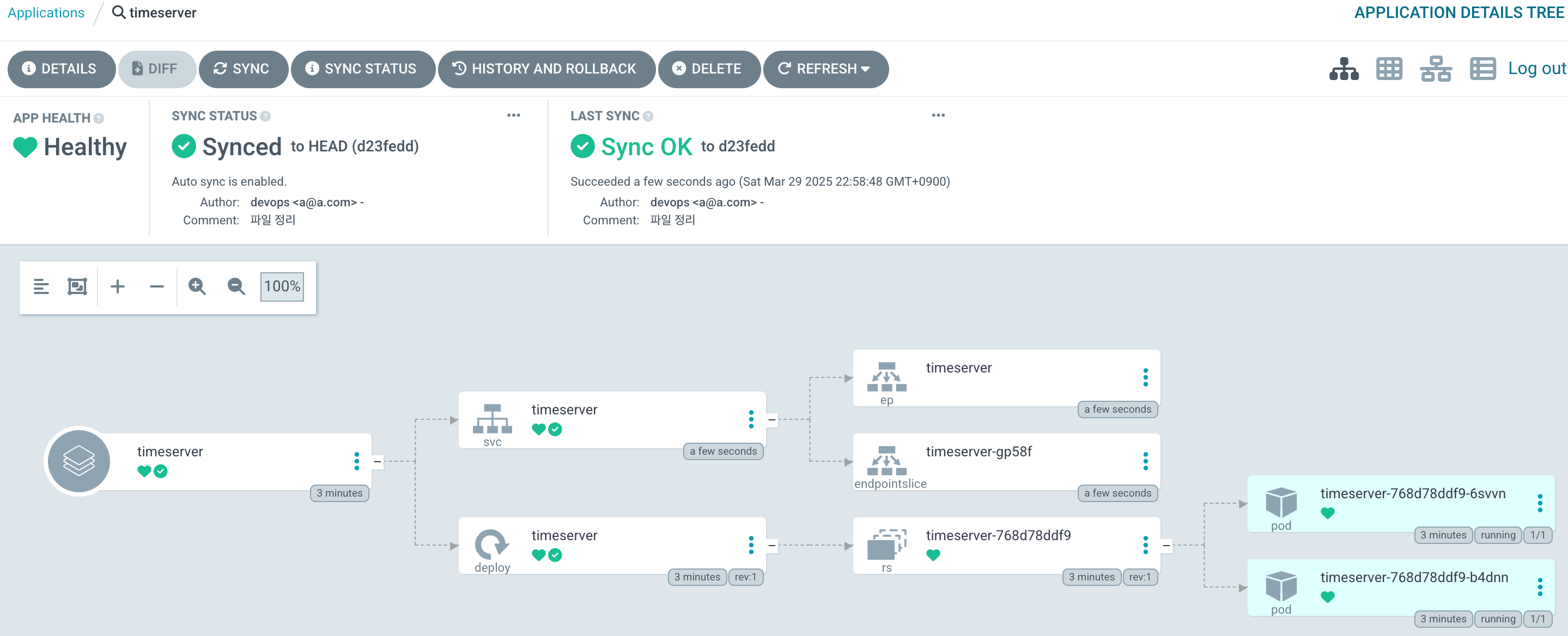
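Because the heredocs above use an unquoted `EOF`, `$DHUSER` and `$VERSION` are expanded at the moment the file is written. If either variable is unset, the manifest silently ends up with an image such as `docker.io//dev-app:`. The following is a minimal sketch of a check to run before `git push`; `check_image_ref` is a hypothetical helper name, and it assumes the manifest contains one `image:` line in the `docker.io/<user>/dev-app:<tag>` form used in this post.

```shell
#!/usr/bin/env bash
# Hypothetical pre-push check for the generated Deployment manifest.

# Usage: check_image_ref <manifest-file>
# Succeeds only if the first image line looks like docker.io/<user>/dev-app:<tag>
# with a non-empty user and a non-empty tag.
check_image_ref() {
  local file="$1" image
  image="$(sed -n 's/^[[:space:]]*image:[[:space:]]*//p' "$file" | head -n 1)"
  case "$image" in
    docker.io/?*/dev-app:?*)
      echo "ok: $image"
      ;;
    *)
      echo "suspicious image reference: '$image'" >&2
      return 1
      ;;
  esac
}

# Example (not run automatically):
#   check_image_ref dev-app/timeserver.yaml && git push -u origin main
```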

5.2 Create an Argo CD App that tracks Repo(ops-deploy)

#

echo $MyIP

cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: timeserver
  namespace: argocd
  finalizers:
  - resources-finalizer.argocd.argoproj.io
spec:
  project: default
  source:
    path: dev-app
    repoURL: http://$MyIP:3000/devops/ops-deploy
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
    - CreateNamespace=true
  destination:
    namespace: default
    server: https://kubernetes.default.svc
EOF

#

kubectl get applications -n argocd timeserver

kubectl get applications -n argocd timeserver -o yaml | kubectl neat

kubectl describe applications -n argocd timeserver

kubectl get deploy,rs,pod

kubectl get svc,ep timeserver

#

curl http://127.0.0.1:30000

curl http://127.0.0.1:30000/healthz

open http://127.0.0.1:30000

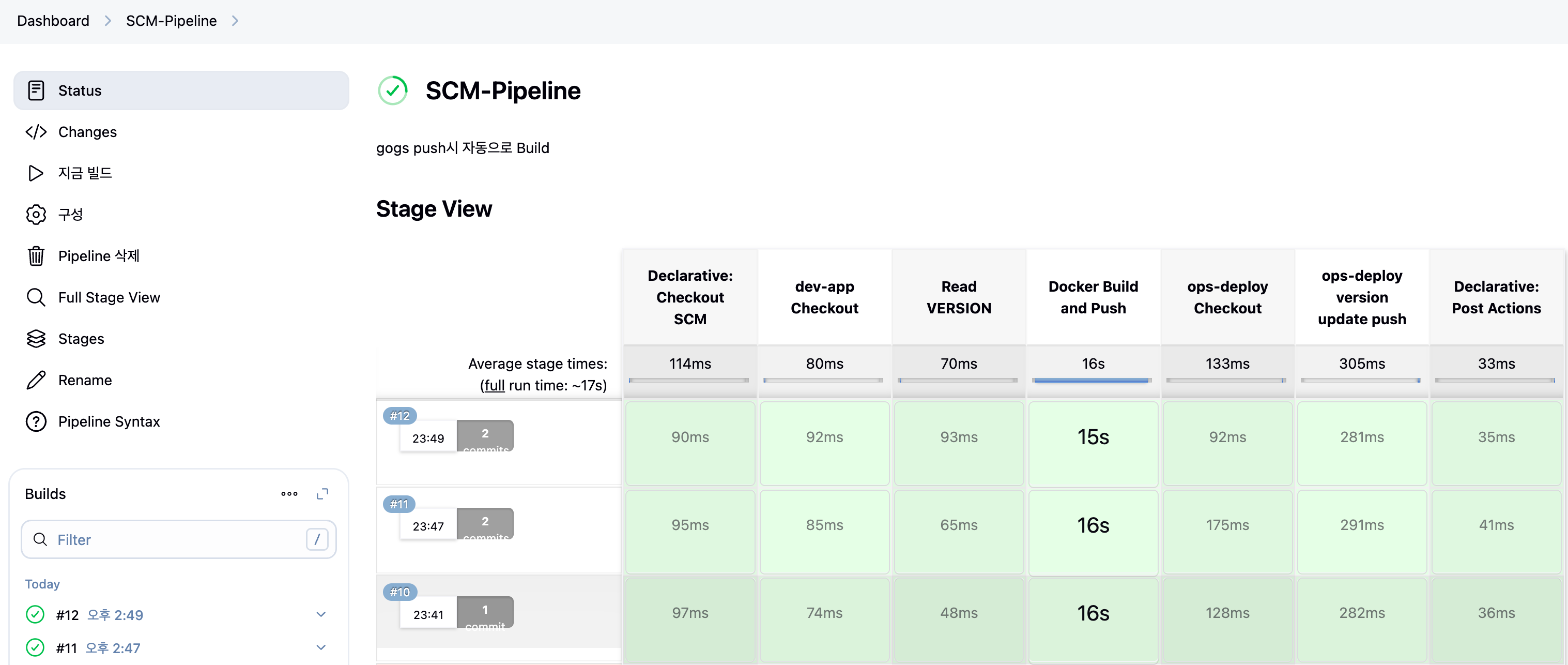
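To confirm that the running Deployment really uses the tag recorded in `dev-app/VERSION`, the two values can be compared directly. The following is a rough sketch: `image_tag` and `check_deployed_version` are hypothetical helper names, and the tag extraction assumes the image reference always carries a tag, as it does in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing the running image tag with dev-app/VERSION.

# Print the tag part of an image reference (the text after the last colon).
# Assumes the reference includes a tag, e.g. docker.io/user/dev-app:0.0.1.
image_tag() {
  printf '%s\n' "${1##*:}"
}

# Usage: check_deployed_version <version-file> [deployment] [namespace]
check_deployed_version() {
  local want image have
  want="$(tr -d '[:space:]' < "$1")"
  image="$(kubectl get deploy "${2:-timeserver}" -n "${3:-default}" \
    -o jsonpath='{.spec.template.spec.containers[0].image}')"
  have="$(image_tag "$image")"
  if [ "$have" = "$want" ]; then
    echo "deployed tag matches VERSION: $have"
  else
    echo "deployed tag '$have' differs from VERSION '$want'" >&2
    return 1
  fi
}

# Example (not run automatically), from the ops-deploy directory:
#   check_deployed_version dev-app/VERSION
```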

5.3 Code work in Repo(dev-app)

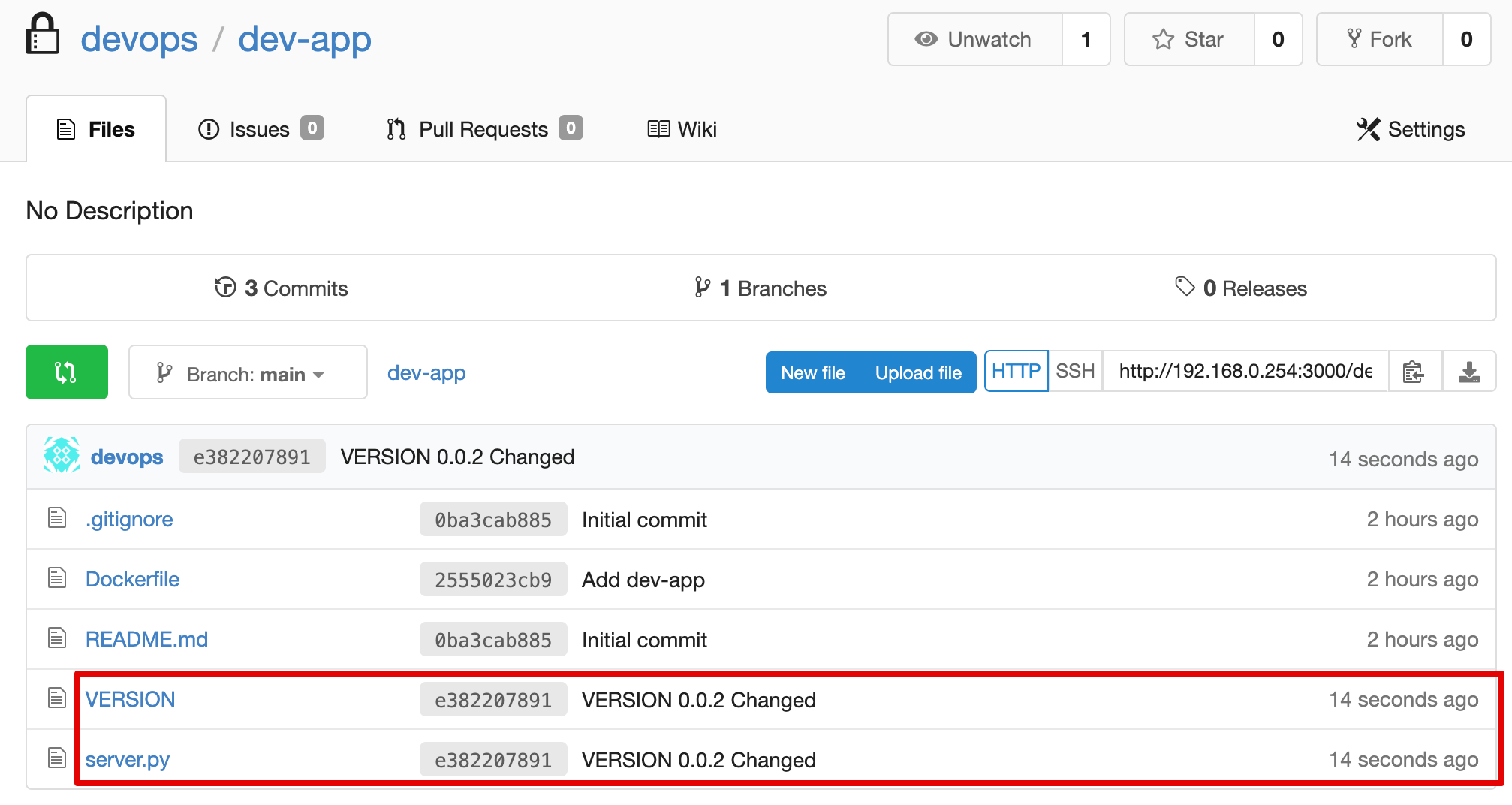

- When VERSION is updated in the dev-app Repo → add a step that updates the version information in the files under dev-app in the ops-deploy Repo

  - Read the current version from the VERSION file into a variable : OLDVER=$(cat dev-app/VERSION)

  - Read the new version from the Docker tag set in the pipeline environment into a variable : NEWVER=$(echo ${DOCKER_TAG})

  - Then use sed to replace the 'old version' with the 'new version' in two files of the ops-deploy Repo : dev-app/VERSION and timeserver.yaml

  - Then git push to the ops-deploy Repo ⇒ the Argo CD App is triggered and AutoSync rolls out the new version
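The version-replacement steps above can be tried locally before they go into the pipeline. The following is a sketch, not the pipeline's actual code: `escape_pattern`, `escape_replacement`, and `bump_version` are hypothetical helper names. Unlike a plain `s/$OLDVER/$NEWVER/`, it escapes the dots in the old version so that `0.0.2` cannot match text such as `0a0b2`, and it writes through a temporary file so it behaves the same with GNU and BSD sed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers sketching the OLDVER -> NEWVER replacement step.

# Escape characters that are special in a sed search pattern (e.g. the dots in 0.0.2).
escape_pattern() {
  printf '%s' "$1" | sed -e 's|[][\.*^$/]|\\&|g'
}

# Escape characters that are special in a sed replacement string.
escape_replacement() {
  printf '%s' "$1" | sed -e 's|[&/\\]|\\&|g'
}

# Usage: bump_version <dir> <new-version>
# Reads the old version from <dir>/VERSION, then rewrites VERSION and timeserver.yaml.
bump_version() {
  local dir="$1" newver="$2" oldver pat rep f
  oldver="$(tr -d '[:space:]' < "$dir/VERSION")"
  if [ -z "$oldver" ]; then
    echo "empty VERSION file" >&2
    return 1
  fi
  if [ "$oldver" = "$newver" ]; then
    echo "version unchanged: $newver" >&2
    return 0
  fi
  pat="$(escape_pattern "$oldver")"
  rep="$(escape_replacement "$newver")"
  for f in "$dir/VERSION" "$dir/timeserver.yaml"; do
    sed -e "s/$pat/$rep/g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Example (not run automatically), from the ops-deploy directory:
#   bump_version dev-app 0.0.3
```

Returning early when the version is unchanged also avoids an empty `git commit`, which would otherwise exit with an error.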

- The Jenkinsfile below lives in the dev-app Repo and is already used by Jenkins as an SCM Pipeline (SCM: git); it is modified here for this lab

pipeline {
    agent any
    environment {
        DOCKER_IMAGE = 'kimseongjung/dev-app' // Docker image name
        GOGSCRD = credentials('gogs-crd')
    }
    stages {
        stage('dev-app Checkout') {
            steps {
                git branch: 'main',
                    url: 'http://192.168.0.254:3000/devops/dev-app.git',  // Check out the code from Git
                    credentialsId: 'gogs-crd'  // Credentials ID
            }
        }
        stage('Read VERSION') {
            steps {
                script {
                    // Read the VERSION file
                    def version = readFile('VERSION').trim()
                    echo "Version found: ${version}"
                    // Set the environment variable
                    env.DOCKER_TAG = version
                }
            }
        }
        stage('Docker Build and Push') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-crd') {
                        // Use DOCKER_TAG
                        def appImage = docker.build("${DOCKER_IMAGE}:${DOCKER_TAG}")
                        appImage.push()
                        appImage.push("latest")
                    }
                }
            }
        }
        stage('ops-deploy Checkout') {
            steps {
                git branch: 'main',
                    url: 'http://192.168.0.254:3000/devops/ops-deploy.git',  // Check out the code from Git
                    credentialsId: 'gogs-crd'  // Credentials ID
            }
        }
        stage('ops-deploy version update push') {
            steps {
                sh '''
                OLDVER=$(cat dev-app/VERSION)
                NEWVER=$(echo ${DOCKER_TAG})
                sed -i -e "s/$OLDVER/$NEWVER/" dev-app/timeserver.yaml
                sed -i -e "s/$OLDVER/$NEWVER/" dev-app/VERSION
                git add ./dev-app
                git config user.name "devops"
                git config user.email "a@a.com"
                git commit -m "version update ${DOCKER_TAG}"
                git push http://${GOGSCRD_USR}:${GOGSCRD_PSW}@192.168.0.254:3000/devops/ops-deploy.git
                '''
            }
        }
    }
    post {
        success {
            echo "Docker image ${DOCKER_IMAGE}:${DOCKER_TAG} has been built and pushed successfully!"
        }
        failure {
            echo "Pipeline failed. Please check the logs."
        }
    }
}

- Below, git push is performed from the dev-app Repo

# [Terminal] Monitor the rollout

while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; echo ; kubectl get deploy timeserver -owide; echo "------------" ; sleep 1 ; done

#

cd cicd-labs/dev-app

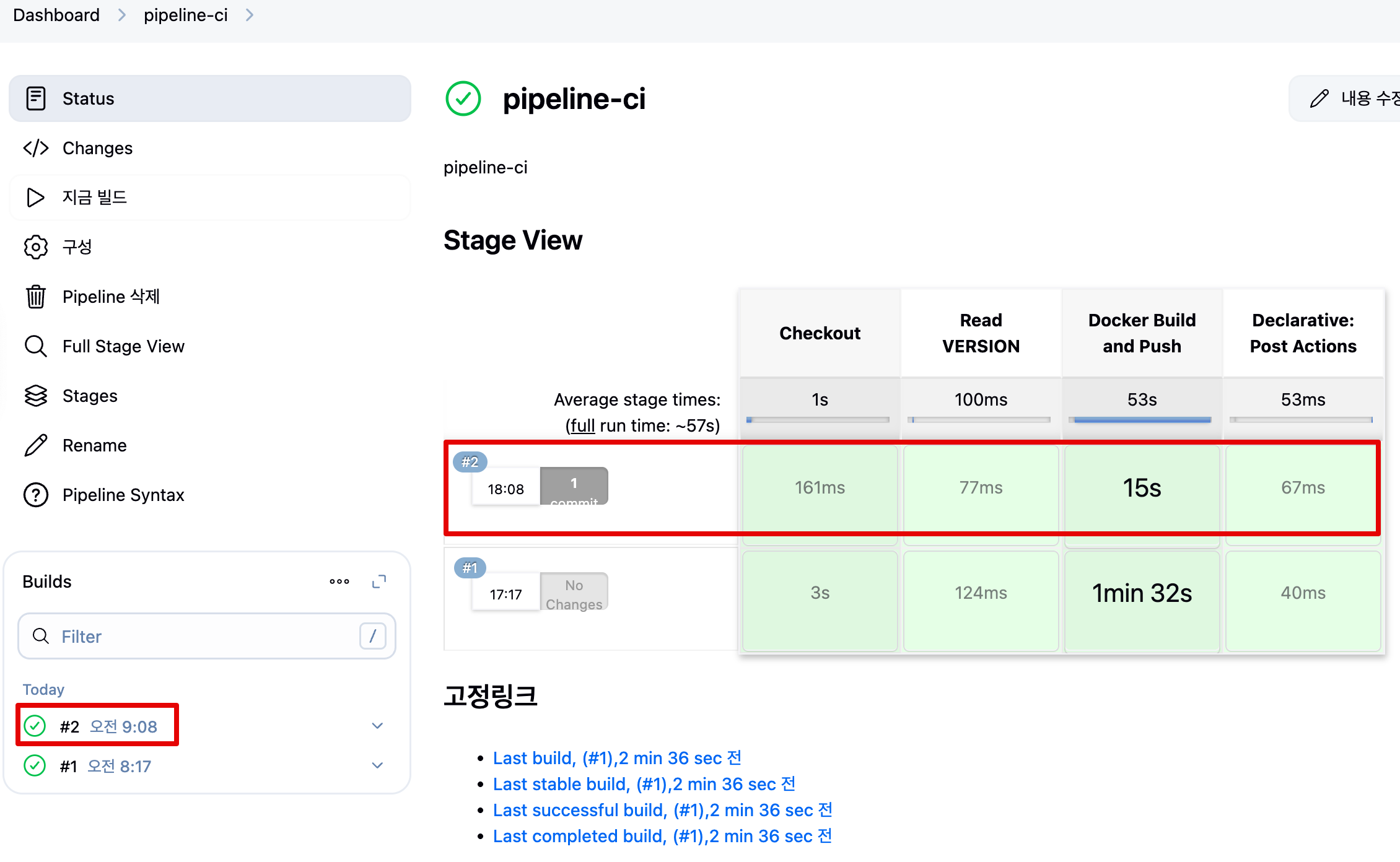

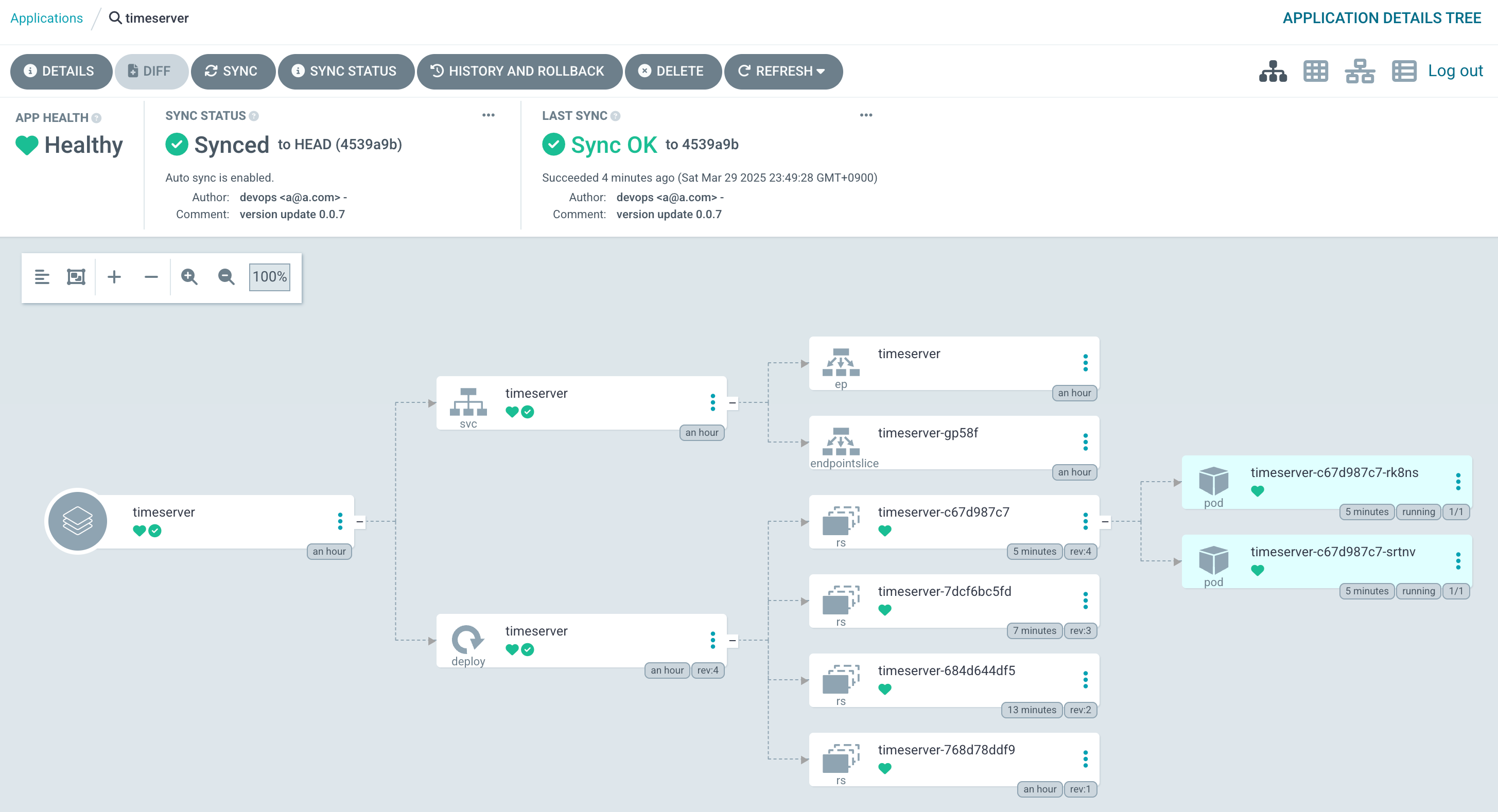

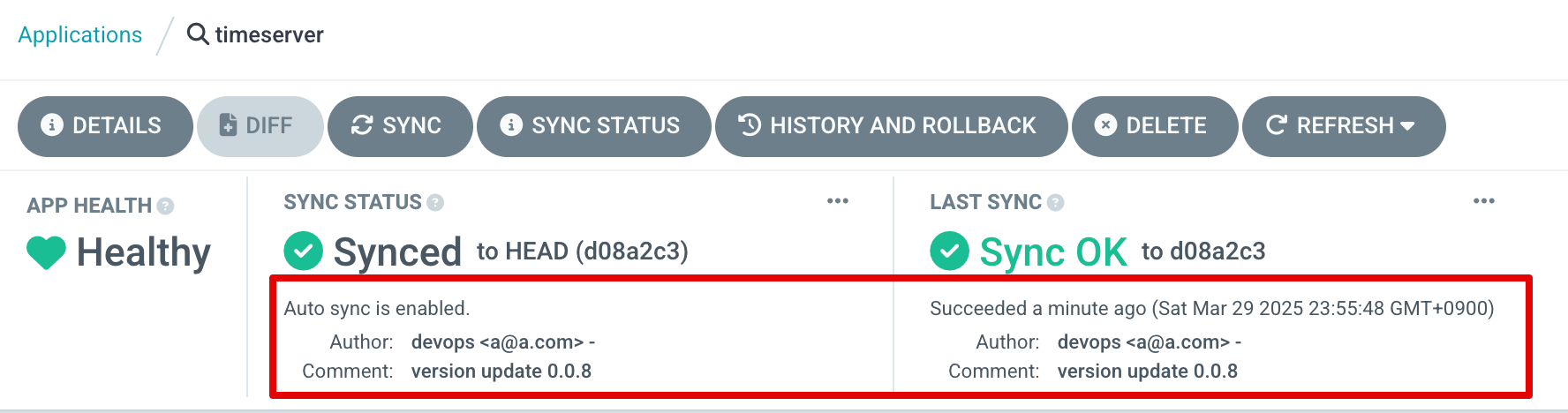

# Update the VERSION file : 0.0.3