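The history of NLP tasks and architectures can fairly be split into before and after the Transformer, so I think the Transformer and self-attention are must-know material. While writing this post, I studied the Transformer step by step once more and organized my notes.

According to the paper, this work was also carried out at Google Brain and Google Research.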

0. Abstract

- The dominant sequence models are based on RNNs or CNNs that include an Encoder and a Decoder.

- The best-performing models (at the time) connect the Encoder and Decoder through an attention mechanism => the Seq2Seq+Attention model

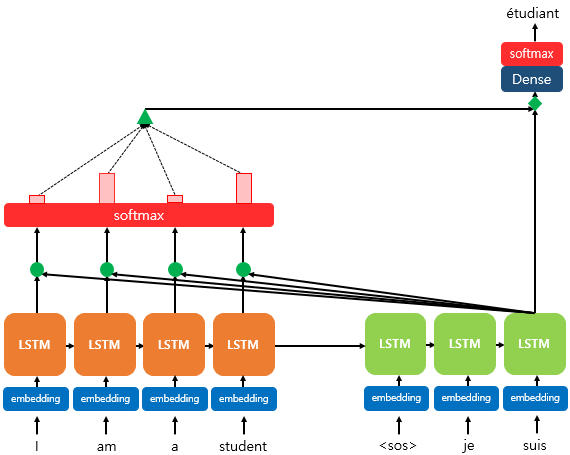

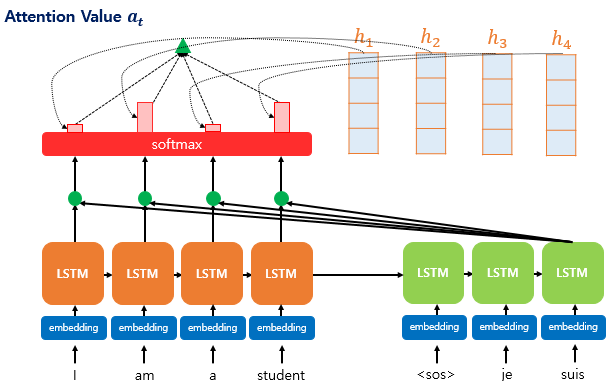

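The lower figure shows the Seq2Seq+Attention model!

The paper proposes the Transformer, a new, simple network architecture based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.

=> Starting from the Seq2Seq+Attention model, which used an RNN together with attention, the RNN is stripped out and only attention operations remain.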

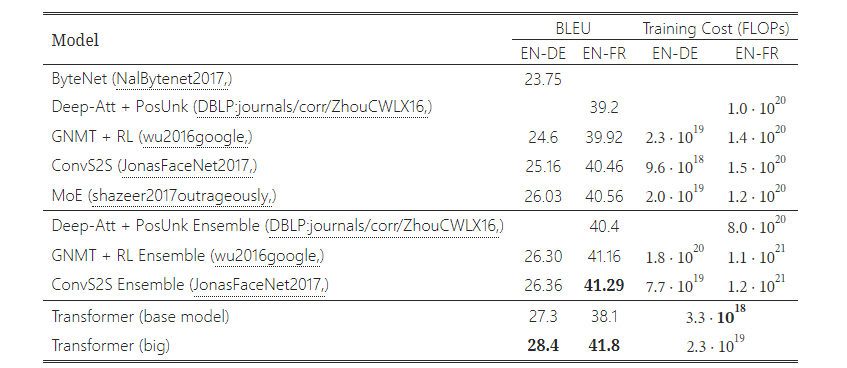

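- Experimental results on machine translation tasks

: The model is superior in quality while being more parallelizable and requiring significantly less time to train.

[WMT 2014 English-to-German translation task] - Achieves 28.4 BLEU (improving over the existing best results, including ensembles, by over 2 BLEU)

[WMT 2014 English-to-French translation task] - Establishes a new single-model state-of-the-art BLEU score of 41.8 after training for 3.5 days on eight GPUs

: The Transformer generalizes well to other tasks, as shown by applying it successfully to English constituency parsing with both large and limited training data.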

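🤔 What is Attention?

In a plain Seq2Seq model, the encoder tries to compress all the information in the sequence into a fixed-size representation, which causes information loss. The Attention model was proposed to compensate for this problem.

With attention, the decoder does not simply receive the encoder's compressed information and emit the predicted sequence. Instead, at every output time step the decoder looks over the entire input sentence in the encoder once more. The decoder does not treat every part of the encoder's input sequence with the same weight; it assigns larger weights to the important words. In other words, it focuses on the important words in the encoder and passes them directly to the decoder.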

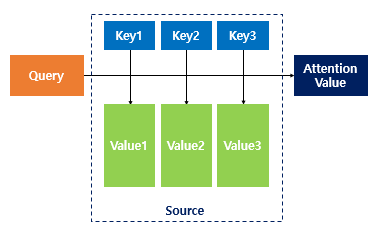

Attention(Q, K, V) = Attention value

Value += similarity(Q, K)

=> Attention value

"Given a 'Query', the attention function computes its similarity to every 'Key'. It then applies each similarity to the 'Value' mapped to that key, and returns the sum of all the similarity-weighted 'Values'. This result is called the Attention Value."

In short: for a Query, compute the similarity to every Key and apply that similarity to the Value mapped to each Key.

Summing all of the weighted Values gives the attention value.

➕ (ex) How the Seq2Seq + Attention model works

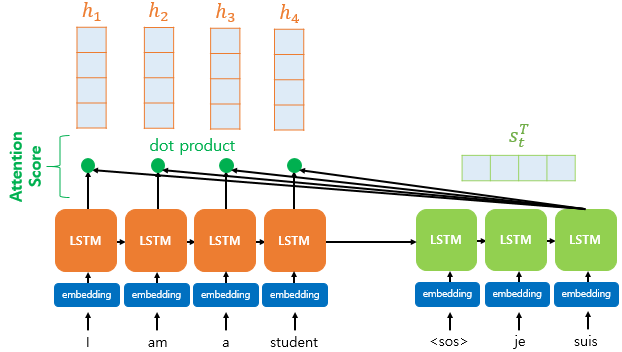

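There are many attention variants. To build intuition for the most basic attention concept, I will walk through a model that uses dot-product attention.

Q (Query): the decoder hidden state at time step t ($s_t$)

K (Key): the encoder hidden states at all time steps ($h_1, \dots, h_N$)

V (Value): the encoder hidden states at all time steps

(The whole process is shown below.)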

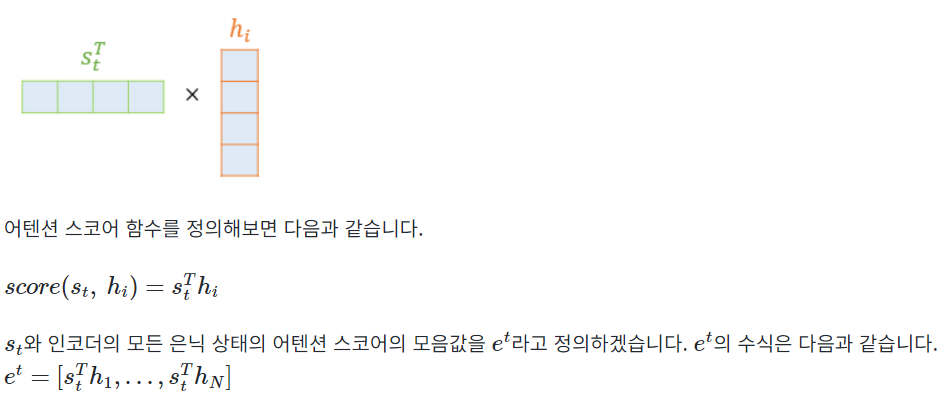

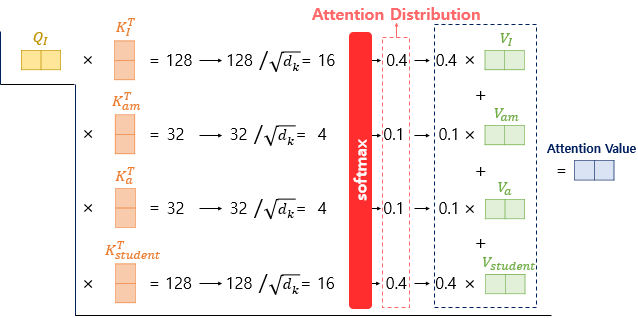

Step1) Compute the attention scores

In dot-product attention, each score is obtained by transposing the decoder hidden state $s_t$ and taking its dot product with each encoder hidden state $h_i$. Every attention score is therefore a scalar.

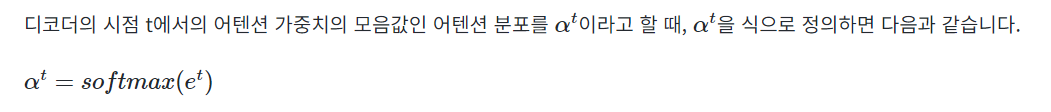

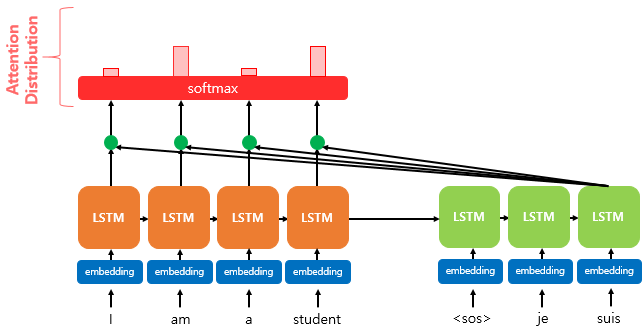

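Step2) Compute the Attention Distribution with the softmax function

Applying the softmax function to the attention scores yields a probability distribution whose values sum to 1. This is called the Attention Distribution, and each individual value is called an Attention Weight. For example, suppose the attention weights that softmax produces for I, am, a, student are 0.1, 0.4, 0.1, and 0.4, respectively. They sum to 1. The figure visualizes the attention weight at each encoder hidden state as the size of a rectangle: the larger the attention weight, the larger the rectangle.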

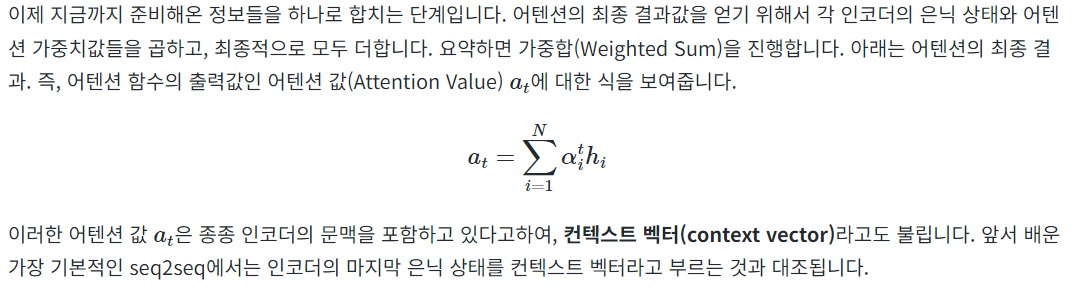

Step3) Compute the Attention Value as the weighted sum of the encoder hidden states, using the attention weights

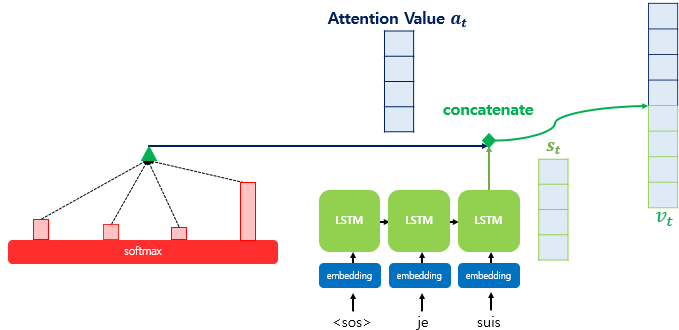

Step4) Concatenate the attention value with the decoder hidden state at time step t

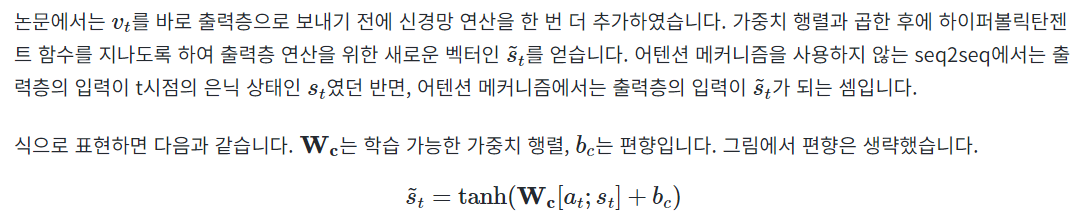

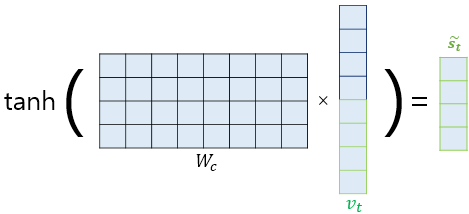

Step5) Compute $\tilde{s}_t$, which becomes the input to the output layer

Step6) Use $\tilde{s}_t$ as the input to the output layer

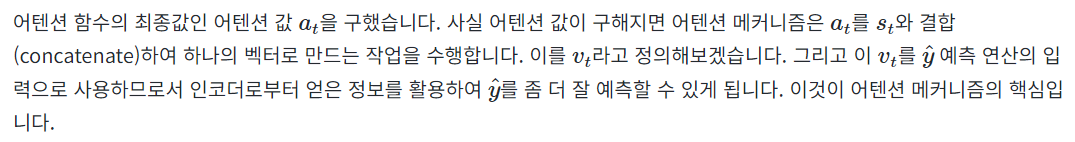

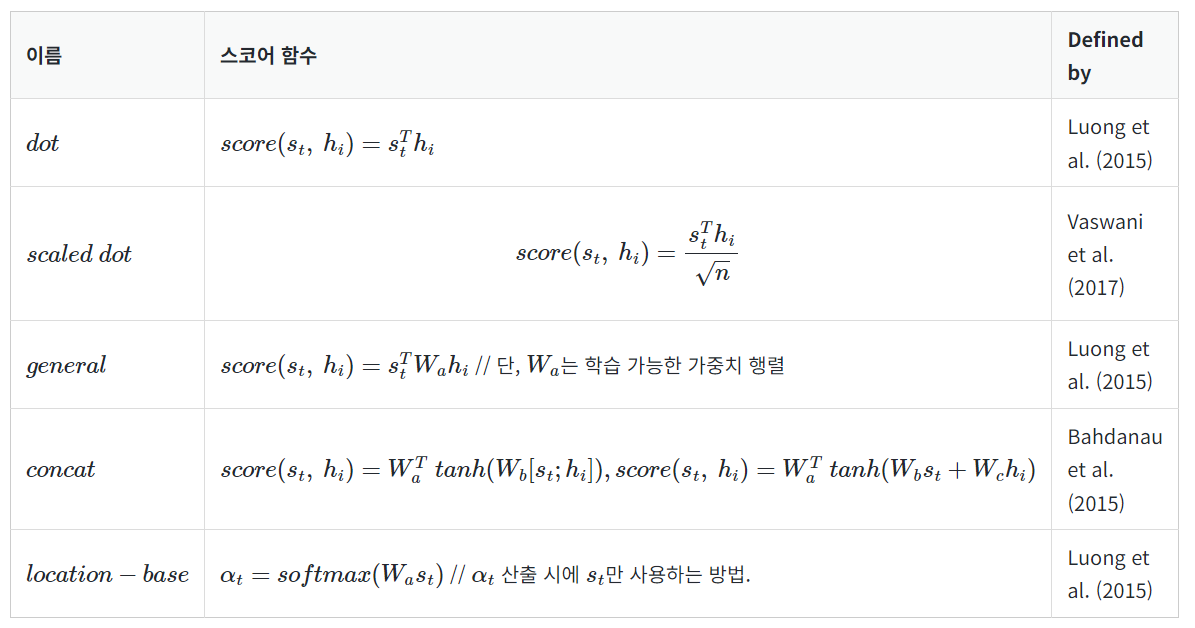
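The steps above can be sketched in a few lines of NumPy. This is only a minimal illustration under assumptions: the names `s_t`, `H`, `W_c`, and `b_c` are hypothetical, and the `tanh` projection used for Step 5 follows the common Luong-style formulation rather than anything stated in the text.

```python
import numpy as np

def softmax(x):
    # Subtract the max for numerical stability before exponentiating.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def dot_product_attention_step(s_t, H, W_c, b_c):
    """One decoder time step of Seq2Seq + dot-product attention.

    s_t: (d,) decoder hidden state, H: (N, d) encoder hidden states.
    W_c, b_c: assumed projection parameters for Step 5.
    """
    scores = H @ s_t                      # Step 1: one scalar score per encoder state
    weights = softmax(scores)             # Step 2: attention distribution (sums to 1)
    a_t = weights @ H                     # Step 3: attention value (weighted sum)
    v_t = np.concatenate([a_t, s_t])      # Step 4: concatenate with the decoder state
    s_tilde = np.tanh(W_c @ v_t + b_c)    # Step 5: input to the output layer (assumed form)
    return weights, a_t, s_tilde

rng = np.random.default_rng(0)
N, d = 4, 3                               # e.g. the 4 tokens "I am a student"
H = rng.normal(size=(N, d))
s_t = rng.normal(size=d)
W_c = rng.normal(size=(d, 2 * d))
b_c = np.zeros(d)
weights, a_t, s_tilde = dot_product_attention_step(s_t, H, W_c, b_c)
```

Here the encoder hidden states play the role of both keys and values, which matches the Q/K/V description given earlier for this model.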

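Different kinds of Attention

Several kinds of attention can be used in the Seq2Seq + Attention model, and what distinguishes dot-product attention from the others is the intermediate formula, that is, the attention score function. The attention above is called dot-product attention because the attention score is computed with a dot product.

Several ways of computing the attention score have been proposed; commonly cited score functions include dot, scaled dot, general, and concat.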
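For reference, commonly cited attention score functions can be written as below. This is a sketch in assumed notation: $s_t$ is the decoder hidden state at time step $t$, $h_i$ is the $i$-th encoder hidden state, $n$ is the hidden dimension, and $W_a$ and $v_a$ are learned parameters. None of these symbols are fixed by the text above.

$$
\begin{aligned}
\text{dot:} \quad & \mathrm{score}(s_t, h_i) = s_t^{\top} h_i \\
\text{scaled dot:} \quad & \mathrm{score}(s_t, h_i) = \frac{s_t^{\top} h_i}{\sqrt{n}} \\
\text{general:} \quad & \mathrm{score}(s_t, h_i) = s_t^{\top} W_a h_i \\
\text{concat:} \quad & \mathrm{score}(s_t, h_i) = v_a^{\top} \tanh\left(W_a [s_t; h_i]\right)
\end{aligned}
$$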

1. Introduction

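Recurrent models typically factor computation along the symbol positions of the input and output sequences. Aligning the positions to steps in computation time, they generate a sequence of hidden states $h_t$ as a function of the previous hidden state $h_{t-1}$ and the input at position $t$.

This inherently sequential nature precludes parallelization within a training example, which becomes critical at longer sequence lengths because memory constraints limit batching across examples.

❗ Attention mechanisms have become an integral part of compelling sequence modeling and transduction models in various tasks, allowing dependencies to be modeled regardless of their distance in the input or output sequences. In all but a few cases, however, such attention mechanisms are used together with an RNN.

❗ This work proposes the Transformer, a model architecture that eschews recurrence and instead relies entirely on an attention mechanism to draw global dependencies between input and output.

❗ The Transformer allows for significantly more parallelization and can reach a new state of the art in translation quality after being trained for as little as 12 hours on eight P100 GPUs.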

2. Background

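- To reduce sequential computation, the Extended Neural GPU, ByteNet, and ConvS2S models were proposed. All of them use convolutional neural networks as the basic building block and compute hidden representations in parallel for all input and output positions.

=> However, this makes it harder to learn dependencies between distant positions.

=> The number of operations required to relate signals from two arbitrary input or output positions grows with the distance between the positions: linearly for ConvS2S and logarithmically for ByteNet.

- In the Transformer this is reduced to a constant number of operations, at the cost of reduced effective resolution due to averaging attention-weighted positions. Multi-Head Attention counteracts this effect.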

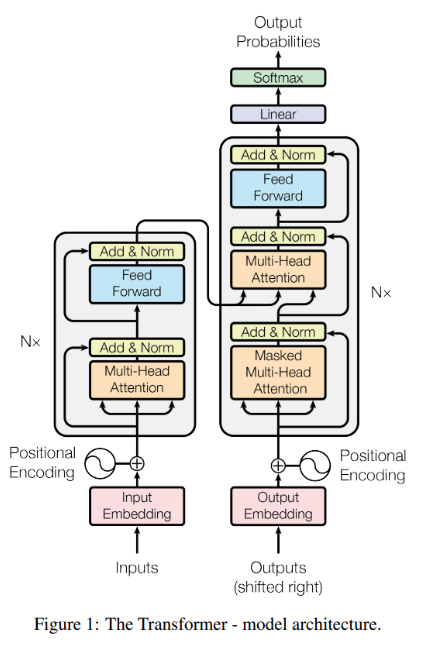

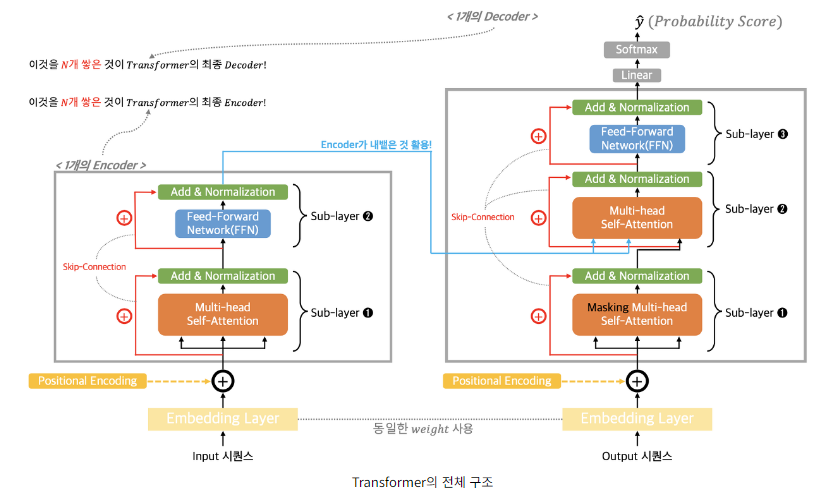

3. Model Architecture

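The Transformer follows an overall architecture that uses stacked self-attention and point-wise, fully connected layers for both the encoder and the decoder, shown in the left and right halves of the figure, respectively.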

3.1. Encoder and Decoder Stacks

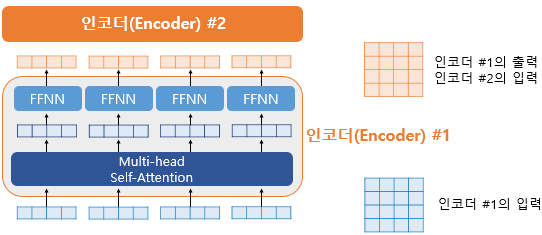

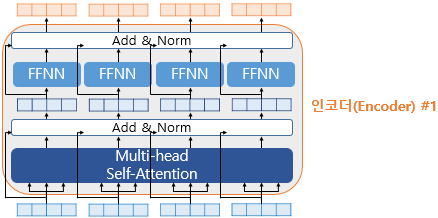

✅ Encoder

- A stack of N = 6 identical layers

- Each layer consists of two sub-layers.

🔸 The first is a Multi-Head self-attention mechanism

(Multi-Head self-attention means that self-attention is run several times in parallel)

🔸 The second is a position-wise fully connected feed-forward network

- A residual connection is used around each of the two sub-layers, followed by layer normalization: the output of each sub-layer is LayerNorm(x + Sublayer(x)), where Sublayer(x) is the function implemented by the sub-layer itself.

- To facilitate these residual connections, all sub-layers in the model, as well as the embedding layers, produce outputs of dimension $d_{model}$ = 512.

✅ Decoder

- A stack of N = 6 identical layers

- In addition to the two sub-layers found in each encoder layer, there is a sub-layer that performs Multi-Head attention over the Encoder output

- As in the Encoder, a residual connection is used around each sub-layer, followed by LayerNorm

- The self-attention sub-layer is masked (Masked Multi-Head attention) so that later positions are not used when predicting earlier ones: the predictions for position $i$ can depend only on the known outputs at positions less than $i$

3.2. Attention 💡

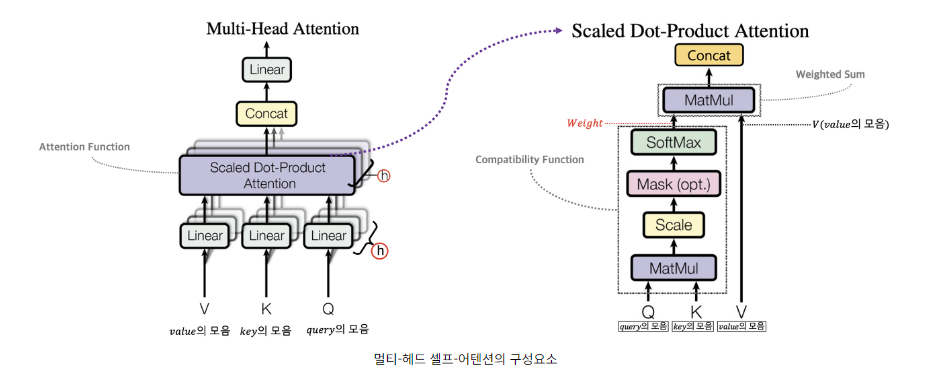

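An attention function can be described as mapping a Query and a set of key-value pairs to an output.

❗ The Query, Keys, Values, and Output are all vectors!

❗ The Output is computed as a weighted sum of the Values!

❗ The weight assigned to each Value is computed by a compatibility function of the Query with the corresponding Key!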

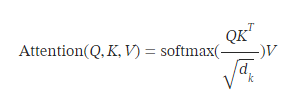

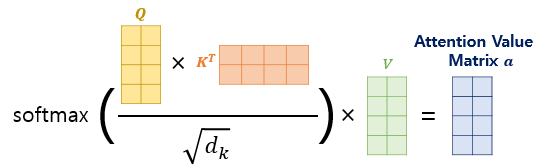

3.2.1. Scaled Dot-Product Attention

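- The queries and keys have dimension $d_k$; the dot-product attention scores are scaled by dividing them by $\sqrt{d_k}$.

- For large $d_k$ the dot products grow large in magnitude, which pushes the softmax into regions with extremely small gradients. The scaling counteracts this.

- In the paper, $d_k = d_{model}$/num_heads = 512/8 = 64, so $\sqrt{d_k}$ = 8.

- If the components of a query and a key are independent with mean 0 and variance 1, their dot product has variance $d_k$. Dividing by $\sqrt{d_k}$ brings the variance back to 1, so it is the same regardless of $d_k$.

In more detail, it looks like this.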

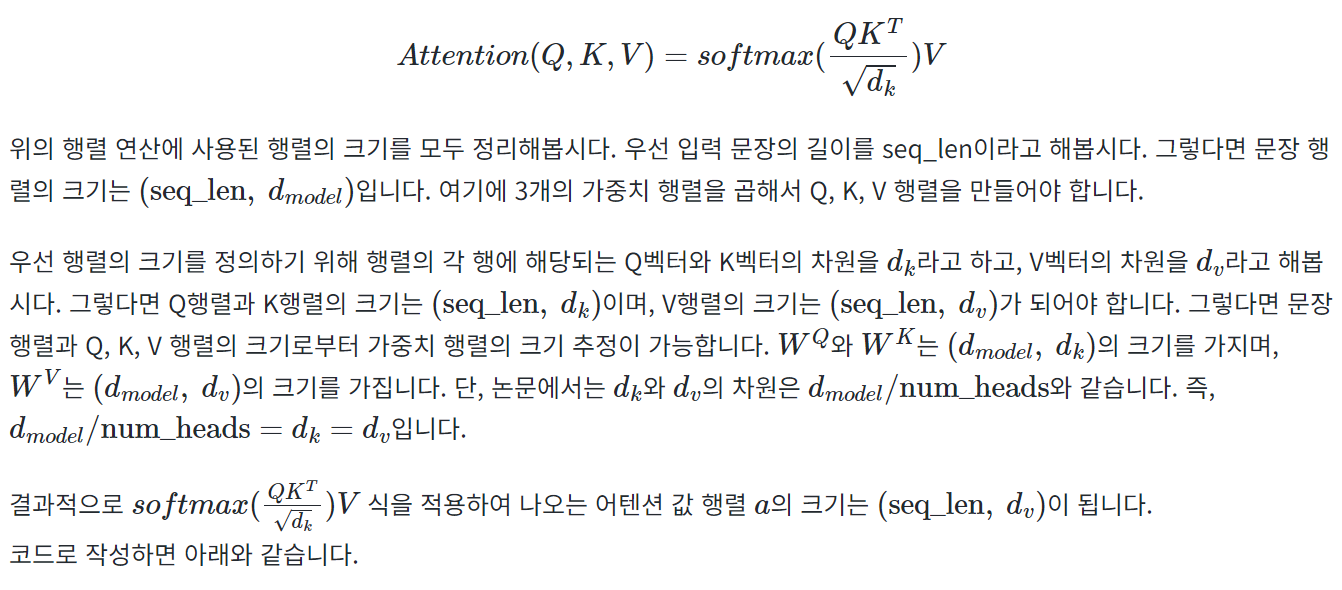

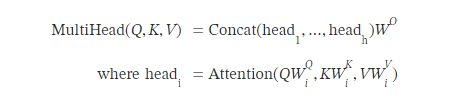

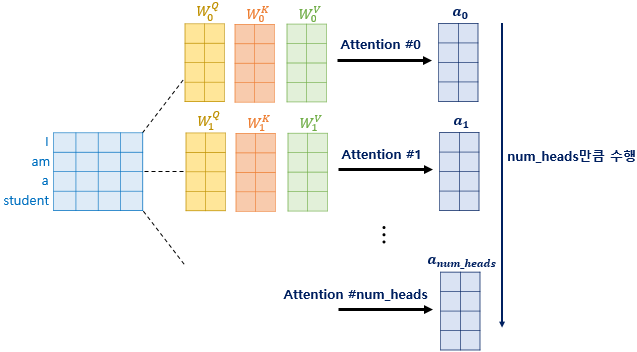
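The paper defines this operation as $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(QK^{\top} / \sqrt{d_k}\right)V$. Below is a minimal NumPy sketch of that formula, together with a quick empirical check of the variance argument. The function name, the optional `mask` argument, and the random test data are assumptions for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q, K: (..., seq_len, d_k), V: (..., seq_len, d_v).

    mask (optional, boolean): True where attention is allowed.
    """
    d_k = Q.shape[-1]
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)   # effectively zero weight after softmax
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_k = 5, 64
Q = rng.normal(size=(seq_len, d_k))
K = rng.normal(size=(seq_len, d_k))
V = rng.normal(size=(seq_len, d_k))
output, weights = scaled_dot_product_attention(Q, K, V)

# Variance check: unit-variance q and k give dot products with variance near d_k,
# and dividing by sqrt(d_k) brings the variance back to roughly 1.
q = rng.normal(size=(10000, d_k))
k = rng.normal(size=(10000, d_k))
raw = (q * k).sum(axis=1)
raw_var = raw.var()
scaled_var = (raw / np.sqrt(d_k)).var()
```

With $d_k$ = 64, `raw_var` should come out close to 64 and `scaled_var` close to 1, which is the reason for dividing by 8 in the paper's setting.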

3.2.2. Multi-Head Attention

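- Multi-head attention consists of h Scaled Dot-Product Attention operations.

- In other words, the Transformer performs attention several times => the effect of interpreting the input several times over

- Self-attention operates on the word vectors of the input sentence, but not directly on the $d_{model}$-dimensional word vectors that form the encoder's initial input. It first derives a Q vector, a K vector, and a V vector from each word vector.

- These Q, K, and V vectors have a smaller dimension than the $d_{model}$-dimensional word vectors. In the paper, each word vector with $d_{model}$ = 512 is transformed into Q, K, and V vectors of dimension 64.

- The value 64 is determined by another Transformer hyperparameter, num_heads: the Transformer uses $d_{model}$ divided by num_heads as the dimension of each Q, K, and V vector. The paper sets num_heads to 8.

The Transformer authors found that running several attentions in parallel is more effective than running a single attention.

So the $d_{model}$ dimension is split into num_heads parts, and num_heads parallel attentions are performed on Q, K, and V of dimension $d_{model}$/num_heads.

With num_heads set to 8 in the paper, eight attentions run in parallel. Each of the resulting attention value matrices is called an attention head.

The weight matrices $W^Q$, $W^K$, and $W^V$ are different for each of the 8 attention heads.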

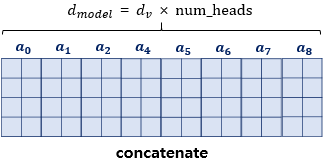

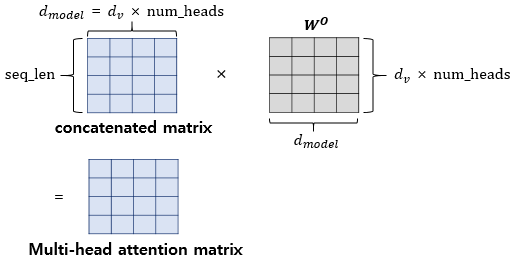

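Once all the parallel attentions have been computed, the attention heads are concatenated. The concatenated attention head matrix has size (seq_len, $d_{model}$).

Because the dimension of each head is reduced, the total computational cost is similar to that of single-head attention with full dimensionality.

The concatenated matrix is then multiplied by another weight matrix, $W^O$, and the result is the final output of multi-head attention. The figure above shows the concatenated attention heads being multiplied by the weight matrix $W^O$. The resulting multi-head attention matrix has the same size, (seq_len, $d_{model}$), as the sentence matrix that was the input to the encoder.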
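The split, attend, concatenate, and project sequence can be sketched as follows. This is a minimal NumPy illustration, not a reference implementation: the per-head matrices $W^Q$, $W^K$, $W^V$ are assumed to be stacked side by side into single ($d_{model}$, $d_{model}$) matrices `W_q`, `W_k`, `W_v`, which is equivalent to projecting each head separately, and all names and the random initialization are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """Self-attention over X of shape (seq_len, d_model)."""
    seq_len, d_model = X.shape
    d_head = d_model // num_heads          # 512 / 8 = 64 in the paper

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q = split_heads(X @ W_q)
    K = split_heads(X @ W_k)
    V = split_heads(X @ W_v)

    # Scaled dot-product attention, computed for all heads at once.
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq, seq)
    heads = softmax(scores, axis=-1) @ V                    # (heads, seq, d_head)

    # Concatenate the heads back to (seq_len, d_model), then apply W_o.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 512, 8
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) * d_model ** -0.5
                      for _ in range(4))
out = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
```

The output keeps the (seq_len, $d_{model}$) shape of the input, which is what allows the residual connection around this sub-layer.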

3.2.3. Applications of Attention in our Model

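The Transformer uses Multi-Head Attention in three different ways, each with its own characteristics.

1) encoder-decoder attention layer

The queries come from the previous decoder layer, and the memory keys and values come from the output of the encoder.

👉🏻 This lets every position in the decoder attend over all positions in the input sequence

2) self-attention layer in Encoder

In the Encoder's self-attention layer, all of the keys, values, and queries come from the same place, in this case the output of the previous layer in the encoder.

👉🏻 Each position in the Encoder can attend to all positions in the previous layer

3) self-attention layer in Decoder

The Decoder's self-attention layer lets each position in the decoder attend to all positions in the Decoder up to and including that position.

👉🏻 To preserve the auto-regressive property, each position can only attend from the earlier positions up to itself (implemented with masking)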

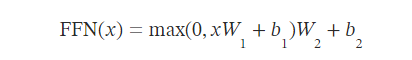

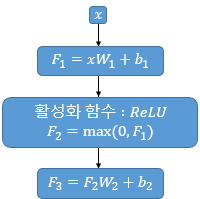
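The masking used in the decoder's self-attention can be illustrated with a lower-triangular mask. The sketch below is an assumption-laden example (the helper names `causal_mask` and `masked_softmax` are hypothetical): disallowed positions are set to negative infinity before the softmax, so they receive exactly zero weight.

```python
import numpy as np

def causal_mask(seq_len):
    # True where position i may attend to position j (j <= i).
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_softmax(scores, mask):
    # Masked-out scores become -inf, so exp() maps them to exactly 0.
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

mask = causal_mask(4)
# With all-equal scores, each row spreads its weight uniformly over allowed positions.
weights = masked_softmax(np.zeros((4, 4)), mask)
```

With uniform scores, the first row puts all its weight on position 0, the second row splits it evenly over positions 0 and 1, and so on, while every entry above the diagonal is zero.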

3.3. Position-wise Feed-Forward Networks

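Besides the attention sub-layers, each layer of the encoder and decoder contains a fully connected feed-forward network that is applied to each position separately and identically. (The position-wise FFNN is a sub-layer that the encoder and decoder have in common.)

$$\mathrm{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2$$

Here $x$ is the matrix of size (seq_len, $d_{model}$) produced by the preceding multi-head attention. The weight matrix $W_1$ has size ($d_{model}$, $d_{ff}$), and the weight matrix $W_2$ has size ($d_{ff}$, $d_{model}$). In the paper, the hidden layer size $d_{ff}$ is 2,048.

The parameters $W_1$, $b_1$, $W_2$, and $b_2$ are exactly the same for every sentence and every word within a single encoder layer, but they differ from one encoder layer to another.

- Consists of two linear transformations with a ReLU activation in between

- The input and output dimension is 512, and the hidden layer dimension is 2048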
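A minimal NumPy sketch of the position-wise feed-forward network, using the paper's dimensions (512 and 2048). The random weights are placeholders chosen only so the example runs; the last line illustrates what "position-wise" means, namely that each row (position) is transformed independently with the same parameters.

```python
import numpy as np

def position_wise_ffn(x, W1, b1, W2, b2):
    # Two linear transformations with a ReLU in between:
    # FFN(x) = max(0, x W1 + b1) W2 + b2
    return np.maximum(0, x @ W1 + b1) @ W2 + b2

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 4, 512, 2048
x = rng.normal(size=(seq_len, d_model))
W1 = rng.normal(size=(d_model, d_ff)) * 0.02   # placeholder weights
b1 = np.zeros(d_ff)
W2 = rng.normal(size=(d_ff, d_model)) * 0.02
b2 = np.zeros(d_model)

out = position_wise_ffn(x, W1, b1, W2, b2)

# Applying the network to a single position gives the same result as
# the corresponding row of the full output.
first_position_only = position_wise_ffn(x[:1], W1, b1, W2, b2)
```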

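Pictured as a diagram, the Encoder has this structure: a multi-head self-attention sub-layer followed by a position-wise FFNN.

❗Add & Norm

In addition, a residual connection and layer normalization are added to complete the Encoder, as shown below.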

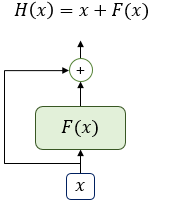

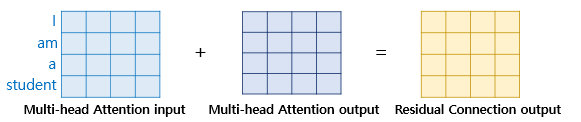

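1) Residual connection

A residual connection adds the input of a sub-layer to its output. Since the input and output of every sub-layer in the Transformer have the same dimension, the two can be added. This is why, in the encoder figure above, each arrow is drawn from the input of a sub-layer to its output. Residual connections are a technique for helping model training that is widely used in computer vision.

When the sub-layer is multi-head attention, the residual connection is computed as follows.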

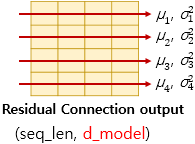

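2) Layer Normalization

The result of the residual connection then goes through layer normalization.

Layer normalization computes the mean and variance over the last dimension of the tensor and uses them to normalize the values (subtracting the mean and dividing by the standard deviation), which helps training. In the Transformer, the last dimension of the tensor is the $d_{model}$ dimension. The figure below marks the direction of the $d_{model}$ dimension with arrows.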

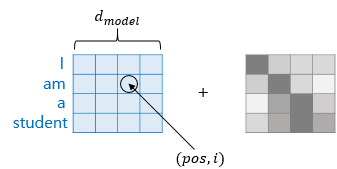
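The Add & Norm step, LayerNorm(x + Sublayer(x)), can be sketched as below. This is a minimal illustration under assumptions: `gamma` and `beta` are the usual learned gain and bias of layer normalization, `eps` is a small constant for numerical stability, and the lambda standing in for the sub-layer is purely hypothetical.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    # Normalize over the last dimension (d_model), then scale and shift.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def add_and_norm(x, sublayer, gamma, beta):
    # Residual connection followed by layer normalization.
    return layer_norm(x + sublayer(x), gamma, beta)

rng = np.random.default_rng(0)
seq_len, d_model = 4, 512
x = rng.normal(size=(seq_len, d_model))
gamma = np.ones(d_model)
beta = np.zeros(d_model)

# Stand-in for multi-head attention or the FFN; any function that keeps
# the (seq_len, d_model) shape works here.
out = add_and_norm(x, lambda h: 0.5 * h, gamma, beta)
```

With `gamma` set to ones and `beta` to zeros, every position in `out` has mean close to 0 and variance close to 1 along the $d_{model}$ dimension.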

3.4. Embeddings and Softmax

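- As in other sequence transduction models, learned embeddings convert the input tokens and output tokens to vectors of dimension $d_{model}$.

- The usual learned linear transformation and softmax function convert the decoder output to predicted next-token probabilities.

- The same weight matrix is shared between the two embedding layers and the pre-softmax linear transformation.

- In the embedding layers, those weights are multiplied by $\sqrt{d_{model}}$.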

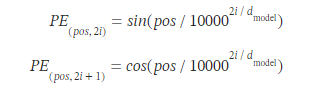

3.5. Positional Encoding

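- Since there is no recurrence or convolution, the model needs some information about the relative or absolute position of the tokens injected into it in order to make use of the order of the sequence. (The position of each word has to be provided some other way.)

- To this end, Positional Encodings are added to the input embeddings at the bottoms of the encoder and decoder stacks.

- Word order information is added by summing the values of sine and cosine functions into the embedding vectors.

$$PE_{(pos, 2i)} = \sin\left(pos / 10000^{2i/d_{model}}\right), \qquad PE_{(pos, 2i+1)} = \cos\left(pos / 10000^{2i/d_{model}}\right)$$

- $pos$ is the position of the embedding vector within the input sentence, and $i$ indexes the dimensions within the embedding vector. According to the formula, the sine function is used when the dimension index is even and the cosine function when it is odd: sine for $(pos, 2i)$ and cosine for $(pos, 2i+1)$.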

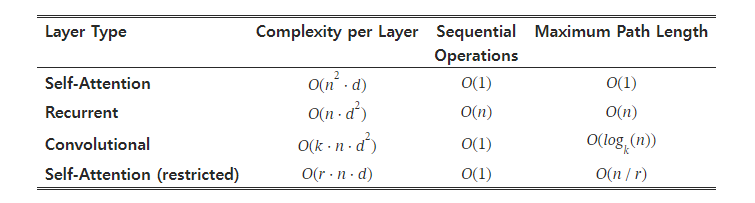
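The sinusoidal positional encoding from the paper, $PE_{(pos, 2i)} = \sin(pos / 10000^{2i/d_{model}})$ and $PE_{(pos, 2i+1)} = \cos(pos / 10000^{2i/d_{model}})$, can be sketched in NumPy as follows. The function name and `max_len` are assumptions for illustration, and the sketch assumes an even $d_{model}$.

```python
import numpy as np

def positional_encoding(max_len, d_model):
    """Return a (max_len, d_model) matrix of sinusoidal encodings.

    Assumes d_model is even.
    """
    pos = np.arange(max_len)[:, None]            # (max_len, 1)
    i = np.arange(d_model // 2)[None, :]         # (1, d_model / 2)
    angles = pos / np.power(10000.0, 2 * i / d_model)

    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)                 # even dimension indices
    pe[:, 1::2] = np.cos(angles)                 # odd dimension indices
    return pe

pe = positional_encoding(max_len=50, d_model=512)

# The encoding is simply added to the (scaled) token embeddings:
# x = embeddings * np.sqrt(d_model) + pe[:seq_len]
```

At position 0 every sine entry is 0 and every cosine entry is 1, which is a quick sanity check on the even/odd layout.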

4. Why Self-Attention

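$n$ is the sequence length, $d$ is the representation dimension, $k$ is the kernel size of the convolution, and $r$ is the size of the neighborhood in restricted self-attention.

(1) Self-Attention vs Recurrent

A Recurrent layer computes step by step, so it requires O(n) sequential operations, whereas Self-Attention computes attention over all positions at once with matrix operations and needs only O(1). => A Self-Attention layer is faster than a Recurrent layer in terms of sequential operations.

In addition, when n < d, a Self-Attention layer is also better than a Recurrent layer in terms of computational complexity (Complexity per Layer): O(n²·d) versus O(n·d²).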
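To make the n < d condition concrete, the per-layer costs from the paper's complexity table, O(n²·d) for self-attention and O(n·d²) for a recurrent layer, can be compared directly. The sketch below counts operations only up to constant factors, and the example sizes are arbitrary assumptions.

```python
def per_layer_cost(n, d):
    """Operation counts per layer, up to constant factors."""
    return {
        "self_attention": n * n * d,   # O(n^2 * d)
        "recurrent": n * d * d,        # O(n * d^2)
    }

# A typical sentence: sequence length well below the model dimension.
short = per_layer_cost(n=50, d=512)

# A very long sequence: n exceeds d, so self-attention becomes more expensive.
long = per_layer_cost(n=2000, d=512)
```

For n = 50 and d = 512, self-attention needs about 1.3 million operations against about 13.1 million for the recurrent layer, while for n = 2000 the ordering flips.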

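(2) Self-Attention vs Convolutional

Convolutional layers are generally more expensive than recurrent layers, by a factor of $k$.

The Maximum Path Length is also larger than for Self-Attention: O(log_k(n)) with dilated convolutions versus O(1).

(3) Self-Attention can yield more interpretable models

Not only do individual attention heads clearly learn to perform different tasks, many also appear to exhibit behavior related to the syntactic and semantic structure of the sentences.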

5. Training

5.1. Training Data and Batching

- Training Data : the standard WMT 2014 English-German dataset consisting of about 4.5 million sentence pairs (sentences encoded using byte-pair encoding, with a shared source-target vocabulary of about 37000 tokens)

- Training Data : the larger WMT 2014 English-French dataset (tokens split into a 32000 word-piece vocabulary)

- Batching : Each training batch contains a set of sentence pairs with approximately 25000 source tokens and 25000 target tokens.

5.2. Hardware and Schedule

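- The models were trained on one machine with 8 NVIDIA P100 GPUs

- For the base model, using the hyperparameters described throughout the paper, each training step took about 0.4 seconds

- The base model was trained for a total of 100,000 steps, or 12 hours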

5.3. Optimizer

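- Adam optimizer ($\beta_1$ = 0.9, $\beta_2$ = 0.98, $\epsilon$ = $10^{-9}$)

- learning rate formula: $lrate = d_{model}^{-0.5} \cdot \min\left(step\_num^{-0.5},\ step\_num \cdot warmup\_steps^{-1.5}\right)$

- With warmup_steps = 4000, the learning rate increases linearly over the warmup steps and then decreases proportionally to the inverse square root of the step number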
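The paper's schedule, $lrate = d_{model}^{-0.5} \cdot \min(step\_num^{-0.5}, step\_num \cdot warmup\_steps^{-1.5})$, is short enough to write out directly. The function name and the guard against step 0 are assumptions added for this sketch.

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Learning rate at a given training step (steps start at 1)."""
    step = max(step, 1)   # guard: step 0 would raise on 0 ** -0.5
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

warmup_lr = transformer_lr(1000)    # still in the linear warmup phase
peak_lr = transformer_lr(4000)      # the two terms of min() meet here
late_lr = transformer_lr(100000)    # inverse-square-root decay
```

The rate peaks at step 4000 with a value of roughly 7e-4 for $d_{model}$ = 512, and during warmup it grows linearly, so the rate at step 2000 is twice the rate at step 1000.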

5.4. Regularization

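Three Regularization techniques are used

- Residual Dropout1 : Dropout is applied to the output of each sub-layer before it is added to the sub-layer input ($P_{drop}$ = 0.1)

- Residual Dropout2 : Dropout is applied to the sums of the embeddings and the positional encodings in both the encoder and decoder stacks ($P_{drop}$ = 0.1)

- Label Smoothing : $\epsilon_{ls}$ = 0.1 (this hurts perplexity, as the model learns to be more unsure, but improves accuracy and BLEU score)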
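As a rough illustration of label smoothing with $\epsilon_{ls}$ = 0.1, the sketch below mixes the one-hot target with a uniform distribution over the vocabulary. This is one common formulation and an assumption here; the paper does not spell out the exact variant, and some implementations spread the smoothing mass only over the non-target classes. The function name and the toy vocabulary size are hypothetical.

```python
import numpy as np

def smooth_labels(target_ids, vocab_size, eps=0.1):
    """Return smoothed target distributions of shape (len(target_ids), vocab_size)."""
    one_hot = np.eye(vocab_size)[target_ids]
    # Keep (1 - eps) on the one-hot target and spread eps uniformly over all classes.
    return one_hot * (1.0 - eps) + eps / vocab_size

smoothed = smooth_labels(np.array([2, 0]), vocab_size=5)
```

With a vocabulary of 5 and eps = 0.1, the correct token gets probability 0.92 and every other token gets 0.02, so the model is trained toward a slightly less confident target.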

6. Results

6.1. Machine Translation

The Transformer achieved better BLEU scores than previous state-of-the-art models on the English-to-German and English-to-French newstest2014 tests, at a fraction of the training cost.

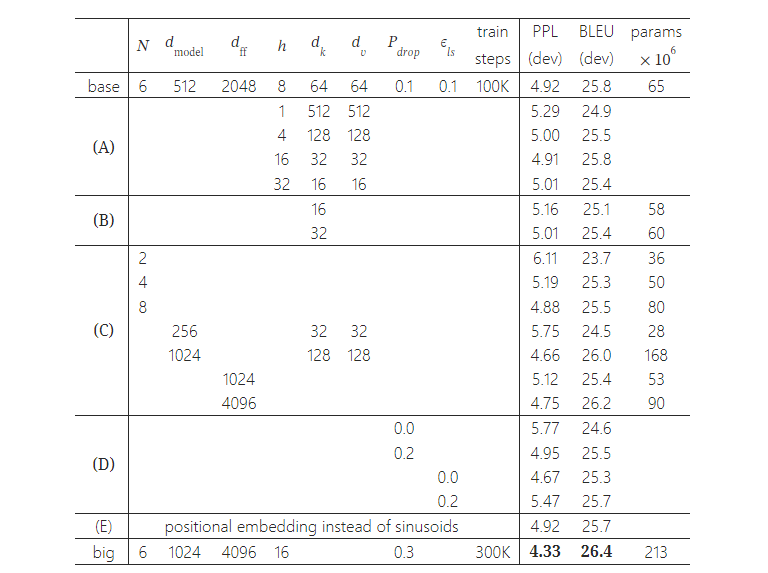

6.2. Model Variations

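To evaluate the importance of the different components of the Transformer, the base model was varied in different ways and the change in English-to-German translation performance was measured on the development set, newstest2013.

- (A) Single-head attention is 0.9 BLEU worse than the best setting, but quality also drops off with too many heads. (A suitable number of heads is 16)

- (B) Reducing the attention key size ($d_k$) hurts model quality (this suggests that determining compatibility is not easy and that a more sophisticated compatibility function than dot product may be beneficial)

- (C, D) Bigger models are better, and dropout is very helpful in avoiding over-fitting

- (E) Replacing the sinusoidal positional encoding with learned positional embeddings gives nearly identical results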

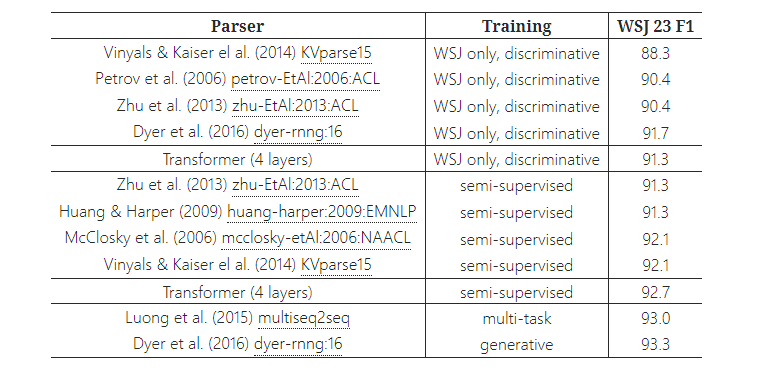

6.3. English Constituency Parsing

To evaluate whether the Transformer can generalize to other tasks, experiments were run on English constituency parsing.

In contrast to RNN sequence-to-sequence models, the Transformer outperforms the BerkeleyParser, even when trained only on the Wall Street Journal (WSJ) training set of 40K sentences.

7. Conclusion

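✅ Presents the Transformer, the first sequence transduction model based entirely on attention

: The recurrent layers most commonly used in encoder-decoder architectures are replaced with Multi-headed self-attention

✅ For translation tasks, the Transformer can be trained significantly faster than architectures based on Recurrent or Convolutional layers

✅ Achieves a new state of the art (SOTA) on both the WMT 2014 English-to-German and WMT 2014 English-to-French translation tasks

: On English-to-German, the best Transformer model outperforms even all previously reported ensembles

💡 The authors plan to apply attention-based models to other tasks

💡 They plan to extend the Transformer to problems involving input and output modalities other than text, and to investigate local, restricted attention mechanisms to efficiently handle large inputs and outputs such as images, audio, and video

💡 Making generation less sequential is another of their research goals.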

Reference

WikiDocs: Transformer

Attention structure

Explanation of the Transformer computation process