Deep Learning 5 - tensorflow_datasets


These are my notes from instructor Shim Seon-jo's class in the SeSAC AI application SW developer training program.

tensorflow_datasets

import tensorflow as tf
import numpy as np
import json
import matplotlib.pyplot as plt
import tensorflow_datasets as tfds

data_dir = 'dataset/'

(train_ds, valid_ds), info = tfds.load('eurosat/rgb',  # returns the two splits plus the dataset info
    split=['train[:80%]', 'train[80%:]'],  # 'train[:80%]': from the start up to 80% (training); 'train[80%:]': the remaining 20% (validation)
    shuffle_files=True,  # shuffle the order of the input files
    as_supervised=True,  # yield (image, label) tuples instead of feature dicts
    with_info=True,  # also return the DatasetInfo metadata
    data_dir=data_dir)  # data_dir: where to download and store the data

Downloading and preparing dataset 89.91 MiB (download: 89.91 MiB, generated: Unknown size, total: 89.91 MiB) to dataset/eurosat/rgb/2.0.0...


Dataset eurosat downloaded and prepared to dataset/eurosat/rgb/2.0.0. Subsequent calls will reuse this data.

train_ds, valid_ds

(<PrefetchDataset element_spec=(TensorSpec(shape=(64, 64, 3), dtype=tf.uint8, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None))>,
 <PrefetchDataset element_spec=(TensorSpec(shape=(64, 64, 3), dtype=tf.uint8, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None))>)

info

tfds.core.DatasetInfo(

name='eurosat',

full_name='eurosat/rgb/2.0.0',

description="""

EuroSAT dataset is based on Sentinel-2 satellite images covering 13 spectral

bands and consisting of 10 classes with 27000 labeled and

geo-referenced samples.

Two datasets are offered:

- rgb: Contains only the optical R, G, B frequency bands encoded as JPEG image.

- all: Contains all 13 bands in the original value range (float32).

URL: https://github.com/phelber/eurosat

""",

config_description="""

Sentinel-2 RGB channels

""",

homepage='https://github.com/phelber/eurosat',

data_path='dataset/eurosat/rgb/2.0.0',

file_format=tfrecord,

download_size=89.91 MiB,

dataset_size=89.50 MiB,

features=FeaturesDict({

'filename': Text(shape=(), dtype=tf.string),

'image': Image(shape=(64, 64, 3), dtype=tf.uint8),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=10),

}),

supervised_keys=('image', 'label'),

disable_shuffling=False,

splits={

'train': <SplitInfo num_examples=27000, num_shards=1>,

},

citation="""@misc{helber2017eurosat,

title={EuroSAT: A Novel Dataset and Deep Learning Benchmark for Land Use and Land Cover Classification},

author={Patrick Helber and Benjamin Bischke and Andreas Dengel and Damian Borth},

year={2017},

eprint={1709.00029},

archivePrefix={arXiv},

primaryClass={cs.CV}

}""",

)

tfds.show_examples(train_ds, info)
tfds.as_dataframe(valid_ds.take(5), info)

| | label |
|---|---|
| 0 | 5 (Pasture) |
| 1 | 7 (Residential) |
| 2 | 0 (AnnualCrop) |
| 3 | 1 (Forest) |
| 4 | 0 (AnnualCrop) |
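The percentage slices passed to `split` in `tfds.load` are resolved against the single `train` split of 27,000 examples listed in `info`. The stdlib sketch below works through that arithmetic. It assumes the percentages land on whole examples, which they do here; tfds has its own rounding rules for counts that don't divide evenly. The helper name `percent_slice_sizes` is hypothetical.

```python
def percent_slice_sizes(total, boundaries_pct):
    """Return example counts for consecutive percentage slices of one split.

    boundaries_pct: cut points in percent, e.g. [80] -> 'train[:80%]' and 'train[80%:]'.
    Hypothetical helper; assumes every cut point lands on a whole example.
    """
    cuts = [0] + [total * p // 100 for p in boundaries_pct] + [total]
    return [end - start for start, end in zip(cuts, cuts[1:])]

train_n, valid_n = percent_slice_sizes(27000, [80])
print(train_n, valid_n)  # 21600 5400
```

So each training epoch covers 21,600 images, and each validation pass covers 5,400.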

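num_classes = info.features['label'].num_classes
num_classes  # number of classes defined by the label feature

10

print(info.features['label'].int2str(1))  # int2str() maps a class index (e.g. the argmax of predicted probabilities) to its class name

Forest

batch_size = 64  # number of samples fed to the model per training step; one epoch is one full pass over the training set (e.g. 100 samples with batch_size=64 take 2 steps)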

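buffer_size = 1000  # size of the shuffle buffer, chosen to fit comfortably in memory
# shuffle(buffer_size) keeps 1000 elements in a buffer and draws each output element at random from it;
# every element drawn out is replaced by the next one from the dataset, so the buffer stays at 1000 until the input runs out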
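The buffer behaviour described above can be sketched in plain Python. This is a simplified model of `Dataset.shuffle` that assumes uniform sampling from the buffer. The function name `buffered_shuffle` is hypothetical, and the real implementation differs in details such as seeding and reshuffling on each epoch.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Yield items in shuffled order using a fixed-size buffer (simplified model of Dataset.shuffle)."""
    rng = random.Random(seed)
    it = iter(items)
    buffer = []
    # Fill the buffer first.
    for item in it:
        buffer.append(item)
        if len(buffer) == buffer_size:
            break
    # Draw a random buffered element, then refill its slot with the next input element.
    for item in it:
        idx = rng.randrange(len(buffer))
        yield buffer[idx]
        buffer[idx] = item
    # Input exhausted: drain what is left in the buffer in random order.
    rng.shuffle(buffer)
    yield from buffer

out = list(buffered_shuffle(range(10), buffer_size=3))
print(sorted(out) == list(range(10)))  # True: every element appears exactly once
```

With `buffer_size=1` the order is unchanged. A buffer at least as large as the dataset gives a full shuffle. This is why a larger buffer mixes the data better, at the cost of memory.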

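# Just as data augmentation feeds enlarged or rotated versions of the images,
# you can write a function that processes each example before it is fed to the model.
def preprocess_data(image, label):
    image = tf.cast(image, tf.float32) / 255.  # rescale pixels from [0, 255] to [0, 1]; cast the uint8 image to float32 first so the result is a float
    return image, label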

train_data = train_ds.map(preprocess_data, num_parallel_calls=tf.data.AUTOTUNE)  # map() applies a function to every element of the dataset, much like DataFrame.apply in pandas
# num_parallel_calls=tf.data.AUTOTUNE: process several elements in parallel (tf.data picks the degree) instead of waiting for each one to finish
valid_data = valid_ds.map(preprocess_data, num_parallel_calls=tf.data.AUTOTUNE)
# From here on, the data is fed automatically, batch_size (64) examples at a time.

train_data = train_data.shuffle(buffer_size).batch(batch_size).prefetch(tf.data.AUTOTUNE)
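`batch(batch_size)` groups consecutive elements into batches and, by default, keeps a final partial batch. The stdlib sketch below shows why the 21,600 training images from `train[:80%]` produce the 338 steps per epoch in the training log. The `batched` helper is hypothetical and is not part of tf.data.

```python
import math

def batched(items, batch_size):
    """Group items into lists of batch_size; the last batch may be smaller (like drop_remainder=False)."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch

num_train = 27000 * 80 // 100          # size of 'train[:80%]'
batches = list(batched(range(num_train), 64))
print(len(batches), len(batches[-1]))  # 338 32
print(math.ceil(num_train / 64))       # 338
```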

valid_data = valid_data.batch(batch_size).cache().prefetch(tf.data.AUTOTUNE)  # validation data must be batched too; it does not need shuffling

from keras import Sequential

from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D, BatchNormalization

def build_model():  # builds a fresh, untrained model each time it is called
    model = Sequential([  # create the model
        BatchNormalization(),  # normalizes each channel with batch statistics, keeping activations in a stable range
        Conv2D(32, (3, 3), padding='same', activation='relu'),
        MaxPooling2D((2, 2)),  # 2x2 pooling halves the height and width
        BatchNormalization(),
        Conv2D(64, (3, 3), padding='same', activation='relu'),  # 64: number of filters
        MaxPooling2D((2, 2)),
        # classifier head (output layers)
        Flatten(),
        Dense(128, activation='relu'),
        Dropout(0.3),  # randomly drops 30% of the units during training to reduce overfitting
        Dense(64, activation='relu'),
        Dropout(0.3),
        Dense(num_classes, activation='softmax'),
    ])
    return model
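The layer stack above can be checked by hand. The stdlib sketch below traces the output shape and parameter count of each layer. It assumes 64x64x3 EuroSAT inputs and the standard Keras parameter formulas:

- Conv2D: kernel_h × kernel_w × in_channels × filters, plus one bias per filter.
- Dense: inputs × units, plus one bias per unit.
- BatchNormalization: 4 values per channel, two of them non-trainable.

It is an illustration, not a replacement for `model.summary()`. The Dropout layers are left out because they have no parameters and do not change the shape.

```python
def trace_build_model(h=64, w=64, c=3, num_classes=10):
    """Return (layer, output_shape, params) rows for the CNN in build_model() (Dropout omitted)."""
    def bn(channels):
        return 4 * channels  # gamma, beta (trainable) + moving mean, moving variance
    rows = [("BatchNormalization", (h, w, c), bn(c))]
    rows.append(("Conv2D 32 same", (h, w, 32), 3 * 3 * c * 32 + 32))
    h, w, c = h // 2, w // 2, 32
    rows.append(("MaxPooling2D", (h, w, c), 0))
    rows.append(("BatchNormalization", (h, w, c), bn(c)))
    rows.append(("Conv2D 64 same", (h, w, 64), 3 * 3 * c * 64 + 64))
    h, w, c = h // 2, w // 2, 64
    rows.append(("MaxPooling2D", (h, w, c), 0))
    flat = h * w * c
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense 128", (128,), flat * 128 + 128))
    rows.append(("Dense 64", (64,), 128 * 64 + 64))
    rows.append(("Dense softmax", (num_classes,), 64 * num_classes + num_classes))
    return rows

rows = trace_build_model()
for name, shape, params in rows:
    print(f"{name:20s} {str(shape):15s} {params}")
print("total params:", sum(p for _, _, p in rows))  # 2125718
```

Nearly all of the roughly 2.1 million parameters sit in the first Dense layer, because Flatten turns the 16x16x64 feature map into 16,384 inputs.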

model = build_model()
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])
# model.summary()
history = model.fit(train_data, validation_data=valid_data, epochs=50)

Epoch 1/50

338/338 [==============================] - 16s 23ms/step - loss: 1.6277 - acc: 0.4371 - val_loss: 1.6342 - val_acc: 0.4735

Epoch 2/50

338/338 [==============================] - 5s 16ms/step - loss: 1.2433 - acc: 0.5631 - val_loss: 0.9623 - val_acc: 0.7024

Epoch 3/50

338/338 [==============================] - 6s 17ms/step - loss: 1.0262 - acc: 0.6457 - val_loss: 0.7905 - val_acc: 0.7385

Epoch 4/50

338/338 [==============================] - 5s 13ms/step - loss: 0.9095 - acc: 0.6864 - val_loss: 0.6720 - val_acc: 0.7739

Epoch 5/50

338/338 [==============================] - 4s 12ms/step - loss: 0.8352 - acc: 0.7136 - val_loss: 0.7217 - val_acc: 0.7583

Epoch 6/50

338/338 [==============================] - 4s 13ms/step - loss: 0.7373 - acc: 0.7528 - val_loss: 0.5405 - val_acc: 0.8237

Epoch 7/50

338/338 [==============================] - 4s 13ms/step - loss: 0.6550 - acc: 0.7771 - val_loss: 0.5901 - val_acc: 0.8059

Epoch 8/50

338/338 [==============================] - 4s 13ms/step - loss: 0.6026 - acc: 0.7938 - val_loss: 0.5549 - val_acc: 0.8204

Epoch 9/50

338/338 [==============================] - 4s 13ms/step - loss: 0.5292 - acc: 0.8214 - val_loss: 0.5406 - val_acc: 0.8241

Epoch 10/50

338/338 [==============================] - 4s 13ms/step - loss: 0.4870 - acc: 0.8301 - val_loss: 0.4499 - val_acc: 0.8476

Epoch 11/50

338/338 [==============================] - 4s 13ms/step - loss: 0.4475 - acc: 0.8472 - val_loss: 0.4546 - val_acc: 0.8557

Epoch 12/50

338/338 [==============================] - 7s 20ms/step - loss: 0.4080 - acc: 0.8585 - val_loss: 0.4426 - val_acc: 0.8596

Epoch 13/50

338/338 [==============================] - 5s 14ms/step - loss: 0.3665 - acc: 0.8783 - val_loss: 0.4329 - val_acc: 0.8519

Epoch 14/50

338/338 [==============================] - 4s 12ms/step - loss: 0.3358 - acc: 0.8849 - val_loss: 0.4190 - val_acc: 0.8672

Epoch 15/50

338/338 [==============================] - 4s 12ms/step - loss: 0.3230 - acc: 0.8931 - val_loss: 0.3936 - val_acc: 0.8731

Epoch 16/50

338/338 [==============================] - 4s 12ms/step - loss: 0.3043 - acc: 0.8981 - val_loss: 0.4540 - val_acc: 0.8665

Epoch 17/50

338/338 [==============================] - 4s 12ms/step - loss: 0.2924 - acc: 0.9022 - val_loss: 0.4456 - val_acc: 0.8700

Epoch 18/50

338/338 [==============================] - 4s 12ms/step - loss: 0.2775 - acc: 0.9075 - val_loss: 0.4688 - val_acc: 0.8537

Epoch 19/50

338/338 [==============================] - 4s 13ms/step - loss: 0.2710 - acc: 0.9125 - val_loss: 0.4495 - val_acc: 0.8615

Epoch 20/50

338/338 [==============================] - 5s 13ms/step - loss: 0.2379 - acc: 0.9242 - val_loss: 0.4951 - val_acc: 0.8665

Epoch 21/50

338/338 [==============================] - 4s 13ms/step - loss: 0.2434 - acc: 0.9217 - val_loss: 0.4205 - val_acc: 0.8802

Epoch 22/50

338/338 [==============================] - 4s 12ms/step - loss: 0.2262 - acc: 0.9260 - val_loss: 0.5502 - val_acc: 0.8580

Epoch 23/50

338/338 [==============================] - 4s 13ms/step - loss: 0.2204 - acc: 0.9267 - val_loss: 0.5431 - val_acc: 0.8596

Epoch 24/50

338/338 [==============================] - 5s 13ms/step - loss: 0.2078 - acc: 0.9308 - val_loss: 0.4343 - val_acc: 0.8783

Epoch 25/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1947 - acc: 0.9363 - val_loss: 0.4322 - val_acc: 0.8843

Epoch 26/50

338/338 [==============================] - 4s 12ms/step - loss: 0.1974 - acc: 0.9359 - val_loss: 0.4244 - val_acc: 0.8830

Epoch 27/50

338/338 [==============================] - 5s 13ms/step - loss: 0.1771 - acc: 0.9416 - val_loss: 0.4802 - val_acc: 0.8709

Epoch 28/50

338/338 [==============================] - 4s 12ms/step - loss: 0.1971 - acc: 0.9354 - val_loss: 0.5854 - val_acc: 0.8509

Epoch 29/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1911 - acc: 0.9387 - val_loss: 0.4493 - val_acc: 0.8807

Epoch 30/50

338/338 [==============================] - 4s 12ms/step - loss: 0.1683 - acc: 0.9445 - val_loss: 0.6811 - val_acc: 0.8461

Epoch 31/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1841 - acc: 0.9408 - val_loss: 0.5370 - val_acc: 0.8630

Epoch 32/50

338/338 [==============================] - 5s 13ms/step - loss: 0.1654 - acc: 0.9470 - val_loss: 0.5669 - val_acc: 0.8791

Epoch 33/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1556 - acc: 0.9494 - val_loss: 0.5341 - val_acc: 0.8685

Epoch 34/50

338/338 [==============================] - 5s 15ms/step - loss: 0.1597 - acc: 0.9481 - val_loss: 0.5213 - val_acc: 0.8741

Epoch 35/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1584 - acc: 0.9482 - val_loss: 0.5172 - val_acc: 0.8759

Epoch 36/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1427 - acc: 0.9532 - val_loss: 0.5618 - val_acc: 0.8785

Epoch 37/50

338/338 [==============================] - 5s 14ms/step - loss: 0.1460 - acc: 0.9527 - val_loss: 0.5293 - val_acc: 0.8759

Epoch 38/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1438 - acc: 0.9524 - val_loss: 0.5097 - val_acc: 0.8748

Epoch 39/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1515 - acc: 0.9530 - val_loss: 0.5562 - val_acc: 0.8669

Epoch 40/50

338/338 [==============================] - 5s 13ms/step - loss: 0.1414 - acc: 0.9545 - val_loss: 0.4819 - val_acc: 0.8798

Epoch 41/50

338/338 [==============================] - 5s 16ms/step - loss: 0.1333 - acc: 0.9578 - val_loss: 0.4976 - val_acc: 0.8828

Epoch 42/50

338/338 [==============================] - 7s 20ms/step - loss: 0.1211 - acc: 0.9598 - val_loss: 0.5087 - val_acc: 0.8843

Epoch 43/50

338/338 [==============================] - 7s 20ms/step - loss: 0.1180 - acc: 0.9611 - val_loss: 0.4979 - val_acc: 0.8883

Epoch 44/50

338/338 [==============================] - 8s 22ms/step - loss: 0.1233 - acc: 0.9583 - val_loss: 0.5827 - val_acc: 0.8748

Epoch 45/50

338/338 [==============================] - 6s 17ms/step - loss: 0.1251 - acc: 0.9586 - val_loss: 0.5901 - val_acc: 0.8691

Epoch 46/50

338/338 [==============================] - 6s 16ms/step - loss: 0.1091 - acc: 0.9624 - val_loss: 0.7245 - val_acc: 0.8611

Epoch 47/50

338/338 [==============================] - 5s 14ms/step - loss: 0.1220 - acc: 0.9615 - val_loss: 0.5465 - val_acc: 0.8667

Epoch 48/50

338/338 [==============================] - 5s 13ms/step - loss: 0.1278 - acc: 0.9586 - val_loss: 0.6265 - val_acc: 0.8681

Epoch 49/50

338/338 [==============================] - 4s 13ms/step - loss: 0.1247 - acc: 0.9597 - val_loss: 0.6129 - val_acc: 0.8781

Epoch 50/50

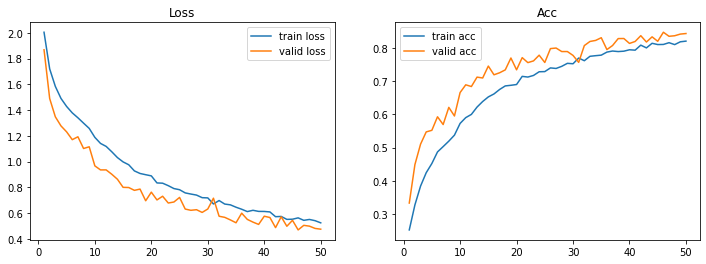

338/338 [==============================] - 4s 13ms/step - loss: 0.1163 - acc: 0.9612 - val_loss: 0.5351 - val_acc: 0.8756def plot_loss_acc(history,epoch):

loss,val_loss = history.history['loss'],history.history['val_loss']

acc,val_acc = history.history['acc'],history.history['val_acc']

fig , axes = plt.subplots(1,2,figsize=(12,4))

axes[0].plot(range(1,epoch+1), loss, label ='train loss')

axes[0].plot(range(1,epoch+1), val_loss, label ='valid loss')

axes[0].legend(loc='best')

axes[0].set_title('Loss')

axes[1].plot(range(1,epoch+1), acc, label ='train acc')

axes[1].plot(range(1,epoch+1), val_acc, label ='valid acc')

axes[1].legend(loc='best')

axes[1].set_title('Acc')

plt.show()

#plot_loss_acc(history,50)
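The model ends in a 10-way softmax and is trained with `sparse_categorical_crossentropy`, so the labels stay as integer indices. The stdlib sketch below illustrates both ideas on one prediction:

- The loss for a single example is the negative log of the probability assigned to the true class.
- The predicted class is the argmax, which `int2str()` would turn into a name.

The class-name list is assumed to follow EuroSAT's alphabetical label order, which agrees with `int2str(1) == 'Forest'` and the labels in the dataframe above. The probabilities are invented for illustration.

```python
import math

# Assumed EuroSAT label order (consistent with 0 AnnualCrop, 1 Forest, 5 Pasture, 7 Residential).
CLASS_NAMES = ['AnnualCrop', 'Forest', 'HerbaceousVegetation', 'Highway', 'Industrial',
               'Pasture', 'PermanentCrop', 'Residential', 'River', 'SeaLake']

def sparse_categorical_crossentropy(probs, label):
    """Loss for one example: -log(probability assigned to the true class index)."""
    return -math.log(probs[label])

def predict_name(probs):
    """Argmax over the class probabilities, then map the index to its name (like int2str)."""
    idx = max(range(len(probs)), key=probs.__getitem__)
    return idx, CLASS_NAMES[idx]

# Invented softmax output for a single image (sums to 1).
probs = [0.02, 0.80, 0.02, 0.02, 0.02, 0.04, 0.02, 0.02, 0.02, 0.02]
print(predict_name(probs))                                   # (1, 'Forest')
print(round(sparse_categorical_crossentropy(probs, 1), 4))   # 0.2231
```

A confident correct prediction gives a small loss. If the true label were 5 (Pasture), the same output would cost -log(0.04), about 3.2.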

Data augmentation

# Data augmentation
image_batch, label_batch = next(iter(train_data.take(1)))  # take(1) yields a single batch; iter() + next() pulls it out
image_batch.shape, label_batch.shape
image = image_batch[0]
label = label_batch[0].numpy()
image, label  # pixel values are already in [0, 1] because preprocess_data rescaled them in map()
plt.imshow(image)
plt.title(info.features['label'].int2str(label))

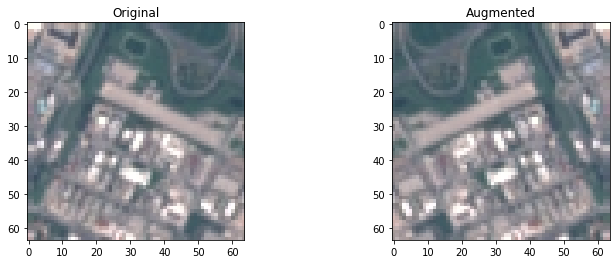

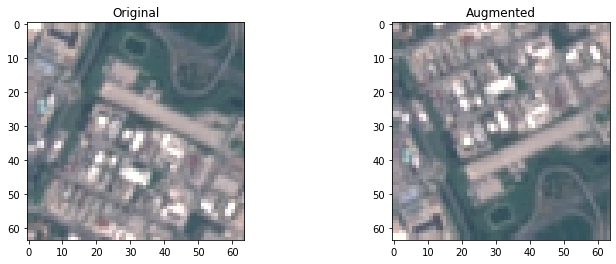

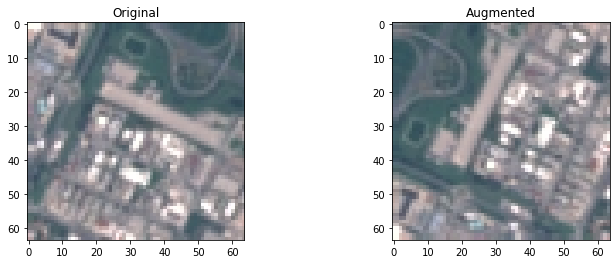

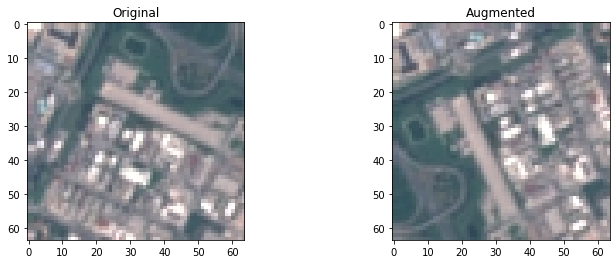

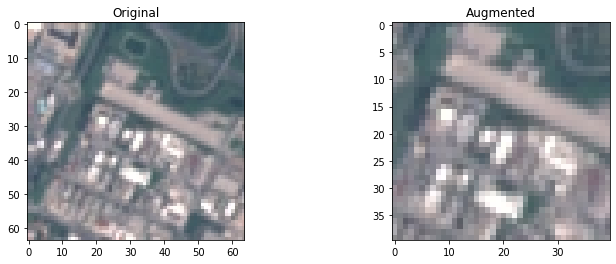

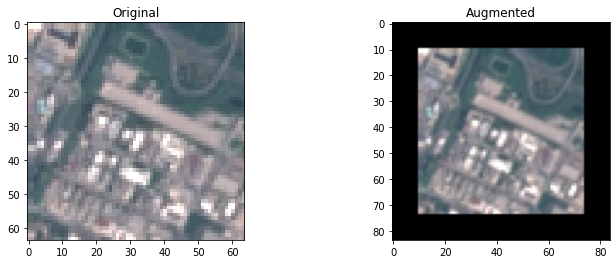

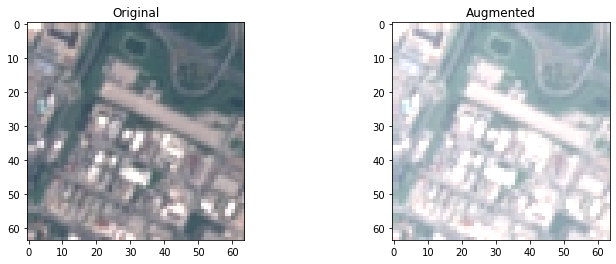

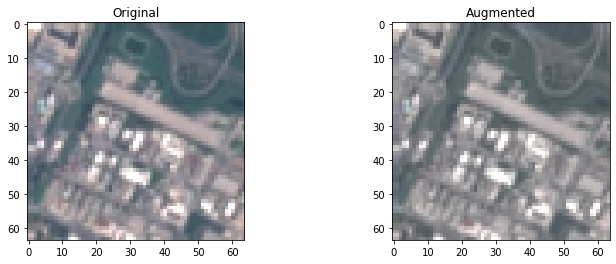

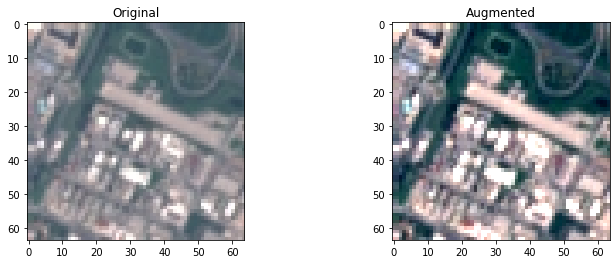

# image_batch: (batch, height, width, channels); label_batch has shape [64] because batch_size is 64
(TensorShape([64, 64, 64, 3]), TensorShape([64]))

def plot_augmentation(original, augmented):
    fig, axes = plt.subplots(1, 2, figsize=(12, 4))  # 1 row, 2 columns
    axes[0].imshow(original)
    axes[0].set_title('Original')
    axes[1].imshow(augmented)
    axes[1].set_title('Augmented')
    plt.show()

plot_augmentation(image, tf.image.random_flip_left_right(image))  # random_*: applied at random (here, a left-right flip with 50% probability)

plot_augmentation(image, tf.image.flip_up_down(image))
plot_augmentation(image, tf.image.rot90(image))  # rotate 90 degrees counter-clockwise
plot_augmentation(image, tf.image.transpose(image))  # transpose: swap rows and columns
plot_augmentation(image, tf.image.central_crop(image, central_fraction=0.6))  # central_crop: keep the central 60% of the image
plot_augmentation(image, tf.image.resize_with_crop_or_pad(image, 64 + 20, 64 + 20))  # pad evenly with zeros to 84x84
plot_augmentation(image, tf.image.adjust_brightness(image, 0.3))

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

plot_augmentation(image, tf.image.adjust_saturation(image, 0.5))
plot_augmentation(image, tf.image.adjust_contrast(image, 2))

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

plot_augmentation(image, tf.image.random_crop(image, size=[32, 32, 3]))  # random 32x32 crop
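The geometric transforms above only rearrange pixels. The stdlib sketch below shows what each one does to a 2x3 grid of numbers, which stands in for one image channel. The helpers are hypothetical stand-ins for `tf.image.flip_left_right`, `flip_up_down`, `rot90` and `transpose`. The crop-size helper assumes the crop offset is truncated to an integer, which is TensorFlow's behaviour for static shapes as far as I know.

```python
def flip_left_right(img):
    return [row[::-1] for row in img]          # mirror each row

def flip_up_down(img):
    return img[::-1]                           # reverse the row order

def rot90(img):
    return [list(r) for r in zip(*img)][::-1]  # rotate 90 degrees counter-clockwise

def transpose(img):
    return [list(r) for r in zip(*img)]        # swap rows and columns

def central_crop_size(size, central_fraction):
    start = int((size - size * central_fraction) / 2)  # offset truncated to int (assumption)
    return size - 2 * start

img = [[1, 2, 3],
       [4, 5, 6]]
print(flip_left_right(img))        # [[3, 2, 1], [6, 5, 4]]
print(flip_up_down(img))           # [[4, 5, 6], [1, 2, 3]]
print(rot90(img))                  # [[3, 6], [2, 5], [1, 4]]
print(transpose(img))              # [[1, 4], [2, 5], [3, 6]]
print(central_crop_size(64, 0.6))  # 40
```

`rot90` and `transpose` turn an HxW image into a WxH one. That makes no difference for the square 64x64 EuroSAT tiles.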

batch_size = 64
buffer_size = 1000

def preprocess_data(image, label):
    image = tf.image.random_flip_left_right(image)  # flip horizontally with 50% probability
    image = tf.image.random_flip_up_down(image)  # flip vertically with 50% probability
    image = tf.image.random_brightness(image, max_delta=0.3)  # add a random brightness delta in [-0.3, 0.3)
    image = tf.image.random_contrast(image, 0.5, 1.5)  # scale contrast by a random factor in [0.5, 1.5)
    image = tf.cast(image, tf.float32) / 255.
    return image, label

train_aug = train_ds.map(preprocess_data, num_parallel_calls=tf.data.AUTOTUNE)
valid_aug = valid_ds.map(preprocess_data, num_parallel_calls=tf.data.AUTOTUNE)  # note: this also augments the validation set; usually validation data is only rescaled
train_aug = train_aug.shuffle(buffer_size).batch(batch_size).prefetch(tf.data.AUTOTUNE)
valid_aug = valid_aug.batch(batch_size).cache().prefetch(tf.data.AUTOTUNE)

# Data augmentation: check one augmented sample

image_batch, label_batch = next(iter(train_aug.take(1)))
image_batch.shape, label_batch.shape
image = image_batch[0]
label = label_batch[0].numpy()
image, label
plt.imshow(image)
plt.title(info.features['label'].int2str(label))

Text(0.5, 1.0, 'AnnualCrop')

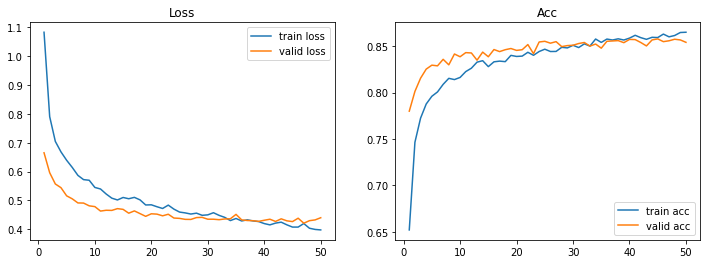
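The two matplotlib warnings after `adjust_brightness` and `adjust_contrast` come from values leaving [0, 1]. On a float image, brightness adds a constant delta to every pixel. Contrast moves each pixel away from the channel mean by the given factor. Neither result is clipped. The stdlib sketch below applies both to one channel, assuming the formulas `x + delta` and `(x - mean) * factor + mean`. The helper names are hypothetical.

```python
def adjust_brightness(channel, delta):
    return [x + delta for x in channel]                 # no clipping for float images

def adjust_contrast(channel, factor):
    mean = sum(channel) / len(channel)
    return [(x - mean) * factor + mean for x in channel]

def clip01(channel):
    return [min(1.0, max(0.0, x)) for x in channel]     # what matplotlib does before drawing

pixels = [0.1, 0.5, 0.9]
bright = adjust_brightness(pixels, 0.3)
contrast = adjust_contrast(pixels, 2)
print([round(x, 2) for x in bright])            # [0.4, 0.8, 1.2]  -> 1.2 is out of range
print([round(x, 2) for x in contrast])          # [-0.3, 0.5, 1.3] -> both ends out of range
print([round(x, 2) for x in clip01(contrast)])  # [0.0, 0.5, 1.0]
```

In the augmentation `preprocess_data`, the random brightness and contrast ops run on the uint8 image before the cast. TensorFlow converts the result back to the original integer type, which should keep those training images in range.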

aug_model = build_model()
aug_model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])
aug_history = aug_model.fit(train_aug, validation_data=valid_aug, epochs=50)
plot_loss_acc(aug_history, 50)

Epoch 1/50

338/338 [==============================] - 10s 25ms/step - loss: 2.0050 - acc: 0.2520 - val_loss: 1.8688 - val_acc: 0.3330

Epoch 2/50

338/338 [==============================] - 8s 22ms/step - loss: 1.7203 - acc: 0.3275 - val_loss: 1.4900 - val_acc: 0.4489

Epoch 3/50

338/338 [==============================] - 8s 22ms/step - loss: 1.5846 - acc: 0.3841 - val_loss: 1.3484 - val_acc: 0.5104

Epoch 4/50

338/338 [==============================] - 8s 22ms/step - loss: 1.4909 - acc: 0.4243 - val_loss: 1.2776 - val_acc: 0.5472

Epoch 5/50

338/338 [==============================] - 8s 23ms/step - loss: 1.4285 - acc: 0.4520 - val_loss: 1.2313 - val_acc: 0.5520

Epoch 6/50

338/338 [==============================] - 8s 22ms/step - loss: 1.3788 - acc: 0.4869 - val_loss: 1.1707 - val_acc: 0.5928

Epoch 7/50

338/338 [==============================] - 8s 22ms/step - loss: 1.3405 - acc: 0.5030 - val_loss: 1.1931 - val_acc: 0.5694

Epoch 8/50

338/338 [==============================] - 9s 26ms/step - loss: 1.2990 - acc: 0.5194 - val_loss: 1.1020 - val_acc: 0.6211

Epoch 9/50

338/338 [==============================] - 7s 21ms/step - loss: 1.2582 - acc: 0.5375 - val_loss: 1.1154 - val_acc: 0.5952

Epoch 10/50

338/338 [==============================] - 7s 21ms/step - loss: 1.1886 - acc: 0.5728 - val_loss: 0.9671 - val_acc: 0.6657

Epoch 11/50

338/338 [==============================] - 8s 24ms/step - loss: 1.1416 - acc: 0.5902 - val_loss: 0.9358 - val_acc: 0.6891

Epoch 12/50

338/338 [==============================] - 7s 21ms/step - loss: 1.1184 - acc: 0.6000 - val_loss: 0.9359 - val_acc: 0.6839

Epoch 13/50

338/338 [==============================] - 8s 23ms/step - loss: 1.0765 - acc: 0.6220 - val_loss: 0.9020 - val_acc: 0.7122

Epoch 14/50

338/338 [==============================] - 9s 26ms/step - loss: 1.0315 - acc: 0.6388 - val_loss: 0.8631 - val_acc: 0.7096

Epoch 15/50

338/338 [==============================] - 11s 32ms/step - loss: 0.9980 - acc: 0.6526 - val_loss: 0.8005 - val_acc: 0.7456

Epoch 16/50

338/338 [==============================] - 10s 28ms/step - loss: 0.9758 - acc: 0.6616 - val_loss: 0.7990 - val_acc: 0.7193

Epoch 17/50

338/338 [==============================] - 7s 21ms/step - loss: 0.9281 - acc: 0.6750 - val_loss: 0.7770 - val_acc: 0.7254

Epoch 18/50

338/338 [==============================] - 7s 22ms/step - loss: 0.9081 - acc: 0.6857 - val_loss: 0.7868 - val_acc: 0.7341

Epoch 19/50

338/338 [==============================] - 8s 22ms/step - loss: 0.8985 - acc: 0.6876 - val_loss: 0.6961 - val_acc: 0.7698

Epoch 20/50

338/338 [==============================] - 7s 21ms/step - loss: 0.8895 - acc: 0.6900 - val_loss: 0.7630 - val_acc: 0.7343

Epoch 21/50

338/338 [==============================] - 7s 21ms/step - loss: 0.8346 - acc: 0.7145 - val_loss: 0.7026 - val_acc: 0.7709

Epoch 22/50

338/338 [==============================] - 8s 22ms/step - loss: 0.8327 - acc: 0.7125 - val_loss: 0.7313 - val_acc: 0.7559

Epoch 23/50

338/338 [==============================] - 8s 22ms/step - loss: 0.8134 - acc: 0.7173 - val_loss: 0.6786 - val_acc: 0.7611

Epoch 24/50

338/338 [==============================] - 8s 22ms/step - loss: 0.7909 - acc: 0.7284 - val_loss: 0.6865 - val_acc: 0.7783

Epoch 25/50

338/338 [==============================] - 8s 22ms/step - loss: 0.7819 - acc: 0.7289 - val_loss: 0.7220 - val_acc: 0.7563

Epoch 26/50

338/338 [==============================] - 8s 22ms/step - loss: 0.7573 - acc: 0.7400 - val_loss: 0.6317 - val_acc: 0.7981

Epoch 27/50

338/338 [==============================] - 8s 22ms/step - loss: 0.7484 - acc: 0.7383 - val_loss: 0.6221 - val_acc: 0.7998

Epoch 28/50

338/338 [==============================] - 8s 22ms/step - loss: 0.7403 - acc: 0.7447 - val_loss: 0.6267 - val_acc: 0.7893

Epoch 29/50

338/338 [==============================] - 8s 22ms/step - loss: 0.7202 - acc: 0.7538 - val_loss: 0.6056 - val_acc: 0.7893

Epoch 30/50

338/338 [==============================] - 10s 28ms/step - loss: 0.7184 - acc: 0.7525 - val_loss: 0.6322 - val_acc: 0.7776

Epoch 31/50

338/338 [==============================] - 13s 38ms/step - loss: 0.6712 - acc: 0.7697 - val_loss: 0.7160 - val_acc: 0.7567

Epoch 32/50

338/338 [==============================] - 9s 24ms/step - loss: 0.6979 - acc: 0.7617 - val_loss: 0.5759 - val_acc: 0.8072

Epoch 33/50

338/338 [==============================] - 10s 28ms/step - loss: 0.6706 - acc: 0.7750 - val_loss: 0.5674 - val_acc: 0.8196

Epoch 34/50

338/338 [==============================] - 11s 33ms/step - loss: 0.6645 - acc: 0.7768 - val_loss: 0.5473 - val_acc: 0.8228

Epoch 35/50

338/338 [==============================] - 11s 32ms/step - loss: 0.6460 - acc: 0.7784 - val_loss: 0.5252 - val_acc: 0.8309

Epoch 36/50

338/338 [==============================] - 10s 29ms/step - loss: 0.6304 - acc: 0.7876 - val_loss: 0.5998 - val_acc: 0.7952

Epoch 37/50

338/338 [==============================] - 8s 22ms/step - loss: 0.6121 - acc: 0.7908 - val_loss: 0.5514 - val_acc: 0.8074

Epoch 38/50

338/338 [==============================] - 9s 26ms/step - loss: 0.6222 - acc: 0.7892 - val_loss: 0.5303 - val_acc: 0.8283

Epoch 39/50

338/338 [==============================] - 9s 25ms/step - loss: 0.6136 - acc: 0.7904 - val_loss: 0.5125 - val_acc: 0.8285

Epoch 40/50

338/338 [==============================] - 9s 25ms/step - loss: 0.6132 - acc: 0.7946 - val_loss: 0.5761 - val_acc: 0.8137

Epoch 41/50

338/338 [==============================] - 12s 33ms/step - loss: 0.6095 - acc: 0.7934 - val_loss: 0.5661 - val_acc: 0.8196

Epoch 42/50

338/338 [==============================] - 11s 30ms/step - loss: 0.5724 - acc: 0.8086 - val_loss: 0.4875 - val_acc: 0.8370

Epoch 43/50

338/338 [==============================] - 10s 28ms/step - loss: 0.5744 - acc: 0.8003 - val_loss: 0.5718 - val_acc: 0.8180

Epoch 44/50

338/338 [==============================] - 11s 30ms/step - loss: 0.5516 - acc: 0.8141 - val_loss: 0.4982 - val_acc: 0.8335

Epoch 45/50

338/338 [==============================] - 8s 22ms/step - loss: 0.5528 - acc: 0.8103 - val_loss: 0.5443 - val_acc: 0.8202

Epoch 46/50

338/338 [==============================] - 8s 22ms/step - loss: 0.5633 - acc: 0.8106 - val_loss: 0.4697 - val_acc: 0.8474

Epoch 47/50

338/338 [==============================] - 8s 22ms/step - loss: 0.5442 - acc: 0.8159 - val_loss: 0.5053 - val_acc: 0.8352

Epoch 48/50

338/338 [==============================] - 8s 23ms/step - loss: 0.5510 - acc: 0.8102 - val_loss: 0.4989 - val_acc: 0.8369

Epoch 49/50

338/338 [==============================] - 8s 22ms/step - loss: 0.5414 - acc: 0.8186 - val_loss: 0.4814 - val_acc: 0.8419

Epoch 50/50

338/338 [==============================] - 10s 28ms/step - loss: 0.5247 - acc: 0.8206 - val_loss: 0.4754 - val_acc: 0.8435
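Comparing the two logs: the gap between training and validation accuracy at epoch 50 is a rough signal of overfitting. The sketch below uses the final-epoch numbers copied from the logs above. The helper is hypothetical.

```python
# Final-epoch (50/50) metrics copied from the two training logs above.
runs = {
    'baseline':     {'acc': 0.9612, 'val_acc': 0.8756, 'loss': 0.1163, 'val_loss': 0.5351},
    'augmentation': {'acc': 0.8206, 'val_acc': 0.8435, 'loss': 0.5247, 'val_loss': 0.4754},
}

def generalization_gap(m):
    """Train accuracy minus validation accuracy; a large positive value suggests overfitting."""
    return round(m['acc'] - m['val_acc'], 4)

for name, m in runs.items():
    print(name, generalization_gap(m))
# baseline 0.0856
# augmentation -0.0229
```

In the baseline run, validation loss bottomed out at 0.3936 in epoch 15 and then drifted up to 0.5351, while training loss kept falling. The augmented run ends close to its lowest validation loss (0.4697 at epoch 46). Its validation set also passes through the random augmentations, because `valid_aug` uses the same `preprocess_data`. As a result, the two runs' validation numbers are not measured on identical inputs.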

Transfer learning

# Transfer learning: take a model pre-trained on another dataset and replace only the classifier at the end
from keras.applications import ResNet50V2
from keras.utils import plot_model

pre_trained_base = ResNet50V2()  # full model, including its ImageNet classifier (include_top=True by default)
plot_model(pre_trained_base, show_shapes=True, show_layer_names=True)

pre_trained_base = ResNet50V2(include_top=False, input_shape=[64, 64, 3])  # drop the top classifier and accept 64x64 inputs

pre_trained_base.trainable = False
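With `trainable = False`, the ImageNet weights of the base are frozen, so only layers added on top of it would be trained. The shapes and parameter counts in the summary printed next follow the same formulas as the small CNN. The stdlib sketch below checks the stem and the first bottleneck block. It makes two assumptions, both read off the summary:

- The padding layers add 3 pixels per side before `conv1_conv` and 1 pixel per side before `pool1_pool` and the 3x3 convolutions, followed by unpadded convolutions.
- Convolutions followed by batch normalization have no bias, while the 1x1 output and shortcut convolutions do.

```python
def conv_out(size, kernel, stride):
    """Spatial output size of an unpadded ('valid') convolution or pooling window."""
    return (size - kernel) // stride + 1

def conv_params(kernel, in_ch, out_ch, bias=True):
    """Weights of a square Conv2D kernel, plus one bias per filter if the layer has biases."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)

# Stem on a 64x64 input: pad 3 -> 7x7 conv, stride 2 -> pad 1 -> 3x3 max pool, stride 2
padded = 64 + 2 * 3                        # conv1_pad
conv1 = conv_out(padded, 7, 2)             # conv1_conv
pool1 = conv_out(conv1 + 2 * 1, 3, 2)      # pool1_pad -> pool1_pool
print(padded, conv1, pool1)                # 70 32 16

# Stride-2 3x3 conv at the end of stage conv2 (conv2_block3_2_pad -> conv2_block3_2_conv)
print(conv_out(16 + 2, 3, 2))              # 8

print(conv_params(7, 3, 64))               # 9472   conv1_conv
print(conv_params(1, 64, 64, bias=False))  # 4096   conv2_block1_1_conv
print(conv_params(3, 64, 64, bias=False))  # 36864  conv2_block1_2_conv
print(conv_params(1, 64, 256))             # 16640  conv2_block1_0_conv and conv2_block1_3_conv
print(conv_params(1, 256, 512))            # 131584 conv3_block1_0_conv
print(4 * 64)                              # 256    conv2_block1_preact_bn
```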

plot_model(pre_trained_base, show_shapes=True, show_layer_names=True)
pre_trained_base.summary()

Model: "resnet50v2"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_3 (InputLayer) [(None, 64, 64, 3)] 0 []

conv1_pad (ZeroPadding2D) (None, 70, 70, 3) 0 ['input_3[0][0]']

conv1_conv (Conv2D) (None, 32, 32, 64) 9472 ['conv1_pad[0][0]']

pool1_pad (ZeroPadding2D) (None, 34, 34, 64) 0 ['conv1_conv[0][0]']

pool1_pool (MaxPooling2D) (None, 16, 16, 64) 0 ['pool1_pad[0][0]']

conv2_block1_preact_bn (BatchN (None, 16, 16, 64) 256 ['pool1_pool[0][0]']

ormalization)

conv2_block1_preact_relu (Acti (None, 16, 16, 64) 0 ['conv2_block1_preact_bn[0][0]']

vation)

conv2_block1_1_conv (Conv2D) (None, 16, 16, 64) 4096 ['conv2_block1_preact_relu[0][0]'

]

conv2_block1_1_bn (BatchNormal (None, 16, 16, 64) 256 ['conv2_block1_1_conv[0][0]']

ization)

conv2_block1_1_relu (Activatio (None, 16, 16, 64) 0 ['conv2_block1_1_bn[0][0]']

n)

conv2_block1_2_pad (ZeroPaddin (None, 18, 18, 64) 0 ['conv2_block1_1_relu[0][0]']

g2D)

conv2_block1_2_conv (Conv2D) (None, 16, 16, 64) 36864 ['conv2_block1_2_pad[0][0]']

conv2_block1_2_bn (BatchNormal (None, 16, 16, 64) 256 ['conv2_block1_2_conv[0][0]']

ization)

conv2_block1_2_relu (Activatio (None, 16, 16, 64) 0 ['conv2_block1_2_bn[0][0]']

n)

conv2_block1_0_conv (Conv2D) (None, 16, 16, 256) 16640 ['conv2_block1_preact_relu[0][0]'

]

conv2_block1_3_conv (Conv2D) (None, 16, 16, 256) 16640 ['conv2_block1_2_relu[0][0]']

conv2_block1_out (Add) (None, 16, 16, 256) 0 ['conv2_block1_0_conv[0][0]',

'conv2_block1_3_conv[0][0]']

conv2_block2_preact_bn (BatchN (None, 16, 16, 256) 1024 ['conv2_block1_out[0][0]']

ormalization)

conv2_block2_preact_relu (Acti (None, 16, 16, 256) 0 ['conv2_block2_preact_bn[0][0]']

vation)

conv2_block2_1_conv (Conv2D) (None, 16, 16, 64) 16384 ['conv2_block2_preact_relu[0][0]'

]

conv2_block2_1_bn (BatchNormal (None, 16, 16, 64) 256 ['conv2_block2_1_conv[0][0]']

ization)

conv2_block2_1_relu (Activatio (None, 16, 16, 64) 0 ['conv2_block2_1_bn[0][0]']

n)

conv2_block2_2_pad (ZeroPaddin (None, 18, 18, 64) 0 ['conv2_block2_1_relu[0][0]']

g2D)

conv2_block2_2_conv (Conv2D) (None, 16, 16, 64) 36864 ['conv2_block2_2_pad[0][0]']

conv2_block2_2_bn (BatchNormal (None, 16, 16, 64) 256 ['conv2_block2_2_conv[0][0]']

ization)

conv2_block2_2_relu (Activatio (None, 16, 16, 64) 0 ['conv2_block2_2_bn[0][0]']

n)

conv2_block2_3_conv (Conv2D) (None, 16, 16, 256) 16640 ['conv2_block2_2_relu[0][0]']

conv2_block2_out (Add) (None, 16, 16, 256) 0 ['conv2_block1_out[0][0]',

'conv2_block2_3_conv[0][0]']

conv2_block3_preact_bn (BatchN (None, 16, 16, 256) 1024 ['conv2_block2_out[0][0]']

ormalization)

conv2_block3_preact_relu (Acti (None, 16, 16, 256) 0 ['conv2_block3_preact_bn[0][0]']

vation)

conv2_block3_1_conv (Conv2D) (None, 16, 16, 64) 16384 ['conv2_block3_preact_relu[0][0]'

]

conv2_block3_1_bn (BatchNormal (None, 16, 16, 64) 256 ['conv2_block3_1_conv[0][0]']

ization)

conv2_block3_1_relu (Activatio (None, 16, 16, 64) 0 ['conv2_block3_1_bn[0][0]']

n)

conv2_block3_2_pad (ZeroPaddin (None, 18, 18, 64) 0 ['conv2_block3_1_relu[0][0]']

g2D)

conv2_block3_2_conv (Conv2D) (None, 8, 8, 64) 36864 ['conv2_block3_2_pad[0][0]']

conv2_block3_2_bn (BatchNormal (None, 8, 8, 64) 256 ['conv2_block3_2_conv[0][0]']

ization)

conv2_block3_2_relu (Activatio (None, 8, 8, 64) 0 ['conv2_block3_2_bn[0][0]']

n)

max_pooling2d_14 (MaxPooling2D (None, 8, 8, 256) 0 ['conv2_block2_out[0][0]']

)

conv2_block3_3_conv (Conv2D) (None, 8, 8, 256) 16640 ['conv2_block3_2_relu[0][0]']

conv2_block3_out (Add) (None, 8, 8, 256) 0 ['max_pooling2d_14[0][0]',

'conv2_block3_3_conv[0][0]']

conv3_block1_preact_bn (BatchN (None, 8, 8, 256) 1024 ['conv2_block3_out[0][0]']

ormalization)

conv3_block1_preact_relu (Acti (None, 8, 8, 256) 0 ['conv3_block1_preact_bn[0][0]']

vation)

conv3_block1_1_conv (Conv2D) (None, 8, 8, 128) 32768 ['conv3_block1_preact_relu[0][0]'

]

conv3_block1_1_bn (BatchNormal (None, 8, 8, 128) 512 ['conv3_block1_1_conv[0][0]']

ization)

conv3_block1_1_relu (Activatio (None, 8, 8, 128) 0 ['conv3_block1_1_bn[0][0]']

n)

conv3_block1_2_pad (ZeroPaddin (None, 10, 10, 128) 0 ['conv3_block1_1_relu[0][0]']

g2D)

conv3_block1_2_conv (Conv2D) (None, 8, 8, 128) 147456 ['conv3_block1_2_pad[0][0]']

conv3_block1_2_bn (BatchNormal (None, 8, 8, 128) 512 ['conv3_block1_2_conv[0][0]']

ization)

conv3_block1_2_relu (Activatio (None, 8, 8, 128) 0 ['conv3_block1_2_bn[0][0]']

n)

conv3_block1_0_conv (Conv2D) (None, 8, 8, 512) 131584 ['conv3_block1_preact_relu[0][0]'

]

conv3_block1_3_conv (Conv2D) (None, 8, 8, 512) 66048 ['conv3_block1_2_relu[0][0]']

conv3_block1_out (Add) (None, 8, 8, 512) 0 ['conv3_block1_0_conv[0][0]',

'conv3_block1_3_conv[0][0]']

conv3_block2_preact_bn (BatchN (None, 8, 8, 512) 2048 ['conv3_block1_out[0][0]']

ormalization)

conv3_block2_preact_relu (Acti (None, 8, 8, 512) 0 ['conv3_block2_preact_bn[0][0]']

vation)

conv3_block2_1_conv (Conv2D) (None, 8, 8, 128) 65536 ['conv3_block2_preact_relu[0][0]'

]

conv3_block2_1_bn (BatchNormal (None, 8, 8, 128) 512 ['conv3_block2_1_conv[0][0]']

ization)

conv3_block2_1_relu (Activatio (None, 8, 8, 128) 0 ['conv3_block2_1_bn[0][0]']

n)

conv3_block2_2_pad (ZeroPadding2D) (None, 10, 10, 128) 0 ['conv3_block2_1_relu[0][0]']

conv3_block2_2_conv (Conv2D) (None, 8, 8, 128) 147456 ['conv3_block2_2_pad[0][0]']

conv3_block2_2_bn (BatchNormalization) (None, 8, 8, 128) 512 ['conv3_block2_2_conv[0][0]']

conv3_block2_2_relu (Activation) (None, 8, 8, 128) 0 ['conv3_block2_2_bn[0][0]']

conv3_block2_3_conv (Conv2D) (None, 8, 8, 512) 66048 ['conv3_block2_2_relu[0][0]']

conv3_block2_out (Add) (None, 8, 8, 512) 0 ['conv3_block1_out[0][0]', 'conv3_block2_3_conv[0][0]']

conv3_block3_preact_bn (BatchNormalization) (None, 8, 8, 512) 2048 ['conv3_block2_out[0][0]']

conv3_block3_preact_relu (Activation) (None, 8, 8, 512) 0 ['conv3_block3_preact_bn[0][0]']

conv3_block3_1_conv (Conv2D) (None, 8, 8, 128) 65536 ['conv3_block3_preact_relu[0][0]']

conv3_block3_1_bn (BatchNormalization) (None, 8, 8, 128) 512 ['conv3_block3_1_conv[0][0]']

conv3_block3_1_relu (Activation) (None, 8, 8, 128) 0 ['conv3_block3_1_bn[0][0]']

conv3_block3_2_pad (ZeroPadding2D) (None, 10, 10, 128) 0 ['conv3_block3_1_relu[0][0]']

conv3_block3_2_conv (Conv2D) (None, 8, 8, 128) 147456 ['conv3_block3_2_pad[0][0]']

conv3_block3_2_bn (BatchNormalization) (None, 8, 8, 128) 512 ['conv3_block3_2_conv[0][0]']

conv3_block3_2_relu (Activation) (None, 8, 8, 128) 0 ['conv3_block3_2_bn[0][0]']

conv3_block3_3_conv (Conv2D) (None, 8, 8, 512) 66048 ['conv3_block3_2_relu[0][0]']

conv3_block3_out (Add) (None, 8, 8, 512) 0 ['conv3_block2_out[0][0]', 'conv3_block3_3_conv[0][0]']

conv3_block4_preact_bn (BatchNormalization) (None, 8, 8, 512) 2048 ['conv3_block3_out[0][0]']

conv3_block4_preact_relu (Activation) (None, 8, 8, 512) 0 ['conv3_block4_preact_bn[0][0]']

conv3_block4_1_conv (Conv2D) (None, 8, 8, 128) 65536 ['conv3_block4_preact_relu[0][0]']

conv3_block4_1_bn (BatchNormalization) (None, 8, 8, 128) 512 ['conv3_block4_1_conv[0][0]']

conv3_block4_1_relu (Activation) (None, 8, 8, 128) 0 ['conv3_block4_1_bn[0][0]']

conv3_block4_2_pad (ZeroPadding2D) (None, 10, 10, 128) 0 ['conv3_block4_1_relu[0][0]']

conv3_block4_2_conv (Conv2D) (None, 4, 4, 128) 147456 ['conv3_block4_2_pad[0][0]']

conv3_block4_2_bn (BatchNormalization) (None, 4, 4, 128) 512 ['conv3_block4_2_conv[0][0]']

conv3_block4_2_relu (Activation) (None, 4, 4, 128) 0 ['conv3_block4_2_bn[0][0]']

max_pooling2d_15 (MaxPooling2D) (None, 4, 4, 512) 0 ['conv3_block3_out[0][0]']

conv3_block4_3_conv (Conv2D) (None, 4, 4, 512) 66048 ['conv3_block4_2_relu[0][0]']

conv3_block4_out (Add) (None, 4, 4, 512) 0 ['max_pooling2d_15[0][0]', 'conv3_block4_3_conv[0][0]']

conv4_block1_preact_bn (BatchNormalization) (None, 4, 4, 512) 2048 ['conv3_block4_out[0][0]']

conv4_block1_preact_relu (Activation) (None, 4, 4, 512) 0 ['conv4_block1_preact_bn[0][0]']

conv4_block1_1_conv (Conv2D) (None, 4, 4, 256) 131072 ['conv4_block1_preact_relu[0][0]']

conv4_block1_1_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block1_1_conv[0][0]']

conv4_block1_1_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block1_1_bn[0][0]']

conv4_block1_2_pad (ZeroPadding2D) (None, 6, 6, 256) 0 ['conv4_block1_1_relu[0][0]']

conv4_block1_2_conv (Conv2D) (None, 4, 4, 256) 589824 ['conv4_block1_2_pad[0][0]']

conv4_block1_2_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block1_2_conv[0][0]']

conv4_block1_2_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block1_2_bn[0][0]']

conv4_block1_0_conv (Conv2D) (None, 4, 4, 1024) 525312 ['conv4_block1_preact_relu[0][0]']

conv4_block1_3_conv (Conv2D) (None, 4, 4, 1024) 263168 ['conv4_block1_2_relu[0][0]']

conv4_block1_out (Add) (None, 4, 4, 1024) 0 ['conv4_block1_0_conv[0][0]', 'conv4_block1_3_conv[0][0]']

conv4_block2_preact_bn (BatchNormalization) (None, 4, 4, 1024) 4096 ['conv4_block1_out[0][0]']

conv4_block2_preact_relu (Activation) (None, 4, 4, 1024) 0 ['conv4_block2_preact_bn[0][0]']

conv4_block2_1_conv (Conv2D) (None, 4, 4, 256) 262144 ['conv4_block2_preact_relu[0][0]']

conv4_block2_1_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block2_1_conv[0][0]']

conv4_block2_1_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block2_1_bn[0][0]']

conv4_block2_2_pad (ZeroPadding2D) (None, 6, 6, 256) 0 ['conv4_block2_1_relu[0][0]']

conv4_block2_2_conv (Conv2D) (None, 4, 4, 256) 589824 ['conv4_block2_2_pad[0][0]']

conv4_block2_2_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block2_2_conv[0][0]']

conv4_block2_2_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block2_2_bn[0][0]']

conv4_block2_3_conv (Conv2D) (None, 4, 4, 1024) 263168 ['conv4_block2_2_relu[0][0]']

conv4_block2_out (Add) (None, 4, 4, 1024) 0 ['conv4_block1_out[0][0]', 'conv4_block2_3_conv[0][0]']

conv4_block3_preact_bn (BatchNormalization) (None, 4, 4, 1024) 4096 ['conv4_block2_out[0][0]']

conv4_block3_preact_relu (Activation) (None, 4, 4, 1024) 0 ['conv4_block3_preact_bn[0][0]']

conv4_block3_1_conv (Conv2D) (None, 4, 4, 256) 262144 ['conv4_block3_preact_relu[0][0]']

conv4_block3_1_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block3_1_conv[0][0]']

conv4_block3_1_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block3_1_bn[0][0]']

conv4_block3_2_pad (ZeroPadding2D) (None, 6, 6, 256) 0 ['conv4_block3_1_relu[0][0]']

conv4_block3_2_conv (Conv2D) (None, 4, 4, 256) 589824 ['conv4_block3_2_pad[0][0]']

conv4_block3_2_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block3_2_conv[0][0]']

conv4_block3_2_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block3_2_bn[0][0]']

conv4_block3_3_conv (Conv2D) (None, 4, 4, 1024) 263168 ['conv4_block3_2_relu[0][0]']

conv4_block3_out (Add) (None, 4, 4, 1024) 0 ['conv4_block2_out[0][0]', 'conv4_block3_3_conv[0][0]']

conv4_block4_preact_bn (BatchNormalization) (None, 4, 4, 1024) 4096 ['conv4_block3_out[0][0]']

conv4_block4_preact_relu (Activation) (None, 4, 4, 1024) 0 ['conv4_block4_preact_bn[0][0]']

conv4_block4_1_conv (Conv2D) (None, 4, 4, 256) 262144 ['conv4_block4_preact_relu[0][0]']

conv4_block4_1_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block4_1_conv[0][0]']

conv4_block4_1_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block4_1_bn[0][0]']

conv4_block4_2_pad (ZeroPadding2D) (None, 6, 6, 256) 0 ['conv4_block4_1_relu[0][0]']

conv4_block4_2_conv (Conv2D) (None, 4, 4, 256) 589824 ['conv4_block4_2_pad[0][0]']

conv4_block4_2_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block4_2_conv[0][0]']

conv4_block4_2_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block4_2_bn[0][0]']

conv4_block4_3_conv (Conv2D) (None, 4, 4, 1024) 263168 ['conv4_block4_2_relu[0][0]']

conv4_block4_out (Add) (None, 4, 4, 1024) 0 ['conv4_block3_out[0][0]', 'conv4_block4_3_conv[0][0]']

conv4_block5_preact_bn (BatchNormalization) (None, 4, 4, 1024) 4096 ['conv4_block4_out[0][0]']

conv4_block5_preact_relu (Activation) (None, 4, 4, 1024) 0 ['conv4_block5_preact_bn[0][0]']

conv4_block5_1_conv (Conv2D) (None, 4, 4, 256) 262144 ['conv4_block5_preact_relu[0][0]']

conv4_block5_1_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block5_1_conv[0][0]']

conv4_block5_1_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block5_1_bn[0][0]']

conv4_block5_2_pad (ZeroPadding2D) (None, 6, 6, 256) 0 ['conv4_block5_1_relu[0][0]']

conv4_block5_2_conv (Conv2D) (None, 4, 4, 256) 589824 ['conv4_block5_2_pad[0][0]']

conv4_block5_2_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block5_2_conv[0][0]']

conv4_block5_2_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block5_2_bn[0][0]']

conv4_block5_3_conv (Conv2D) (None, 4, 4, 1024) 263168 ['conv4_block5_2_relu[0][0]']

conv4_block5_out (Add) (None, 4, 4, 1024) 0 ['conv4_block4_out[0][0]', 'conv4_block5_3_conv[0][0]']

conv4_block6_preact_bn (BatchNormalization) (None, 4, 4, 1024) 4096 ['conv4_block5_out[0][0]']

conv4_block6_preact_relu (Activation) (None, 4, 4, 1024) 0 ['conv4_block6_preact_bn[0][0]']

conv4_block6_1_conv (Conv2D) (None, 4, 4, 256) 262144 ['conv4_block6_preact_relu[0][0]']

conv4_block6_1_bn (BatchNormalization) (None, 4, 4, 256) 1024 ['conv4_block6_1_conv[0][0]']

conv4_block6_1_relu (Activation) (None, 4, 4, 256) 0 ['conv4_block6_1_bn[0][0]']

conv4_block6_2_pad (ZeroPadding2D) (None, 6, 6, 256) 0 ['conv4_block6_1_relu[0][0]']

conv4_block6_2_conv (Conv2D) (None, 2, 2, 256) 589824 ['conv4_block6_2_pad[0][0]']

conv4_block6_2_bn (BatchNormalization) (None, 2, 2, 256) 1024 ['conv4_block6_2_conv[0][0]']

conv4_block6_2_relu (Activation) (None, 2, 2, 256) 0 ['conv4_block6_2_bn[0][0]']

max_pooling2d_16 (MaxPooling2D) (None, 2, 2, 1024) 0 ['conv4_block5_out[0][0]']

conv4_block6_3_conv (Conv2D) (None, 2, 2, 1024) 263168 ['conv4_block6_2_relu[0][0]']

conv4_block6_out (Add) (None, 2, 2, 1024) 0 ['max_pooling2d_16[0][0]', 'conv4_block6_3_conv[0][0]']

conv5_block1_preact_bn (BatchNormalization) (None, 2, 2, 1024) 4096 ['conv4_block6_out[0][0]']

conv5_block1_preact_relu (Activation) (None, 2, 2, 1024) 0 ['conv5_block1_preact_bn[0][0]']

conv5_block1_1_conv (Conv2D) (None, 2, 2, 512) 524288 ['conv5_block1_preact_relu[0][0]']

conv5_block1_1_bn (BatchNormalization) (None, 2, 2, 512) 2048 ['conv5_block1_1_conv[0][0]']

conv5_block1_1_relu (Activation) (None, 2, 2, 512) 0 ['conv5_block1_1_bn[0][0]']

conv5_block1_2_pad (ZeroPadding2D) (None, 4, 4, 512) 0 ['conv5_block1_1_relu[0][0]']

conv5_block1_2_conv (Conv2D) (None, 2, 2, 512) 2359296 ['conv5_block1_2_pad[0][0]']

conv5_block1_2_bn (BatchNormalization) (None, 2, 2, 512) 2048 ['conv5_block1_2_conv[0][0]']

conv5_block1_2_relu (Activation) (None, 2, 2, 512) 0 ['conv5_block1_2_bn[0][0]']

conv5_block1_0_conv (Conv2D) (None, 2, 2, 2048) 2099200 ['conv5_block1_preact_relu[0][0]']

conv5_block1_3_conv (Conv2D) (None, 2, 2, 2048) 1050624 ['conv5_block1_2_relu[0][0]']

conv5_block1_out (Add) (None, 2, 2, 2048) 0 ['conv5_block1_0_conv[0][0]', 'conv5_block1_3_conv[0][0]']

conv5_block2_preact_bn (BatchNormalization) (None, 2, 2, 2048) 8192 ['conv5_block1_out[0][0]']

conv5_block2_preact_relu (Activation) (None, 2, 2, 2048) 0 ['conv5_block2_preact_bn[0][0]']

conv5_block2_1_conv (Conv2D) (None, 2, 2, 512) 1048576 ['conv5_block2_preact_relu[0][0]']

conv5_block2_1_bn (BatchNormalization) (None, 2, 2, 512) 2048 ['conv5_block2_1_conv[0][0]']

conv5_block2_1_relu (Activation) (None, 2, 2, 512) 0 ['conv5_block2_1_bn[0][0]']

conv5_block2_2_pad (ZeroPadding2D) (None, 4, 4, 512) 0 ['conv5_block2_1_relu[0][0]']

conv5_block2_2_conv (Conv2D) (None, 2, 2, 512) 2359296 ['conv5_block2_2_pad[0][0]']

conv5_block2_2_bn (BatchNormalization) (None, 2, 2, 512) 2048 ['conv5_block2_2_conv[0][0]']

conv5_block2_2_relu (Activation) (None, 2, 2, 512) 0 ['conv5_block2_2_bn[0][0]']

conv5_block2_3_conv (Conv2D) (None, 2, 2, 2048) 1050624 ['conv5_block2_2_relu[0][0]']

conv5_block2_out (Add) (None, 2, 2, 2048) 0 ['conv5_block1_out[0][0]', 'conv5_block2_3_conv[0][0]']

conv5_block3_preact_bn (BatchNormalization) (None, 2, 2, 2048) 8192 ['conv5_block2_out[0][0]']

conv5_block3_preact_relu (Activation) (None, 2, 2, 2048) 0 ['conv5_block3_preact_bn[0][0]']

conv5_block3_1_conv (Conv2D) (None, 2, 2, 512) 1048576 ['conv5_block3_preact_relu[0][0]']

conv5_block3_1_bn (BatchNormalization) (None, 2, 2, 512) 2048 ['conv5_block3_1_conv[0][0]']

conv5_block3_1_relu (Activation) (None, 2, 2, 512) 0 ['conv5_block3_1_bn[0][0]']

conv5_block3_2_pad (ZeroPadding2D) (None, 4, 4, 512) 0 ['conv5_block3_1_relu[0][0]']

conv5_block3_2_conv (Conv2D) (None, 2, 2, 512) 2359296 ['conv5_block3_2_pad[0][0]']

conv5_block3_2_bn (BatchNormalization) (None, 2, 2, 512) 2048 ['conv5_block3_2_conv[0][0]']

conv5_block3_2_relu (Activation) (None, 2, 2, 512) 0 ['conv5_block3_2_bn[0][0]']

conv5_block3_3_conv (Conv2D) (None, 2, 2, 2048) 1050624 ['conv5_block3_2_relu[0][0]']

conv5_block3_out (Add) (None, 2, 2, 2048) 0 ['conv5_block2_out[0][0]', 'conv5_block3_3_conv[0][0]']

post_bn (BatchNormalization) (None, 2, 2, 2048) 8192 ['conv5_block3_out[0][0]']

post_relu (Activation) (None, 2, 2, 2048) 0 ['post_bn[0][0]']

==================================================================================================

Total params: 23,564,800

Trainable params: 0

Non-trainable params: 23,564,800

__________________________________________________________________________________________________

def build_transfer_model():

    # Frozen pre-trained ResNet50V2 base followed by a new classifier head

    model = Sequential([

        pre_trained_base,

        Flatten(),

        Dense(128, activation='relu'),

        Dropout(0.3),

        Dense(64, activation='relu'),

        Dropout(0.3),

        Dense(num_classes, activation='softmax'),

    ])

    return model

t_model = build_transfer_model()

t_model.summary()

Model: "sequential_4"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

resnet50v2 (Functional) (None, 2, 2, 2048) 23564800

flatten_4 (Flatten) (None, 8192) 0

dense_12 (Dense) (None, 128) 1048704

dropout_8 (Dropout) (None, 128) 0

dense_13 (Dense) (None, 64) 8256

dropout_9 (Dropout) (None, 64) 0

dense_14 (Dense) (None, 10) 650

=================================================================

Total params: 24,622,410

Trainable params: 1,057,610

Non-trainable params: 23,564,800

_________________________________________________________________

t_model.compile(optimizer='adam',loss='sparse_categorical_crossentropy',metrics=['acc'])

t_history = t_model.fit(train_aug,validation_data=valid_aug,epochs=50)

plot_loss_acc(t_history,50)

Epoch 1/50

338/338 [==============================] - 18s 37ms/step - loss: 1.0830 - acc: 0.6519 - val_loss: 0.6652 - val_acc: 0.7800

Epoch 2/50

338/338 [==============================] - 11s 32ms/step - loss: 0.7906 - acc: 0.7464 - val_loss: 0.5961 - val_acc: 0.8013

Epoch 3/50

338/338 [==============================] - 11s 32ms/step - loss: 0.7046 - acc: 0.7725 - val_loss: 0.5570 - val_acc: 0.8156

Epoch 4/50

338/338 [==============================] - 11s 32ms/step - loss: 0.6683 - acc: 0.7877 - val_loss: 0.5442 - val_acc: 0.8254

Epoch 5/50

338/338 [==============================] - 11s 33ms/step - loss: 0.6393 - acc: 0.7961 - val_loss: 0.5160 - val_acc: 0.8296

Epoch 6/50

338/338 [==============================] - 13s 39ms/step - loss: 0.6144 - acc: 0.8007 - val_loss: 0.5055 - val_acc: 0.8289

Epoch 7/50

338/338 [==============================] - 13s 38ms/step - loss: 0.5868 - acc: 0.8089 - val_loss: 0.4914 - val_acc: 0.8359

Epoch 8/50

338/338 [==============================] - 13s 36ms/step - loss: 0.5722 - acc: 0.8154 - val_loss: 0.4909 - val_acc: 0.8300

Epoch 9/50

338/338 [==============================] - 12s 35ms/step - loss: 0.5700 - acc: 0.8141 - val_loss: 0.4813 - val_acc: 0.8417

Epoch 10/50

338/338 [==============================] - 13s 37ms/step - loss: 0.5451 - acc: 0.8164 - val_loss: 0.4786 - val_acc: 0.8387

Epoch 11/50

338/338 [==============================] - 13s 38ms/step - loss: 0.5397 - acc: 0.8227 - val_loss: 0.4631 - val_acc: 0.8431

Epoch 12/50

338/338 [==============================] - 17s 51ms/step - loss: 0.5224 - acc: 0.8263 - val_loss: 0.4659 - val_acc: 0.8428

Epoch 13/50

338/338 [==============================] - 13s 36ms/step - loss: 0.5079 - acc: 0.8329 - val_loss: 0.4655 - val_acc: 0.8350

Epoch 14/50

338/338 [==============================] - 14s 40ms/step - loss: 0.5017 - acc: 0.8344 - val_loss: 0.4716 - val_acc: 0.8437

Epoch 15/50

338/338 [==============================] - 13s 38ms/step - loss: 0.5103 - acc: 0.8281 - val_loss: 0.4691 - val_acc: 0.8387

Epoch 16/50

338/338 [==============================] - 11s 33ms/step - loss: 0.5059 - acc: 0.8332 - val_loss: 0.4558 - val_acc: 0.8465

Epoch 17/50

338/338 [==============================] - 11s 32ms/step - loss: 0.5107 - acc: 0.8340 - val_loss: 0.4637 - val_acc: 0.8443

Epoch 18/50

338/338 [==============================] - 11s 32ms/step - loss: 0.5019 - acc: 0.8334 - val_loss: 0.4543 - val_acc: 0.8463

Epoch 19/50

338/338 [==============================] - 12s 34ms/step - loss: 0.4843 - acc: 0.8402 - val_loss: 0.4449 - val_acc: 0.8476

Epoch 20/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4849 - acc: 0.8390 - val_loss: 0.4537 - val_acc: 0.8456

Epoch 21/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4782 - acc: 0.8394 - val_loss: 0.4525 - val_acc: 0.8463

Epoch 22/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4721 - acc: 0.8435 - val_loss: 0.4467 - val_acc: 0.8519

Epoch 23/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4838 - acc: 0.8403 - val_loss: 0.4522 - val_acc: 0.8420

Epoch 24/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4699 - acc: 0.8444 - val_loss: 0.4390 - val_acc: 0.8546

Epoch 25/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4597 - acc: 0.8469 - val_loss: 0.4378 - val_acc: 0.8554

Epoch 26/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4568 - acc: 0.8444 - val_loss: 0.4345 - val_acc: 0.8533

Epoch 27/50

338/338 [==============================] - 12s 36ms/step - loss: 0.4528 - acc: 0.8444 - val_loss: 0.4342 - val_acc: 0.8550

Epoch 28/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4559 - acc: 0.8489 - val_loss: 0.4406 - val_acc: 0.8496

Epoch 29/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4485 - acc: 0.8482 - val_loss: 0.4414 - val_acc: 0.8507

Epoch 30/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4500 - acc: 0.8511 - val_loss: 0.4349 - val_acc: 0.8511

Epoch 31/50

338/338 [==============================] - 11s 33ms/step - loss: 0.4575 - acc: 0.8486 - val_loss: 0.4349 - val_acc: 0.8530

Epoch 32/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4483 - acc: 0.8526 - val_loss: 0.4333 - val_acc: 0.8541

Epoch 33/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4413 - acc: 0.8502 - val_loss: 0.4358 - val_acc: 0.8502

Epoch 34/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4302 - acc: 0.8578 - val_loss: 0.4364 - val_acc: 0.8524

Epoch 35/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4375 - acc: 0.8542 - val_loss: 0.4518 - val_acc: 0.8480

Epoch 36/50

338/338 [==============================] - 12s 34ms/step - loss: 0.4288 - acc: 0.8578 - val_loss: 0.4326 - val_acc: 0.8554

Epoch 37/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4328 - acc: 0.8568 - val_loss: 0.4304 - val_acc: 0.8557

Epoch 38/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4290 - acc: 0.8581 - val_loss: 0.4297 - val_acc: 0.8561

Epoch 39/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4268 - acc: 0.8568 - val_loss: 0.4274 - val_acc: 0.8539

Epoch 40/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4198 - acc: 0.8588 - val_loss: 0.4313 - val_acc: 0.8574

Epoch 41/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4153 - acc: 0.8619 - val_loss: 0.4349 - val_acc: 0.8572

Epoch 42/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4214 - acc: 0.8593 - val_loss: 0.4272 - val_acc: 0.8541

Epoch 43/50

338/338 [==============================] - 11s 31ms/step - loss: 0.4247 - acc: 0.8575 - val_loss: 0.4363 - val_acc: 0.8504

Epoch 44/50

338/338 [==============================] - 12s 34ms/step - loss: 0.4155 - acc: 0.8597 - val_loss: 0.4293 - val_acc: 0.8569

Epoch 45/50

338/338 [==============================] - 11s 33ms/step - loss: 0.4077 - acc: 0.8594 - val_loss: 0.4267 - val_acc: 0.8580

Epoch 46/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4077 - acc: 0.8631 - val_loss: 0.4384 - val_acc: 0.8550

Epoch 47/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4200 - acc: 0.8602 - val_loss: 0.4211 - val_acc: 0.8559

Epoch 48/50

338/338 [==============================] - 11s 32ms/step - loss: 0.4036 - acc: 0.8616 - val_loss: 0.4298 - val_acc: 0.8576

Epoch 49/50

338/338 [==============================] - 12s 35ms/step - loss: 0.3995 - acc: 0.8649 - val_loss: 0.4325 - val_acc: 0.8569

Epoch 50/50

338/338 [==============================] - 11s 32ms/step - loss: 0.3978 - acc: 0.8651 - val_loss: 0.4401 - val_acc: 0.8543
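The parameter counts in the two summaries above can be checked by hand. A Conv2D or Dense layer has (inputs × outputs) weights plus one bias per output when a bias is used; the ResNet50V2 convolutions that feed straight into a BatchNormalization show no bias term (e.g. 65536 = 1×1×512×128 exactly). The plain-Python sketch below reproduces several numbers from the printouts, including the 1,057,610 trainable parameters of the new head; the helper names are hypothetical, and the base total is simply copied from the summary.

```python
def conv2d_params(kernel, c_in, c_out, use_bias=True):
    """Weights of a Conv2D layer: kernel*kernel*c_in*c_out (+ c_out biases)."""
    return kernel * kernel * c_in * c_out + (c_out if use_bias else 0)


def dense_params(n_in, n_out):
    """Weights of a Dense layer: n_in*n_out plus n_out biases."""
    return n_in * n_out + n_out


def batchnorm_params(channels):
    """gamma, beta, moving_mean, moving_variance: 4 values per channel."""
    return 4 * channels


# A few rows from the ResNet50V2 summary
resnet_rows = {
    "conv3_block2_2_conv": conv2d_params(3, 128, 128, use_bias=False),  # 147456
    "conv3_block3_1_conv": conv2d_params(1, 512, 128, use_bias=False),  # 65536
    "conv3_block2_3_conv": conv2d_params(1, 128, 512),                  # 66048
    "conv4_block1_0_conv": conv2d_params(1, 512, 1024),                 # 525312
    "conv3_block3_preact_bn": batchnorm_params(512),                    # 2048
}

# Classifier head on top of the frozen base: output (None, 2, 2, 2048) -> Flatten
flat = 2 * 2 * 2048  # 8192, matches flatten_4
head = [dense_params(flat, 128), dense_params(128, 64), dense_params(64, 10)]
trainable = sum(head)          # only the head is trained
frozen = 23_564_800            # "Non-trainable params" reported for the frozen base
total = trainable + frozen

print(resnet_rows)
print(head, trainable, total)  # [1048704, 8256, 650] 1057610 24622410
```

Setting `pre_trained_base.trainable = False` is what moves all 23,564,800 base weights into the non-trainable column, so each epoch only updates about 4% of the model.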

# Predict with the trained model

pred = t_model.predict(image.numpy().reshape(-1,64,64,3)) # image.numpy() is a single 3-D sample (64, 64, 3); reshape it to 4-D (1, 64, 64, 3) because the model expects a batch
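Because `predict` always works on a batch, a single (64, 64, 3) image needs a leading batch axis. The NumPy sketch below uses a dummy zero array in place of `image.numpy()` and made-up probabilities in place of real model output; it shows that `reshape(-1, 64, 64, 3)` and `np.expand_dims` give the same result, and how `np.argmax` picks the class index that `int2str` then turns into a name.

```python
import numpy as np

# Dummy stand-in for image.numpy(): one 64x64 RGB image
img = np.zeros((64, 64, 3), dtype=np.uint8)

batch_a = img.reshape(-1, 64, 64, 3)   # the approach used in the post
batch_b = np.expand_dims(img, axis=0)  # equivalent: insert a batch axis at position 0
print(batch_a.shape, batch_b.shape)    # (1, 64, 64, 3) (1, 64, 64, 3)

# Made-up softmax output for a batch of one image and 10 classes (NOT real model output)
pred = np.array([[0.70, 0.05, 0.05, 0.02, 0.03, 0.05, 0.04, 0.02, 0.02, 0.02]])
class_index = int(np.argmax(pred))     # argmax over the flattened array -> 0
per_row = np.argmax(pred, axis=1)      # one index per image; safer for batches > 1
print(class_index, per_row)
```

For a batch of several images, `np.argmax(pred)` without `axis` would return a single index into the flattened array, so `axis=1` is the form to use there.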

np.argmax(pred)

1/1 [==============================] - 0s 44ms/step

0

print(info.features['label'].int2str(np.argmax(pred)))

AnnualCrop

# Loading training images with ImageDataGenerator

Unzipping the archive

import tensorflow as tf

from keras.preprocessing.image import ImageDataGenerator

import numpy as np

import matplotlib.pyplot as plt

import zipfile # for extracting the zip archive

from google.colab import drive

drive.mount("/content/drive")

Mounted at /content/drive

path = '/content/drive/MyDrive/Colab Notebooks/sesac_deeplerning/cats_and_dogs.zip.zip의 사본'

zip_ref = zipfile.ZipFile(path,'r')

zip_ref.extractall('dataset/')

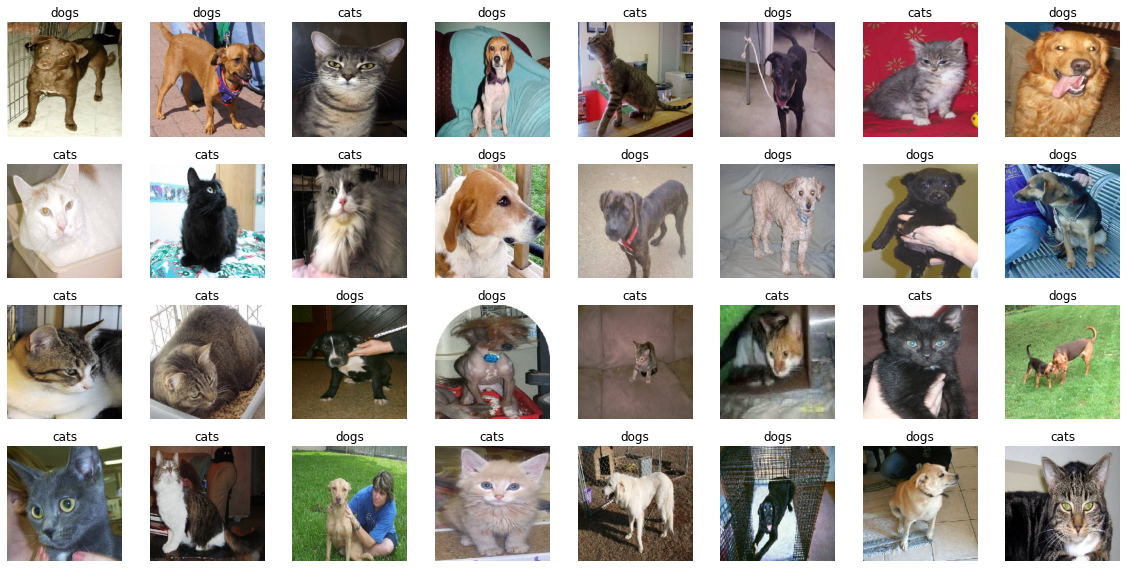

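zip_ref.close()

# Create the ImageDataGenerator

# No label values are supplied: the class subfolders under train (cats, dogs) are used as labels automatically.

# So the images must be sorted into one folder per class you want to distinguish.

image_gen = ImageDataGenerator(rescale=(1/255.)) # scale pixel values to the 0-1 range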

train_dir = '/content/dataset/cats_and_dogs_filtered/train'

valid_dir = '/content/dataset/cats_and_dogs_filtered/validation'

train_gen = image_gen.flow_from_directory(train_dir,

target_size=(224,224),

batch_size=32,

classes=['cats','dogs'],

class_mode='binary',

seed=2020)

valid_gen = image_gen.flow_from_directory(valid_dir,

target_size=(224,224),

batch_size=32,

classes=['cats','dogs'],

class_mode='binary',

seed=2020)

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

class_labels=['cats','dogs']

batch = next(train_gen)

# len(batch) is 2: (images, labels)

images,labels = batch[0],batch[1]

plt.figure(figsize=(16,8))

for i in range(32):

ax = plt.subplot(4,8,i+1)

plt.imshow(images[i])

plt.title(class_labels[labels[i].astype(int)])

plt.axis('off') # hide the axis ticks

plt.tight_layout()

plt.show()
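`flow_from_directory` takes its labels from the subfolder names: with `classes=['cats','dogs']` and `class_mode='binary'`, cats becomes 0 and dogs 1 (the generator exposes this mapping as `train_gen.class_indices`). In binary mode the labels arrive as floats, which is why the loop above uses `labels[i].astype(int)` before indexing `class_labels`. The pure-Python sketch below imitates only that folder-to-label step on a throwaway directory tree; `list_labeled_files` is a hypothetical helper, not the Keras implementation.

```python
import tempfile
from pathlib import Path


def list_labeled_files(root, classes):
    """Hypothetical helper: pair every file in root/<class_name>/ with that class's index."""
    class_indices = {name: i for i, name in enumerate(classes)}
    samples = []
    for name in classes:
        for path in sorted((Path(root) / name).iterdir()):
            if path.is_file():
                samples.append((path.name, class_indices[name]))
    return class_indices, samples


# Build a tiny fake train/ tree with empty files: cats/ and dogs/
with tempfile.TemporaryDirectory() as root:
    for name, files in {"cats": ["cat.0.jpg", "cat.1.jpg"], "dogs": ["dog.0.jpg"]}.items():
        (Path(root) / name).mkdir()
        for f in files:
            (Path(root) / name / f).touch()
    class_indices, samples = list_labeled_files(root, ["cats", "dogs"])

print(class_indices)  # {'cats': 0, 'dogs': 1}
print(samples)        # [('cat.0.jpg', 0), ('cat.1.jpg', 0), ('dog.0.jpg', 1)]
```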

images.shape

(32, 224, 224, 3)

# Function that builds and returns the model

from keras import Sequential

from keras.layers import Dense,Dropout,Flatten,Conv2D,MaxPooling2D,BatchNormalization

from keras.optimizers import Adam

from keras.losses import BinaryCrossentropy

def build_model():

model = Sequential([

BatchNormalization(),

Conv2D(32,(3,3),padding='same',activation='relu'),

MaxPooling2D((2,2)),

BatchNormalization(),

Conv2D(64,(3,3),padding='same',activation='relu'),

MaxPooling2D((2,2)),

BatchNormalization(),

Conv2D(128,(3,3),padding='same',activation='relu'),

MaxPooling2D((2,2)),

# With transfer learning, the layers above are the part replaced by a pre-trained base

Flatten(),

Dense(256,activation='relu'),

Dropout(0.3),

Dense(1,activation='sigmoid') # binary classification: 1 output unit with sigmoid (multi-class would use softmax)

])

return model
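With `padding='same'` each Conv2D keeps the spatial size and each `MaxPooling2D((2,2))` halves it, so for the 224×224×3 input produced by the generators, Flatten receives 28×28×128 = 100,352 values and the first Dense layer alone holds about 25.7 million weights. The plain-Python walk-through below is a hand calculation under that input-size assumption, counting parameters the way `model.summary()` does; it is not Keras output.

```python
def build_model_shapes(h=224, w=224, c=3):
    """Track the output shape and parameter count of build_model() by hand."""
    params = {}
    for i, filters in enumerate([32, 64, 128]):    # Conv2D filters in build_model()
        params[f"bn_{i}"] = 4 * c                   # BatchNormalization: 4 values per input channel
        params[f"conv_{i}"] = 3 * 3 * c * filters + filters  # 3x3 kernel weights + biases
        c = filters                                 # padding='same' keeps h and w
        h, w = h // 2, w // 2                       # MaxPooling2D((2,2)) halves h and w
    flat = h * w * c                                # size of the Flatten output
    params["dense_256"] = flat * 256 + 256
    params["dense_1"] = 256 * 1 + 1
    return (h, w, c), flat, params


shape, flat, params = build_model_shapes()
print(shape, flat)           # (28, 28, 128) 100352
print(params["dense_256"])   # 25690368
print(sum(params.values()))  # 25784269
```

Almost all of the model's weights sit in that one Dense layer, which plausibly contributes to the from-scratch CNN overfitting the 2,000 training images.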

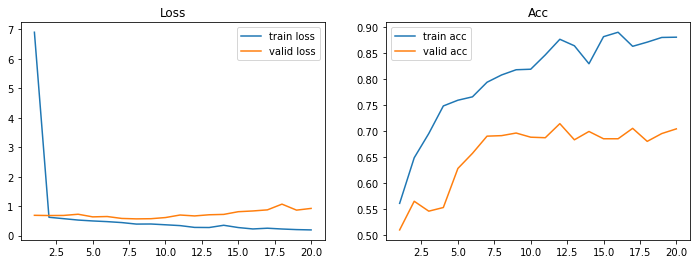

model = build_model()

Compile

# Compile and train

model.compile(optimizer=Adam(0.001),loss=BinaryCrossentropy(),metrics=['accuracy'])
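`BinaryCrossentropy` compares the sigmoid output p with the 0/1 label y as -[y·log p + (1-y)·log(1-p)], averaged over the batch. A model that outputs 0.5 for every image scores ln 2 ≈ 0.693, a useful reference point: the early `val_loss` values in the training logs of this post sit right around it. The NumPy sketch below uses made-up labels and predictions; clipping p away from 0 and 1 mirrors the idea of what Keras does, but the `eps=1e-7` value here is an assumption.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)], with p clipped to avoid log(0)."""
    y_true = np.asarray(y_true, dtype=np.float64)
    p = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))))


labels = [0, 1, 1, 0]  # made-up labels: cats = 0, dogs = 1
chance = binary_crossentropy(labels, [0.5, 0.5, 0.5, 0.5])  # ln 2
right = binary_crossentropy(labels, [0.1, 0.9, 0.8, 0.2])   # confident and correct
wrong = binary_crossentropy(labels, [0.9, 0.1, 0.2, 0.8])   # confident and wrong
print(chance, right, wrong)
```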

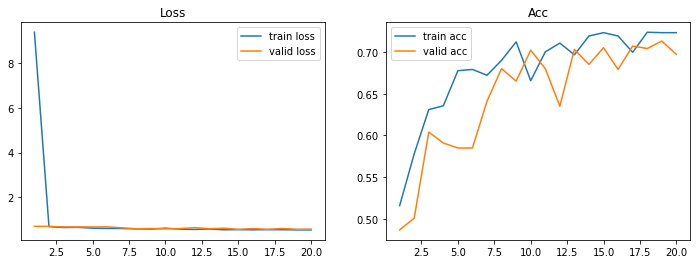

history = model.fit(train_gen,validation_data=valid_gen,epochs=20)

Epoch 1/20

63/63 [==============================] - 15s 205ms/step - loss: 6.9030 - accuracy: 0.5610 - val_loss: 0.6888 - val_accuracy: 0.5100

Epoch 2/20

63/63 [==============================] - 11s 169ms/step - loss: 0.6225 - accuracy: 0.6485 - val_loss: 0.6814 - val_accuracy: 0.5650

Epoch 3/20

63/63 [==============================] - 12s 197ms/step - loss: 0.5753 - accuracy: 0.6950 - val_loss: 0.6844 - val_accuracy: 0.5460

Epoch 4/20

63/63 [==============================] - 11s 168ms/step - loss: 0.5279 - accuracy: 0.7480 - val_loss: 0.7253 - val_accuracy: 0.5530

Epoch 5/20

63/63 [==============================] - 11s 169ms/step - loss: 0.4976 - accuracy: 0.7590 - val_loss: 0.6363 - val_accuracy: 0.6280

Epoch 6/20

63/63 [==============================] - 16s 248ms/step - loss: 0.4744 - accuracy: 0.7655 - val_loss: 0.6475 - val_accuracy: 0.6570

Epoch 7/20

63/63 [==============================] - 13s 206ms/step - loss: 0.4423 - accuracy: 0.7935 - val_loss: 0.5826 - val_accuracy: 0.6900

Epoch 8/20

63/63 [==============================] - 11s 169ms/step - loss: 0.3912 - accuracy: 0.8075 - val_loss: 0.5680 - val_accuracy: 0.6910

Epoch 9/20

63/63 [==============================] - 11s 169ms/step - loss: 0.3940 - accuracy: 0.8175 - val_loss: 0.5736 - val_accuracy: 0.6960

Epoch 10/20

63/63 [==============================] - 11s 167ms/step - loss: 0.3673 - accuracy: 0.8185 - val_loss: 0.6128 - val_accuracy: 0.6880

Epoch 11/20

63/63 [==============================] - 10s 166ms/step - loss: 0.3396 - accuracy: 0.8460 - val_loss: 0.6988 - val_accuracy: 0.6870

Epoch 12/20

63/63 [==============================] - 11s 171ms/step - loss: 0.2784 - accuracy: 0.8760 - val_loss: 0.6680 - val_accuracy: 0.7140

Epoch 13/20

63/63 [==============================] - 11s 176ms/step - loss: 0.2745 - accuracy: 0.8635 - val_loss: 0.7073 - val_accuracy: 0.6830

Epoch 14/20

63/63 [==============================] - 11s 166ms/step - loss: 0.3509 - accuracy: 0.8290 - val_loss: 0.7200 - val_accuracy: 0.6990

Epoch 15/20

63/63 [==============================] - 11s 167ms/step - loss: 0.2734 - accuracy: 0.8810 - val_loss: 0.8114 - val_accuracy: 0.6850

Epoch 16/20

63/63 [==============================] - 10s 166ms/step - loss: 0.2259 - accuracy: 0.8895 - val_loss: 0.8366 - val_accuracy: 0.6850

Epoch 17/20

63/63 [==============================] - 10s 167ms/step - loss: 0.2510 - accuracy: 0.8625 - val_loss: 0.8737 - val_accuracy: 0.7050

Epoch 18/20

63/63 [==============================] - 11s 168ms/step - loss: 0.2240 - accuracy: 0.8705 - val_loss: 1.0683 - val_accuracy: 0.6800

Epoch 19/20

63/63 [==============================] - 11s 167ms/step - loss: 0.2038 - accuracy: 0.8795 - val_loss: 0.8646 - val_accuracy: 0.6950

Epoch 20/20

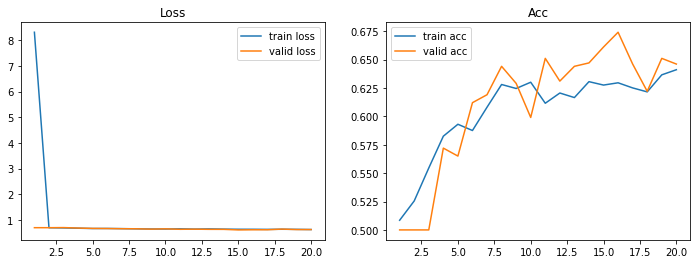

63/63 [==============================] - 10s 165ms/step - loss: 0.1932 - accuracy: 0.8800 - val_loss: 0.9232 - val_accuracy: 0.7040

def plot_loss_acc(history,epoch):

loss,val_loss = history.history['loss'],history.history['val_loss']

acc,val_acc = history.history['accuracy'],history.history['val_accuracy']

fig , axes = plt.subplots(1,2,figsize=(12,4))

axes[0].plot(range(1,epoch+1), loss, label ='train loss')

axes[0].plot(range(1,epoch+1), val_loss, label ='valid loss')

axes[0].legend(loc='best')

axes[0].set_title('Loss')

axes[1].plot(range(1,epoch+1), acc, label ='train acc')

axes[1].plot(range(1,epoch+1), val_acc, label ='valid acc')

axes[1].legend(loc='best')

axes[1].set_title('Acc')

plt.show()

plot_loss_acc(history,20)
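In this run the training loss keeps falling while `val_loss` bottoms out at epoch 8 (0.5680) and then climbs, the usual overfitting pattern. Keras provides `tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=..., restore_best_weights=True)` for this case. The sketch below is not that callback; it is a simplified plain-Python patience rule that replays the `val_loss` values copied from the 20-epoch log above to show where training would have stopped.

```python
def early_stop_epoch(val_losses, patience=3):
    """Simplified patience rule: stop once val_loss has not improved for `patience` epochs.

    Returns (best_epoch, stop_epoch), both 1-based.
    """
    best, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0  # new best: reset the counter
        else:
            wait += 1                                # no improvement this epoch
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses)               # never triggered: ran all epochs


# val_loss per epoch, copied from the from-scratch CNN log above
val_loss = [0.6888, 0.6814, 0.6844, 0.7253, 0.6363, 0.6475, 0.5826, 0.5680, 0.5736, 0.6128,
            0.6988, 0.6680, 0.7073, 0.7200, 0.8114, 0.8366, 0.8737, 1.0683, 0.8646, 0.9232]

print(early_stop_epoch(val_loss))  # (8, 11)
```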

image_gen = ImageDataGenerator(rescale=(1/255.),

horizontal_flip=True,

zoom_range=0.2,

rotation_range=35)

train_dir = '/content/dataset/cats_and_dogs_filtered/train'

valid_dir = '/content/dataset/cats_and_dogs_filtered/validation'

train_gen_aug = image_gen.flow_from_directory(train_dir,

target_size=(224,224),

batch_size=32,

classes=['cats','dogs'],

class_mode='binary',

seed=2020)

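# Note: the augmenting image_gen is reused for the validation set here; validation images are usually only rescaled, not augmented.

valid_gen_aug = image_gen.flow_from_directory(valid_dir,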

target_size=(224,224),

batch_size=32,

classes=['cats','dogs'],

class_mode='binary',

seed=2020)

Found 2000 images belonging to 2 classes.

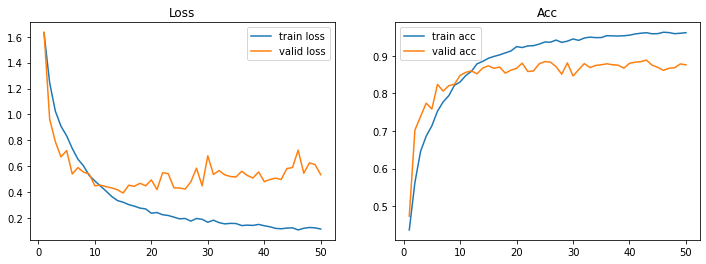

Found 1000 images belonging to 2 classes.

model_aug = build_model()

model_aug.compile(optimizer=Adam(0.001),loss=BinaryCrossentropy(),metrics=['accuracy'])

history_aug = model_aug.fit(train_gen_aug,validation_data=valid_gen_aug,epochs=20)

Epoch 1/20

63/63 [==============================] - 39s 610ms/step - loss: 8.3149 - accuracy: 0.5085 - val_loss: 0.6921 - val_accuracy: 0.5000

Epoch 2/20

63/63 [==============================] - 41s 649ms/step - loss: 0.6912 - accuracy: 0.5255 - val_loss: 0.6918 - val_accuracy: 0.5000

Epoch 3/20

63/63 [==============================] - 38s 604ms/step - loss: 0.6832 - accuracy: 0.5545 - val_loss: 0.6958 - val_accuracy: 0.5000

Epoch 4/20

63/63 [==============================] - 38s 602ms/step - loss: 0.6716 - accuracy: 0.5825 - val_loss: 0.6778 - val_accuracy: 0.5720

Epoch 5/20

63/63 [==============================] - 38s 610ms/step - loss: 0.6598 - accuracy: 0.5930 - val_loss: 0.6642 - val_accuracy: 0.5650

Epoch 6/20

63/63 [==============================] - 36s 581ms/step - loss: 0.6621 - accuracy: 0.5875 - val_loss: 0.6592 - val_accuracy: 0.6120

Epoch 7/20

63/63 [==============================] - 36s 574ms/step - loss: 0.6556 - accuracy: 0.6080 - val_loss: 0.6524 - val_accuracy: 0.6190

Epoch 8/20

63/63 [==============================] - 36s 569ms/step - loss: 0.6481 - accuracy: 0.6280 - val_loss: 0.6490 - val_accuracy: 0.6440

Epoch 9/20

63/63 [==============================] - 36s 569ms/step - loss: 0.6413 - accuracy: 0.6245 - val_loss: 0.6361 - val_accuracy: 0.6290

Epoch 10/20

63/63 [==============================] - 43s 683ms/step - loss: 0.6395 - accuracy: 0.6300 - val_loss: 0.6471 - val_accuracy: 0.5990

Epoch 11/20

63/63 [==============================] - 37s 587ms/step - loss: 0.6478 - accuracy: 0.6115 - val_loss: 0.6298 - val_accuracy: 0.6510

Epoch 12/20

63/63 [==============================] - 36s 573ms/step - loss: 0.6371 - accuracy: 0.6205 - val_loss: 0.6344 - val_accuracy: 0.6310

Epoch 13/20

63/63 [==============================] - 37s 584ms/step - loss: 0.6444 - accuracy: 0.6165 - val_loss: 0.6280 - val_accuracy: 0.6440

Epoch 14/20

63/63 [==============================] - 36s 572ms/step - loss: 0.6363 - accuracy: 0.6305 - val_loss: 0.6273 - val_accuracy: 0.6470

Epoch 15/20

63/63 [==============================] - 37s 590ms/step - loss: 0.6309 - accuracy: 0.6275 - val_loss: 0.6053 - val_accuracy: 0.6610

Epoch 16/20

63/63 [==============================] - 36s 570ms/step - loss: 0.6303 - accuracy: 0.6295 - val_loss: 0.6113 - val_accuracy: 0.6740

Epoch 17/20

63/63 [==============================] - 36s 573ms/step - loss: 0.6264 - accuracy: 0.6250 - val_loss: 0.6116 - val_accuracy: 0.6460

Epoch 18/20

63/63 [==============================] - 36s 571ms/step - loss: 0.6311 - accuracy: 0.6215 - val_loss: 0.6373 - val_accuracy: 0.6220

Epoch 19/20

63/63 [==============================] - 36s 570ms/step - loss: 0.6274 - accuracy: 0.6365 - val_loss: 0.6193 - val_accuracy: 0.6510

Epoch 20/20

63/63 [==============================] - 37s 589ms/step - loss: 0.6206 - accuracy: 0.6410 - val_loss: 0.6190 - val_accuracy: 0.6460

plot_loss_acc(history_aug,20)
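`ImageDataGenerator` applies fresh random transforms to every batch, so the model rarely sees exactly the same image twice; this fits the much slower rise in training accuracy here (0.6410 after 20 epochs) compared with the non-augmented run (0.8800). As a rough NumPy illustration of just one of the options used, `horizontal_flip=True` amounts to mirroring the width axis of a (height, width, channels) image with probability 0.5. `random_hflip` is a hypothetical helper, not the Keras implementation; `zoom_range` and `rotation_range` need interpolation and are left out.

```python
import numpy as np


def random_hflip(image, rng, p=0.5):
    """Mirror an (H, W, C) image left-right with probability p."""
    if rng.random() < p:
        return image[:, ::-1, :]  # reverse the width axis
    return image


# Tiny 2x3 single-channel "image" so the flip is easy to see
img = np.arange(6).reshape(2, 3, 1)
flipped = img[:, ::-1, :]
print(img[..., 0])      # rows [0 1 2] and [3 4 5]
print(flipped[..., 0])  # rows [2 1 0] and [5 4 3]

rng = np.random.default_rng(2020)
outs = [random_hflip(img, rng) for _ in range(1000)]
n_flipped = sum(np.array_equal(o, flipped) for o in outs)
print(n_flipped)        # roughly half of 1000
```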

# Transfer learning with ResNet: only the front (feature-extraction) part of the model is swapped out

from keras.applications import ResNet50V2

pre_trained_base = ResNet50V2(include_top=False,

                              weights='imagenet',

                              input_shape=[224, 224, 3])

pre_trained_base.trainable = False

def build_transfer_model():

    model = tf.keras.Sequential([

        # Pre-trained base goes first (frozen feature extractor)

        pre_trained_base,

        # Classifier head, kept the same as in the earlier CNN

        Flatten(),

        Dense(256,activation='relu'),

        Dropout(0.3),

        Dense(1,activation='sigmoid'),

    ])

    return model

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/resnet/resnet50v2_weights_tf_dim_ordering_tf_kernels_notop.h5

94668760/94668760 [==============================] - 0s 0us/step

# Note: as written, this cell calls build_model(), so the log below comes from the from-scratch CNN, not the ResNet50V2 model; call build_transfer_model() to actually train the transfer-learning model.

tc_model = build_model()

tc_model.compile(optimizer=Adam(0.001),loss=BinaryCrossentropy(),metrics=['accuracy'])

history_t = tc_model.fit(train_gen_aug,validation_data=valid_gen_aug,epochs=20)

Epoch 1/20

63/63 [==============================] - 37s 580ms/step - loss: 9.3990 - accuracy: 0.5160 - val_loss: 0.6958 - val_accuracy: 0.4870

Epoch 2/20

63/63 [==============================] - 37s 589ms/step - loss: 0.6830 - accuracy: 0.5780 - val_loss: 0.6936 - val_accuracy: 0.5010

Epoch 3/20

63/63 [==============================] - 37s 583ms/step - loss: 0.6421 - accuracy: 0.6310 - val_loss: 0.6695 - val_accuracy: 0.6040

Epoch 4/20

63/63 [==============================] - 36s 569ms/step - loss: 0.6500 - accuracy: 0.6355 - val_loss: 0.6663 - val_accuracy: 0.5910

Epoch 5/20

63/63 [==============================] - 36s 569ms/step - loss: 0.6119 - accuracy: 0.6775 - val_loss: 0.6691 - val_accuracy: 0.5850

Epoch 6/20

63/63 [==============================] - 36s 568ms/step - loss: 0.6003 - accuracy: 0.6790 - val_loss: 0.6723 - val_accuracy: 0.5850

Epoch 7/20

63/63 [==============================] - 41s 657ms/step - loss: 0.5973 - accuracy: 0.6720 - val_loss: 0.6268 - val_accuracy: 0.6410

Epoch 8/20

63/63 [==============================] - 38s 603ms/step - loss: 0.5809 - accuracy: 0.6900 - val_loss: 0.5878 - val_accuracy: 0.6800

Epoch 9/20

63/63 [==============================] - 40s 632ms/step - loss: 0.5715 - accuracy: 0.7120 - val_loss: 0.5977 - val_accuracy: 0.6650

Epoch 10/20

63/63 [==============================] - 36s 568ms/step - loss: 0.6122 - accuracy: 0.6655 - val_loss: 0.5805 - val_accuracy: 0.7020

Epoch 11/20

63/63 [==============================] - 36s 571ms/step - loss: 0.5678 - accuracy: 0.7000 - val_loss: 0.5951 - val_accuracy: 0.6800

Epoch 12/20

63/63 [==============================] - 38s 599ms/step - loss: 0.5558 - accuracy: 0.7105 - val_loss: 0.6383 - val_accuracy: 0.6350

Epoch 13/20

63/63 [==============================] - 37s 591ms/step - loss: 0.5745 - accuracy: 0.6965 - val_loss: 0.5878 - val_accuracy: 0.7030

Epoch 14/20

63/63 [==============================] - 36s 570ms/step - loss: 0.5406 - accuracy: 0.7190 - val_loss: 0.6114 - val_accuracy: 0.6850

Epoch 15/20

63/63 [==============================] - 36s 569ms/step - loss: 0.5475 - accuracy: 0.7230 - val_loss: 0.5610 - val_accuracy: 0.7050

Epoch 16/20

63/63 [==============================] - 36s 570ms/step - loss: 0.5384 - accuracy: 0.7190 - val_loss: 0.5964 - val_accuracy: 0.6790

Epoch 17/20

63/63 [==============================] - 36s 571ms/step - loss: 0.5469 - accuracy: 0.6995 - val_loss: 0.5611 - val_accuracy: 0.7070

Epoch 18/20

63/63 [==============================] - 37s 596ms/step - loss: 0.5421 - accuracy: 0.7235 - val_loss: 0.5977 - val_accuracy: 0.7040

Epoch 19/20

63/63 [==============================] - 36s 568ms/step - loss: 0.5372 - accuracy: 0.7230 - val_loss: 0.5649 - val_accuracy: 0.7130

Epoch 20/20

63/63 [==============================] - 36s 571ms/step - loss: 0.5345 - accuracy: 0.7230 - val_loss: 0.5665 - val_accuracy: 0.6970

plot_loss_acc(history_t,20)
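One detail worth checking when training the model built on the ResNet50V2 base: the generators here feed pixels rescaled to 0-1, while `keras.applications.resnet_v2.preprocess_input` (the preprocessing paired with these ImageNet weights) scales pixels to -1..1. The NumPy sketch below contrasts the two scalings; in Keras one would typically pass `preprocessing_function=preprocess_input` to `ImageDataGenerator` instead of `rescale`. This post did not measure whether the difference changes accuracy.

```python
import numpy as np


def rescale_0_1(x):
    """What ImageDataGenerator(rescale=1/255.) does: 0..255 -> 0..1."""
    return np.asarray(x, dtype=np.float32) / 255.0


def resnet_v2_style_scale(x):
    """Scale 0..255 pixels to -1..1 (x / 127.5 - 1), as ResNet50V2 preprocessing does."""
    return np.asarray(x, dtype=np.float32) / 127.5 - 1.0


pixels = np.array([0, 127.5, 255])
print(rescale_0_1(pixels))
print(resnet_v2_style_scale(pixels))
```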