This time we practice judging whether a photo is genuine, that is, classifying face images as Real or Fake.

The model for this task is Xception. As with the earlier image exercises, the workflow is much the same.

1. Xception

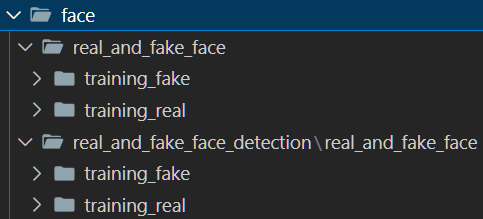

real_and_fake_face is used as the training set and real_and_fake_face_detection as the test set. Each contains one subfolder of real images and one of fake images.
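One folder serves as training data and the other as test data, so it is worth checking that they do not share images. The sketch below uses two hypothetical helpers, file_hashes and count_shared_files, which are not part of the original workflow. They hash every file under two directory trees with SHA-256 and count the file contents that appear in both.

```python
import hashlib
from pathlib import Path


def file_hashes(root):
    """Return the set of SHA-256 digests of all files under root (recursively)."""
    digests = set()
    for path in Path(root).rglob("*"):
        if path.is_file():
            digests.add(hashlib.sha256(path.read_bytes()).hexdigest())
    return digests


def count_shared_files(dir_a, dir_b):
    """Count distinct file contents that are present in both directory trees."""
    return len(file_hashes(dir_a) & file_hashes(dir_b))
```

Consider calling count_shared_files('data/face/real_and_fake_face', 'data/face/real_and_fake_face_detection/real_and_fake_face') with the paths used below. A result close to the total image count would mean the "test" folder is a copy of the training folder.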

1.1. Data preprocessing

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Train Data
# Rescale pixels to [0, 1] and apply light augmentation (horizontal flips, small shifts)
train_data_gen=ImageDataGenerator(rescale=1/255.0,
                                  horizontal_flip=True, width_shift_range=0.1, height_shift_range=0.1,
                                  fill_mode='nearest')

train_generator=train_data_gen.flow_from_directory(directory='data/face/real_and_fake_face',
                                                   target_size=(300,300), batch_size=32,
                                                   class_mode='binary') # Real/Fake

train_generator.class_indices
```

```
Found 2041 images belonging to 2 classes.
{'training_fake': 0, 'training_real': 1}
```
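flow_from_directory derives the labels from the subfolder names. If no explicit classes argument is given, Keras orders the class subdirectories alphanumerically and numbers them from 0. That is why training_fake becomes 0 and training_real becomes 1. The number of batches per epoch is the image count divided by the batch size, rounded up, which gives the 64 steps seen in the training log. The plain-Python sketch below mimics both calculations. infer_class_indices and batches_per_epoch are hypothetical helpers for illustration, not Keras functions.

```python
import math


def infer_class_indices(subdirs):
    """Map class subfolder names to integer labels in sorted (alphanumeric) order."""
    return {name: index for index, name in enumerate(sorted(subdirs))}


def batches_per_epoch(num_images, batch_size):
    """Batches needed to see every image once (the last batch may be partial)."""
    return math.ceil(num_images / batch_size)


print(infer_class_indices(["training_real", "training_fake"]))
# {'training_fake': 0, 'training_real': 1}
print(batches_per_epoch(2041, 32))  # 64; the last batch holds 2041 - 63 * 32 = 25 images
```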

```python
# Test Data
test_data_gen=ImageDataGenerator(rescale=1/255.0) # normalization only, no augmentation

test_generator=test_data_gen.flow_from_directory(directory='data/face/real_and_fake_face_detection/real_and_fake_face',
                                                 target_size=(300, 300), batch_size=32,
                                                 class_mode='binary')

test_generator.class_indices
```

```
Found 2041 images belonging to 2 classes.
```

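```
{'training_fake': 0, 'training_real': 1}
```

The test generator finds the same number of images (2041) under the same class folder names (training_fake, training_real) as the training generator. This suggests the "test" folder holds the same images as the training folder. If so, the validation and test scores below do not measure performance on unseen data.

1.2. Loading the Xception network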

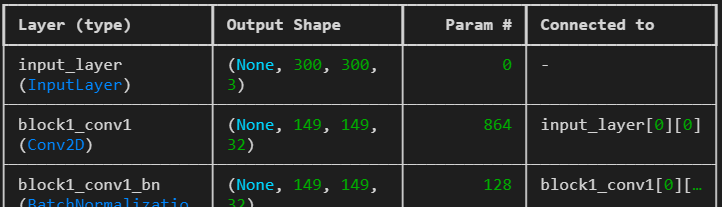

transfer_model=Xception(weights='imagenet',

include_top=False,

input_shape=(300,300,3))

transfer_model.trainable=False

transfer_model.summary()Model: "xception"

Total params: 20,861,480 (79.58 MB)

Trainable params: 0 (0.00 B)

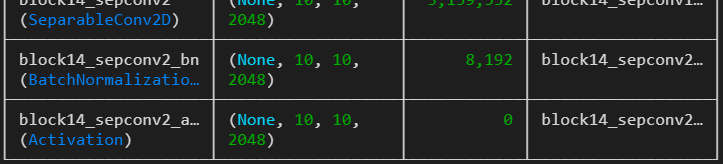

Non-trainable params: 20,861,480 (79.58 MB)model=keras.Sequential()

model.add(transfer_model)

model.add(keras.layers.Flatten())

model.add(keras.layers.Dense(64, activation='relu'))

model.add(keras.layers.Dense(1, activation='sigmoid'))

model.summary()Model: "sequential"┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓ ┃ Layer (type) ┃ Output Shape ┃ Param # ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩ │ xception (Functional) │ (None, 10, 10, 2048) │ 20,861,480 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ flatten (Flatten) │ (None, 204800) │ 0 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dense (Dense) │ (None, 64) │ 13,107,264 │ ├─────────────────────────────────┼────────────────────────┼───────────────┤ │ dense_1 (Dense) │ (None, 1) │ 65 │ └─────────────────────────────────┴────────────────────────┴───────────────┘

Total params: 33,968,809 (129.58 MB)

Trainable params: 13,107,329 (50.00 MB)

Non-trainable params: 20,861,480 (79.58 MB)
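The summary numbers can be checked by hand. For a 300x300 input, Xception's first two convolutions are unpadded: 3x3 with stride 2, then 3x3 with stride 1. Four later blocks (2, 3, 4 and 13) each halve the resolution with 'same'-padded stride-2 downsampling, which leaves a 10x10x2048 feature map. Flatten turns this into 204,800 values, so the 64-unit Dense layer needs 204,800 x 64 weights plus 64 biases, or 13,107,264 parameters. That is almost all of the trainable part of the model. The sketch below redoes this arithmetic. For comparison, it also counts the head parameters if GlobalAveragePooling2D replaced Flatten. That layer averages each of the 2048 channels to a single value; it is a common alternative, not what this post uses.

```python
import math


def xception_feature_size(n):
    """Spatial size of Xception's last feature map for an n x n input."""
    n = (n - 3) // 2 + 1   # block1_conv1: 3x3, stride 2, 'valid'
    n = n - 3 + 1          # block1_conv2: 3x3, stride 1, 'valid'
    for _ in range(4):     # blocks 2, 3, 4 and 13: stride-2 'same' downsampling
        n = math.ceil(n / 2)
    return n


def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


side = xception_feature_size(300)      # 10
channels = 2048
flatten_head = dense_params(side * side * channels, 64) + dense_params(64, 1)
gap_head = dense_params(channels, 64) + dense_params(64, 1)
print(side, flatten_head, gap_head)    # 10 13107329 131201
```

The Flatten head's total matches the 13,107,329 trainable parameters in the summary. Global average pooling would shrink the head by roughly a factor of 100.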

1.3. Training and evaluating the model

Training is slow again, so instead of 50 epochs we run only 2.
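To put the running time in numbers, the training log reports 301 s and 323 s for the two epochs, about 5 s per step over 64 steps. The back-of-the-envelope sketch below assumes every epoch takes about as long as these two. Under that assumption it estimates what the commented-out epochs=50 run would cost if early stopping never fired. estimate_training_hours is a hypothetical helper.

```python
def estimate_training_hours(epoch_seconds, planned_epochs):
    """Estimate total training time in hours from measured per-epoch durations (seconds)."""
    mean_epoch = sum(epoch_seconds) / len(epoch_seconds)
    return mean_epoch * planned_epochs / 3600


# Epoch durations taken from the training log of this post
measured = [301, 323]
hours = estimate_training_hours(measured, 50)
print(f"~{hours:.1f} h for 50 epochs")  # ~4.3 h
```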

model.compile(optimizer=Adam(learning_rate=0.002), loss='binary_crossentropy', metrics=['accuracy'])

early_stopping_callback = EarlyStopping(monitor='val_loss', patience=5)

history=model.fit(train_generator,

#epochs=50,

epochs=2,

validation_data=test_generator,

validation_steps=24,

callbacks=[early_stopping_callback])Epoch 1/2

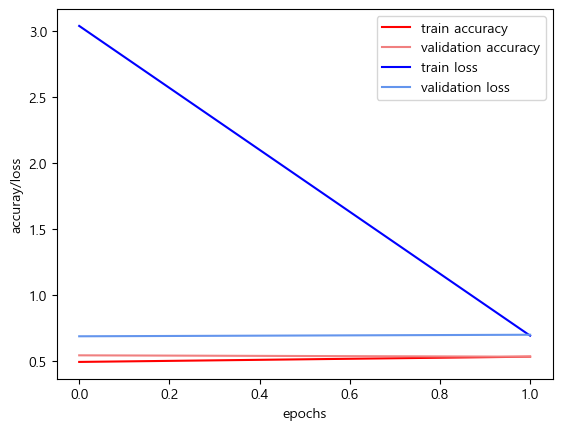

[1m64/64[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m301s[0m 5s/step - accuracy: 0.5062 - loss: 7.4390 - val_accuracy: 0.5443 - val_loss: 0.6884

Epoch 2/2

[1m64/64[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m323s[0m 5s/step - accuracy: 0.5331 - loss: 0.6898 - val_accuracy: 0.5339 - val_loss: 0.7000model.evaluate(test_generator)[1m64/64[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m162s[0m 3s/step - accuracy: 0.5455 - loss: 0.6883

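```
[0.6927319765090942, 0.5374816060066223]
```

model.evaluate returns [loss, accuracy], here a test loss of about 0.693 and an accuracy of about 0.537. A loss this close to ln 2 ≈ 0.693 is consistent with predictions hovering around 0.5, because binary cross-entropy equals ln 2 when every prediction is exactly 0.5. In other words, the model barely separates the two classes.

```python
import matplotlib.pyplot as plt

# Training and validation curves for accuracy and loss
plt.plot(history.history['accuracy'], label='train accuracy', color='red')
plt.plot(history.history['val_accuracy'], label='validation accuracy', color='lightcoral')
plt.plot(history.history['loss'], label='train loss', color='blue')
plt.plot(history.history['val_loss'], label='validation loss', color='cornflowerblue')
plt.legend()
plt.xlabel('epochs')
plt.ylabel('accuracy/loss')
plt.show()
```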
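EarlyStopping(monitor='val_loss', patience=5) stops training once val_loss has gone 5 epochs in a row without improving on its best value. With epochs=2 it cannot fire, so it only matters for the commented-out 50-epoch setting. The simplified sketch below reproduces that patience rule in plain Python. It ignores the callback's other options, such as min_delta and restore_best_weights, and epoch_to_stop is a hypothetical name.

```python
def epoch_to_stop(val_losses, patience):
    """Return the 1-based epoch after which training would stop, or None.

    Simplified patience rule: stop once `patience` consecutive epochs
    fail to improve on the lowest validation loss seen so far.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# val_loss values from the 2-epoch run: too few epochs to trigger patience=5
print(epoch_to_stop([0.6884, 0.7000], patience=5))  # None
# A made-up longer history: best at epoch 2, then 5 epochs without improvement
print(epoch_to_stop([0.70, 0.69, 0.70, 0.71, 0.70, 0.72, 0.69], patience=5))  # 7
```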

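```python
import numpy as np

# Collect batches from the test generator into arrays.
# Note: test_generator.n // 5 = 408 batches, far more than the 64 batches in one
# pass over the 2041 test images; the generator wraps around, so images repeat.
# len(test_generator) would visit each image exactly once.
steps=test_generator.n // 5

images, labels=[], []
for i in range(steps):
    image, label=next(test_generator)
    images.extend(image)
    labels.extend(label)

images=np.asarray(images)
labels=np.asarray(labels).astype(int)
```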
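The number of collected images follows from how the generator cycles. The plain-Python sketch below models a looping batch iterator with 2041 images and batch size 32. It assumes collection starts at the beginning of a pass and that each pass ends with one smaller batch. It counts how many images a given number of next() calls yields. images_collected is a hypothetical helper. The result explains why 408 calls give 13,014 images, the total support in the classification report below.

```python
def images_collected(num_images, batch_size, num_batches):
    """Total images returned by num_batches next() calls on a looping batch iterator.

    Assumes iteration starts at the beginning of a pass; each pass yields
    full batches plus one smaller final batch when num_images % batch_size != 0.
    """
    full, remainder = divmod(num_images, batch_size)
    batch_sizes = [batch_size] * full + ([remainder] if remainder else [])
    per_pass = len(batch_sizes)
    passes, extra = divmod(num_batches, per_pass)
    return passes * num_images + sum(batch_sizes[:extra])


steps = 2041 // 5                                 # 408, as in the code above
print(steps, images_collected(2041, 32, steps))   # 408 13014
print(images_collected(2041, 32, 64))             # 2041: exactly one pass
```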

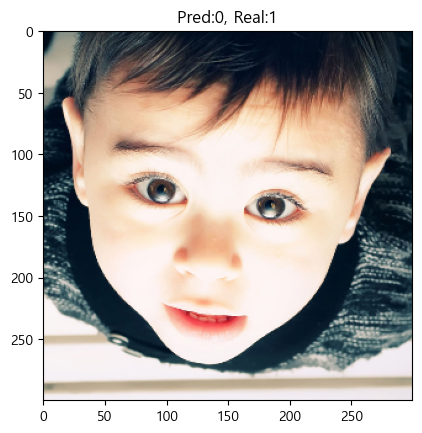

```python
labels[0]
```

```
np.int64(1)
```

1.4. Predicting results

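```python
pred_prob=model.predict(images)
pred_prob[0]
```

```
407/407 ━━━━━━━━━━━━━━━━━━━━ 1247s 3s/step
array([0.50595057], dtype=float32)
```

```python
# Caution: pred_prob has shape (N, 1), so argmax over axis=1 always returns 0.
# The outputs below were produced by this line as written; for a single sigmoid
# output, (pred_prob[:, 0] > 0.5).astype(int) gives the 0/1 class labels.
pred=np.argmax(pred_prob, axis=1)
pred[0]
```

```
np.int64(0)
```

```python
plt.imshow(images[0])
plt.title(f"Pred:{pred[0]}, Real:{labels[0]}")
plt.show()
```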

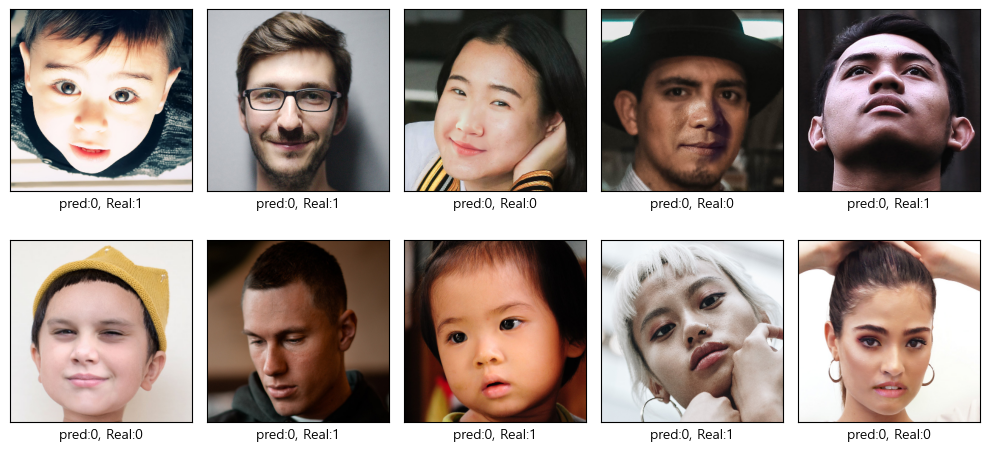
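There is a catch in the prediction step. The model ends in a single sigmoid unit, so model.predict returns an array of shape (N, 1). np.argmax(..., axis=1) then takes the index of the largest entry in a one-element row, which is always 0. The NumPy sketch below contrasts that with thresholding the probability at 0.5, the usual decision rule for a sigmoid output. Only the first probability (0.50595057) comes from pred_prob[0] above; the other values are made up for illustration.

```python
import numpy as np

# First value is pred_prob[0] from above; the others are made up for illustration
pred_prob = np.array([[0.50595057], [0.12], [0.91], [0.49]], dtype=np.float32)

# argmax over a single column: always index 0, whatever the probability
argmax_pred = np.argmax(pred_prob, axis=1)

# Threshold the probability of class 1 (training_real) at 0.5 instead
threshold_pred = (pred_prob[:, 0] > 0.5).astype(int)

print(argmax_pred)     # [0 0 0 0]
print(threshold_pred)  # [1 0 1 0]
```

With thresholding, the first test image would be labeled 1, which matches labels[0] = 1. Even so, 0.506 is barely above the cut-off.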

```python
# Show the first 10 images with their predicted and true labels
fig, axes=plt.subplots(2,5,figsize=(10,5))
for ax, image, pre, label in zip(axes.flat, images[:10], pred[:10], labels[:10]):
    ax.imshow(image)
    ax.set_xlabel(f"pred:{pre}, Real:{label}")
    ax.set_xticks([])
    ax.set_yticks([])
plt.tight_layout()
plt.show()
```

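```python
from sklearn import metrics

print(metrics.classification_report(labels, pred))
```

```
              precision    recall  f1-score   support

           0       0.47      1.00      0.64      6112
           1       0.00      0.00      0.00      6902

    accuracy                           0.47     13014
   macro avg       0.23      0.50      0.32     13014
weighted avg       0.22      0.47      0.30     13014
```

Every sample is predicted as class 0 (training_fake): recall is 1.00 for class 0 and 0.00 for class 1, so accuracy simply equals the share of class 0 among the collected samples (6112 / 13014 ≈ 0.47). This follows from calling argmax on a single-column output, not from what the model learned. The support of 13,014 reflects the repeated batches collected earlier, not 13,014 distinct test images.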
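The report's numbers follow directly from predicting class 0 for every sample. The sketch below recomputes precision, recall and F1 under that assumption, using the support counts in the report (6112 samples of class 0 and 6902 of class 1). per_class_scores is a hypothetical helper, and the values are rounded to two decimals as classification_report prints them.

```python
def per_class_scores(tp, fp, fn):
    """Precision, recall and F1 for one class; undefined ratios are reported as 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


n0, n1 = 6112, 6902            # support of class 0 and class 1 in the report
total = n0 + n1                # 13014

# Every sample predicted as 0: class 0 gets all n0 right plus n1 false positives,
# class 1 is never predicted, so all n1 of its samples are false negatives.
p0, r0, f1_0 = per_class_scores(tp=n0, fp=n1, fn=0)
p1, r1, f1_1 = per_class_scores(tp=0, fp=0, fn=n1)

accuracy = n0 / total
macro_f1 = (f1_0 + f1_1) / 2
weighted_f1 = (f1_0 * n0 + f1_1 * n1) / total

print(round(p0, 2), round(r0, 2), round(f1_0, 2))                     # 0.47 1.0 0.64
print(round(accuracy, 2), round(macro_f1, 2), round(weighted_f1, 2))  # 0.47 0.32 0.3
```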