PyTorch Tutorial

1. 04-2 Loading Data

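Lab 4-2: Load Data. Author: this post references the slides by Seungjae Lee (이승재). Slicing a 1D array: given [0, 1, 2, 3, 4], take from index 2 up to, but not including, index 4 (start inclusive, end exclusive): [2, 3]. Take everything from index 2 onward: [2, 3, 4]. Take everything before index 2...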
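The slicing rule above (start inclusive, end exclusive) can be checked with a plain Python list; NumPy arrays and PyTorch tensors follow the same convention for basic slicing. The variable names below are illustrative only.

```python
# t[start:end] includes index `start` and stops just before index `end`
t = [0, 1, 2, 3, 4]

from_2_to_4 = t[2:4]  # indices 2 and 3 -> [2, 3]
from_2_on = t[2:]     # omitted end means "to the end" -> [2, 3, 4]
before_2 = t[:2]      # omitted start means "from 0" -> [0, 1]

print(from_2_to_4, from_2_on, before_2)
```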

2. 05 Logistic Classification

Lab 5: Logistic Classification. Reminder: Logistic Regression. Hypothesis: $$H(X) = \frac{1}{1+e^{-W^T X}}$$ Cost: $$cost(W) = -\frac{1}{m} \sum \left[ y \log\left(H(x)\right) + (1-y) \log\left(1-H(x)\right) \right]$$ ...
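As a plain-Python sketch (not PyTorch; the function names `hypothesis` and `cost` and the toy data are illustrative assumptions), the hypothesis and cost above can be computed directly. Each `x` is a list of features and `w` a weight list of the same length, with no separate bias term, matching the formula $H(X) = 1/(1+e^{-W^T X})$.

```python
import math

def hypothesis(w, x):
    # H(x) = 1 / (1 + exp(-W^T x)); w and x are equal-length lists (no bias term)
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))

def cost(w, xs, ys):
    # cost(W) = -(1/m) * sum[ y*log(H(x)) + (1-y)*log(1-H(x)) ]
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        h = hypothesis(w, x)
        total += y * math.log(h) + (1 - y) * math.log(1 - h)
    return -total / m

# Hypothetical toy data: 4 samples, 2 features, binary labels
xs = [[1.0, 2.0], [2.0, 3.0], [3.0, 1.0], [4.0, 3.0]]
ys = [0, 0, 1, 1]

print(cost([0.0, 0.0], xs, ys))   # log(2) ≈ 0.6931
print(cost([1.0, -1.0], xs, ys))  # lower: these weights separate the toy data better
```

With all-zero weights every prediction is 0.5, so the cost is log 2 regardless of the labels. In PyTorch, `torch.sigmoid` followed by `F.binary_cross_entropy` computes the same averaged quantity.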

3. 06-1 Softmax Classification

Lab 6-1: Softmax Classification. Contents: Softmax; Cross Entropy; Low-level implementation; High-level implementation; Training Example. Discrete Probability Distribution. Discrete Probability Distribution co...
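A minimal plain-Python sketch of the two building blocks listed above, assuming logits `z` and a one-hot target `y` as plain lists (names are illustrative, not the lab's code): softmax turns logits into a discrete probability distribution $p_i = e^{z_i} / \sum_j e^{z_j}$, and cross entropy scores it as $L = -\sum_i y_i \log p_i$.

```python
import math

def softmax(z):
    # p_i = exp(z_i) / sum_j exp(z_j); subtracting max(z) avoids overflow without changing p
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, y):
    # L = -sum_i y_i * log(p_i); terms with y_i == 0 contribute nothing, so they are skipped
    return -sum(yi * math.log(pi) for yi, pi in zip(y, probs) if yi > 0)

p = softmax([1.0, 2.0, 3.0])
loss = cross_entropy(p, [0.0, 0.0, 1.0])  # true class is index 2
print(p, loss)
```

With a one-hot target the sum collapses to $-\log p_{\text{true}}$, so the loss shrinks as the true class receives more probability.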

4. 06-2 Fancy Softmax Classification

Lab 6-2: Fancy Softmax Classification. Imports. Cross-entropy loss with torch.nn.functional: PyTorch has the F.log_softmax() function. tensor([[1., 0., 0., 0., 0.], [0., 0., 1., 0., 0.],...
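In PyTorch, `F.cross_entropy` combines `F.log_softmax` and `F.nll_loss`. The plain-Python sketch below (helper names are hypothetical, chosen to mirror those functions) shows why: averaging $-\sum_k y_k \log p_k$ over one-hot rows, like the tensor printed above, gives the same number as picking $-\log p_{\text{target}}$ out of the log-softmax output.

```python
import math

def log_softmax(z):
    # log p_i = z_i - log(sum_j exp(z_j)), computed with the max-shift (log-sum-exp) trick
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]

def one_hot(index, num_classes):
    # e.g. one_hot(2, 5) -> [0., 0., 1., 0., 0.]
    return [1.0 if i == index else 0.0 for i in range(num_classes)]

def nll_loss(log_probs_batch, targets):
    # mean of -log p[target]: index directly into each row of log-probabilities
    return -sum(lp[t] for lp, t in zip(log_probs_batch, targets)) / len(targets)

def cross_entropy_onehot(log_probs_batch, targets, num_classes):
    # mean of -sum_k y_k * log p_k with explicit one-hot y
    total = 0.0
    for lp, t in zip(log_probs_batch, targets):
        y = one_hot(t, num_classes)
        total += sum(yk * lpk for yk, lpk in zip(y, lp))
    return -total / len(targets)

# Hypothetical batch of 2 samples with 5 classes
z = [[1.0, 2.0, 3.0, 0.5, 0.0], [0.0, 1.0, 0.0, 2.0, 1.0]]
targets = [0, 2]
lp = [log_softmax(row) for row in z]
print(nll_loss(lp, targets), cross_entropy_onehot(lp, targets, 5))
```

The indexing form avoids building the one-hot matrix at all, which is the convenience the high-level functions provide.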

5. 07-1 Tips

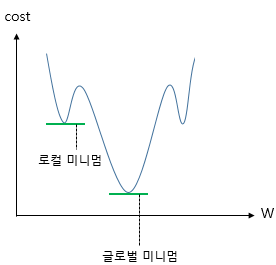

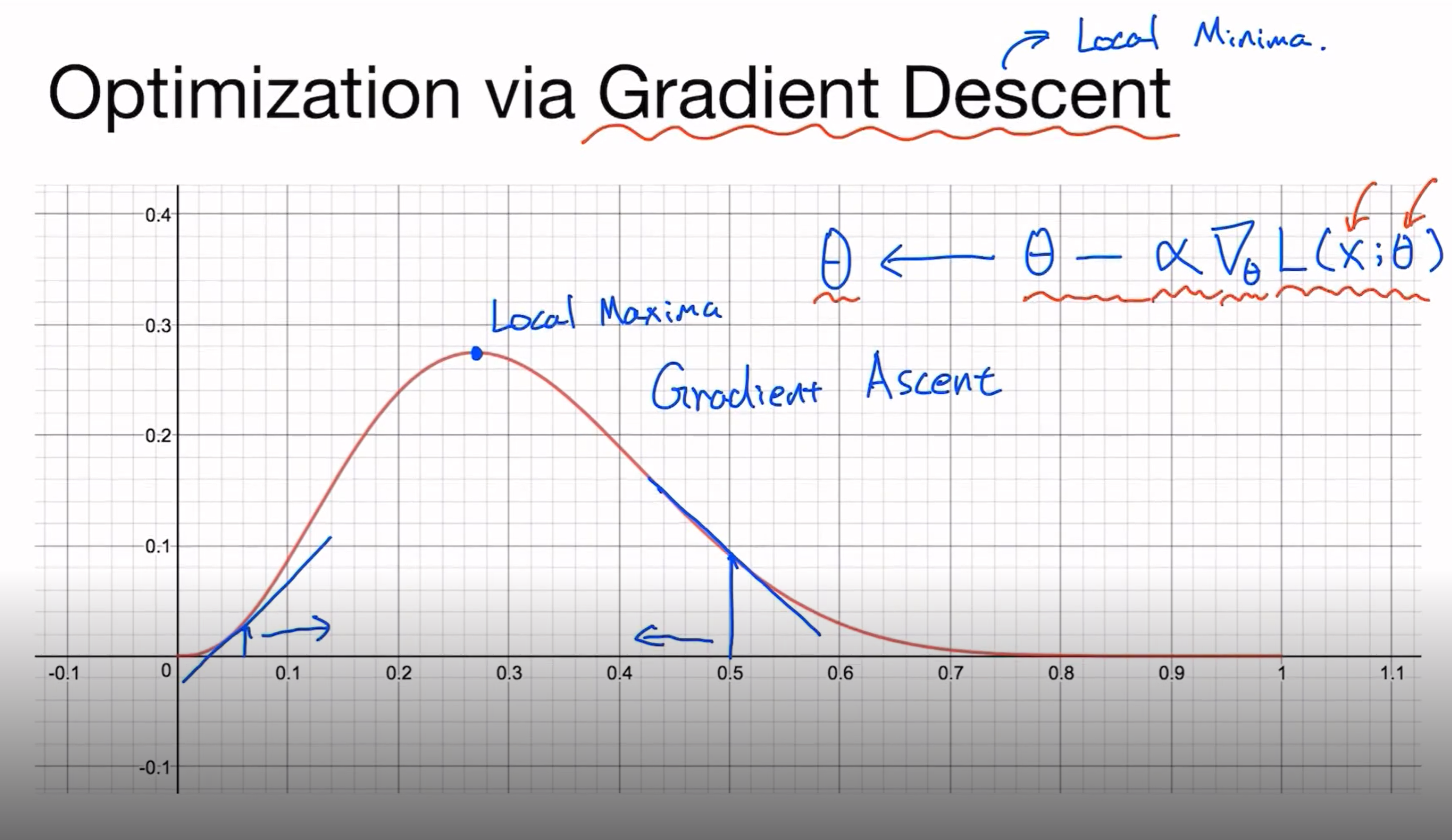

Maximum Likelihood Estimation, Gradient Descent, Training dataset, Overfitting, Regularization
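To make two of the listed tips concrete, gradient descent and (L2) regularization, here is a plain-Python sketch that fits $y \approx wx + b$ by gradient descent on mean squared error plus a penalty $\lambda w^2$. The function name, toy data, and hyperparameters are illustrative assumptions, not taken from the lab.

```python
def fit_linear_gd(xs, ys, lr=0.01, l2=0.0, steps=5000):
    # Minimize (1/m) * sum (w*x + b - y)^2 + l2 * w^2 by plain gradient descent.
    # l2 > 0 adds an L2 (weight-decay) penalty on w; the bias b is not penalized.
    w, b = 0.0, 0.0
    m = len(xs)
    for _ in range(steps):
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / m * sum(e * x for e, x in zip(errs, xs)) + 2.0 * l2 * w
        grad_b = 2.0 / m * sum(errs)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]  # toy data on the line y = 2x
w_plain, b_plain = fit_linear_gd(xs, ys)
w_reg, b_reg = fit_linear_gd(xs, ys, l2=1.0)
print(w_plain, b_plain, w_reg, b_reg)
```

Without the penalty the fit recovers w ≈ 2 and b ≈ 0; with `l2=1.0` the weight shrinks to about 10/9 for this data, which is how regularization discourages large weights and overfitting. PyTorch optimizers such as `torch.optim.SGD` expose a similar L2 penalty through their `weight_decay` argument.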

6. 07-2 mnist_introduction

MNIST

7. 08-1 Perceptron

Contents: Perceptron; AND, OR; XOR; Code: XOR

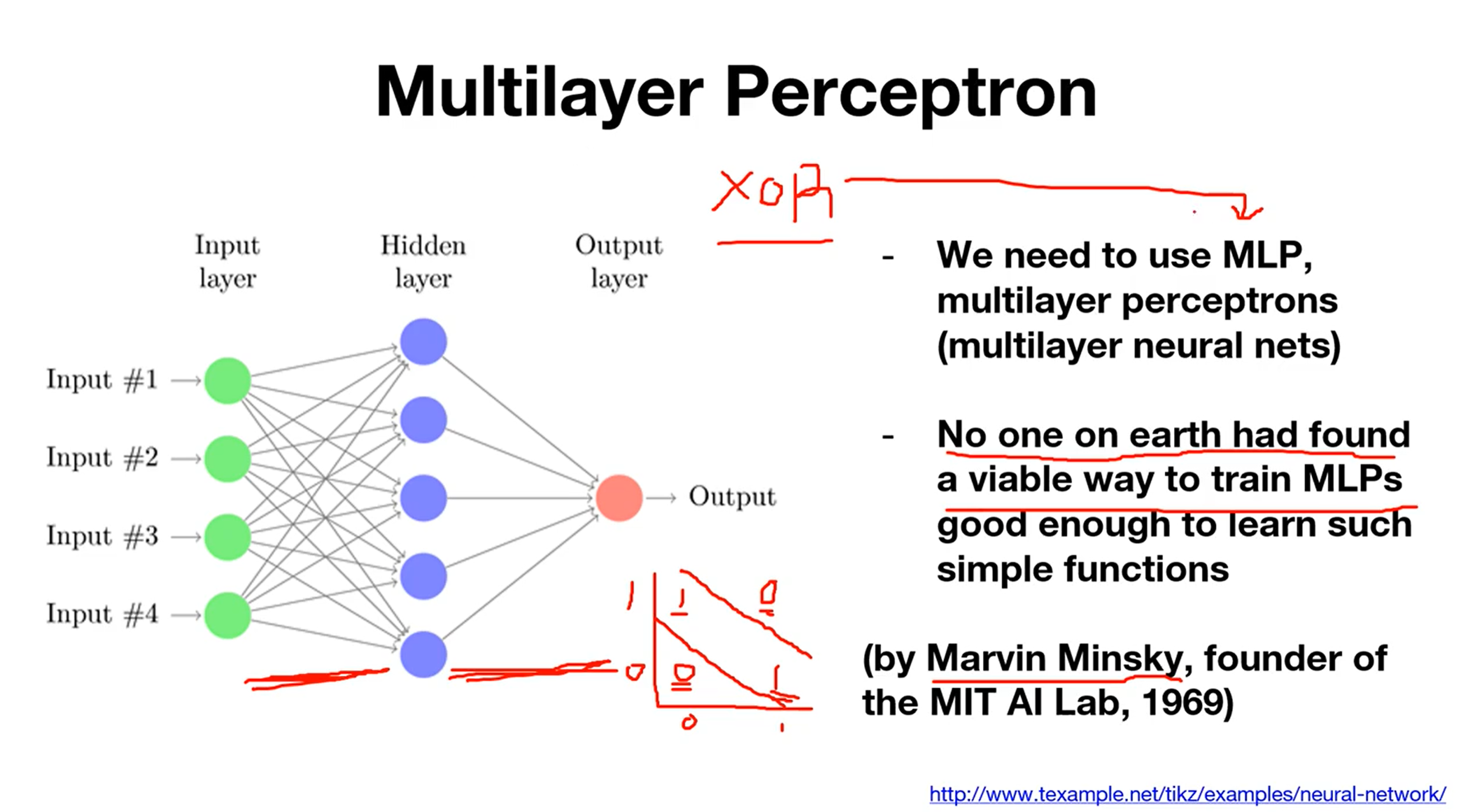
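The perceptron topics above can be sketched in plain Python with a step activation and the classic perceptron learning rule $w \leftarrow w + \eta (y - \hat{y}) x$. Function names, learning rate, and epoch count are illustrative assumptions. The rule finds a separating line for AND and OR, but no single line separates the XOR points.

```python
def step(z):
    # Heaviside step activation: output 1 only if the weighted sum is positive
    return 1 if z > 0 else 0

def predict(w, b, x):
    return step(w[0] * x[0] + w[1] * x[1] + b)

def train_perceptron(data, epochs=20, lr=1.0):
    # Perceptron learning rule: w <- w + lr * (y - y_hat) * x, b <- b + lr * (y - y_hat)
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = y - predict(w, b, x)
            w[0] += lr * err * x[0]
            w[1] += lr * err * x[1]
            b += lr * err
    return w, b

def accuracy(w, b, data):
    return sum(predict(w, b, x) == y for x, y in data) / len(data)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("OR", OR), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, accuracy(w, b, data))
```

AND and OR reach accuracy 1.0 here. For XOR, no choice of `w` and `b` classifies all four points, so its accuracy is necessarily below 1.0 no matter how long it trains.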

8. 08-2 Multi-Layer Perceptron

Multilayer Perceptron: later, with the arrival of the backpropagation algorithm, training an MLP became possible! Reference video: 모두를 위한 딥러닝 시즌1 (Deep Learning for Everyone, Season 1), Kim Sung
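A minimal plain-Python sketch of what backpropagation enables: a 2-input, 4-hidden-unit, 1-output sigmoid network trained on XOR with full-batch gradient descent on binary cross-entropy. The architecture, hidden size, learning rate, seed, and all function names are illustrative assumptions; in PyTorch, `loss.backward()` computes these same gradients automatically.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def init_params(hidden=4, seed=0):
    # Small random weights break the symmetry between hidden units
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y_hat

def loss(p, data):
    # Mean binary cross-entropy over the batch
    total = 0.0
    for x, y in data:
        _, y_hat = forward(p, x)
        total += -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))
    return total / len(data)

def backprop(p, data):
    n, hidden = len(data), len(p["b1"])
    g = {"W1": [[0.0, 0.0] for _ in range(hidden)], "b1": [0.0] * hidden,
         "W2": [0.0] * hidden, "b2": 0.0}
    for x, y in data:
        h, y_hat = forward(p, x)
        # For sigmoid output + BCE, dL/dz_out simplifies to (y_hat - y)
        d_out = (y_hat - y) / n
        g["b2"] += d_out
        for j in range(hidden):
            g["W2"][j] += d_out * h[j]
            # Chain rule back through the hidden sigmoid: sigma'(z) = h * (1 - h)
            d_hid = d_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += d_hid
            g["W1"][j][0] += d_hid * x[0]
            g["W1"][j][1] += d_hid * x[1]
    return g

def sgd_step(p, g, lr):
    for j in range(len(p["b1"])):
        p["b1"][j] -= lr * g["b1"][j]
        p["W2"][j] -= lr * g["W2"][j]
        p["W1"][j][0] -= lr * g["W1"][j][0]
        p["W1"][j][1] -= lr * g["W1"][j][1]
    p["b2"] -= lr * g["b2"]

def train(steps=5000, lr=0.5, seed=0):
    p = init_params(seed=seed)
    for _ in range(steps):
        sgd_step(p, backprop(p, XOR), lr)
    return p

p = train()
print(loss(p, XOR), [round(forward(p, x)[1], 3) for x, _ in XOR])
```

Comparing an entry of `backprop` against a central finite difference of `loss` is a quick way to check hand-derived gradients. Whether training reaches a perfect XOR fit depends on the initialization, so treat this as a demonstration of the mechanics rather than a tuned model.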