PCA(Principal Component Analysis) is one of well-known dimensionality reduction techniques. Simply put, we are representing 100-dimensional data with three-dimensional data. Before we get to the complicated part, let's first find out why we should reduce dimensionality.

Why do we reduce dimensionality?

Curse of Dimensionality

Curse of dimensionality is one of the main reasons why we reduce dimensionality. As the catchy name suggests, it means the increase of dimensionality is not that preferable. Image source

Image source

As shown above, the data densely distributed in two-dimensional space are distributed relatively sparsely in three-dimensional space. In other words, as the dimensionality increases, the data needed for explanation increases rapidly. Therefore, if the dimensionality of data is higher than necessary, it becomes more difficult to train a model (too little data given for the dimensionality). This is why we use dimensionality-reduced inputs for a model when the dimensionality of input is too high.

Data Visualization

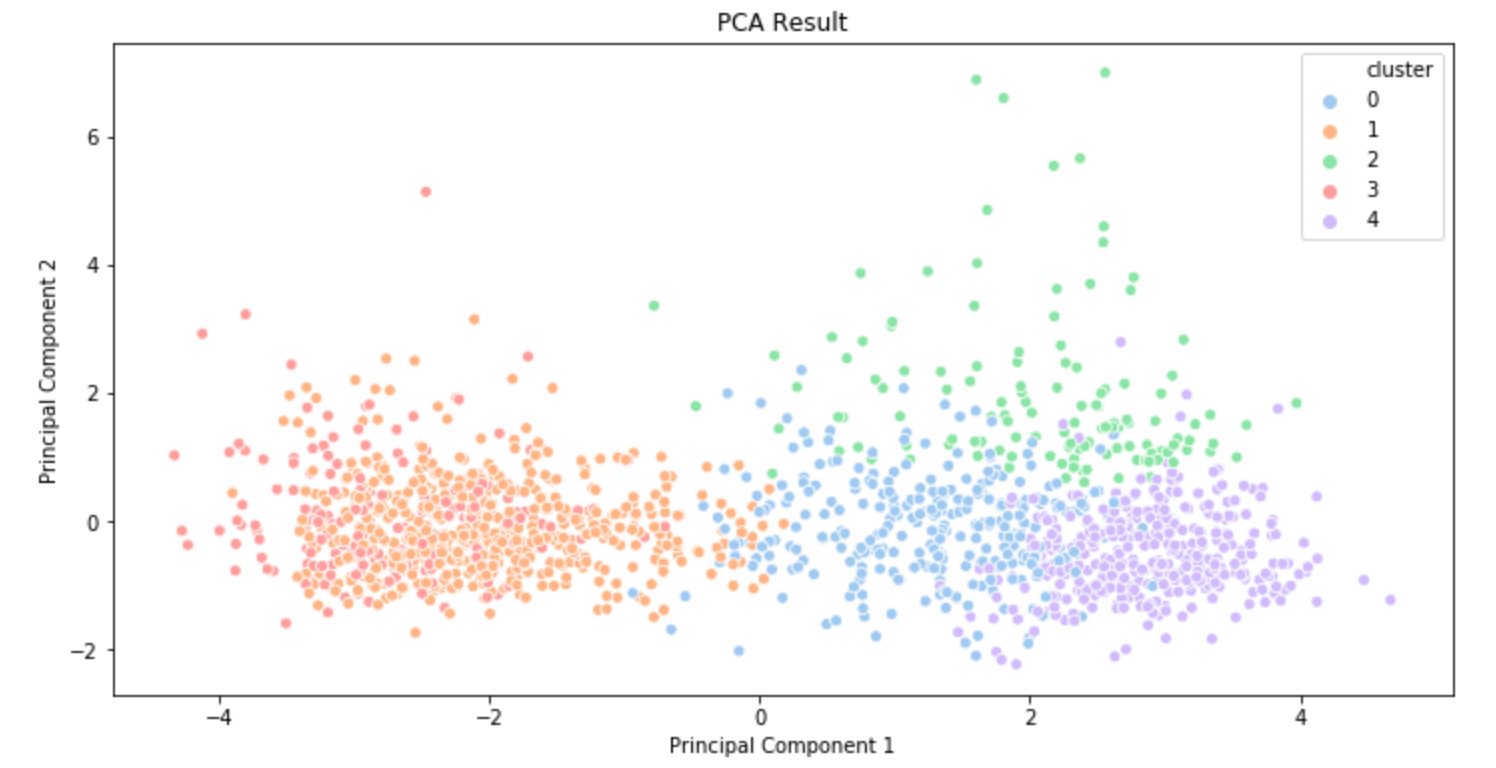

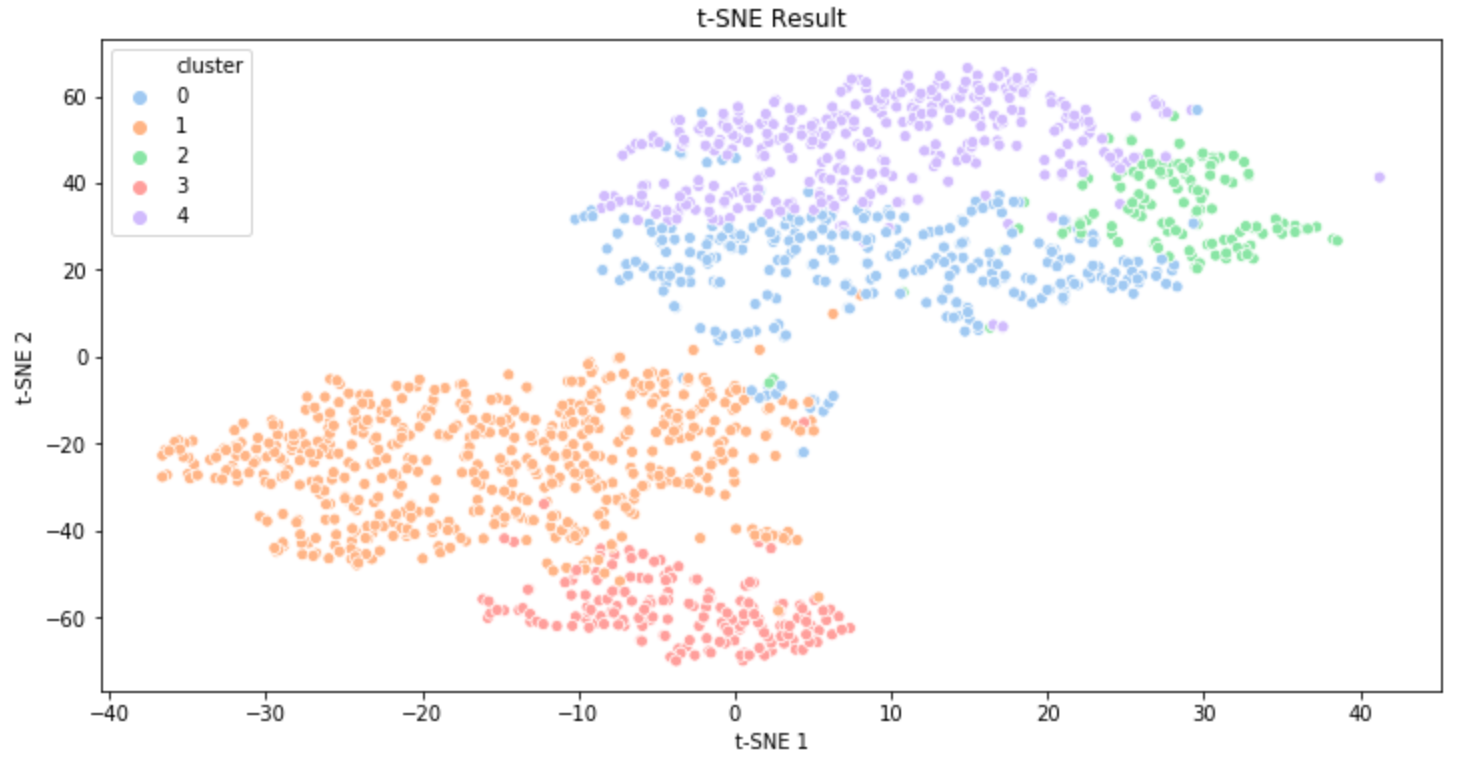

Dimensionality reduction is also used in data visualization. Suppose we just finished a clustering task on 100-dimensional data. In order to "see" if clustering is well performed, 100-dimensional data should be reduced to two-dimensional or three-dimensional data while maintaining the characteristics of data as much as possible. This is when dimensionality reduction techniques such as PCA are used.

The plots above are the clustering results of music data that I created a few months ago. The first result was visualized using PCA and the second result was created using t-SNE(t-distributed Stochastic Neighbor Embedding). (t-SNE is also a commonly used dimensionality reduction technique. You can get some information about t-SNE in this post.

The plots above are the clustering results of music data that I created a few months ago. The first result was visualized using PCA and the second result was created using t-SNE(t-distributed Stochastic Neighbor Embedding). (t-SNE is also a commonly used dimensionality reduction technique. You can get some information about t-SNE in this post.

How PCA Works

Basic Idea

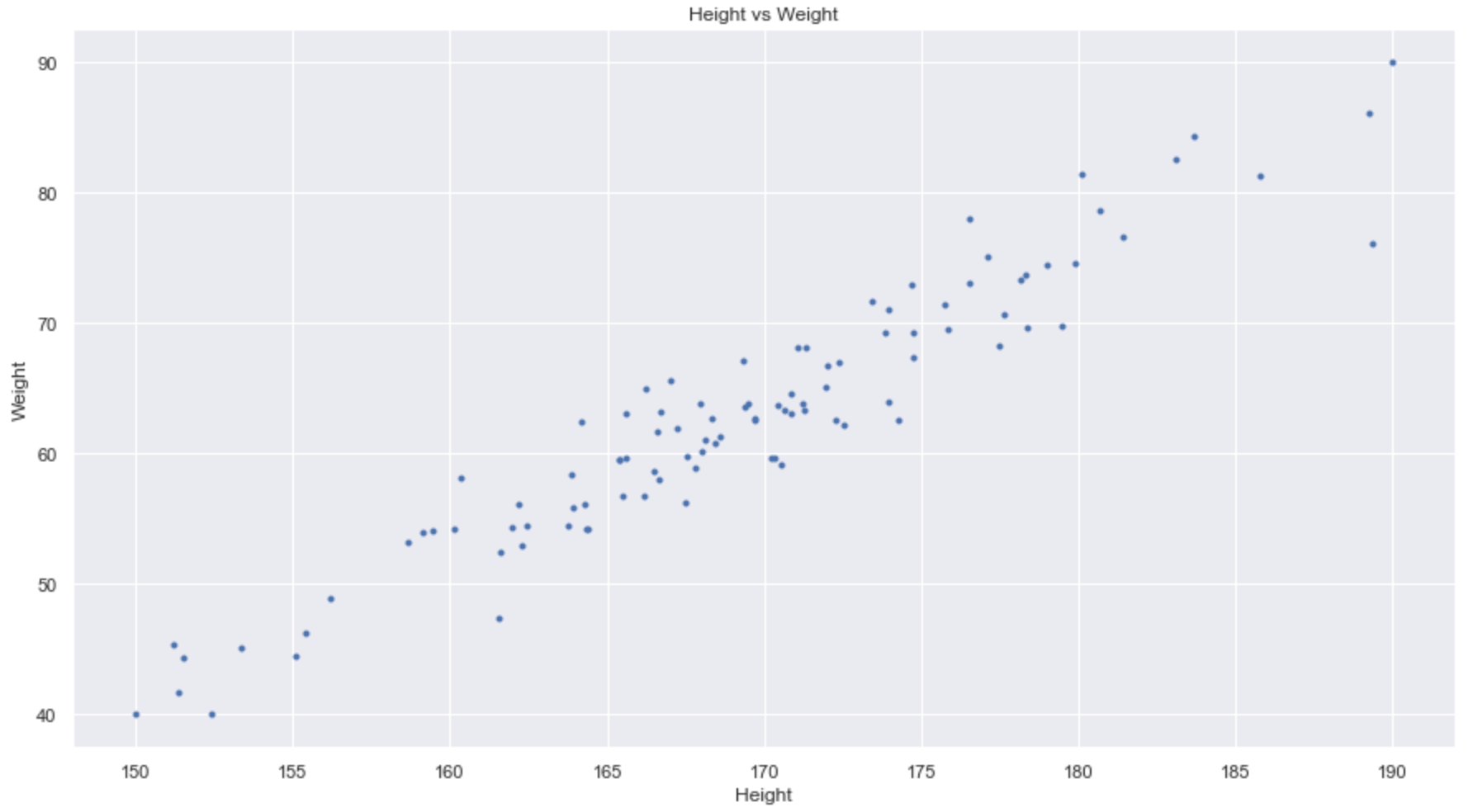

The fundamental idea of PCA is maintaining the characteristics of data. Let's say that a set of two-dimensional data is given as follows. Suppose we reduce the data to one-dimensional data. What does it mean to reduce dimensionality while maintaining the characteristics of the data?

Suppose we reduce the data to one-dimensional data. What does it mean to reduce dimensionality while maintaining the characteristics of the data? Image source

Image source

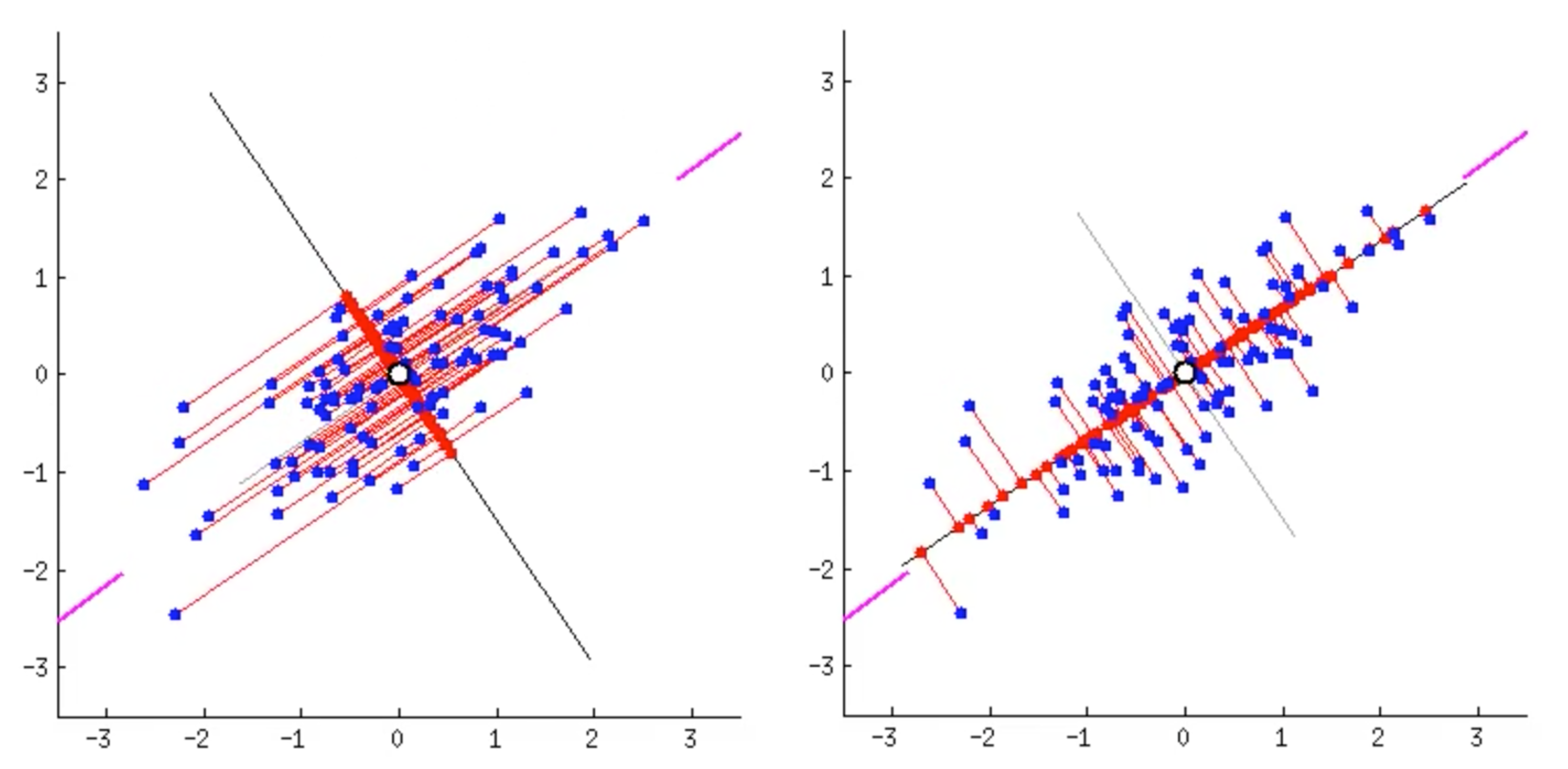

The plots above show two different cases of dimensionality reduction(blue dots to red dots). Projection is used in both cases, yet the directions of the projection are different. In the left case, the projected data(red dots) are densely concentrated in the center. Even the distant data points are projected close to other points. But the situation on the right is different. The projected data are quite widely distributed on the black line (variance is large). In other words, the characteristics of data is well preserved in the right case.

Mathematical Interpretation

So, the key is maintaining the characteristics of data. As mentioned above, it can be done by keeping the variance of data to the maximum. Since the basic ideas have been organized, let's get into the math part.

Let's say we have -dimensional data. If we say that the first vector to project the data (black line in the example above) is , the problem can be summarized as follows.

To solve this problem, we first need to centralize .

Centralizing simply means moving the whole data to the origin. Centralizing only moves the location of data and does not change the overall shape of the data. Since we only care about the direction of , we may use instead of . Therefore the optimization problem can be rewritten as below.

Let's look at for a moment. can be written ( is the -th column vector of )

The expectation of is thus

Since is a centralized matrix, the value of is zero. Therefore equals zero. Now let's get back to the original problem.

Since is a quadratic form, we cannot find without any constraint. But as mentioned above, we only care about the direction of . Thus, the length of can be set to 1. Using Lagrangian multiplier method,

Let's apply the result to the optimization problem.

Voilà! The we need is the vector that maximizes . Since is an eigenvalue of () according to (1), is the eigenvector of the covariance matrix of corresponding to the largest eigenvalue. Such is called the first principal component. The second principal component is the vector that is orthogonal to the first principal component and maximizes the variance of the projected data (you can get the rest of the principal components in the same way).

How PCA is Related to SVD

PCA is also a concept deeply related to SVD(Singular Value Decomposition).

consists of the eigenvectors of and consists of the eigenvectors of . What happens if we centralize ? (The ,, below are different from the ,, above, but for convenience they are denoted as ,,)

The column vectors of will be the eigenvectors of . Yes, the column vectors of are actually the eigenvectors of the covariance matrix of . If the singular values in are listed in descending order, the first column vector of will be the first principal component of . Simply put, we can get the principal components of by implementing SVD on centralized .

So far, we have looked at the most basic form of PCA. There are some advanced PCA techniques that are suitable for particular cases - one example is dual PCA which is used when the value of is much greater than (when the dimensionality is much higher than the number of data). This lecture video will be quite helpful if you want to know additional PCA techniques.