Prompting

1. P-Tuning v2: Prompt Tuning Can Be Comparable to Fine-tuning Universally Across Scales and Tasks (ACL 2022)

Advantage of Prompt Tuning: it saves memory and storage. Limitations of Prompt Tuning so far: performance is poor on normal-sized pretrained models, and it does not work well on hard labeling tasks (it lacks universality). This paper...
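To make the memory and storage point concrete, here is a rough back-of-the-envelope sketch. Every number in it is a hypothetical assumption (a 300M-parameter model, embedding size 1024, prompt length 100, fp32 weights, 10 tasks), and it counts only an input-layer soft prompt, not any particular paper's exact setup.

```python
# All sizes below are hypothetical, chosen only to make the arithmetic concrete.
BYTES_PER_PARAM = 4  # assumes fp32 weights


def storage_mb(n_params: int) -> float:
    """Size in megabytes of n_params values stored as fp32."""
    return n_params * BYTES_PER_PARAM / 1e6


def soft_prompt_params(prompt_len: int, embed_dim: int) -> int:
    """Trainable parameters of an input-layer soft prompt."""
    return prompt_len * embed_dim


model_params = 300_000_000  # hypothetical "normal-sized" model
prompt_params = soft_prompt_params(prompt_len=100, embed_dim=1024)
n_tasks = 10

# Finetuning stores one full model copy per task.
finetune_total = n_tasks * storage_mb(model_params)
# Prompt tuning stores one shared frozen model plus one small prompt per task.
prompt_total = storage_mb(model_params) + n_tasks * storage_mb(prompt_params)

print(f"per-task prompt: {storage_mb(prompt_params):.4f} MB")
print(f"finetuning, {n_tasks} tasks: {finetune_total:.1f} MB")
print(f"prompt tuning, {n_tasks} tasks: {prompt_total:.3f} MB")
```

Under these assumptions the per-task cost drops from a full model copy to a few hundred kilobytes, which is where the storage saving comes from.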

2. The Power of Scale for Parameter-Efficient Prompt Tuning (EMNLP 2021)

In this paper, "model tuning" means "fine-tuning". "Prompt tuning" is used to learn "soft prompts". It outperforms the discrete prompts used with GPT-3 in the few-shot setting. Using T5 models of several sizes...
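The idea of learning a soft prompt while the model stays frozen can be sketched with a toy example. This is not the paper's implementation: the "model" below is a hypothetical frozen embedding table with mean pooling and a fixed linear readout, and all names and values (`frozen_embed`, `frozen_w`, the two training examples) are made up for illustration. Only the prompt vectors are updated.

```python
# Toy sketch: every value here is hypothetical; the "model" is just a
# frozen embedding table, mean pooling, and a frozen linear readout.
PROMPT_LEN, DIM = 1, 2

frozen_embed = {"good": [1.0, 0.0], "bad": [-1.0, 0.0], "movie": [0.0, 1.0]}
frozen_w = [1.0, 1.0]

# The soft prompt is the only trainable state.
soft_prompt = [[0.0] * DIM for _ in range(PROMPT_LEN)]

data = [(["good", "movie"], 1.0), (["bad", "movie"], 0.0)]


def predict(tokens, prompt):
    # Prepend the prompt vectors to the frozen token embeddings.
    seq = prompt + [frozen_embed[t] for t in tokens]
    pooled = [sum(vec[d] for vec in seq) / len(seq) for d in range(DIM)]
    return sum(p * w for p, w in zip(pooled, frozen_w))


def mse(prompt):
    return sum((predict(x, prompt) - y) ** 2 for x, y in data) / len(data)


def train_step(prompt, lr=1.0):
    # Analytic gradient of the MSE with respect to each prompt vector;
    # frozen_embed and frozen_w are read but never written.
    grads = [[0.0] * DIM for _ in prompt]
    for tokens, y in data:
        seq_len = len(prompt) + len(tokens)
        residual = predict(tokens, prompt) - y
        for k in range(len(prompt)):
            for d in range(DIM):
                grads[k][d] += 2 * residual * frozen_w[d] / seq_len / len(data)
    return [[p - lr * g for p, g in zip(vec, gvec)]
            for vec, gvec in zip(prompt, grads)]


initial_loss = mse(soft_prompt)
for _ in range(50):
    soft_prompt = train_step(soft_prompt)
final_loss = mse(soft_prompt)

print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

In this toy the prompt can only shift the pooled representation, so the loss drops but does not reach zero; a real frozen Transformer lets the prompt interact with the input through attention, which this sketch does not model.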

3. SPoT: Better Frozen Model Adaptation through Soft Prompt Transfer (ACL 2022)

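Note: what this paper calls ModelTuning is the same as finetuning, that is, the finetuning that trains all of the model's parameters. Taking a model size of 11 billion parameters as the reference point, Prompt Tuning compared with finetuning performance...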

4. On Transferability of Prompt Tuning for Natural Language Processing (NAACL 2022)

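Advantage of Prompt Tuning? There is no need to train all of the PLM's parameters; training only a small number of parameters still gives decent performance! Drawback of Prompt Tuning? Very few parameters are trained, right? So convergence takes a long time.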

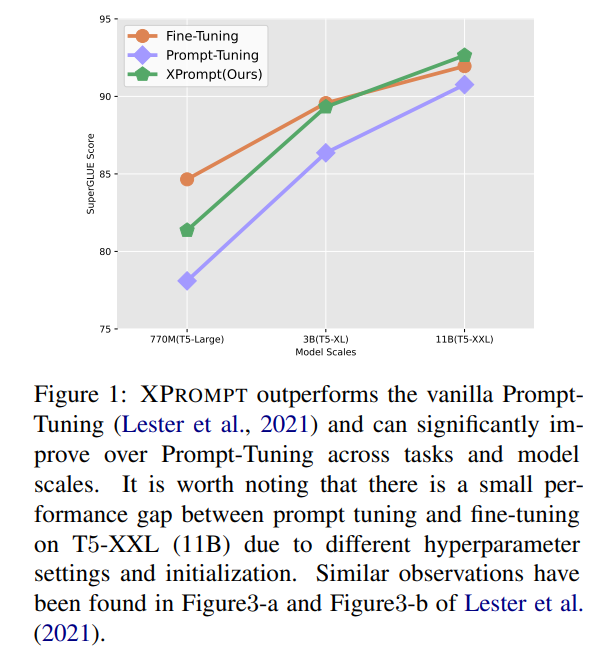

5. XPrompt: Exploring the Extreme of Prompt Tuning (EMNLP 2022)

Ma, F., Zhang, C., Ren, L., Wang, J., Wang, Q., Wu, W., ... & Song, D. (2022). XPrompt: Exploring the Extreme of Prompt Tuning. arXiv preprint arXiv:2