Paper Reviews

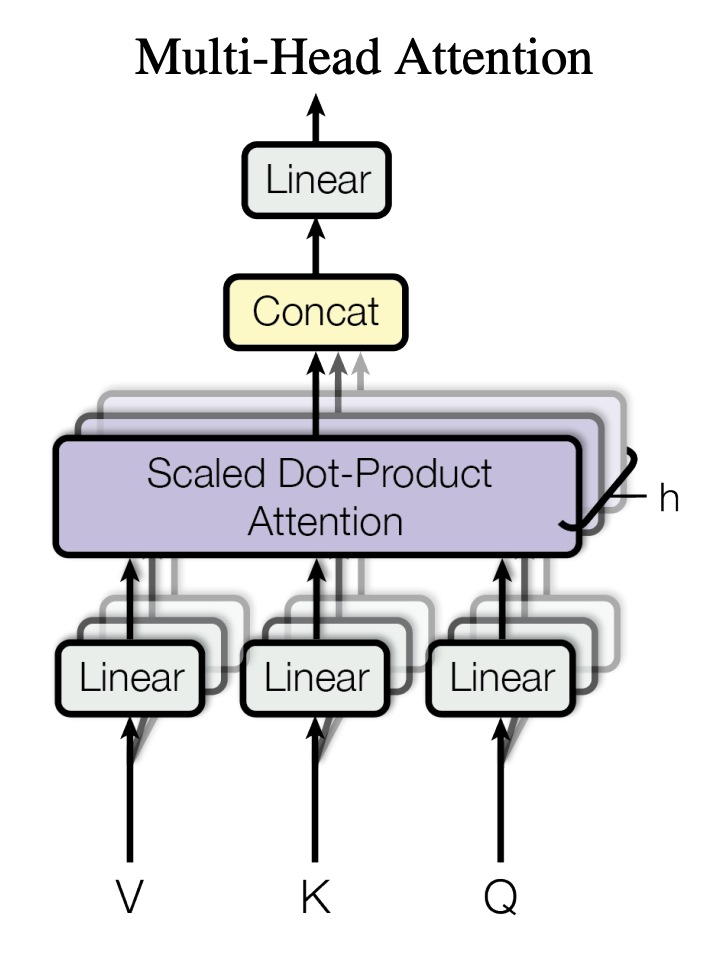

1.[Paper Review] Attention is all you need (Digging into the Transformer Architecture) - 1. What is Attention?

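Now that Chat-GPT has been followed by GPT-4, the LLM (Large Language Model) is a topic that cannot be left out of natural language processing. Both the now-famous GPT and BERT, a model that has been and still is widely used, started from the Transformer architecture, which appeared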

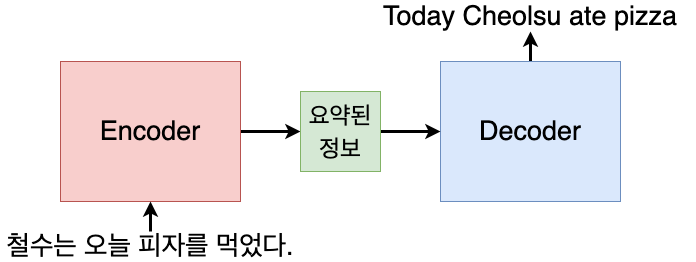

2.[Paper Review] Attention is all you need (Digging into the Transformer Architecture) - 2. The Transformer's Encoder and Decoder

Previous post: [Paper Review] Attention is all you need (Digging into the Transformer Architecture) - 1. What is Attention? The model underlying ChatGPT, GPT-4, and BARD, introduced in the paper Attention is all you need

3.[Paper Review] Gorilla: Large Language Model Connected with Massive APIs

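Paper source: https://arxiv.org/abs/2305.15334 The period since ChatGPT could be called the heyday of natural language processing. During this heyday, cute LLMs (Large Language Models) named after all sorts of animals, such as LLaMA and Alpaca, have in large numbers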

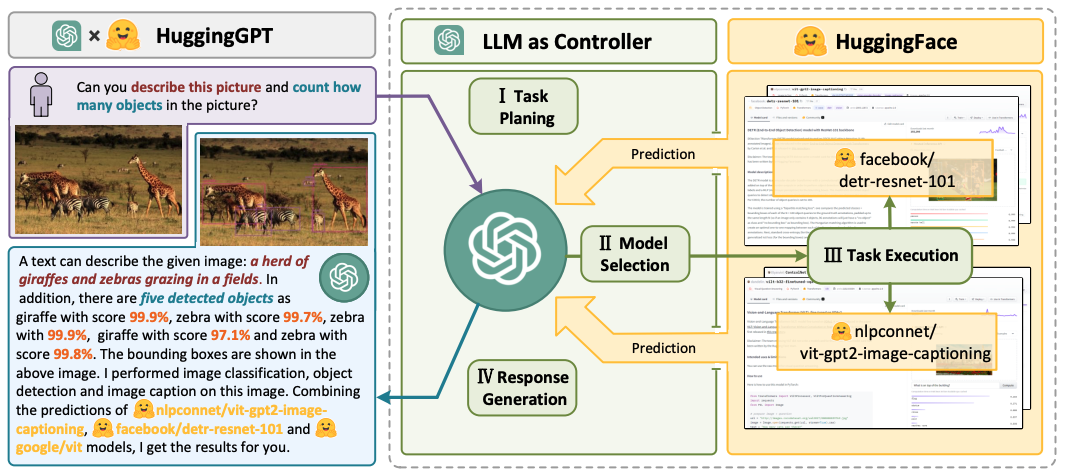

4.[Paper Review] HuggingGPT: Solving AI Tasks with ChatGPT and its friends in Hugging Face

Paper source: https://arxiv.org/pdf/2303.17580.pdf Demo source: https://huggingface.co/spaces/microsoft/HuggingGPT Including LLMs (Large Language Models), with performance that is extremely good

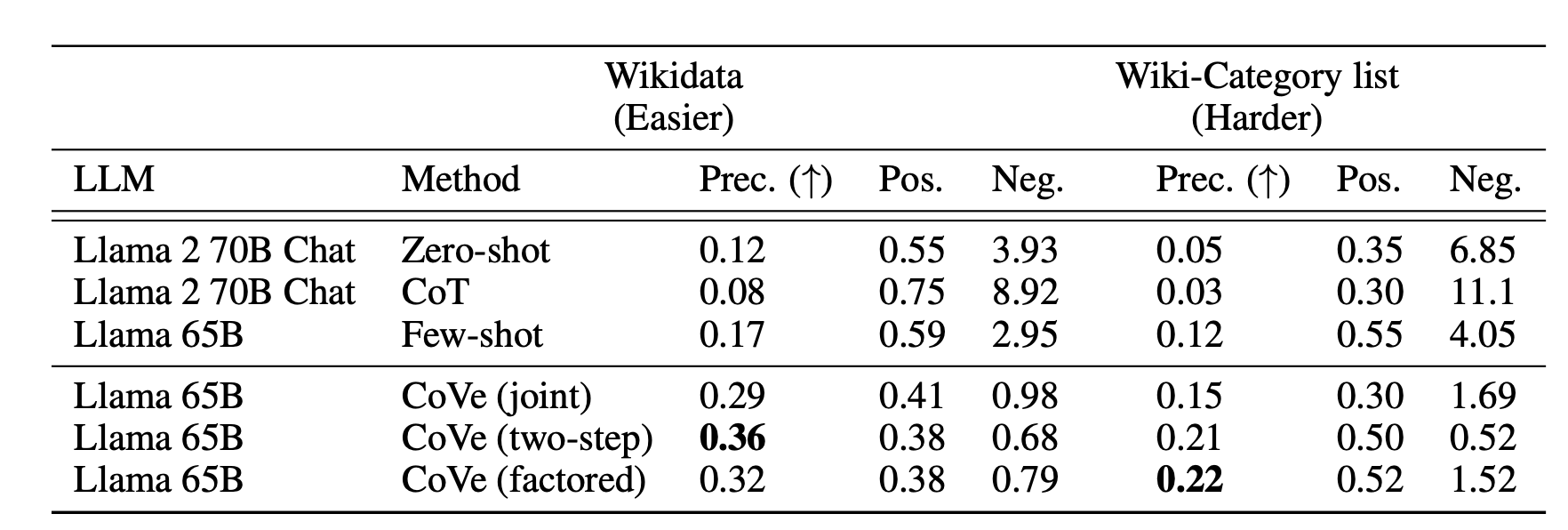

5.[Paper Review] Chain-of-Verification Reduces Hallucination in Large Language Models

Paper source: https://arxiv.org/abs/2309.11495 Hallucination (generating false information) is regarded as a representative weakness of LLMs (Large Language Models). Research aimed at reducing it is ongoing, and

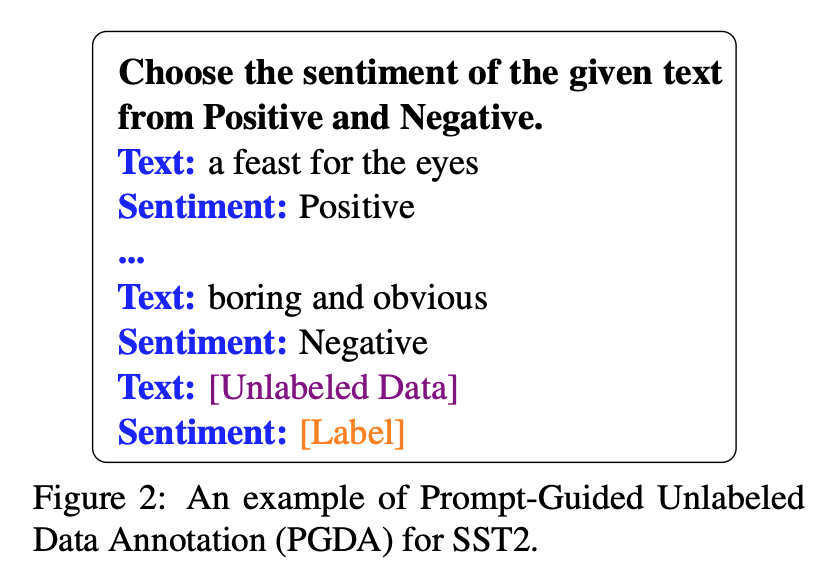

6.[Paper Review] Is GPT-3 a Good Data Annotator?

Source: https://arxiv.org/abs/2212.10450 Overview: Many NLP tasks require large amounts of labeled data, and this paper reports that GPT-3 performs reasonably when used for the labeling work. Paper link: https://arxiv.org/abs

7.The COT COLLECTION: Improving Zero-shot and Few-shot Learning of Language Models via Chain-of-Thought Fine-Tuning

Source: https://arxiv.org/abs/2305.14045 Among Prompt engineering methods, which study "how to ask well" when querying an LLM, a representative one is As mentioned earlier, prompting with CoT L