💡 Problem

Build a model that predicts the digit (0 to 9) from the value of each pixel in an image.

🔥 Model used for prediction: DNN (Deep Neural Network), multi-class classification

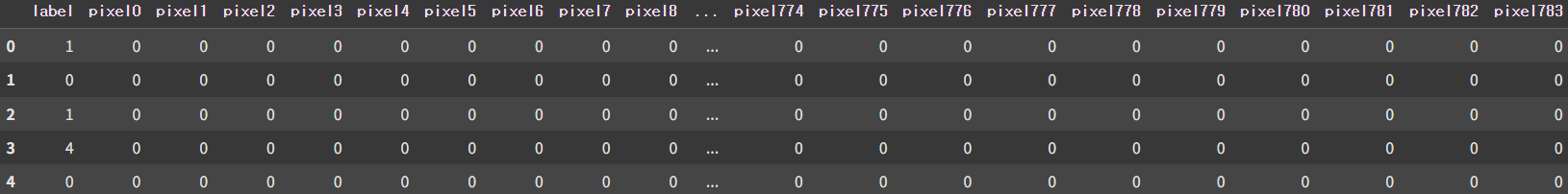

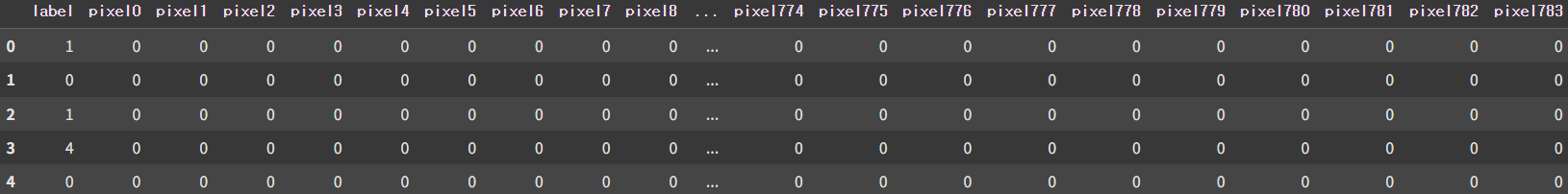

📖 Dataset

Each row holds one handwritten digit image of 28 x 28 (784) pixels, with the 2D pixel data flattened into 1D.
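As a small illustration of that layout, the sketch below reshapes a flat 784-value vector into a 28 x 28 image and back. The `flat_pixels` array is a made-up stand-in for one CSV row, and the row-major ordering is an assumption of this sketch.

```python
import numpy as np

# Hypothetical stand-in for one CSV row: 784 pixel values in row-major order.
flat_pixels = np.arange(784)

# Restore the 2-D image: row r, column c of the image is flat index r * 28 + c.
image = flat_pixels.reshape(28, 28)

# Flattening again gives back the original 1-D layout.
restored = image.reshape(-1)
```

This is the same `reshape(28, 28)` call that is needed before an image can be passed to `imshow`.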

📒 Code

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler
from sklearn.model_selection import train_test_split
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Flatten, Dense
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.callbacks import EarlyStopping
from sklearn.metrics import classification_report

📚 Raw Data Loading

# Raw Data Loading
df = pd.read_csv('/content/drive/MyDrive/KDT/kaggle/Digit Recognizer/01. train.csv')
display(df.head())

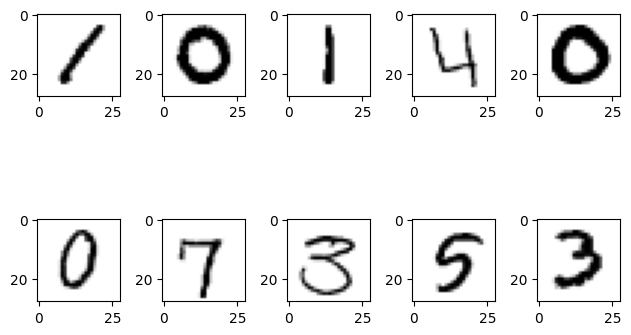

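📚 Data Visualization

# Pixel columns only (the 'label' column is dropped)
pixels = df.drop('label', axis=1).values
fig = plt.figure()
axes = []
for i in range(10):
    axes.append(fig.add_subplot(2, 5, i + 1))
    axes[i].imshow(pixels[i].reshape(28, 28), cmap='gray_r')
plt.tight_layout()
plt.show()

To see what the data looks like, I plotted just the first 10 samples as images. This dataset has already been cleaned of missing values, outliers, and duplicates, so no separate cleaning step was needed.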

📚 Normalization

x_data = df.drop('label', axis=1, inplace=False).values
t_data = df['label'].values.reshape(-1, 1)
scaler = MinMaxScaler()
scaler.fit(x_data)
x_data_norm = scaler.transform(x_data)

The pixel values are integers from 0 to 255, so I applied Min-Max scaling to bring them into the 0 to 1 range before training.
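To make the scaling step concrete, here is a minimal NumPy sketch of per-column Min-Max scaling. The helper `min_max_scale` and the toy `pixels` array are hypothetical, and mapping constant columns to 0 is an assumption of this sketch rather than a statement about the library's internals.

```python
import numpy as np

def min_max_scale(x):
    """Scale each column to [0, 1] using that column's own min and max."""
    x = np.asarray(x, dtype=np.float64)
    col_min = x.min(axis=0)
    col_range = x.max(axis=0) - col_min
    # Constant columns (e.g. border pixels that are always 0) have range 0;
    # divide by 1 instead so they map to 0 rather than NaN.
    col_range[col_range == 0] = 1.0
    return (x - col_min) / col_range

# Toy data: 3 samples, 3 pixel columns (the first column is constant).
pixels = np.array([[0, 0, 255],
                   [0, 128, 51],
                   [0, 255, 0]])
scaled = min_max_scale(pixels)
```

Because the minimum and maximum are computed per column, the same fitted values have to be reused for any later data, which is why the test set should go through `scaler.transform` rather than a fresh `fit`.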

📚 Data Split

x_data_train_norm, x_data_test_norm, t_data_train, t_data_test = \
    train_test_split(x_data_norm, t_data,
                     test_size=0.2, stratify=t_data)

To validate the model after training, I split the data into a training set and a test set (80/20), stratified by label.
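The idea behind the `stratify` argument can be sketched in plain NumPy: split each class separately so that both sets keep the same class proportions. The function `stratified_split` and the toy labels are hypothetical and only illustrate the concept; this is not how the library implements it.

```python
import numpy as np

def stratified_split(labels, test_size=0.2, seed=0):
    """Return (train_idx, test_idx) with each class split in the same ratio."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels).ravel()
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        # Shuffle the indices of this class, then cut off the test share.
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(len(idx) * test_size))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.array(train_idx), np.array(test_idx)

# Toy, imbalanced labels: 50 zeros, 30 ones, 20 twos.
labels = np.repeat([0, 1, 2], [50, 30, 20])
train_idx, test_idx = stratified_split(labels)
test_counts = np.bincount(labels[test_idx])
```

With a 20% test share, the test set here ends up with 10, 6, and 4 samples of the three classes, matching the 50/30/20 ratio of the full data.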

📚 DNN Model Implementation

model = Sequential()
model.add(Flatten(input_shape=(784,)))
model.add(Dense(units=64, activation='relu'))
model.add(Dense(units=128, activation='relu'))
model.add(Dense(units=10, activation='softmax'))
model.compile(optimizer=SGD(learning_rate=1e-1),
              loss='sparse_categorical_crossentropy',
              metrics=['acc'])
es_callback = EarlyStopping(monitor='val_loss', patience=5,
                            restore_best_weights=True, verbose=1)
model.fit(x_data_train_norm, t_data_train,
          epochs=100, verbose=1,
          validation_split=0.3, batch_size=100,
          callbacks=[es_callback])

Because this is a multi-class classification model, the output layer uses the 'softmax' activation function. The target labels 0 to 9 would normally need One-Hot Encoding, but I used 'sparse_categorical_crossentropy' as the loss function so that the integer labels are handled inside the model. The result is a very simple DNN model.
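The relationship between integer labels and one-hot labels can be checked with a small NumPy sketch. The helper functions and the toy `logits` below are hypothetical; they only illustrate that cross-entropy computed from integer labels equals cross-entropy computed from the one-hot version of the same labels.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max before exponentiating for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def sparse_cross_entropy(probs, int_labels):
    # Pick out the predicted probability of the true class for each row.
    true_class_probs = probs[np.arange(len(int_labels)), int_labels]
    return -np.log(true_class_probs).mean()

def one_hot_cross_entropy(probs, one_hot_labels):
    # Only the true-class term survives the multiplication by the one-hot row.
    return -(one_hot_labels * np.log(probs)).sum(axis=1).mean()

logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 2.5, 0.3]])
labels = np.array([0, 1])          # integer class labels
one_hot = np.eye(3)[labels]        # the same labels, one-hot encoded

probs = softmax(logits)
loss_sparse = sparse_cross_entropy(probs, labels)
loss_one_hot = one_hot_cross_entropy(probs, one_hot)
```

Both losses come out identical, so the sparse variant mainly saves the explicit encoding step and the memory for the one-hot matrix.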

📚 Model Evaluation

print(classification_report(t_data_test, np.argmax(model.predict(x_data_test_norm), axis=1)))
#               precision    recall  f1-score   support
#
#            0       0.97      0.98      0.98       827
#            1       0.97      0.99      0.98       937
#            2       0.96      0.96      0.96       835
#            3       0.94      0.96      0.95       870
#            4       0.98      0.94      0.96       814
#            5       0.95      0.95      0.95       759
#            6       0.98      0.97      0.98       827
#            7       0.97      0.96      0.97       880
#            8       0.95      0.94      0.95       813
#            9       0.93      0.95      0.94       838
#
#     accuracy                           0.96      8400
#    macro avg       0.96      0.96      0.96      8400
# weighted avg       0.96      0.96      0.96      8400

The evaluation on the held-out test split shows an F1 score of 0.96 (both macro and weighted average).
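For reference, the per-class F1 values and their macro average can be reproduced by hand. The sketch below uses a hypothetical helper `per_class_f1` and made-up toy labels, not the actual predictions from this post.

```python
import numpy as np

def per_class_f1(y_true, y_pred, n_classes):
    """Compute the F1 score of each class from true and predicted labels."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    f1 = np.zeros(n_classes)
    for c in range(n_classes):
        tp = np.sum((y_pred == c) & (y_true == c))   # correctly predicted as c
        fp = np.sum((y_pred == c) & (y_true != c))   # wrongly predicted as c
        fn = np.sum((y_pred != c) & (y_true == c))   # missed samples of c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1[c] = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    return f1

# Toy labels for three classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
f1 = per_class_f1(y_true, y_pred, n_classes=3)
macro_f1 = f1.mean()   # unweighted mean over classes
```

The macro average treats every class equally, while the weighted average in the report weights each class by its support.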

📚 Test Set Processing and Submission

# Test Data Loading
test = pd.read_csv('/content/drive/MyDrive/KDT/kaggle/Digit Recognizer/02. test.csv')
# Test Data Preprocessing (reuse the scaler fitted on the training data)
test_data_norm = scaler.transform(test.values)
# Prediction
test_result = model.predict(test_data_norm)
test_result = np.argmax(test_result, axis=1)
# Submission Data Loading
submission = pd.read_csv('/content/drive/MyDrive/KDT/kaggle/Digit Recognizer/03. sample_submission.csv')
# Fill in the predictions and export
submission['Label'] = test_result
submission.to_csv('Digit_Recognition_DNN.csv', index=False)

I loaded the test data, preprocessed it exactly like the training data, inserted the predictions into the submission file, and submitted it.
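The step from softmax probabilities to submission rows can be sketched without the real files. The `probs` array is made up, and the `ImageId` column with 1-based IDs is an assumption about the submission layout; only the `Label` column appears in the code above.

```python
import csv
import io
import numpy as np

# Hypothetical softmax outputs for three test images (rows sum to 1).
probs = np.array([[0.1, 0.7, 0.2],
                  [0.8, 0.1, 0.1],
                  [0.2, 0.2, 0.6]])

# The predicted digit is the index of the largest probability in each row.
labels = np.argmax(probs, axis=1)

# Write an in-memory CSV with 1-based image IDs (assumed column layout).
buffer = io.StringIO()
writer = csv.writer(buffer, lineterminator="\n")
writer.writerow(["ImageId", "Label"])
for image_id, label in enumerate(labels, start=1):
    writer.writerow([image_id, int(label)])
submission_text = buffer.getvalue()
```

Writing to a `StringIO` buffer keeps the sketch free of file paths; in practice the same rows would go to a `.csv` file.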

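✨ Result

💭 Reflections

This dataset is very well cleaned, so I was able to get a fairly high score without any separate preprocessing. Building on this, I would like to try more difficult image processing problems.

🔗 Problem Source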

https://www.kaggle.com/competitions/digit-recognizer