Fuzzy C-Means Clustering

Introduction

Fuzzy C-Means (FCM) is an extension of the traditional K-Means clustering algorithm that allows data points to belong to multiple clusters with varying degrees of membership. This approach is based on the concept of fuzzy logic, where the binary membership in conventional clustering methods is replaced with a probability or degree ranging between 0 and 1. FCM is particularly useful in scenarios where the boundaries between clusters are not clearly defined, making it a popular choice for pattern recognition, image segmentation, and data analysis where overlapping clusters are expected.

Background and Theory

In contrast to hard clustering methods like K-Means, where each data point is assigned to exactly one cluster, FCM introduces the concept of soft clustering. Each data point has a degree of belonging to all clusters, represented by a membership grade. This fuzzy partitioning is achieved by minimizing an objective function that reflects the distance of data points from the cluster centers, weighted by their membership grades.

Objectives

The objective function of FCM is defined as:

where:

- is the number of data points,

- is the number of clusters,

- is the membership degree of data point in cluster ,

- is the centroid of cluster ,

- is the squared Euclidean distance between data point and the centroid of cluster ,

- is the fuzzification parameter that controls the level of cluster fuzziness; a higher value results in fuzzier clusters.

The goal is to minimize under the constraints that:

Procedural Steps

- Initialization: Choose the number of clusters , initialize cluster centroids randomly, and set the fuzzification parameter .

- Membership Update: Update the membership for each data point and each cluster.

- Centroid Update: Update the centroids for each cluster.

- Repeat: Repeat steps 2 and 3 until the centroids do not significantly change between iterations or a predefined number of iterations is reached.

Mathematical Formulations

Membership Degree

The membership degree indicates how strongly data point belongs to cluster , calculated as:

This formulation ensures that the sum of the membership degrees of across all clusters equals 1.

Centroid Calculation

The centroid of each cluster is the weighted mean of all points, where the weights are the membership degrees raised to the power of :

This reflects the fuzzy nature of the clustering by considering the degree to which each point belongs to each cluster.

Implementation

Parameters

n_clusters:int

Number of clusters to estimate

max_iter:int, default = 100

Maximum iteration

m:float, default = 2.0

Fuzziness parameter (larger makes labels softer)

tol:float, default = 0.00001

Threshold for early convergence

Examples

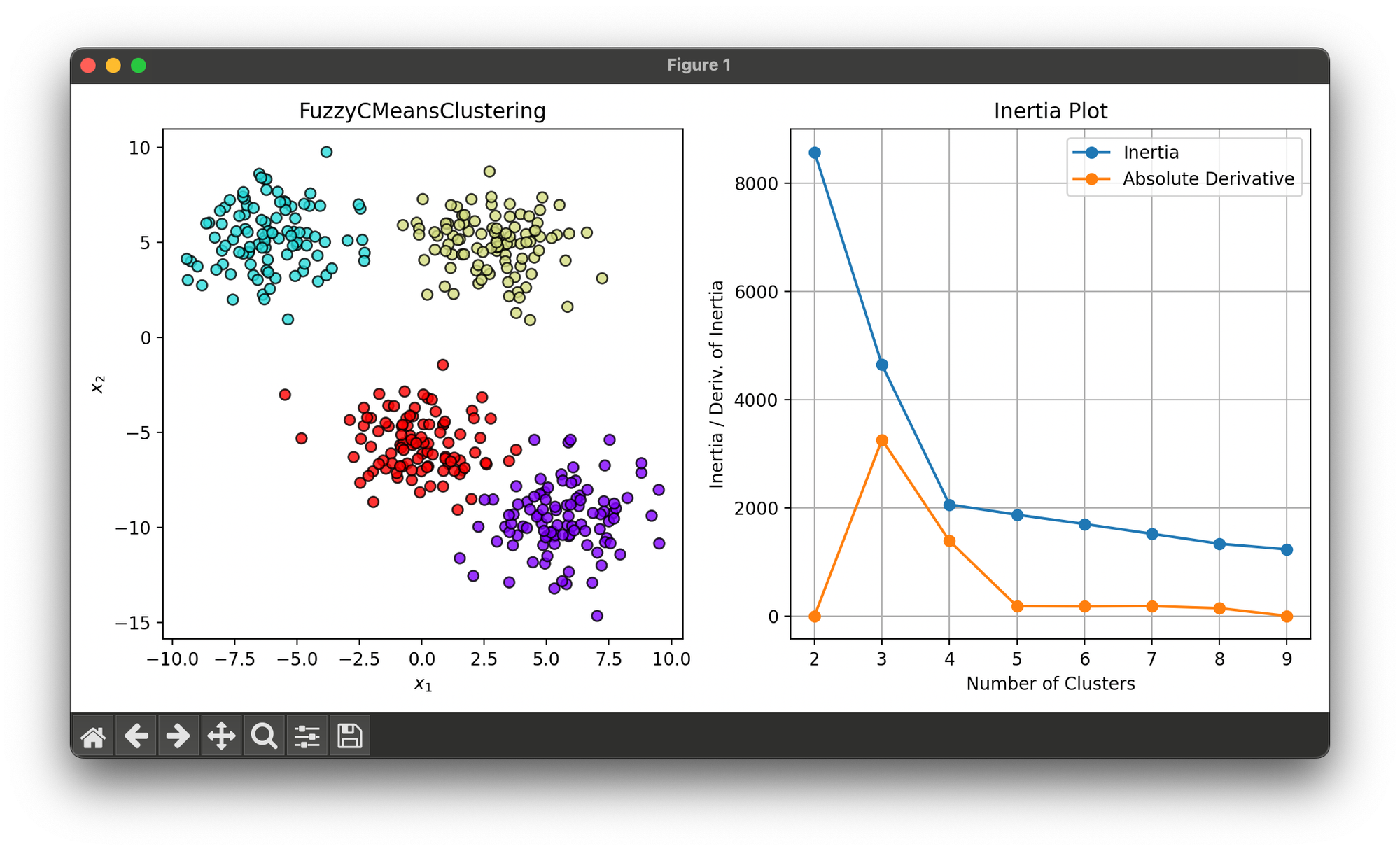

Test on synthesized dataset with 4 blobs:

from luma.clustering.kmeans import FuzzyCMeansClustering

from luma.metric.clustering import Inertia

from luma.visual.evaluation import ClusterPlot, InertiaPlot

from sklearn.datasets import make_blobs

import matplotlib.pyplot as plt

X, y = make_blobs(n_samples=400,

centers=4,

cluster_std=1.7,

random_state=10)

scores, labels, models = [], [], []

for i in range(2, 10):

fcm = FuzzyCMeansClustering(n_clusters=i,

max_iter=100,

m=2.0,

random_state=42)

fcm.fit(X)

scores.append(Inertia.score(X, fcm.centers))

labels.append(fcm.labels)

models.append(fcm)

fig = plt.figure(figsize=(10, 5))

ax1 = fig.add_subplot(1, 2, 1)

ax2 = fig.add_subplot(1, 2, 2)

clst = ClusterPlot(models[2], X)

clst.plot(ax=ax1)

inp = InertiaPlot(scores, list(range(2, 10)))

inp.plot(ax=ax2, show=True)

Applications

- Image Processing and Segmentation: Identifying and separating different regions or objects within an image.

- Pattern Recognition: Classifying patterns where ambiguity or overlapping classes are present.

- Data Analysis: Analyzing complex datasets with overlapping clusters or non-distinct cluster boundaries.

Strengths and Limitations

Strengths

- Flexibility: Allows for more nuanced cluster assignments, which can capture the inherent fuzziness in real-world data.

- Robustness: More robust to noise and outliers compared to hard clustering methods.

Limitations

- Sensitivity to Initialization: The choice of initial centroids can affect the final clustering result.

- Selection of Parameters: The number of clusters and the fuzzification parameter need to be chosen carefully and can significantly impact the outcome.

- Computational Complexity: Requires more computation, especially for large datasets, due to the iterative update of membership degrees and centroids.

Advanced Topics

- Optimization Techniques: Methods to optimize the selection of initial centroids and parameters to improve convergence and robustness.

- Hybrid Models: Combining FCM with other algorithms, such as genetic algorithms or neural networks, to enhance clustering performance.

- Application-Specific Adaptations: Customizing FCM for specific domains, such as spatial data clustering or bioinformatics, by integrating domain-specific knowledge into the clustering process.

References

- Bezdek, James C. "Pattern recognition with fuzzy objective function algorithms." Kluwer Academic Publishers, 1981.

- Pal, Nikhil R., and James C. Bezdek. "On cluster validity for the fuzzy c-means model." IEEE Transactions on Fuzzy Systems 3.3 (1995): 370-379.