Precision

Introduction

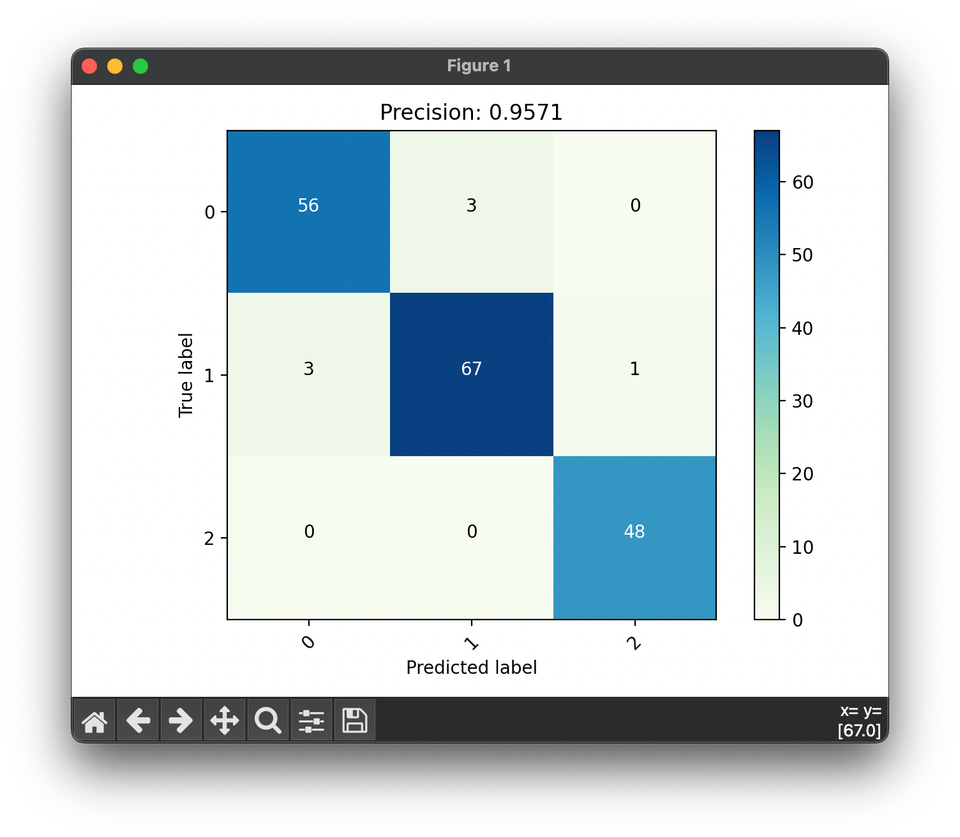

Precision is a critical metric in the evaluation of classification models within machine learning, particularly in scenarios where the cost of false positives is high. It measures how reliable the model's positive predictions are: the proportion of positive predictions that are actually correct. This makes it important in fields such as medical diagnosis, spam detection, and fraud detection, where false alarms are costly.

Background and Theory

Precision, also known as positive predictive value, focuses on the quality of the positive class predictions. It is defined as the ratio of true positive predictions to the total number of positive predictions (the sum of true positives and false positives). Precision is particularly important in situations where the goal is to reduce false positives.

The formula for precision is given by:

$$\text{Precision} = \frac{TP}{TP + FP}$$

where:

- TP (True Positives) is the number of positive instances correctly identified as positive,

- FP (False Positives) is the number of negative instances incorrectly identified as positive.
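The formula translates directly into a small function. This is a minimal sketch: the name `precision` is illustrative, and returning 0.0 when there are no positive predictions (where the ratio is undefined) is an assumed convention; scikit-learn's `precision_score`, for example, exposes a `zero_division` parameter for choosing this behaviour.

```python
def precision(tp: int, fp: int) -> float:
    """Return TP / (TP + FP) for non-negative counts."""
    if tp < 0 or fp < 0:
        raise ValueError("counts must be non-negative")
    predicted_positive = tp + fp
    if predicted_positive == 0:
        # Undefined when nothing is predicted positive; 0.0 is an assumed convention
        return 0.0
    return tp / predicted_positive


print(precision(40, 10))  # 0.8
```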

Procedural Steps

To calculate the precision of a machine learning model, follow these steps:

- Model Prediction: Use your model to make predictions on a test dataset.

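- Identify True and False Positives: Compare the model's predictions with the ground-truth labels. Among the instances predicted as positive, count those that are actually positive (true positives) and those that are actually negative (false positives).

- Calculate Precision: Divide the number of true positives by the total number of positive predictions (true positives plus false positives).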
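The procedure can be sketched in plain Python, assuming binary labels where `1` marks the positive class. The function name `precision_from_labels` and the example labels below are hypothetical, chosen only for illustration.

```python
from typing import Sequence


def precision_from_labels(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Count TP and FP by comparing predictions with ground truth, then return TP / (TP + FP)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:      # only positive predictions matter for precision
            if actual == positive:
                tp += 1                # positive instance correctly predicted positive
            else:
                fp += 1                # negative instance wrongly predicted positive
    if tp + fp == 0:
        return 0.0  # assumed convention when the model predicts no positives
    return tp / (tp + fp)


# Hypothetical test-set labels and model predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0]
print(precision_from_labels(y_true, y_pred))  # 0.6 (TP = 3, FP = 2)
```

For binary 0/1 labels, scikit-learn's `sklearn.metrics.precision_score(y_true, y_pred)` should return the same value.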

Mathematical Formulation

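Precision can be expressed mathematically as the probability that an instance is truly positive given that the model predicts it as positive, where y is the true label and ŷ is the predicted label:

$$\text{Precision} = P(y = 1 \mid \hat{y} = 1) = \frac{TP}{TP + FP}$$

Because FP appears only in the denominator, every false positive lowers precision, so high precision requires keeping false positives to a minimum.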
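A worked example with hypothetical counts makes the effect concrete: holding TP = 40 fixed while cutting false positives from 10 to 2 raises precision from 0.80 to about 0.95.

$$\frac{40}{40 + 10} = 0.80 \qquad \text{vs.} \qquad \frac{40}{40 + 2} \approx 0.952$$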

Applications

Precision is particularly useful in domains where the cost of false positives is high, such as:

- Medical diagnosis: Misdiagnosing a healthy patient as sick can lead to unnecessary stress and treatment.

- Spam detection: Incorrectly filtering legitimate emails as spam can result in missed important communications.

- Financial fraud detection: False alerts can lead to wasted resources and customer dissatisfaction.

Strengths and Limitations

Strengths

- Emphasis on False Positives: Precision is valuable in applications where avoiding false positives is critical.

- Focus on the Positive Class: It directly measures how trustworthy the model's positive predictions are. (Note that "specificity" is a separate metric, the true negative rate.)

Limitations

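- Ignores Negative Outcomes: Precision is computed only from the instances predicted as positive, so it accounts for neither true negatives nor false negatives. A model that labels very few instances as positive can achieve high precision while missing most actual positives, so precision used alone can give a skewed view of overall performance.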

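- Dependence on Class Balance: Unlike accuracy, precision is not inflated by a large number of true negatives, but it does depend on how common the positive class is. For a classifier with a fixed true positive rate and false positive rate, precision falls as the negative class grows, so precision values are not directly comparable across datasets with different class ratios.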
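To illustrate how class imbalance affects precision, the sketch below computes the expected precision of one hypothetical classifier (true positive rate 0.9, false positive rate 0.1) on datasets with 100 positives and a growing number of negatives. It uses expected counts only and ignores sampling variation; the function name and all rates are assumptions for illustration.

```python
def precision_at_prevalence(tpr: float, fpr: float, n_pos: int, n_neg: int) -> float:
    """Expected precision for a classifier with fixed TPR and FPR on the given class sizes."""
    expected_tp = tpr * n_pos   # positives the classifier catches
    expected_fp = fpr * n_neg   # negatives it wrongly flags
    if expected_tp + expected_fp == 0:
        return 0.0  # assumed convention: no positive predictions
    return expected_tp / (expected_tp + expected_fp)


# Same hypothetical classifier, 100 positives, increasingly many negatives
for n_neg in (100, 1_000, 10_000):
    print(n_neg, round(precision_at_prevalence(0.9, 0.1, 100, n_neg), 3))
# prints: 100 0.9 / 1000 0.474 / 10000 0.083 (one pair per line)
```

The classifier's behaviour on each class is identical in all three cases; only the class ratio changes, yet precision drops from 0.9 to below 0.1.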

Advanced Topics

In practice, precision is often used in conjunction with recall (also known as sensitivity or true positive rate) to provide a more complete picture of a model's performance. The F1 Score, which is the harmonic mean of precision and recall, is commonly used to balance the trade-off between these two metrics.
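A minimal sketch of computing precision, recall, and the F1 Score together from confusion-matrix counts. The function name and the counts are hypothetical; the example models a very conservative classifier that flags only one of 50 actual positives, which yields perfect precision but a low recall and F1 Score.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1); each is 0.0 when its denominator is zero (assumed convention)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical conservative model: 1 true positive, no false alarms, 49 missed positives
p, r, f1 = precision_recall_f1(tp=1, fp=0, fn=49)
print(p, r, round(f1, 3))  # 1.0 0.02 0.039
```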
