Recall

Introduction

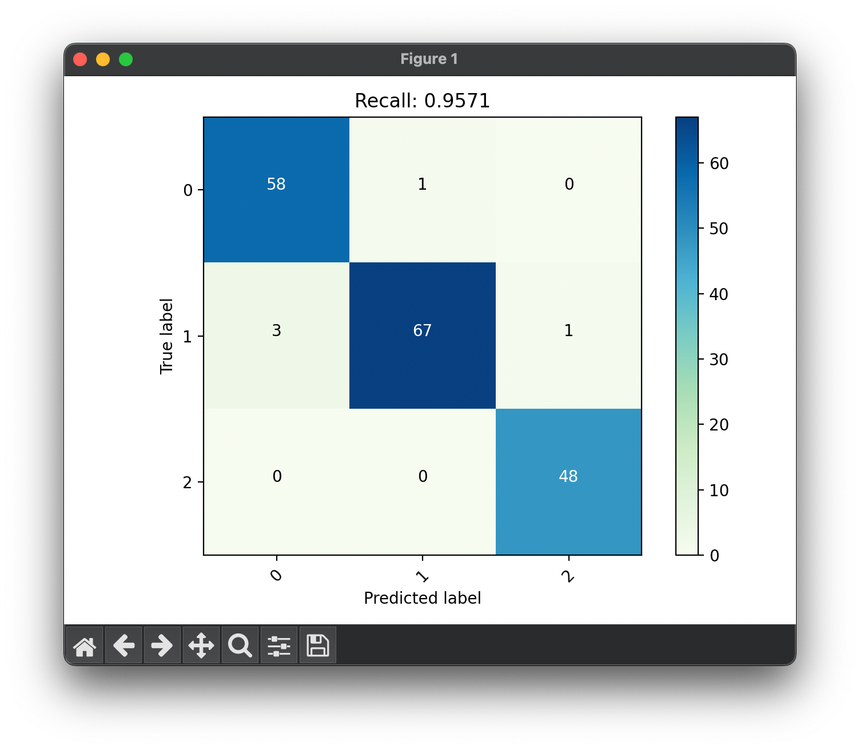

Recall, also known as sensitivity or true positive rate, is a vital metric for evaluating the performance of classification models, especially in contexts where missing a positive instance (i.e., a false negative) carries significant consequences. It measures the proportion of actual positives that are correctly identified by the model, providing insight into the model's ability to capture positive cases.

Background and Theory

The concept of recall becomes critical in scenarios where the cost of false negatives is much higher than the cost of false positives. For instance, in medical diagnostics, failing to identify a disease (false negative) could be far more serious than incorrectly diagnosing a disease (false positive). Recall addresses this by focusing on the model's capability to identify all relevant instances.

Recall is defined as the ratio of true positives to the sum of true positives and false negatives:

$$
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

where:

- $TP$ (True Positives) are the instances correctly identified as positive,

- $FN$ (False Negatives) are the actual positive instances wrongly identified as negative.

Procedural Steps

Calculating recall for a machine learning model involves the following steps:

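- Model Prediction: Predict outcomes using your model on a labeled dataset.

- Identify Actual Positives: Determine which instances are actual positives based on the ground truth.

- Count True Positives and False Negatives: Among the actual positives, count those the model predicted as positive (true positives) and those it predicted as negative (false negatives).

- Calculate Recall: Divide the number of true positives by the sum of true positives and false negatives.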
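The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `recall_score`, the `positive` parameter, and the label lists are assumptions made up for this example, and raising an error when there are no actual positives is one possible convention among several.

```python
def recall_score(y_true, y_pred, positive=1):
    """Recall = TP / (TP + FN) for the class labelled `positive`."""
    # Keep only the predictions for instances whose ground-truth label is positive.
    preds_for_actual_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    # True positives: actual positives the model also predicted as positive.
    tp = sum(1 for p in preds_for_actual_positives if p == positive)
    # False negatives: actual positives the model predicted as negative.
    fn = len(preds_for_actual_positives) - tp
    if tp + fn == 0:
        # Assumed convention: treat recall as undefined without actual positives.
        raise ValueError("recall is undefined: no actual positives in y_true")
    return tp / (tp + fn)


# Hypothetical ground truth and model predictions (1 = positive, 0 = negative).
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]

print(recall_score(y_true, y_pred))  # 3 of the 4 actual positives are found: 0.75
```

Note that the false positive at the fifth position does not affect the result, because recall only looks at instances that are actually positive.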

Mathematical Formulation

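The mathematical expression for recall is:

$$
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

where $TP$ is the number of true positives and $FN$ is the number of false negatives. Because $TP + FN$ is the fixed number of actual positives in the dataset, recall rises only when false negatives are converted into true positives; a recall of 1 means no positive instance was missed.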
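As a quick worked example with hypothetical counts (not taken from any real dataset), suppose a test set contains 100 actual positives, of which the model correctly flags 80 and misses 20:

$$
\mathrm{Recall} = \frac{80}{80 + 20} = 0.8
$$

The model therefore captures 80% of the positive cases, regardless of how many negatives it mislabels.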

Applications

Recall is crucial in fields where missing a positive prediction has severe implications, such as:

- Medical diagnosis: Ensuring diseases are detected to avoid missing treatments.

- Fraud detection: Identifying as many fraudulent transactions as possible to prevent financial loss.

- Information retrieval: Finding all relevant documents in a search to ensure completeness of information.

Strengths and Limitations

Strengths

- Focus on False Negatives: Recall directly measures how many positive instances the model misses, making it essential for applications where false negatives are costly.

- Comprehensiveness: Optimizing for recall pushes the model to capture as many positives as possible.

Limitations

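- Potential for Overestimation: Focusing solely on recall can lead to models that over-predict the positive class, increasing false positives. In the extreme, a model that labels every instance as positive achieves a recall of 1 while providing no useful discrimination.

- Imbalanced Classes Sensitivity: Recall ignores true negatives and false positives, so on imbalanced datasets it does not reveal how many negative instances are wrongly flagged and should be read alongside precision.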
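The following sketch illustrates both limitations on a made-up imbalanced dataset. The helper `precision_recall`, the dataset, and the choice to return 0.0 when a ratio is undefined are all assumptions for illustration only.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Assumed convention: report 0.0 when the denominator is zero.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical imbalanced dataset: 5 positives among 100 instances.
y_true = [1] * 5 + [0] * 95
# A degenerate "model" that predicts positive for every instance.
always_positive = [1] * 100

precision, recall = precision_recall(y_true, always_positive)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here recall is perfect because no positive is missed, yet only 5 of the 100 positive predictions are correct, which recall alone does not show.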

Advanced Topics

To balance the trade-off between recall and precision, metrics such as the F1 score and the Precision-Recall (PR) curve are used. The F1 score is the harmonic mean of precision and recall, providing a single metric to assess a model's balance between these two measures. The PR curve, meanwhile, shows the trade-off between precision and recall for different thresholds, offering a more detailed view of a model's performance.
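To make the trade-off concrete, the sketch below computes precision, recall, and the F1 score at a few decision thresholds, which is essentially what a PR curve plots point by point. The scores, labels, thresholds, and the function name `precision_recall_f1` are hypothetical, and returning 0.0 for undefined ratios is an assumed convention.

```python
def precision_recall_f1(y_true, scores, threshold):
    """Threshold the scores, then return (precision, recall, F1) for class 1."""
    # An instance is predicted positive when its score reaches the threshold.
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall: 2PR / (P + R).
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical ground-truth labels and model scores (higher = more likely positive).
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]

for threshold in (0.25, 0.5, 0.75):
    p, r, f1 = precision_recall_f1(y_true, scores, threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

On this toy data, lowering the threshold raises recall at the cost of precision, and raising it does the opposite; the F1 score summarizes each operating point in a single number.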
