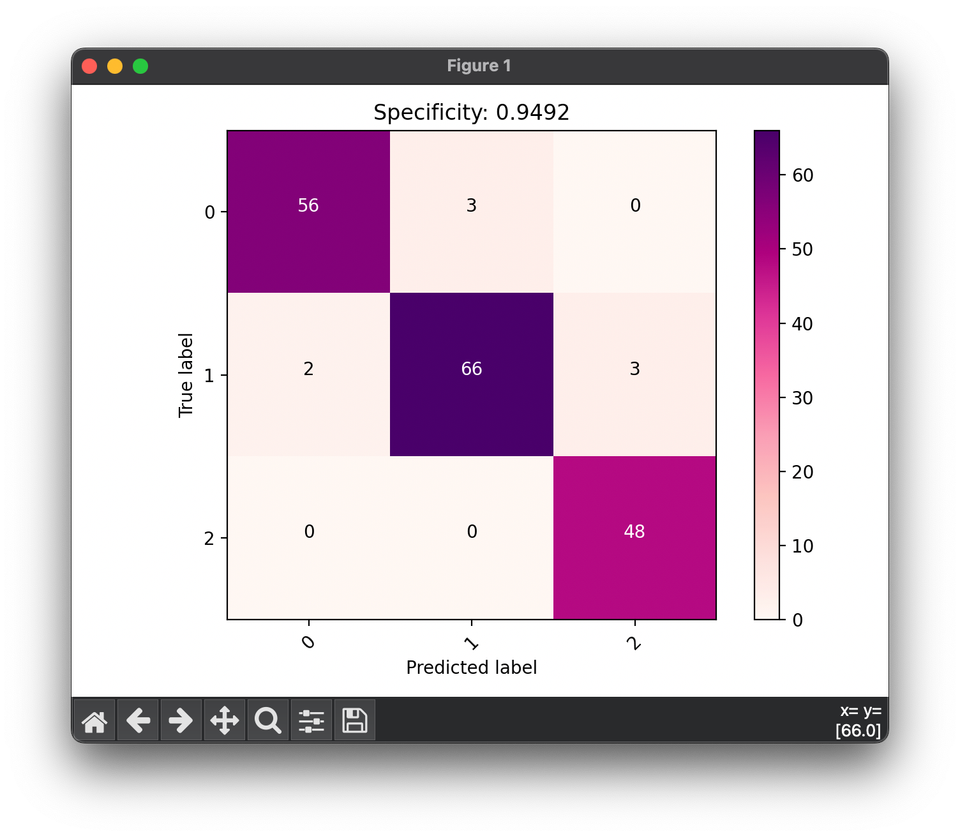

Specificity

Introduction

Specificity, also known as the true negative rate, is a performance metric used to evaluate the effectiveness of a classification model in identifying negative instances correctly. It measures the proportion of actual negatives that are accurately predicted as negative, making it a crucial metric for scenarios where the cost of falsely identifying a negative instance as positive (false positive) is high.

Background and Theory

Specificity complements sensitivity (recall) by focusing on the model's ability to identify negative outcomes. In medical testing, for example, specificity is the ability of a test to correctly identify those without the disease (true negatives), which is as important as sensitivity, the test's ability to identify those with the disease (true positives).

The formula for specificity is given by:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

where:

- $TN$ (True Negatives) are the instances correctly identified as negative,

- $FP$ (False Positives) are the instances wrongly identified as positive.

Procedural Steps

To calculate the specificity of a machine learning model, follow these steps:

- Model Prediction: Utilize your model to make predictions on a dataset.

- Identify True Negatives and False Positives: Compare the model's predictions with the ground truth. Among the actual negatives, count those predicted as negative (true negatives) and those predicted as positive (false positives).

- Calculate Specificity: Use the specificity formula with your identified true negatives and false positives.
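The steps above can be sketched in plain Python. This is a minimal illustration rather than a reference implementation: the function name `specificity`, the convention that label `0` is the negative class, and the toy labels are all assumptions made for the example.

```python
def specificity(y_true, y_pred, negative_label=0):
    """Return TN / (TN + FP) for paired ground-truth and predicted labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth != negative_label:
            continue  # only actual negatives enter the formula
        if pred == negative_label:
            tn += 1  # actual negative, predicted negative
        else:
            fp += 1  # actual negative, predicted positive
    if tn + fp == 0:
        raise ValueError("specificity is undefined without actual negatives")
    return tn / (tn + fp)


# Toy data (hypothetical): 6 actual negatives followed by 4 actual positives.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

result = specificity(y_true, y_pred)  # TN = 4, FP = 2
print(f"Specificity: {result:.3f}")
```

In this toy data, four of the six actual negatives are predicted as negative, so the result is 4/6. The predictions made on the actual positives do not affect the value.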

Mathematical Formulation

Specificity can be mathematically expressed as:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

This formulation emphasizes the importance of correctly identifying negative instances while minimizing the misclassification of negative instances as positive.
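As a worked example with hypothetical numbers (not taken from any study), suppose a screening test is applied to 1,000 people who do not have the disease. It returns a negative result for 950 of them and a positive result for the remaining 50, so $TN = 950$ and $FP = 50$:

$$\text{Specificity} = \frac{TN}{TN + FP} = \frac{950}{950 + 50} = 0.95$$

Under these assumed counts, 5% of the people without the disease would receive a false alarm.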

Applications

Specificity is particularly important in areas such as:

- Medical diagnostics: Avoiding false alarms in disease screening to prevent unnecessary worry or treatment.

- Security systems: Minimizing false alarms to avoid desensitization to warnings or unnecessary resource expenditure.

- Content moderation: Correctly identifying acceptable content to prevent over-censorship.

Strengths and Limitations

Strengths

- Important for Negative Class Identification: Specificity is crucial for evaluating a model's performance in correctly identifying negative cases, balancing the focus on positive predictions.

- Useful in Imbalanced Datasets: When the negative class is the majority, specificity shows how well the model performs on that majority class.

Limitations

- Does Not Reflect Positive Class Performance: By focusing on the negative class, specificity alone does not provide information on the model's ability to identify positive instances.

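- Can Be Misleading in Highly Imbalanced Datasets: High specificity can be achieved even if the model poorly identifies positive instances. When positives are rare, a model that labels every instance as negative attains a specificity of 1 while detecting no positives at all.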
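The second limitation can be illustrated with a short sketch. The dataset below is hypothetical (990 negatives, 10 positives), the "model" is a stand-in that predicts negative for every instance, and the helper name `confusion_counts` is an assumption of this example.

```python
def confusion_counts(y_true, y_pred):
    """Count (TN, FP, FN, TP) for binary labels where 1 is the positive class."""
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 0 and pred == 0:
            tn += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 1 and pred == 0:
            fn += 1
        else:
            tp += 1
    return tn, fp, fn, tp


# Hypothetical, highly imbalanced data: 990 negatives and 10 positives.
y_true = [0] * 990 + [1] * 10
# A degenerate "model" that labels every instance as negative.
y_pred = [0] * 1000

tn, fp, fn, tp = confusion_counts(y_true, y_pred)
spec = tn / (tn + fp)  # 990 / 990
sens = tp / (tp + fn)  # 0 / 10

print(f"Specificity: {spec:.2f}")
print(f"Sensitivity: {sens:.2f}")
```

The specificity here is perfect even though no positive instance is detected, which is why specificity is usually reported together with sensitivity.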

Advanced Topics

In practice, specificity is often used alongside other metrics like sensitivity (recall) and precision to provide a more comprehensive evaluation of a model's performance. The trade-off between sensitivity and specificity is a key consideration in designing and evaluating models, especially in fields like medical diagnostics where both identifying the condition and avoiding false alarms are critical.
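The trade-off can be made concrete by sweeping the decision threshold of a scoring model. The sketch below is illustrative only: the labels, the scores, the three thresholds, and the function name `sensitivity_specificity` are assumptions of this example, and an instance is predicted positive when its score is at or above the threshold.

```python
def sensitivity_specificity(y_true, scores, threshold):
    """Return (sensitivity, specificity) when predicting 1 for score >= threshold."""
    tp = fn = tn = fp = 0
    for truth, score in zip(y_true, scores):
        pred = 1 if score >= threshold else 0
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 1 and pred == 0:
            fn += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fp += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical labels and model scores (5 negatives, 5 positives).
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.85, 0.95]

results = {}
for threshold in (0.3, 0.5, 0.7):
    results[threshold] = sensitivity_specificity(y_true, scores, threshold)
    sens, spec = results[threshold]
    print(f"threshold={threshold:.1f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

On this toy data, raising the threshold from 0.3 to 0.7 increases specificity from 0.60 to 1.00 while sensitivity falls from 1.00 to 0.60, so the choice of threshold depends on which kind of error is more costly in the application.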
