[Paper Review] Model Compression - Quantization, etc

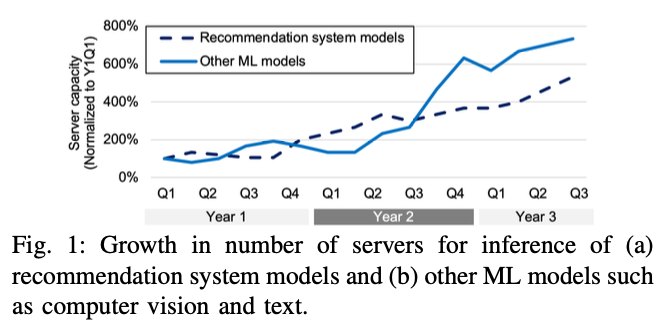

1.[Simple Review] First-Generation Inference Accelerator Deployment at Facebook

Paper Info Anderson, Michael, et al. "First-generation inference accelerator deployment at facebook." arXiv preprint arXiv:2107.04140 (2021). Abstra

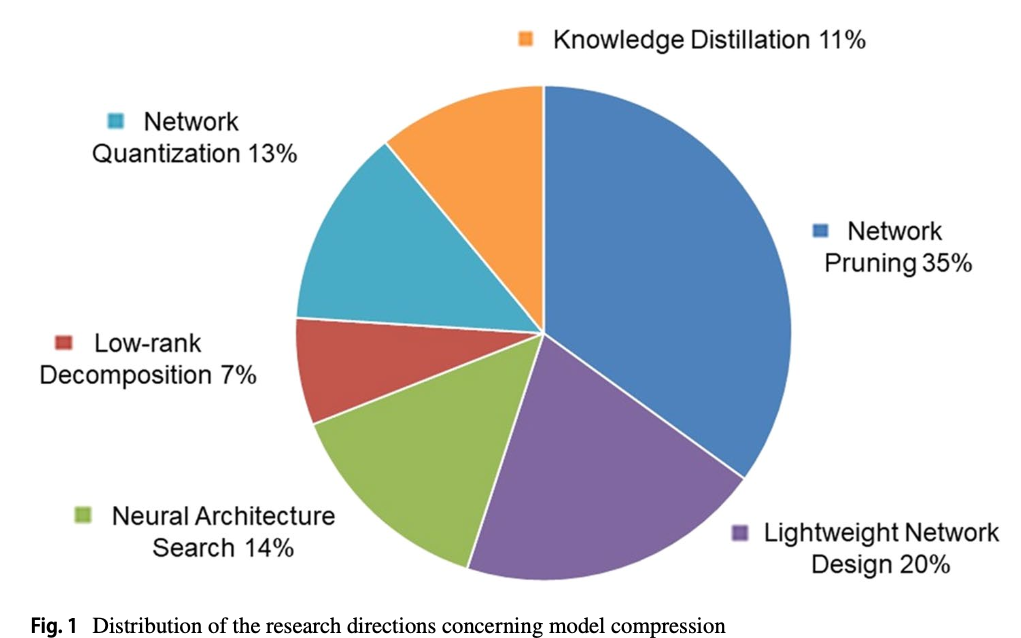

2.A survey of model compression strategies for object detection

Lyu, Z., Yu, T., Pan, F. et al. A survey of model compression strategies for object detection. Multimed Tools Appl 83, 48165–48236 (2024). https:

3.Any-Precision LLM: Low-Cost Deployment of Multiple, Different-Sized LLMs

Park, Yeonhong, et al. "Any-Precision LLM: Low-Cost Deployment of Multiple, Different-Sized LLMs." arXiv preprint arXiv:2402.10517 (2024). Recently, Large La

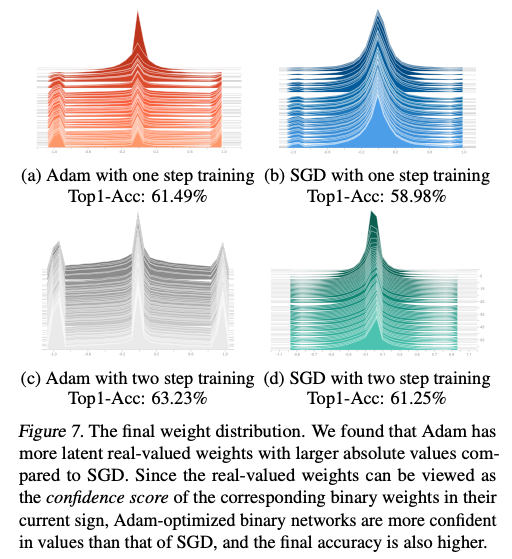

4.[2021 ICML] How Do Adam and Training Strategies Help BNNs Optimization?

ICML 2021. Binary Neural Networks (BNNs) are usually obtained using Adam optimization. However, exploring the fundamental reasons why Adam outperforms other optimizers such as SGD in BNN optimization, or specific

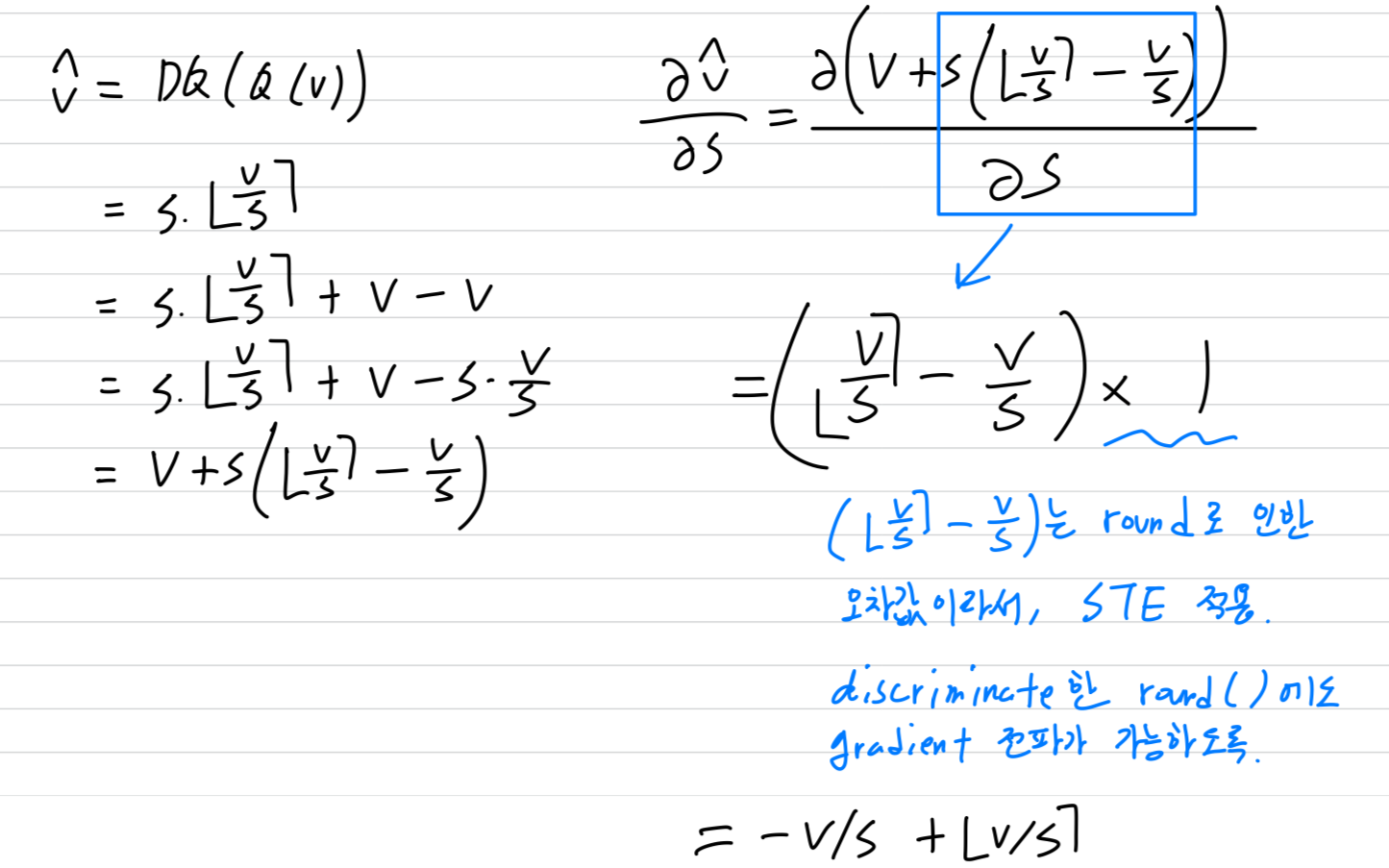

5.[2020 ICLR] LEARNED STEP SIZE QUANTIZATION

Paper Info. https://arxiv.org/pdf/1902.08153 Abstract: When deep networks use low precision operations at inference, compared with high precision, power and space a

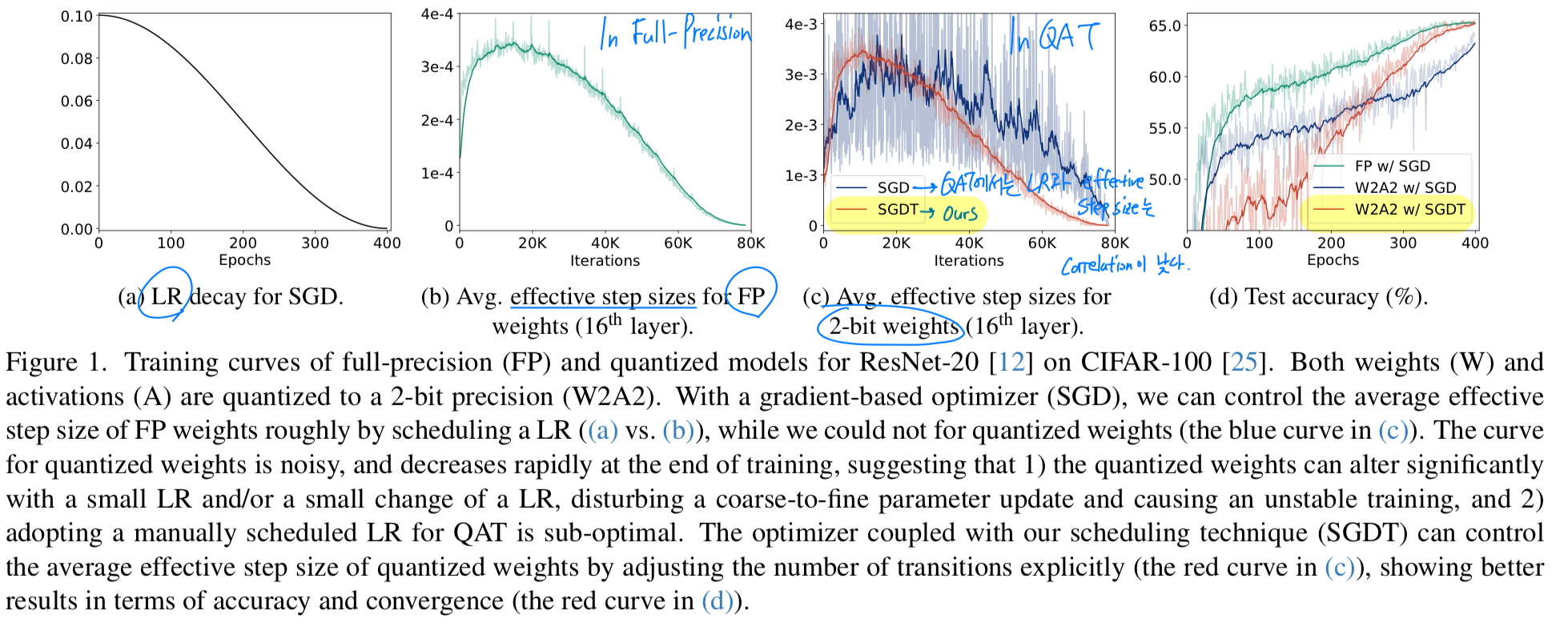

6.[2025 ICCV] Scheduling Weight Transitions for Quantization-Aware Training

https://openaccess.thecvf.com/content/ICCV2025/papers/Lee_Scheduling_Weight_Transitions_for_Quantization-Aware_Training_ICCV_2025_paper.pdf (backg