Transformer / ViT / Attention and Convolution

1. [Paper Review] On the Relationship Between Self-Attention and Convolutional Layers

Paper: On the Relationship Between Self-Attention and Convolutional Layers

August 9, 2021

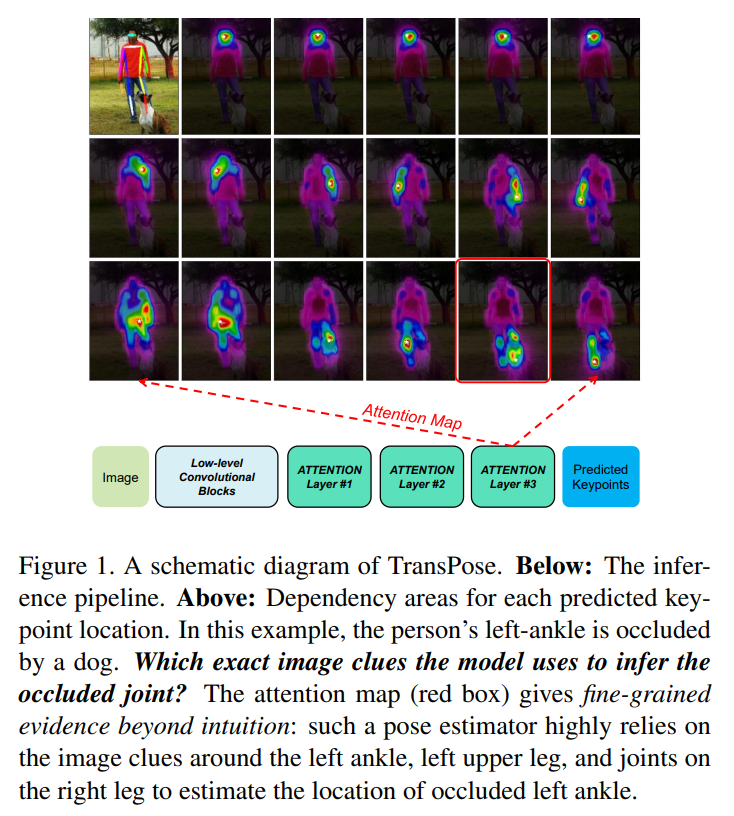

2. [Paper Review] TransPose: Keypoint Localization via Transformer

Paper: TransPose: Keypoint Localization via Transformer

August 10, 2021

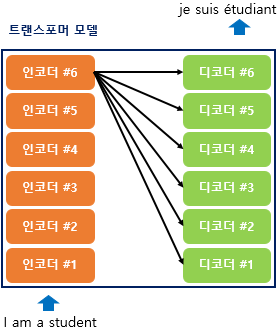

3. [Theory & Code] Transformer in PyTorch

This post covers the theory behind each component of the Transformer, along with the corresponding PyTorch code.

October 6, 2021

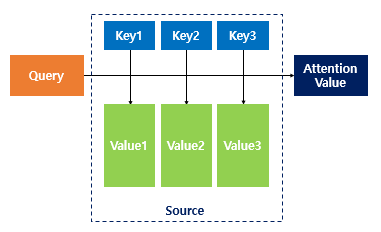

4. [Concept Summary] Attention Mechanism

This post summarizes the attention mechanism on which the Transformer is built.

October 6, 2021

5. Is Dot-Product Self-Attention Lipschitz?

Linearity, non-linearity, and Lipschitz continuity of dot-product multi-head attention

November 30, 2021

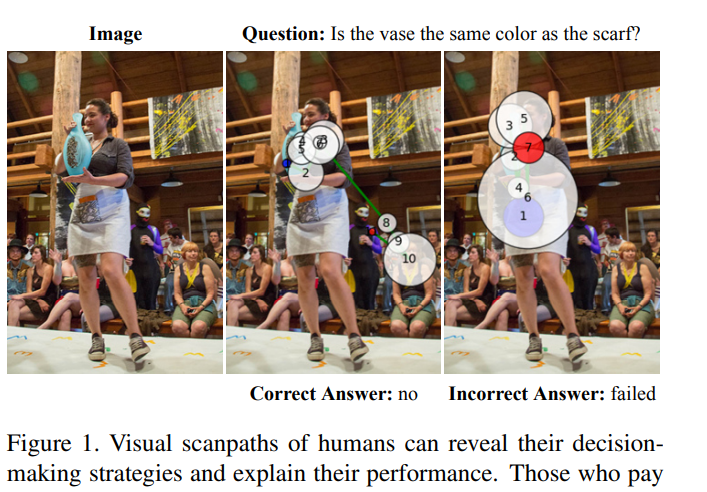

6. [Paper Review] Predicting Human Scanpaths in Visual Question Answering, in CVPR 2021

Paper: Predicting Human Scanpaths in Visual Question Answering

December 13, 2021