Artificial Intelligence

- Science of making things smart

ex. Human intelligence exhibited by machine

Where we are at

- narrow AI - a system that can do just one (or a few) defined things as well as or better than humans


Common Use Cases

- object recognition

- speech recognition / sound detection

- Natural Language Processing

- Prediction

- Translation

- Restoration/Transformation

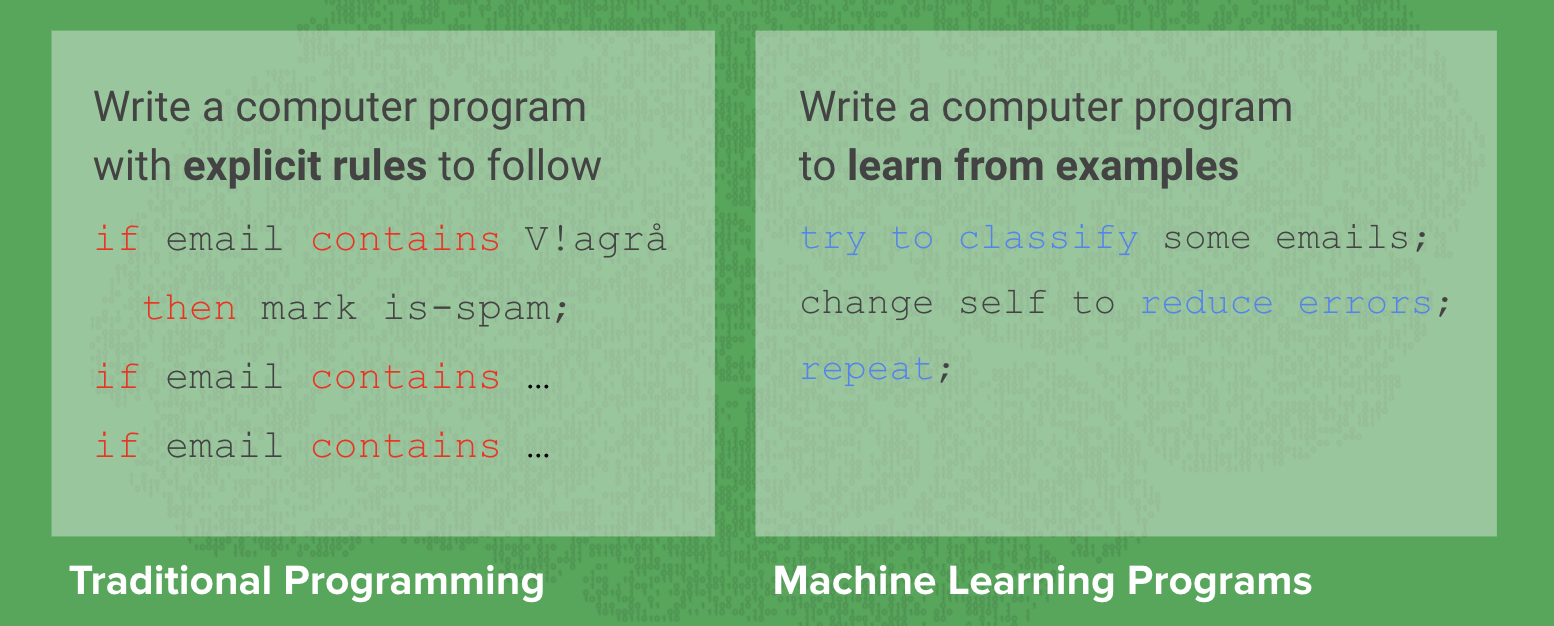

Machine Learning

- An approach to achieving artificial intelligence through systems that can learn from experience to find patterns in a set of data

→ teaching a computer to recognize patterns by example, rather than programming it with specific rules - ML is essentially all about making predictions

1. It takes some data

2. Learns patterns

3. Classifies new data it has not seen before

→ the same ML system can then be re-used to learn to recognize a different kind of object without re-writing the code
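The three steps above can be sketched in a few lines. This is only an illustration: it uses a nearest-centroid classifier (an assumption, the notes do not name an algorithm), and the fruit and pet measurements are made-up values.

```python
from statistics import mean

def train(examples):
    """Learn one centroid (average feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def classify(model, features):
    """Return the label whose centroid is closest to the new point."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))

# 1. It takes some data (hypothetical: weight in grams, colour score 0..1)
fruit = [((150, 0.2), "apple"), ((160, 0.3), "apple"),
         ((120, 0.8), "orange"), ((130, 0.9), "orange")]
# 2. Learns patterns
fruit_model = train(fruit)
# 3. Classifies new data it has not seen before
print(classify(fruit_model, (155, 0.25)))

# The same code is re-used on different data (hypothetical: height cm, weight kg)
pets = [((25, 4), "cat"), ((23, 5), "cat"), ((60, 30), "dog"), ((55, 25), "dog")]
pet_model = train(pets)
print(classify(pet_model, (58, 28)))
```

Only the training data changes between the two runs; `train` and `classify` stay the same.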

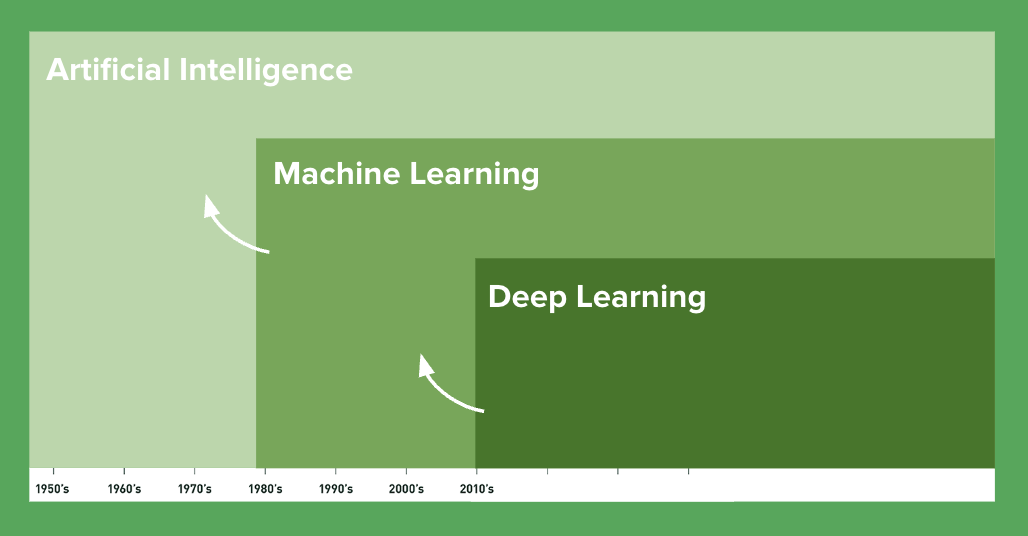

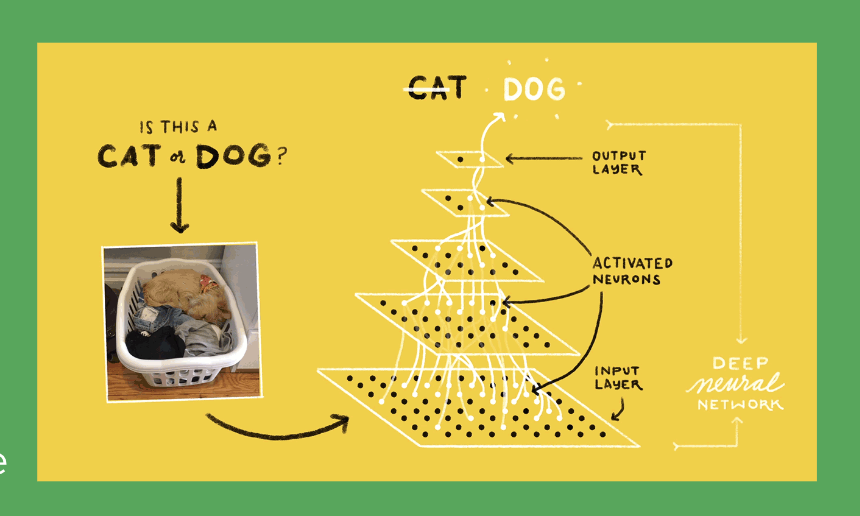

Deep Learning

- Technique for implementing Machine Learning

→ DL technique: deep neural networks (DNNs) - code structures you write are arranged in layers that loosely mimic the human brain, learning patterns of patterns.

- 1950s: the idea of AI dates back to this decade

- 1980s: ML begins to grow in popularity

- around 2010: DL drives large progress toward achieving narrow AI systems

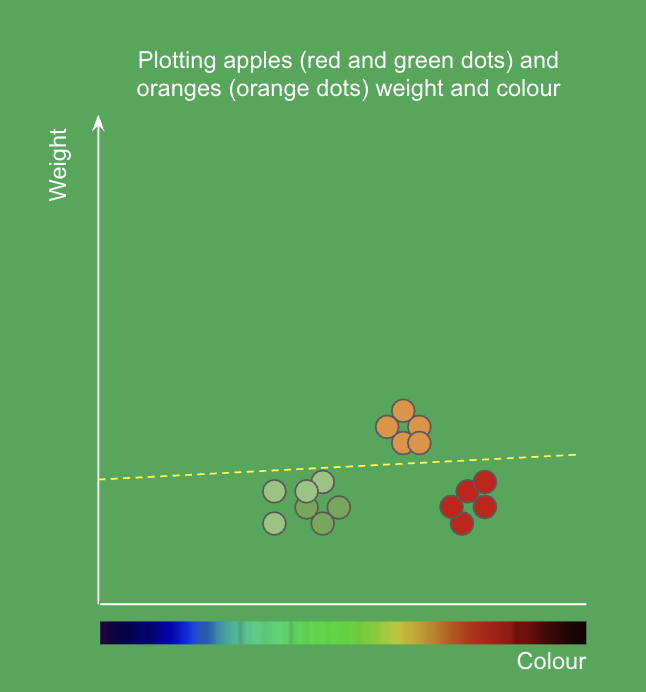

Choosing data to train ML

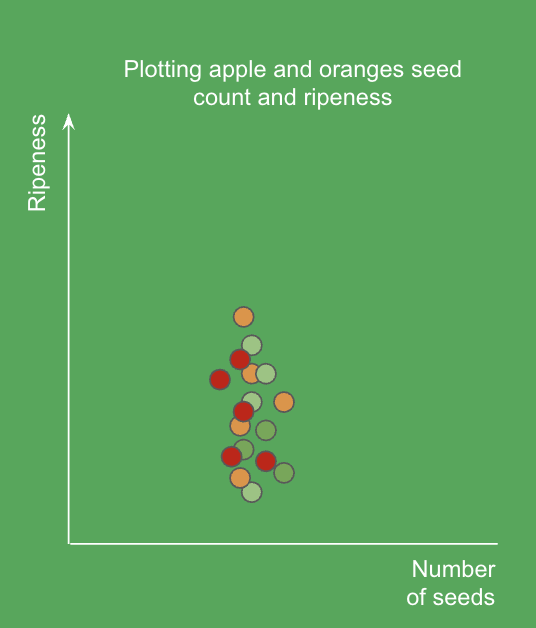

- Features (Attributes): Features are used to train an ML system → properties of the things you are trying to learn about

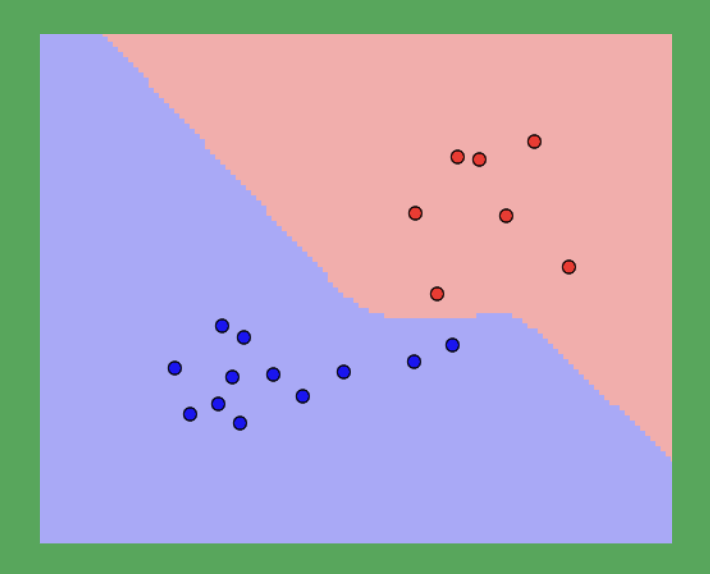

- features of a fruit might be weight and colour. 2 features would mean there are 2 dimensions. The ML system can learn to split the data up with a line to separate apples from oranges. This line can then be used to classify new points the system has not seen before.

- Choosing useful input features can have a big impact on the quality of the ML system. Some features may not be useful enough to separate the data points.
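One way the separating line for apples and oranges could be learned is the classic perceptron update rule. That choice is an assumption (the notes do not say how the line is found), and the feature values below are invented for illustration.

```python
def train_line(examples, epochs=20):
    """Find weights w and bias b so that w . x + b > 0 for label +1."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            score = weights[0] * x1 + weights[1] * x2 + bias
            if label * score <= 0:  # point is on the wrong side: nudge the line
                weights[0] += label * x1
                weights[1] += label * x2
                bias += label
    return weights, bias

def which_fruit(weights, bias, point):
    score = weights[0] * point[0] + weights[1] * point[1] + bias
    return "apple" if score > 0 else "orange"

# Hypothetical features: (weight in hundreds of grams, colour score 0..1)
# Label +1 = apple, -1 = orange
training = [((1.5, 0.20), 1), ((1.6, 0.30), 1), ((1.7, 0.25), 1),
            ((1.2, 0.80), -1), ((1.3, 0.90), -1), ((1.1, 0.85), -1)]

line_weights, line_bias = train_line(training)
print(which_fruit(line_weights, line_bias, (1.55, 0.30)))  # a new, unseen point
```

The learned line is just the set of points where the score equals zero; new points are classified by which side they fall on.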

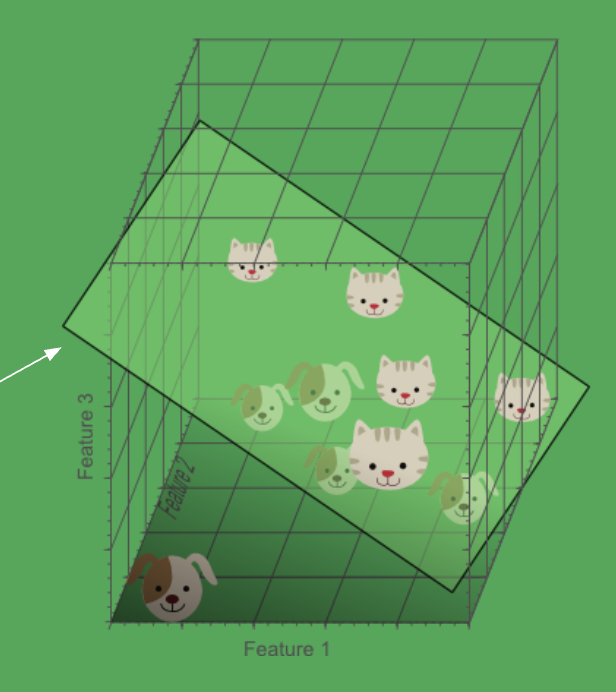

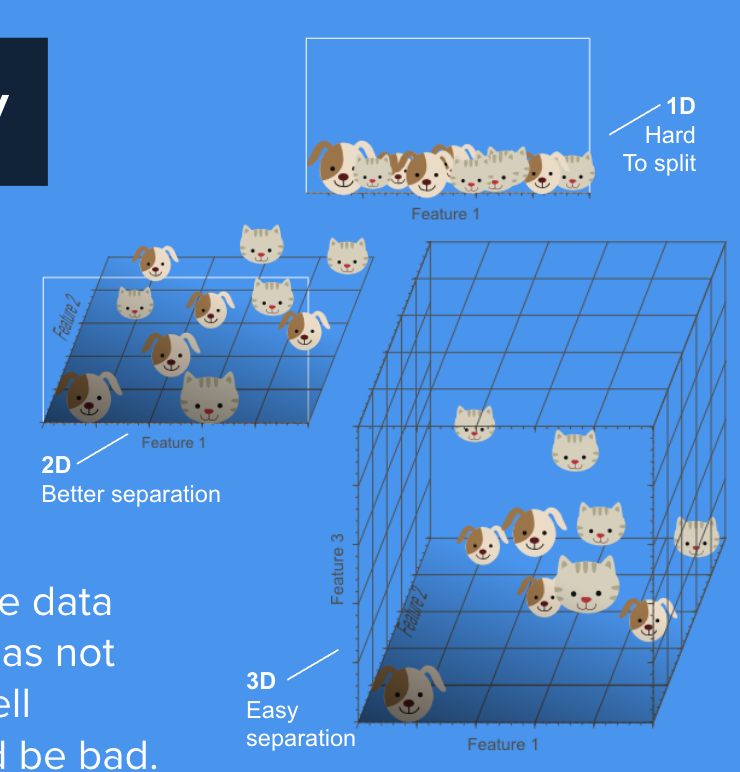

Non-trivial data

- If you needed 3 dimensions to separate the data meaningfully, you could plot it in a 3D chart and separate the clusters of points with a plane that divides the two sets of data as shown. Most ML problems have even higher dimensionality! Like 20D, or even millions in the case of image recognition.

Dimensionality

- Adding more dimensions can often help separate out the data points, allowing the ML system to split the data up into groupings for classification as shown on the right. Too many dimensions, however, can lead to overfitting.
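A small sketch of why an extra dimension can help. The data is made up, and the second dimension here is simply derived from the first (x squared), which is an assumption chosen to keep the example short.

```python
def separable_by_threshold(group_a, group_b):
    """True if a single cut-off value puts the two groups on opposite sides."""
    return max(group_a) < min(group_b) or max(group_b) < min(group_a)

# Hypothetical 1D data: one class sits in the middle, the other on both sides
inner = [-0.5, 0.0, 0.5]
outer = [-2.0, -1.5, 1.5, 2.0]

# With only x, no single cut-off separates the classes
print(separable_by_threshold(inner, outer))

# Add a second dimension, x squared, and look along that new axis:
# a horizontal line in the (x, x**2) plane now splits the classes
inner_squared = [x * x for x in inner]
outer_squared = [x * x for x in outer]
print(separable_by_threshold(inner_squared, outer_squared))
```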

Data hunting

- finding enough unbiased training data for all of those features in a format that can be fed into an ML system to learn from.

How ML systems are trained

Supervised Learning

- provided with training data that is labeled

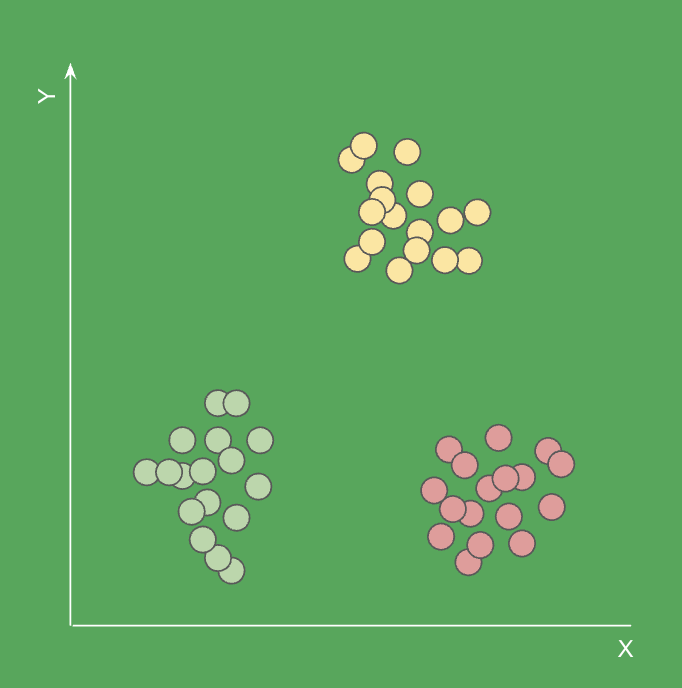

Unsupervised Learning

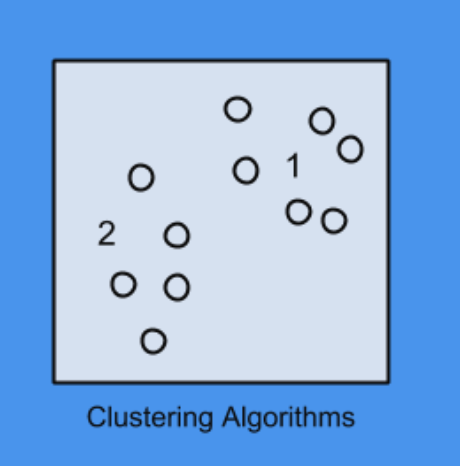

- machine must learn from an unlabelled data set → the number of clusters may not be known in advance, so it has to take a best guess. Sometimes the clusters are not as clear as the ones shown here.
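As a rough sketch of learning from unlabelled data, here is a minimal k-means clustering loop. k-means is an assumption (the notes do not name a method), the points are made up, and the number of clusters k is the "best guess" that has to be supplied.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iterations=10):
    """Group unlabelled points into k clusters."""
    # Naive initialisation for simplicity: the first k points are the start centroids
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest centroid
        labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                  for p in points]
        # Move each centroid to the average of the points assigned to it
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids

# Hypothetical unlabelled data with two visible groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]
cluster_labels, cluster_centres = kmeans(points, k=2)
print(cluster_labels)
```

No labels are given to the algorithm; the cluster numbers it returns are arbitrary names for the groups it found.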

Reinforcement Learning

- learning by trial-and-error through reward or punishment.

→ the program learns by playing the game millions of times. We reward the program when it makes a good move. When it loses we give no reward (or a negative reward).
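A toy sketch of trial-and-error learning. The one-move "game", its rewards, and the epsilon-greedy strategy are all assumptions made for illustration; real games need many moves and far more episodes.

```python
import random

def play(move):
    """Hypothetical one-move game: 'right' wins (+1), 'left' loses (-1)."""
    return 1.0 if move == "right" else -1.0

def train_agent(episodes=1000, epsilon=0.1, learning_rate=0.1, seed=0):
    rng = random.Random(seed)
    moves = ["left", "right"]
    value = {move: 0.0 for move in moves}  # estimated reward of each move
    for _ in range(episodes):
        if rng.random() < epsilon:
            move = rng.choice(moves)          # explore: try something at random
        else:
            move = max(moves, key=value.get)  # exploit: use the best known move
        reward = play(move)
        # Shift the estimate a little toward the reward that was just observed
        value[move] += learning_rate * (reward - value[move])
    return value

values = train_agent()
best_move = max(values, key=values.get)
print(best_move)
```

The program is never told which move is correct. It only sees the reward after each attempt and gradually prefers the move that pays off.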

How does it all work

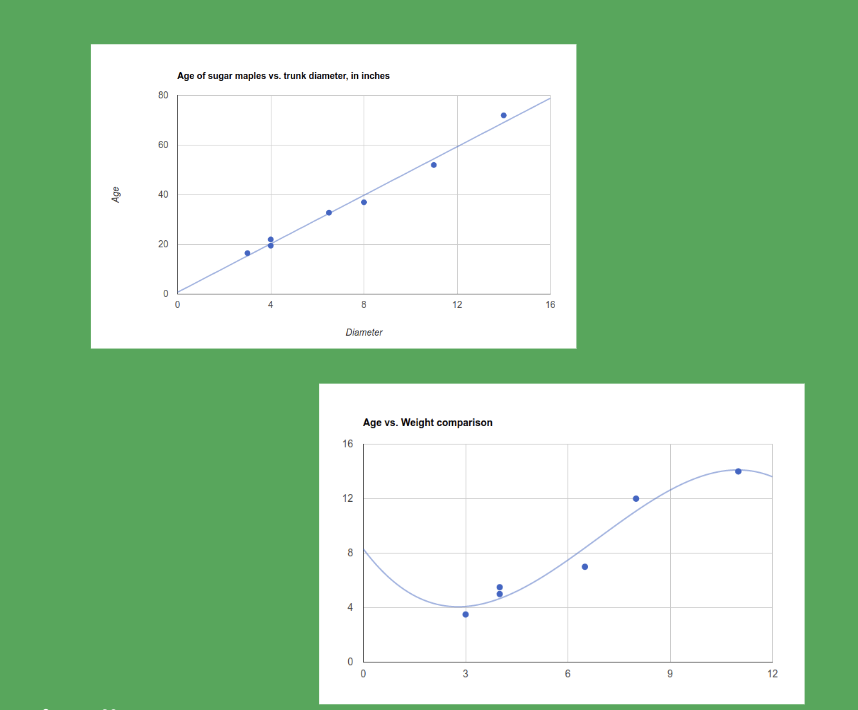

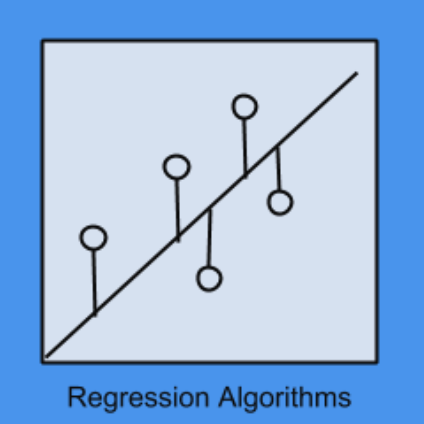

- regression

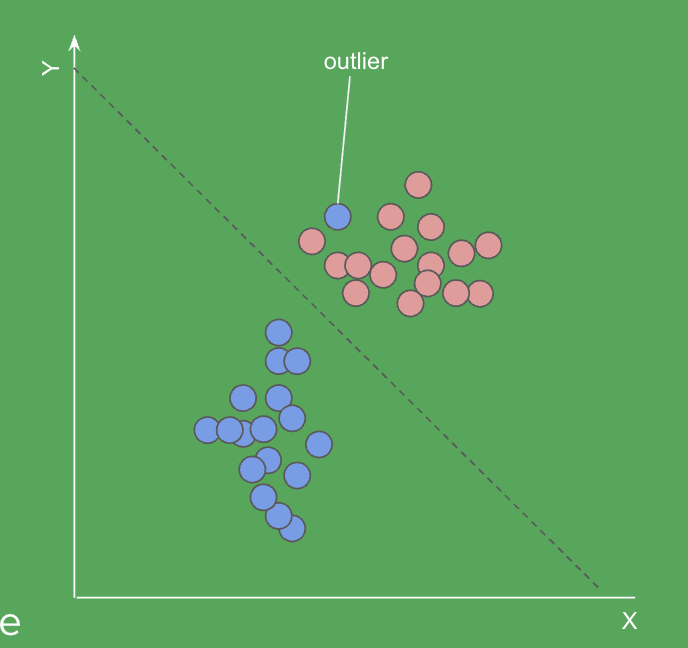

- use a line to divide the two clusters

→ there may be a few outliers - classify points into the two clusters by sampling the k known points closest to each one → k-nearest neighbour
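A minimal k-nearest neighbour sketch, using invented points and labels. It also shows why looking at several neighbours helps when there is an outlier.

```python
from collections import Counter

def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def knn_classify(training, point, k=3):
    """Label a point by majority vote among its k closest known points."""
    nearest = sorted(training, key=lambda example: squared_distance(example[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical data: two clusters, plus one "blue" outlier near the red cluster
training = [((1.0, 1.0), "red"), ((1.0, 2.0), "red"), ((2.0, 1.0), "red"),
            ((6.0, 6.0), "blue"), ((7.0, 6.0), "blue"), ((6.0, 7.0), "blue"),
            ((2.5, 2.5), "blue")]

new_point = (2.2, 2.2)
print(knn_classify(training, new_point, k=1))  # follows the single closest point
print(knn_classify(training, new_point, k=3))  # the outlier is outvoted
```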

Neural Networks

- In ML, artificial neural networks (loosely modeled on the brain) are used to calculate probabilities for features they are trained to look out for.

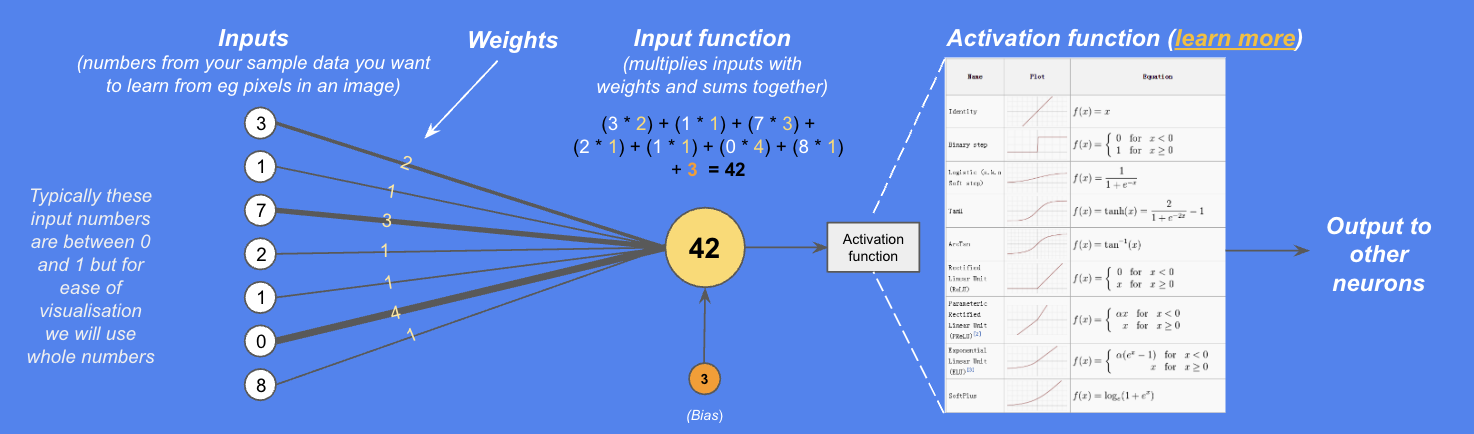

Artificial neuron (perceptron)

- a bunch of weighted inputs that are summed together.

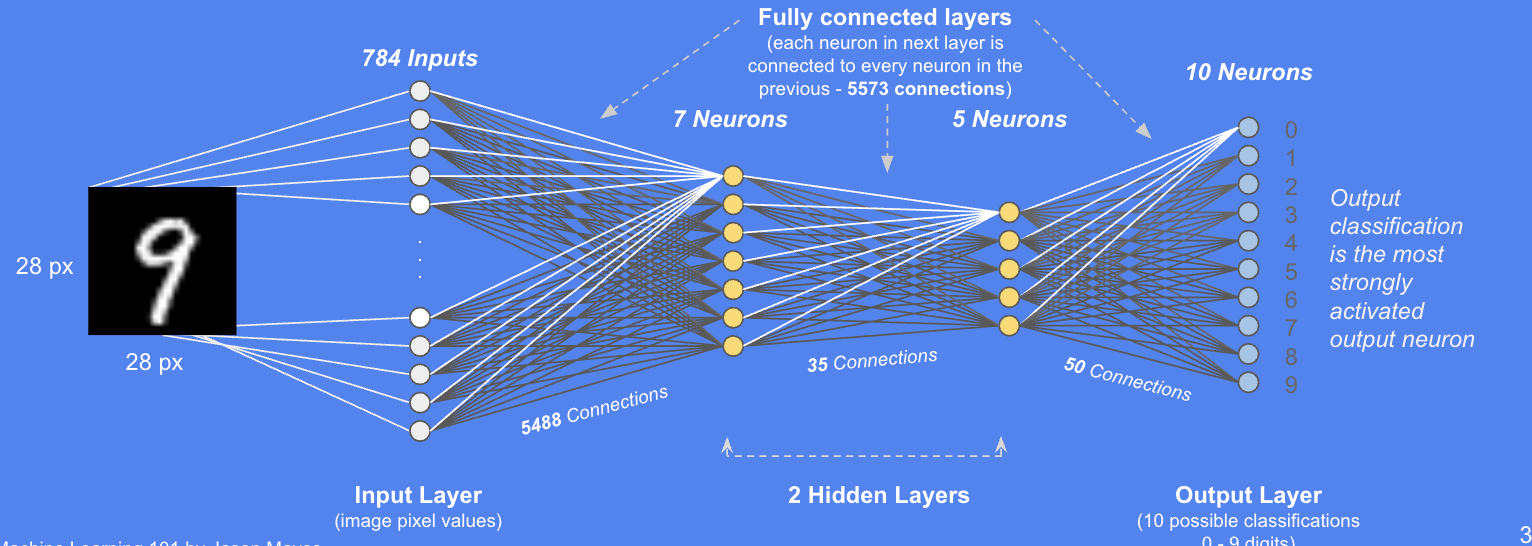
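A single artificial neuron can be sketched as a weighted sum. The bias term and the step activation (output 1 when the sum is positive) are assumptions added here so the neuron produces a decision; the weights are picked by hand to make it behave like a logical AND.

```python
def neuron(inputs, weights, bias=0.0):
    """Sum the weighted inputs, then fire (1) if the total is positive."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0

# Hand-picked weights: fires only when both inputs are 1
and_weights = [1.0, 1.0]
and_bias = -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, neuron([a, b], and_weights, and_bias))
```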

Multi-layered perceptrons

- multi-layered perceptron (or deep neural network): one of the oldest forms of "neural nets"

- Each layer is fully connected to the next and data flows forward only
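A sketch of data flowing forward through fully connected layers. The weights are set by hand rather than learned, and the step activation is an assumption; together they make a tiny two-layer network that computes XOR, something a single neuron cannot do.

```python
def step(x):
    return 1 if x > 0 else 0

def layer(inputs, weights, biases):
    """Every neuron in the layer sees every input (fully connected)."""
    return [step(sum(x * w for x, w in zip(inputs, neuron_weights)) + bias)
            for neuron_weights, bias in zip(weights, biases)]

def forward(inputs, layers):
    """Pass the data through each layer in turn, forward only."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations

# Hand-set weights (hypothetical, not trained):
# hidden layer = [OR neuron, AND neuron], output = OR and not AND
xor_network = [
    ([[1, 1], [1, 1]], [-0.5, -1.5]),
    ([[1, -1]], [-0.5]),
]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, forward([a, b], xor_network))
```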

Deep Neural Networks

- Deep Neural Networks simply consist of many "hidden layers" between the input and the output. These hidden layers are typically of lower dimensionality so they can generalise better and not overfit to the input data.

Types of ML output

- Regression: predict numerical values

- Classification: one of n labels

- Clustering: most similar other examples

- Sequence prediction: What comes next?

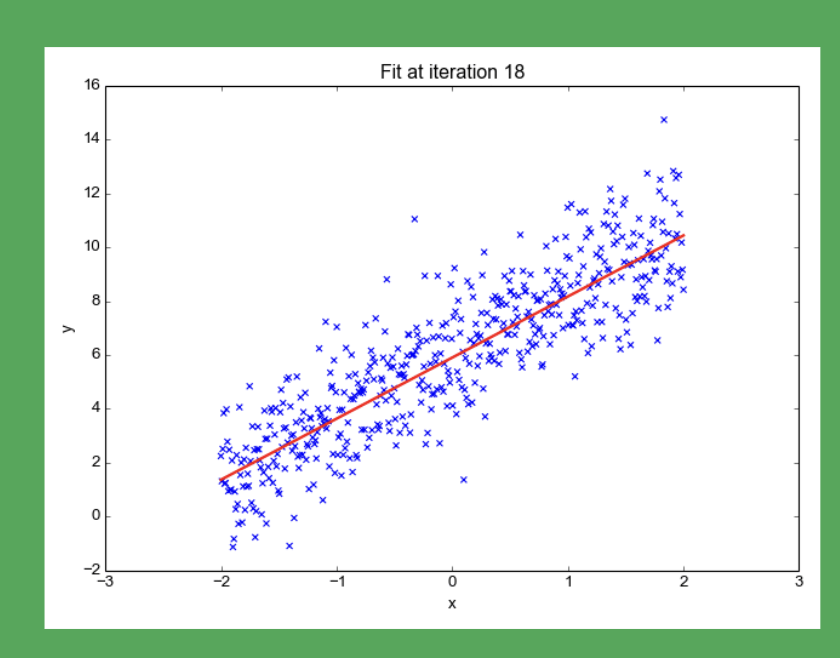

Continuous Output

- The output of the ML system is a decimal number. Linear regression, for example, tries to fit a straight line to the example data.
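Fitting a straight line can be sketched with the ordinary least-squares formulas for slope and intercept. The data points are made up and happen to lie exactly on a line, which keeps the result easy to check.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical example data
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]

slope, intercept = fit_line(xs, ys)
prediction = slope * 5 + intercept  # continuous output for an unseen input
print(slope, intercept, prediction)
```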

Classification

- Determining the class for a set of inputs. The output is a label, not a number as in regression or probability estimation.

Probability Estimation

- Output of the ML system is some decimal number between 0 and 1 (0.751 == 75.1%)

Using output

- Once you have an output, it is then down to the programmer to do something useful with that gained knowledge
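A short sketch of a probability output and one thing a programmer might do with it. The sigmoid function is one common way to squash a raw score into the 0 to 1 range; the 0.5 threshold and the "flag"/"ignore" actions are hypothetical choices.

```python
import math

def sigmoid(score):
    """Squash any real-valued score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-score))

def decide(probability, threshold=0.5):
    """Turn the probability into an action (hypothetical action names)."""
    return "flag" if probability >= threshold else "ignore"

print(f"{0.751:.1%}")             # a probability shown as a percentage
print(decide(sigmoid(1.1)))       # positive score, probability above 0.5
print(decide(sigmoid(-2.0)))      # negative score, probability below 0.5
```

Where the threshold sits is a decision for the application, not for the ML system.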

Types of ML algorithms

- Regression: models the relationship between variables, iteratively refined using a measure of error in the predictions made by the model.

- Instance-Based: a decision problem solved with instances or examples of training data that are deemed important or required by the model.

-

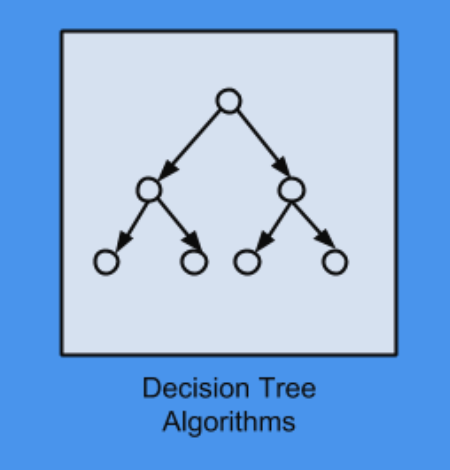

Decision Trees: Decision Trees: construct a model of decisions made based on actual values of attributes in the data. Decisions fork in tree structures until a prediction decision is made for a given record.

-

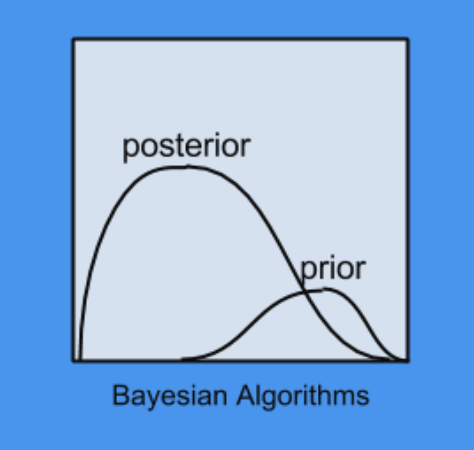

Bayesian: explicitly apply Bayes' Theorem for problems such as classification and regression.

- Clustering: describes both the class of problem and the class of methods. Clustering methods are typically organized by modeling approach, such as centroid-based and hierarchical.

- Association Rules: extract rules that best explain observed relationships between variables in data.

- Artificial Neural Networks: inspired by the structure and/or function of biological neural networks in the brain.

- Deep Learning: modern update to Artificial Neural Networks that exploits abundant cheap computation.

- Dimensionality Reduction: seeks and exploits the inherent structure in the data, in this case in an unsupervised manner, in order to summarize or describe data using less information.

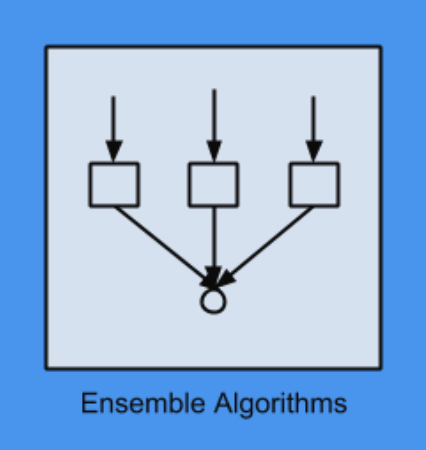

- Ensemble: composed of multiple weaker models that are independently trained and whose predictions are combined in some way to make the overall prediction.
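As an illustration of the ensemble idea from the last item, the sketch below combines three weak models by majority vote. The three rules are hand-written stand-ins for independently trained models, and the feature names and cut-off values are hypothetical.

```python
from collections import Counter

# Three deliberately simple "weak" models, each looking at one feature only
def by_weight(fruit):
    return "apple" if fruit["weight_g"] > 140 else "orange"

def by_colour(fruit):
    return "orange" if fruit["colour_score"] > 0.5 else "apple"

def by_texture(fruit):
    return "orange" if fruit["skin_roughness"] > 0.5 else "apple"

def majority_vote(models, example):
    """Combine the individual predictions into one overall prediction."""
    votes = Counter(model(example) for model in models)
    return votes.most_common(1)[0][0]

models = [by_weight, by_colour, by_texture]

# An unusually heavy orange: the weight rule gets it wrong, the others outvote it
heavy_orange = {"weight_g": 150, "colour_score": 0.9, "skin_roughness": 0.8}
print(by_weight(heavy_orange))
print(majority_vote(models, heavy_orange))
```

Voting is only one way to combine predictions; averaging the outputs is another common choice.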