Paper Reviews

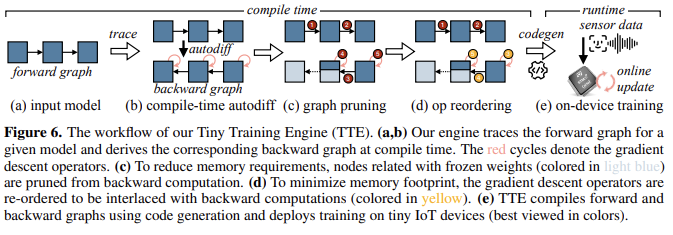

1. On-Device Training Under 256KB Memory

About the paper On-Device Training Under 256KB Memory...

December 18, 2024

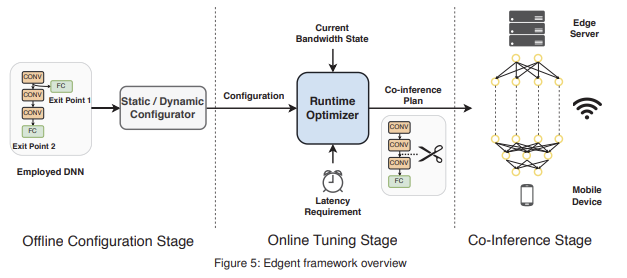

2. Edge AI: On-Demand Accelerating Deep Neural Network Inference via Edge Computing

About the paper Edge AI: On-Demand Accelerating Deep Neural Network Inference via Edge Computing...

December 16, 2024

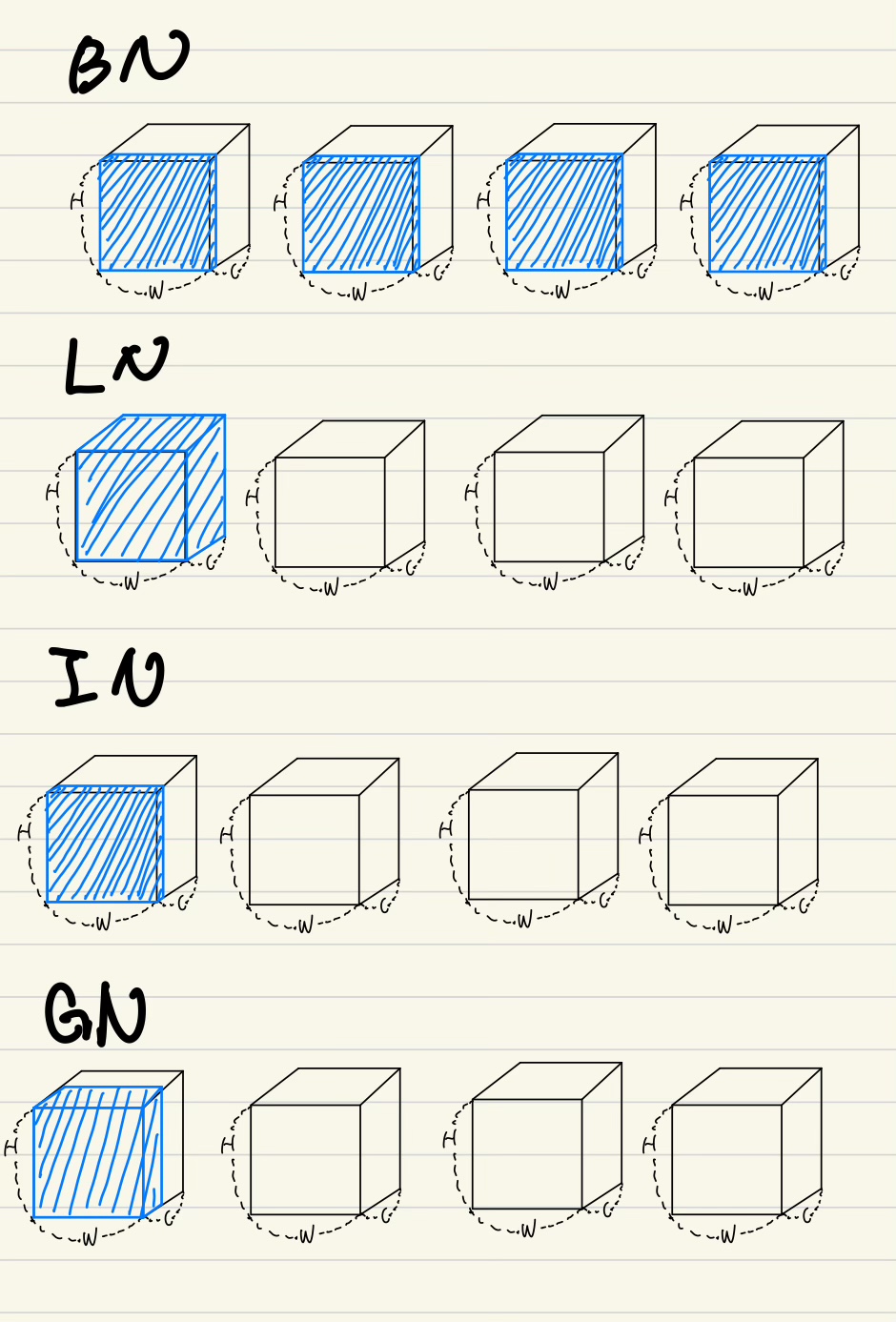

3. Group Normalization

About the paper Group Normalization...

November 21, 2024

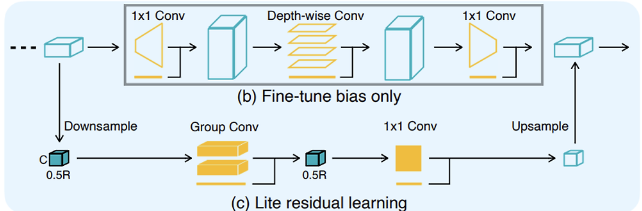

4. TinyTL

Regarding the TinyTL paper...

November 19, 2024

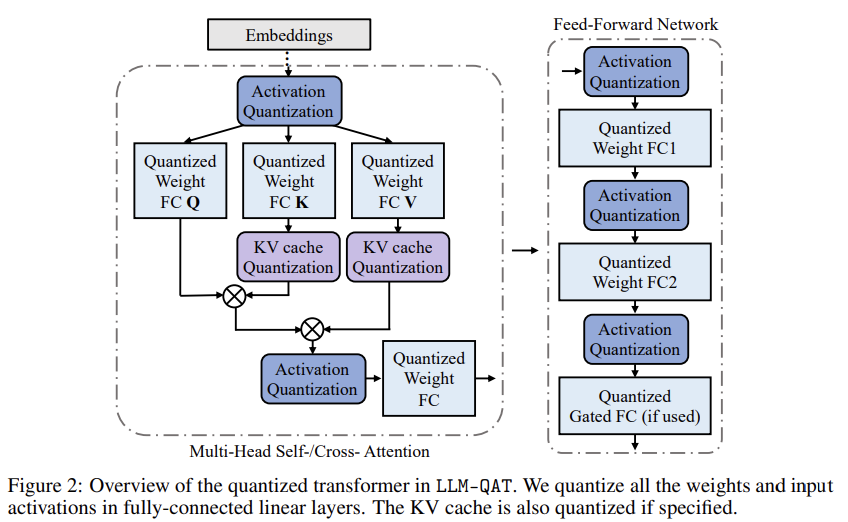

5. LLM-QAT: Data-Free Quantization Aware Training for Large Language Models

About the paper LLM-QAT: Data-Free Quantization Aware Training for Large Language Models...

December 26, 2024

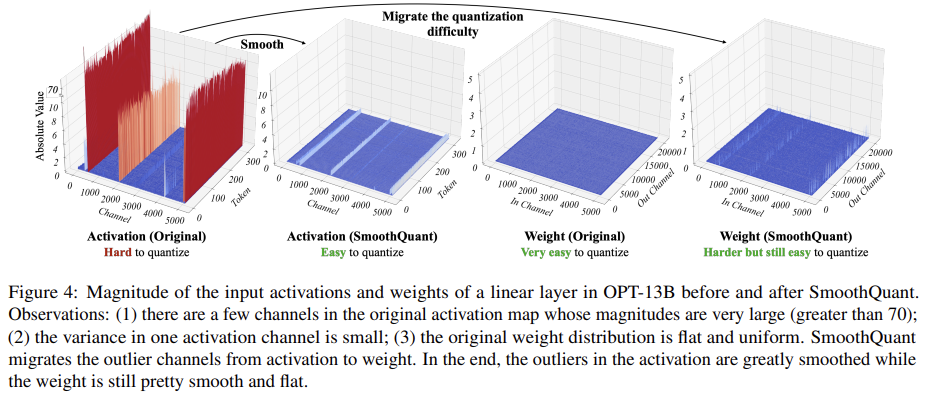

6. SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models

About the paper SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models...

December 26, 2024

7. A Survey of Quantization Methods for Efficient Neural Network Inference

About the paper A Survey of Quantization Methods for Efficient Neural Network Inference...

January 2, 2025