Notes from Reading Papers

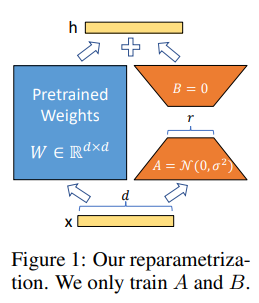

1.LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS paper reading

This paper was published by Microsoft in 2021.

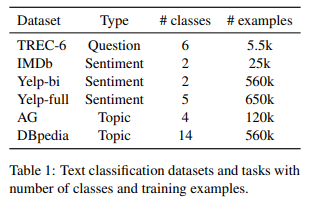

2.Universal Language Model Fine-tuning for Text Classification

Paper: paper link. Open-source code: GitHub link

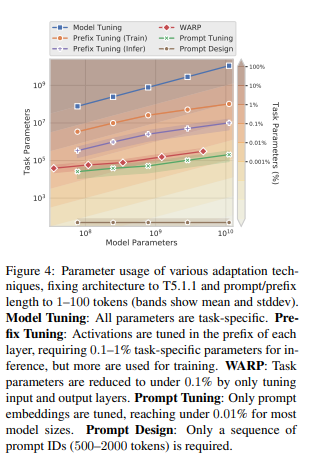

3.The Power of Scale for Parameter-Efficient Prompt Tuning paper reading

This paper was published by Google Research at EMNLP 2021.

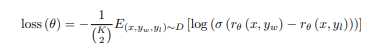

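4.Training language models to follow instructions with human feedback paper reading

Abstract: Making a language model larger does not by itself make it better at following the user's intent. -> It can produce output that does not match what the user wanted. This paper presents a method that fine-tunes a language model with human feedback so that it aligns with user intent across a wide range of tasks. Process: to fine-tune GPT-3, labeler demonstrati...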

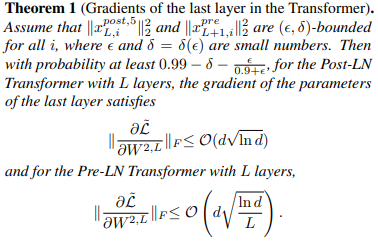

5.On Layer Normalization in the Transformer Architecture paper reading

This paper was presented at ICML 2020 and comes from Microsoft.

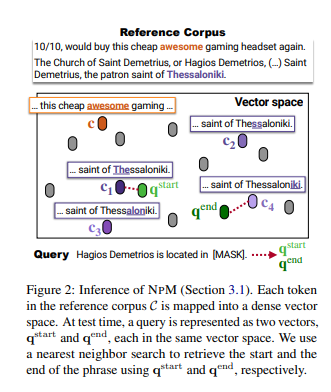

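6.Nonparametric Masked Language Modeling paper reading

Paper link, paper code. This paper was published at ACL 2023. Abstract: The problem with existing language models is that they predict tokens with a softmax, which makes rare tokens and phrases hard to predict. -> This paper proposes NPM, which replaces the softmax with a nonparametric distribution. What improved? A contrastive objective for full-corpus retrieval and...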
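The idea in this excerpt, swapping a softmax over a fixed vocabulary for a nonparametric distribution, can be illustrated with a toy sketch. This is not NPM's implementation: the function name `nonparametric_token_distribution`, the two-dimensional vectors, and the dot-product scoring are all assumptions made for illustration. It only shows how a prediction can come from scoring entries of a reference corpus instead of rows of an output embedding matrix, so a rare word is predictable as long as it appears in the corpus.

```python
import math
from collections import defaultdict


def nonparametric_token_distribution(query, corpus_tokens, corpus_vectors, temperature=1.0):
    """Toy distribution over tokens built from similarities to corpus entries.

    Hypothetical helper: every corpus position is scored against the query,
    the scores are normalized across positions, and the probability mass of
    positions that hold the same token is summed.
    """
    # Dot-product similarity between the query and each corpus vector.
    scores = [
        sum(q * v for q, v in zip(query, vec)) / temperature
        for vec in corpus_vectors
    ]
    # Normalize over corpus positions (subtract the max for numerical stability).
    max_score = max(scores)
    weights = [math.exp(s - max_score) for s in scores]
    total = sum(weights)

    probs = defaultdict(float)
    for token, weight in zip(corpus_tokens, weights):
        probs[token] += weight / total
    return dict(probs)


# Made-up corpus: the rare word needs no dedicated output-layer row.
corpus_tokens = ["Seattle", "Thessaloniki", "Seattle"]
corpus_vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
query = [0.0, 2.0]  # stands in for the encoder output at a masked position

dist = nonparametric_token_distribution(query, corpus_tokens, corpus_vectors)
prediction = max(dist, key=dist.get)
```

With these made-up vectors the query is closest to the single "Thessaloniki" entry, so that token receives most of the probability mass even though it occurs only once in the corpus.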

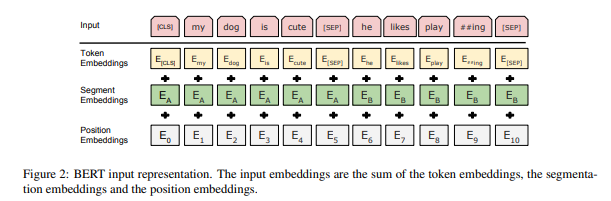

7.BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding paper reading

BERT paper. Abstract: This paper describes a language model called BERT. BERT is designed to pre-train deep bidirectional representations from unlabeled text by conditioning on context in both directions. Introduction: Language-model pre-training has been effective at improving NLP tasks. (Since earlier ...

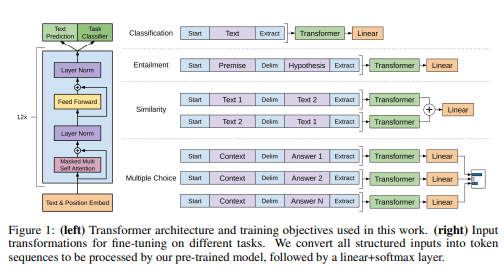

8.Improving Language Understanding by Generative Pre-Training (GPT1) paper reading

Paper link: paper link. This paper covers GPT, the early predecessor of GPT-3, the model that set off the AI boom. It was published by OpenAI.

9.Learning to summarize from human feedback paper reading

This paper is from OpenAI.

10.Attention is all you need review

This paper was presented by the Google Research team at NIPS 2017. It introduced the Transformer, which serves as the backbone of models now widely used in NLP such as BERT, BART, and GPT.

11.BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension

Paper link. Abstract: The paper proposes BART, a denoising autoencoder for pre-training sequence-to-sequence models. BART uses the standard Transformer model. -> BERT showed the performance of a bidirectional encoder -> GPT's left-to-right decoder...
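A denoising autoencoder is trained on pairs of corrupted input and original text. The sketch below builds one such pair, assuming simple token masking as the corruption; the excerpt is cut off before it names a noising scheme, so this choice, the `corrupt` helper, the `<mask>` string, and the 30% rate are illustrative assumptions rather than BART's actual preprocessing.

```python
import random

MASK = "<mask>"  # placeholder mask string chosen for this sketch


def corrupt(tokens, mask_prob, rng):
    """Replace each token with MASK independently with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else token for token in tokens]


rng = random.Random(0)  # seeded so the sketch is reproducible
original = "BART is trained to reconstruct the original text".split()
noisy = corrupt(original, mask_prob=0.3, rng=rng)

# The encoder would read `noisy`; the decoder would be trained to emit `original`.
training_pair = (noisy, original)
```

In a seq2seq setup the bidirectional encoder sees the corrupted tokens, and the left-to-right decoder is trained to regenerate the clean sequence.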

12.SELF-INSTRUCT: Aligning Language Models with Self-Generated Instructions

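Paper link, paper code link. Abstract: Instruction-tuned models have demonstrated the ability to generalize -> but they often depend on human-written prompts, which limits their generality. The paper introduces SELF-INSTRUCT, which improves a pre-trained model by bootstrapping -> after generating inputs, outputs, and so on from the LM, fine...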