[Related Work] Papers to Read - Keywords: XAI, Object Detection, Transformer, Attention, Vision Transformer

XAI / Object Detection

Paper

Papers to summarize on this blog

Papers focused on XAI and Object Detection

Towards Interpretable Object Detection by Unfolding Latent Structures

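- The title alone is a reason to read it.

- It appears to provide explanations by enriching the information inside each RoI, for example by organizing RoIs hierarchically.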

Interpretable Learning for Self-Driving Cars by Visualizing Causal Attention

- How is causality handled?

- Also, is this attention a different concept from the attention in DETR? I'm curious how the notion of an attention heat map differs from attention in a Transformer.

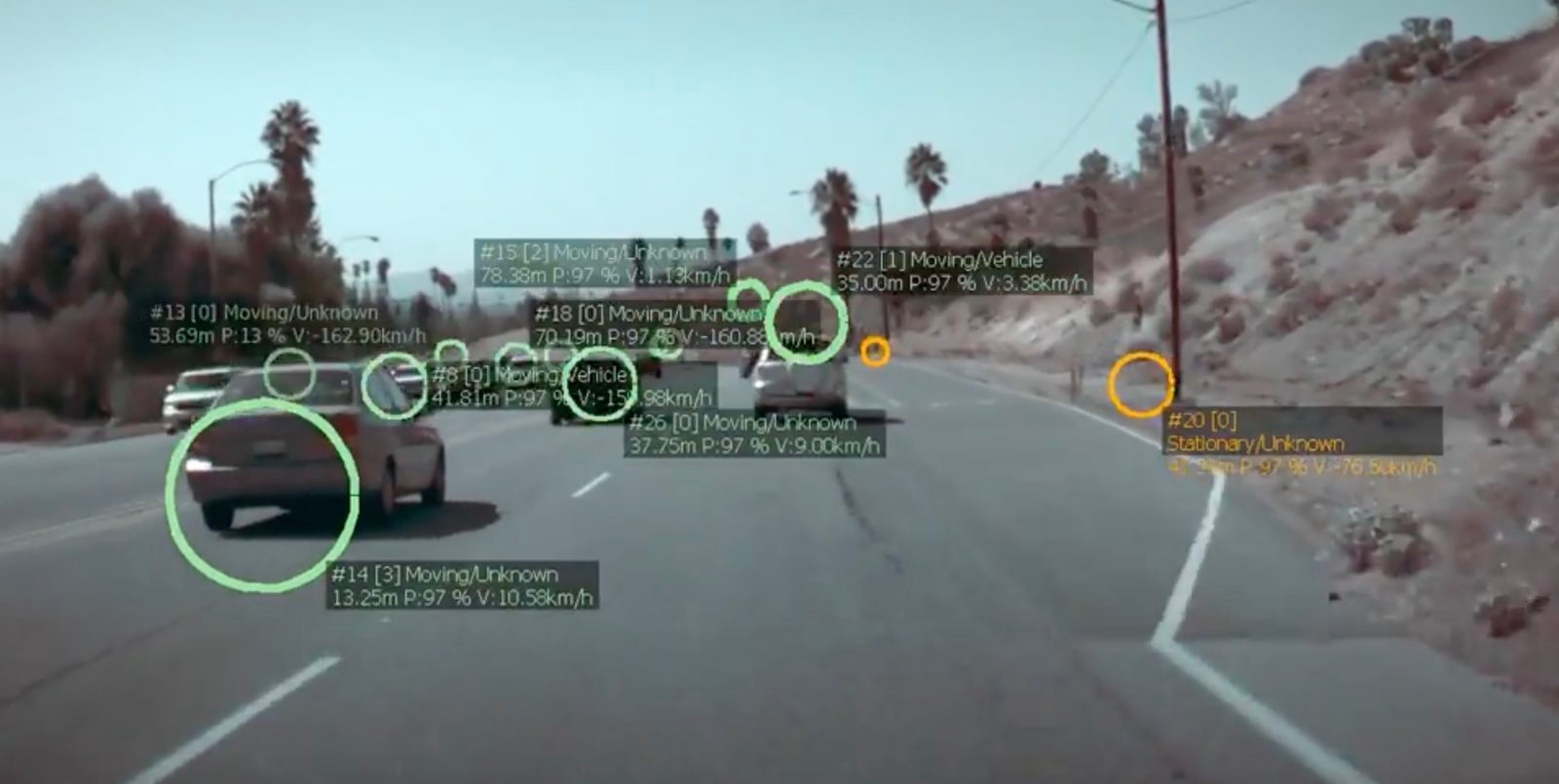
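On the heat-map question: in a standard Transformer (as in DETR), an attention "map" is just one row of the softmax-normalized query-key score matrix, reshaped back onto the spatial grid of the keys. The sketch below shows that generic scaled dot-product computation in plain Python. The toy keys and query are made up, and the self-driving paper's own attention model may be computed differently, so this is an illustration of Transformer attention, not that paper's method.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(queries, keys):
    """Return softmax(Q K^T / sqrt(d)) as a list of rows, one row per query.

    Row i holds the weights query i assigns to every key; each row sums to 1.
    """
    d = len(keys[0])
    scale = 1.0 / math.sqrt(d)
    return [
        softmax([scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys])
        for q in queries
    ]


def heat_map(row, height, width):
    """Reshape one query's weights over height*width spatial keys into a grid."""
    assert len(row) == height * width
    return [row[r * width:(r + 1) * width] for r in range(height)]


# Hypothetical 2x2 feature map flattened into 4 key tokens of dimension 2.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
weights = attention_map([[2.0, -1.0]], keys)
print(heat_map(weights[0], 2, 2))
```

Plotting such a grid (upsampled to the image size) is what is usually shown as an attention heat map.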

Building explainable ai evaluation for autonomous perception

- How are issues such as extreme weather and low-light conditions addressed?

MAF: Multimodal Alignment Framework for Weakly-Supervised Phrase Grounding (aclweb.org)

- A VQA-related task; how is object detection performed here?

Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech

- How is adversarial learning applied?

Papers focused on Attention

Attention is not Explanation

- Argues that attention weights should not be treated as explanations.

Attention is not not Explanation

- A rebuttal: attention is not necessarily not an explanation.

Transformer-focused papers

- Must-read.

Stand-Alone Self-Attention in Vision Models

- A study that removes convolutions entirely from a vision model, replacing them with self-attention.
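The key move in Stand-Alone Self-Attention is to restrict each pixel's attention to a local k×k neighborhood, which is what lets it stand in for a convolution. A minimal 1D sketch of that locality idea follows, assuming plain nested lists for the projection matrices (`wq`, `wk`, `wv` and `window` are hypothetical names) and omitting the relative position embeddings the paper adds; it is an illustration, not the paper's implementation.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, v):
    # w is a (d_out x d_in) matrix as nested lists, v a length-d_in vector.
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def local_self_attention(x, wq, wk, wv, window=3):
    """1D local self-attention over a sequence of feature vectors x.

    Each position i attends only to positions within window // 2 of i
    (clipped at the borders) instead of to the whole sequence.
    Relative position terms are deliberately omitted.
    """
    half = window // 2
    q = [matvec(wq, xi) for xi in x]
    k = [matvec(wk, xi) for xi in x]
    v = [matvec(wv, xi) for xi in x]
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i in range(len(x)):
        neighbors = range(max(0, i - half), min(len(x), i + half + 1))
        weights = softmax([scale * sum(a * b for a, b in zip(q[i], k[j])) for j in neighbors])
        out.append([
            sum(w * v[j][c] for w, j in zip(weights, neighbors))
            for c in range(len(v[0]))
        ])
    return out
```

Because position 0 only looks at positions 0 and 1 when `window=3`, changing a distant element leaves its output untouched, just as with a 3-tap convolution.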

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

- Vision Transformer (ViT).

- Includes material on attention; worth comparing with how DETR generates its self-attention maps.

- Reference: DMQA

- DETR follow-up.
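The "16x16 words" in the ViT title refers to cutting the image into 16×16 patches and treating each flattened patch as one token. For a 224×224×3 input that gives (224/16)² = 196 tokens of 16·16·3 = 768 raw values each, which ViT then maps through a learned linear projection. A minimal sketch of just the patch-splitting step, assuming nested lists for the image (`patchify` is a hypothetical helper name); the projection, class token, and position embeddings are omitted.

```python
def patchify(image, patch):
    """Split an H x W x C image (nested lists) into non-overlapping patch x patch
    tiles in row-major order, flattening each tile to patch * patch * C values."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([
                channel
                for r in range(top, top + patch)
                for pixel in image[r][left:left + patch]
                for channel in pixel
            ])
    return tokens


# Toy 32x32 RGB image whose pixels record their own (row, col) coordinates.
image = [[[r, c, 0] for c in range(32)] for r in range(32)]
tokens = patchify(image, 16)
print(len(tokens), len(tokens[0]))  # 4 768
```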

RelationNet++: Bridging Visual Representations for Object Detection via Transformer Decoder

- See the title.

- Seems to be frequently used as a backbone in Vision Transformer and object detection work.

Papers related to positional encodings

On the relationship between self-attention and convolutional layers

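- Relates the concept of self-attention to vision (i.e., CNN-based models).

- Covers multi-head attention layers.

- This part is even more closely tied to the positional encodings below.

In short, this is one of the papers that discusses the concept of a self-attention map.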
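This paper's central result is, roughly, that a multi-head self-attention layer with enough heads and suitable relative positional encodings can express any convolutional layer: each head attends (in the limit of sharp attention) to one fixed relative offset, and the value/output projections carry the kernel weights. The toy 1D check below hard-codes those one-hot attention patterns instead of deriving them from positional encodings, and uses scalar features, so it only illustrates the construction; the function names are hypothetical.

```python
def conv1d_same(x, kernel):
    """1D cross-correlation with zero padding ('same' output length), odd kernel."""
    half = len(kernel) // 2
    n = len(x)
    return [
        sum(kernel[t] * x[i + t - half] for t in range(len(kernel)) if 0 <= i + t - half < n)
        for i in range(n)
    ]


def multihead_shift_attention(x, kernel):
    """One head per kernel tap: head h attends with probability 1 to the key at
    relative offset h - half, its value projection multiplies by kernel[h], and
    the output projection sums the heads."""
    half = len(kernel) // 2
    n = len(x)
    out = [0.0] * n
    for h, weight in enumerate(kernel):
        shift = h - half
        for i in range(n):
            # One-hot attention row for query i; all zeros at the borders,
            # which mimics the zero padding of the convolution.
            probs = [1.0 if j == i + shift else 0.0 for j in range(n)]
            out[i] += sum(p * weight * xj for p, xj in zip(probs, x))
    return out


x = [1.0, 2.0, 3.0, 4.0, 5.0]
kernel = [0.5, -1.0, 2.0]
print(conv1d_same(x, kernel))
print(multihead_shift_attention(x, kernel))
```

In 2D the same idea needs K² heads for a K×K kernel, one per relative offset.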

Image transformer. In: ICML (2018)

- 2D positional encodings
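A common way to build 2D positional encodings is to spend half of the channels on a 1D sinusoidal encoding of the row index and the other half on the column index. A minimal sketch, assuming the standard sin/cos formula from the original Transformer; the exact variant used in Image Transformer (and DETR's own sine-based 2D version) may differ in details such as channel ordering or coordinate scaling, and the function names are hypothetical.

```python
import math


def sinusoid_1d(pos, dim, base=10000.0):
    """Transformer-style sinusoidal encoding of one coordinate: sin on even
    indices, cos on odd indices, with geometrically spaced frequencies."""
    enc = []
    for i in range(dim):
        angle = pos / (base ** (2 * (i // 2) / dim))
        enc.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return enc


def positional_encoding_2d(row, col, d_model):
    """Concatenate a d_model/2 encoding of the row with a d_model/2 encoding of the column."""
    if d_model % 4:
        raise ValueError("d_model must be divisible by 4 so each half holds sin/cos pairs")
    half = d_model // 2
    return sinusoid_1d(row, half) + sinusoid_1d(col, half)


print(positional_encoding_2d(0, 0, 8))
```

Splitting the channels this way keeps the row and column signals separable, so swapping the two coordinates swaps the two halves of the encoding.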

Attention augmented convolutional networks. In: ICCV (2019)

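- According to DETR, the FFN inside its Transformer (effectively a stack of 1×1 convolutions) plays a role similar to this.

Extract only the concepts related to positional encodings.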
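The DETR remark about the FFN in this entry can be made concrete: the Transformer FFN (Linear, ReLU, Linear) is applied to every spatial token independently with shared weights, which is the same computation as 1×1 convolution, ReLU, 1×1 convolution on the H×W feature map. The check below computes one FFN both ways in plain Python; the weights are toy values and the helper names are hypothetical.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def linear(w, b, v):
    # y = W v + b with W as (d_out x d_in) nested lists.
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(w, b)]


def ffn_per_token(feature_map, w1, b1, w2, b2):
    """Transformer FFN (Linear -> ReLU -> Linear) applied to each of the H*W tokens."""
    tokens = [pixel for row in feature_map for pixel in row]
    return [linear(w2, b2, relu(linear(w1, b1, t))) for t in tokens]


def conv1x1(feature_map, w, b):
    """1x1 convolution: out[y][x][o] = b[o] + sum_c w[o][c] * in[y][x][c]."""
    return [
        [[b[o] + sum(w[o][c] * pixel[c] for c in range(len(pixel))) for o in range(len(w))]
         for pixel in row]
        for row in feature_map
    ]


def ffn_as_conv(feature_map, w1, b1, w2, b2):
    """The same FFN written as 1x1 conv -> ReLU -> 1x1 conv, then flattened to tokens."""
    hidden = [[relu(pixel) for pixel in row] for row in conv1x1(feature_map, w1, b1)]
    out = conv1x1(hidden, w2, b2)
    return [pixel for row in out for pixel in row]


# Toy 2x2 feature map with 2 channels, hidden width 3, output width 1.
fmap = [[[1.0, -2.0], [0.5, 3.0]], [[-1.0, 0.0], [2.0, 2.0]]]
w1, b1 = [[1.0, 0.5], [-0.5, 1.0], [2.0, -1.0]], [0.1, -0.2, 0.0]
w2, b2 = [[1.0, -1.0, 0.5]], [0.3]
print(ffn_per_token(fmap, w1, b1, w2, b2))
print(ffn_as_conv(fmap, w1, b1, w2, b2))
```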

Follow-up studies for DETR

XAI

SCOUTER: Slot Attention-based Classifier for Explainable Image Recognition

Explainable Vision Transformer Based COVID-19 Screening Using Radiograph

Attention

TransPose: Keypoint Localization via Transformer

Transformer Interpretability Beyond Attention Visualization

Positional Encodings

Conditional Positional Encodings for Vision Transformers

Adversarial

On the Adversarial Robustness of Visual Transformers